The multi-compute cluster architecture of ApsaraDB for SelectDB is designed for two typical scenarios:

• Write and read isolation: In the traditional data warehouse architecture, data is written and read in the same compute cluster. During business write peaks or sudden spikes in write pressure, the performance and stability of query services can be affected due to resource preemption. By introducing multi-compute clusters, independent clusters can handle writes and reads separately. This ensures that even under high write pressure, computational tasks can be executed without compromising service stability.

• Online and offline business isolation: Many data analytics scenarios will use the same data to support multiple business operations. For example, one business might use a copy of data to support data queries for end-users, while another business might use the same dataset for internal user operational analysis. These different business requirements often have distinct latency and availability needs. Traditional architectures typically store redundant data in different systems to meet these varying requirements, leading to increased storage costs and maintenance overhead. By supporting the multi-compute cluster architecture, ApsaraDB for SelectDB can use a single copy of the data with independently isolated compute resources to meet both online and offline business needs, providing significant cost savings and simplified operations for users.

ApsaraDB for SelectDB is a fully managed real-time data warehouse service based on Apache Doris, built on a new cloud-native architecture that separates compute from storage. Because the compute layer and the storage layer are designed separately, the compute layer holds no persistent data state and can therefore scale quickly and flexibly. Meanwhile, the storage layer is decoupled from compute and can easily be shared by multiple compute resources. Building on this, we introduced the multi-compute cluster capability in ApsaraDB for SelectDB to better meet user needs through innovations in the data warehouse architecture.

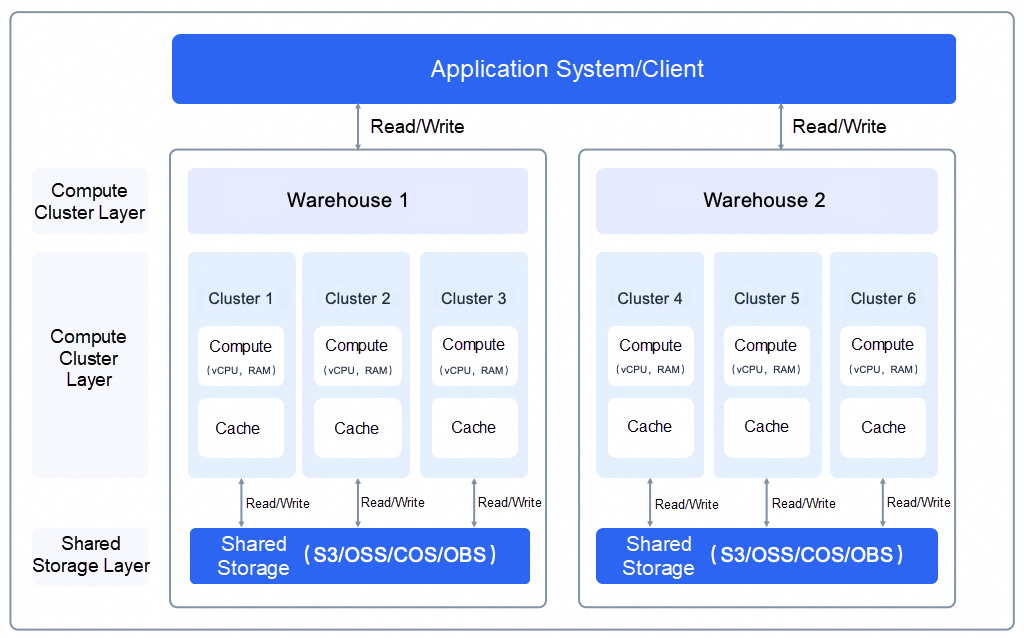

In the architecture design of ApsaraDB for SelectDB, a warehouse instance can contain multiple clusters, similar to compute queues and compute groups in a distributed system. Data is persisted in an underlying shared storage layer, which can be accessed by multiple clusters. Each cluster itself is a distributed system, consisting of one or more BE nodes. Due to the slower access speed of remote storage in the storage-compute separation architecture, we have introduced local caching on the compute nodes to accelerate data access.

For example, in the architecture diagram, warehouse 1 contains cluster 1, cluster 2, and cluster 3, all of which can access the data stored in the shared storage.

When using multiple clusters, users can connect to the warehouse instance and switch between different compute clusters using commands. Here is an example of using multi-compute clusters for read-write separation:

• Connect to ApsaraDB for SelectDB through a MySQL client and create databases and tables by using cluster_1.

    # Switch to cluster_1.
    USE @cluster_1;

    # Create a database and a table.
    CREATE DATABASE test_db;
    USE test_db;
    CREATE TABLE test_table
    (
        k1 TINYINT,
        k2 DECIMAL(10, 2) DEFAULT "10.05",
        k3 CHAR(10) COMMENT "string column",
        k4 INT NOT NULL DEFAULT "1" COMMENT "int column"
    )
    COMMENT "my first table"
    DISTRIBUTED BY HASH(k1) BUCKETS 16;

• Write sample data by using cluster_2 through Stream Load.

    curl --location-trusted -u admin:admin_123 -H "cloud_cluster:cluster_2" -H "label:123" -H "column_separator:," -T data.csv http://host:port/api/test_db/test_table/_stream_load

The sample data in data.csv:

    1,0.14,a1,20
    2,1.04,b2,21
    3,3.14,c3,22
    4,4.35,d4,23

• Connect to ApsaraDB for SelectDB through a MySQL client and query data by using cluster_3.

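    # Switch to cluster_3.
    USE @cluster_3;

    # Perform the query. The table name is qualified with the database
    # because this is a new connection with no database selected yet.
    SELECT * FROM test_db.test_table;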

In a cloud-native storage-compute separation architecture, implementing multi-compute clusters may seem technically simple. From a product perspective, however, delivering a mature, user-friendly multi-compute cluster capability that works in real-world business scenarios still requires careful design in several key areas. In this section, we introduce some of them.

After storage and compute are separated, data is stored in a shared storage layer that can be accessed by multiple clusters. After data is written through one cluster, can another cluster access it immediately? If not, reads lag behind writes, which is unacceptable for many highly real-time business scenarios.

To achieve strongly consistent data access, ApsaraDB for SelectDB shares not only the data but also the metadata, which required an in-depth rework of the metadata layer. When data is written to the shared storage through one cluster, the cluster updates the shared metadata before it returns the write result. When other clusters access the data, they retrieve the latest data information from the shared metadata center. As a result, once data is successfully written to ApsaraDB for SelectDB through any cluster, it is immediately visible to all other clusters.
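The write path described above can be illustrated with a small in-memory model. This is only a sketch under stated assumptions: `MetadataCenter`, `Cluster`, and the file-naming scheme are hypothetical names for illustration and do not reflect the actual SelectDB implementation. The point it shows is the ordering: data is persisted, shared metadata is updated, and only then is the write acknowledged.

```python
import threading


class MetadataCenter:
    """Hypothetical shared metadata service: table name -> visible data files."""

    def __init__(self):
        self._lock = threading.Lock()
        self._visible = {}

    def publish(self, table, data_file):
        # Make a newly written file visible to every cluster at once.
        with self._lock:
            self._visible[table] = self._visible.get(table, ()) + (data_file,)

    def visible_files(self, table):
        with self._lock:
            return self._visible.get(table, ())


class Cluster:
    """Hypothetical compute cluster that shares storage and metadata with peers."""

    def __init__(self, name, shared_storage, metadata):
        self.name = name
        self.storage = shared_storage  # a dict standing in for object storage
        self.metadata = metadata

    def write(self, table, rows):
        data_file = f"{table}/{self.name}-{len(self.storage)}.dat"
        self.storage[data_file] = list(rows)     # 1. persist data to shared storage
        self.metadata.publish(table, data_file)  # 2. update the shared metadata
        return "OK"                              # 3. only then acknowledge the write

    def read(self, table):
        # Always resolve the file list from the shared metadata, never from local state.
        rows = []
        for data_file in self.metadata.visible_files(table):
            rows.extend(self.storage[data_file])
        return rows


storage, metadata = {}, MetadataCenter()
writer = Cluster("cluster_2", storage, metadata)
reader = Cluster("cluster_3", storage, metadata)
writer.write("test_table", [(1, "a1"), (2, "b2")])
print(reader.read("test_table"))  # the rows written through cluster_2
```

Because the reader resolves the file list from the shared metadata on every read, it has no stale local view to refresh.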

Based on shared storage, multi-read is relatively easy to implement, but can writes be performed by only one cluster? If so, is the cluster pre-determined manually, and do all write jobs need to be manually changed if it fails? Alternatively, should a distributed lock be introduced to coordinate among multiple clusters to decide which cluster is responsible for writing?

More challenging is the scenario where the original writing cluster becomes suspended, potentially leading to conflicts as multiple clusters attempt to write simultaneously. Solving these issues can significantly increase the complexity of the data warehouse architecture. As a result, many relational databases still adopt a one-write-multi-read architecture after years of exploration.

ApsaraDB for SelectDB takes the characteristics of data warehouse scenarios into account and is designed to support multi-write and multi-read, which simplifies user operations and reduces system complexity. Specifically, in data warehouse scenarios, high write throughput is achieved through small-batch and high-concurrency writes. Data latency at the second level is sufficient for most users, and the write transaction concurrency in data warehouses is much lower than the hundreds of thousands of transactions per second required by relational databases. Therefore, ApsaraDB for SelectDB can rely on the MVCC (Multi-Version Concurrency Control) mechanism and use the shared metadata center for transaction coordination. Data is first submitted to multiple clusters for conversion processing, and distributed coordination happens only in the metadata update phase, which is the step that makes the data take effect. The cluster that obtains the lock first commits its write, while the other clusters retry. Since the main cost of a data write lies in the conversion phase, this coordination mechanism and optimistic lock design enable multi-read and multi-write, and multiple clusters can be used together to further increase concurrent write throughput.
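A minimal sketch of this optimistic commit pattern follows. It assumes a single version counter per table and a compare-and-swap style check in the metadata center; `MetadataCenter`, `try_commit`, `convert`, and `load` are hypothetical names, and the real coordination protocol is not described in this article. The sketch shows why retries are cheap: the expensive conversion runs once outside any lock, and only the short commit step is repeated.

```python
import threading


class VersionConflict(Exception):
    """Raised when another cluster committed first."""


class MetadataCenter:
    """Hypothetical shared metadata center holding one table's version."""

    def __init__(self):
        self._lock = threading.Lock()
        self.version = 0
        self.committed = []  # (version, cluster, rowset) in commit order

    def try_commit(self, expected_version, cluster, rowset):
        # Short critical section: commit only if nobody else committed since
        # the caller last looked at the version.
        with self._lock:
            if self.version != expected_version:
                raise VersionConflict
            self.version += 1
            self.committed.append((self.version, cluster, rowset))
            return self.version


def convert(rows):
    # Stand-in for the expensive conversion phase (parsing, sorting, encoding).
    return sorted(rows)


def load(meta, cluster, rows, max_retries=10):
    rowset = convert(rows)  # done once, outside any lock
    for _ in range(max_retries):
        seen = meta.version
        try:
            return meta.try_commit(seen, cluster, rowset)
        except VersionConflict:
            continue  # retry only the cheap commit step
    raise RuntimeError("commit retries exhausted")


meta = MetadataCenter()
stale = meta.version                       # cluster_1 observes version 0
print(load(meta, "cluster_2", [3, 1, 2]))  # cluster_2 commits first
try:
    meta.try_commit(stale, "cluster_1", [9])
except VersionConflict:
    print("cluster_1 lost the race and retries")
print(load(meta, "cluster_1", [9]))        # the retry sees the new version
```

Each successful commit produces a new version, so readers can keep using the version they started with while writers from any cluster add new ones.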

In a storage-compute separation architecture, remote shared storage systems such as object storage or HDFS typically perform poorly on individual I/O requests, often dozens of times slower than local storage. How can the query performance of compute clusters be ensured in such an architecture? Furthermore, when multiple clusters are used for read-write separation and online-offline isolation, how can the query performance of every cluster be guaranteed?

ApsaraDB for SelectDB addresses these challenges by providing a meticulously designed cache management mechanism that automates query performance optimization and meets the flexible tuning needs of users:

• For a single compute cluster, ApsaraDB for SelectDB caches data using an LRU (Least Recently Used) strategy by default. When the cache size is sufficient to store all hot data, the performance of storage-compute separation systems can match that of storage-compute integration systems. The single-replica design of local caches and the low cost of remote storage make the storage cost of storage-compute separation architectures significantly lower than that of integrated architectures. ApsaraDB for SelectDB also provides manual cache control strategies to ensure that data in certain tables is prioritized for caching. Additionally, during auto scaling of the cluster, ApsaraDB for SelectDB automatically preheats or migrates the cache based on statistical information to ensure smooth query service during changes.

• For multiple compute clusters, ApsaraDB for SelectDB offers cross-cluster cache synchronization capabilities, allowing cached data from existing clusters to be synchronized to other clusters, thus accelerating query performance. It also supports cache synchronization control at the partition level. Each compute cluster has its own independent cache, and users can control the cache size as needed.
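The two bullets above can be sketched as a per-cluster LRU cache in front of remote storage, plus a warm-up step that copies the hot key set from another cluster. This is an illustrative model only: `LocalCache`, `warm_from`, and the block names are hypothetical, and the actual cache granularity and synchronization protocol in SelectDB are not specified here.

```python
from collections import OrderedDict


class LocalCache:
    """Hypothetical per-cluster LRU cache in front of shared remote storage."""

    def __init__(self, capacity, remote):
        self.capacity = capacity
        self.remote = remote          # a dict standing in for object storage
        self._blocks = OrderedDict()  # least recently used entry comes first
        self.remote_reads = 0         # cache misses on the query path

    def get(self, key):
        if key in self._blocks:
            self._blocks.move_to_end(key)  # mark as most recently used
            return self._blocks[key]
        self.remote_reads += 1             # miss: fall back to remote storage
        value = self.remote[key]
        self._put(key, value)
        return value

    def _put(self, key, value):
        self._blocks[key] = value
        self._blocks.move_to_end(key)
        while len(self._blocks) > self.capacity:
            self._blocks.popitem(last=False)  # evict the least recently used block

    def hot_keys(self):
        return list(self._blocks)

    def warm_from(self, other):
        # Cross-cluster warm-up: reuse another cluster's hot key list and
        # prefetch those blocks before any query needs them.
        for key in other.hot_keys():
            if key not in self._blocks:
                self._put(key, self.remote[key])


remote = {f"block{i}": i for i in range(5)}
cache_1 = LocalCache(capacity=2, remote=remote)
for key in ["block0", "block1", "block0", "block2"]:
    cache_1.get(key)
print(cache_1.hot_keys())    # block1 was evicted as least recently used

cache_3 = LocalCache(capacity=2, remote=remote)
cache_3.warm_from(cache_1)
cache_3.get("block0")
print(cache_3.remote_reads)  # no query-path miss after warm-up
```

Each cluster keeps its own cache object with its own capacity, which matches the point that caches are independent per cluster and sized by the user.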

In a single warehouse with multiple compute clusters, the compute resources are mutually independent, so the compute clusters are fully isolated from one another. However, when multiple compute clusters are available within a warehouse, how can we prevent users from using the wrong cluster, so that different business operations do not interfere with each other? Additionally, since storage resources are shared and have limited bandwidth and QPS, how can we ensure that one cluster's access to shared storage does not interfere with other clusters?

ApsaraDB for SelectDB provides a comprehensive solution for permission control and resource isolation to ensure the orderly operation of the multi-compute cluster architecture:

• ApsaraDB for SelectDB offers a simple and easy-to-use permission mechanism for compute clusters. Similar to permission allocation for databases and tables, only users who have been granted permissions for a specific cluster can use the cluster, preventing misuse of clusters.

• ApsaraDB for SelectDB allows you to throttle the storage bandwidth and IOPS based on the cluster specifications for access to storage resources. If the bandwidth exceeds the limit, requests are queued to avoid interference between multiple clusters.
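The throttling behavior in the second bullet can be sketched with one token bucket per cluster, where requests over the limit wait in that cluster's own queue. This is a simplified model under stated assumptions: it limits request count only (an IOPS-like limit), time is passed in explicitly to keep the example deterministic, and `StorageThrottle` and its methods are hypothetical names rather than a SelectDB API.

```python
from collections import deque


class StorageThrottle:
    """Hypothetical per-cluster limiter: `rate_per_sec` storage requests per second."""

    def __init__(self, rate_per_sec):
        self.rate = float(rate_per_sec)
        self.tokens = self.rate  # start with a full bucket
        self.last = 0.0          # time of the last refill, in seconds
        self.queue = deque()     # requests waiting for a token

    def drain(self, now):
        """Refill tokens for the elapsed time and release queued requests."""
        elapsed = now - self.last
        self.tokens = min(self.rate, self.tokens + elapsed * self.rate)
        self.last = now
        released = []
        while self.queue and self.tokens >= 1.0:
            self.tokens -= 1.0
            released.append(self.queue.popleft())
        return released

    def submit(self, request, now):
        """Queue a request and return whatever may go to storage right now."""
        self.queue.append(request)
        return self.drain(now)


# One throttle per cluster, so a saturated cluster only delays itself.
throttles = {"cluster_2": StorageThrottle(2), "cluster_3": StorageThrottle(2)}
writes = throttles["cluster_2"]
print(writes.submit("w1", now=0.0))  # released immediately
print(writes.submit("w2", now=0.0))  # released immediately
print(writes.submit("w3", now=0.0))  # over the limit: stays queued
print(throttles["cluster_3"].submit("q1", now=0.0))  # unaffected by cluster_2
print(writes.drain(now=0.5))         # w3 is released once a token is refilled
```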

The multi-compute cluster architecture was originally designed for read-write isolation and online-offline business isolation. Since the multi-compute cluster solution for ApsaraDB for SelectDB went live, nearly half of our users have adopted it, and we have found that its range of applications keeps growing beyond what we expected:

• Elastic temporary cluster: In practical usage, considering business isolation, users often need a cluster for temporary tasks. For example, administrators might keep an isolated testing cluster for daily access, set up a fully simulated cluster for testing new features before their official release, or handle month-end or temporary data processing tasks using an independent cluster. To better meet these needs, ApsaraDB for SelectDB provides a series of complementary capabilities, such as mixed billing models that support both monthly subscribed and pay-as-you-go clusters within the same warehouse, and the ability to reduce costs by stopping idle compute resources in pay-as-you-go clusters.

• Cross-zone disaster recovery: In the current deployment architecture, the metadata center and shared storage already support cross-zone disaster recovery. Users can place multiple clusters in different zones to achieve end-to-end cross-zone disaster recovery. Since most of the request processing is completed within a single cluster, cross-zone access is only required for a small amount of metadata retrieval. This approach has minimal impact on query performance. If a zone fails, you can quickly switch the business to another zone with a single command.

• Cluster switching for changes: When you need to perform certain change operations on a cluster, you can achieve smooth transitions through dual-cluster switching. For example, the current scaling feature does not support reducing the cache capacity of a cluster, so users can instead replace the old cluster with a new one that has a lower cache capacity. Additionally, we plan to support dual-cluster switching for major version upgrades of ApsaraDB for SelectDB. This is a crucial use case, because it allows a safe rollback if issues arise during the upgrade and so keeps major version upgrades stable.

During online operations, we continuously collect user feedback and observe user pain points. Two design aspects have particularly caught our attention and are currently undergoing optimization and reconstruction:

• Cluster naming design: For many cloud users, the concepts of instances and clusters are well-established. A cluster is typically the smallest unit that users purchase on the cloud console, and in products like MongoDB and Elasticsearch, a cluster is often equivalent to an instance. In the architecture design of SelectDB, a warehouse or instance is the smallest unit purchased, and a cluster is a group of compute resources within the warehouse. This inconsistency in concept design has confused many users. ApsaraDB for SelectDB is gradually adjusting its terminology to align with more intuitive concepts, such as referring to compute clusters as compute queues or compute groups.

• Default permission policy: SelectDB allows you to control the permissions on multiple clusters to prevent cluster misuse from causing interference between clusters. By default, a regular user has no permission to use any cluster and must be granted it explicitly. This design has created a significant barrier for new users, many of whom run into problems with their first queries, and it also adds overhead for users who only need a single cluster. ApsaraDB for SelectDB is currently rethinking the cluster permission design so that, by default, users have permission to use all clusters, while advanced permission control for multiple clusters becomes an optional feature that users can enable as needed.
