In Part 1 of this article, we covered the concept and implementation of sharding in NewSQL. In Part 2, we discuss distributed transactions and database governance in more detail.

As mentioned in the previous article, database transactions must meet the Atomicity, Consistency, Isolation, and Durability (ACID) standard.

On a single data node, transactions that access only a single database resource are called local transactions. However, in an SOA-based distributed application environment, more and more applications require a single transaction to access multiple database resources and services. Distributed transactions emerged to meet this requirement.

Although relational databases provide solid native ACID support for local transactions, that support comes at a cost to system performance in distributed scenarios. The central question for distributed transactions is how to either enable databases to meet the ACID standard in distributed scenarios or find an alternative solution.

The earliest distributed transaction model was X/Open Distributed Transaction Processing (DTP), or the XA protocol for short.

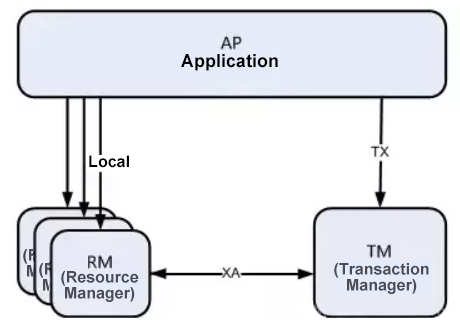

In the DTP model, a global transaction manager (TM) is used to interact with multiple resource managers (RMs). The global TM manages the global transaction status and the resources involved in the transactions. The RM is responsible for specific resource operations. The following shows the relationship between the DTP model and the application:

DTP model

The XA protocol uses two-phase commit to ensure the atomicity of distributed transactions. The commit process is divided into the prepare phase and the commit phase.

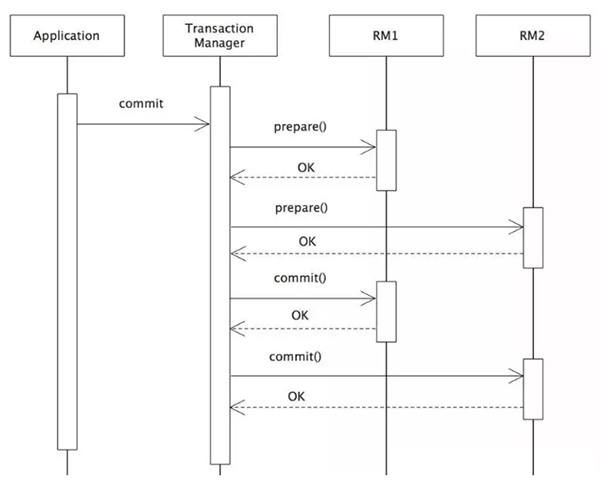

The following figure shows the transaction process of the XA protocol:

XA transaction process

Two-phase commit is the standard implementation of the XA protocol. It divides the commit process of a distributed transaction into two phases: prepare and commit/rollback.

After an XA global transaction starts, every transaction branch locks its resources according to the local default isolation level and records undo and redo logs. The TM then initiates a prepare vote, asking each transaction branch whether the transaction can be committed. If all branches reply "yes", the TM initiates the commit. If any branch replies "no", the TM initiates a rollback. If all replies in the prepare phase are "yes" but an exception (such as system downtime) occurs during the commit, then after the node service restarts, the transaction is committed again based on XA_recover to ensure data consistency.
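The voting logic described above can be sketched in a few lines. This is a minimal in-memory illustration, not an XA implementation: the `Branch` class, its `can_commit` flag, and the `two_phase_commit` function are hypothetical names introduced here, and real resource managers would lock resources and persist logs where the comments indicate.

```python
from enum import Enum


class Vote(Enum):
    YES = "yes"
    NO = "no"


class Branch:
    """Hypothetical stand-in for one transaction branch on a resource manager."""

    def __init__(self, name, can_commit=True):
        self.name = name
        self.can_commit = can_commit
        self.state = "active"

    def prepare(self):
        # A real RM would lock resources and write undo/redo logs here.
        if self.can_commit:
            self.state = "prepared"
            return Vote.YES
        return Vote.NO

    def commit(self):
        self.state = "committed"

    def rollback(self):
        self.state = "rolled_back"


def two_phase_commit(branches):
    """Return True if the global transaction commits, False if it rolls back."""
    # Phase 1 (prepare): collect a vote from every branch.
    votes = [branch.prepare() for branch in branches]
    if all(vote is Vote.YES for vote in votes):
        # Phase 2, unanimous "yes": commit every branch.
        for branch in branches:
            branch.commit()
        return True
    # Phase 2, at least one "no": roll back every branch.
    for branch in branches:
        branch.rollback()
    return False
```

The sketch leaves out the crash-recovery path, which is where a real TM needs a durable record of the decision so that prepared branches can be resolved after a restart.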

Distributed transactions implemented on the XA protocol are not intrusive for the business. Their greatest advantage is transparency to users, who can use XA-based distributed transactions the same way they use local transactions. The XA protocol can rigidly implement the ACID standard of transactions.

However, the rigid implementation of the ACID standard of transactions is a double-edged sword.

During transaction execution, all the required resources must be locked. Therefore, the XA protocol is more suitable for short transactions with a fixed execution time. In a long transaction, data must be used exclusively, resulting in a significant decline in the concurrent performance of the business systems that rely on hotspot data. Because of this, in scenarios where high-concurrency performance is the top concern, XA-based distributed transactions are not the best choice.

If a transaction that implements the ACID standard is a rigid transaction, then a BASE-based transaction is a soft transaction. BASE stands for Basically Available, Soft State, and Eventual Consistency.

ACID transactions impose rigid isolation requirements. During transaction execution, all the resources must be locked. The idea of the soft transaction is to shift the mutex operation from the resource level to the business level through the business logic. The soft transaction can improve the system throughput by lowering the strong consistency requirements.

Given that a timeout retry may occur in a distributed system, operations in a soft transaction must be idempotent to prevent the problems caused by multiple requests. Models for implementing soft transactions include Best Efforts Delivery (BED), Saga, and Try-Confirm-Cancel (TCC).
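To illustrate why idempotence matters under retries, here is a minimal sketch that deduplicates requests by ID. The `IdempotentAccount` class and the `request_id` parameter are assumptions made for this example; a real system would persist the set of applied IDs together with the balance.

```python
class IdempotentAccount:
    """Hypothetical account whose credit operation is safe to retry."""

    def __init__(self, balance=0):
        self.balance = balance
        self._applied = set()  # request IDs that have already taken effect

    def credit(self, request_id, amount):
        """Apply the credit once per request_id; repeated calls are no-ops."""
        if request_id in self._applied:
            return False
        self.balance += amount
        self._applied.add(request_id)
        return True
```

If a caller times out and resends the same request, the second call changes nothing, so the balance reflects the credit exactly once.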

BED is the simplest type of soft transaction. It applies to scenarios where database operations are certain to succeed eventually. NewSQL automatically records the failed SQL statements and reruns them until they execute successfully. BED soft transactions do not support rollback.

BED soft transactions are very simple to implement but impose strict requirements on the scenario. On the one hand, BED does not lock resources and incurs minimal performance loss. On the other hand, a transaction cannot be rolled back when repeated commit attempts fail. BED is therefore applicable only to business scenarios where transactions are certain to succeed eventually. In short, BED improves performance at the cost of the transaction rollback function.
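The retry behavior of BED can be sketched as follows. This is an illustration under stated assumptions, not the mechanism of any particular product: the function name `best_efforts_delivery`, the bounded `max_attempts`, and the `failed_log` list are all hypothetical, and the text above describes retrying until success rather than giving up after a fixed number of attempts.

```python
def best_efforts_delivery(operation, max_attempts=3, failed_log=None):
    """Run `operation` until it succeeds or the attempts run out.

    Returns True on success. When every attempt fails, the operation is
    appended to `failed_log` (if given) so it can be rerun later.
    Nothing is rolled back in either case.
    """
    for _ in range(max_attempts):
        try:
            operation()
            return True
        except Exception:
            # Treat every failure as transient and simply try again.
            continue
    if failed_log is not None:
        failed_log.append(operation)
    return False
```

Because the operation may run several times, it has to be idempotent, which matches the requirement stated earlier for all soft transactions.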

Saga was derived from a paper published in 1987 by Hector Garcia-Molina and Kenneth Salem.

Saga transactions are better suited to scenarios that involve long-running transactions. The saga model splits a distributed transaction into multiple local transactions, each of which has its own transaction module and compensation module. When any of these local transactions fails, the corresponding compensation method is called to undo the work already done, ensuring the eventual consistency of the transactions.

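Assume that the saga transaction branches (T1, T2, ..., Tn) have corresponding compensations (C1, C2, ..., Cn-1). Then, the saga system ensures that one of two sequences is executed: either T1, T2, ..., Tn, which is the best case, or T1, T2, ..., Tj, Cj, ..., C2, C1 for some j < n, in which every completed branch is compensated after a failure.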

The saga model supports both forward recovery and backward recovery. Forward recovery retries the transaction branch that has just failed. It can be used only on the precondition that every transaction branch will eventually succeed. In contrast, backward recovery compensates all the completed transaction branches when any transaction branch fails.

Forward recovery needs no compensation transactions. If the transaction branches in the business will eventually succeed, forward recovery lowers the complexity of the saga model. Forward recovery is also a good choice when compensation transactions are difficult to implement.
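Backward recovery as described above can be sketched with a list of (action, compensation) pairs. The `run_saga` function is a hypothetical name for this illustration; it keeps everything in memory and assumes that compensations themselves do not fail.

```python
def run_saga(steps):
    """Execute (action, compensation) pairs with backward recovery.

    Returns True if every action succeeds. If an action raises, the
    compensations of the steps completed so far run in reverse order
    and the function returns False.
    """
    completed = []  # compensations for the steps that have succeeded
    for action, compensation in steps:
        try:
            action()
        except Exception:
            for undo in reversed(completed):
                undo()
            return False
        completed.append(compensation)
    return True
```

With three steps where the third fails, the executed sequence is T1, T2, C2, C1. The compensation of the failed step is not called, because that step never completed.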

In theory, compensation transactions never fail. However, in a distributed world, servers may crash, networks may fail, and IDCs may experience power failures. Therefore, a fallback mechanism, such as manual intervention, is needed for the case where a compensation cannot complete.

The saga model removes the prepare phase that exists in the XA protocol, so transactions are not isolated from one another. For this reason, when two saga transactions use the same resource simultaneously, problems such as lost updates and dirty reads may occur. An application that uses saga transactions must therefore add resource-locking logic at the application level.

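TCC implements distributed transactions by breaking down the business logic. As the name implies, the TCC transaction model requires the business system to provide three sections of business logic: Try, which checks the business and reserves the required resources; Confirm, which executes the business using only the reserved resources; and Cancel, which releases the reserved resources.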

The TCC model provides only a two-phase atomic commit protocol to ensure the atomicity of distributed transactions. The isolation of transactions is implemented by the business logic. In the TCC model, isolation is achieved by transforming the business so that locks shift from the database resource level to the business level. This releases underlying database resources, lowers the requirements of the distributed transaction lock protocol, and improves system concurrency.

Although the TCC model is well suited to implementing soft transactions, the application must provide three interfaces that the TM can call to perform the Try, Confirm, and Cancel operations. Therefore, the business transformation is relatively costly.

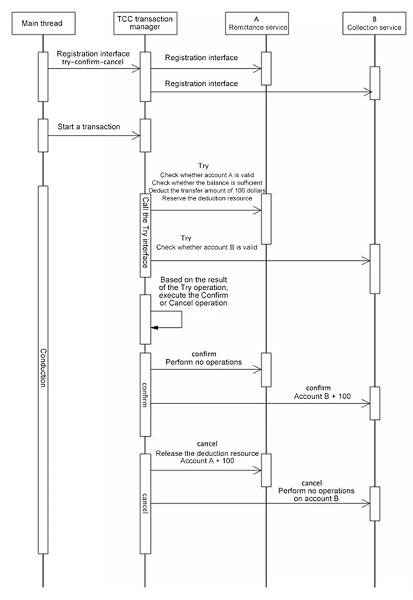

Assume that account A transfers 100 dollars to account B. The following figure shows the transformation of the business to support TCC:

TCC process

The Try, Confirm, and Cancel interfaces must be implemented separately for the remittance and collection services. They must also be injected into the TCC TM in the service initialization phase.

Remittance Service

Try

Confirm

Cancel

Collection Service

Try

Confirm

Cancel

The preceding description indicates that the TCC model is intrusive for the business and the transformation difficulty is high.
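As a rough illustration of the transfer example, the sketch below gives each service its own Try, Confirm, and Cancel methods. Everything here is an assumption made for the example: the `Account` fields `frozen` and `pending`, the method name `try_` (because `try` is a Python keyword), and the `transfer` coordinator, which stands in for the TCC TM. The sketch also omits the idempotence checks that real Confirm and Cancel operations need.

```python
class Account:
    """Hypothetical account with reserved amounts for in-flight transfers."""

    def __init__(self, balance):
        self.balance = balance
        self.frozen = 0   # reserved for an outgoing transfer
        self.pending = 0  # expected from an incoming transfer


class RemittanceService:
    def __init__(self, account):
        self.account = account

    def try_(self, amount):
        # Check the balance and reserve the money without sending it yet.
        if self.account.balance < amount:
            return False
        self.account.balance -= amount
        self.account.frozen += amount
        return True

    def confirm(self, amount):
        # The reserved money leaves the account for good.
        self.account.frozen -= amount

    def cancel(self, amount):
        # Release the reservation back into the balance.
        self.account.frozen -= amount
        self.account.balance += amount


class CollectionService:
    def __init__(self, account):
        self.account = account

    def try_(self, amount):
        # Record the expected credit without making it spendable.
        self.account.pending += amount
        return True

    def confirm(self, amount):
        self.account.pending -= amount
        self.account.balance += amount

    def cancel(self, amount):
        self.account.pending -= amount


def transfer(participants, amount):
    """Try every participant, then confirm all or cancel those that were tried."""
    tried = []
    for participant in participants:
        if not participant.try_(amount):
            for done in tried:
                done.cancel(amount)
            return False
        tried.append(participant)
    for participant in participants:
        participant.confirm(amount)
    return True
```

Between Try and Confirm, the 100 dollars is neither in A's spendable balance nor in B's, which is how the business-level reservation replaces a database lock.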

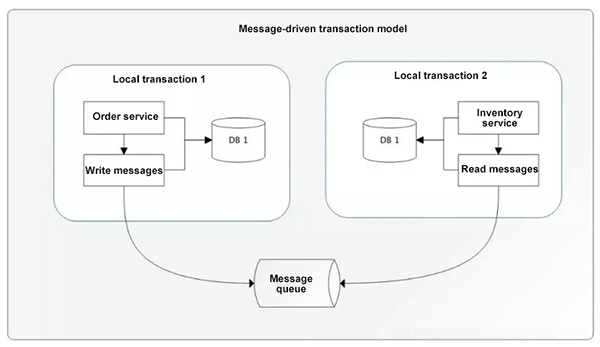

The message consistency scheme uses message middleware to keep the data operations of upstream and downstream applications consistent. The basic idea is to place the local operation and the sending of the message into one local transaction. Downstream applications then subscribe to messages from the messaging system and perform the corresponding operation after receiving a message. This essentially relies on the message retry mechanism to achieve eventual consistency. The following figure shows the message-driven transaction model:

Message-driven transaction model

The disadvantages of this model are its high degree of coupling and the need to introduce message middleware into the business system, which increases the complexity of the system.
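One common way to put the local operation and the outgoing message into a single local transaction is a local message table, sometimes called an outbox. The sketch below uses Python's built-in `sqlite3` module as a stand-in database. The table names `orders` and `outbox`, the helper names, and the `publish` callback are hypothetical, and this may not be the exact mechanism the model above has in mind.

```python
import sqlite3


def make_db():
    """Create an in-memory database with a business table and an outbox table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount INTEGER)")
    conn.execute("CREATE TABLE outbox (order_id INTEGER, payload TEXT, sent INTEGER)")
    return conn


def place_order(conn, order_id, amount):
    """Write the business row and the outgoing message in one local transaction."""
    with conn:  # commits on success, rolls back if an exception escapes
        conn.execute(
            "INSERT INTO orders (id, amount) VALUES (?, ?)", (order_id, amount)
        )
        conn.execute(
            "INSERT INTO outbox (order_id, payload, sent) VALUES (?, ?, 0)",
            (order_id, f"order_created:{order_id}"),
        )


def relay_outbox(conn, publish):
    """Publish unsent messages; mark a message sent only after publish succeeds."""
    rows = conn.execute(
        "SELECT rowid, payload FROM outbox WHERE sent = 0"
    ).fetchall()
    for rowid, payload in rows:
        try:
            publish(payload)
        except Exception:
            continue  # stays unsent, so the next relay run retries it
        with conn:
            conn.execute("UPDATE outbox SET sent = 1 WHERE rowid = ?", (rowid,))
```

If the process crashes after publishing but before marking the row as sent, the message is published again on the next run, which is why downstream consumers must handle duplicates idempotently.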

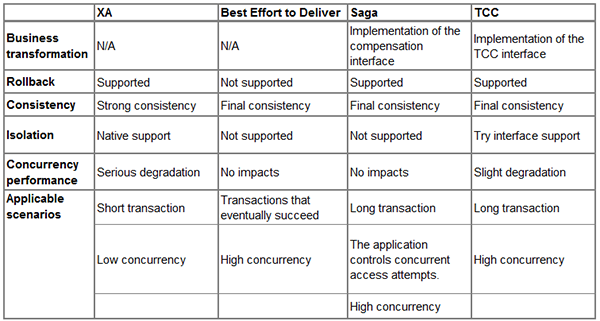

In general, neither ACID-based strong-consistency transactions nor BASE-based eventual-consistency transactions are "silver bullets". Each is most useful only in the scenarios that suit it. The following table compares these models to help developers choose the most suitable one. Because message-driven transactions are tightly coupled with business systems, this model is excluded from the table.

Comparison of transaction models

The pursuit of strong consistency will not necessarily lead to the most rational solution. For distributed systems, we recommend that you use the "soft outside and hard inside" design scheme. "Soft outside" means using soft transactions across data shards to ensure the eventual consistency of data in exchange for optimal performance. "Hard inside" means using local transactions within the same data shard to achieve the ACID standard.

As described in the previous article, service governance is also applicable to the basic governance of the database. Basic governance includes the configuration center, registry, rate limiter, circuit breaker, failover, and tracker.

The major difference between database governance and service governance is that the database is stateful and each data node has its own persistent data. This makes it difficult to achieve auto scaling as a service does.

When the access traffic and data volume of the system exceed the previously evaluated expectations, the database needs to be resharded in most cases. With policies such as date-based sharding, you can expand the capacity directly without migrating legacy data. However, in most scenarios, legacy data in a database cannot be directly mapped to a new sharding policy. To modify the sharding policy, you must migrate the data.

For traditional systems, the most feasible scheme is to stop services, migrate the data, and then restart the services. However, adopting this scheme imposes extremely high data migration costs on the business side, and engineers of the business side must accurately estimate the amount of data.

In Internet scenarios, the system availability requirement is demanding, and explosive traffic growth is more likely than in traditional industries. In the cloud-native service architecture, auto scaling is a common requirement and can be easily implemented for services. This makes auto scaling of data, equivalent to that of services, an important capability for cloud-native databases.

In addition to system pre-sharding, another implementation of auto scaling is online data migration. Online data migration is often described as "changing the engines of an airplane in mid-flight". Its biggest challenge is ensuring that the migration process does not affect services. Online data migration can be performed after the sharding policy of the database is modified. One example is changing from a policy that splits the database into 4 smaller databases based on the result of ID mod 4 to a policy that splits it into 16 smaller databases based on the result of ID mod 16. Meanwhile, a series of system operations can be performed to ensure that the data is correctly migrated to the new data nodes and that the database-dependent services are completely unaware of the migration process.
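The mod 4 to mod 16 example can be worked through with a small sketch that shows where each record lands under the new policy. The function names `shard_of` and `migration_plan` are hypothetical and the record IDs are plain integers; the sketch only computes the mapping and does not move any data.

```python
def shard_of(record_id, shard_count):
    """Return the shard index for a record under modulo sharding."""
    return record_id % shard_count


def migration_plan(record_ids, old_count=4, new_count=16):
    """Group record IDs by their (old_shard, new_shard) pair."""
    plan = {}
    for record_id in record_ids:
        key = (shard_of(record_id, old_count), shard_of(record_id, new_count))
        plan.setdefault(key, []).append(record_id)
    return plan
```

Because 16 is a multiple of 4, every old shard splits into exactly four new shards: records in old shard 1 go to new shards 1, 5, 9, and 13, and never to any other. When the new shard count is not a multiple of the old one, records from one old shard can scatter across many more targets.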

Online data migration can be implemented in four steps:

Online data migration can be used not only to expand the data capacity but also to support online DDL operations. Database-native DDL operations do not support transactions, and tables are locked for a long time when you perform DDL operations on large tables. Online data migration avoids both problems. An online DDL operation follows the same process as data migration: create an empty table with the modified DDL and then complete the preceding four steps.

