The development and transformation of database technology is on the rise. NewSQL has emerged to combine various technologies, and the core functions implemented by the combination of these technologies have promoted the development of the cloud-native database.

This article provides an in-depth look at cloud-native database technology. Among the three types of NewSQL, the new architecture and Database-as-a-Service types involve many underlying implementations related to the database, and thus will not be elaborated here. This article focuses on the core functions and implementation principles of transparent sharding middleware. The core functions of the other two NewSQL types are similar to those of sharding middleware but have different implementation principles.

Regarding performance and availability, traditional solutions that store data on a single data node in a centralized manner can no longer adapt to the massive data scenarios created by the Internet. Most relational database products use B+ tree indexes. When the data volume exceeds a threshold, the increase in index depth leads to more disk I/O operations per lookup, substantially degrading query performance. In addition, highly concurrent access requests turn the centralized database into the biggest bottleneck of the system.

Since traditional relational databases cannot meet the requirements of the Internet, increasing numbers of attempts have been made to store data in NoSQL databases that natively support data distribution. However, NoSQL is not compatible with SQL and its ecosystem is yet to be improved. Therefore, NoSQL cannot replace relational databases, and the position of relational databases remains secure.

Sharding refers to the distribution of the data stored in a single database to multiple databases or tables based on a certain dimension to improve the overall performance and availability. Effective sharding measures include database sharding and table sharding of relational databases. Both sharding methods can effectively prevent query bottlenecks caused by a huge data volume that exceeds the threshold.

In addition, database sharding can effectively distribute the access requests that would otherwise hit a single database, while table sharding can convert distributed transactions into local transactions whenever possible. The multi-master-and-multi-slave sharding method can effectively prevent data from being concentrated on a single node and enhance the availability of the data architecture.

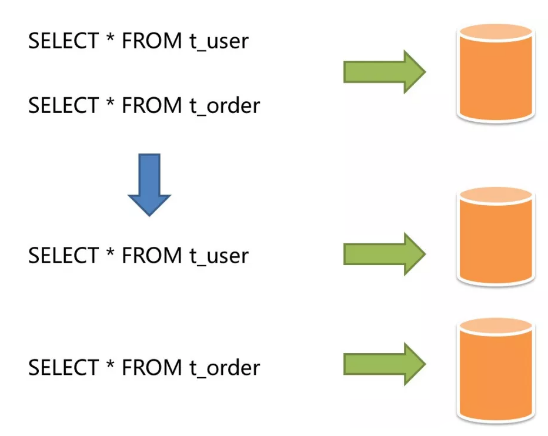

Vertical sharding is also known as vertical partitioning. Its key idea is the use of different databases for different purposes. Before sharding is performed, a database can consist of multiple data tables that correspond to different businesses. After sharding is performed, the tables are organized according to business and distributed to different databases, balancing the workload among different databases, as shown below:

Vertical sharding

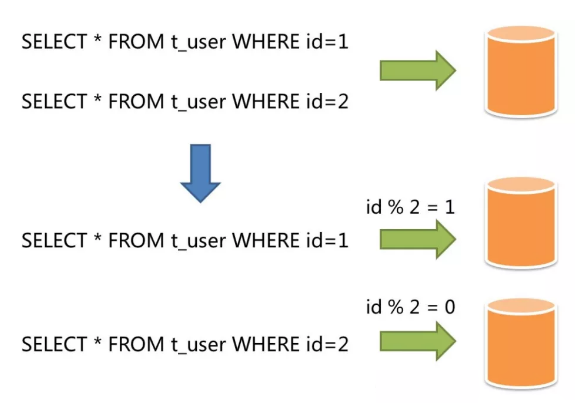

Horizontal sharding is also known as horizontal partitioning. In contrast to vertical sharding, horizontal sharding does not organize data by business logic. Instead, it distributes data to multiple databases or tables according to a rule of a specific field, and each shard contains only part of the data.

For example, if an ID mod 10 is 0 (that is, the last digit of the ID is 0), the record is stored in database (table) 0; if an ID mod 10 is 1, the record is stored in database (table) 1, as shown below:

Horizontal sharding
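The mod-10 rule described above can be sketched in a few lines of Python. This is only an illustration: the function names and the `t_order` table name are hypothetical and do not come from any particular middleware.

```python
def shard_for(record_id: int, shard_count: int = 10) -> int:
    """Return the index of the database (or table) shard that stores record_id."""
    if shard_count <= 0:
        raise ValueError("shard_count must be positive")
    return record_id % shard_count


def actual_table(logical_table: str, record_id: int, shard_count: int = 10) -> str:
    """Map a logical table name to the physical table shard for this ID."""
    return f"{logical_table}_{shard_for(record_id, shard_count)}"


# An ID ending in 0 lands in shard 0, an ID ending in 1 lands in shard 1.
print(shard_for(120), shard_for(31), actual_table("t_order", 57))
```

Each shard then holds only the rows whose ID maps to its index, which is what keeps the per-table data volume bounded.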

Sharding is an effective solution to the performance problem of relational databases caused by massive data.

In this solution, data on a single node is split and stored into multiple databases or tables, that is, the data is sharded. Database sharding can effectively disperse the load on databases caused by highly concurrent access attempts. Although table sharding cannot mitigate the load of databases, you can still use database-native ACID transactions for the updates across table shards. Once cross-database updates are involved, the problem of distributed transactions becomes extremely complicated.

Database sharding and table sharding ensure that the data volume of each table is always below the threshold. Vertical sharding usually requires adjustments to the architecture and design, and for this reason fails to keep up with the rapidly changing business requirements on the Internet. Therefore, it cannot effectively remove the single-point bottleneck. Horizontal sharding theoretically removes the bottleneck in the data processing of a single host and supports flexible scaling, making it the standard sharding solution.

Database sharding and read/write separation are the two common measures for heavy access traffic. Although table sharding can resolve the performance problems caused by massive data, it cannot resolve the problem of slow responsiveness caused by excessive requests to the same database. For this reason, database sharding is often implemented in horizontal sharding to handle the huge data volume and heavy access traffic. Read/write separation is another way to distribute traffic. However, you must consider the latency between data reading and data writing when designing the architecture.

Although database sharding can resolve these problems, the distributed architecture introduces new problems. Because the data is widely dispersed after database sharding or table sharding, application development and O&M personnel have to face extremely heavy workloads when performing operations on the database. For example, they need to know the specific table shard and the home database for each kind of data.

NewSQL with a brand new architecture resolves this problem in a way that is different from that of the sharding middleware:

Cross-database transactions present a big challenge to distributed databases. With appropriate table sharding, you can reduce the amount of data stored in each table and use local transactions whenever possible. Proper use of different tables in the same database can effectively help to avoid the problem caused by distributed transactions. However, in scenarios where cross-database transactions are inevitable, some businesses still require the transactions to be consistent. On the other hand, Internet companies turned their back on XA-based distributed transactions due to their poor performance. Instead, most of these companies use soft transactions that ensure eventual consistency.

Database throughput becomes a major bottleneck as system access traffic increases. For applications with a large number of concurrent reads and few writes, you can split a single database into primary and secondary databases. The primary database handles transactional insert, delete, and update operations, while the secondary database handles queries. This effectively prevents the row locking problem caused by data updates and dramatically improves the query performance of the entire system.

If you configure one primary database and multiple secondary databases, query requests can be evenly distributed to multiple data copies, further enhancing the system's processing capability.

If you configure multiple primary databases and multiple secondary databases, both the throughput and availability of the system can be improved. In this configuration, the system can still run normally when one of these databases is down or a disk is physically damaged.

Read/write separation is essentially a type of sharding. In horizontal sharding, data is dispersed to different data nodes. In read/write separation, however, write and read requests are routed to the primary and secondary databases, respectively, based on the results of SQL syntax analysis. Notably, the data on different data nodes is identical in read/write separation but different in horizontal sharding. By using horizontal sharding in conjunction with read/write separation, you can further improve system performance, but system maintenance becomes more complicated.
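As a rough sketch of this routing decision, the following Python class sends plain reads to the secondaries in round-robin order and everything else to the primary. It is a simplification under stated assumptions: the class name and data source names are hypothetical, and a check on the first keyword stands in for the full SQL syntax analysis that a real middleware performs.

```python
import itertools


class ReadWriteRouter:
    """Pick a data source name for each SQL statement."""

    def __init__(self, primary, secondaries):
        self.primary = primary
        # Round-robin over the secondaries spreads reads evenly across copies.
        self._replicas = itertools.cycle(secondaries) if secondaries else None

    def route(self, sql, in_transaction=False):
        words = sql.split(None, 1)
        first_word = words[0].upper() if words else ""
        # SELECT ... FOR UPDATE takes locks, so treat it as a write.
        is_read = first_word == "SELECT" and "FOR UPDATE" not in sql.upper()
        if is_read and not in_transaction and self._replicas is not None:
            return next(self._replicas)
        return self.primary


router = ReadWriteRouter("primary", ["replica_0", "replica_1"])
print(router.route("SELECT * FROM t_user"))        # a secondary
print(router.route("UPDATE t_user SET level = 6")) # the primary
```

Routing reads inside a transaction to the primary is one common way to avoid reading stale data from a lagging secondary; it is a design choice of this sketch rather than something the article prescribes.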

Although read/write separation can improve the throughput and availability of the system, it also results in data inconsistency, both between multiple primary databases and between the primary and secondary databases. Moreover, similar to sharding, read/write separation also increases database O&M complexity for the application development and O&M personnel.

The key goal of read/write separation middleware is to make these impacts transparent to users, allowing them to use the primary and secondary databases as if they were a single ordinary database.

Sharding consists of the following processes: statement parsing, statement routing, statement modification, statement execution, and result aggregation. Database protocol adaptation is essential to ensure low-cost access by original applications.

In addition to SQL, NewSQL is compatible with the protocols of traditional relational databases, reducing access costs for users. NewSQL products act as native relational databases by implementing the protocols of open source relational database products.

Due to the popularity of MySQL and PostgreSQL, many NewSQL databases implement the transport protocols for MySQL and PostgreSQL, allowing MySQL and PostgreSQL users to access NewSQL products without modifying their business code.

MySQL Protocol

Currently, MySQL is the most popular open source database product. To learn about its protocol, you can start with the basic data types, protocol packet structures, connection phase, and command phase of MySQL.

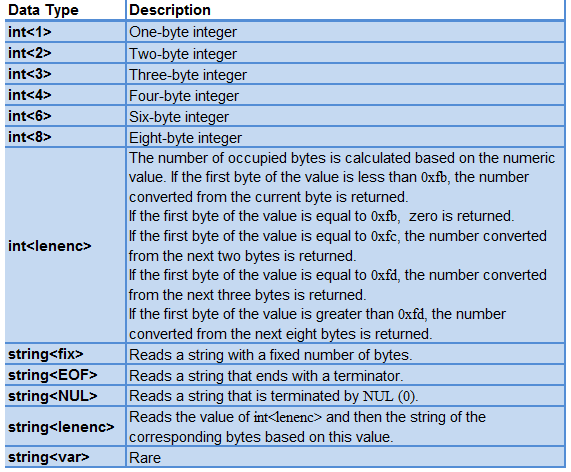

Basic data types

A MySQL packet consists of the following basic data types defined by MySQL:

Basic MySQL data types

When binary data needs to be converted to the data that can be understood by MySQL, the MySQL packet is read based on the number of digits pre-defined by the data type and converted to the corresponding number or string. In turn, MySQL writes each field to the packet according to the length specified in the protocol.
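To make the idea of reading fields by their pre-defined width concrete, here is a minimal Python sketch of three of the basic types: fixed-length integers (little-endian), length-encoded integers, and null-terminated strings. The function names are illustrative only; the byte layouts follow the public MySQL protocol documentation.

```python
def read_fixed_int(buf: bytes, pos: int, size: int):
    """Read an int<size> (little-endian) and return (value, next_position)."""
    return int.from_bytes(buf[pos:pos + size], "little"), pos + size


def read_lenenc_int(buf: bytes, pos: int):
    """Read a length-encoded integer and return (value, next_position)."""
    first = buf[pos]
    if first < 0xFB:                 # values below 251 fit in the first byte
        return first, pos + 1
    if first == 0xFC:                # followed by a 2-byte integer
        return read_fixed_int(buf, pos + 1, 2)
    if first == 0xFD:                # followed by a 3-byte integer
        return read_fixed_int(buf, pos + 1, 3)
    if first == 0xFE:                # followed by an 8-byte integer
        return read_fixed_int(buf, pos + 1, 8)
    raise ValueError(f"0x{first:02x} does not start a length-encoded integer")


def read_null_terminated_string(buf: bytes, pos: int):
    """Read a string<NUL> and return (text, next_position)."""
    end = buf.index(b"\x00", pos)
    return buf[pos:end].decode("utf-8"), end + 1


print(read_lenenc_int(b"\xfc\x10\x27", 0))
print(read_null_terminated_string(b"root\x00", 0))
```

Writing is the mirror image: each field is serialized with the width that the protocol specifies for its type.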

Structure of a MySQL Packet

The MySQL protocol consists of one or more MySQL packets. Regardless of the type, a MySQL packet consists of the payload length, sequence ID, and payload.
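As a small illustration of this layout, the sketch below frames and unframes a single packet in Python. In the MySQL protocol the payload length occupies 3 bytes and the sequence ID 1 byte, both little-endian; the function names are hypothetical, and splitting of payloads that reach the 3-byte limit is deliberately left out.

```python
def encode_packet(sequence_id: int, payload: bytes) -> bytes:
    """Prefix a payload with the 3-byte length and 1-byte sequence ID."""
    if len(payload) >= 0xFFFFFF:
        # Larger payloads must be split across several packets (not shown).
        raise ValueError("payload too large for a single packet in this sketch")
    header = len(payload).to_bytes(3, "little") + bytes([sequence_id & 0xFF])
    return header + payload


def decode_packet(data: bytes):
    """Return (sequence_id, payload) for one complete packet."""
    length = int.from_bytes(data[0:3], "little")
    sequence_id = data[3]
    payload = data[4:4 + length]
    if len(payload) != length:
        raise ValueError("incomplete packet")
    return sequence_id, payload


print(decode_packet(encode_packet(5, b"abc")))
```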

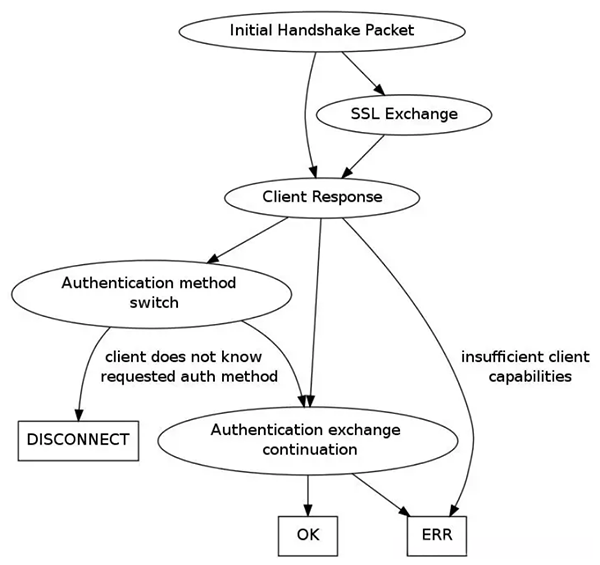

Connection Phase

In the connection phase, a communication channel is established between the MySQL client and server. Then, three tasks are completed in this phase: exchanging the capabilities of the MySQL client and server (Capability Negotiation), setting up an SSL communication channel, and authenticating the client against the server. The following figure shows the connection setup flow from the MySQL server perspective:

Flowchart of the MySQL connection phase

The figure excludes the interaction between the MySQL server and client. In fact, a MySQL connection is initiated by the client. When the MySQL server receives a connection request from the client, it exchanges the capabilities of the server and client, generates the initial handshake packet in different formats based on the negotiation result, and writes the packet to the client. The packet contains the connection ID, the server's capabilities, and random data generated for authentication.

After receiving the handshake packet from the server, the MySQL client sends a handshake packet response. This packet contains the user name and encrypted password for accessing the database.

After receiving the handshake response, the MySQL server verifies the authentication information and returns the verification result to the client.
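The article does not say which authentication method is used. Assuming the `mysql_native_password` plugin (other plugins, such as `caching_sha2_password`, work differently), the "encrypted password" in the handshake response is computed from the random data in the server's handshake packet, and the server can verify it without ever seeing the plain password. The function names below are hypothetical.

```python
import hashlib


def stored_hash(password: str) -> bytes:
    """What the server keeps for the account: SHA1(SHA1(password))."""
    return hashlib.sha1(hashlib.sha1(password.encode("utf-8")).digest()).digest()


def native_password_token(password: str, nonce: bytes) -> bytes:
    """Client side: SHA1(password) XOR SHA1(nonce + SHA1(SHA1(password)))."""
    if not password:
        return b""  # an empty password is sent as an empty token
    stage1 = hashlib.sha1(password.encode("utf-8")).digest()
    mask = hashlib.sha1(nonce + hashlib.sha1(stage1).digest()).digest()
    return bytes(a ^ b for a, b in zip(stage1, mask))


def server_verify(token: bytes, nonce: bytes, stored: bytes) -> bool:
    """Server side: undo the XOR, hash once more, compare with the stored hash."""
    mask = hashlib.sha1(nonce + stored).digest()
    stage1 = bytes(a ^ b for a, b in zip(token, mask))
    return hashlib.sha1(stage1).digest() == stored


nonce = bytes(range(20))  # the server sends 20 random bytes; fixed here for the demo
token = native_password_token("secret", nonce)
print(server_verify(token, nonce, stored_hash("secret")))
```

A middleware that speaks the MySQL protocol has to play the server role in this exchange toward the application and the client role toward the backend databases.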

Command Phase

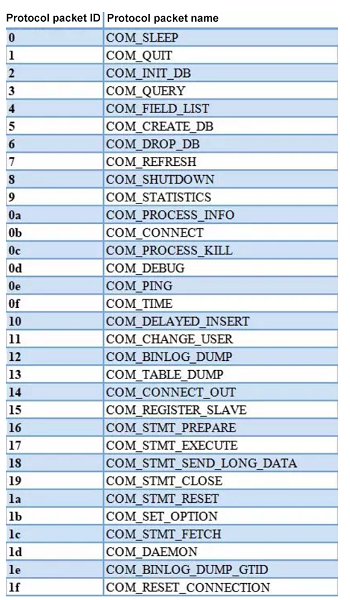

The command phase comes after the successful connection phase. In this phase, commands are executed. MySQL has a total of 32 command packets, whose specific types are listed below:

MySQL command packets

MySQL command packets are classified into four types: text protocol, binary protocol, stored procedure, and replication protocol.

The first byte of the payload is used to identify the command type. The functions of packets are indicated by their names. The following describes some important MySQL command packets:

COM_QUERY

COM_QUERY is an important command that MySQL uses for queries in plain text format. It corresponds to java.sql.Statement in JDBC. COM_QUERY itself is simple and consists of an ID and SQL statement:

1 [03] COM_QUERY

string[EOF] is the query the server will execute
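Put together with the packet header, a COM_QUERY request can be built as in the following Python sketch. The function name is hypothetical; the sequence ID is 0 because it restarts at the beginning of every command.

```python
COM_QUERY = 0x03


def build_com_query(sql: str) -> bytes:
    """Return a complete packet: 3-byte length, sequence ID 0, 0x03, SQL text."""
    payload = bytes([COM_QUERY]) + sql.encode("utf-8")
    return len(payload).to_bytes(3, "little") + b"\x00" + payload


print(build_com_query("SELECT 1").hex())
```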

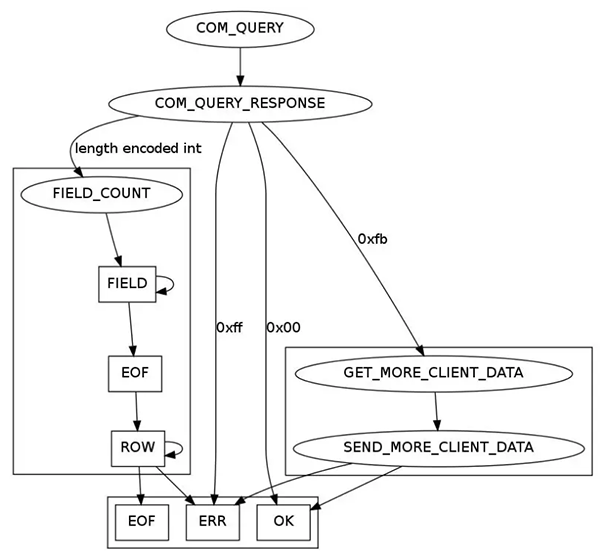

The COM_QUERY response packet is complex, as shown below:

MySQL COM_QUERY flowchart

Depending on the scenario, four types of COM_QUERY responses may be returned. These are query result, update result, file execution result, and error.

If an error, such as network disconnection or incorrect SQL syntax, occurs during execution, the MySQL protocol sets the first byte of the packet to 0xff, encapsulates the error message into an ErrPacket, and returns it.

Given that it is rare that files are used to execute COM_QUERY, this case is not elaborated here.

For an update request, the MySQL protocol sets the first byte of the packet to 0x00 and returns an OkPacket. The OkPacket must contain the number of rows affected by this update operation and the last inserted ID.
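A client or middleware can tell the response types apart by looking at the first byte of the first response payload. The following Python sketch shows this, together with reading the two OkPacket fields mentioned above. The function names are hypothetical; 0xfb marks the file (LOCAL INFILE) case, and any other value starts a query result.

```python
def read_lenenc_int(buf: bytes, pos: int):
    """Read a length-encoded integer and return (value, next_position)."""
    first = buf[pos]
    if first < 0xFB:
        return first, pos + 1
    size = {0xFC: 2, 0xFD: 3, 0xFE: 8}[first]
    return int.from_bytes(buf[pos + 1:pos + 1 + size], "little"), pos + 1 + size


def classify_com_query_response(payload: bytes) -> str:
    """Classify the first response payload of a COM_QUERY by its first byte."""
    if not payload:
        raise ValueError("empty payload")
    first = payload[0]
    if first == 0xFF:
        return "error"
    if first == 0x00:
        return "ok"
    if first == 0xFB:
        return "local_infile"
    return "result_set"  # the payload carries the column count


def parse_ok_packet(payload: bytes):
    """Return (affected_rows, last_insert_id) from an OkPacket payload."""
    if payload[0] != 0x00:
        raise ValueError("not an OkPacket")
    affected_rows, pos = read_lenenc_int(payload, 1)
    last_insert_id, _ = read_lenenc_int(payload, pos)
    return affected_rows, last_insert_id


print(parse_ok_packet(b"\x00\x03\x2a\x02\x00\x00\x00"))
```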

Query requests are the most complex. The server first returns a FIELD_COUNT packet that carries the number of result set columns, which the client reads as an int<lenenc> (a length-encoded integer). Then, one COLUMN_DEFINITION packet is generated for each column of the result set, in order. The column metadata ends with an EofPacket. Next, Text Protocol Resultset Row packets are generated row by row, with every value converted to string format regardless of its data type. Finally, the result set also ends with an EofPacket.
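To illustrate the text row format, the sketch below decodes the payload of one Text Protocol Resultset Row in Python. Every column value is a length-encoded string, and a single 0xfb byte stands for NULL. The function names are hypothetical and the decoder assumes UTF-8 text.

```python
def read_lenenc_int(buf: bytes, pos: int):
    """Read a length-encoded integer and return (value, next_position)."""
    first = buf[pos]
    if first < 0xFB:
        return first, pos + 1
    size = {0xFC: 2, 0xFD: 3, 0xFE: 8}[first]
    return int.from_bytes(buf[pos + 1:pos + 1 + size], "little"), pos + 1 + size


def decode_text_row(payload: bytes, column_count: int) -> list:
    """Decode one text-protocol row into a list of strings (None for NULL)."""
    values = []
    pos = 0
    for _ in range(column_count):
        if payload[pos] == 0xFB:        # NULL marker
            values.append(None)
            pos += 1
            continue
        length, pos = read_lenenc_int(payload, pos)
        values.append(payload[pos:pos + length].decode("utf-8"))
        pos += length
    return values


row = b"\x05" + b"alice" + b"\x01" + b"1" + b"\xfb"
print(decode_text_row(row, 3))
```

Note that the number `1` arrives as the string `"1"`: the client driver is responsible for converting it back using the column metadata.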

The java.sql.PreparedStatement operation in JDBC consists of the following five MySQL binary protocol packets: COM_STMT_PREPARE, COM_STMT_EXECUTE, COM_STMT_CLOSE, COM_STMT_RESET, and COM_STMT_SEND_LONG_DATA. Among these packets, COM_STMT_PREPARE and COM_STMT_EXECUTE are the most important. They correspond, respectively, to connection.prepareStatement() and to the execute(), executeQuery(), and executeUpdate() methods of the resulting PreparedStatement in JDBC.

COM_STMT_PREPARE

COM_STMT_PREPARE is similar to COM_QUERY, both of which consist of the command ID and the specific SQL statement:

1 [16] COM_STMT_PREPARE

string[EOF] the query to prepare

The returned value of COM_STMT_PREPARE is not a query result but a response packet that consists of the statement_id, the number of columns, and the number of parameters. Statement_id is the unique identifier that MySQL assigns to an SQL statement after the pre-compilation is completed. Based on the statement_id, you can retrieve the corresponding SQL statement from MySQL.
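The fixed-size part of that response can be unpacked as in the following Python sketch. The layout (status byte, 4-byte statement_id, 2-byte column count, 2-byte parameter count, 1 filler byte, 2-byte warning count) follows the public MySQL protocol documentation; the function name and the returned dictionary are illustrative choices.

```python
import struct


def parse_stmt_prepare_ok(payload: bytes) -> dict:
    """Unpack the first 12 bytes of a COM_STMT_PREPARE response payload."""
    status, statement_id, num_columns, num_params, _filler, warnings = \
        struct.unpack_from("<BIHHBH", payload, 0)
    if status != 0x00:
        raise ValueError("not a successful COM_STMT_PREPARE response")
    return {
        "statement_id": statement_id,
        "num_columns": num_columns,
        "num_params": num_params,
        "warning_count": warnings,
    }


payload = (b"\x00" + (1).to_bytes(4, "little") + (2).to_bytes(2, "little")
           + (1).to_bytes(2, "little") + b"\x00" + b"\x00\x00")
print(parse_stmt_prepare_ok(payload))
```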

For an SQL statement registered by the COM_STMT_PREPARE command, only the statement_id (rather than the SQL statement itself) needs to be sent to the COM_STMT_EXECUTE command, eliminating the unnecessary consumption of the network bandwidth.

Moreover, MySQL can pre-compile the SQL statements passed in by COM_STMT_PREPARE into an abstract syntax tree for reuse, improving SQL execution efficiency. If COM_QUERY is used to execute the SQL statements, MySQL must re-compile each statement every time it is executed. For this reason, PreparedStatement is more efficient than Statement.

COM_STMT_EXECUTE

COM_STMT_EXECUTE consists of the statement_id and the parameters for the SQL statement. It uses a data structure named NULL-bitmap to identify the null values of these parameters.
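The NULL-bitmap holds one bit per parameter, packed into (count + 7 + offset) / 8 bytes with the least significant bit first. The Python sketch below builds such a bitmap; the function name is hypothetical. For COM_STMT_EXECUTE parameters the bit offset is 0, while the same structure in Binary Protocol Resultset Rows uses an offset of 2.

```python
def build_null_bitmap(values, offset: int = 0) -> bytes:
    """Set bit (i + offset) for every value at index i that is None."""
    bitmap = bytearray((len(values) + 7 + offset) // 8)
    for index, value in enumerate(values):
        if value is None:
            bit = index + offset
            bitmap[bit // 8] |= 1 << (bit % 8)
    return bytes(bitmap)


# The second of three parameters is NULL, so only bit 1 is set.
print(build_null_bitmap([1, None, "x"]).hex())
```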

The response packet of the COM_STMT_EXECUTE command is similar to that of the COM_QUERY command. For both response packets, the field metadata and query result set are returned and separated by the EofPacket.

The difference is that Text Protocol Resultset Row is replaced with Binary Protocol Resultset Row in the COM_STMT_EXECUTE response packet. Instead of being converted to strings, the returned values are encoded as the corresponding MySQL basic data types, further reducing the required network transfer bandwidth.

Other Protocols

In addition to MySQL, the protocols of PostgreSQL and SQL Server are also publicly documented and can be implemented in the same way. In contrast, the protocol of another frequently used database, Oracle, is not public and cannot be implemented in the same way.

Although SQL is relatively simple compared to other programming languages, it is still a complete programming language. Therefore, parsing SQL grammar works in essentially the same way as parsing other languages (such as Java, C, and Go).

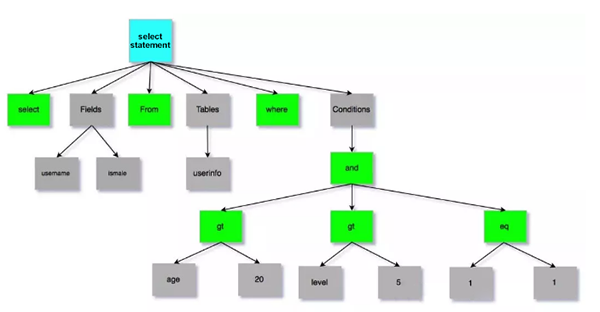

The parsing process is divided into lexical parsing and syntactic parsing. First, the lexical parser splits the SQL statement into words that cannot be further divided. Then, the syntactic parser converts the SQL statement to an abstract syntax tree. Finally, the abstract syntax tree is accessed to extract the parsing context.
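The lexical step can be sketched with a small regular-expression tokenizer in Python. This is a toy that covers only a handful of token kinds and keywords chosen for illustration; real SQL lexers, whether generated or hand-written, handle far more (comments, quoted identifiers, dialect-specific operators, and so on).

```python
import re

TOKEN_PATTERN = re.compile(r"""
    (?P<number>\d+(?:\.\d+)?)
  | (?P<identifier>[A-Za-z_][A-Za-z0-9_]*)
  | (?P<string>'(?:[^']|'')*')
  | (?P<operator><=|>=|<>|!=|[=<>*+\-/])
  | (?P<punctuation>[(),;.])
  | (?P<whitespace>\s+)
""", re.VERBOSE)

KEYWORDS = {"SELECT", "FROM", "WHERE", "AND", "OR", "ORDER", "GROUP", "BY", "LIMIT"}


def tokenize(sql: str) -> list:
    """Split an SQL string into (kind, text) tokens, dropping whitespace."""
    tokens = []
    pos = 0
    while pos < len(sql):
        match = TOKEN_PATTERN.match(sql, pos)
        if match is None:
            raise ValueError(f"unexpected character at position {pos}: {sql[pos]!r}")
        kind, text = match.lastgroup, match.group()
        pos = match.end()
        if kind == "whitespace":
            continue
        if kind == "identifier" and text.upper() in KEYWORDS:
            kind, text = "keyword", text.upper()
        tokens.append((kind, text))
    return tokens


for token in tokenize("select username from userinfo where age > 20"):
    print(token)
```

The syntactic parser then consumes this token stream and builds the tree; that step is omitted here.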

The parsing context includes tables, Select items, Order By items, Group By items, aggregate functions, pagination information, and query conditions. For a NewSQL statement of the sharding middleware type, the placeholders that may be changed are also included.

Take the following SQL statement as an example: select username, ismale from userinfo where age > 20 and level > 5 and 1 = 1. The abstract syntax tree produced by parsing it is as follows:

Abstract syntax tree

Many third-party tools can be used to generate abstract syntax trees, among which ANTLR is a good choice. ANTLR generates Java code for the abstract syntax tree based on the rules defined by developers and provides a visitor interface. Compared with a generated parser, a hand-written parser is more efficient in execution but requires considerably more development work. In scenarios with demanding performance requirements, you can consider writing a custom parser.

The sharding strategy is to match databases and tables according to the parsing context and generate the routing path. SQL routing with sharding keys can be divided into single-shard routing (where the equals sign is used as the sharding operator), multi-shard routing (where IN is used as the sharding operator), and range routing (where BETWEEN is used as the sharding operator). SQL statements without sharding keys adopt broadcast routing.

Normally, sharding policies can be built into the database or configured by users. Built-in sharding policies are relatively simple and can generally be divided into modulo on the trailing digits, hash, range, tag, time, and so on. Sharding policies set by users are more flexible and can be customized according to their needs.
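The four routing cases can be sketched as follows in Python, assuming a simple modulo rule on an integer sharding key. The tuple representation of the sharding condition and the function name are hypothetical stand-ins for the parsing context that a real middleware would pass in.

```python
def route(condition, shard_count: int = 4) -> list:
    """Return the shard indexes a statement must be sent to.

    condition is None (no sharding key), ("=", value),
    ("IN", [values]) or ("BETWEEN", low, high).
    """
    all_shards = list(range(shard_count))
    if condition is None:
        return all_shards                                  # broadcast routing
    operator = condition[0]
    if operator == "=":
        return [condition[1] % shard_count]                # single-shard routing
    if operator == "IN":
        return sorted({value % shard_count for value in condition[1]})
    if operator == "BETWEEN":
        low, high = condition[1], condition[2]
        if high - low + 1 >= shard_count:
            return all_shards                              # range covers every shard
        return sorted({value % shard_count for value in range(low, high + 1)})
    raise ValueError(f"unsupported sharding operator: {operator}")


print(route(("=", 10)), route(("IN", [1, 5, 6])), route(("BETWEEN", 6, 7)), route(None))
```

With a modulo rule, almost any non-trivial range touches every shard, which is why range-based sharding rules are often preferred when BETWEEN queries dominate.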

NewSQL with the new architecture does not require SQL statement rewriting, which is only required for NewSQL of the sharding middleware type. SQL statement rewriting turns SQL statements into ones that can be correctly executed in the actual databases. This includes replacing the logical table name with the actual table name, rewriting the start and end values of the pagination information, adding the columns that are used for sorting, grouping, and auto-increment keys, and rewriting AVG as SUM and COUNT.
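Three of these rewrites can be sketched in Python as shown below. All function and table names are hypothetical, and the table-name replacement is a naive regular-expression substitution for illustration; a real middleware rewrites based on token positions from the parsing context so that string literals and similarly named columns are not touched.

```python
import re


def rewrite_table(sql: str, logical_table: str, actual_table: str) -> str:
    """Replace whole-word occurrences of the logical table name."""
    return re.sub(rf"\b{re.escape(logical_table)}\b", actual_table, sql)


def rewrite_limit(offset: int, row_count: int):
    """Each shard must return its first offset + row_count rows, because the
    rows that belong to the requested page may all live on one shard."""
    return 0, offset + row_count


def merge_avg(partials):
    """Combine per-shard (SUM, COUNT) pairs into a global average."""
    total = sum(shard_sum for shard_sum, _ in partials)
    count = sum(shard_count for _, shard_count in partials)
    return total / count if count else None


print(rewrite_table("SELECT * FROM t_order WHERE order_id = 7", "t_order", "t_order_3"))
print(rewrite_limit(10, 5))
print(merge_avg([(10.0, 2), (20.0, 3)]))
```

AVG has to be split into SUM and COUNT because an average of per-shard averages is wrong whenever the shards hold different numbers of rows.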

Results merging refers to merging multiple execution result sets into one result set and returning it to the application. Results merging is divided into stream merging and memory merging.
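The difference between the two approaches can be illustrated in Python. In this sketch, stream merging assumes that every shard already returns its rows sorted by the ORDER BY key, so the merged result can be produced lazily without loading all rows; memory merging loads everything and sorts it. The function names and row shapes are hypothetical.

```python
import heapq
from itertools import islice


def stream_merge(sorted_result_sets, key, offset=0, limit=None):
    """Lazily merge per-shard results that are each sorted by key,
    then apply the original pagination on the merged stream."""
    merged = heapq.merge(*sorted_result_sets, key=key)
    stop = None if limit is None else offset + limit
    return islice(merged, offset, stop)


def memory_merge(result_sets, key):
    """Load every row into memory and sort once."""
    rows = [row for result_set in result_sets for row in result_set]
    rows.sort(key=key)
    return rows


shard_a = [(1, "a"), (4, "d")]
shard_b = [(2, "b"), (3, "c")]
print(list(stream_merge([shard_a, shard_b], key=lambda row: row[0], offset=1, limit=2)))
```

Stream merging keeps memory usage low because only one pending row per shard is held at a time, while memory merging is needed when the per-shard order cannot be reused, for example when grouping and sorting use different columns.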

In Part 2 of this article, we will discuss distributed transactions and database governance in further detail.

