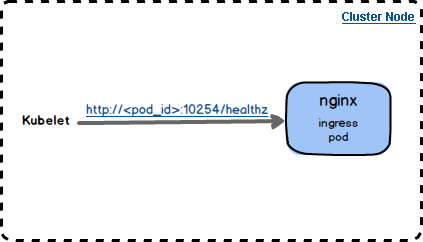

Kubernetes provides liveness and readiness probes for checking the health of pods. When an Ingress Controller is deployed, the livenessProbe and readinessProbe configured in its manifest tell Kubernetes how to check the health of the controller. The configuration is as follows:

    livenessProbe:
      failureThreshold: 3
      httpGet:
        path: /healthz
        port: 10254
        scheme: HTTP
      initialDelaySeconds: 10
      periodSeconds: 10
      successThreshold: 1
      timeoutSeconds: 1
    readinessProbe:
      failureThreshold: 3
      httpGet:
        path: /healthz
        port: 10254
        scheme: HTTP
      periodSeconds: 10
      successThreshold: 1
      timeoutSeconds: 1

When the kubelet periodically checks the health of the Nginx Ingress Controller pods, it sends HTTP GET requests similar to the following:

    curl -XGET http://<NGINX_INGRESS_CONTROLLER_POD_ID>:10254/healthz

If the check succeeds, the response body is ok. If the check fails, the response carries the failure information.

The following sections analyze the internal health check logic of the Nginx Ingress Controller to show what happens inside the controller and why the kubelet sends its health check requests to port 10254 and path /healthz.

When the Nginx Ingress Controller starts, it launches an HTTP server in a goroutine:

    // Initialize an HTTP request handler.
    mux := http.NewServeMux()
    go registerHandlers(conf.EnableProfiling, conf.ListenPorts.Health, ngx, mux)

The registerHandlers function is implemented as follows:

    func registerHandlers(enableProfiling bool, port int, ic *controller.NGINXController, mux *http.ServeMux) {
        // Register a health check handler.
        healthz.InstallHandler(mux,
            healthz.PingHealthz,
            ic,
        )

        // Use Prometheus to obtain metrics.
        mux.Handle("/metrics", promhttp.Handler())

        // Obtain the Ingress Controller version information.
        mux.HandleFunc("/build", func(w http.ResponseWriter, r *http.Request) {
            w.WriteHeader(http.StatusOK)
            b, _ := json.Marshal(version.String())
            w.Write(b)
        })

        // Stop the Ingress Controller pod.
        mux.HandleFunc("/stop", func(w http.ResponseWriter, r *http.Request) {
            err := syscall.Kill(syscall.Getpid(), syscall.SIGTERM)
            if err != nil {
                glog.Errorf("Unexpected error: %v", err)
            }
        })

        // Obtain the monitored performance information.
        if enableProfiling {
            mux.HandleFunc("/debug/pprof/", pprof.Index)
            mux.HandleFunc("/debug/pprof/heap", pprof.Index)
            mux.HandleFunc("/debug/pprof/mutex", pprof.Index)
            mux.HandleFunc("/debug/pprof/goroutine", pprof.Index)
            mux.HandleFunc("/debug/pprof/threadcreate", pprof.Index)
            mux.HandleFunc("/debug/pprof/block", pprof.Index)
            mux.HandleFunc("/debug/pprof/cmdline", pprof.Cmdline)
            mux.HandleFunc("/debug/pprof/profile", pprof.Profile)
            mux.HandleFunc("/debug/pprof/symbol", pprof.Symbol)
            mux.HandleFunc("/debug/pprof/trace", pprof.Trace)
        }

        // Start an HTTP server.
        server := &http.Server{
            Addr:              fmt.Sprintf(":%v", port), // Assign the listening port.
            Handler:           mux,
            ReadTimeout:       10 * time.Second,
            ReadHeaderTimeout: 10 * time.Second,
            WriteTimeout:      300 * time.Second,
            IdleTimeout:       120 * time.Second,
        }
        glog.Fatal(server.ListenAndServe())
    }

The registerHandlers implementation shows that the HTTP server listens on the conf.ListenPorts.Health port. The port value is parsed from the healthz-port parameter during the startup of the Nginx Ingress Controller.

    httpPort      = flags.Int("http-port", 80, `Port to use for servicing HTTP traffic.`)
    httpsPort     = flags.Int("https-port", 443, `Port to use for servicing HTTPS traffic.`)
    statusPort    = flags.Int("status-port", 18080, `Port to use for exposing NGINX status pages.`)
    sslProxyPort  = flags.Int("ssl-passthrough-proxy-port", 442, `Port to use internally for SSL Passthrough.`)
    defServerPort = flags.Int("default-server-port", 8181, `Port to use for exposing the default server (catch-all).`)
    healthzPort   = flags.Int("healthz-port", 10254, "Port to use for the healthz endpoint.")

If the healthz-port parameter is not specified during the startup of the Nginx Ingress Controller, the default port number 10254 is used.
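The default-value behavior is easy to try in isolation. The sketch below uses Go's standard flag package, which is an assumption made to keep the example self-contained and may not be the flag library the controller itself uses. The `parseHealthzPort` helper is hypothetical; only the flag name, default, and description come from the listing above.

```go
package main

import (
	"flag"
	"fmt"
)

// parseHealthzPort parses only the healthz-port flag from args and returns
// the resulting port. It falls back to 10254 when the flag is absent.
func parseHealthzPort(args []string) (int, error) {
	flags := flag.NewFlagSet("controller", flag.ContinueOnError)
	port := flags.Int("healthz-port", 10254, "Port to use for the healthz endpoint.")
	if err := flags.Parse(args); err != nil {
		return 0, err
	}
	return *port, nil
}

func main() {
	def, _ := parseHealthzPort(nil)
	custom, _ := parseHealthzPort([]string{"--healthz-port=18254"})
	fmt.Println(def, custom) // prints "10254 18254"
}
```

If the controller is started with a different healthz-port value, the port in the livenessProbe and readinessProbe configuration has to be changed to match, because the kubelet sends its requests to whatever port the probe names.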

The registerHandlers implementation also shows that the request handler for health checks is registered through healthz.InstallHandler:

    func InstallHandler(mux mux, checks ...HealthzChecker) {
        // If no health check is specified, only PingHealthz is registered by default.
        if len(checks) == 0 {
            glog.V(5).Info("No default health checks specified. Installing the ping handler.")
            checks = []HealthzChecker{PingHealthz}
        }

        glog.V(5).Info("Installing healthz checkers:", strings.Join(checkerNames(checks...), ", "))

        // Register the root health check handler. The root handler will call other specific handlers in turn.
        mux.Handle("/healthz", handleRootHealthz(checks...))

        for _, check := range checks {
            // Register other specific health check handlers.
            mux.Handle(fmt.Sprintf("/healthz/%v", check.Name()), adaptCheckToHandler(check.Check))
        }
    }

The healthz.InstallHandler implementation shows that the root health check handler is registered at the path /healthz. Additional health checks can be added by implementing the HealthzChecker interface.
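To illustrate how such an extension could look, here is a minimal sketch of a custom checker. The `HealthzChecker` interface is redeclared locally with the two methods that the checkers in this article implement, so the example compiles on its own. The `pathExists` type and its check name are hypothetical and are not part of the controller.

```go
package main

import (
	"fmt"
	"net/http"
	"os"
)

// HealthzChecker is a local copy of the interface shape used in this article:
// a name for the check and a function that returns nil when the check passes.
type HealthzChecker interface {
	Name() string
	Check(req *http.Request) error
}

// pathExists is a hypothetical checker that passes while a given path is present.
type pathExists struct {
	path string
}

func (p pathExists) Name() string { return "path-exists" }

func (p pathExists) Check(_ *http.Request) error {
	if _, err := os.Stat(p.path); err != nil {
		return fmt.Errorf("required path %q is not available: %w", p.path, err)
	}
	return nil
}

func main() {
	var check HealthzChecker = pathExists{path: "."}
	// The current directory exists, so the check is expected to pass.
	fmt.Println(check.Name(), check.Check(nil))
}
```

A checker like this would be passed to InstallHandler next to the existing ones, which also makes it reachable on its own under /healthz/path-exists.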

When the kubelet checks the health of an Nginx Ingress Controller pod, its request is served by the internal handler handleRootHealthz:

    func handleRootHealthz(checks ...HealthzChecker) http.HandlerFunc {
        return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
            failed := false
            var verboseOut bytes.Buffer
            for _, check := range checks {
                if err := check.Check(r); err != nil {
                    // don't include the error since this endpoint is public. If someone wants more detail
                    // they should have explicit permission to the detailed checks.
                    glog.V(6).Infof("healthz check %v failed: %v", check.Name(), err)
                    fmt.Fprintf(&verboseOut, "[-]%v failed: reason withheld\n", check.Name())
                    failed = true
                } else {
                    fmt.Fprintf(&verboseOut, "[+]%v ok\n", check.Name())
                }
            }
            // always be verbose on failure
            if failed {
                http.Error(w, fmt.Sprintf("%vhealthz check failed", verboseOut.String()), http.StatusInternalServerError)
                return
            }

            if _, found := r.URL.Query()["verbose"]; !found {
                fmt.Fprint(w, "ok")
                return
            }

            verboseOut.WriteTo(w)
            fmt.Fprint(w, "healthz check passed\n")
        })
    }

This handler calls the registered health checks in turn. If all of them succeed, it responds with ok. If any of them fails, it responds with HTTP status 500 and a per-check report that withholds the failure reasons.

Two health check handlers are registered during the startup of the Nginx Ingress Controller: healthz.PingHealthz and controller.NGINXController.

healthz.PingHealthz is the default implementation of the HealthzChecker interface. Its logic is simple: the check always succeeds.

    // PingHealthz returns true automatically when checked
    var PingHealthz HealthzChecker = ping{}

    // ping implements the simplest possible healthz checker.
    type ping struct{}

    func (ping) Name() string {
        return "ping"
    }

    // PingHealthz is a health check that returns true.
    func (ping) Check(_ *http.Request) error {
        return nil
    }

controller.NGINXController is the checker specific to the Nginx Ingress Controller. It also implements the HealthzChecker interface and performs the mandatory health checks on the resources that the controller manages.

    const (
        ngxHealthPath = "/healthz"
        nginxPID      = "/tmp/nginx.pid"
    )

    func (n NGINXController) Name() string {
        return "nginx-ingress-controller"
    }

    func (n *NGINXController) Check(_ *http.Request) error {
        // 1. Check the health of Nginx. The access URL is http://0.0.0.0:18080/healthz.
        res, err := http.Get(fmt.Sprintf("http://0.0.0.0:%v%v", n.cfg.ListenPorts.Status, ngxHealthPath))
        if err != nil {
            return err
        }
        defer res.Body.Close()
        if res.StatusCode != 200 {
            return fmt.Errorf("ingress controller is not healthy")
        }

        // 2. If dynamic-configuration is enabled, check the back-end service information that Nginx maintains in the memory. The access URL is http://0.0.0.0:18080/is-dynamic-lb-initialized.
        if n.cfg.DynamicConfigurationEnabled {
            res, err := http.Get(fmt.Sprintf("http://0.0.0.0:%v/is-dynamic-lb-initialized", n.cfg.ListenPorts.Status))
            if err != nil {
                return err
            }
            defer res.Body.Close()
            if res.StatusCode != 200 {
                return fmt.Errorf("dynamic load balancer not started")
            }
        }

        // 3. Check whether the main Nginx process is running properly.
        fs, err := proc.NewFS("/proc")
        if err != nil {
            return errors.Wrap(err, "unexpected error reading /proc directory")
        }
        f, err := n.fileSystem.ReadFile(nginxPID)
        if err != nil {
            return errors.Wrapf(err, "unexpected error reading %v", nginxPID)
        }
        pid, err := strconv.Atoi(strings.TrimRight(string(f), "\r\n"))
        if err != nil {
            return errors.Wrapf(err, "unexpected error reading the nginx PID from %v", nginxPID)
        }
        _, err = fs.NewProc(pid)

        return err
    }

The port for accessing Nginx is n.cfg.ListenPorts.Status, which is parsed from the status-port parameter of the Nginx Ingress Controller. The default value is 18080.

The Nginx configuration file shows that port 18080 is the listening port during Nginx startup. Therefore, this port can be used to check the health of Nginx.

    # used for NGINX healthcheck and access to nginx stats
    server {
        listen 18080 default_server backlog=511;
        listen [::]:18080 default_server backlog=511;
        set $proxy_upstream_name "-";

        # Access the path. If 200 is returned, Nginx can receive requests.
        location /healthz {
            access_log off;
            return 200;
        }

        # Check whether the memory contains back-end service information.
        location /is-dynamic-lb-initialized {
            access_log off;
            content_by_lua_block {
                local configuration = require("configuration")
                local backend_data = configuration.get_backends_data()
                if not backend_data then
                    ngx.exit(ngx.HTTP_INTERNAL_SERVER_ERROR)
                    return
                end

                ngx.say("OK")
                ngx.exit(ngx.HTTP_OK)
            }
        }

        # Obtain the basic monitored statistics.
        location /nginx_status {
            set $proxy_upstream_name "internal";
            access_log off;
            stub_status on;
        }

        # 404 is returned by default.
        location / {
            set $proxy_upstream_name "upstream-default-backend";
            proxy_pass http://upstream-default-backend;
        }

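    }

In summary, the health check of the Nginx Ingress Controller mainly involves the following check items, which correspond to the three steps of the Check method above:

1. Whether Nginx can receive requests, checked through /healthz on the status port (18080 by default).
2. If dynamic configuration is enabled, whether Nginx holds the back-end service information in memory, checked through /is-dynamic-lb-initialized on the same port.
3. Whether the main Nginx process is running, checked by reading its PID from /tmp/nginx.pid and looking that process up under /proc.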
