Paxos, a classic distributed consensus algorithm, is used as an example in many textbooks. However, because it is so abstract, few people build consensus libraries on native Paxos, and RAFT is the consensus algorithm more commonly implemented in industry. The RAFT paper (In Search of an Understandable Consensus Algorithm) is listed in the references. RAFT solves many of the engineering problems of Paxos by introducing a strong leader role, and its log + state machine model turns multi-node synchronization into a clean abstraction. The main reasons why I go against the tide and implement Paxos are:

This implementation borrows concepts from RAFT and the architecture of phxpaxos to implement the multi-Paxos algorithm, with a focus on thread safety and module abstraction. The network, member management, logs, snapshots, and storage are plugged in through interfaces. The algorithm is event-driven and header-only, which makes it easy to port and extend.

This article assumes readers have some understanding of the Paxos protocol and will not explain too much about the derivation and proof of the Paxos algorithm and some basic concepts. It mainly focuses on the engineering implementation of Paxos. If readers are interested in the derivation of the Paxos algorithm, please check the relevant paper materials in the references.

Paxos is so famous. What cool things can we do after writing a library?

Most intuitively, you can implement a distributed system based on Paxos, which has:

With the log + state machine of a Paxos system, we can easily implement highly available stateful services (such as a distributed KV storage system). Combined with snapshots and member management, such a service can provide many advanced functions (such as online migration and dynamically adding replicas). Isn’t it charming? Let's implement the algorithm.

Talk is cheap; show me the code!

First, put the link to the code repository.

I usually write foundational algorithm libraries as header-only code, which makes them easy to reference and to port to other projects, and lets the compiler inline functions freely. The disadvantage is slower compilation. The public code depends only on a logging library (spdlog, also header-only) to keep external dependencies to a minimum. The unit tests are relatively simple, and anyone interested is welcome to add more.

The original text of Paxos Made Simple is quoted below to avoid misunderstandings caused by translation.

A consensus algorithm ensures that a single one among the proposed values is chosen.

- Only a value that has been proposed may be chosen.

- Only a single value is chosen.

- A process never learns that a value has been chosen unless it actually has been.

The purpose of the simplest consensus algorithm is to negotiate a universally accepted value among a bunch of peer nodes, and this value is proposed by one of the nodes and can be known by all nodes after it is determined.

The derivation of the Paxos algorithm has been described in many articles, so I will not repeat it here. The main goal of this article is to implement a Paxos library, so let’s focus on the code.

Phase 1 (prepare)

- A proposer selects a proposal number n and sends a prepare request with number n to a majority of acceptors.

- If an acceptor receives a prepare request with number n greater than any prepare request to which it has already responded, it responds to the request with a promise not to accept any more proposals numbered less than n and with the highest-numbered proposal (if any) it has accepted.

Phase 2 (accept)

- If the proposer receives a response to its prepare requests (numbered n) from a majority of acceptors, it sends an accept request to each of those acceptors for a proposal numbered n with a value v, where v is the value of the highest-numbered proposal among the responses, or is any value if the responses reported no proposals.

- If an acceptor receives an accept request for a proposal numbered n, it accepts the proposal unless it has already responded to a prepare request having a number greater than n.

These two rounds of voting are the core of the protocol. To implement them, we first need code for the entities that appear in the description.
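Before looking at the library code, the two phases quoted above can be sketched as a small in-memory simulation. This is only an illustration under simplifying assumptions: `Ballot`, `Promise`, `Acceptor`, and `propose` are hypothetical names, messages are plain function calls, and nothing is persisted. An equal ballot is treated as a retransmission and promised again.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <tuple>
#include <vector>

// Hypothetical simplified ballot: ordered by (proposal_id, node_id).
struct Ballot {
    std::uint64_t proposal_id;
    std::uint64_t node_id;
    Ballot(std::uint64_t p = 0, std::uint64_t n = 0) : proposal_id(p), node_id(n) {}
    bool valid() const { return proposal_id != 0; }  // 0 means "no ballot yet"
};
inline bool operator<(const Ballot &a, const Ballot &b) {
    return std::tie(a.proposal_id, a.node_id) < std::tie(b.proposal_id, b.node_id);
}

struct Promise {
    bool ok;
    Ballot accepted;    // highest-numbered proposal accepted so far, if any
    std::string value;  // its value
};

struct Acceptor {
    Ballot promised, accepted;
    std::string value;

    // Phase 1: promise unless a higher-numbered prepare was already answered.
    Promise on_prepare(const Ballot &n) {
        if (n < promised) return Promise{false, Ballot{}, ""};
        promised = n;
        return Promise{true, accepted, value};
    }
    // Phase 2: accept unless a higher-numbered prepare was already answered.
    bool on_accept(const Ballot &n, const std::string &v) {
        if (n < promised) return false;
        promised = accepted = n;
        value = v;
        return true;
    }
};

// Runs both phases synchronously against all acceptors.
// Returns true and stores the chosen value in `chosen` on success.
inline bool propose(std::vector<Acceptor> &acceptors, const Ballot &n,
                    std::string wanted, std::string &chosen) {
    const std::size_t majority = acceptors.size() / 2 + 1;
    std::size_t promises = 0;
    Ballot highest;
    for (auto &a : acceptors) {
        Promise p = a.on_prepare(n);
        if (!p.ok) continue;
        ++promises;
        // Adopt the value of the highest-numbered accepted proposal, if any.
        if (p.accepted.valid() && highest < p.accepted) {
            highest = p.accepted;
            wanted = p.value;
        }
    }
    if (promises < majority) return false;
    std::size_t accepts = 0;
    for (auto &a : acceptors)
        if (a.on_accept(n, wanted)) ++accepts;
    if (accepts < majority) return false;
    chosen = wanted;
    return true;
}
```

Note how a second proposer with a higher ballot ends up re-proposing the value that was already chosen rather than its own.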

base.h defines the entities required by the algorithm: the ballot number ballot_number_t, the value value_t, the acceptor state state_t, and the message message_t passed between roles.

    struct ballot_number_t final {
        proposal_id_t proposal_id;
        node_id_t node_id;
    };

    struct value_t final {
        state_machine_id_t state_machine_id;
        utility::Cbuffer buffer;
    };

    struct state_t final {
        ballot_number_t promised, accepted;
        value_t value;
    };

    struct message_t final {
        enum type_e {
            noop = 0,
            prepare,
            prepare_promise,
            prepare_reject,
            accept,
            accept_accept,
            accept_reject,
            value_chosen,
            learn_ping,
            learn_pong,
            learn_request,
            learn_response
        } type;

        // Sender info.
        group_id_t group_id;
        instance_id_t instance_id;
        node_id_t node_id;

        /**
         * The following fields may be optional.
         */

        // As sequence number for reply.
        proposal_id_t proposal_id;

        ballot_number_t ballot;
        value_t value;

        // For learner data transmit.
        bool overload; // Used in ping & pong. This should be considered when sending a learn request.
        instance_id_t min_stored_instance_id; // Used in ping and pong.
        std::vector<learn_t> learn_batch;
        std::vector<Csnapshot::shared_ptr> snapshot_batch;
    };

base.h also defines the node base class Cbase, which holds a node's state, current log instance id, and lock. It provides basic functions for advancing the instance id, sending and receiving messages, identifying members, and storing state. An excerpt of Cbase follows.

    template<class T>
    class Cbase {
        // Const info of instance.
        const node_id_t node_id_;
        const group_id_t group_id_;
        const write_options_t default_write_options_;

        std::mutex update_lock_;
        std::atomic<instance_id_t> instance_id_;

        Cstorage &storage_;
        Ccommunication &communication_;
        CpeerManager &peer_manager_;

        bool is_voter(const instance_id_t &instance_id);
        bool is_all_peer(const instance_id_t &instance_id, const std::set<node_id_t> &node_set);
        bool is_all_voter(const instance_id_t &instance_id, const std::set<node_id_t> &node_set);
        bool is_quorum(const instance_id_t &instance_id, const std::set<node_id_t> &node_set);

        int get_min_instance_id(instance_id_t &instance_id);
        int get_max_instance_id(instance_id_t &instance_id);
        void set_instance_id(instance_id_t &instance_id);

        bool get(const instance_id_t &instance_id, state_t &state);
        bool get(const instance_id_t &instance_id, std::vector<state_t> &states);
        bool put(const instance_id_t &instance_id, const state_t &state, bool &lag);
        bool next_instance(const instance_id_t &instance_id, const value_t &chosen_value);
        bool put_and_next_instance(const instance_id_t &instance_id, const state_t &state, bool &lag);
        bool put_and_next_instance(const instance_id_t &instance_id, const std::vector<state_t> &states, bool &lag);
        bool reset_min_instance(const instance_id_t &instance_id, const state_t &state);

        bool broadcast(const message_t &msg,
                       Ccommunication::broadcast_range_e range,
                       Ccommunication::broadcast_type_e type);
        bool send(const node_id_t &target_id, const message_t &msg);
    };

proposer.h implements the behavior of the proposer in the Paxos algorithm, including making proposals and processing the replies from acceptors.

on_prepare_reply processes the acceptors' responses to a prepare request. Compared with the description in the Paxos paper, the message must first be validated in detail. Once it is confirmed to belong to the current context, its sender is added to the response sets. Finally, following the majority rule, the proposer decides whether to give up or to move on to the accept phase.

    response_set_.insert(msg.node_id);

    if (message_t::prepare_promise == msg.type) {
        // Promise.
        promise_or_accept_set_.insert(msg.node_id);
        // Caution: This will move value to local variable, and never touch it again.
        update_ballot_and_value(std::forward<message_t>(msg));
    } else {
        // Reject.
        reject_set_.insert(msg.node_id);
        has_rejected_ = true;
        record_other_proposal_id(msg);
    }

    if (base_.is_quorum(working_instance_id_, promise_or_accept_set_)) {
        // Prepare success.
        can_skip_prepare_ = true;
        accept(accept_msg);
    } else if (base_.is_quorum(working_instance_id_, reject_set_) ||
               base_.is_all_voter(working_instance_id_, response_set_)) {
        // Prepare fail.
        state_ = proposer_idle;
        last_error_ = error_prepare_rejected;
        notify_idle = true;
    }

on_accept_reply handles the acceptors' responses to an accept request. As described in Paxos, the majority rule decides whether the proposal passes. If it does, the proposer enters the chosen process and broadcasts the chosen value.

    response_set_.insert(msg.node_id);

    if (message_t::accept_accept == msg.type) {
        // Accept.
        promise_or_accept_set_.insert(msg.node_id);
    } else {
        // Reject.
        reject_set_.insert(msg.node_id);
        has_rejected_ = true;
        record_other_proposal_id(msg);
    }

    if (base_.is_quorum(working_instance_id_, promise_or_accept_set_)) {
        // Accept success.
        chosen(chosen_msg);
        chosen_value = value_;
    } else if (base_.is_quorum(working_instance_id_, reject_set_) ||
               base_.is_all_voter(working_instance_id_, response_set_)) {
        // Accept fail.
        state_ = proposer_idle;
        last_error_ = error_accept_rejected;
        notify_idle = true;
    }

acceptor.h implements the behavior of the acceptor in the Paxos algorithm: it processes requests from proposers, persists state, and advances the log instance id. Cacceptor has another important duty: it loads the existing state during initialization so that earlier promises and accepted values remain in force.
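Why reloading state matters can be shown with a minimal sketch. Here a `std::map` stands in for durable storage, and `State`, `Storage`, and `Acceptor` are hypothetical simplified types rather than the library's Cstorage or Cacceptor. The acceptor persists before it would reply, and a restarted acceptor reads the same record back.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>

// Hypothetical persisted acceptor state; ballots are plain integers here.
struct State {
    std::uint64_t promised = 0;
    std::uint64_t accepted = 0;
    std::string value;
};

// Stand-in for durable storage: instance id -> persisted state.
using Storage = std::map<std::uint64_t, State>;

class Acceptor {
public:
    // Loads any previously persisted state for this instance on start-up.
    Acceptor(Storage &storage, std::uint64_t instance_id)
        : storage_(storage), instance_id_(instance_id) {
        auto it = storage_.find(instance_id_);
        if (it != storage_.end()) state_ = it->second;
    }

    bool on_prepare(std::uint64_t ballot) {
        if (ballot < state_.promised) return false;
        state_.promised = ballot;
        persist();  // the promise must be durable before replying
        return true;
    }

    bool on_accept(std::uint64_t ballot, const std::string &value) {
        if (ballot < state_.promised) return false;
        state_.promised = state_.accepted = ballot;
        state_.value = value;
        persist();  // the accepted value must be durable before replying
        return true;
    }

    const State &state() const { return state_; }

private:
    void persist() { storage_[instance_id_] = state_; }

    Storage &storage_;
    std::uint64_t instance_id_;
    State state_;
};
```

Without the load in the constructor, a restarted acceptor would forget its promise and could accept a lower ballot, which breaks the guarantee that proposers rely on.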

on_prepare handles a received prepare request: it decides how to reply based on the ballot number of the proposal and persists the promise state.

    if (msg.ballot >= state_.promised) {
        // Promise.
        response.type = message_t::prepare_promise;
        if (state_.accepted) {
            response.ballot = state_.accepted;
            response.value = state_.value;
        }
        state_.promised = msg.ballot;
        auto lag = false;
        if (!persist(lag)) {
            if (lag)
                return Cbase<T>::routine_error_lag;
            return Cbase<T>::routine_write_fail;
        }
    } else {
        // Reject.
        response.type = message_t::prepare_reject;
        response.ballot = state_.promised;
    }

on_accept handles a received accept request. Based on its own state and the ballot number of the proposal, the acceptor decides whether to update its state or return a rejection. When it accepts, the accepted ballot and value are persisted.

    if (msg.ballot >= state_.promised) {
        // Accept.
        response.type = message_t::accept_accept;
        state_.promised = msg.ballot;
        state_.accepted = msg.ballot;
        state_.value = std::move(msg.value); // Move value to local variable.
        auto lag = false;
        if (!persist(lag)) {
            if (lag)
                return Cbase<T>::routine_error_lag;
            return Cbase<T>::routine_write_fail;
        }
    } else {
        // Reject.
        response.type = message_t::accept_reject;
        response.ballot = state_.promised;
    }

on_chosen processes the value-chosen message broadcast by the proposer. After validation, it advances the current log instance id so that the node moves on to deciding the next value (multi-Paxos logic).

    if (base_.next_instance(working_instance_id_, state_.value)) {
        chosen_instance_id = working_instance_id_;
        chosen_value = state_.value;
    } else
        return Cbase<T>::routine_error_lag;

We have now implemented the basic functions of the two basic roles in the paper. It is also clear that these two roles can do nothing but decide a single fixed value. This is where multi-Paxos comes in: deciding one value is of little use, but deciding a series of values and feeding them to a state machine enables far more complex functions. This is the purpose of the log instance id mentioned earlier. It is a 64-bit unsigned integer starting from 0:

    typedef uint64_t instance_id_t; // [0, inf)

Now it is simple to implement a sequence of values, each of which is decided with the Paxos algorithm. As shown in the following table, instance_id_t starts from 0 and increases sequentially, and the proposer runs the prepare and accept process to decide the values one by one. Each value is an operation, and replaying the operations in order through the state machine gives strongly consistent synchronization across nodes.

| instance_id_t | 0 | 1 | 2 | 3 | ... | inf |
|---|---|---|---|---|---|---|
| value_t | a=1 | b=2 | b=a+1 | a=b+1 | ... | |
| Paxos | prepare accept | prepare accept | prepare accept | prepare accept | ... | |

It is easy to see that deciding each value costs at least 2 network round trips (RT) plus 2 disk I/Os, which is quite expensive. (The on_chosen message can be pipelined, so it adds no delay.) However, the Paxos paper puts forward the multi-Paxos idea.

The key to the efficiency of this approach is that in the Paxos consensus algorithm, the value to be proposed is not chosen until phase 2. Recall that, after completing phase 1 of the proposer's algorithm, either the value to be proposed is determined or else the proposer is free to propose any value.

In short:

Ideally, once a node's prepare with a given proposal id has been promised by a majority, the node keeps submitting values with accept requests alone. Deciding each value then costs 1 network RT + 1 disk I/O, which is the optimal cost for multi-node data synchronization.

| instance_id_t | 0 | 1 | 2 | 3 | ... | inf |
|---|---|---|---|---|---|---|
| value_t | a=1 | b=2 | b=a+1 | a=b+1 | ... | |
| Paxos | prepare accept | accept | accept | accept | ... | |
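The saving shown in the table can be made concrete with a toy cost model. This is a sketch under assumptions, not the library's proposer: `Cost` and `Proposer` are hypothetical, the flag name `can_skip_prepare_` is borrowed from the on_prepare_reply snippet earlier, and the `preempted` parameter simply simulates a majority rejecting the accept request because another proposer holds a higher ballot.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical cost of one attempt to decide a value.
struct Cost {
    int round_trips;
    int disk_writes;  // counted per acceptor
};

class Proposer {
public:
    // Tries to decide the value for the current instance.
    Cost submit(bool preempted = false) {
        Cost cost{0, 0};
        if (!can_skip_prepare_) {
            ++proposal_id_;      // pick a new, higher proposal id
            ++cost.round_trips;  // prepare -> promise
            ++cost.disk_writes;  // acceptor persists the promise
            can_skip_prepare_ = true;
        }
        ++cost.round_trips;      // accept -> reply
        if (preempted) {
            can_skip_prepare_ = false;  // must run prepare again next time
        } else {
            ++cost.disk_writes;         // acceptor persists the accepted value
            ++instance_id_;             // value chosen, move to the next instance
        }
        return cost;
    }

    std::uint64_t instance_id() const { return instance_id_; }

private:
    bool can_skip_prepare_ = false;
    std::uint64_t proposal_id_ = 0;
    std::uint64_t instance_id_ = 0;
};
```

Only the first value after start-up or after a preemption pays for the prepare phase; every other value costs a single round trip and a single write.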

Meanwhile, the implementation can add mechanisms that skip unnecessary steps and improve performance.

The learner is used to quickly learn log instances that have already been chosen.

To learn that a value has been chosen, a learner must find out that a proposal has been accepted by a majority of acceptors. The obvious algorithm is to have each acceptor, whenever it accepts a proposal, respond to all learners, sending them the proposal. This allows learners to find out about a chosen value as soon as possible, but it requires each acceptor to respond to each learner-a number of responses equal to the product of the number of acceptors and the number of learners.

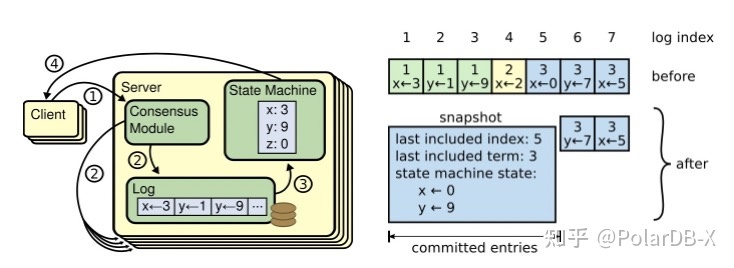

The approach in the paper is to ask all acceptors in order to find the value accepted by a majority. Here, the proposer instead broadcasts the chosen proposal id, which peers handle in on_chosen. This lets other nodes know which value has been chosen, advances the log instance id quickly, and tells the nodes which values can be passed to the state machine for replay. Through ping packets, learner.h tracks the chosen log instance ids of each peer node and selects a suitable node for fast learning. In practice, depending on how far the node lags behind and how much of the log has been trimmed, it chooses to learn by log or by snapshot.
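The choice between learning by log and learning by snapshot can be sketched as follows. The fields `overload` and `min_stored_instance_id` mirror the ping and pong fields of message_t shown earlier. Everything else (`Pong`, `LearnPlan`, `Decision`, `plan_learning`, and the "most advanced peer wins" rule) is a hypothetical simplification, not the policy in learner.h.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical summary of a peer's pong reply.
struct Pong {
    std::uint64_t node_id;
    std::uint64_t next_instance_id;        // first instance the peer has not chosen yet
    std::uint64_t min_stored_instance_id;  // oldest instance still kept in the peer's log
    bool overload;                         // peer asks not to be used for learning
};

enum class LearnPlan { none, by_log, by_snapshot };

struct Decision {
    LearnPlan plan;
    std::uint64_t node_id;  // meaningful only when plan != none
};

// Picks the most advanced peer that is not overloaded, then decides how to learn.
inline Decision plan_learning(std::uint64_t my_next_instance_id,
                              const std::vector<Pong> &pongs) {
    const Pong *best = nullptr;
    for (const auto &p : pongs) {
        if (p.overload || p.next_instance_id <= my_next_instance_id) continue;
        if (best == nullptr || p.next_instance_id > best->next_instance_id) best = &p;
    }
    if (best == nullptr) return Decision{LearnPlan::none, 0};
    // If the peer already trimmed the log entries we need, only a snapshot helps.
    if (my_next_instance_id < best->min_stored_instance_id)
        return Decision{LearnPlan::by_snapshot, best->node_id};
    return Decision{LearnPlan::by_log, best->node_id};
}
```

A real learner would also weigh how far behind it is, since a snapshot may be cheaper than replaying a very long log even when the log is still available.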

According to Paxos Made Live, the difficulty of implementing Paxos correctly lies not only in the standard algorithm but also in what surrounds it, such as the assumption that message transmission and storage are reliable (non-Byzantine failures) and the accurate determination of a quorum (membership changes). The way to solve this is to separate these parts from the core algorithm behind interfaces and leave them to more specialized people or libraries. We then concentrate only on the algorithm itself, its optimization, and its scheduling (making the library stateless). This separation also lets Paxos run on existing storage and network systems, avoiding redundant storage or networking and the performance loss that comes with it.

Therefore, everything the Paxos algorithm assumes but does not itself implement is connected to the core algorithm through interfaces: storage, communication, member management, and state machine and snapshot. For testing purposes, the code provides the simplest queue-based communication and in-memory storage, which can simulate non-Byzantine failures (such as random latency, reordering, and packet loss). The appendix provides a storage implementation based on RocksDB, member management with a member state machine and snapshot that supports membership changes, and a hybrid TCP and UDP communication system based on ASIO.
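A queue-based test transport of the kind described above can be sketched in a few lines. `Packet` and `LossyQueue` are hypothetical and much simpler than the library's Ccommunication test double: the payload is an `int`, delivery is single-threaded, and a seeded generator keeps test runs reproducible.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <random>
#include <utility>

// Hypothetical packet: a target node and an opaque payload.
struct Packet {
    std::uint64_t target;
    int payload;
};

// In-memory queue that can drop and reorder packets (non-Byzantine faults only).
class LossyQueue {
public:
    LossyQueue(double drop_rate, bool reorder, std::uint32_t seed)
        : drop_rate_(drop_rate), reorder_(reorder), rng_(seed) {}

    void send(std::uint64_t target, int payload) {
        std::bernoulli_distribution drop(drop_rate_);
        if (drop(rng_)) return;  // simulated packet loss
        queue_.push_back(Packet{target, payload});
        if (reorder_ && queue_.size() > 1) {
            // Swap the new packet with a random queued one to simulate reordering.
            std::uniform_int_distribution<std::size_t> pick(0, queue_.size() - 1);
            std::swap(queue_.back(), queue_[pick(rng_)]);
        }
    }

    // Pops the next packet; returns false when nothing is pending.
    bool receive(Packet &out) {
        if (queue_.empty()) return false;
        out = queue_.front();
        queue_.pop_front();
        return true;
    }

    std::size_t pending() const { return queue_.size(); }

private:
    double drop_rate_;
    bool reorder_;
    std::mt19937 rng_;
    std::deque<Packet> queue_;
};
```

Packets are never altered or forged, only lost or delivered out of order, which matches the non-Byzantine failure model that Paxos assumes.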

The three roles of proposer, acceptor, and learner are now complete. Next, a management object is needed to combine them into a Paxos Group. Here, the Cinstance class in instance.h uses templates to provide a zero-overhead interface that avoids the cost of virtual function calls, and it connects the three roles with Cbase, which handles log instance advancement, communication, storage, and the state machine. For the convenience of external callers, Cinstance provides a blocking interface with flow control and various timeout parameters. It submits a value to the Paxos Group and returns on success or timeout. To fully decouple the roles from each other, all interfaces related to role state transitions are exposed and handled in Cinstance as callbacks. This also keeps the interactions visible in one code context, which reduces logical bugs.

    void synced_value_done(const instance_id_t &instance_id, const value_t &value);
    void synced_reset_instance(const instance_id_t &from, const instance_id_t &to);

    Cbase::routine_status_e self_prepare(const message_t &msg);
    Cbase::routine_status_e self_chosen(const message_t &msg);

    void on_proposer_idle();
    void on_proposer_final(const instance_id_t &instance_id, value_t &&value);

    void value_chosen(const instance_id_t &instance_id, value_t &&value);
    void value_learnt(const instance_id_t &instance_id, value_t &&value);
    void learn_done();

    Cbase::routine_status_e take_snapshots(const instance_id_t &peer_instance_id, std::vector<Csnapshot::shared_ptr> &snapshots);
    Cbase::routine_status_e load_snapshots(const std::vector<Csnapshot::shared_ptr> &snapshots);

The implementation here is mostly engineering work, so only the basic ideas are mentioned. Please refer to the code for the specific implementation.
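The idea of wiring role callbacks through a template parameter instead of virtual functions can be sketched like this. `Proposer<Host>` and `Instance` are hypothetical and far smaller than the real classes. The callback names `value_chosen` and `on_proposer_idle` are taken from the list above, but their signatures are simplified to use `std::string` values.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <utility>
#include <vector>

// A role that reports state transitions to its host through direct calls.
// Host is a template parameter, so the calls are resolved at compile time.
template <class Host>
class Proposer {
public:
    explicit Proposer(Host &host) : host_(host) {}

    // Called when a value has been chosen for `instance_id`.
    void finish(std::uint64_t instance_id, std::string value) {
        host_.value_chosen(instance_id, std::move(value));
        host_.on_proposer_idle();
    }

private:
    Host &host_;
};

// The host owns the roles and implements every callback in one place.
class Instance {
public:
    Instance() : proposer_(*this) {}

    Proposer<Instance> &proposer() { return proposer_; }

    // Callbacks invoked by the roles (non-virtual, can be inlined).
    void value_chosen(std::uint64_t instance_id, std::string &&value) {
        log_.emplace_back(instance_id, std::move(value));
    }
    void on_proposer_idle() { ++idle_notifications_; }

    const std::vector<std::pair<std::uint64_t, std::string>> &log() const { return log_; }
    int idle_notifications() const { return idle_notifications_; }

private:
    Proposer<Instance> proposer_;
    std::vector<std::pair<std::uint64_t, std::string>> log_;
    int idle_notifications_ = 0;
};
```

Because the role only requires that its host has the named member functions, a test can plug in a mock host with the same functions and no inheritance at all.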

With value serialization in place, everything is ready except the state machine needed to build a complete stateful application. RAFT describes this thoroughly, and we likewise design the implementation around a log + state machine. A snapshot interface is provided to help the learner catch up quickly. Furthermore, thanks to snapshots, we do not need to keep the complete log: a lagging node can jump to the corresponding log instance id through a snapshot instead of replaying every entry. Similarly, logs, state machines, and snapshots are implemented as interfaces. Please see state_machine.h for details. Many auxiliary functions are reserved in the interfaces so that snapshots can be taken and applied without blocking.

    class Csnapshot {
    public:
        // Get the global state machine id which identifies myself, and this should be **unique**.
        virtual state_machine_id_t get_id() const = 0;

        // The snapshot represents the state machine which has run to this id (not included).
        virtual const instance_id_t &get_next_instance_id() const = 0;
    };

    class CstateMachine {
    public:
        // Get the global state machine id which identifies myself, and this should be **unique**.
        virtual state_machine_id_t get_id() const = 0;

        // This will be invoked sequentially by instance id,
        // and this callback should be able to handle duplicate ids caused by replay.
        // Should throw an exception if it gets an unexpected instance id.
        // If the instance's chosen value is not for this SM, an empty value will be given.
        virtual void consume_sequentially(const instance_id_t &instance_id, const utility::Cslice &value) = 0;

        // Supply a buffer which can be moved.
        virtual void consume_sequentially(const instance_id_t &instance_id, utility::Cbuffer &&value) {
            consume_sequentially(instance_id, value.slice());
        }

        // Return the instance id which will execute on the next round.
        // Can be smaller than the actual value only when concurrent with consuming, but **never** larger than the real value.
        virtual instance_id_t get_next_execute_id() = 0;

        // Return the smallest instance id which has not been persisted.
        // Can be smaller than the actual value only when concurrent with consuming, but **never** larger than the real value.
        virtual instance_id_t get_next_persist_id() = 0;

        // The get_next_instance_id of the returned snapshot should be >= get_next_persist_id().
        virtual int take_snapshot(Csnapshot::shared_ptr &snapshot) = 0;

        // next_persist_id should be larger than or equal to the snapshot's after a successful load.
        virtual int load_snapshot(const Csnapshot::shared_ptr &snapshot) = 0;
    };

Secondly, the algorithm provides two sets of value-chosen callbacks to support more advanced functions. One is synced_value_done, which is invoked inside the critical section of log instance id advancement. The other is the asynchronous callback value_chosen. They suit, respectively, state control that is tightly coupled to the log instance id (such as member management, discussed later) and ordinary state machines. Asynchronous callbacks run outside the critical section. They occupy the event-driven threads, but the overall throughput of the Paxos algorithm is not affected. A synchronized queue in CstateMachineBase ensures that logs are applied in order.
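The contract in the consume_sequentially comments (ignore duplicates from replay, reject unexpected ids, treat an empty value as a no-op) can be illustrated with a tiny key-value state machine. This is a standalone sketch: `KvStateMachine` is hypothetical, does not derive from CstateMachine, uses `std::string` instead of the library's buffer types, and assumes a made-up `key=value` encoding.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <stdexcept>
#include <string>

// Hypothetical in-memory KV state machine; values are encoded as "key=value".
class KvStateMachine {
public:
    void consume_sequentially(std::uint64_t instance_id, const std::string &value) {
        // Replay may deliver an id that was already applied: ignore it.
        if (instance_id < next_execute_id_) return;
        // A gap means entries were skipped, which must never happen.
        if (instance_id > next_execute_id_)
            throw std::logic_error("unexpected instance id");
        // An empty value means the chosen value belongs to another state machine.
        const std::string::size_type eq = value.find('=');
        if (eq != std::string::npos)
            data_[value.substr(0, eq)] = value.substr(eq + 1);
        ++next_execute_id_;
    }

    std::uint64_t get_next_execute_id() const { return next_execute_id_; }

    // Returns the stored value, or an empty string if the key is absent.
    std::string get(const std::string &key) const {
        auto it = data_.find(key);
        return it == data_.end() ? std::string() : it->second;
    }

private:
    std::uint64_t next_execute_id_ = 0;
    std::map<std::string, std::string> data_;
};
```

Advancing next_execute_id_ even for empty values keeps this state machine in step with the shared log when several state machines consume the same instance sequence.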

So far, we have met most of the requirements of a distributed consensus library, but one common and important requirement of practical libraries remains: dynamic member management. There are several ways to implement this function:

joint consensus (two-phase approach)

• Log entries are replicated to all servers in both configurations.

• Any server from either configuration may serve as a leader.

• Agreement (for elections and entry commitment) requires separate majorities from both the old and new configurations.

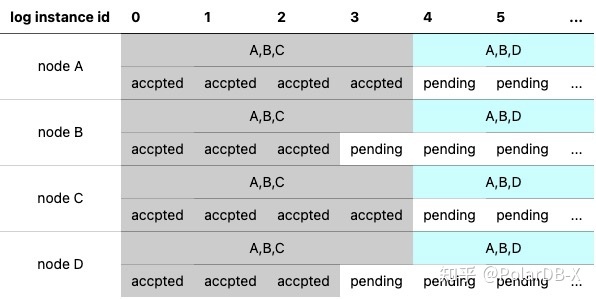

The reason why RAFT does not adopt a one-step change is that it can produce quorums that do not intersect in the intermediate state. Consider the following scenario: node C is to be replaced with node D. At log instance id 3, due to delay and other reasons, nodes A and C have not yet applied the membership change and still treat ABC as the members, so A and C form a quorum and accept a value. Nodes B and D, which know that the latest membership is ABD, can also form a quorum and accept a different value, which breaks the Paxos algorithm.
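The scenario can be checked mechanically with a few lines of set arithmetic. The helpers `is_quorum` and `intersects` below are hypothetical and only model majority quorums over a member set.

```cpp
#include <cassert>
#include <cstddef>
#include <set>

using Nodes = std::set<char>;

// True if `voters` contains a strict majority of `members`.
inline bool is_quorum(const Nodes &members, const Nodes &voters) {
    std::size_t hits = 0;
    for (char v : voters) hits += members.count(v);
    return hits > members.size() / 2;
}

// True if the two node sets share at least one node.
inline bool intersects(const Nodes &a, const Nodes &b) {
    for (char x : a)
        if (b.count(x)) return true;
    return false;
}
```

With members ABC, {A, C} is a quorum; with members ABD, {B, D} is a quorum; yet the two sets share no node, so no acceptor can notice the conflict. Under a single membership, any two majorities always overlap.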

The essence of this problem is that the membership change is not atomic while the consensus algorithm is running: different nodes see different memberships in the intermediate state. This can be solved by writing the membership change into the log, replaying it on each node through the state machine, and using multi-version member control to apply the correct membership for each log instance id. In this way, membership changes are integrated into the Paxos algorithm and take effect atomically.
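Multi-version member control can be sketched as a map from "effective from this instance id" to the voter set. `VersionedMembers` is a hypothetical simplification of what a CpeerManager implementation might keep. In particular, the rule that a change chosen at instance N takes effect from N + 1 is an assumption made for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <set>
#include <stdexcept>
#include <utility>

class VersionedMembers {
public:
    // Records that `voters` is the membership from `effective_from` onwards.
    void apply_change(std::uint64_t effective_from, std::set<std::uint64_t> voters) {
        versions_[effective_from] = std::move(voters);
    }

    // Returns the membership that governs `instance_id`.
    const std::set<std::uint64_t> &voters_at(std::uint64_t instance_id) const {
        auto it = versions_.upper_bound(instance_id);  // first version after the id
        if (it == versions_.begin())
            throw std::out_of_range("no membership known for this instance");
        --it;  // latest version whose start is <= instance_id
        return it->second;
    }

    // Majority check against the membership of that specific instance.
    bool is_quorum(std::uint64_t instance_id, const std::set<std::uint64_t> &node_set) const {
        const std::set<std::uint64_t> &voters = voters_at(instance_id);
        std::size_t hits = 0;
        for (std::uint64_t n : node_set) hits += voters.count(n);
        return hits > voters.size() / 2;
    }

private:
    std::map<std::uint64_t, std::set<std::uint64_t>> versions_;
};
```

Because every node replays the same log, every node derives the same version map, so all of them agree on which membership decides a given instance.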

Note that the group id (for multiple Paxos Groups) and the log instance id are passed to the communication and member management interfaces (the communication interface obtains them from the message), which makes dynamic membership changes easier to manage in an implementation.

    class Ccommunication {
    public:
        virtual int send(const node_id_t &target_id, const message_t &message) = 0;

        enum broadcast_range_e {
            broadcast_voter = 0,
            broadcast_follower,
            broadcast_all,
        };

        enum broadcast_type_e {
            broadcast_self_first = 0,
            broadcast_self_last,
            broadcast_no_self,
        };

        virtual int broadcast(const message_t &message, broadcast_range_e range, broadcast_type_e type) = 0;
    };

    class CpeerManager {
    public:
        virtual bool is_voter(const group_id_t &group_id, const instance_id_t &instance_id,
                              const node_id_t &node_id) = 0;
        virtual bool is_all_peer(const group_id_t &group_id, const instance_id_t &instance_id,
                                 const std::set<node_id_t> &node_set) = 0;
        virtual bool is_all_voter(const group_id_t &group_id, const instance_id_t &instance_id,
                                  const std::set<node_id_t> &node_set) = 0;
        virtual bool is_quorum(const group_id_t &group_id, const instance_id_t &instance_id,
                               const std::set<node_id_t> &node_set) = 0;
    };

So far, a complete and modular Paxos library has been implemented. It covers most of the capabilities we expect and is highly extensible. Of course, there are trade-offs. The library implements only one Paxos Group and decides values strictly one by one in sequence. It gives up pipelining in exchange for the ability to take over mastership quickly (the holes that pipelining leaves behind after a quick preemption are very unfriendly to state machine implementations). Multiple groups can still be used to achieve pipelining, with little difference in efficiency. Further optimizations (such as hybrid persistence of stored logs and state machines, and grouping (batching) of messages) can be freely built on the provided interfaces.

Several examples of extended code are provided here for reference, including:
