By Lu Chen and Dong Chen

The OpenYurt project aims to extend the powerful control capabilities of Kubernetes from the cloud to the edge and to integrate massive, heterogeneous edge resources into a unified edge computing platform. However, some characteristics of edge scenarios do not match the assumptions of Kubernetes, which was designed to run in the cloud. OpenYurt needs to bridge this gap, and edge autonomy was designed in this context.

Unlike the secure and stable network environment in the cloud, edge scenarios usually place edge nodes and cloud nodes on different network planes, so edge nodes must connect to the cloud over the Internet. This public network connection brings several problems, such as the high cost of public network traffic, the need for cross-domain communication, and the instability of public network connections. OpenYurt provides solutions to all of these problems; this article focuses on the instability of public network connections.

Today, we want to share the OpenYurt community's thoughts on these questions and the edge autonomy capability OpenYurt has designed to address them.

Let's first see how native Kubernetes behaves in an unstable network environment. When the network connection of a node is interrupted, the Kubernetes cluster takes a series of actions [1] to handle this event.

When a node fails to report its heartbeat, the Kubernetes cluster concludes that the node is abnormal. As an abnormal resource, the node is no longer considered suitable for running upper-layer applications, and its pods are eventually evicted. This approach suits machines that are online 24 hours a day in a data center, but it is questionable for edge scenarios with complex network environments.

First, in some edge scenarios, edge nodes are deliberately disconnected from the network, for example to meet offline maintenance requirements. In such cases, native Kubernetes evicts the edge containers, and some edge components report errors (or exit) because they cannot connect to the APIServer or synchronize resources. This is unacceptable. More fundamentally, a node may fail to report its heartbeat for two reasons: either the machine has failed and taken all its workloads down with it, or the machine is still running normally but its network is disconnected. Kubernetes does not distinguish between the two cases and marks any node without a heartbeat as NotReady. In edge scenarios, however, network disconnection is common and is sometimes even a requirement. We need to distinguish between the two causes and migrate and rebuild pods only when a node actually fails.

Second, a typical class of edge services requires that pods not be evicted even when a node fails, because specific pods must be bound to specific nodes. For example, an image-processing application must be bound to the machine connected to its camera, and a smart-transportation application must stay on the machine at a particular intersection. Binding applications to nodes violates the Kubernetes design principle of isolating upper-layer applications from the underlying resources. However, it is a genuine requirement of edge services, and OpenYurt needs to support it.

Finally, we need to consider restarts during a network disconnection. In the native Kubernetes architecture, the node agent (kubelet) keeps container information in memory, and it cannot fetch service data from the cloud while the network is disconnected. If the edge node or its kubelet restarts abnormally during this time, the service containers cannot be recovered.

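To summarize the requirement for edge autonomy in one sentence: edge services must keep running on weak or even disconnected networks. Achieving this within the Kubernetes system means solving the following problems: (1) recovering services when a node or its kubelet restarts while disconnected, (2) preventing pods from being evicted when only the network is down, and (3) binding specific pods to specific nodes.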

OpenYurt offers a complete set of solutions from cloud to edge to address the challenges of edge autonomy.

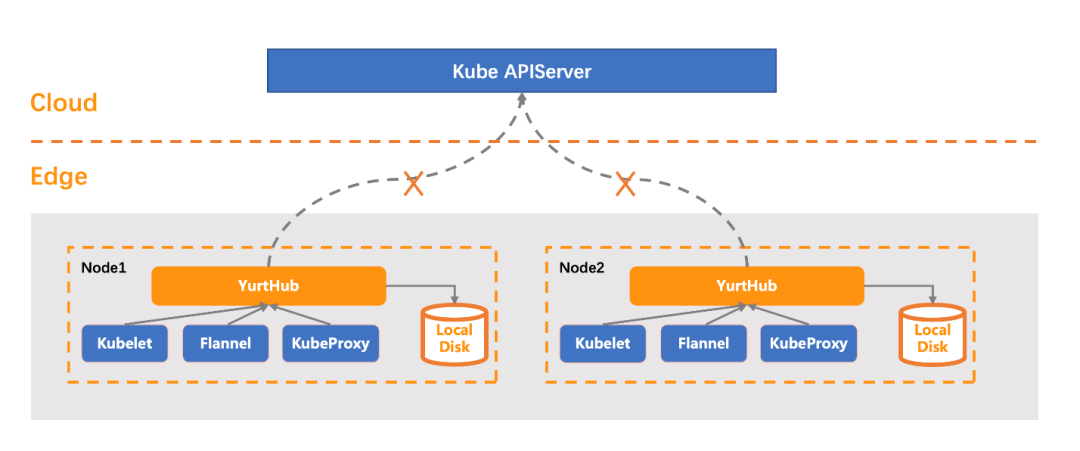

At the edge, OpenYurt introduces an important component: YurtHub. YurtHub provides local data caching and request proxying on edge nodes. Communication between the cloud and both the node's system components (such as kubelet) and its business containers is proxied through this component.

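YurtHub solves the problem of restarts during network disconnection (problem 1): because it caches cloud data on the node, a restarted node can recover its service containers even while the cloud is unreachable. The additional layer it wraps around the APIServer also enables many other important OpenYurt capabilities [2].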

OpenYurt enhances the pod eviction policy of native Kubernetes to a certain extent. In native Kubernetes, if an edge node has not reported its heartbeat for a certain period, the cloud controller evicts the pods on the node (deleting them and rebuilding them on healthy nodes). Edge businesses have different requirements in cloud-edge collaboration scenarios. Some businesses expect that when the cloud-edge network is disconnected and the heartbeat cannot be reported (while the node itself is healthy), their pods are kept in place (no eviction occurs), and pods are migrated and rebuilt only when the node itself fails.
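The policy described above can be sketched in Go. The sketch assumes that some local signal, represented by a hypothetical AliveLocally flag, tells whether a node is still running when its cloud heartbeat is missing; the types and functions are invented for this illustration and are not OpenYurt code.

```go
package main

import "fmt"

// Cause is the reason a node stopped reporting heartbeats to the cloud.
type Cause int

const (
	Healthy       Cause = iota
	CloudLinkDown       // node is running; only its cloud-edge connection is lost
	NodeFailed          // the machine itself (and its workloads) is down
)

// Observation is a hypothetical input: whether the cloud received the node's
// heartbeat, and whether some local source can still see the node alive.
type Observation struct {
	CloudHeartbeatOK bool
	AliveLocally     bool
}

// classify separates the two cases that native Kubernetes treats the same way.
func classify(o Observation) Cause {
	switch {
	case o.CloudHeartbeatOK:
		return Healthy
	case o.AliveLocally:
		return CloudLinkDown
	default:
		return NodeFailed
	}
}

// shouldMigratePods encodes the desired edge policy: rebuild pods elsewhere
// only when the node has really failed, not when the network is merely down.
func shouldMigratePods(o Observation) bool {
	return classify(o) == NodeFailed
}

func main() {
	fmt.Println(shouldMigratePods(Observation{CloudHeartbeatOK: false, AliveLocally: true}))
	fmt.Println(shouldMigratePods(Observation{CloudHeartbeatOK: false, AliveLocally: false}))
}
```

The hard part in practice is obtaining a trustworthy AliveLocally signal from the cloud's point of view; in OpenYurt 1.2 that role is played by the heartbeat proxy described next.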

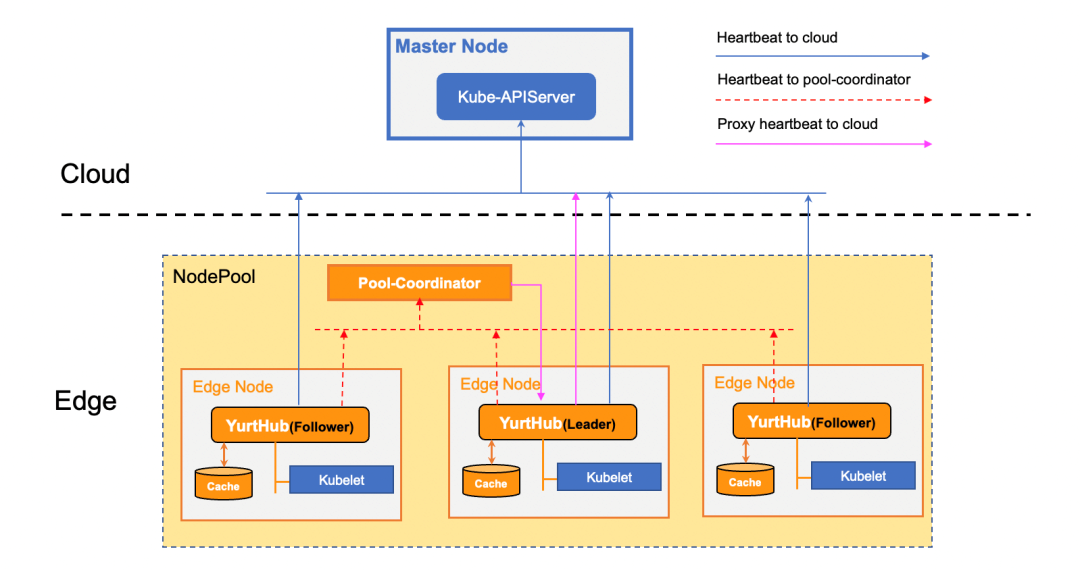

OpenYurt 1.2 provides a centralized heartbeat proxy mechanism based on Pool-Coordinator and YurtHub, as shown in the following figure:

The heartbeat proxy mechanism implemented by Pool-Coordinator and YurtHub ensures that a node's heartbeat continues to be reported to the cloud when the node loses its cloud-edge network connection, so the pods on the node are not evicted (problem 2). At the same time, a special taint is added in real time to any node whose heartbeat is reported by proxy, so that new pods are not scheduled to it.
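The decision a pool leader makes can be sketched as follows. This Go sketch assumes a simplified model in which a leader YurtHub that can still reach the cloud sees, through Pool-Coordinator, which nodes in its pool are still heartbeating locally. The types, the function leaderReport, and the taint key are hypothetical; the taint key in particular is a placeholder, not the one OpenYurt uses.

```go
package main

import (
	"fmt"
	"sort"
)

// Placeholder taint used in this sketch to keep new pods off nodes whose
// heartbeat is being proxied; the key OpenYurt actually uses may differ.
const proxiedTaint = "example.openyurt.io/heartbeat-proxied:NoSchedule"

// NodeView is what a leader might see for one node in its pool.
type NodeView struct {
	Name               string
	HeartbeatInPool    bool // node still writes heartbeats inside the pool
	CloudLinkReachable bool // node can report directly to the cloud
}

// CloudRecord is what the cloud ends up with for that node.
type CloudRecord struct {
	HeartbeatFresh bool
	Taints         []string
}

// leaderReport leaves nodes that report on their own untouched, forwards the
// heartbeat of nodes that are alive in the pool but cut off from the cloud
// (and taints them NoSchedule), and lets nodes with no heartbeat anywhere go
// stale so that normal failure handling applies.
func leaderReport(pool []NodeView) map[string]CloudRecord {
	out := make(map[string]CloudRecord, len(pool))
	for _, n := range pool {
		switch {
		case n.CloudLinkReachable:
			out[n.Name] = CloudRecord{HeartbeatFresh: true}
		case n.HeartbeatInPool:
			out[n.Name] = CloudRecord{HeartbeatFresh: true, Taints: []string{proxiedTaint}}
		default:
			out[n.Name] = CloudRecord{HeartbeatFresh: false}
		}
	}
	return out
}

func main() {
	pool := []NodeView{
		{Name: "edge-1", HeartbeatInPool: true, CloudLinkReachable: true},
		{Name: "edge-2", HeartbeatInPool: true, CloudLinkReachable: false},  // cloud link down
		{Name: "edge-3", HeartbeatInPool: false, CloudLinkReachable: false}, // machine down
	}
	records := leaderReport(pool)
	names := make([]string, 0, len(records))
	for name := range records {
		names = append(names, name)
	}
	sort.Strings(names) // deterministic output order
	for _, name := range names {
		fmt.Printf("%s: fresh=%v taints=%v\n", name, records[name].HeartbeatFresh, records[name].Taints)
	}
}
```

Letting edge-3 go stale is deliberate: a machine that is down everywhere should still go through normal eviction so that its pods are rebuilt elsewhere.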

Some edge services require that their pods not be evicted even when a node fails, because the service is bound to that node. OpenYurt approaches this problem from two perspectives.

The first is from the node's perspective: if you want all pods on a machine to be bound to that machine, annotate the node with node.beta.openyurt.io/autonomy=true.

The second is from the business's perspective. The smart-transportation service mentioned above, for example, wants its lifecycle to match that of the node it runs on. OpenYurt 1.2 adds the apps.openyurt.io/binding label: a pod carrying this label needs to be bound to its node.

In both cases, binding is implemented by adding tolerations to the corresponding pods.
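A minimal Go sketch of toleration-based binding follows, assuming binding works by making the pod tolerate the node.kubernetes.io/not-ready and node.kubernetes.io/unreachable NoExecute taints with no time limit. The annotation and label keys come from the text above; the trimmed Toleration and Pod structs, the helper names, and the choice to treat any value of the binding label as a request for binding are assumptions made for this illustration, and OpenYurt's own controller may differ in detail.

```go
package main

import "fmt"

// Toleration is a trimmed-down copy of the Kubernetes core/v1 Toleration
// fields used here; TolerationSeconds == nil means "tolerate forever".
type Toleration struct {
	Key               string
	Operator          string
	Effect            string
	TolerationSeconds *int64
}

// Pod keeps only the fields this sketch needs.
type Pod struct {
	Labels      map[string]string
	Tolerations []Toleration
}

const (
	nodeAutonomyAnnotation = "node.beta.openyurt.io/autonomy"
	podBindingLabel        = "apps.openyurt.io/binding"
)

// Taints that Kubernetes places on nodes that are not ready or unreachable.
var evictionTaints = []string{"node.kubernetes.io/not-ready", "node.kubernetes.io/unreachable"}

// needsBinding applies the two perspectives: a node-level annotation, or a
// pod-level label (this sketch treats the label's presence as the signal).
func needsBinding(nodeAnnotations map[string]string, pod Pod) bool {
	_, labeled := pod.Labels[podBindingLabel]
	return nodeAnnotations[nodeAutonomyAnnotation] == "true" || labeled
}

// bindToNode makes the pod tolerate the eviction taints indefinitely, so it
// stays on its node while the node is NotReady or unreachable.
func bindToNode(pod *Pod) {
	for _, key := range evictionTaints {
		found := false
		for i := range pod.Tolerations {
			t := &pod.Tolerations[i]
			if t.Key == key && t.Effect == "NoExecute" {
				t.TolerationSeconds = nil // drop any time limit on the toleration
				found = true
			}
		}
		if !found {
			pod.Tolerations = append(pod.Tolerations,
				Toleration{Key: key, Operator: "Exists", Effect: "NoExecute"})
		}
	}
}

func main() {
	limit := int64(300)
	pod := Pod{
		Labels: map[string]string{podBindingLabel: "true"},
		Tolerations: []Toleration{{Key: "node.kubernetes.io/unreachable", Operator: "Exists",
			Effect: "NoExecute", TolerationSeconds: &limit}},
	}
	if needsBinding(map[string]string{}, pod) {
		bindToNode(&pod)
	}
	for _, t := range pod.Tolerations {
		fmt.Printf("%s %s unlimited=%v\n", t.Key, t.Effect, t.TolerationSeconds == nil)
	}
}
```

Leaving TolerationSeconds unset is what turns a temporary tolerance into a permanent one: in Kubernetes, a NoExecute toleration without tolerationSeconds lets the pod remain on the tainted node indefinitely.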

In edge scenarios, the cloud-edge network connection is unstable, so the edge needs a certain degree of autonomy when cloud support is unavailable. Building on the native Kubernetes architecture, OpenYurt provides a non-intrusive solution to several pain points of edge autonomy (node restart, pod eviction, and binding services to nodes).

OpenYurt 1.2 enhances edge autonomy based on the Pool-Coordinator + YurtHub architecture. There is still a lot of room for improvement in edge autonomy. For example, beyond keeping basic pods running while disconnected, OpenYurt will provide node pool O&M capabilities in later versions. Anyone interested is welcome to contribute and help shape the de facto standard for a stable, reliable, non-intrusive cloud-native edge computing platform.

[1] A series of actions to handle the event (KEP-589, Efficient Node Heartbeats): https://github.com/kubernetes/enhancements/blob/master/keps/sig-node/589-efficient-node-heartbeats/README.md

[2] Many other important OpenYurt capabilities (OpenYurt documentation, YurtHub): https://openyurt.io/docs/core-concepts/yurthub/
