By Cloud-Native SIG

The secure container that hosts a single container in a micro virtual machine (VM) is now used in serverless computing, as the containers are isolated through the microVMs. There are high demands on the high-density container deployment and high-concurrency container startup to improve both the resource utilization and user experience, as user functions are fine-grained in serverless platforms. Our investigation shows that the entire software stacks, containing the cgroups in the host operating system, the guest operating system, and the container rootfs for the function workload, together result in low deployment density and slow startup performance at high-concurrency. We therefore propose and implement a lightweight secure container runtime, named RunD, to resolve the above problems through a holistic guest-to-host solution. With RunD, over 200 secure containers can be started in a second, and over 2500 secure containers can be deployed on a node with 384GB of memory.

As the primary implementation in Serverless computing, function compute achieves more refined pay-as-you-go and higher data-center resource utilization by isolating fine-grained functions provided by developers and elastically managing and using resources. With the development of cloud-native technology and the popularity of microservices architecture, applications are increasingly split into finer-grained functions and deployed in Serverless mode.

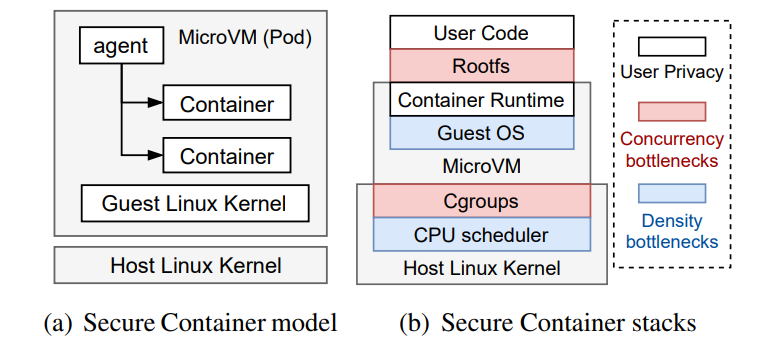

However, because of their weak isolation and security, traditional container technologies for function compute have gradually been replaced by secure container technologies, which combine microVMs with containers to provide strong isolation and low latency for applications. A secure container typically creates a normal container inside a lightweight microVM, as shown in Figure 1(a). In this way, users can build serverless services on the existing container infrastructure and ecosystem, and compatibility with the container runtime is preserved inside the microVM. Kata Containers and Firecracker provide practical experience in implementing such secure containers.

(Figure 1: The state-of-the-art secure container model, and several bottlenecks in the architecture stacks.)

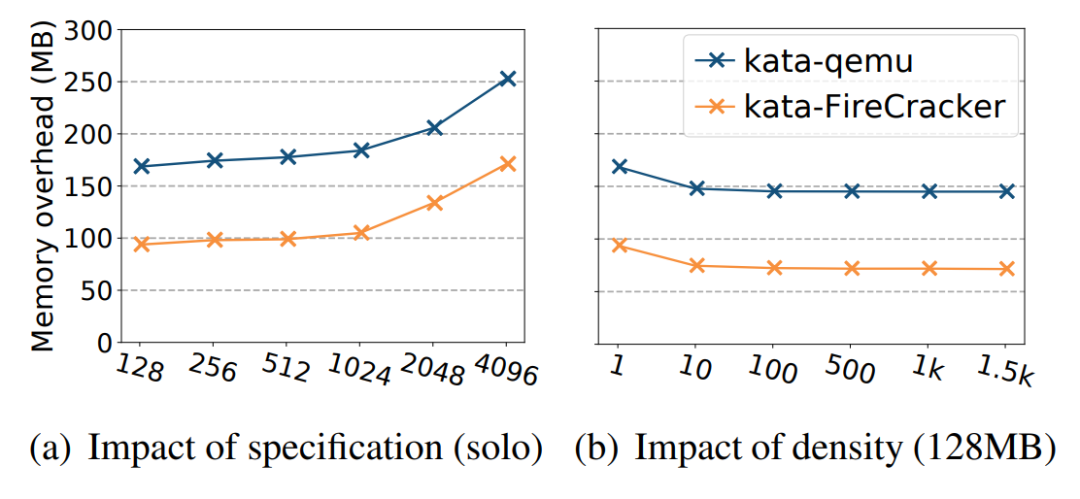

The lightweight and short-lived nature of functions makes high-density container deployment and high-concurrency container startup essential for serverless computing. For instance, 47% of Lambdas run with the minimum memory specification of 128MB in AWS, and about 90% of the applications never consume more than 400MB in Microsoft Azure. Since a physical node often has a large memory space (e.g., 384GB), it should be able to host many functions. Meanwhile, a large number of function invocations may arrive in a short time. However, the overhead of secure containers significantly reduces both the deployment density of functions and the concurrency of container startup.

Figure 1(b) shows the architecture hierarchy of a secure container. In general, the guest operating system (GuestOS) in the microVM and resource scheduling on the host are offloaded to the cloud provider. The rootfs is a filesystem and acts as the execution environment of user code. It is created by the host and passed to the container runtime in the microVM. On the host side, cgroups are used to allocate resources to secure containers, and the CPU scheduler manages the resource allocation. The complex hierarchy of secure containers brings extra overhead.

Starting from Kata Containers, a state-of-the-art open-source secure container technology, this article analyzes the bottlenecks in the host-to-guest architecture and puts forward three key observations and challenges for high-density deployment of secure containers in function compute scenarios:

We propose and implement a lightweight secure container runtime, named RunD, to resolve the above problems through a holistic guest-to-host solution. RunD solves the three high-density and high-concurrency challenges above by introducing an efficient file system with read/write separation, a pre-patched and streamlined guest kernel, and a globally maintainable lightweight cgroup pool.

According to our evaluation, RunD boots to application code in 88ms, and can launch 200 secure containers per second on a node. On a node with 384GB memory, over 2,500 secure containers can be deployed with RunD. RunD is adopted as Alibaba serverless container runtime serving more than 1 million functions and almost 4 billion invocations daily. The online statistics demonstrate that RunD enables the maximum deployment density of over 2,000 containers per node and supports booting at most 200 containers concurrently with a quick end-to-end response.

The section above introduces RunD. This section discusses the current design of secure containers and why RunD is necessary.

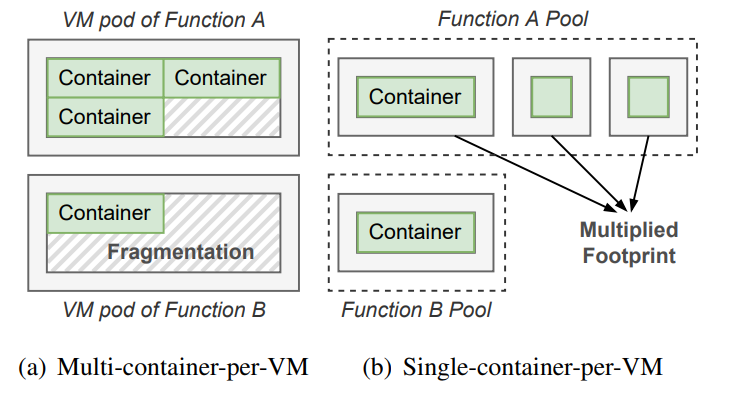

Based on different levels of security and isolation requirements, there are generally two categories of secure containers in production environments. Figure 2(a) shows the multi-container-per-VM secure container model, which only isolates functions. In this model, a virtual machine (VM) hosts the containers for the invocations of the same function, and those containers share the guest operating system of the VM. Invocations of different functions are therefore isolated, but invocations of the same function are not. Since the number of required containers for each function varies, this model results in memory fragmentation. Although fragmented memory can be reclaimed at runtime, doing so may significantly affect function performance and can even crash the VM when memory hot-unplug fails.

(Figure 2: Two practices of the secure container model)

Figure 2(b) shows the single-container-per-VM secure container model, which isolates each function invocation. Current serverless computing providers mainly use this secure container model. In this model, each invocation is served by a container in a microVM. This model does not introduce memory fragmentation, but the microVMs themselves incur heavy memory overhead: each microVM needs to run its own guest operating system, which multiplies the memory footprint. The secure container depends on the security model of hardware virtualization and the VMM, explicitly treating the guest kernel as untrusted through syscall inspections. With isolation and security as the prerequisite, this work targets the single-container-per-VM secure container model.

If the secure container is applied for function isolation in the Serverless scenario, the fine-grained natural isolation will bring new and complex scenarios. Thus, one hard requirement and two derivative requirements need to be met:

In this section, we analyze the problems of achieving high-concurrency startup and high-density deployment with secure containers.

We use Kata Containers as the representative secure container for the following studies.

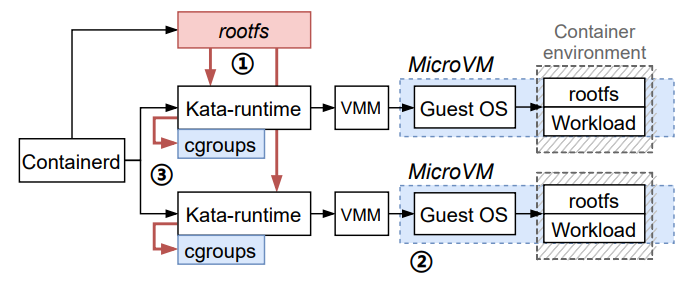

Figure 3: The steps of starting up multiple Kata containers concurrently. The concurrency bottleneck results from creating rootfs (step ① in red block) and creating cgroups (step ③ in red flowline). The density bottlenecks result from the memory footprint of the microVM (step ② in blue block) and the scheduling of massive cgroups (step ③ in blue block).

Figure 3 shows the steps of starting Kata containers. First, containerd concurrently creates the container runtime, Kata-runtime, and prepares the runc-container rootfs. Second, the hypervisor loads the GuestOS and the prepared rootfs to launch a runc-container in the microVM. Third, the function workload is downloaded into the container and may start to run.

Compared with starting traditional containers, we have two observations when starting up secure containers.

Figure 3 also shows three bottlenecks we found that result in the above two observations. In general, the inefficiency of creating rootfs and cgroups results in low container startup concurrency. The high memory footprint and scheduling overhead result in low container deployment density. We analyze the bottlenecks in the following subsections.

In general, the rootfs can be exposed to the container runtimes in the microVMs through two interfaces to construct the image layers: filesystem sharing (e.g., 9pfs, virtio-fs) and block devices (e.g., virtio-blk).

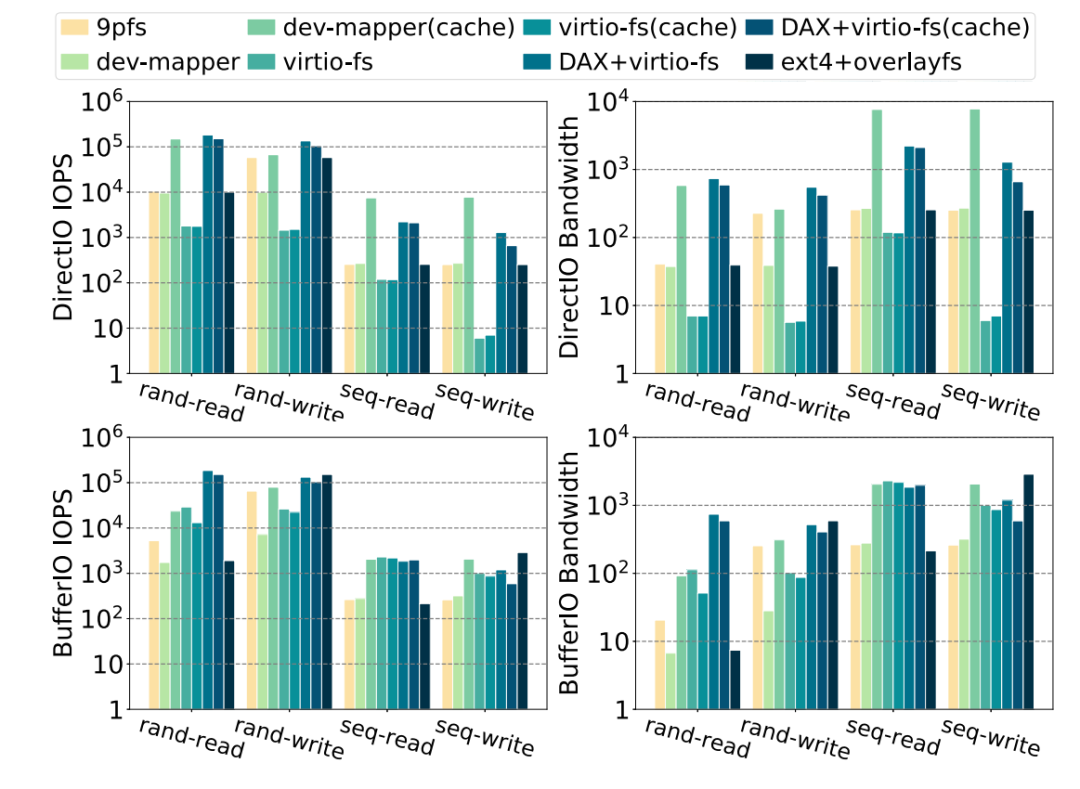

To select a suitable container rootfs solution for high-concurrency scenarios, we compare the performance of the 9p, devicemapper, and virtio-fs solutions. The tests cover buffered I/O and direct I/O for sequential read, sequential write, random read, random write, and mixed random read/write, and focus on IOPS and bandwidth. As a baseline, runc is also tested on the host in an ext4 + overlayfs storage environment.

(Figure 4: The IOPS/bandwidth performance of rand/seq directIO/BufferIO read/write when using different rootfs mapping in Kata-runtime)

Based on this comparison, we rule out the 9p solution for a high-density, high-concurrency container architecture because of its POSIX semantic compatibility problems and its poor performance in mmap operations. We then tested the devicemapper and virtio-fs solutions and found the following problems with the two solutions:

These are the insights we came up with: under the existing Kata architecture and storage solutions, no single storage solution suits high-density and high-concurrency scenarios. We need to change the rootfs storage to a read/write splitting mode. For reads, we can use the DAX + virtio-fs solution to share the page cache between host and guest while preserving performance, thus reducing memory overhead. For writes, the high-overhead solutions based on device mapper need a shorter creation time for writable-layer devices and a fix for the double page cache problem, while the low-performance solutions based on virtio-fs need to evolve to cope with high I/O stress and to reduce CPU overhead on the host. Therefore, none of the existing solutions is applicable as is, and a replacement solution is needed.

The per-instance resource footprint in high-density scenarios directly determines how many containers can be deployed. In high-density deployment, apart from the resources an instance needs to provide the specification requested by the user, the footprint of other components often leads to high overhead. The memory footprint of Firecracker was reported as 5MB at its release, but this is only the footprint of the Firecracker VMM. The running guest also consumes memory for the kernel's code and data segments, for memory-management structures (such as the struct page array), and for the running rootfs, so the additional footprint of an instance cannot be evaluated from the memory footprint of the VMM alone. For example, the actual average memory overhead of starting several AWS Lambda functions with 128MB of memory is 71MB, while the memory overhead of traditional QEMU is even higher, reaching 145MB.

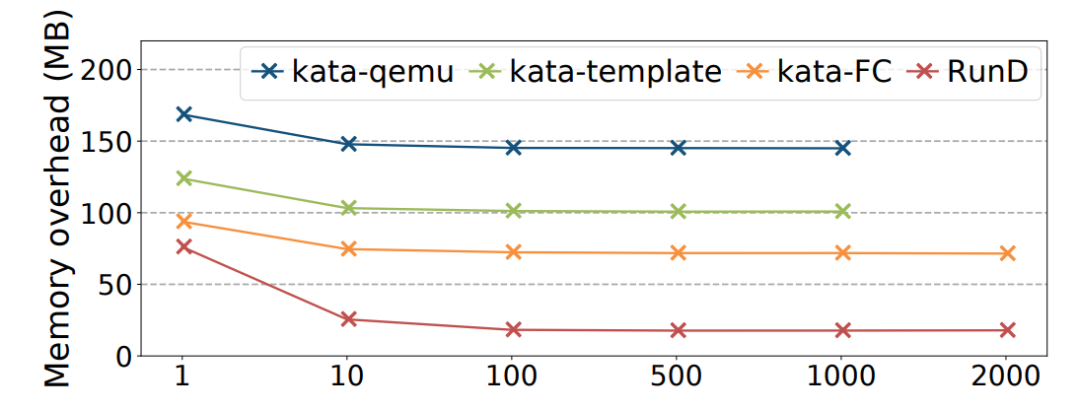

(Figure 5: Average Memory Overhead of Kata Secure Containers with Different Hypervisors)
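To see how per-instance overhead caps density, the following back-of-the-envelope sketch divides node memory by the memory each instance pins. It assumes, as a simplification, that the whole 384GB is available to instances and that every instance fully uses its 128MB specification. `max_instances` is a hypothetical helper written for this illustration, not part of any runtime.

```python
def max_instances(node_mem_gb, spec_mb, overhead_mb):
    """Upper bound on instances if each one pins spec + overhead megabytes.

    Simplifying assumption: all node memory is usable by instances and
    nothing is shared between them.
    """
    node_mb = node_mem_gb * 1024
    return int(node_mb // (spec_mb + overhead_mb))


# Overheads of 145 MB and 71 MB are the figures quoted in the text;
# 0 MB is the ideal case with no virtualization overhead at all.
for overhead_mb in (145, 71, 0):
    print(overhead_mb, "MB overhead ->", max_instances(384, 128, overhead_mb))
```

Under these assumptions, the 145MB case fits fewer than half of the instances that the ideal zero-overhead case would, which is why the per-instance footprint matters so much for 128MB functions.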

Currently, the state-of-the-art solution is to use templates to solve the double page cache problem for high-density deployment. In general, the guest kernel of each secure container instance on the same physical machine is the same. The template uses mmap instead of file I/O and maps the kernel file directly into guest memory. This way, the guest kernel can be loaded into memory on demand, and its text/rodata segments can be shared among multiple Kata instances. Moreover, content in the template that is never accessed is not loaded into physical memory, so the average memory consumption of secure container instances is greatly reduced.
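The mapping behavior that templates rely on can be illustrated with Python's `mmap` module. This is only a sketch of the general private (copy-on-write) file mapping idea, not the hypervisor's actual code; the file contents and the helper names `map_private` and `demo` are made up for illustration.

```python
import mmap
import os
import tempfile


def map_private(path):
    """Map a file copy-on-write: untouched pages are backed by the shared
    page cache, while written pages become private to this mapping."""
    with open(path, "rb") as f:
        return mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_COPY)


def demo():
    # A stand-in for a kernel image: one 4 KiB page of data.
    with tempfile.NamedTemporaryFile(delete=False) as f:
        f.write(b"KERNEL" + b"\0" * 4090)
        path = f.name
    try:
        guest_a = map_private(path)
        guest_b = map_private(path)
        guest_a[0:6] = b"PATCHD"  # guest A modifies its copy of the page
        with open(path, "rb") as f:
            on_disk = f.read(6)
        result = (guest_a[:6], guest_b[:6], on_disk)
        guest_a.close()
        guest_b.close()
        return result
    finally:
        os.unlink(path)


print(demo())
```

The patched mapping diverges while the second mapping and the file on disk keep the original bytes. A page that a guest writes to becomes a private copy and can no longer be shared with other instances.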

However, due to the self-modifying code in the operating system kernel, template technology is not as efficient as we expect. Self-modifying code rewrites instructions on demand while it runs, and the Linux kernel relies heavily on it to improve startup and runtime performance. As a result, a lot of memory that should be read-only is still modified by the guest kernel. To study the impact of self-modifying code, we start a virtual machine with a CentOS 4.19 kernel from a template. After a 128MB instance is started through the template, we find that of the 18MB (18432K) of guest kernel code and read-only data, slightly less than 10MB (10012K) is accessed during startup, and of the data accessed, more than 7MB (7928K) is modified. This example shows that when mmap is used to reduce the memory consumption of kernel image files, self-modifying code reduces the sharing efficiency.
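The measurement can be turned into proportions with a few lines of arithmetic. The three kilobyte figures are the ones quoted in the text; the variable names are only for illustration.

```python
# Figures from the startup measurement quoted in the text, in KB.
total_kb = 18432     # guest kernel code and read-only data
accessed_kb = 10012  # touched during startup
modified_kb = 7928   # touched and then modified

never_loaded_kb = total_kb - accessed_kb        # stays out of physical memory
still_shareable_kb = accessed_kb - modified_kb  # loaded and left unmodified
modified_fraction = modified_kb / accessed_kb   # share of loaded pages made private

print(never_loaded_kb, still_shareable_kb, round(modified_fraction * 100, 1))
```

Roughly four fifths of the memory that is actually loaded ends up modified, so only about 2MB of the loaded kernel pages remains shareable across instances.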

Therefore, our second insight is that the amount of memory available for sharing decreases when we use the template technology due to the self-modifying nature of the kernel. If we can solve the problem brought by the self-modifying code when the guest kernel starts during the creation process of using templates, we can save more memory to offset most of the dynamic memory consumption.

Cgroup is designed for resource control and abstraction of processes. In serverless computing, the frequency of function invocations varies widely, so the corresponding secure containers are frequently created and recycled. For instance, in our serverless platform, at most 200 containers would be created and recycled on a physical node concurrently in a second. Such frequent creation and recycling challenge the cgroup mechanism on the host.

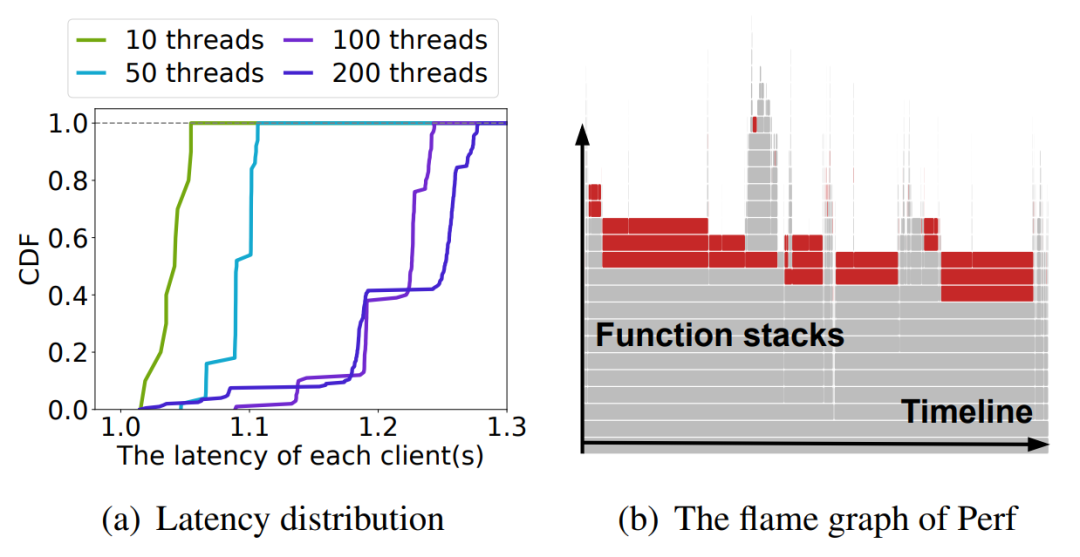

We measure the performance of cgroup operations when creating 2,000 containers concurrently. In the experiment, we use different numbers of threads to perform cgroup operations. Figure 6(a) shows the cumulative distribution of container creation latencies. In the single-thread scenario, creating and initializing cgroups when a container is started takes about 1ms. When the same total number of cgroups is created by multiple threads in parallel, performance improves slightly at low startup concurrency. At high concurrency, counter-intuitively, although each thread creates fewer cgroups, the total time increases and is even worse than sequential operation on a single thread.

(Figure 6: The performance of cgroup operations when creating 2,000 containers concurrently.)

The reason is that the implementation of cgroup is very complicated, involving more than ten types of resource control (cgroup subsys). The kernel introduces cgroup_mutex, css_set_lock, freezer_mutex, freezer_lock, and other large locks to simplify the design of cgroup, which serializes cgroup-related operations. In the high-concurrency scenario, serial execution of the entire creation procedure reduces the efficiency of concurrent creation and increases the long-tail latency. Figure 6(b) shows the flame graph of creating 2,000 cgroups using 10 threads concurrently. In the figure, the red parts show where mutex locks are active. When the cgroup mutex uses optimistic spinning, which is the default, waiters that fail to acquire the lock spin on it. This leads to heavy CPU consumption and delayed exit from the critical section in multi-threaded scenarios, thus affecting the running function instances.
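A simple bound shows why adding threads stops helping once operations are serialized by a global lock. The sketch below is an idealized model, not a measurement: `makespan_lower_bound_ms` is a hypothetical helper, the split between locked and unlocked time is an assumption, and the extra CPU cost of optimistic spinning is not modeled at all.

```python
def makespan_lower_bound_ms(n_ops, threads, locked_ms, unlocked_ms):
    """Lower bound on total time for n_ops operations.

    Each operation spends locked_ms inside a global lock (serialized across
    all threads) and unlocked_ms outside it (perfectly parallel).
    """
    serialized = n_ops * locked_ms
    parallel = n_ops * (locked_ms + unlocked_ms) / threads
    return max(serialized, parallel)


# Assumption: the whole ~1 ms cgroup creation runs under the lock.
for threads in (1, 10, 50):
    print(threads, makespan_lower_bound_ms(2000, threads, 1.0, 0.0))
```

With the whole operation under the lock, the bound stays at 2,000ms no matter how many threads are used. Contention and spinning can only push the real time above this bound, which matches the direction of the measured results.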

In addition, the cgroup mechanism was designed for general business scenarios, without the expectation that it would be so widely used in cloud-native scenarios. A common observation is that there are often more than 10,000 cgroups for thousands of containers on a compute node. The PELT (Per-Entity Load Tracking) mechanism for load balancing in CFS iterates over all cgroups and processes when scheduling these containers. In this scenario, frequent context switching and hotspot functions that involve high-precision calculation in the scheduler become a bottleneck, accounting for 7.6% of the CPU cycles of the physical node, according to our measurement.

In view of the findings above, our third insight is that the cgroup on the host has the bottleneck of high density and high concurrency in the Serverless scenario. We need to:

1) Reduce the size of the critical section introduced by the mutex lock in cgroup or (preferably) eliminate it

2) At the same time, simplify the functions of cgroup and reduce the complexity of cgroup design

The above analysis reveals the bottlenecks in the host, the microVM, and the guest in achieving the high-concurrency startup and high-density deployment. We propose RunD, a holistic secure container solution that resolves the problem of duplicated data across containers, high memory footprint per VM, and high host-side cgroup overhead. In this section, we first show a general design overview of RunD, and then present the design of each component to resolve the corresponding problem.

One key implication for serverless runtimes guided the design of RunD: host-side overhead that is negligible for a traditional VM is amplified in the high-density, high-concurrency FaaS scenario, so even a seemingly trivial optimization can bring significant benefits.

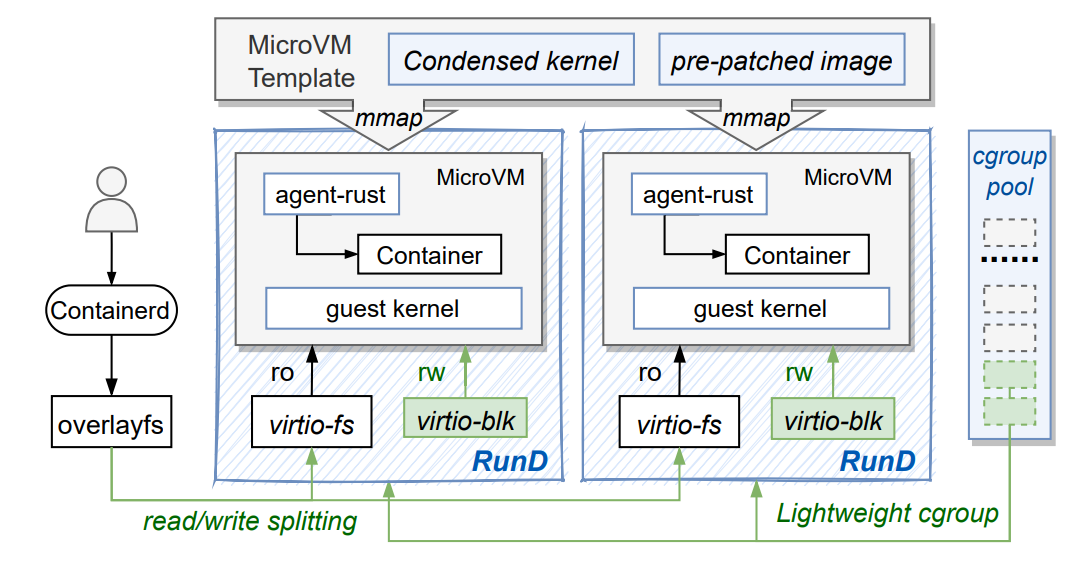

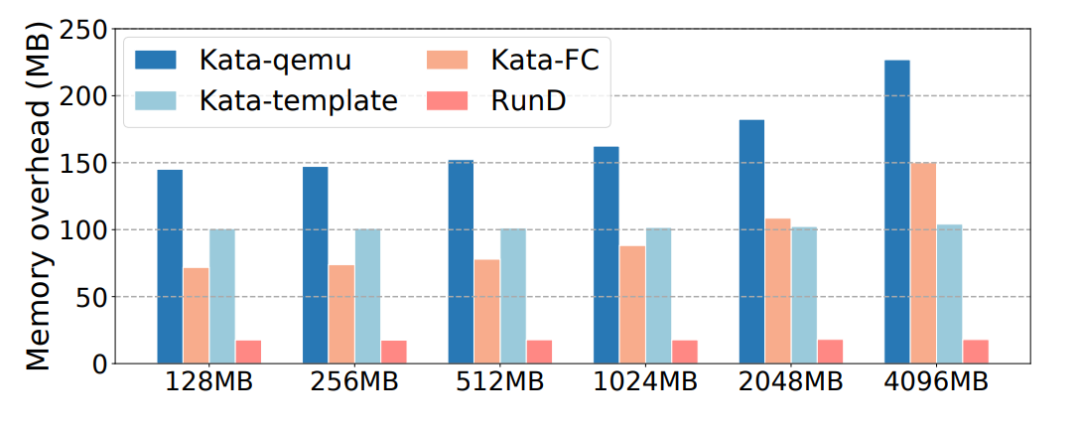

(Figure 7: The lightweight serverless runtime of RunD.)

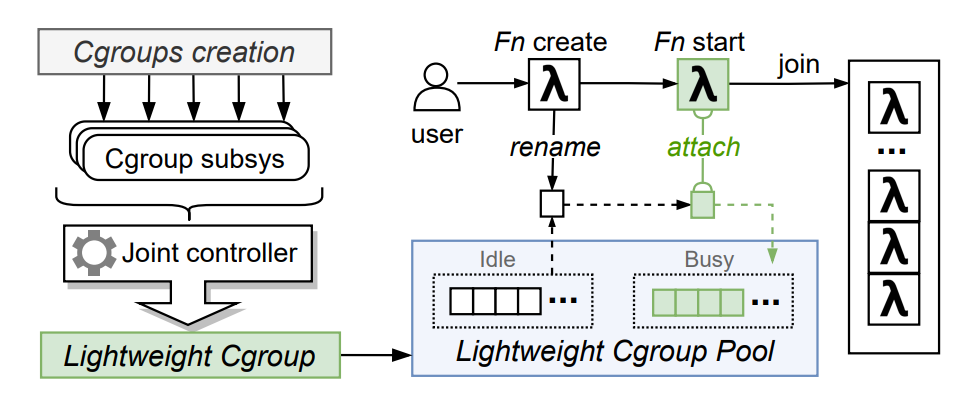

Figure 7 shows the RunD design and summarizes the host-to-guest full-stack solution. The RunD runtime implements read/write splitting by providing the read-only layer through virtio-fs, using the built-in storage file to create a volatile writable layer through virtio-blk, and mounting the two together as the final container rootfs using overlayfs. RunD leverages the microVM template that integrates the condensed kernel and adopts the pre-patched image to create a new microVM, further amortizing the overhead across different microVMs. RunD renames and attaches a lightweight cgroup from the cgroup pool for management when a secure container is created.
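The way the two layers come together can be sketched as the overlayfs mount the guest would issue. The option names `lowerdir`, `upperdir`, and `workdir` are standard overlayfs mount options; every path below is hypothetical, and the helper only builds the command line rather than reproducing RunD's actual agent code.

```python
def overlay_mount_args(lower, upper, work, target):
    """Build a mount(8) command line for an overlayfs rootfs.

    lower  : read-only image layer (here assumed to be a virtio-fs mount)
    upper  : writable layer directory (assumed to live on the virtio-blk device)
    work   : overlayfs work directory, on the same filesystem as upper
    target : where the merged container rootfs appears
    """
    opts = f"lowerdir={lower},upperdir={upper},workdir={work}"
    return ["mount", "-t", "overlay", "overlay", "-o", opts, target]


# Hypothetical guest-side paths, for illustration only.
args = overlay_mount_args(
    "/run/layers/ro", "/run/layers/rw/upper", "/run/layers/rw/work", "/run/rootfs"
)
print(" ".join(args))
```

Reads of unmodified files are served from the shared read-only layer, while every write lands in the per-sandbox upper directory.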

Based on the above optimization, a secure container is started in the following steps, when RunD is used as the secure container runtime.

We analyzed the advantages and disadvantages of various solutions in high-density and high-concurrency scenarios in container rootfs. The fundamental problems faced by container rootfs in microVM are problems caused by demands for data persistence. Traditional storage requirements and scenarios require very high data stability and data consistency; data cannot be lost under any circumstances. However, data persistence is completely unnecessary in function compute scenarios. All we need is temporary storage. When the node on the host is down and restarted, we only need to reinitialize and create new containers. The previous data is no longer needed. Therefore, we can completely put down this burden and make full use of the temporary storage that does not require persistence.

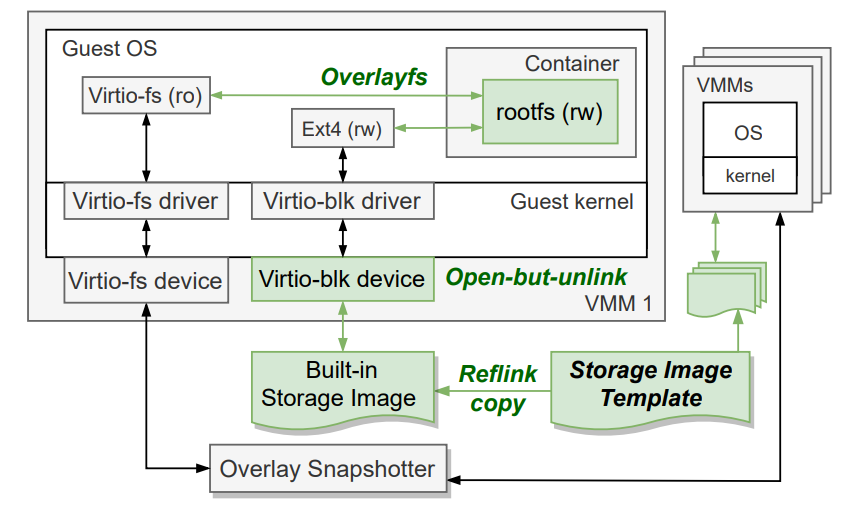

We can divide the container image into read-only and writable layers. The snapshotter of containerd is then only responsible for providing the read-only content of the image. The writable-layer resources belong to the sandbox and have temporary-storage semantics, which decouples the creation of containers from the creation of writable-layer devices. We use the reflink feature of the XFS file system to bind the backing file of the writable-layer device to the lifecycle of the sandbox (i.e., RunD), avoid the double page cache problem as much as possible, and implement CoW for writable-layer files. At the same time, we take advantage of open-but-unlinked files, so only one reflink copy is needed when creating the sandbox. After the file is opened, RunD unlinks it, and no other lifecycle management is required: the file is automatically deleted after the sandbox exits. The architecture we introduced is shown in Figure 8 below:

(Figure 8: Read/Write Splitting of Container rootfs)
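The reflink-then-unlink pattern can be sketched in a few lines on Linux. This is an illustration of the idea rather than RunD's code: the helper names and file names are hypothetical, `FICLONE` is the Linux clone ioctl, and the sketch falls back to a plain copy on filesystems without reflink support (where the space saving is lost).

```python
import fcntl
import os
import shutil
import tempfile

FICLONE = 0x40049409  # Linux ioctl request that reflinks one file into another


def clone_file(src, dst):
    """Reflink src to dst; fall back to a full copy. Returns True if reflinked."""
    with open(src, "rb") as s, open(dst, "wb") as d:
        try:
            fcntl.ioctl(d.fileno(), FICLONE, s.fileno())
            return True
        except OSError:
            pass  # filesystem has no reflink support
    shutil.copyfile(src, dst)
    return False


def open_ephemeral_layer(base_image, workdir):
    """Clone the base file, open it, and unlink the path immediately.

    The data stays reachable through the returned descriptor and is freed
    by the kernel once the descriptor is closed or the process exits.
    """
    path = os.path.join(workdir, "writable-layer.img")
    clone_file(base_image, path)
    fd = os.open(path, os.O_RDWR)
    os.unlink(path)
    return fd


with tempfile.TemporaryDirectory() as tmp:
    base = os.path.join(tmp, "base.img")
    with open(base, "wb") as f:
        f.write(b"blank-fs")
    fd = open_ephemeral_layer(base, tmp)
    path_gone = not os.path.exists(os.path.join(tmp, "writable-layer.img"))
    os.pwrite(fd, b"dirty", 0)          # writes go to the clone only
    layer_head = os.pread(fd, 8, 0)
    with open(base, "rb") as f:
        base_after = f.read()
    os.close(fd)                        # storage is released here
```

Because the path is already gone, no cleanup step is needed when the sandbox dies, even if it crashes.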

The CoW of the writable-layer files is implemented through reflink. Since reflink is essentially a block device solution, its read and write performance is virtually the same as that of devicemapper. Compared with the traditional rootfs implementation based on block devices, the disk usage of the reflink method is significantly lower (from 60% (4500 iops, 100MB/s) to 20% (1500 iops, 8MB/s)) when 200 containers are created concurrently. The average time to create writable-layer devices is reduced from 207ms to 0.2ms, a drop of about three orders of magnitude.

In the Serverless scenario, many features in the guest kernel are unnecessary and memory-intensive, so we can turn off these options when compiling. When streamlining the guest kernel, we obey the following principles:

By streamlining the guest kernel case by case, the memory overhead is reduced by about 16MB and the kernel image size by about 4MB. We then apply the streamlined kernel and the container rootfs storage described in the preceding section to the template technology, and solve the problem of kernel self-modification after the container is started from the template.

We found that the self-modification of kernel code segments only happens at startup. After startup, these code segments are no longer modified, and all RunD instances on the same physical machine generally use the same kernel. Their initialization process is the same, so their modified code segments are also the same. Therefore, we can generate a kernel file with the code segments already modified (a pre-patched kernel image) and use it in place of the original kernel file. This way, the kernel file can be shared as much as possible between different secure container instances. To support this mode, we also made a small number of kernel changes and fixed some problems that could cause a kernel panic when using the pre-patched kernel image.

The template technology reduces the memory usage of a single instance to achieve high-density deployment, and it is also well suited to high-concurrency scenarios: it takes a snapshot of a secure container that has already been started and uses it as a launch template for other secure containers, so multiple secure containers can be started quickly from the same template. In essence, it records the result of the steps that are identical in every secure container's startup and then skips those steps, starting each new secure container directly from the intermediate result.

In the previous section, we analyzed that the cgroup on the host has become one of the bottlenecks of containers in high-density and high-concurrency scenarios. The natural idea is to reduce the number of cgroups and related operations. Additional research reveals optimization opportunities in two areas:

Therefore, we use a lightweight cgroup to manage each RunD instance. As shown in Figure 9, when we mount cgroups, we aggregate all cgroup subsys (cpu, cpuacct, cpuset, blkio, memory, freezer) onto one lightweight cgroup. This helps RunD reduce redundant cgroup operations during container startup and significantly reduces the total number of cgroups and system calls.

(Figure 9: Lightweight Cgroup and Pooling Design)

At the same time, we maintain a cgroup resource pool and mark all initially created cgroups as idle. A mutex is used to protect the idle linked list. When a function instance is created, the cgroup is obtained from the idle linked list and marked busy. When the container starts, the thread is attached to the corresponding allocated cgroup. When a Function Compute instance is destroyed, only the corresponding instance process needs to be killed, and the cgroup does not need to be deleted. The cgroup is returned to the resource pool and marked as idle. It should be noted that the cgroup resource pool management involves synchronization and mutual exclusion issues. It is necessary to ensure that this critical section is small, or the effect of improving parallelism cannot be achieved.

The lightweight cgroup pool removes the cgroup creation and initialization operations and uses the cgroup rename mechanism to allocate cgroups to new instances, significantly reducing the critical section and improving parallelism. With this simple idea, the cgroup operation time is reduced significantly: the creation speed is increased by about 15 times, a performance improvement of nearly 94%.
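The pooling idea can be sketched with ordinary directories standing in for cgroups. This is a simulation under stated assumptions, not RunD's implementation: `CgroupPool` and the `idle-…` naming are hypothetical, the directories live in a temporary folder rather than on a real cgroup hierarchy, and attaching a process (on a real hierarchy, writing its PID to `cgroup.procs`) is left out.

```python
import os
import tempfile
import threading


class CgroupPool:
    """Pre-created 'cgroups' handed out by rename instead of mkdir/rmdir."""

    def __init__(self, root, size):
        self.root = root
        self._lock = threading.Lock()  # protects only the idle list
        self._idle = []
        for i in range(size):
            name = f"idle-{i}"
            os.mkdir(os.path.join(root, name))  # paid once, ahead of time
            self._idle.append(name)

    def acquire(self, instance_id):
        with self._lock:  # critical section is a single list pop
            name = self._idle.pop()  # raises IndexError if the pool is empty
        os.rename(os.path.join(self.root, name),
                  os.path.join(self.root, instance_id))
        return instance_id

    def release(self, instance_id):
        name = f"idle-{instance_id}"
        os.rename(os.path.join(self.root, instance_id),
                  os.path.join(self.root, name))
        with self._lock:
            self._idle.append(name)


with tempfile.TemporaryDirectory() as root:
    pool = CgroupPool(root, 2)
    pool.acquire("fn-a")
    busy_view = sorted(os.listdir(root))
    pool.release("fn-a")
    idle_view = sorted(os.listdir(root))
```

Keeping the rename outside the lock means the pool's own mutex covers only the list update, in line with the requirement that the critical section stay small.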

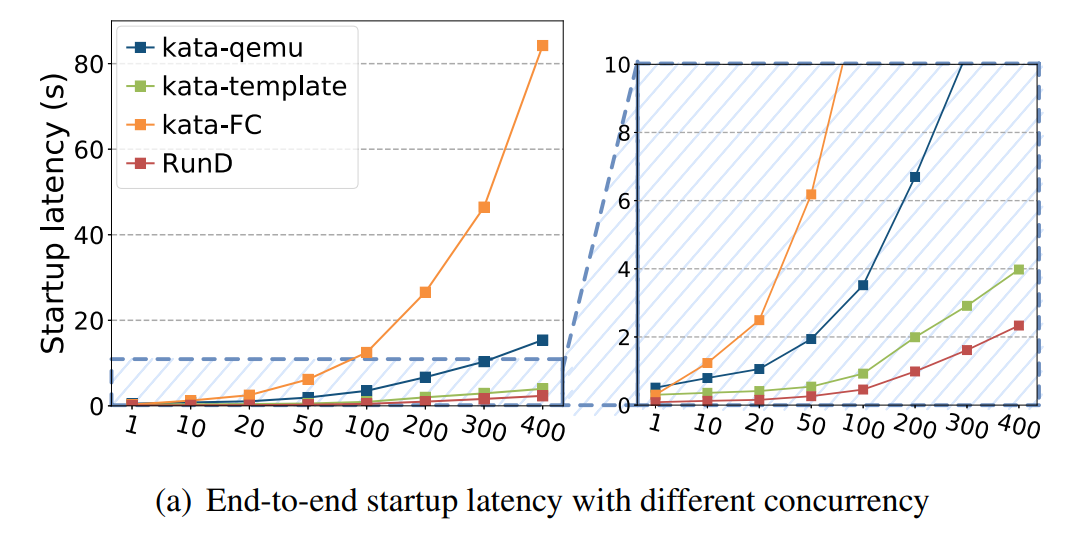

(Figure 10: End-to-end Latency, Distribution, and CPU Time in High-Concurrency Creation Scenarios)

Conclusion on Concurrency Performance: RunD can start a single sandbox within 88ms and start 200 sandboxes concurrently within one second. Compared with the existing technology, it also has minimal latency fluctuation and CPU overhead.

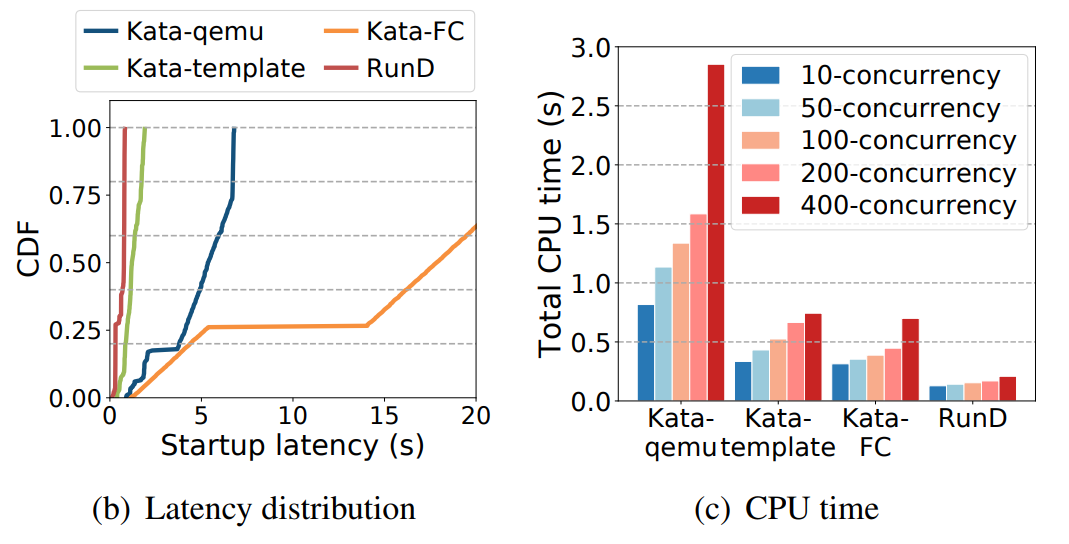

(Figure 11: Sandbox Memory Overhead of Different Runtimes (at 100 Densities))

(Figure 12: Average Memory Overhead at Different Densities (Missing Points Indicate the Maximum Deployment Density))

Conclusion on High-Density Deployment: RunD supports deploying more than 2,500 sandboxes with 128MB memory on the node with 384GB memory. The average memory usage of each sandbox is less than 20MB.

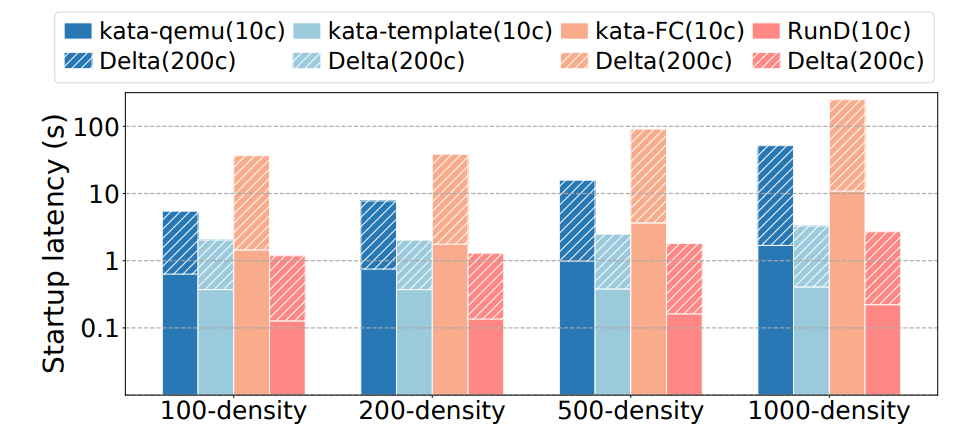

(Figure 13: The end-to-end startup latency at different deployment densities. 10c/200c means a 10/200-way concurrent startup, and Delta means the overhead increment compared with a 10-way concurrent startup.)

Conclusion on High Concurrency Capability under High-Density Deployment: RunD provides better performance and stability in supporting high-concurrency creation under high-density deployment.

According to the experimental evaluation, RunD can start in 88 milliseconds and launch over 200 secure containers per second on a single node with 104 cores and 384GB of memory, with over 2,500 function instances deployed at high density. RunD has been launched as a Serverless runtime of Alibaba Cloud. It provides services for more than 1 million functions and nearly 4 billion calls every day and actively promotes the evolution of Kata 3.0 architecture.
