By Huang Li, Head of Kuaishou Online Messaging System

Kuaishou's approach to the community version of RocketMQ is generally to build capabilities around it rather than make major internal changes, because extensive internal changes make later version upgrades difficult. When we do modify RocketMQ internally, we try our best to contribute the new features back to the community through pull requests.

Kuaishou upgrades RocketMQ regularly. During Spring Festival 2022, we boldly ran RocketMQ 4.9.3-SNAPSHOT, which had not yet been officially released, and it carried Kuaishou smoothly through the most important events of the year. This also confirmed the compatibility and stability of the community version of RocketMQ.

In the two years since RocketMQ was introduced at Kuaishou, usage has grown from zero to hundreds of billions of messages per day. Kuaishou is the earliest large-scale user of transactional messages in the RocketMQ community version. Currently, more than 10 billion transactional and scheduled messages are processed every day. We have also implemented automatic load balancing and disaster recovery across IDCs, as well as multi-level lanes (projects) that do not interfere with each other: multiple projects can be developed at the same time, and messages can fall back from a sub-project's lane to its parent's.

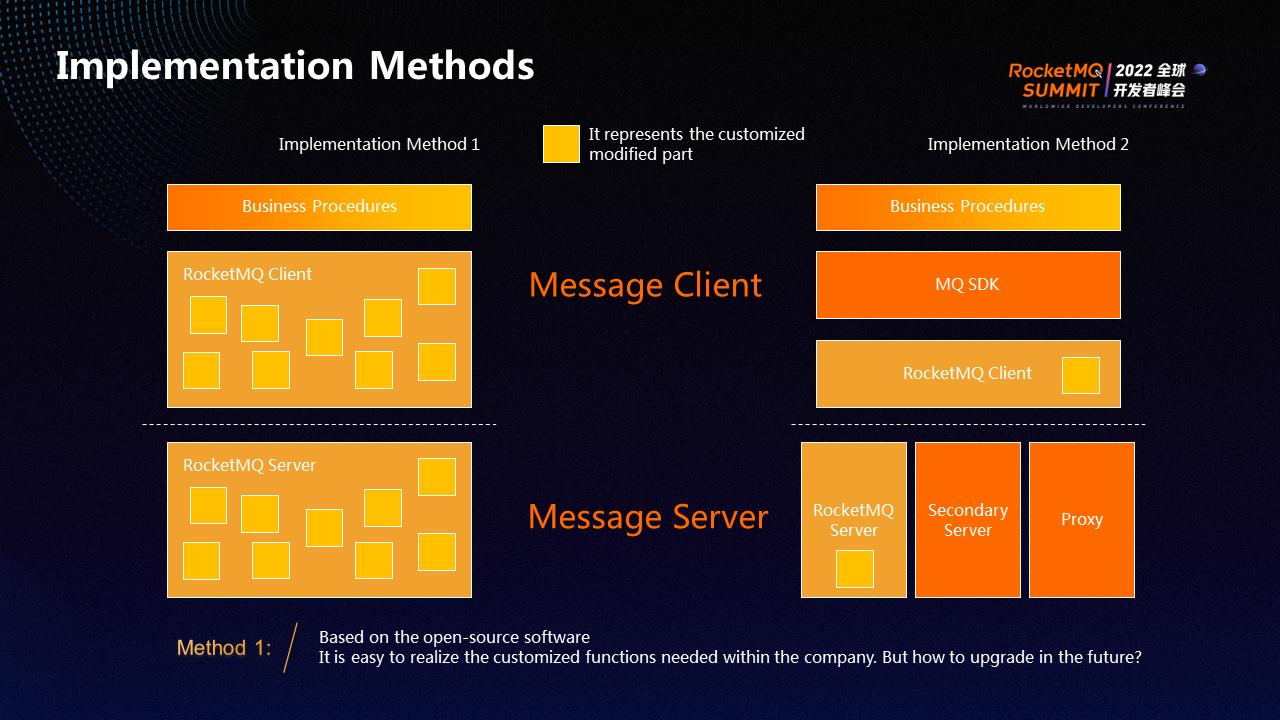

There are two ways to adopt RocketMQ:

Method 1: Modify the open-source software directly. Users can quickly and easily implement the features they need, but subsequent upgrades become inconvenient.

Method 2: Make only minor modifications to the RocketMQ client and server. If the server lacks a capability, a separate auxiliary server is developed or a proxy is used to fill the gap. On the client side, we wrap an MQ SDK layer around the RocketMQ client to shield users from the specific implementation. Kuaishou adopts RocketMQ using this method.

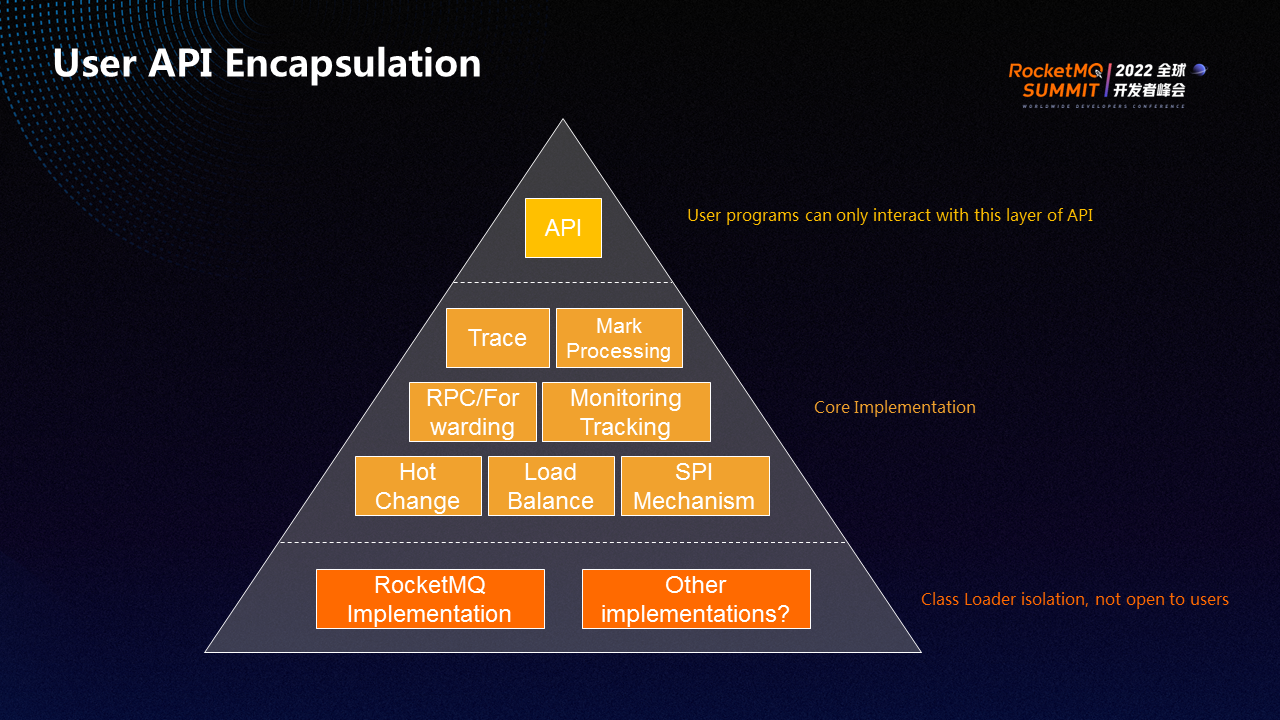

As shown in the figure above, the MQ SDK is divided into three layers. The top layer is the API, which users interact with. The middle layer is the core layer, which implements various common capabilities. The bottom layer interacts with the specific message queue. Currently, only RocketMQ is implemented, but other message middleware may be supported in the future.

The middle layer supports hot configuration changes. User configuration is not written in the code but specified on the platform. Once it is changed there, the SDK loads the new configuration directly and automatically reloads the user program without a restart.
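A minimal sketch of hot configuration reloading, assuming the platform pushes each new configuration to the SDK as a map of key/value pairs. `HotConfig`, `update`, and `onChange` are hypothetical names, not Kuaishou's SDK API. The configuration is swapped atomically so readers always see a complete snapshot, and listeners are notified so they can rebuild the affected producer or consumer in place instead of restarting the process.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.BiConsumer;

/** Hypothetical holder for platform-managed configuration that can change at runtime. */
final class HotConfig {
    private final AtomicReference<Map<String, String>> current;
    private final List<BiConsumer<Map<String, String>, Map<String, String>>> listeners =
            new CopyOnWriteArrayList<>();

    HotConfig(Map<String, String> initial) {
        this.current = new AtomicReference<>(Map.copyOf(initial));
    }

    /** Readers always see a complete, immutable snapshot. */
    Map<String, String> get() {
        return current.get();
    }

    /** Registers a callback invoked with (oldConfig, newConfig) after each real change. */
    void onChange(BiConsumer<Map<String, String>, Map<String, String>> listener) {
        listeners.add(listener);
    }

    /** Called when the platform pushes a new configuration; no process restart is needed. */
    void update(Map<String, String> next) {
        Map<String, String> snapshot = Map.copyOf(next);
        Map<String, String> previous = current.getAndSet(snapshot);
        if (!previous.equals(snapshot)) {          // skip no-op pushes
            for (var listener : listeners) {
                listener.accept(previous, snapshot);
            }
        }
    }
}
```

A listener might, for example, recreate only the consumer whose thread count changed, leaving the rest of the application untouched.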

Users are allocated a Logic topic. Business code does not need to know which cluster it is located in, the current environment, or data markers (such as stress testing and lanes). It only needs to call the simple API we provide; the complex processing is encapsulated in the core layer and hidden from business users.
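To illustrate the idea, here is a sketch of how a core layer might resolve a Logic topic into physical destinations. The routing table keyed by Logic topic and environment, the cluster names, and the `lane` and `stress` property keys are all assumptions made for this example; the article does not describe Kuaishou's actual mapping. Markers are carried as message properties so that business code never has to set them.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** A physical destination: which cluster, and which real topic inside it. */
record PhysicalTopic(String cluster, String topic) {}

/** Hypothetical core-layer resolver hiding clusters, environments and markers from business code. */
final class LogicTopicResolver {
    private final Map<String, List<PhysicalTopic>> routes = new HashMap<>();

    /** Registers the physical topics backing a Logic topic in a given environment. */
    void register(String logicTopic, String env, List<PhysicalTopic> targets) {
        routes.put(logicTopic + "@" + env, List.copyOf(targets));
    }

    /** Business code supplies only the Logic topic; the environment comes from the runtime. */
    List<PhysicalTopic> resolve(String logicTopic, String env) {
        List<PhysicalTopic> targets = routes.get(logicTopic + "@" + env);
        if (targets == null) {
            throw new IllegalArgumentException("no route for " + logicTopic + " in " + env);
        }
        return targets;
    }

    /** Markers such as the lane or a stress-test flag travel as properties, not topic names. */
    static Map<String, String> markers(String lane, boolean stressTest) {
        Map<String, String> props = new HashMap<>();
        if (lane != null) {
            props.put("lane", lane);
        }
        if (stressTest) {
            props.put("stress", "true");
        }
        return props;
    }
}
```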

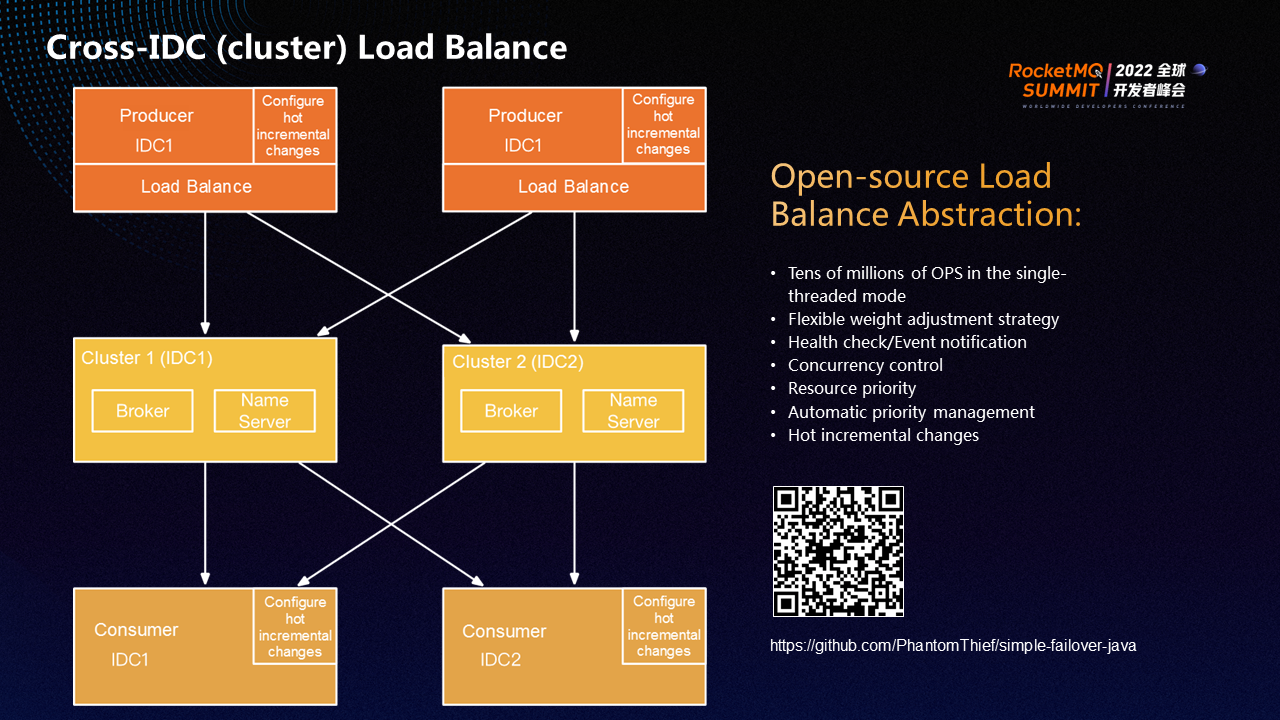

The preceding figure shows how cross-IDC load balancing is implemented.

All Logic topics are mapped to the two data centers in the middle of the preceding figure, with one cluster deployed in each data center. A RocketMQ cluster can contain multiple brokers. Producers and consumers can detect broker faults and fail over: through NameServer, they learn which broker is faulty and avoid connecting to it. On top of the clusters, we add a load balancing layer implemented on the client side for finer control.

Each Logic topic is assigned to two clusters, and the producer connects to both clusters when producing. The two clusters are located in different IDCs; the latency between two IDCs in the same region is about 1-2 ms. Consumers are likewise connected to both clusters. If a data center or cluster fails, traffic is immediately and automatically transferred to the other data center.

We abstracted the automatic load balancing (automatic failover) on the production side into an open-source project. The Failover component is independent of RocketMQ. When the caller makes an RPC or message production call, it checks the health status of the callee. If the callee is unhealthy or responds slowly, its weight is lowered.
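The weight-lowering idea can be sketched as follows. This is not the API of Kuaishou's open-source Failover component: `WeightedFailover`, the halving and recovery factors, and the slow-call threshold are assumptions chosen for illustration. Each callee (for example, each IDC's cluster) starts at full weight; failures and slow responses cut its weight, healthy fast calls restore it gradually, and callees are picked with probability proportional to their current weight.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Random;

/** Hypothetical health-weighted selector in the spirit of the Failover component. */
final class WeightedFailover {
    private static final double MAX_WEIGHT = 1.0;
    private static final double MIN_WEIGHT = 0.01;   // never zero, so a callee can recover
    private final Map<String, Double> weights = new LinkedHashMap<>();
    private final long slowThresholdMillis;
    private final Random random;

    WeightedFailover(Iterable<String> callees, long slowThresholdMillis, Random random) {
        for (String callee : callees) {
            weights.put(callee, MAX_WEIGHT);
        }
        this.slowThresholdMillis = slowThresholdMillis;
        this.random = random;
    }

    /** Reports the outcome of one call so the callee's weight can be adjusted. */
    synchronized void report(String callee, boolean success, long latencyMillis) {
        double w = weights.get(callee);
        if (!success || latencyMillis > slowThresholdMillis) {
            w = Math.max(MIN_WEIGHT, w * 0.5);       // unhealthy or slow: halve the weight
        } else {
            w = Math.min(MAX_WEIGHT, w + 0.1);       // healthy and fast: recover slowly
        }
        weights.put(callee, w);
    }

    synchronized double weight(String callee) {
        return weights.get(callee);
    }

    /** Picks a callee with probability proportional to its current weight. */
    synchronized String choose() {
        double total = 0;
        for (double w : weights.values()) {
            total += w;
        }
        double r = random.nextDouble() * total;
        String last = null;
        for (Map.Entry<String, Double> e : weights.entrySet()) {
            last = e.getKey();
            r -= e.getValue();
            if (r < 0) {
                return last;
            }
        }
        return last; // guards against floating-point rounding at the upper edge
    }
}
```

Keeping a small minimum weight instead of removing a failed callee lets a recovered data center receive probe traffic and regain its share without manual intervention.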

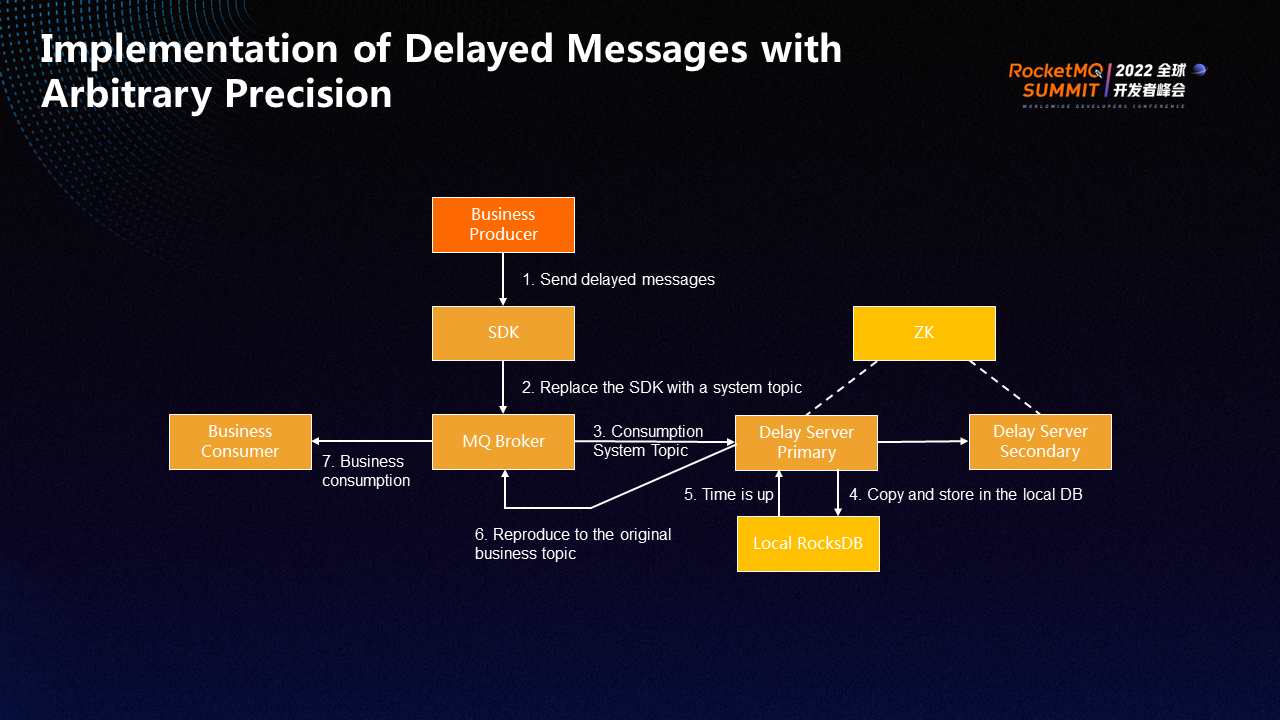

RocketMQ supports simple delayed messages, but only at a few fixed delay levels. In practice, however, users prefer to specify an arbitrary delay when sending a message. Therefore, we built a Delay Server that delays message delivery with arbitrary precision.

The Delay Server stores the messages that users want delayed and, when the specified time arrives, sends them back to the original business topic.
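A minimal in-memory sketch of a delay server's core loop, built on the JDK's `java.util.concurrent.DelayQueue`. The `DelayedMessage`, `Delivery`, and `DelayServer` types are hypothetical, and a production Delay Server must persist messages so they survive restarts; the sketch only shows how arbitrary per-message delays map onto a time-ordered queue that releases each message to its original topic when it becomes due.

```java
import java.util.concurrent.DelayQueue;
import java.util.concurrent.Delayed;
import java.util.concurrent.TimeUnit;

/** A message held until deliverAtMillis (wall-clock epoch milliseconds). */
final class DelayedMessage implements Delayed {
    final String originalTopic;
    final String body;
    final long deliverAtMillis;

    DelayedMessage(String originalTopic, String body, long deliverAtMillis) {
        this.originalTopic = originalTopic;
        this.body = body;
        this.deliverAtMillis = deliverAtMillis;
    }

    @Override
    public long getDelay(TimeUnit unit) {
        return unit.convert(deliverAtMillis - System.currentTimeMillis(), TimeUnit.MILLISECONDS);
    }

    @Override
    public int compareTo(Delayed other) {
        if (other instanceof DelayedMessage m) {
            return Long.compare(deliverAtMillis, m.deliverAtMillis);
        }
        return Long.compare(getDelay(TimeUnit.MILLISECONDS), other.getDelay(TimeUnit.MILLISECONDS));
    }
}

/** Hypothetical hook that re-sends a due message to its original business topic. */
interface Delivery {
    void send(String topic, String body);
}

final class DelayServer {
    private final DelayQueue<DelayedMessage> pending = new DelayQueue<>();

    /** Accepts a message with an arbitrary delay instead of a fixed delay level. */
    void schedule(String topic, String body, long delayMillis) {
        pending.put(new DelayedMessage(topic, body, System.currentTimeMillis() + delayMillis));
    }

    /** Blocks until the earliest message is due, then delivers it to its original topic. */
    void deliverNext(Delivery delivery) throws InterruptedException {
        DelayedMessage m = pending.take();
        delivery.send(m.originalTopic, m.body);
    }
}
```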

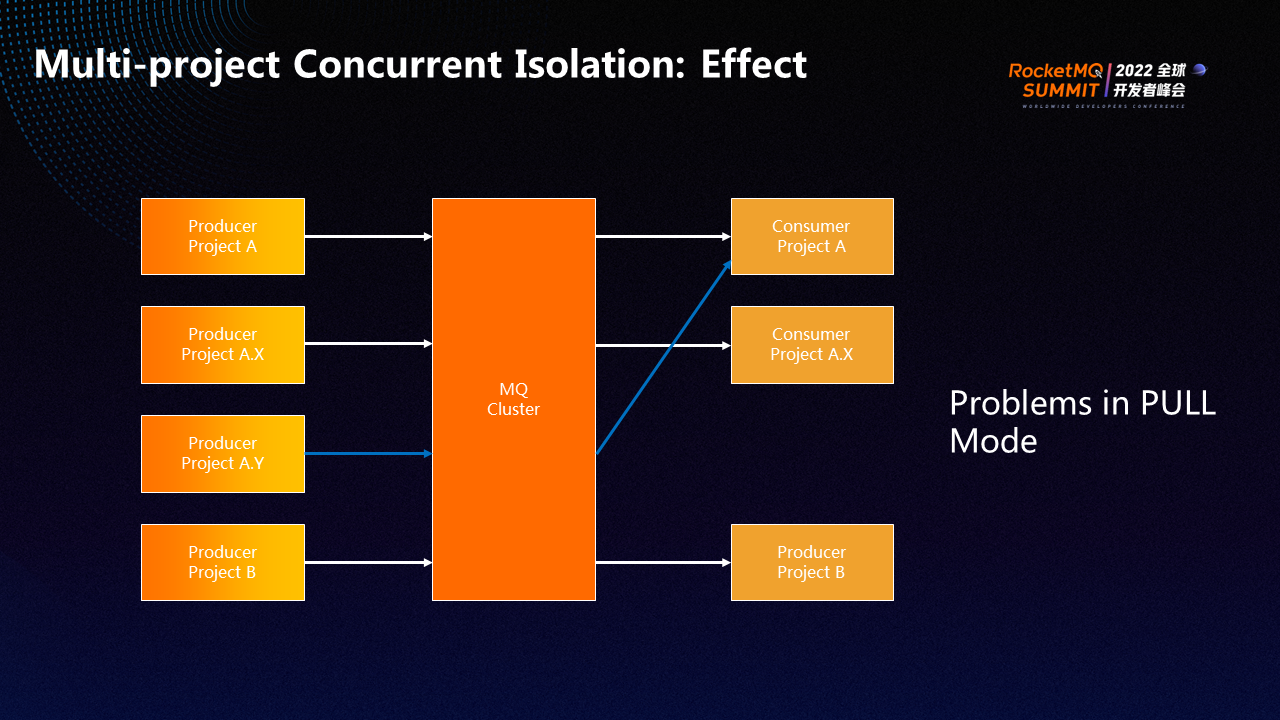

Because RocketMQ consumption is based on the PULL mode (the PUSH consumer of RocketMQ is also built on PULL), it is difficult to implement dynamic multi-lane isolation directly. Many companies therefore deliver messages to different topics for different lanes. This works, but it is too intrusive, and multi-level fallback is hard to achieve.

At Kuaishou, we keep all lanes in the same topic and have implemented multi-level lane fallback. For example, if Project A and Project B are being developed at the same time, the messages produced by Project A are consumed by Project A's consumers. If Project A has sub-projects A.X and A.Y, the messages produced by A.X are consumed by A.X's consumers; if A.Y has no consumers, the messages produced by A.Y fall back to A's consumers.
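The fallback rule can be expressed as a walk up the lane hierarchy. In this sketch, lane names are assumed to be dot-separated paths such as `A.Y`, and the empty string stands for the baseline (no-lane) consumers; both conventions and the `LaneFallback` name are assumptions for illustration. A message is delivered to the most specific lane that currently has consumers, so with live lanes `A`, `A.X`, and `B`, a message tagged `A.Y` falls back to `A`, as in the example above.

```java
import java.util.Set;

/** Hypothetical resolver for multi-level lane fallback, e.g. A.Y -> A -> baseline. */
final class LaneFallback {
    /** The lane name used for consumers with no lane marker. */
    static final String BASELINE = "";

    /**
     * Returns the most specific lane, starting from the message's own lane,
     * that has at least one live consumer; otherwise returns the baseline.
     */
    static String resolve(String messageLane, Set<String> lanesWithConsumers) {
        String lane = messageLane == null ? BASELINE : messageLane;
        while (!lane.isEmpty()) {
            if (lanesWithConsumers.contains(lane)) {
                return lane;
            }
            int dot = lane.lastIndexOf('.');
            lane = dot < 0 ? BASELINE : lane.substring(0, dot); // drop the last level
        }
        return BASELINE;
    }
}
```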

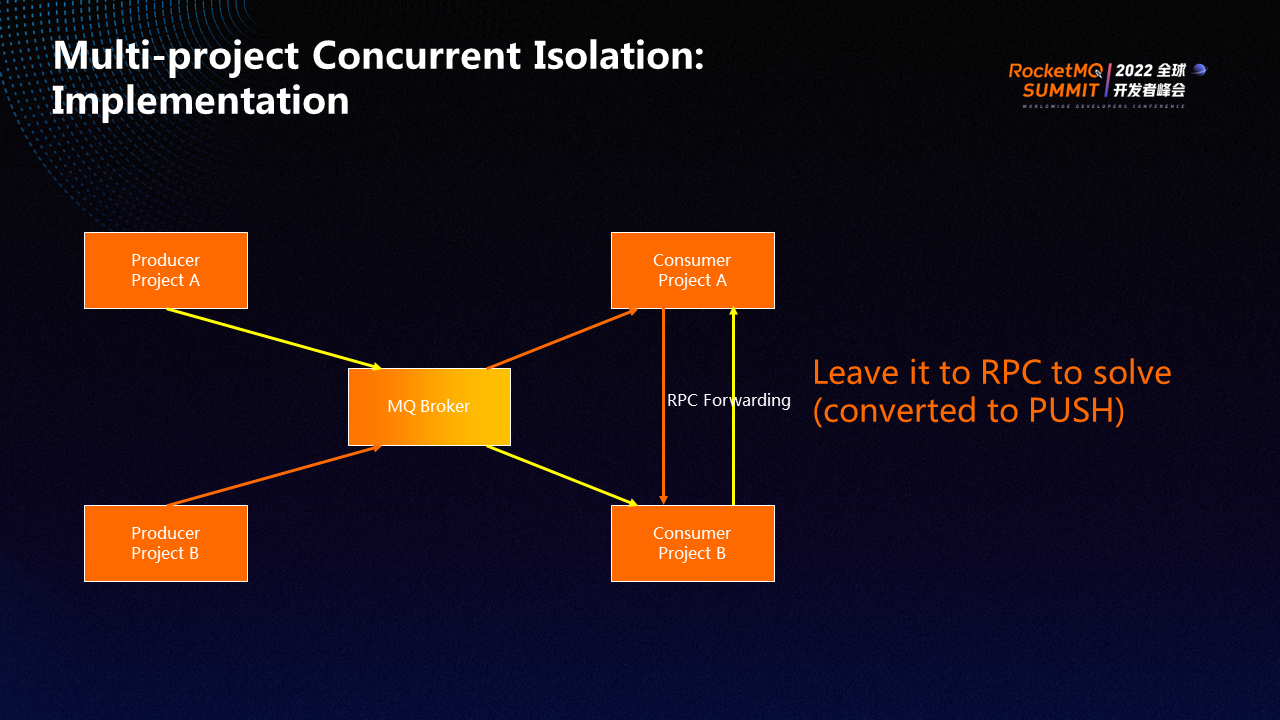

The lane isolation of Kuaishou is mainly achieved through RPC forwarding.

As shown in the preceding figure, the yellow line represents the data flow of Project A, and the orange line represents the data flow of Project B. Before sending the data to the business user, the SDK checks whether the lane markers of the data match the consumer. If not, the SDK forwards the data to the matching consumer through RPC.

In this way, we convert PULL into PUSH and make the routing decision on the caller side, so all projects can share the same topic while remaining isolated.
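The per-message check the SDK performs before handing data to business code might look like this. `Forwarder`, `Handler`, and `LaneDispatcher` are hypothetical names, the RPC transport is left abstract, and the target lane is taken as an input (for example, after applying the multi-level fallback described earlier).

```java
import java.util.Map;

/** Hypothetical RPC client that pushes a message to a consumer instance in another lane. */
interface Forwarder {
    void forward(String targetLane, Map<String, String> properties, byte[] body);
}

/** Hypothetical business callback registered through the SDK. */
interface Handler {
    void handle(Map<String, String> properties, byte[] body);
}

final class LaneDispatcher {
    private final String localLane;
    private final Forwarder forwarder;
    private final Handler handler;

    LaneDispatcher(String localLane, Forwarder forwarder, Handler handler) {
        this.localLane = localLane;
        this.forwarder = forwarder;
        this.handler = handler;
    }

    /**
     * Called for every message pulled from the shared topic. targetLane is the lane
     * that should consume the message after any fallback has been applied.
     */
    void dispatch(String targetLane, Map<String, String> properties, byte[] body) {
        if (localLane.equals(targetLane)) {
            handler.handle(properties, body);                 // marker matches: consume locally
        } else {
            forwarder.forward(targetLane, properties, body);  // mismatch: push to that lane via RPC
        }
    }
}
```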

RocketMQ performs client-side rebalancing at queue granularity. If there are more consumers than queues, some consumers sit idle. Queues may also be distributed unevenly, so some consumers carry a heavy load while others carry a light one.
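The idle-consumer effect follows directly from dividing queues among consumers. The sketch below is a simplified version of the idea behind RocketMQ's default averaging strategy (`AllocateMessageQueueAveragely`), with queues represented as integer IDs and consumers as strings; it is not the library code.

```java
import java.util.ArrayList;
import java.util.List;

/** Simplified average allocation: each consumer gets a contiguous block of queue IDs. */
final class AverageAllocation {
    static List<Integer> allocate(int queueCount, List<String> consumers, String me) {
        int index = consumers.indexOf(me);
        int n = consumers.size();
        List<Integer> mine = new ArrayList<>();
        if (index < 0 || index >= queueCount) {
            return mine;                   // more consumers than queues: this one stays idle
        }
        int base = queueCount / n;
        int extra = queueCount % n;        // the first `extra` consumers get one more queue
        int size = base + (index < extra ? 1 : 0);
        int start = index * base + Math.min(index, extra);
        for (int q = start; q < start + size; q++) {
            mine.add(q);
        }
        return mine;
    }
}
```

With 4 queues and 6 consumers, two consumers receive nothing; with 8 queues and 3 consumers, the split is 3/3/2, so one consumer does a third less work than the others.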

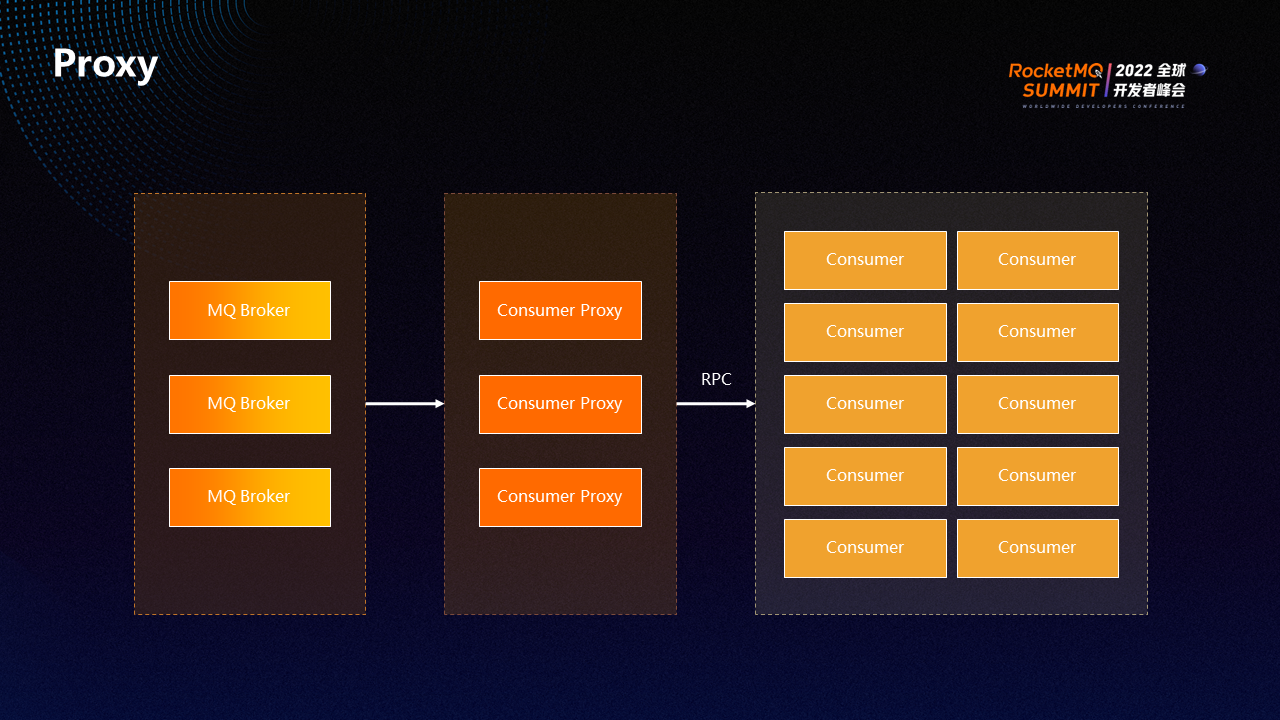

We implement consumer proxies through RPC. The proxy first consumes data from the RocketMQ broker and then pushes it to consumers through RPC.

The consumer proxy's RPC uses the same mechanism as the RPC forwarding described in the preceding section. As a result, a topic does not need many queues yet can still have many consumers. The consumer proxy is optional: you only need to select the consumption mode on the platform, without modifying code or restarting.

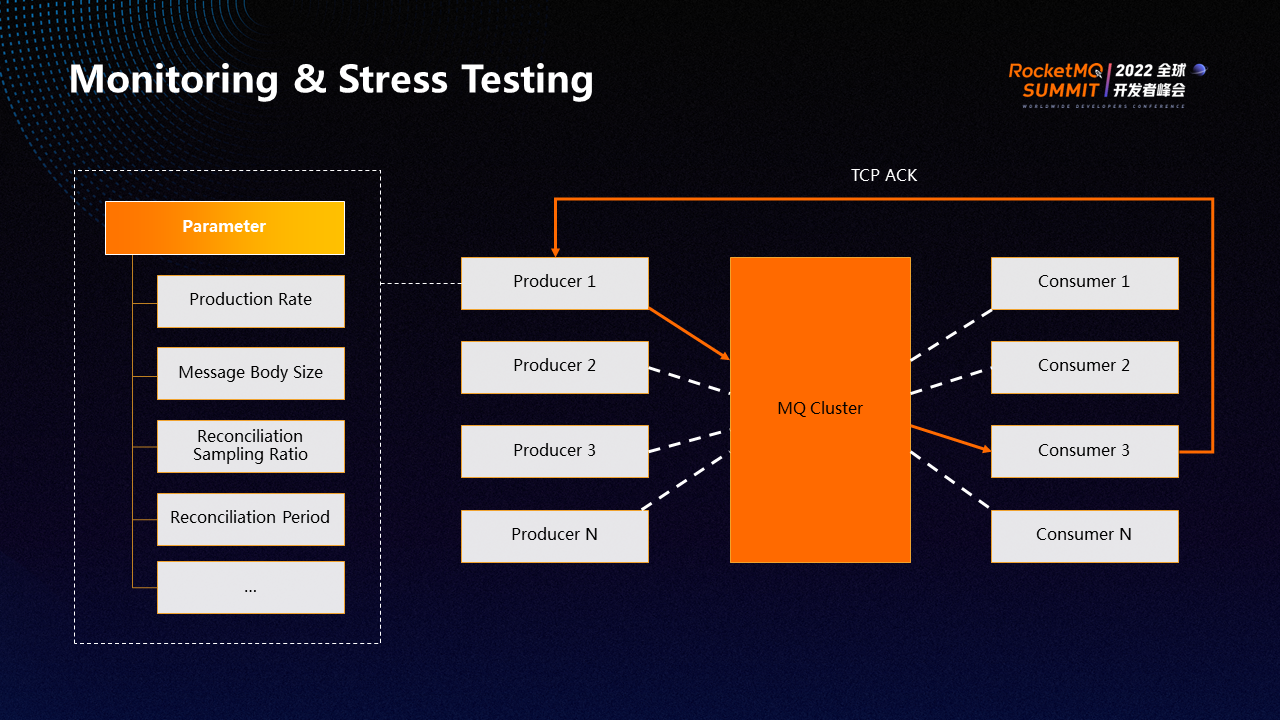

In addition, we developed a dial test (probing) program that runs a simulated producer and consumer. The producer sends messages, including normal, scheduled, and transactional messages, to every broker of all online clusters. When the consumer consumes a message, it extracts the producer's IP address from the message body and sends an ACK back to the producer over TCP, so the producer knows whether the message was lost or duplicated and what the delay from production to consumption was.
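On the producer side, the ACKs can be reconciled using per-message sequence numbers. This sketch assumes each probe message carries a sequence number and that the producer records the send time; `ProbeTracker` and that layout are hypothetical, and the TCP transport back to the producer is omitted. Missing ACKs indicate loss, repeated ACKs indicate duplication, and the first ACK yields an end-to-end latency sample.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical producer-side bookkeeping for dial-test ACKs. */
final class ProbeTracker {
    private final Map<Long, Long> sentAtMillis = new HashMap<>();   // seq -> send time
    private final Map<Long, Integer> ackCount = new HashMap<>();    // seq -> ACKs seen
    private final List<Long> latenciesMillis = new ArrayList<>();

    synchronized void onSend(long seq, long nowMillis) {
        sentAtMillis.put(seq, nowMillis);
    }

    /** Records an ACK; only the first ACK for a sequence number yields a latency sample. */
    synchronized void onAck(long seq, long nowMillis) {
        int seen = ackCount.merge(seq, 1, Integer::sum);
        Long sent = sentAtMillis.get(seq);
        if (seen == 1 && sent != null) {
            latenciesMillis.add(nowMillis - sent);
        }
    }

    /** Messages sent before the cutoff that never received an ACK are treated as lost. */
    synchronized List<Long> lost(long cutoffMillis) {
        List<Long> result = new ArrayList<>();
        for (Map.Entry<Long, Long> e : sentAtMillis.entrySet()) {
            if (e.getValue() < cutoffMillis && !ackCount.containsKey(e.getKey())) {
                result.add(e.getKey());
            }
        }
        return result;
    }

    /** Sequence numbers acknowledged more than once indicate duplicate consumption. */
    synchronized List<Long> duplicated() {
        List<Long> result = new ArrayList<>();
        ackCount.forEach((seq, n) -> {
            if (n > 1) {
                result.add(seq);
            }
        });
        return result;
    }

    synchronized List<Long> latencies() {
        return List.copyOf(latenciesMillis);
    }
}
```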

Producers and consumers can also be configured in various ways, such as the message body size, production rate, reconciliation sampling ratio, and reconciliation period. For example, increasing the rate in the configuration turns the probe into a stress test. Producers and consumers track the results, which can be viewed on Grafana, where alerting is also supported.

After our performance optimizations of RocketMQ, the production TPS of small 300-byte messages increased by 54%, and CPU usage dropped by 11%. Consumption performance with 600 queues increased by 200%, single-thread production TPS of large messages across IDCs increased by 693%, and the consumption throughput of 100 KB messages across IDCs increased by 250%.

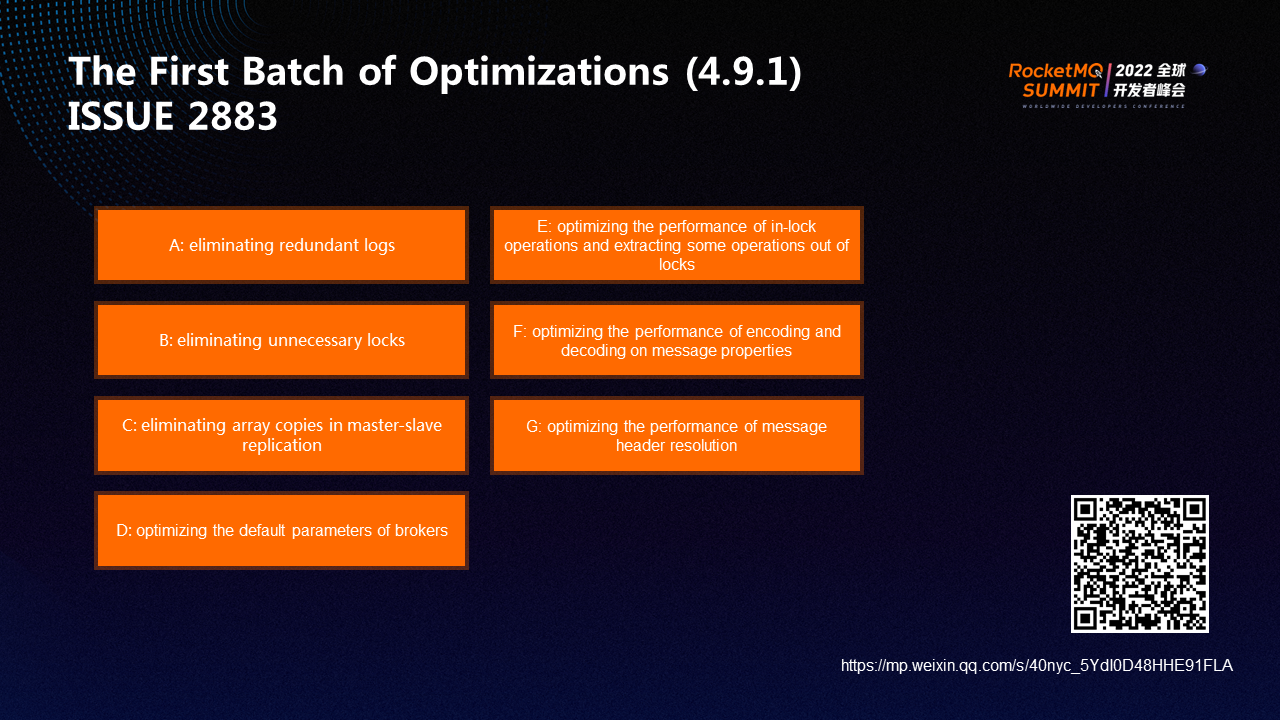

The optimizations were delivered in two batches:

The first batch targeted RocketMQ 4.9.1. It included removing redundant logs, eliminating unnecessary locks, eliminating array copies in master-slave replication, tuning the default broker parameters, speeding up operations performed inside locks and moving some operations out of locks, and speeding up the encoding and decoding of message properties and the parsing of message headers.
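As an example of the property-encoding work: RocketMQ serializes message properties into one string in which each name is followed by character 1 and each value by character 2 (`MessageDecoder.NAME_VALUE_SEPARATOR` and `MessageDecoder.PROPERTY_SEPARATOR`). The sketch below is not the actual patch; `PropertiesCodec` is a hypothetical class showing the general technique of pre-sizing the builder so it never grows and copies, and parsing with `indexOf` scans instead of regex-based splitting, which avoids intermediate arrays.

```java
import java.util.HashMap;
import java.util.Map;

/** Encodes/decodes properties as name<1>value<2> pairs, the layout RocketMQ uses. */
final class PropertiesCodec {
    static final char NAME_VALUE_SEPARATOR = (char) 1;
    static final char PROPERTY_SEPARATOR = (char) 2;

    static String encode(Map<String, String> props) {
        // Pre-compute the exact length so the builder never has to grow and copy.
        int len = 0;
        for (Map.Entry<String, String> e : props.entrySet()) {
            len += e.getKey().length() + e.getValue().length() + 2;
        }
        StringBuilder sb = new StringBuilder(len);
        for (Map.Entry<String, String> e : props.entrySet()) {
            sb.append(e.getKey()).append(NAME_VALUE_SEPARATOR)
              .append(e.getValue()).append(PROPERTY_SEPARATOR);
        }
        return sb.toString();
    }

    static Map<String, String> decode(String s) {
        Map<String, String> props = new HashMap<>();
        int pos = 0;
        while (pos < s.length()) {
            int nameEnd = s.indexOf(NAME_VALUE_SEPARATOR, pos);
            if (nameEnd < 0) {
                break;                                   // malformed tail: ignore it
            }
            int valueEnd = s.indexOf(PROPERTY_SEPARATOR, nameEnd + 1);
            if (valueEnd < 0) {
                valueEnd = s.length();                   // tolerate a missing final separator
            }
            props.put(s.substring(pos, nameEnd), s.substring(nameEnd + 1, valueEnd));
            pos = valueEnd + 1;
        }
        return props;
    }
}
```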

In addition, some default parameters in RocketMQ 4.9.0 were poorly chosen, so we tuned them in version 4.9.1, which significantly improved performance.

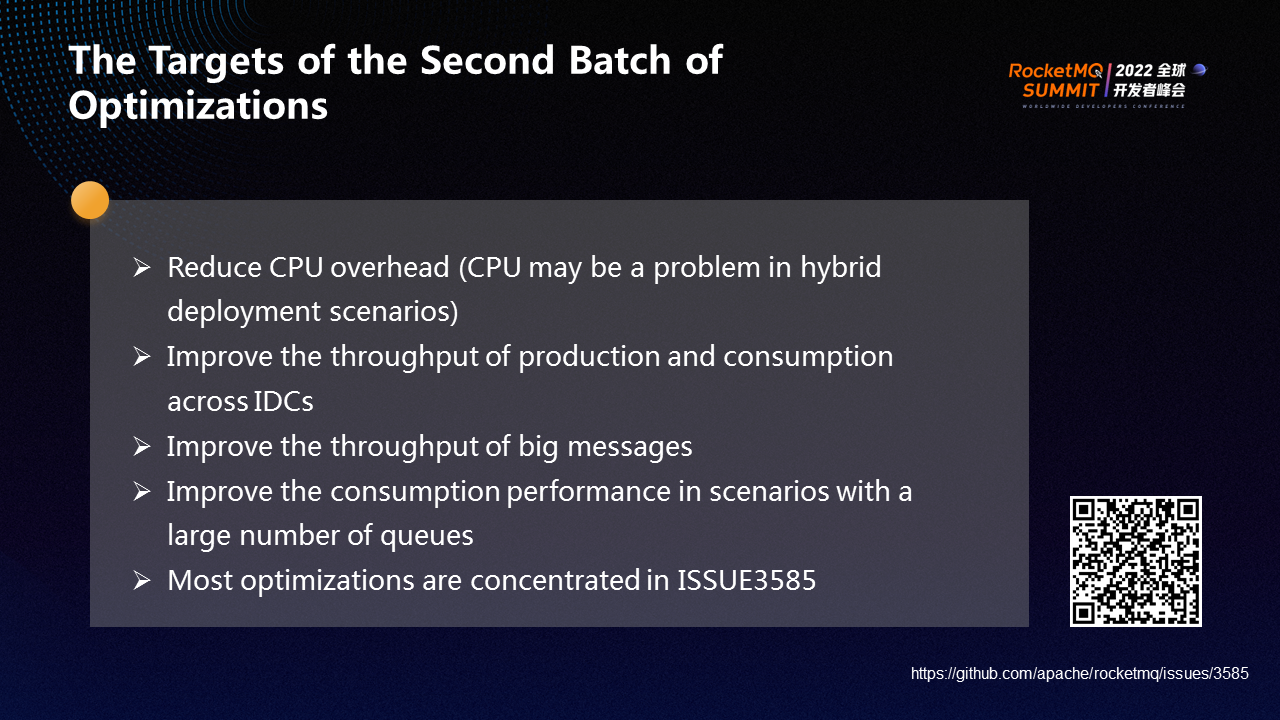

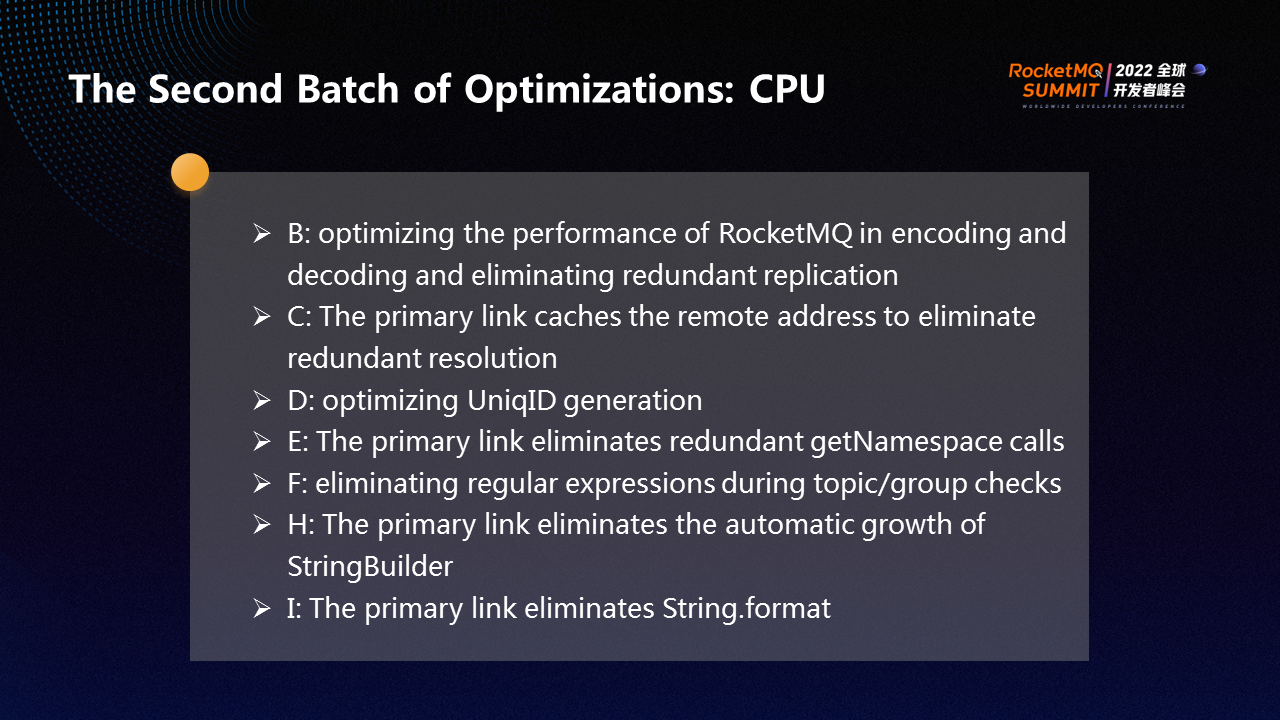

The preceding figure shows the targets of the second batch of optimizations, most of which are tracked in the community issue ISSUE3585.

The figure above shows the details of the second batch of CPU-related optimizations; the letter labels correspond to the items in ISSUE3585. RocketMQ previously used Fastjson for serialization, and its custom protocol performed only slightly better than Fastjson. We studied and optimized this path in depth, significantly improving RocketMQ's encoding and decoding performance.

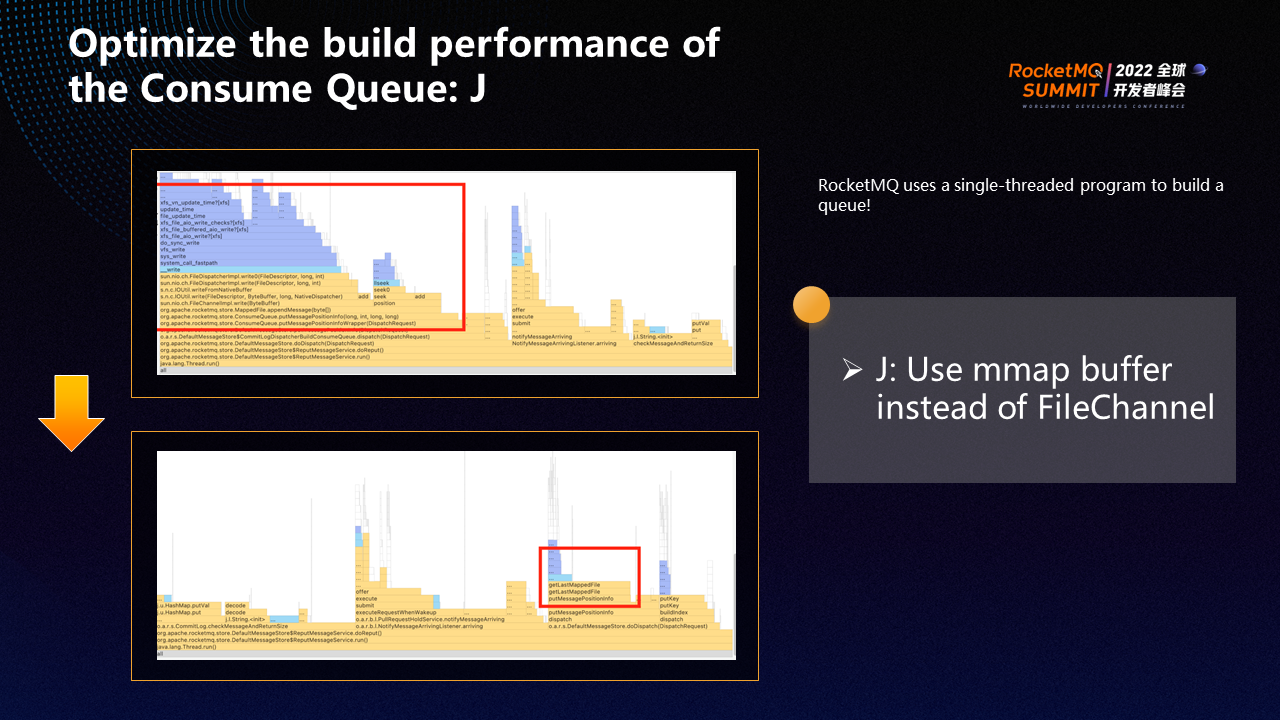

RocketMQ builds its consume queue in a single thread, so the efficiency of that thread's writes matters. We therefore write through an mmap buffer instead of FileChannel, which significantly improves performance.
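The difference between the two write paths can be illustrated with the standard JDK NIO APIs. This is a simplified sketch, not RocketMQ's own file code, and `IndexWriters` is a hypothetical name. The `FileChannel.write` path makes a system call for every entry, whereas the mmap path maps the region once with `FileChannel.map` and then writes each entry with plain memory stores through the `MappedByteBuffer`. The 20-byte entry mirrors RocketMQ's consume-queue unit (commit log offset, message size, tag hash code); the values written are dummy data.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

/** Writes fixed-size 20-byte entries: 8-byte offset, 4-byte size, 8-byte tag hash. */
final class IndexWriters {
    static final int UNIT = 20;

    /** One write() system call per entry. */
    static void writeWithChannel(Path file, int entries) throws IOException {
        try (FileChannel ch = FileChannel.open(file, StandardOpenOption.CREATE,
                StandardOpenOption.WRITE, StandardOpenOption.TRUNCATE_EXISTING)) {
            ByteBuffer buf = ByteBuffer.allocate(UNIT);
            for (int i = 0; i < entries; i++) {
                buf.clear();
                buf.putLong((long) i * 100).putInt(100).putLong(i);
                buf.flip();
                while (buf.hasRemaining()) {
                    ch.write(buf);
                }
            }
        }
    }

    /** Map the region once; each entry is then a plain memory store into the page cache. */
    static void writeWithMmap(Path file, int entries) throws IOException {
        try (FileChannel ch = FileChannel.open(file, StandardOpenOption.CREATE,
                StandardOpenOption.READ, StandardOpenOption.WRITE,
                StandardOpenOption.TRUNCATE_EXISTING)) {
            // Mapping past the current end of file grows the file to the mapped size.
            MappedByteBuffer map = ch.map(FileChannel.MapMode.READ_WRITE, 0, (long) UNIT * entries);
            for (int i = 0; i < entries; i++) {
                map.putLong((long) i * 100).putInt(100).putLong(i);
            }
            map.force(); // flush dirty pages to disk
        }
    }
}
```

Both methods produce byte-identical files; the expected saving comes from avoiding one system call per entry on the single thread that builds the queue.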

The red box above compares a specific method before and after the optimization.

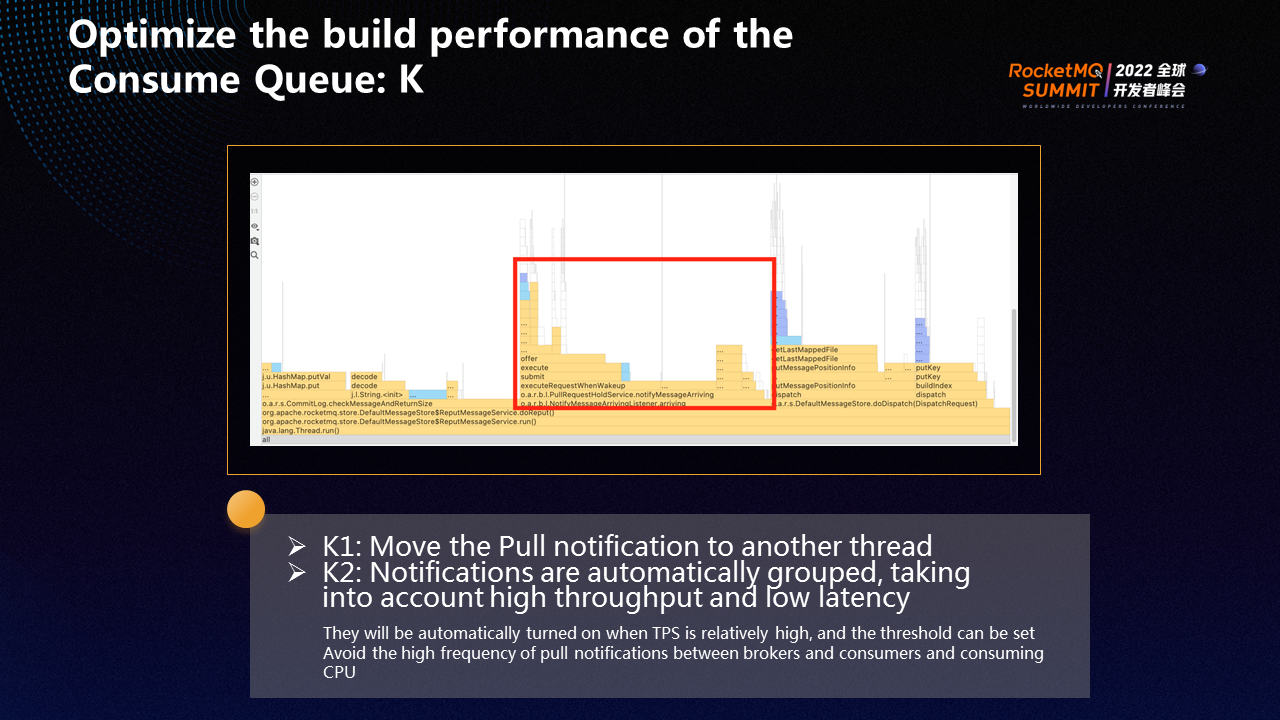

In addition, we moved Pull notifications to a separate thread so that they no longer consume the original thread's resources. Notifications are also grouped automatically to balance high throughput and low latency: grouping is turned on only when TPS is high, and a configurable threshold prevents Pull notifications from bouncing between brokers and consumers too frequently and wasting CPU.
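One way to get "group only when TPS is high" without flapping between modes is hysteresis. The sketch below assumes two thresholds (enable grouping above `highTps`, disable it below `lowTps`) and collapses repeated notifications for the same topic until the next flush; `NotifyBatcher`, its methods, and the threshold values in the usage are hypothetical, not broker configuration keys.

```java
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

/**
 * Hypothetical switch deciding whether pull notifications are grouped.
 * Two thresholds (hysteresis) keep the mode from flapping when TPS hovers near one value.
 */
final class NotifyBatcher {
    private final long highTps;
    private final long lowTps;
    private final Set<String> pendingTopics = new LinkedHashSet<>();
    private boolean batching;

    NotifyBatcher(long highTps, long lowTps) {
        if (lowTps >= highTps) {
            throw new IllegalArgumentException("lowTps must be below highTps");
        }
        this.highTps = highTps;
        this.lowTps = lowTps;
    }

    /** Updates the mode from the latest measured TPS. */
    void observeTps(long tps) {
        if (!batching && tps > highTps) {
            batching = true;
        } else if (batching && tps < lowTps) {
            batching = false;
        }
        // Between the two thresholds the current mode is kept.
    }

    boolean isBatching() {
        return batching;
    }

    /**
     * Returns the notifications to send now: immediately at low traffic (latency first),
     * or nothing until flush() while grouping is on (throughput first).
     */
    List<String> onNewMessage(String topic) {
        if (!batching) {
            return List.of(topic);
        }
        pendingTopics.add(topic);   // repeated topics collapse into one pending notification
        return List.of();
    }

    /** Called periodically to emit one grouped notification per pending topic. */
    List<String> flush() {
        List<String> out = List.copyOf(pendingTopics);
        pendingTopics.clear();
        return out;
    }
}
```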

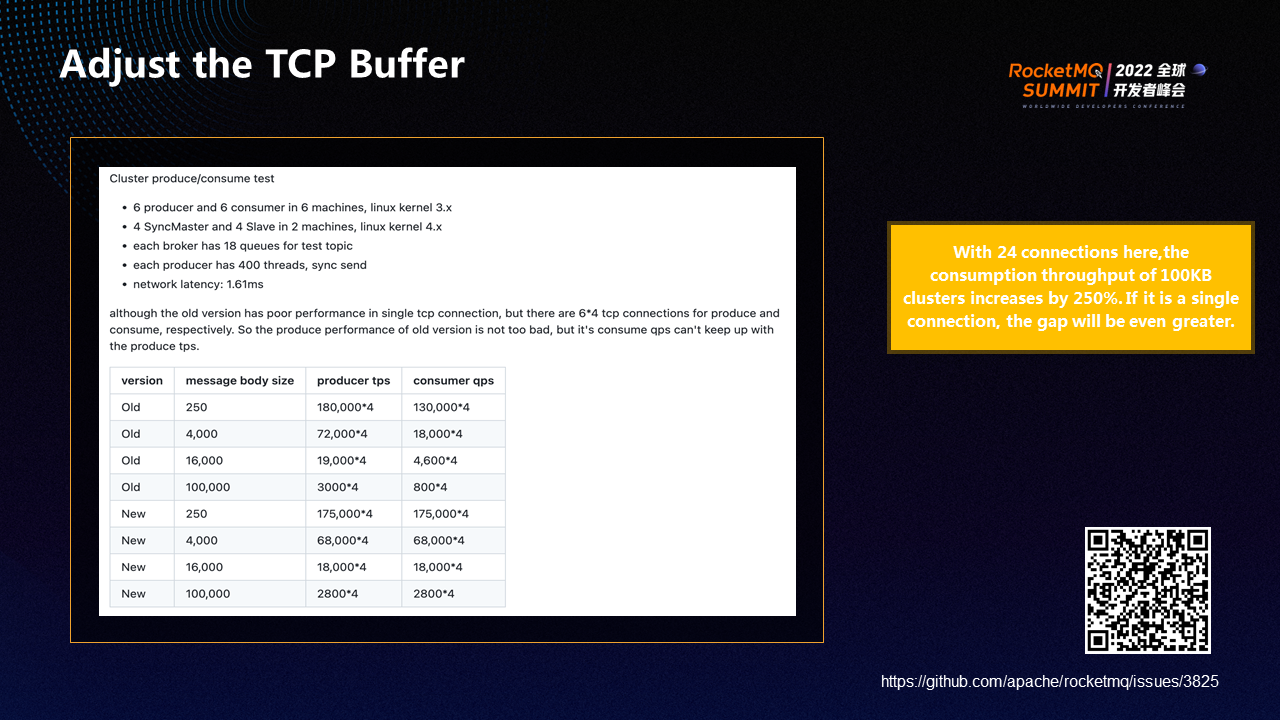

Poor performance with large messages had long been a problem. Our analysis showed that the TCP parameter settings of RocketMQ remoting needed tuning. The buffer size was originally a fixed value; when the latency between data centers is high, a buffer that is too small severely limits the throughput of a TCP connection. We therefore let the operating system manage the buffer size automatically to preserve throughput.
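The effect of a fixed buffer can be estimated with the bandwidth-delay product: a TCP connection cannot have more unacknowledged bytes in flight than its window allows, so its throughput is bounded by roughly window / RTT. The helper below (`TcpWindow` is a hypothetical name) computes that bound; the 64 KB buffer and 10 Gbit/s link used in the checks are illustrative numbers, not RocketMQ's defaults.

```java
/** Upper bound on single-connection TCP throughput imposed by a fixed window/buffer size. */
final class TcpWindow {
    /** Max bytes per second when at most windowBytes can be unacknowledged per round trip. */
    static double maxThroughputBytesPerSec(long windowBytes, double rttMillis) {
        return windowBytes * 1000.0 / rttMillis;
    }

    /** Bandwidth-delay product: bytes that must be in flight to keep the link full. */
    static long requiredWindowBytes(double bandwidthBitsPerSec, double rttMillis) {
        return (long) Math.ceil(bandwidthBitsPerSec * rttMillis / 8000.0);
    }
}
```

With a 64 KB buffer and a 2 ms round trip, one connection tops out at 32,768,000 bytes/s (about 262 Mbit/s) no matter how fast the link is, while filling a 10 Gbit/s link at the same RTT needs about 2.5 MB in flight. In Java, letting the OS manage buffers amounts to not calling `Socket.setSendBufferSize`/`setReceiveBufferSize` (or the equivalent Netty options); on Linux, setting these options explicitly disables the kernel's buffer autotuning for that socket.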

The preceding figure shows data from online tests: consumption performance improved several-fold.

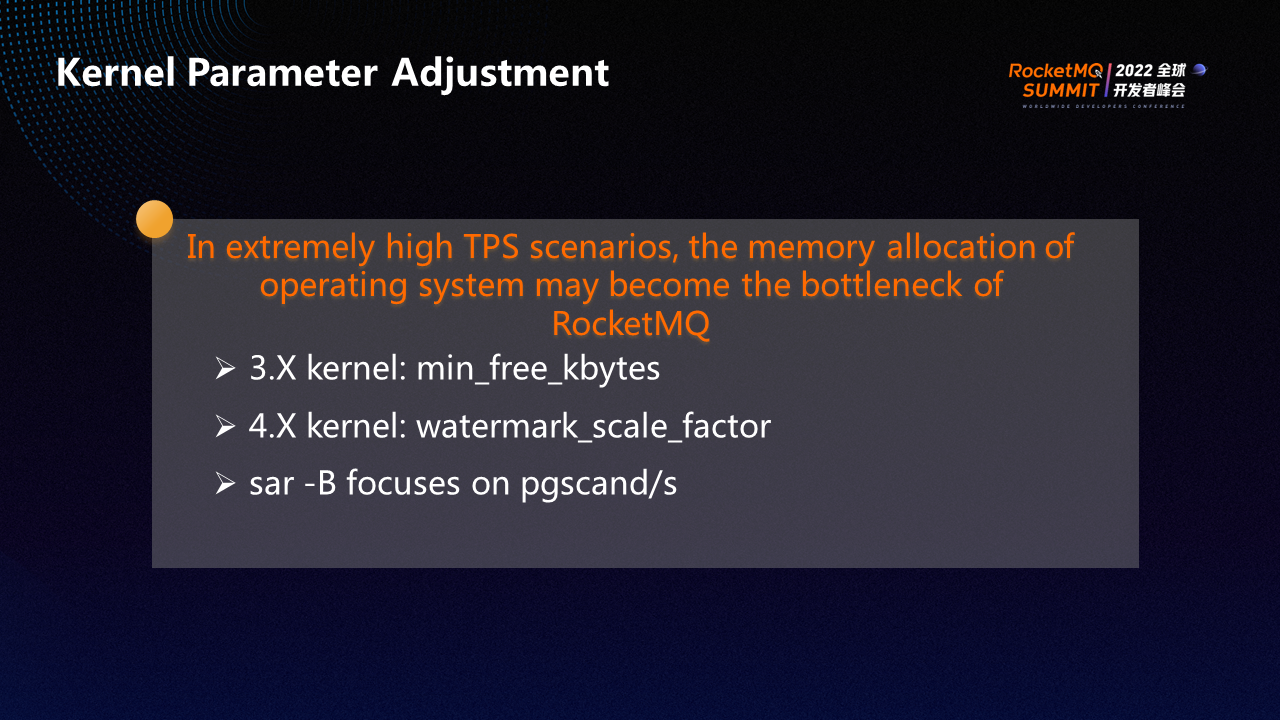

When TPS is extremely high and message bodies are large, memory allocation in the operating system may become RocketMQ's bottleneck, and 4.X kernels perform far better than 3.X kernels in this respect. On 3.X kernels, the min_free_kbytes parameter needs to be set to a larger value in such extreme scenarios.

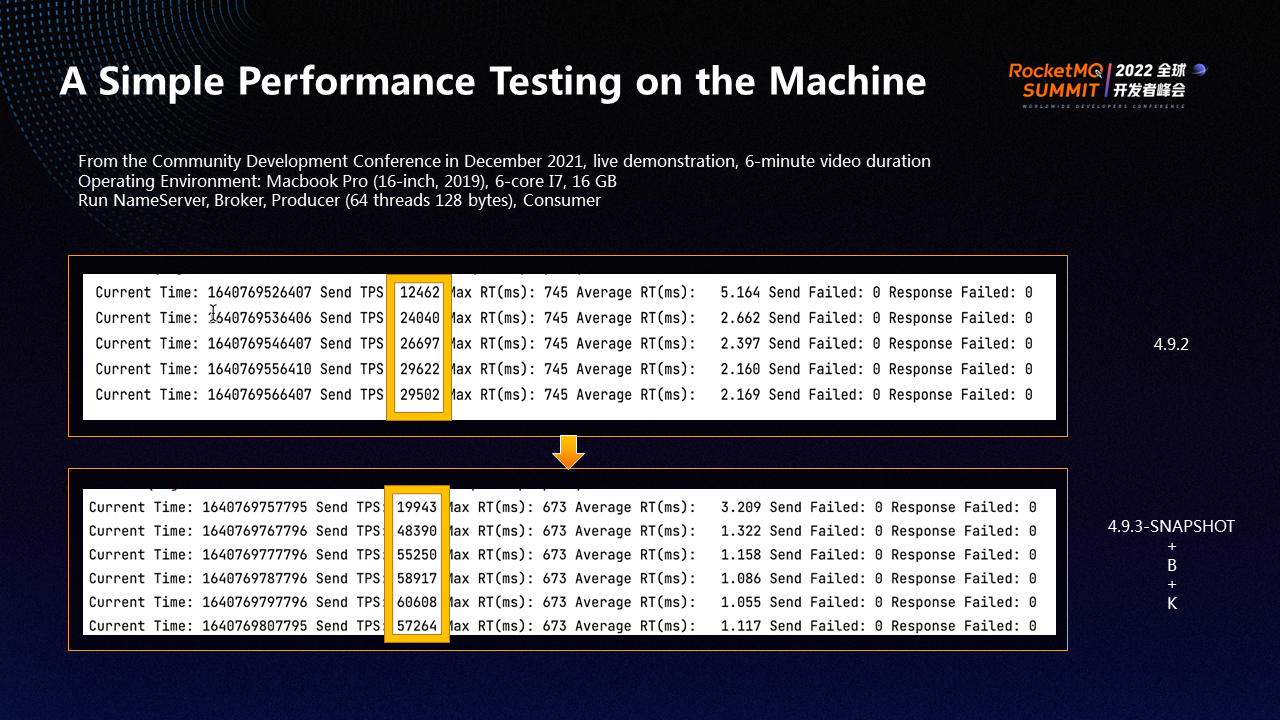

At the Community Development Conference in December 2021, we demonstrated a performance test comparing version 4.9.2 (before the second batch of optimizations) with version 4.9.3-SNAPSHOT (after it, including optimizations B and K, which had not yet been merged into that version at the time).

During the test, all brokers, producers, and consumers ran simultaneously on the same machine. The old version reached less than 30,000 TPS, while the new version, with automatic batching, reached up to 60,000 TPS, double that of the old version.
