By Zhu Xiaoran, nicknamed Yuheng at Alibaba.

The rapid development of the Internet, especially in China, has given rise to many new types of media. For example, there are now Internet celebrities (YouTubers, Instagrammers, or more generally influencers, whatever you prefer to call them), along with their huge, Internet-hooked audiences, who also post their opinions from their mobile phones. In China, the two biggest platforms of this kind are Weibo and WeChat Moments, but there are others as well. For instance, many Chinese netizens post their thoughts and reviews on review websites and in the review sections of e-commerce platforms such as Taobao. In short, new media, across all of its platforms, gives everyone a chance to be heard and to express their opinions.

Interestingly, breaking news and entertainment gossip now spread far faster than ever before, faster than we might have imagined. A piece of information can be forwarded tens of thousands of times and read millions of times within minutes. At this speed, large amounts of information can spread virally. It is therefore more crucial than ever for enterprises to monitor public opinion about them and to act promptly when online opinion turns.

At the intersection of big data and business, which also spans new retail and e-commerce, we can see that with the rise of "Internet opinions," metrics such as order quantity and user comments have a huge impact on subsequent orders and on the economy as a whole. For example, product designers need to collect these statistics and analyze them to decide future directions for their products.

At the same time, enterprise public relations and marketing departments need to be equipped to handle public opinion promptly and properly. To meet these needs and implement this analysis, we upgraded our traditional public opinion system to a big data-based public opinion collection and analysis system, which this article discusses in detail.

The requirements of the big data public opinion system on the data storage and computing systems are as follows:

This article is the first in a two-part series that introduces the complete architecture of the public opinion analysis system. It describes the current mainstream big data computing architectures, analyzes their advantages and disadvantages, and then introduces the big data architecture of the public opinion analysis system. The second article will describe a complete database table design and provide sample code.

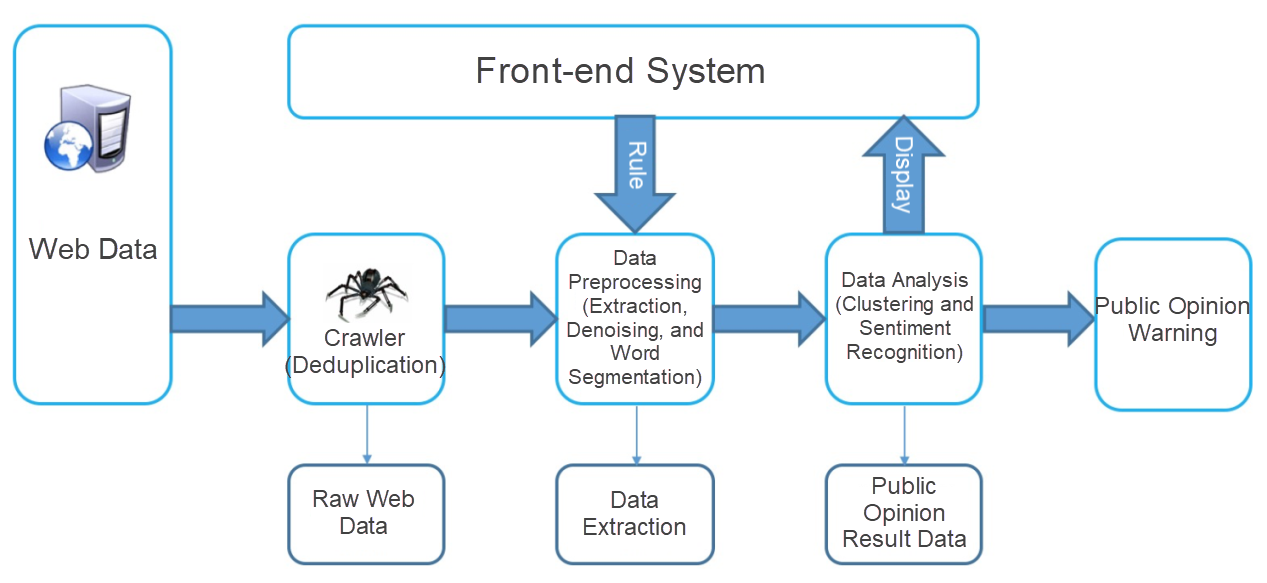

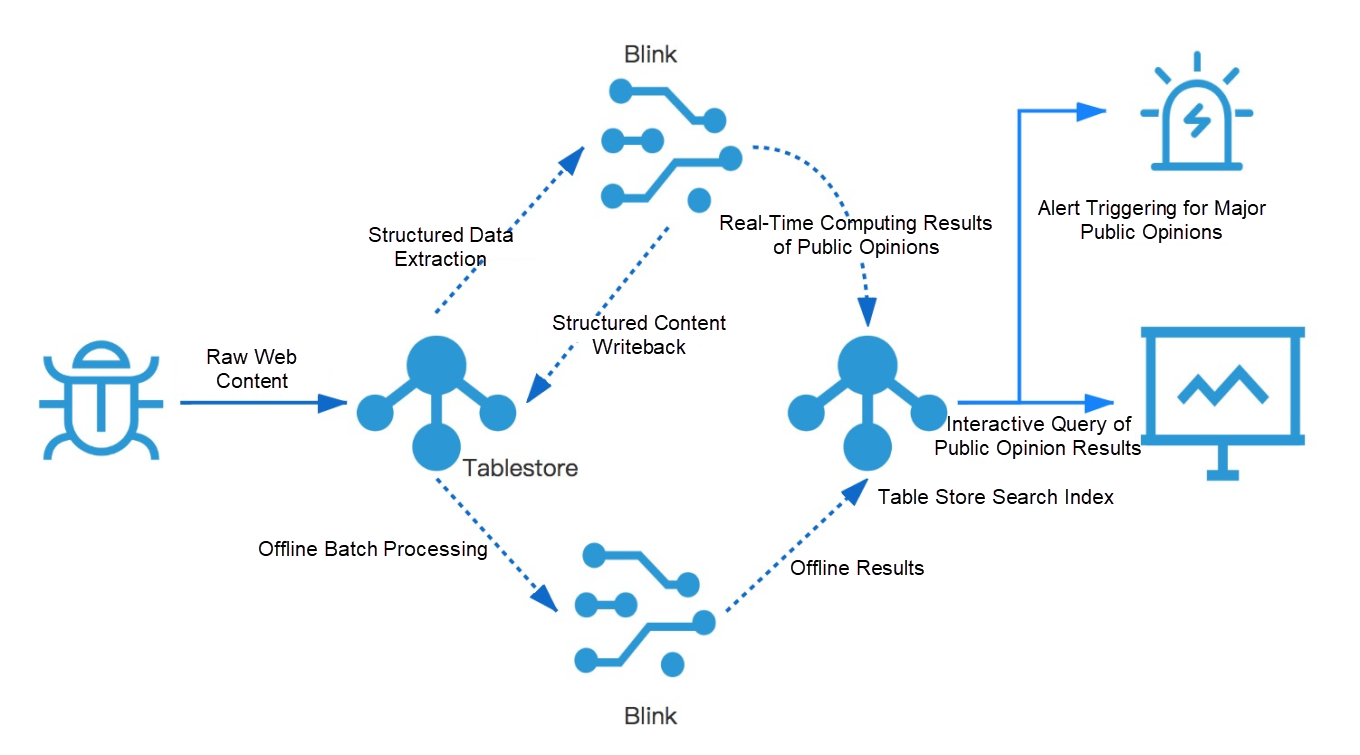

The following figure shows a simplified flowchart of a public opinion analysis system that handles large amounts of data, based on the description of the public opinion system at the beginning of this article.

Figure 1: Business process of the public opinion system

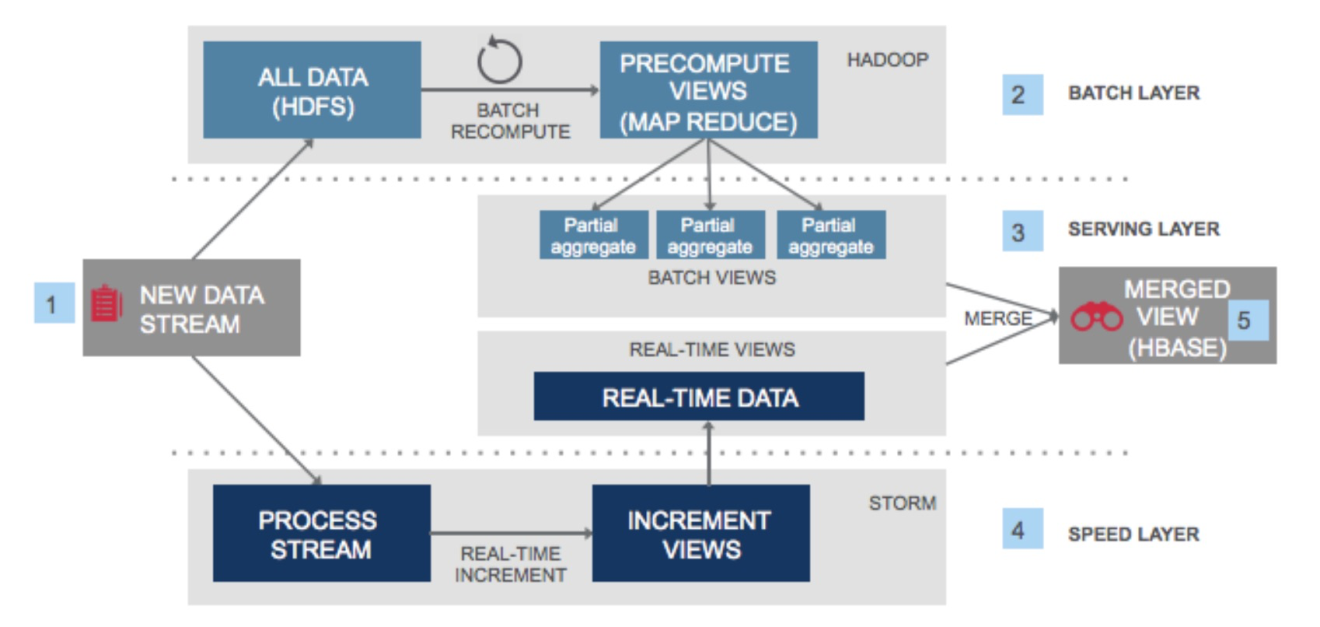

The public opinion big data analytics system requires two types of computing: real-time computing (including real-time extraction of massive web content, sentiment word analysis, and storage of web page public opinion results) and offline computing, because the system needs to backtrack historical data, optimize the sentiment dictionary through manual tagging, and correct some real-time computing results. The system design therefore needs to support both real-time computing and offline batch computing. Among open-source big data solutions, the Lambda architecture meets these requirements.

Figure 2: Lambda Architecture

The Lambda architecture is one of the most widely used big data architectures in the Hadoop and Spark ecosystems. Its greatest advantage is that it supports both batch computing (offline processing) of large amounts of data and real-time stream computing (hot data processing).

Upstream, there is generally a message queue service, such as Kafka, that receives incoming data in real time. The Kafka queue has two subscribers:

1. Full Data: This is the upper half of the figure. The full data is stored on storage media such as the Hadoop Distributed File System (HDFS). When an offline computing task runs, computing resources (such as Hadoop) read the full data from the storage system for batch computing. After the map and reduce computations, the results are written into a structured storage engine, such as HBase, for the business side to query.

2. Stream Data: The second subscriber is a stream computing engine that consumes data from the queue in real time. For example, Spark Streaming subscribes to Kafka data in real time and writes the stream computing results into a structured data engine. The SERVING LAYER, labeled 3 in the preceding figure, is the structured storage engine that holds both batch computing and stream computing results. Results are displayed and queried on this layer.
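To make the division of labor concrete, here is a minimal Python sketch of the three Lambda layers under simplifying assumptions: in-memory lists and `Counter` objects stand in for HDFS, Kafka, and HBase, and the `(keyword, sentiment)` record shape and all function names are hypothetical. The batch layer recomputes a view from all stored data, the speed layer updates a view per incoming record, and the serving layer adds the two at query time.

```python
from collections import Counter
from collections.abc import Iterable

# Hypothetical record shape: (keyword, sentiment), where sentiment is +1 or -1.
Record = tuple[str, int]


def batch_view(full_data: Iterable[Record]) -> Counter:
    """Batch layer: recompute net sentiment per keyword over all stored data."""
    view: Counter = Counter()
    for keyword, sentiment in full_data:
        view[keyword] += sentiment
    return view


class SpeedLayer:
    """Speed layer: update a real-time view incrementally as records arrive."""

    def __init__(self) -> None:
        self.view: Counter = Counter()

    def consume(self, record: Record) -> None:
        keyword, sentiment = record
        self.view[keyword] += sentiment

    def reset(self) -> None:
        # Call after a batch run has absorbed every record seen so far.
        self.view.clear()


def serve(keyword: str, batch: Counter, speed: SpeedLayer) -> int:
    """Serving layer: merge the batch view with the real-time view."""
    return batch[keyword] + speed.view[keyword]


# Usage: three historical records in "HDFS", one fresh record from "Kafka".
history = [("brand-x", 1), ("brand-x", -1), ("brand-x", -1)]
batch = batch_view(history)
speed = SpeedLayer()
speed.consume(("brand-x", -1))
print(serve("brand-x", batch, speed))  # -2
```

Because `batch_view` depends only on the stored data, rerunning it with the same logic returns the same view, which is what makes batch results safe to recompute.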

In this architecture, batch computing can process large amounts of data and join other business metrics into the computation as the business requires. Its computational logic is flexible, which is the main advantage of batch computing: results can be recomputed as often as business requirements change, and rerunning the same logic produces the same results without altering what was computed earlier. The disadvantage of batch computing is its long computing period, so results are not available in real time.

As big data computing evolves, real-time computing becomes inevitable. The Lambda architecture implements real-time computing through real-time data streams. Unlike batch computing, real-time computing processes data incrementally as a stream, so the data it handles is mostly newly generated data, that is, hot data.

Because it works on hot data, stream computing meets the business's low-latency computing requirements. For example, in the public opinion analysis system, you may want to compute public opinion information within minutes of capturing data from a web page. This gives the business side enough time to respond to public opinion.
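Minute-level results like these usually come from windowed aggregation. The sketch below assumes a one-minute tumbling window, events that arrive in timestamp order, and a hypothetical `(timestamp, keyword, sentiment)` event shape. It counts negative mentions per keyword and emits each window as soon as the next one begins. Engines such as Flink or Spark Streaming provide windowing natively; this is only an illustration of the idea.

```python
from collections import defaultdict
from typing import Optional

WINDOW_SECONDS = 60  # assumed one-minute tumbling window


class TumblingWindowCounter:
    """Count negative mentions per keyword in fixed one-minute windows.

    Assumes events arrive in timestamp order; production stream engines
    handle late and out-of-order events with watermarks instead.
    """

    def __init__(self) -> None:
        self.current_start: Optional[int] = None
        self.counts: defaultdict = defaultdict(int)

    def add(self, ts: int, keyword: str, sentiment: int):
        """Add one event; return (window_start, counts) if a window just closed."""
        start = ts - ts % WINDOW_SECONDS
        closed = None
        if self.current_start is not None and start != self.current_start:
            closed = (self.current_start, dict(self.counts))
            self.counts.clear()
        self.current_start = start
        if sentiment < 0:
            self.counts[keyword] += 1
        return closed

    def flush(self):
        """Emit the still-open window, for example at shutdown."""
        if self.current_start is None:
            return None
        return (self.current_start, dict(self.counts))


counter = TumblingWindowCounter()
events = [(0, "brand-x", -1), (30, "brand-x", -1), (45, "brand-y", 1), (61, "brand-x", -1)]
closed_windows = []
for event in events:
    closed = counter.add(*event)
    if closed is not None:
        closed_windows.append(closed)
print(closed_windows)  # [(0, {'brand-x': 2})]
```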

The following section describes how to implement a complete big data architecture of public opinion based on the Lambda architecture.

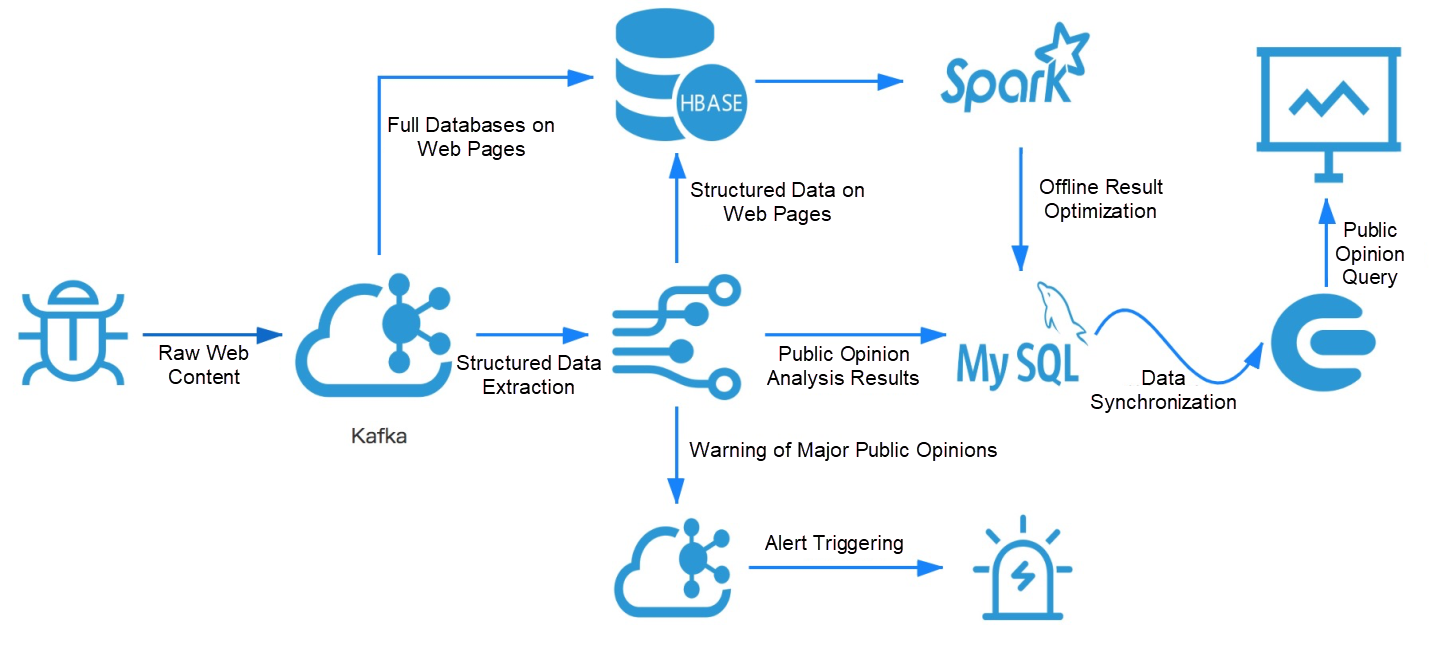

Figure 3 presents a flowchart showing the different storage and computing systems required to build the entire public opinion system, along with their different requirements for data organization and query. Based on open-source big data systems and the Lambda architecture, the entire system can be designed as follows:

Figure 3: The architecture of the open-source, public opinion analysis system

In the preceding public opinion big data architecture, Kafka connects to the stream computing engine, and HBase connects to the batch computing engine, implementing the real-time views and batch views of the Lambda architecture. The architecture is clear and meets both online and offline computing requirements. However, it is not easy to run this system in production, chiefly because it requires maintaining multiple storage and computing systems.

Given the preceding analysis, you may wonder whether a simpler big data architecture could meet Lambda's computing requirements while reducing the number of storage and computing modules. Jay Kreps proposed one such design: the Kappa architecture.

In short, to eliminate one of the two storage systems, Kappa removes the full data repository and retains logs in Kafka for a longer period. When recomputation or backtracking is required, Kappa re-subscribes to the data from the head of the queue and reruns stream computing over all data retained in the Kafka queue. This design removes the pain points of maintaining two storage systems and two sets of computational logic.
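The replay idea can be sketched in a few lines of Python. A toy in-memory `Log` class stands in for a Kafka topic (a real consumer would seek back to the earliest retained offset), and `count_negative` is a hypothetical stream job. Recomputation means running the updated job over the same log from offset 0, rather than maintaining a separate batch code path.

```python
class Log:
    """Toy append-only log standing in for a Kafka topic partition."""

    def __init__(self) -> None:
        self._records: list = []

    def append(self, record: str) -> int:
        self._records.append(record)
        return len(self._records) - 1  # offset of the new record

    def read_from(self, offset: int):
        """Re-subscribe from any retained offset; 0 means the head of the log."""
        yield from self._records[offset:]


def count_negative(posts, negative_words: set) -> int:
    """The single stream job; a new version differs only in its dictionary."""
    return sum(1 for post in posts if negative_words & set(post.lower().split()))


log = Log()
for post in ["Battery is bad", "Great screen", "Shipping was slow"]:
    log.append(post)

v1 = count_negative(log.read_from(0), {"bad"})          # original job
v2 = count_negative(log.read_from(0), {"bad", "slow"})  # recomputed after an update
print(v1, v2)  # 1 2
```

Unlike this toy log, a real Kafka topic keeps records only for its configured retention period, so a replay can reach back only as far as that window.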

The disadvantage is that the queue retains only a limited amount of historical data, so data cannot be traced back without time limits. This suggests improving Lambda by borrowing ideas from Kappa. Suppose a storage engine supports efficient writes and random queries like a database, and can also be consumed in first-in-first-out (FIFO) order like a message queue. In that case, you could combine the Lambda and Kappa architectures to create the Lambda Plus architecture.

Compared with Lambda, the new architecture makes the following improvements:

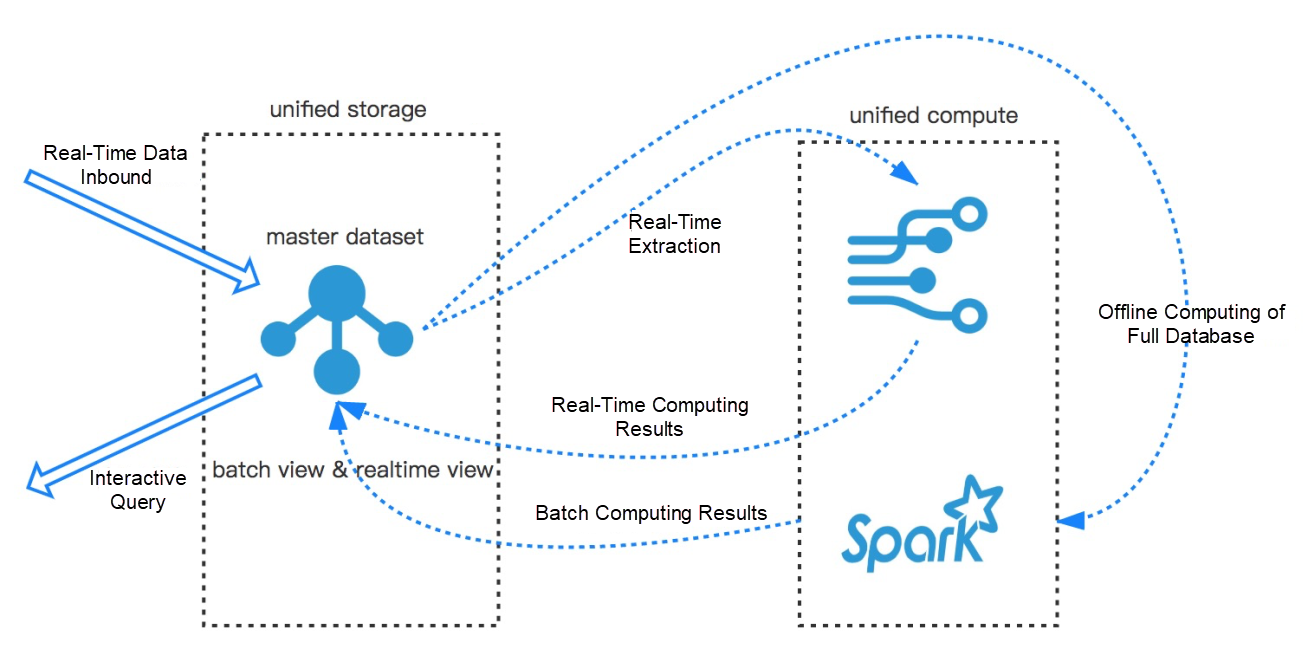

In summary, the core of the new architecture is solving the storage problem and connecting flexibly to computing engines. The resulting architecture is expected to look similar to the following.

Figure 4: Lambda Plus Architecture

In this architecture, the storage layer replaces the message queue service of the big data architecture by combining master table data and logs in one database. For the computing layer, choose an engine that supports both batch computing and stream computing, such as Flink or Spark. The result can trace historical data without limits, as the Lambda architecture does, and process both types of computing tasks with one set of logic and one storage system, as the Kappa architecture does. This architecture is called Lambda Plus. The following section describes how this type of big data architecture is built on Alibaba Cloud.

Among Alibaba Cloud's storage and computing products, you can choose two to implement the public opinion big data system, based on the requirements of this architecture. The storage layer uses Alibaba Cloud Tablestore, a distributed multi-model database, and the computing layer uses Blink to implement integrated stream and batch computing.

Figure 5: Public opinion big data architecture in Alibaba Cloud

For storage, this architecture relies on Tablestore, so a single database can meet different storage requirements. As described in the introduction to the public opinion system, crawler data passes through four stages in the system flow: raw web content, structured web page data, analysis rule metadata and public opinion results, and retrieval of public opinion results.

The architecture uses Tablestore's wide column model and schema-free feature to merge the raw web page and its structured data into a single web data table. Web data tables connect to computing systems through Tunnel Service, a new Tablestore feature. Based on database logs, Tunnel Service organizes and stores data in the order it was written, which lets the database be consumed as a stream, like a queue. As a result, the storage engine offers both random access, like a database, and sequential access, like a queue, which meets the requirements for integrating the Lambda and Kappa architectures.
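The key property here, one store offering both random row access and ordered consumption of writes, can be modeled as below. This is a hypothetical in-memory sketch: `WideColumnTable`, `put_row`, `get_row`, and `read_changes` are invented names and do not reflect the Tablestore SDK or the Tunnel Service API. It also shows the schema-free merge, in which the crawler and the parser write different columns to the same row.

```python
from typing import Any, Optional


class WideColumnTable:
    """Hypothetical table with random row access plus an ordered change log.

    Illustrative only: put_row, get_row, and read_changes are invented names,
    not the Tablestore SDK or the Tunnel Service API.
    """

    def __init__(self) -> None:
        self._rows: dict = {}
        self._changes: list = []  # (sequence, primary_key, columns) in write order

    def put_row(self, pk: str, columns: dict) -> None:
        # Schema-free: each write may add columns the row did not have before.
        self._rows.setdefault(pk, {}).update(columns)
        # Record the write in the log in write order, like a queue.
        self._changes.append((len(self._changes), pk, dict(columns)))

    def get_row(self, pk: str) -> Optional[dict]:
        """Random access by primary key, as in a database."""
        row = self._rows.get(pk)
        return dict(row) if row is not None else None

    def read_changes(self, from_seq: int = 0) -> list:
        """Sequential FIFO consumption of writes, as from a message queue."""
        return self._changes[from_seq:]


table = WideColumnTable()
# The crawler stores the raw page; the parser later adds structured columns to the same row.
table.put_row("url-1", {"raw_html": "<html>review page</html>"})
table.put_row("url-1", {"title": "Review", "body": "Battery is bad"})
print(table.get_row("url-1"))
print([pk for _, pk, _ in table.read_changes()])  # ['url-1', 'url-1']
```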

The analysis rule metadata tables hold the analysis rules and the sentiment dictionary group layer, and serve as dimension tables in real-time computing.

The architecture uses Blink as its computing system. Blink is an Alibaba Cloud real-time computing product that supports both stream computing and batch computing. Like Tablestore, Blink scales out easily in a distributed manner, so computing resources can expand elastically as business data grows. The advantages of using Tablestore and Blink are as follows:

During off-peak hours, you may often need to process data in batches and write the results back to Tablestore as feedback, such as sentiment analysis feedback. It is therefore ideal for the architecture to support both stream processing and batch processing, so that one set of analysis code serves both real-time stream computing and offline batch computing.
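A minimal sketch of "one set of analysis code" in Python, assuming a hypothetical word-weight sentiment dictionary and whitespace tokenization: the same `score` function is called by a batch path over a bounded list and by a streaming path over an iterator. In the architecture described here, Blink would play the role of both runners; nothing below is Blink's API.

```python
from collections.abc import Iterable, Iterator

# Hypothetical sentiment dictionary; in this architecture, rule data of this
# kind lives in the analysis rule metadata tables.
SENTIMENT = {"good": 1, "great": 1, "bad": -1, "slow": -1}


def score(text: str, dictionary: dict = SENTIMENT) -> int:
    """Shared analysis logic: sum the dictionary weights of the words in a post."""
    return sum(dictionary.get(word, 0) for word in text.lower().split())


def run_batch(posts: Iterable[str]) -> list:
    """Offline path: score a bounded collection, e.g. an off-peak backfill."""
    return [score(post) for post in posts]


def run_stream(posts: Iterable[str]) -> Iterator[int]:
    """Real-time path: score posts one at a time as they arrive."""
    for post in posts:
        yield score(post)


posts = ["Great screen but slow shipping", "Battery is bad"]
print(run_batch(posts))  # [0, -1]
```

Because both paths call the same `score`, an update to the sentiment dictionary changes real-time and offline results in the same way.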

The computing process generates real-time public opinion results. To trigger alerts for major public opinion events, connect Tablestore to a Function Compute trigger. Tablestore and Function Compute integrate through incremental data synchronization, so when events are written into the result table, Function Compute can easily send SMS or email notifications.
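The alerting logic amounts to a small handler that runs once per result-table write. The sketch below assumes a hypothetical `ResultRow` shape, a hypothetical threshold of 100 negative mentions, and a `notify` callback in place of the SMS or email service. It does not use the actual Function Compute handler signature or the Tablestore trigger event format.

```python
from dataclasses import dataclass
from typing import Callable

ALERT_THRESHOLD = 100  # assumed cutoff for a "major" public opinion event


@dataclass
class ResultRow:
    """Hypothetical shape of one row written to the result table."""
    topic: str
    negative_count: int


def on_result_written(row: ResultRow, notify: Callable[[str], None]) -> bool:
    """Event handler invoked once per newly written result row.

    `notify` stands in for whatever sends the SMS or email; returns True if it fired.
    """
    if row.negative_count >= ALERT_THRESHOLD:
        notify(f"Alert: {row.topic} has {row.negative_count} negative mentions")
        return True
    return False


sent: list = []
on_result_written(ResultRow("brand-x", 150), sent.append)
on_result_written(ResultRow("brand-y", 3), sent.append)
print(sent)  # ['Alert: brand-x has 150 negative mentions']
```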

The complete analysis results are displayed and searched through the search index, a new Tablestore feature, which solves the pain points of running open-source HBase and Solr as separate engines.
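A search index rests on an inverted index, which maps each term to the records that contain it so that keyword queries do not have to scan the whole table. The following Python sketch illustrates that idea only; it is not how Tablestore's search index is implemented, and the document IDs and texts are made up.

```python
from collections import defaultdict


class InvertedIndex:
    """Minimal inverted index: maps each term to the IDs of results containing it.

    Sketch only: it does not handle re-indexing a document ID, ranking, or
    tokenization beyond whitespace splitting.
    """

    def __init__(self) -> None:
        self._postings: defaultdict = defaultdict(set)

    def add(self, doc_id: str, text: str) -> None:
        for term in text.lower().split():
            self._postings[term].add(doc_id)

    def search(self, query: str) -> list:
        """Return IDs of documents containing every query term (AND semantics)."""
        terms = query.lower().split()
        if not terms:
            return []
        # .get avoids inserting empty posting lists for unknown terms.
        matches = set.intersection(*(self._postings.get(t, set()) for t in terms))
        return sorted(matches)


index = InvertedIndex()
index.add("r1", "Battery is bad")
index.add("r2", "Bad shipping experience")
index.add("r3", "Great battery")
print(index.search("bad"))          # ['r1', 'r2']
print(index.search("battery bad"))  # ['r1']
```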
