By Yunlei, from Alibaba Cloud Storage Team

In the previous article, we introduced the theoretical basis. This article focuses on various advanced SQL operations.

On a table, you can perform filtering (WHERE), conversion (scalar functions), aggregation (global or group aggregation), post-aggregation filtering (HAVING), sorting (ORDER BY), projection (SELECT), row-count truncation (LIMIT), and other operations. These operations are executed in a fixed order. A typical SQL statement has the following syntax:

[WITH with_query [,...]]

SELECT expr

FROM TABLE

WHERE bool_expr

GROUP BY columns

HAVING Condition

ORDER BY expr

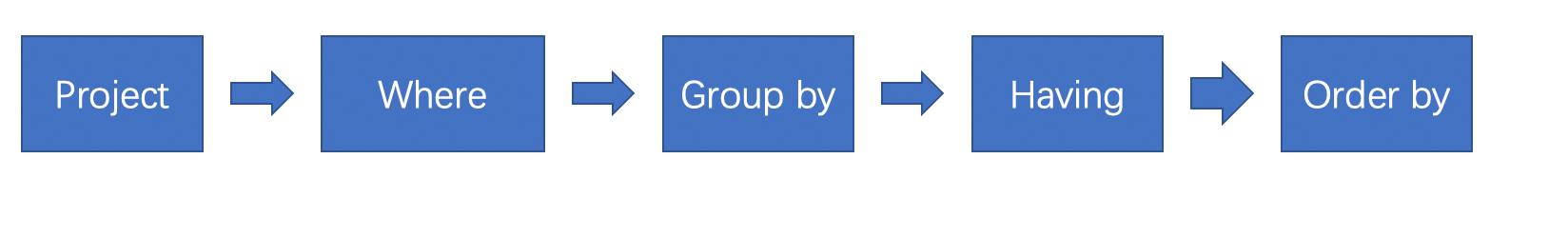

LIMIT countIn terms of execution sequence, first, select the required columns from the table. Second, execute the WHERE statement. Third, after filtering, execute GROUP BY for aggregation computing. Fourth, execute HAVING to perform secondary filtering on the aggregated results. Fifth, execute ORDER BY to sort the results. Finally, limit the number of output rows through LIMIT.

Figure 2-2 – SQL Execution Sequence
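To make this order concrete, the following minimal sketch evaluates one query clause by clause in plain Python. The access-log rows, host names, and thresholds are hypothetical, and a real engine builds and optimizes an operator tree rather than running these list comprehensions; the point is only the order in which the clauses take effect.

```python
# Hypothetical access-log rows: (host, status, latency)
rows = [
    ("a.com", 200, 120), ("a.com", 500, 900),
    ("b.com", 200, 80), ("b.com", 200, 60),
    ("c.com", 200, 300), ("c.com", 200, 500),
]


def run_query(rows):
    """Evaluates, one clause at a time, the logical plan of
    SELECT host, avg(latency) FROM log WHERE status = 200
    GROUP BY host HAVING avg(latency) > 75
    ORDER BY avg(latency) DESC LIMIT 1
    """
    # 1. FROM: the input rows.
    # 2. WHERE: keep only rows whose predicate is true.
    filtered = [r for r in rows if r[1] == 200]
    # 3. GROUP BY + aggregation: one output row per host.
    groups = {}
    for host, _status, latency in filtered:
        groups.setdefault(host, []).append(latency)
    aggregated = [(host, sum(v) / len(v)) for host, v in groups.items()]
    # 4. HAVING: filter the aggregated rows, not the raw rows.
    kept = [r for r in aggregated if r[1] > 75]
    # 5. ORDER BY avg(latency) DESC.
    ordered = sorted(kept, key=lambda r: r[1], reverse=True)
    # 6. LIMIT 1: truncate the output.
    return ordered[:1]


print(run_query(rows))  # [('c.com', 400.0)]
```

Because WHERE runs before aggregation, a.com's status-500 row never reaches AVG. If that filter were applied after grouping instead, a.com's average would be 510 and it would be returned instead of c.com.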

These steps complete the operations on one table and produce a new table. When a nested query is required, you can enclose the inner query in parentheses, treat its result as an ordinary table, and apply the same operations to it again. Therefore, SQL queries can be nested to arbitrary depth. Besides enclosing a nested query in parentheses as a subtable, you can also define it with the WITH statement. More details are given below.

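The simplest SELECT operation is SELECT select_expr FROM TABLE, which retrieves data from a table and optionally computes on its columns. The following expressions can be used in SELECT:

1. Column names, which output the columns as they are.
2. Conversion (scalar) functions applied to columns, such as scalar_function(column).
3. Aggregate functions, such as aggregate_function(column).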

There can be one or more expressions after SELECT, separated by commas. Cases 1 and 2 can be combined freely, as in SELECT column1, scalar_function(column2), and any number of column names or conversion functions can appear side by side. For case 3, if there is no GROUP BY clause, aggregate functions can only be combined with other aggregate functions, as in SELECT aggregate_function1(column1), aggregate_function2(column2); cases 1 and 2 cannot appear at the same level as an aggregate function. Conversion functions can, however, be nested inside aggregate functions, as in SELECT aggregate_function(scalar_function(column)). If there is a GROUP BY clause, the GROUP BY columns, and conversion functions applied to them, can also appear in SELECT. The reason is simple: cases 1 and 2 do not change the number of result rows, while the aggregation in case 3 produces a single row (per group), so they cannot be mixed at the same level.

As mentioned above, a conversion function computes one output row for each input row, so it does not change the number of rows of the input data. The word "scalar" in scalar function means that each row is mapped to a single value: the function transforms values without changing the shape of the data. A conversion function takes zero or more input parameters but has exactly one output; no matter how many columns are passed in, it produces a single output column. To generate multiple columns, pack the results into a complex type (such as an array or a map) and then expand them with a nested query.

Since a conversion function does not change the number of rows, conversion functions can be nested to any depth, such as fun1(fun2(fun3(fun4(fun5(key))))). Deep nesting is rarely necessary in practice, but one or two levels of nesting are common.

A conversion function defines only its input and output format; its implementation is not part of the execution framework. The framework therefore does not need to know how the function works internally. It only calls the function, passes in the corresponding parameters, gets the output, and passes it on to the subsequent operator.

Based on this mechanism, users can develop custom user-defined functions (UDFs) and register them with the execution engine. When developing a UDF, developers only need to follow the UDF format definition and do not need to understand the complex implementation logic inside the execution engine.
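The registration contract can be illustrated with Python's built-in sqlite3 module, which exposes `Connection.create_function(name, num_params, func)`. This is a sketch of the idea, not the API of the engine described in this article; the `strip_www` function and the `accesslog` table are hypothetical. Note that the engine only needs the name and arity, and that three input rows yield three output rows.

```python
import sqlite3


def strip_www(host):
    """Hypothetical scalar UDF: exactly one output value per input row."""
    if host is None:  # SQL NULL arrives as None; return NULL for it
        return None
    return host[4:] if host.startswith("www.") else host


conn = sqlite3.connect(":memory:")
# Register the Python function as strip_www(arg). The engine only needs the
# name and the number of arguments; it never looks inside the function body.
conn.create_function("strip_www", 1, strip_www)

conn.execute("CREATE TABLE accesslog (host TEXT)")
conn.executemany(
    "INSERT INTO accesslog VALUES (?)",
    [("WWW.Example.com",), ("api.example.com",), (None,)],
)

# The UDF nests with built-in scalar functions: lower() -> strip_www() -> length().
rows = conn.execute(
    "SELECT strip_www(lower(host)), length(strip_www(lower(host))) "
    "FROM accesslog ORDER BY rowid"
).fetchall()
print(rows)  # [('example.com', 11), ('api.example.com', 15), (None, None)]
```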

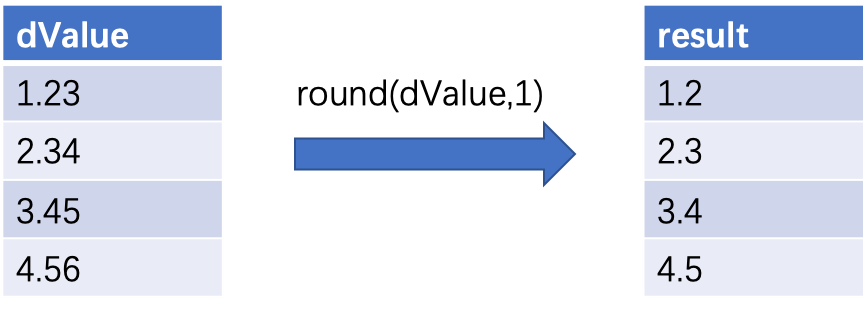

Below is an example of a conversion function, which rounds the numbers in the key column to one decimal place. The query is listed below, and its output is shown in Figure 2-3:

SELECT round(key,1) FROM table

Figure 2-3

An aggregate function differs from a conversion function in two ways. First, no matter how many input rows an aggregate function accepts, it outputs only one value, that is, one row and one column. If the data is aggregated by group (GROUP BY certain columns), the input data in each group produces a single output value. For example, the AVG function returns a single mean value regardless of the number of input rows. Second, a conversion function has no internal state, so its output is available as soon as the input arrives. An aggregate function, however, must keep state in memory, and the final result is not available until all the data has been input. For example, AVG keeps a sum and a count in memory, representing the sum of the input values and the number of input rows, respectively. Each new input updates the state, and when the final output is produced, the sum is divided by the count to obtain the mean.

Like conversion functions, aggregate functions can be user-defined; these are called user-defined aggregate functions (UDAFs). Users can develop their own UDAFs and register them with the execution engine for calling.
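The sum-and-count state described above can be sketched as a UDAF registered through sqlite3's `Connection.create_aggregate`, which expects a class with `step` (called once per input row) and `finalize` (called once per group). The `MyAvg` class and the data are hypothetical, and the engine in this article may use a different registration interface. The built-in `avg` is queried alongside to show that the two agree.

```python
import sqlite3


class MyAvg:
    """Hypothetical UDAF that mirrors AVG by keeping (sum, count) as state."""

    def __init__(self):
        self.total = 0.0  # running sum of non-null inputs
        self.count = 0    # number of non-null inputs

    def step(self, value):
        # Called once per input row: update the state, return nothing.
        if value is not None:
            self.total += value
            self.count += 1

    def finalize(self):
        # Called once after the group's last row: combine the state.
        return self.total / self.count if self.count else None


conn = sqlite3.connect(":memory:")
conn.create_aggregate("my_avg", 1, MyAvg)
conn.execute("CREATE TABLE accesslog (status INTEGER, latency REAL)")
conn.executemany("INSERT INTO accesslog VALUES (?, ?)",
                 [(200, 10), (200, 30), (500, 100), (500, 300), (500, None)])

result = conn.execute(
    "SELECT status, my_avg(latency), avg(latency) FROM accesslog "
    "GROUP BY status ORDER BY status"
).fetchall()
print(result)  # [(200, 20.0, 20.0), (500, 200.0, 200.0)]
```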

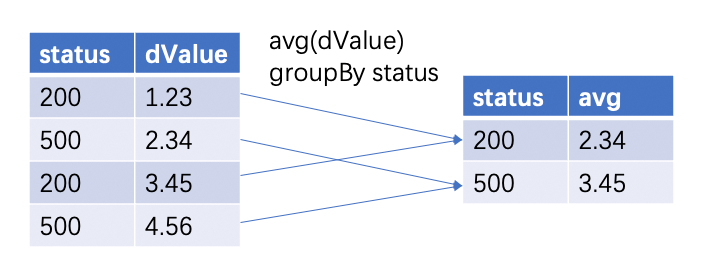

Below is a sample of an aggregate function, which computes the average latency in the access logs for each status:

SELECT status, avg(dValue) FROM accesslog group by status

The data is grouped by status into two groups, 200 and 500. The aggregate function AVG is computed over the data in each group to produce one aggregated result per group.

Figure 2-4 – Aggregate Function

If SQL contains only one aggregate function and we only want to aggregate part of the data, we can put the filter conditions in WHERE to filter the data first. But what if there are multiple aggregate functions and each requires different filter conditions? For count, the corresponding count_if function can attach a filter condition. For other aggregate functions, you can use CASE WHEN to select the required data and then aggregate it, such as avg(case when status=200 then latency end), which works because aggregate functions ignore the null values that CASE WHEN produces for non-matching rows. However, CASE WHEN is not designed for filtering, its syntax is cumbersome, and not every aggregate function fits these filtering semantics. Besides CASE WHEN, a special selective aggregate syntax can attach a filter condition to each aggregate function. The syntax is listed below:

```sql
SELECT
    key,
    AGG1(x) FILTER (WHERE condition1),
    AGG2(y) FILTER (WHERE condition2),
    AGG3(z) FILTER (WHERE condition3),
    ...
FROM
```

Each aggregate function is followed by FILTER (WHERE bool_expression). Only rows for which the bool expression is true participate in the corresponding aggregate. Within the same level, each aggregate function may or may not have a filter condition, and the conditions can be the same or different. This is called selective aggregation, and it makes diverse aggregations convenient to express at the syntax level.
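The sketch below runs both forms side by side in SQLite, which supports the FILTER clause on aggregate functions from version 3.30 onward (an assumption about the SQLite linked into your Python). The table and values are hypothetical. Host b has no status-200 rows, so its filtered count is 0, while the filtered average for status 500 matches the CASE WHEN version.

```python
import sqlite3  # FILTER on aggregates needs SQLite 3.30 or later

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accesslog (host TEXT, status INTEGER, latency REAL)")
conn.executemany("INSERT INTO accesslog VALUES (?, ?, ?)", [
    ("a", 200, 10), ("a", 200, 30), ("a", 500, 90), ("b", 500, 70),
])

result = conn.execute("""
    SELECT host,
           count(*)     FILTER (WHERE status = 200)       AS ok_count,
           avg(latency) FILTER (WHERE status = 500)       AS err_avg,
           avg(CASE WHEN status = 500 THEN latency END)   AS err_avg_case
    FROM accesslog
    GROUP BY host
    ORDER BY host
""").fetchall()
print(result)  # [('a', 2, 90.0, 90.0), ('b', 0, 70.0, 70.0)]
```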

By default, all inputs participate in an aggregate function. In some scenarios, however, the data must be deduplicated first and then aggregated. The most common case is count(distinct key), which deduplicates key first and then computes the count. Other aggregate functions use the same syntax, such as avg(distinct key).

The complete syntax within an aggregate function is listed below:

aggregate_function(all key)

aggregate_function(distinct key)

In the first form, data is not deduplicated, and all of it participates in the computation. In the second form, data is deduplicated first and then aggregated. The first form is the default, so the ALL keyword can be omitted.
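A quick way to see the difference is to run both forms over the same column. This sketch uses SQLite through Python's sqlite3 module with a hypothetical `visits` table; it omits the explicit ALL keyword and simply relies on the default.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE visits (user_id INTEGER, latency REAL)")
conn.executemany("INSERT INTO visits VALUES (?, ?)",
                 [(1, 10), (1, 10), (2, 20), (3, 30), (3, 60)])

row = conn.execute("""
    SELECT count(user_id),           -- default (ALL): 5 rows
           count(DISTINCT user_id),  -- deduplicated first: 3 users
           avg(latency),             -- (10+10+20+30+60)/5 = 26.0
           avg(DISTINCT latency)     -- (10+20+30+60)/4 = 30.0
    FROM visits
""").fetchone()
print(row)  # (5, 3, 26.0, 30.0)
```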

Among the input values of an aggregate function, null values do not participate in the computation. For example, sum(key) only sums the non-null values, and count(key) only counts the non-null values. However, count(*) is an exception: since * is not a specific column, there is no distinction between null and non-null values, so all rows are counted.

If an aggregate function has zero rows of valid input after null values are excluded, it returns null, because no valid data participates in the computation. Let’s take sum as an example. If all inputs are null, or no rows are input at all, returning zero or any other special value is inappropriate, because no special value can uniquely represent this situation. Returning null is the best choice. This rule holds for all aggregate functions except count, which returns 0 when there are zero rows of valid input.
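These null rules can be checked directly in SQLite, which follows the same conventions. The sketch below uses a hypothetical single-column table with one null; the second and third queries filter the input down to zero valid rows so that sum and avg return NULL (None in Python) while count returns 0.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE t (k INTEGER)")
conn.executemany("INSERT INTO t VALUES (?)", [(1,), (None,), (3,)])

# NULL is skipped by sum(k) and count(k); count(*) counts every row.
with_data = conn.execute("SELECT sum(k), count(k), count(*) FROM t").fetchone()

# Zero input rows: sum and avg return NULL, count returns 0.
no_rows = conn.execute(
    "SELECT sum(k), avg(k), count(k), count(*) FROM t WHERE k > 100").fetchone()

# Only NULL inputs: no valid data, so sum is NULL and count(k) is 0.
only_null = conn.execute(
    "SELECT sum(k), count(k), count(*) FROM t WHERE k IS NULL").fetchone()

print(with_data, no_rows, only_null)  # (4, 2, 3) (None, None, 0, 0) (None, 0, 1)
```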

In scenarios with only aggregate functions, all inputs are aggregated into a single result. To divide the inputs into multiple groups and produce an aggregated result for each group, specify the groups with GROUP BY. GROUP BY is followed by one or more columns, or by the results of conversion functions applied to columns. The GROUP BY clause is used together with aggregate functions. Without GROUP BY, an aggregate function accepts all the input data and produces one result. With GROUP BY, the operation is called group aggregation: each row is assigned to a group according to the values of the GROUP BY columns (or their conversion results), and each group produces one row of aggregated results no matter how many rows it contains. Figure 2-4 shows a sample of GROUP BY group aggregation. The data is grouped by status into two groups, and each group produces one row of aggregated results, so there are two rows of aggregated results.

Below is a sample of GROUP BY, which computes the average latency of each site in the access logs:

SELECT avg(latency), host from accesslog GROUP BY host

Multiple aggregate functions can be computed within a group, and each aggregate function produces one column of aggregated results. The number of groups determines the number of result rows, and the number of aggregate functions determines the number of aggregated result columns.

When GROUP BY is used, the expressions in SELECT can include, in addition to aggregate functions, certain columns or the results of conversion functions applied to them. These columns are restricted to those that appear in GROUP BY. Selecting a non-GROUP BY column creates a difficult problem: the data is grouped by the GROUP BY columns, and each group produces only one row of results, but multiple rows with different values of the non-GROUP BY column may fall into the same group. Which row's value should be output? There is no clear answer. The possibilities include selecting a random row, selecting the first row, selecting the last row, or outputting all of them. If the user does not tell SQL which one to choose, the result is ambiguous and may not meet the user's expectations. Each of these options can instead be expressed explicitly with a corresponding aggregate function. MySQL, when it permits such queries, behaves like the first option.

Please see window functions for scenarios where you need to generate multiple rows of aggregated results in a group.

If a grouping column contains null values, all the null values are treated as one separate group.
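The following SQLite sketch, with a hypothetical `accesslog` table, shows both points at once: three groups (including the group formed by NULL hosts) yield three result rows, and three aggregate functions yield three aggregated columns. SQLite sorts NULL before other values in ascending order, which is why the NULL group comes first.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accesslog (host TEXT, latency REAL)")
conn.executemany("INSERT INTO accesslog VALUES (?, ?)", [
    ("a.com", 100), ("a.com", 300), ("b.com", 50), (None, 70), (None, 90),
])

# Groups: a.com, b.com, and one group for all NULL hosts.
result = conn.execute("""
    SELECT host, avg(latency), max(latency), count(*)
    FROM accesslog
    GROUP BY host
    ORDER BY host
""").fetchall()
print(result)
# [(None, 80.0, 90.0, 2), ('a.com', 200.0, 300.0, 2), ('b.com', 50.0, 50.0, 1)]
```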

In general, each row of raw data participates in aggregation in only one group and never appears in multiple groups at the same time. However, there are some advanced usages, namely grouping set operations, which are described in detail below.

The GROUP BY clause described above is a simple grouping aggregation: all the data is divided into different groups according to the grouping conditions, and each row is aggregated in only one group. A more complex grouping aggregation is the grouping sets operation, whose related keywords are GROUPING SETS, CUBE, and ROLLUP. These operators allow grouping multiple times by different grouping conditions in a single query, so each row participates in aggregation multiple times, once per grouping condition.

For example, to aggregate by the groups (grade, class), (grade), and (class), plain GROUP BY would require three separate GROUP BY queries. With grouping sets, the aggregation can be completed in a single query: select grade,class,count(1) from log group by grouping sets((grade, class), (grade),(class)). Both the grade and class columns appear in the output. However, for the latter two sets only one column is grouped, so the other column is null in those result rows.

ROLLUP is a special form of grouping sets. It enumerates every prefix of its column list, producing a hierarchical aggregation. For example, group by rollup(grade, class) is equivalent to group by grouping sets ((grade, class),(grade),()). The last grouping set is empty, which means no grouping; it is equivalent to a query without GROUP BY.

CUBE is also a special form of grouping sets. Unlike ROLLUP, CUBE enumerates all possible subsets of its columns. For example, group by cube(grade,class) is equivalent to group by grouping sets((grade,class),(grade),(class),()).
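The expansion rules above can be written out in a few lines of Python. This is a sketch of the semantics only, assuming rows are dictionaries and the only aggregate is count(1); the helper names `rollup`, `cube`, and `grouping_sets` are hypothetical, and a real engine evaluates all sets in one pass rather than rescanning the input per set.

```python
from itertools import combinations


def rollup(cols):
    """ROLLUP(a, b) -> [(a, b), (a,), ()]: every prefix, longest first."""
    return [tuple(cols[:i]) for i in range(len(cols), -1, -1)]


def cube(cols):
    """CUBE(a, b) -> [(a, b), (a,), (b,), ()]: every subset."""
    return [c for n in range(len(cols), -1, -1) for c in combinations(cols, n)]


def grouping_sets(rows, all_cols, sets):
    """Emulates SELECT <all_cols>, count(1) ... GROUP BY GROUPING SETS (<sets>).
    Columns outside the current grouping set are output as None (NULL)."""
    out = []
    for gs in sets:
        counts = {}
        for row in rows:
            key = tuple(row[c] if c in gs else None for c in all_cols)
            counts[key] = counts.get(key, 0) + 1
        out.extend(k + (n,) for k, n in counts.items())
    return out


# Hypothetical student rows
log = [
    {"grade": 1, "class": "A"}, {"grade": 1, "class": "A"},
    {"grade": 1, "class": "B"}, {"grade": 2, "class": "A"},
]
cols = ["grade", "class"]
for row in grouping_sets(log, cols, rollup(cols)):
    print(row)
```

With ROLLUP, the output contains the (grade, class) groups, then the per-grade subtotals with class set to None, and finally the grand total with both columns None.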

A conversion function takes one row of input and outputs one row. An aggregate function aggregates multiple rows into a single row. Is there a way to aggregate without changing the number of input rows? The answer is window functions.

A window function resembles a conversion function in its output: for each input row, it produces one output row, so the number of result rows does not change. However, aggregate functions can be used inside window functions. A window function divides the rows into buckets (windows) according to the specified columns, assigns each row to its bucket, executes the aggregate function on each bucket, and writes the result back to each row. In other words, a window function applies an aggregate function the way a conversion function is applied: whereas a conversion function converts one input row into one output row, a window function aggregates several rows in the window and produces a result for every row.

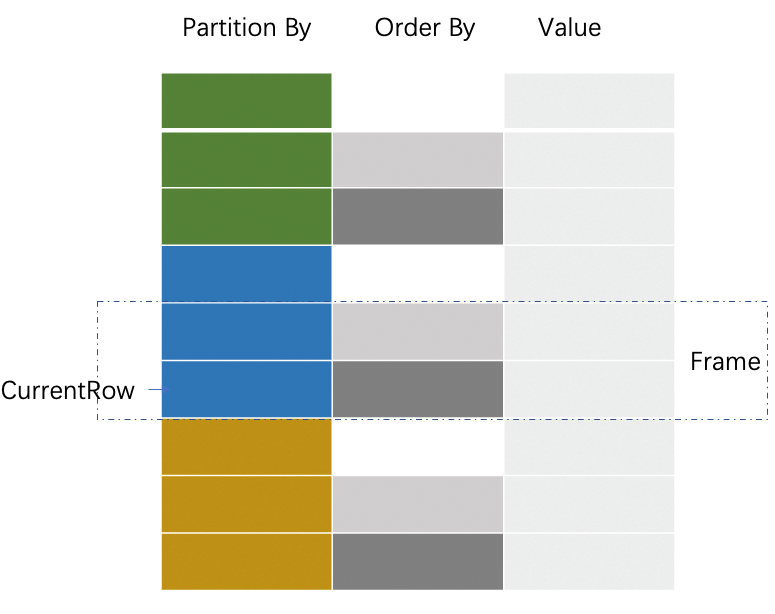

The logic of the window function is shown in Figure 2-5, which shows the window column, the sorting column, and the column involved in aggregation. Aggregation is performed on the specified rows in each window, and the result is written to the current row. The number of output rows is the same as the number of input rows.

Figure 2-5 – Window Function

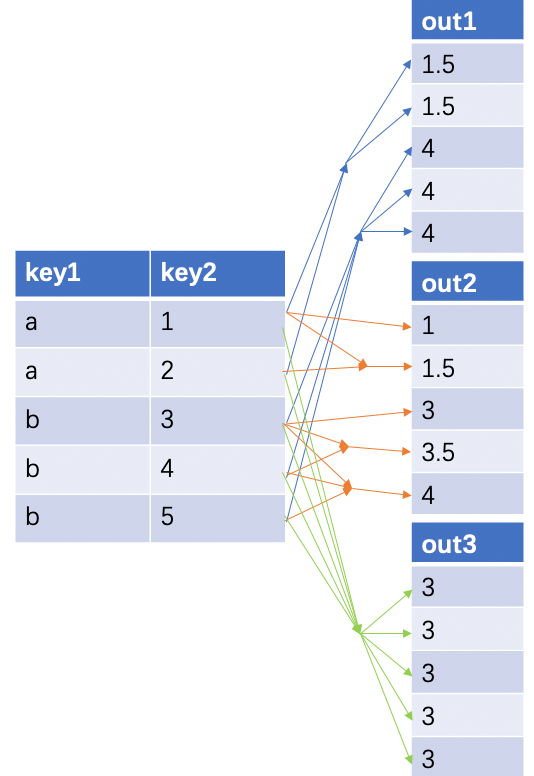

Let's look at the simplest window function scenario, avg(key2) over(). All the data is treated as one group, AVG is computed over it, and the result is written back to every row. Every result row has the same value, but the number of result rows does not change. As shown in out3 in Figure 2-6, the mean of all rows is 3, so the result of every row is 3.

A more complex scenario is avg(key2) over(partition by key1). The data is grouped by key1, AVG is computed within each group, and the result is written to every row in that group. As shown in out1 in Figure 2-6, the mean of window a is 1.5, so all results in window a are 1.5; the mean of window b is 4, so all results in window b are 4.

An even more complex example is avg(key2) over(partition by key1 order by key2). The data is grouped by key1, the rows in each group are sorted by key2, and for each row AVG is computed over the data from the first row of the window to the current row, so each row in a group may have a different result. As shown in out2 in Figure 2-6, the first row of window a is 1, so its mean is 1; the second row of window a is 2, and its mean is the mean of the first and second rows, which is 1.5. Therefore, the result of the first row is 1, and that of the second row is 1.5. This contrasts with out1, where all results within a window are the same.

The preceding example is still not the most complex. In the first two examples, all the data in each group is aggregated; after ORDER BY is added, the data from the first row to the current row is aggregated. Is there a way to aggregate only a few rows near the current row, or to specify more flexibly which rows in the window participate in the aggregation? The answer is yes. A window function can specify the rows before and after the current row that participate in the aggregation. For example, avg(key2) over(partition by key1 order by key2 range between unbounded preceding and current row) means that the data from the first row to the current row participates in the aggregation, and rows between 1 preceding and 2 following means that the previous row, the current row, and the next two rows are aggregated and the result is written to the current row. (ROWS counts physical rows, whereas RANGE compares values of the ORDER BY column.) The rows that participate in the aggregation are called a frame, and the data within a frame is aggregated to produce one result.
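The window variants above can be reproduced in SQLite, which supports window functions from version 3.25 onward. The data is hypothetical, chosen so that the averages agree with the values described above (window a holds 1 and 2, window b holds 3, 4, and 5). The last column uses the ROWS BETWEEN 1 PRECEDING AND 2 FOLLOWING frame.

```python
import sqlite3  # window functions need SQLite 3.25 or later

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE t (key1 TEXT, key2 REAL)")
# Hypothetical rows: window a holds 1, 2; window b holds 3, 4, 5.
conn.executemany("INSERT INTO t VALUES (?, ?)",
                 [("a", 1), ("a", 2), ("b", 3), ("b", 4), ("b", 5)])

result = conn.execute("""
    SELECT key1, key2,
           avg(key2) OVER ()                                 AS out3,
           avg(key2) OVER (PARTITION BY key1)                AS out1,
           avg(key2) OVER (PARTITION BY key1 ORDER BY key2)  AS out2,
           avg(key2) OVER (PARTITION BY key1 ORDER BY key2
               ROWS BETWEEN 1 PRECEDING AND 2 FOLLOWING)     AS frame
    FROM t
    ORDER BY key1, key2
""").fetchall()
for row in result:
    print(row)
```

Five input rows produce five output rows. For the last row of window b, out2 averages 3, 4, and 5 (4.0), while the sliding frame only covers 4 and 5 (4.5).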

Figure 2-6 – Output of Window Functions

In addition to common aggregate functions, some special functions are designed for window operations. For example, rank() sorts the rows in a window and outputs each row's position. Equal values receive the same number, but they still occupy positions: for 100, 102, 102, and 103, the output is 1, 2, 2, and 4. Note that the last number is 4. If you want consecutive numbers without gaps, use the dense_rank function, which outputs 1, 2, 2, and 3 for the same example. row_number outputs the row number. cume_dist outputs the cumulative distribution after sorting: like rank, equal values produce the same result, and the value is (number of rows whose value is less than or equal to the current value)/total, which gives 0.25, 0.75, 0.75, and 1 for the example above. percent_rank outputs (rank()-1)/(total-1). The difference between cume_dist and percent_rank is that percent_rank starts from 0.
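The five ranking functions can be computed by hand for a single window to check the numbers above. The `window_ranks` helper is a hypothetical illustration, written for clarity rather than speed (it is quadratic in the window size); an engine computes these in one pass over the sorted window.

```python
def window_ranks(values):
    """Ranking functions over one window sorted in ascending order.
    Returns (value, row_number, rank, dense_rank, percent_rank, cume_dist)."""
    ordered = sorted(values)
    n = len(ordered)
    result = []
    dense = 0
    for i, v in enumerate(ordered):
        row_number = i + 1
        rank = ordered.index(v) + 1   # 1 + number of rows strictly before v
        if i == 0 or v != ordered[i - 1]:
            dense += 1                # a new distinct value starts here
        percent_rank = (rank - 1) / (n - 1) if n > 1 else 0.0
        cume_dist = sum(1 for x in ordered if x <= v) / n  # rows <= v over total
        result.append((v, row_number, rank, dense, percent_rank, cume_dist))
    return result


for row in window_ranks([100, 102, 102, 103]):
    print(row)
```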

Operators and functions are the same in internal implementation and effect; they differ only in syntax. A function has an explicit name and a parameter list containing zero or more parameters. An operator uses a common symbol, such as +, -, *, or /, to express its meaning and takes one or two operands. A binary operator takes two operands, such as +, which requires a left and a right operand. A unary operator takes one operand, such as -, which negates its operand. The syntax of each operator must be explicitly defined in the grammar file. A function name, however, does not need to be defined in the grammar file, because it is just an ordinary identifier there; whether the function exists is not checked until the semantic analysis stage.

A complete expression consists of one or more functions, operators, and connectors. An expression acts like a conversion function. It inputs zero or multiple fields and outputs one row and one column of results. Common expressions include bool expression, logical expression, comparison expression, function call, and lambda expression.

A comparison expression connects two expressions with a comparison operator (>, >=, <, <=, =, or <>) to compare their values. The two expressions are not necessarily of base types; they may also be complex expressions, such as function calls. Base types include numeric types (such as integer and bigint) and string types (such as varchar and char). In addition to these operators, there is the BETWEEN keyword: key BETWEEN x AND y is equivalent to key >= x AND key <= y, which is a closed interval.

A bool expression is an expression that returns a bool value. Bool expressions are widely used in filter conditions, such as WHERE, HAVING, and ON. Some conversion functions, as well as comparison operators such as > and <, return bool results.

A function can represent a simple expression. To express complex logic, besides nesting functions, you can combine multiple expressions with logical operators into a complex bool expression. A logical expression connects one or two bool expressions with the logical operators AND, OR, and NOT and returns a bool result. AND and OR are binary operators, and NOT is a unary operator.

Lambda expressions (also known as anonymous functions) have no function name; they consist only of a parameter list and an expression to compute. Lambda expressions let users supply custom processing logic and act like an inline UDF. Generally, a lambda expression is passed to a function as a parameter, and the function calls it on each element as it iterates through the data.

A simple lambda expression is x -> x + 1, which accepts a parameter x and returns x + 1.
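The calling pattern, a higher-order function that receives a lambda and applies it to every element, can be sketched in Python. Engines in the Presto/Trino family expose this through array functions such as transform(array, x -> x + 1); the Python names below are illustrative and are not any engine's API.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")
U = TypeVar("U")


def transform(values: List[T], fn: Callable[[T], U]) -> List[U]:
    """Higher-order function: calls the lambda once per array element."""
    return [fn(v) for v in values]


def filter_array(values: List[T], predicate: Callable[[T], bool]) -> List[T]:
    """Keeps the elements for which the lambda returns True."""
    return [v for v in values if predicate(v)]


print(transform([1, 2, 3], lambda x: x + 1))             # x -> x + 1: [2, 3, 4]
print(filter_array([1, 2, 3, 4], lambda x: x % 2 == 0))  # [2, 4]
```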

The WHERE clause is followed by a bool expression, indicating that the result of the bool expression is evaluated for each row of data after data is read from the table. If the expression evaluation result is true, the data of this row will be passed to subsequent operators for further calculation. If the evaluation result is false or in an unknown state, the row data should be discarded and no longer participate in subsequent calculations.
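The "false or unknown" rule matters whenever nulls are involved, because a comparison with NULL evaluates to unknown. The SQLite sketch below uses a hypothetical `student` table in which one row has a NULL gender. That row is dropped both by `gender = 'male'` and by its negation, since NOT(unknown) is still unknown. The last query also confirms that BETWEEN includes both endpoints.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE student (name TEXT, gender TEXT, age INTEGER)")
conn.executemany("INSERT INTO student VALUES (?, ?, ?)", [
    ("Ann", "female", 20), ("Bob", "male", 22), ("Cid", None, 21),
])

# gender = 'male' is true for Bob, false for Ann, and unknown (NULL) for Cid.
truth = conn.execute(
    "SELECT name, gender = 'male' FROM student ORDER BY name").fetchall()

# WHERE keeps only the rows where the predicate is true.
males = conn.execute(
    "SELECT name FROM student WHERE gender = 'male'").fetchall()
# NOT (unknown) is still unknown, so Cid is dropped here as well.
not_males = conn.execute(
    "SELECT name FROM student WHERE NOT (gender = 'male')").fetchall()
# BETWEEN is a closed interval: age >= 20 AND age <= 21.
in_range = conn.execute(
    "SELECT name FROM student WHERE age BETWEEN 20 AND 21 ORDER BY name").fetchall()

print(truth, males, not_males, in_range)
```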

A Bool expression can be simple, such as a=1. It can also be an expression composed of nested multi-layer conversion functions, such as a%10=1. It can also be a logical expression connected by logical operators, such as a AND b. All functions in Bool expressions are conversion functions and cannot be aggregate functions.

The operation of the WHERE clause occurs before aggregation computing. The WHERE clause is important to help reduce the amount of data read and calculation and is often used to speed up calculations. In the optimizer, there are some rules to help push the filter conditions down to the leaf nodes as much as possible. Filter pushdown is a frequently used and effective means of acceleration.

Below is an example of the WHERE clause, which is used to get information about all male students.

SELECT * FROM student where gender='male'

The HAVING clause usually follows the GROUP BY clause. Like the WHERE clause, it contains a bool expression. However, HAVING is applied after GROUP BY aggregation: the HAVING expression is evaluated on the aggregated result of each group, and only the groups for which it evaluates to true are passed to subsequent operators.

The difference between HAVING and WHERE is: 1. WHERE is completed before GROUP BY, and HAVING is performed after GROUP BY. 2. WHERE is applied to each raw data, while HAVING is applied to the group aggregated result of GROUP BY.

In theory, HAVING can also be used when there is no GROUP BY but only a global aggregation. The global aggregation produces a single row, and HAVING determines whether that row meets the condition, such as select avg(latency) as avg_latency from log having avg_latency > 100.

HAVING can even be used without GROUP BY or any aggregate function, when SELECT contains only original columns or the results of conversion functions, but it is meaningless in that case because the HAVING condition can be merged into WHERE. For example, select * from log where latency > 10000000 having status>200 can be written as select * from log where latency > 10000000 and status>200.

In summary, the HAVING clause is generally used together with the GROUP BY statement to filter the results of group aggregation, keeping only the groups that meet specific conditions.

Below is a sample of the HAVING clause, which finds the sites in the access logs whose average latency is greater than 10 seconds, along with their average latencies:

SELECT avg(latency), host from accesslog GROUP BY host HAVING avg(latency) > 10
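The difference between WHERE and HAVING shows up when both are present. In this SQLite sketch, which uses a hypothetical `accesslog` table, WHERE removes host a's status-500 row before grouping, so a's average stays at 5 and HAVING discards it. Without the WHERE clause, that row is averaged in and a passes the HAVING condition.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accesslog (host TEXT, status INTEGER, latency REAL)")
conn.executemany("INSERT INTO accesslog VALUES (?, ?, ?)", [
    ("a", 200, 5), ("a", 500, 30), ("b", 200, 20), ("b", 200, 40), ("c", 200, 8),
])

# WHERE drops raw rows before grouping; HAVING drops whole groups afterwards.
with_where = conn.execute("""
    SELECT host, avg(latency) FROM accesslog
    WHERE status = 200
    GROUP BY host
    HAVING avg(latency) > 10
    ORDER BY host
""").fetchall()

without_where = conn.execute("""
    SELECT host, avg(latency) FROM accesslog
    GROUP BY host
    HAVING avg(latency) > 10
    ORDER BY host
""").fetchall()

print(with_where, without_where)  # [('b', 30.0)] [('a', 17.5), ('b', 30.0)]
```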

The execution of the HAVING clause occurs after GROUP BY and before ORDER BY. Please refer to Figure 2-2 for the sequence.

The ORDER BY clause contains one or more expressions that are used to sort the output. Each expression specifies its sort direction, ascending or descending; the default is ascending. The expressions are evaluated from left to right: if multiple rows have the same value for the expression on the left, the expression to its right is used to order them. For example, order by key1 asc, key2 desc sorts by key1 in ascending order and, among rows with the same key1, sorts by key2 in descending order.
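The left-to-right rule can be sketched with Python's stable sort. Sorting by the least significant key first and the most significant key last reproduces ORDER BY semantics, because a stable sort preserves the existing order of rows that tie on the current key. The `order_by` helper and its `(column_index, descending)` key format are hypothetical.

```python
def order_by(rows, keys):
    """Multi-key sort. keys: (column_index, descending) pairs, most significant first.
    Python's sort is stable, so applying the keys from right to left gives the
    left-to-right ORDER BY semantics."""
    result = list(rows)
    for index, descending in reversed(keys):
        result.sort(key=lambda r: r[index], reverse=descending)
    return result


rows = [("a", 3), ("b", 1), ("a", 5), ("b", 4)]
# ORDER BY key1 ASC, key2 DESC
print(order_by(rows, [(0, False), (1, True)]))  # [('a', 5), ('a', 3), ('b', 4), ('b', 1)]
```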

Below is a sample of the ORDER BY clause where students are sorted by score: Select * from student order by score asc

The LIMIT clause limits the number of rows returned. If the previous operator outputs more rows than the number specified by LIMIT, the excess rows are discarded. Because LIMIT reduces the amount of data passed downstream, it is valuable for optimization. For example, when ORDER BY and LIMIT are combined, the amount of data kept during sorting is significantly reduced. The LIMIT operator is pushed down toward the leaf nodes as far as possible. LIMIT is generally used together with ORDER BY. If LIMIT is used alone, the output is not guaranteed to be in any particular order, and you may get different results each time you execute the query. Use ORDER BY as well to ensure consistent query results.
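Why ORDER BY plus LIMIT is cheaper than a full sort can be seen with a top-N evaluation. In this sketch, Python's `heapq.nlargest` stands in for the optimized operator: it keeps a heap of at most N candidates while scanning the input instead of materializing a fully sorted copy. The scores are hypothetical, and how a given engine implements its top-N operator may differ.

```python
import heapq

# Hypothetical (name, score) rows
scores = [("Ann", 88), ("Bob", 95), ("Cid", 70), ("Dan", 91), ("Eve", 60)]

# ORDER BY score DESC LIMIT 2, evaluated as a top-N: the heap never holds more
# than 2 candidates, so memory stays O(N) however large the input is.
top2 = heapq.nlargest(2, scores, key=lambda r: r[1])
print(top2)  # [('Bob', 95), ('Dan', 91)]
```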

A query sample (SELECT * FROM student limit 100) is used to obtain the information of 100 students.

Generally speaking, LIMIT caps the maximum number of returned rows. In MySQL, LIMIT also supports pagination: LIMIT offset, row_count returns row_count rows starting after the first offset rows. For OLAP engines, supporting pagination is impractical, because each pagination request requires computing the whole SQL query again and then skipping to the requested page, which is costly unless the engine caches the computed results in memory for the next page request. MySQL can support pagination because MySQL queries are generally transactional queries that involve relatively small amounts of data, so the cost of pagination is low.
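The pagination cost is easy to see in code: every page request re-executes the whole sorted query and only the slice changes. SQLite accepts the same MySQL-style LIMIT offset, row_count form, so the sketch below uses it with a hypothetical `student` table; `fetch_page` is a hypothetical helper.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE student (name TEXT, score INTEGER)")
conn.executemany("INSERT INTO student VALUES (?, ?)",
                 [("Ann", 88), ("Bob", 95), ("Cid", 70), ("Dan", 91), ("Eve", 60)])


def fetch_page(page, page_size):
    """Returns one page. Each call re-runs the full sorted query;
    LIMIT offset, row_count is the MySQL-style form, also accepted by SQLite."""
    offset = page * page_size
    return conn.execute(
        "SELECT name FROM student ORDER BY score DESC LIMIT ?, ?",
        (offset, page_size),
    ).fetchall()


for page in range(3):
    print(page, fetch_page(page, 2))
```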

In a layer of SQL query, the data source can be one table or multiple tables. You can perform operations on multiple tables and produce a new table. The operations between tables are the Join operation and Set operation.

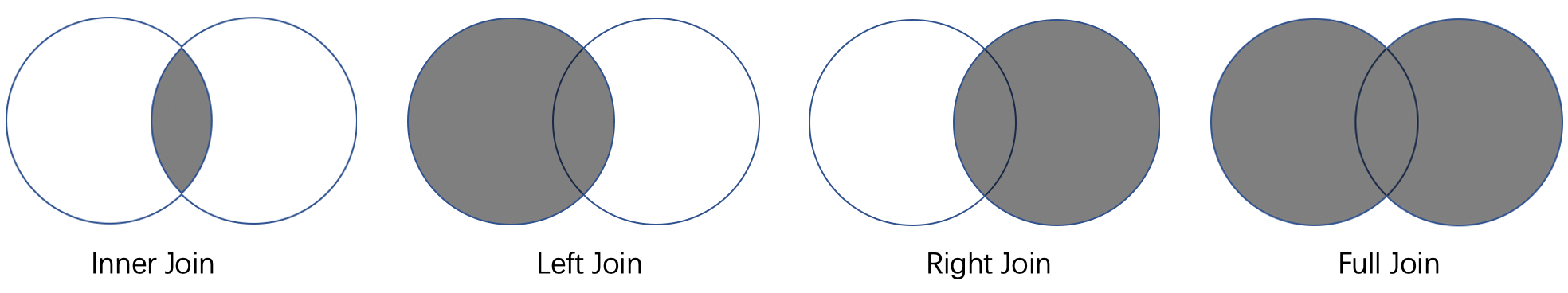

Join combines two tables (left and right) into one table, placing the matching rows from both tables in the same output row according to certain conditions. One row from the left table and one row from the right table are joined into one row, and the columns of both the left and right tables appear in the result. The following JOIN types are available: Inner Join, Left Join, Right Join, Full Join, and Cross Join. Figure 2-7 shows the different Join types. Inner Join produces the intersection of the left and right tables, that is, only the row pairs that meet the join condition, so neither side of an output row is padded with null. Left Join outputs every row of the left table whether or not it matches: matching rows carry the corresponding right-table columns, and the right-table columns of non-matching rows are null. Right Join is the opposite of Left Join. Full Join outputs all rows of both tables: rows that meet the condition are joined, and for rows that do not, the row of one table is output with the other table's columns set to null. It combines the characteristics of Left Join and Right Join. Cross Join has no join condition and outputs the Cartesian product of the two tables.
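The five join types can be summarized in one nested-loop sketch. This is the simplest possible join algorithm, used here only to make the output rules explicit; production engines choose among hash, sort-merge, and other join strategies, as discussed in the optimizer sections. The `join` helper and the tables are hypothetical, and `None` plays the role of SQL NULL.

```python
def join(left, right, on=None, how="inner"):
    """Nested-loop join sketch over lists of tuples.
    how: "inner", "left", "right", "full", or "cross".
    Rows without a match are padded with None (SQL NULL)."""
    left_width = len(left[0]) if left else 0
    right_width = len(right[0]) if right else 0
    out, matched_right = [], set()
    for lrow in left:
        matched = False
        for j, rrow in enumerate(right):
            if how == "cross" or on(lrow, rrow):
                out.append(lrow + rrow)
                matched = True
                matched_right.add(j)
        if not matched and how in ("left", "full"):
            out.append(lrow + (None,) * right_width)
    if how in ("right", "full"):
        for j, rrow in enumerate(right):
            if j not in matched_right:
                out.append((None,) * left_width + rrow)
    return out


def same_id(lrow, rrow):
    """Join condition: student.id = score.id."""
    return lrow[0] == rrow[0]


students = [(1, "Ann"), (2, "Bob"), (3, "Cid")]
scores = [(1, 90), (3, 75), (4, 60)]
for how in ("inner", "left", "right", "full"):
    print(how, join(students, scores, same_id, how))
print("cross", len(join(students, scores, how="cross")))  # 3 x 3 = 9 rows
```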

The Join operation is one of the operators with the highest computational complexity among all SQL operators. The optimization of Join is an important issue in SQL. The performance of Join is affected by factors (such as the execution mode and execution sequence of Join and the size of left and right tables). In the following sections, I will introduce the rule-based and the cost-based optimizers to optimize Join operators.

Figure 2-7 – Different Join Types

Set operations treat rows as the elements of a set and combine multiple tables into one table. The number of columns stays the same, and each row of the original tables is output to the result unchanged. The tables involved in a set operation must have the same number and types of columns. Whereas the Join operation places the columns of the left and right tables side by side in one row according to the join condition, the Set operation combines rows from the tables without changing the content of any row. Set operations include Union, Intersect, and Except, which represent the union, intersection, and difference, respectively.

The theoretical basis of sets is set algebra. By default, there are no repeated elements in sets. You can add the distinct or all keyword after a set operator to indicate that the result is deduplicated and not deduplicated, respectively. The default is the result after deduplication. For example, table1 union table2 will output the results of the two tables after deduplication.
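The deduplication default is visible in a small SQLite example with two hypothetical single-column tables. Table t1 contains a duplicate 2: UNION, INTERSECT, and EXCEPT return deduplicated results, while UNION ALL keeps every row.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE t1 (x INTEGER)")
conn.execute("CREATE TABLE t2 (x INTEGER)")
conn.executemany("INSERT INTO t1 VALUES (?)", [(1,), (2,), (2,), (3,)])
conn.executemany("INSERT INTO t2 VALUES (?)", [(2,), (3,), (4,)])


def column(sql):
    """Runs a query and returns its single column as a list."""
    return [row[0] for row in conn.execute(sql)]


union = column("SELECT x FROM t1 UNION SELECT x FROM t2 ORDER BY x")
union_all = column("SELECT x FROM t1 UNION ALL SELECT x FROM t2 ORDER BY x")
intersect = column("SELECT x FROM t1 INTERSECT SELECT x FROM t2 ORDER BY x")
difference = column("SELECT x FROM t1 EXCEPT SELECT x FROM t2 ORDER BY x")
print(union, union_all, intersect, difference)
# [1, 2, 3, 4] [1, 2, 2, 2, 3, 3, 4] [2, 3] [1]
```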

In the simplest query, the FROM clause specifies a single table from which the data is read. A slightly more complex case reads FROM the join result of multiple tables. A still more complex case is that the source of FROM is not a table at all but the output of another query. The result of an SQL query is itself a new table, and this table can be used as input to another query. This is the elegance of the relational model: computing on one relation produces a second relation, and computing on the second produces a third. In theory, a table or relation can go through any number of rounds of computation, forming a chain. This is a nested query. The results of nested queries can participate in downstream computation, joins, and unions like a normal table.

In SQL, there are two ways to write nested queries. The first and most intuitive is to write a subquery after FROM and enclose it in parentheses so that it forms a self-contained unit. For example:

select abc from (select abc from table)

The part inside the parentheses is a complete subquery.

The second is the WITH syntax.

with temp_table1 as (select abc from table),

temp_table2 as (select abc from temp_table1)

select * from temp_table2

The WITH syntax lets you define multiple temporary views. Each view's query is enclosed in parentheses, and multiple views are separated by commas. The last definition is not followed by a comma. It is followed directly by the main SELECT statement.

The WITH syntax is concise. Each view can be defined on its own line, views are defined separately from where they are used, and a query refers to a view by its name. A view defined once can even be referenced multiple times. With nested queries, by contrast, a temporary table is defined at the place where it is used and must be written out again every time it is used. In addition, the subquery sits inside the outer query, right after the FROM keyword in the middle of the statement. As a result, the first half of the outer query appears before the subquery and the second half appears after it. The semantics of a single query level are split apart by a complex subquery, which makes the SQL hard to understand. Therefore, we recommend using the WITH syntax for nested queries.
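To compare the two forms, here is a minimal sketch (sqlite3, with a hypothetical log table) that runs the same computation as a nested query and as a WITH query and checks that they return the same rows. In the nested form, the outer WHERE and ORDER BY are separated from the outer SELECT by the subquery. In the WITH form, each step reads top to bottom.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical table; column names are illustrative only.
conn.executescript("""
    CREATE TABLE log (project TEXT, latency INTEGER);
    INSERT INTO log VALUES ('a', 10), ('a', 30), ('b', 50), ('b', 70), ('c', 5);
""")

# Nested form: the outer query is split around the parenthesized subquery.
nested_sql = """
    SELECT project, avg_latency
    FROM (SELECT project, AVG(latency) AS avg_latency
          FROM log GROUP BY project) AS t
    WHERE avg_latency > 15
    ORDER BY project
"""

# WITH form: temporary views are defined first, then referenced by name.
with_sql = """
    WITH per_project AS (
        SELECT project, AVG(latency) AS avg_latency
        FROM log GROUP BY project
    ),
    slow AS (
        SELECT project, avg_latency FROM per_project WHERE avg_latency > 15
    )
    SELECT project, avg_latency FROM slow ORDER BY project
"""

nested_rows = conn.execute(nested_sql).fetchall()
with_rows = conn.execute(with_sql).fetchall()
print(nested_rows)               # [('a', 20.0), ('b', 60.0)]
print(with_rows == nested_rows)  # True
```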

A view defined in a WITH query can be referenced multiple times later in the statement. Such views are not materialized views. If a view is referenced multiple times, it is expanded and executed once for each reference.

Besides being referenced as a relation in a FROM clause, a subquery can also be used as an expression. For example, a subquery in the WHERE clause can produce a set, and the condition tests the relation between a value and that set. The difference between a subquery expression and a nested query is that a subquery expression acts as an expression in the plan, while a nested query acts as a view. A subquery can refer to the attributes of the outer query, but the outer query cannot refer to the attributes of the subquery.

A subquery expression can appear in almost any position in an SQL statement other than the FROM clause. For example:

select (select 1) as one from student

select name from student where id in (select id from applied)

There are several ways to express the relation between the attributes of the outer query and the results of the inner subquery.
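The two example queries can be run directly in a minimal sqlite3 sketch. The student and applied tables and their contents are hypothetical. The first subquery is evaluated as a single value for each outer row. The second is used as a set in WHERE.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical tables matching the example queries.
conn.executescript("""
    CREATE TABLE student (id INTEGER, name TEXT);
    CREATE TABLE applied (id INTEGER);
    INSERT INTO student VALUES (1, 'Ann'), (2, 'Bob'), (3, 'Cid');
    INSERT INTO applied VALUES (1), (3);
""")

# Subquery expression in the SELECT list: one value per outer row.
ones = conn.execute("SELECT (SELECT 1) AS one FROM student").fetchall()

# Subquery expression in WHERE: the subquery result is treated as a set.
names = conn.execute(
    "SELECT name FROM student WHERE id IN (SELECT id FROM applied) ORDER BY name"
).fetchall()

print(ones)   # [(1,), (1,), (1,)]
print(names)  # [('Ann',), ('Cid',)]
```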

According to the output results, subqueries are divided into three types:

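According to whether they reference attributes of the outer query, subqueries are divided into correlated subqueries, which reference attributes of the outer query, and uncorrelated subqueries, which do not.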

The result of a scalar subquery has only one value, in one row and one column. This property enables many optimizations, which I will introduce in the section on the optimizer. In theory, the subquery expression must be executed once for each row of the outer query, but correlated and uncorrelated subqueries are processed differently. An uncorrelated subquery does not reference any columns of the outer query, so its result is the same for every outer row. In that case, the subquery is executed only once.

A scalar subquery can be used in CASE expressions, SELECT clauses, WHERE clauses, ORDER BY clauses, and function parameters. Since a scalar subquery only returns one row and one column, it can be used as a single value.

Wherever a scalar subquery is used, exactly one result is required, but the syntax does not force the subquery to return one result. You can write an aggregate subquery or use LIMIT 1 so that only one result is returned, but you can also write a subquery that may return multiple rows. If such a subquery returns multiple rows during execution, an error is reported.

For example, in select count(1) from log where latency >= (select avg(latency) from log), the subquery is an aggregation without GROUP BY, so it always returns exactly one row and the query executes normally. However, suppose a user writes select count(1) from log where latency >= (select latency from log). There are three possibilities: the subquery returns zero rows, one row, or more than one row. In the first case, the subquery outputs null. In the third case, an error is reported because the outer query expects the scalar subquery to provide one row and one column. In other words, a subquery is called a scalar subquery because the outer query requires it to output one row and one column. Its syntax or actual execution does not have to guarantee that on its own.
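This minimal sqlite3 sketch shows the aggregate case and the zero-row case, using a hypothetical log table. SQLite differs from the behavior described above in the multi-row case. Instead of reporting an error, it silently uses the first row, so that case is not demonstrated here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE log (latency INTEGER);
    INSERT INTO log VALUES (10), (20), (30), (40);
""")

# Aggregate subquery without GROUP BY: always exactly one row (avg = 25.0).
above_avg = conn.execute(
    "SELECT count(1) FROM log WHERE latency >= (SELECT avg(latency) FROM log)"
).fetchone()[0]

# A scalar subquery that returns zero rows evaluates to NULL (None in Python).
empty_value = conn.execute(
    "SELECT (SELECT latency FROM log WHERE latency > 1000)"
).fetchone()[0]

# Comparing with that NULL is unknown, so no row passes the filter.
none_match = conn.execute(
    "SELECT count(1) FROM log WHERE latency >= "
    "(SELECT latency FROM log WHERE latency > 1000)"
).fetchone()[0]

print(above_avg, empty_value, none_match)  # 2 None 0
```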

Besides the WHERE clause, a scalar subquery can be used in the SELECT list. For example, select *, (select max(latency) from log) from log appends the maximum latency to every row. If it is written as select *, (select latency from log) from log, an error is reported whenever log contains more than one row, because the subquery then returns multiple rows.

It can also be used as a function argument: select *, abs((select max(latency) from log)) from log. In general, a scalar subquery can be used wherever a single value is needed.
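The SELECT-list and function-argument uses can be tried in a short sqlite3 sketch with a hypothetical log table. The uncorrelated subquery yields the same single value for every outer row.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE log (id INTEGER, latency INTEGER);
    INSERT INTO log VALUES (1, 5), (2, 12), (3, 7);
""")

# Scalar subquery in the SELECT list: the same maximum is attached to every row.
with_max = conn.execute(
    "SELECT id, latency, (SELECT max(latency) FROM log) AS max_latency "
    "FROM log ORDER BY id"
).fetchall()

# Scalar subquery used as a function argument.
abs_max = conn.execute(
    "SELECT abs((SELECT max(latency) FROM log)) FROM log"
).fetchall()

print(with_max)  # [(1, 5, 12), (2, 12, 12), (3, 7, 12)]
print(abs_max)   # [(12,), (12,), (12,)]
```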

IN and NOT IN determine whether an attribute of the outer query belongs to the set of results returned by the inner subquery. For example:

select * from course where student_id in (select student_id from student where apply_year='2018')

Besides subqueries, IN can also take a list of constants. For example:

select * from course where student_id in (1,2,3)

The EXISTS subquery checks whether the subquery produces any output. If the subquery returns at least one row, the result is true. Otherwise, it is false. EXISTS is usually used with correlated subqueries, which means the EXISTS result is evaluated separately for each row of the outer query. With an uncorrelated subquery, the EXISTS result is the same for every row of the outer query, which is rarely useful.
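Both IN forms can be compared in a minimal sqlite3 sketch. The student and course tables and their rows are hypothetical, and the course name column is called title here to keep it distinct from the table name.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical tables; "title" is an illustrative column name.
conn.executescript("""
    CREATE TABLE student (student_id INTEGER, apply_year TEXT);
    CREATE TABLE course (student_id INTEGER, title TEXT);
    INSERT INTO student VALUES (1, '2018'), (2, '2019'), (3, '2018');
    INSERT INTO course VALUES (1, 'math'), (2, 'art'), (3, 'physics'), (4, 'music');
""")

# IN with a subquery: membership in the set of student_ids applied in 2018.
in_subquery = conn.execute(
    "SELECT title FROM course WHERE student_id IN "
    "(SELECT student_id FROM student WHERE apply_year = '2018') ORDER BY title"
).fetchall()

# IN with a constant list.
in_list = conn.execute(
    "SELECT title FROM course WHERE student_id IN (1, 2, 3) ORDER BY title"
).fetchall()

print(in_subquery)  # [('math',), ('physics',)]
print(in_list)      # [('art',), ('math',), ('physics',)]
```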

For example, SELECT name FROM websites WHERE EXISTS (select count from access_log WHERE websites.id = access_log.site_id and count > 100) outputs the names of the websites that have at least one access_log row whose count is greater than 100.

A NOT EXISTS subquery has the opposite meaning. It is true when the result of the subquery is an empty set.

EXISTS queries can also be expressed with the IN syntax, which determines whether the value of a column in each row appears in the output of a subquery. For example, the preceding logic can be written as SELECT name FROM websites WHERE id in (SELECT site_id from access_log where count > 100). In this IN query, the subquery is uncorrelated, so it only needs to be executed once, which can make the query efficient.
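A minimal sqlite3 sketch can confirm that the correlated EXISTS query and the uncorrelated IN query return the same websites, and that NOT EXISTS returns the rest. The table contents are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE websites (id INTEGER, name TEXT);
    CREATE TABLE access_log (site_id INTEGER, count INTEGER);
    INSERT INTO websites VALUES (1, 'alpha'), (2, 'beta'), (3, 'gamma');
    INSERT INTO access_log VALUES (1, 150), (1, 20), (2, 80), (3, 300);
""")

# Correlated EXISTS: evaluated per outer row, refers to websites.id.
exists_rows = conn.execute("""
    SELECT name FROM websites
    WHERE EXISTS (SELECT count FROM access_log
                  WHERE websites.id = access_log.site_id AND count > 100)
    ORDER BY name
""").fetchall()

# Equivalent uncorrelated IN: the subquery can be evaluated once.
in_rows = conn.execute("""
    SELECT name FROM websites
    WHERE id IN (SELECT site_id FROM access_log WHERE count > 100)
    ORDER BY name
""").fetchall()

# NOT EXISTS: true when the correlated subquery returns an empty set.
not_exists_rows = conn.execute("""
    SELECT name FROM websites
    WHERE NOT EXISTS (SELECT count FROM access_log
                      WHERE websites.id = access_log.site_id AND count > 100)
    ORDER BY name
""").fetchall()

print(exists_rows)      # [('alpha',), ('gamma',)]
print(in_rows)          # [('alpha',), ('gamma',)]
print(not_exists_rows)  # [('beta',)]
```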

The outer query can also compare a value with the results of a subquery using the comparison operators <, >, <=, >=, =, and <>, combined with one of the modifiers SOME, ANY, or ALL. The value from each row of the outer table is compared with each row of the subquery result, and each comparison returns true or false. With SOME or ANY, the condition holds if at least one comparison is true. With ALL, it holds only if all comparisons are true. =ANY has the same meaning as IN, and <>ALL has the same meaning as NOT IN.

For example, in SELECT Num FROM Test2 WHERE Num > ANY (SELECT Num FROM Test1), a row of Test2 meets the condition, and is output to the downstream operator, if its Num is greater than at least one Num in Test1.
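SQLite does not support ANY or ALL, so this is a plain-Python sketch of the quantified-comparison semantics. It ignores NULLs, and the helper names compare_any and compare_all are hypothetical. It also checks the stated equivalences =ANY ≡ IN and <>ALL ≡ NOT IN on non-NULL data.

```python
import operator

# Hypothetical helpers for "value op ANY/ALL (subquery)"; NULLs are not modeled.
OPS = {"<": operator.lt, ">": operator.gt, "<=": operator.le,
       ">=": operator.ge, "=": operator.eq, "<>": operator.ne}

def compare_any(value, op, subquery_rows):
    """True if at least one comparison is true (false for an empty subquery)."""
    return any(OPS[op](value, row) for row in subquery_rows)

def compare_all(value, op, subquery_rows):
    """True if every comparison is true (vacuously true for an empty subquery)."""
    return all(OPS[op](value, row) for row in subquery_rows)

test1 = [3, 7, 10]
test2 = [1, 4, 12]

# SELECT Num FROM Test2 WHERE Num > ANY (SELECT Num FROM Test1)
gt_any = [n for n in test2 if compare_any(n, ">", test1)]
# SELECT Num FROM Test2 WHERE Num > ALL (SELECT Num FROM Test1)
gt_all = [n for n in test2 if compare_all(n, ">", test1)]

print(gt_any)  # [4, 12]
print(gt_all)  # [12]

# =ANY behaves like IN, and <>ALL like NOT IN, for non-NULL data.
assert all(compare_any(n, "=", test1) == (n in test1) for n in range(15))
assert all(compare_all(n, "<>", test1) == (n not in test1) for n in range(15))
```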

Quantified subqueries will be introduced in depth in the Optimizer chapter.

Similar to EXISTS, the UNIQUE keyword tests the result of a subquery. It checks whether the rows returned by the subquery contain duplicates. If they do, UNIQUE returns false. Otherwise, it returns true.

For example:

select * from log where unique (select projectName from log)

Generally speaking, if such a subquery is uncorrelated, the result is the same for every row, which is meaningless. Therefore, these subqueries are usually correlated, so the result differs from row to row. In general, correlated subqueries are converted into joins during the plan optimization phase.
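SQLite does not implement the UNIQUE predicate. This sketch therefore emulates a correlated UNIQUE test with NOT EXISTS over a GROUP BY ... HAVING count(*) > 1 subquery. Following the SQL standard, rows containing NULL are not treated as duplicates, which is why the IS NOT NULL filter is there. The log table and its requestId column are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical log table: which projects have no duplicate requestId values?
conn.executescript("""
    CREATE TABLE log (projectName TEXT, requestId INTEGER);
    INSERT INTO log VALUES ('p1', 1), ('p1', 2),
                           ('p2', 5), ('p2', 5),
                           ('p3', NULL), ('p3', NULL);
""")

# Emulates: WHERE UNIQUE (SELECT requestId FROM log inner_log
#                         WHERE inner_log.projectName = outer_log.projectName)
unique_projects = conn.execute("""
    SELECT DISTINCT projectName FROM log AS outer_log
    WHERE NOT EXISTS (
        SELECT requestId FROM log AS inner_log
        WHERE inner_log.projectName = outer_log.projectName
          AND requestId IS NOT NULL          -- NULL rows never count as duplicates
        GROUP BY requestId
        HAVING count(*) > 1                  -- a duplicated value makes UNIQUE false
    )
    ORDER BY projectName
""").fetchall()

print(unique_projects)  # [('p1',), ('p3',)]
```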

A subquery is a syntactic form of expression. The physical execution plan has no subquery execution mode, so subqueries generally need to be converted into equivalent relational algebra expressions.

In addition to the common joins (left, right, full, and cross), there are two special forms of join: semijoin and antijoin. A semijoin is used for IN or EXISTS queries. A row of the left table is output if the condition is met, and each row is output only once. An antijoin is used for NOT IN or NOT EXISTS queries. A row of the left table is output if the condition is not met, and again each row is output only once. Although semijoin and antijoin can be expressed in other, equivalent forms, these two specialized operators achieve better execution performance.
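The output semantics can be illustrated with a nested-loop sketch in plain Python. The semijoin and antijoin function names and the data are hypothetical, and real engines typically use hash-based implementations. The sketch follows EXISTS / NOT EXISTS semantics. NOT IN behaves differently when the subquery returns NULL, so that case is not modeled. The comparison with an inner join shows why "each row is output only once" matters.

```python
def semijoin(left, right, cond):
    """Emit each left row once if at least one right row satisfies cond."""
    return [l for l in left if any(cond(l, r) for r in right)]

def antijoin(left, right, cond):
    """Emit each left row once if no right row satisfies cond."""
    return [l for l in left if not any(cond(l, r) for r in right)]

websites = [(1, "alpha"), (2, "beta"), (3, "gamma")]
access_log = [(1, 150), (1, 120), (2, 80), (3, 300)]  # (site_id, count)

def match(website, log_row):
    """Join condition: same site and count > 100."""
    return website[0] == log_row[0] and log_row[1] > 100

semi = semijoin(websites, access_log, match)
anti = antijoin(websites, access_log, match)
# An inner join emits alpha twice because two log rows match it.
inner = [w for w in websites for a in access_log if match(w, a)]

print(semi)        # [(1, 'alpha'), (3, 'gamma')]
print(anti)        # [(2, 'beta')]
print(len(inner))  # 3
```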

Processing normal data is simple, but there are often invalid cases to handle, and null is a typical one. An invalid value, or a value whose exact value is unknown, is represented by null.

How aggregate functions handle null values has been described above. This section mainly considers how conversion functions handle null values.

If a conversion function or expression returns a non-boolean value, as arithmetic operations do, and an input is null, the output is also null.

If a conversion function or expression returns a boolean value, as comparison expressions do, the normal output is true or false. If an input is null, so that the result cannot be determined to be true or false, a third state is needed: unknown. Why not simply output null? Because an unknown state carries more information than null. In subsequent calculations, the result can sometimes still be inferred even when an unknown state is involved.

For logical expressions (such as AND, OR, and NOT), when an unknown state occurs, we can sometimes obtain the final result using the idea of short-circuit evaluation, without needing to know whether the unknown state is true or false.

AND: true AND unknown depends on the unknown value, so the result is unknown. false AND unknown is false, regardless of whether the unknown state is true or false.

OR: true OR unknown is true, regardless of whether the unknown state is true or false. false OR unknown depends on the unknown value, so the result is still unknown.

NOT: NOT unknown is still unknown.
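These rules can be checked with a minimal sqlite3 sketch. SQLite represents unknown as NULL (None in Python) and booleans as 1 and 0. The helper evaluate is hypothetical and interpolates trusted literal expressions only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

def evaluate(expr):
    """Evaluate a trusted scalar SQL expression; NULL comes back as None."""
    return conn.execute(f"SELECT {expr}").fetchone()[0]

print(evaluate("NULL + 1"))    # None: arithmetic with NULL is NULL
print(evaluate("NULL = 1"))    # None: comparison with NULL is unknown
print(evaluate("1 AND NULL"))  # None: true AND unknown -> unknown
print(evaluate("0 AND NULL"))  # 0:    false AND unknown -> false
print(evaluate("1 OR NULL"))   # 1:    true OR unknown -> true
print(evaluate("0 OR NULL"))   # None: false OR unknown -> unknown
print(evaluate("NOT (NULL)"))  # None: NOT unknown -> unknown
```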

IS NULL and IS NOT NULL: IS NULL determines whether an expression is null, and IS NOT NULL is the opposite. The SQL standard also defines IS UNKNOWN and IS NOT UNKNOWN, but not all SQL engines support these two forms.

In grouping operations such as GROUP BY, DISTINCT, and UNION, if the specified column contains null, all rows with null in that column form a single group. This differs from the behavior of expressions. For example, null = null evaluates to unknown, not true.
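This sqlite3 sketch with a hypothetical one-column table shows the contrast. GROUP BY and DISTINCT put all NULLs into one group, while the expression NULL = NULL is unknown and NULL IS NULL is true.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE t (k TEXT);
    INSERT INTO t VALUES ('a'), (NULL), ('a'), (NULL), (NULL);
""")

# GROUP BY collects every NULL key into a single group.
groups = dict(conn.execute("SELECT k, count(*) FROM t GROUP BY k").fetchall())

# DISTINCT likewise keeps a single NULL.
distinct_keys = {row[0] for row in conn.execute("SELECT DISTINCT k FROM t")}

# As an expression, NULL = NULL is unknown (None); IS NULL is true (1).
null_eq = conn.execute("SELECT NULL = NULL").fetchone()[0]
null_is = conn.execute("SELECT NULL IS NULL").fetchone()[0]

print(groups)           # {'a': 2, None: 3}
print(distinct_keys)    # {'a', None} (set order may vary)
print(null_eq, null_is) # None 1
```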

In SQL, new data is generated by expressions or functions. As mentioned above, functions are divided into two types: scalar conversion functions and aggregate functions. A scalar function converts one row of input into one row and one column of output. An aggregate function converts multiple rows of input into one row and one column of output. To turn one row of input into multiple columns of output, we can use multiple expressions. What if we need to turn one row into multiple rows? In this scenario, the UNNEST function is required.

The UNNEST function can convert one row of data into multiple rows of data. For example, if the input data is an array type, each element in the array can be output as a row of results. The syntax is listed below:

SELECT element FROM (VALUES (ARRAY[1,2,3])) AS t(arr) CROSS JOIN UNNEST(arr) AS u(element)

There are three rows of output results, which are 1, 2, and 3, respectively.
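The row-multiplying behavior of CROSS JOIN UNNEST can be sketched in plain Python. The unnest generator and the sample rows are hypothetical. Each array element becomes its own output row, and the other columns are repeated. As with CROSS JOIN UNNEST, a row whose array is empty produces no output.

```python
from typing import Iterable, Iterator, Sequence, Tuple

def unnest(rows: Iterable[Tuple[str, Sequence[int]]]) -> Iterator[Tuple[str, int]]:
    """Emit one (key, element) row per array element, repeating the key column."""
    for key, arr in rows:
        for element in arr:
            yield key, element

rows = [("r1", [1, 2, 3]), ("r2", []), ("r3", [4])]
print(list(unnest(rows)))  # [('r1', 1), ('r1', 2), ('r1', 3), ('r3', 4)]
```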

In addition to SELECT, SQL has other statements, such as DML statements (for example, INSERT) and DDL statements (for example, CREATE).

This article describes some of the core syntax rules of SQL queries to help readers understand what kinds of computation SQL can perform.
