By Li Feifei, VP of Alibaba Group, President and Senior Fellow of Database, Alibaba Cloud Intelligence.

As China and the rest of the world face the horror of the coronavirus pandemic, Alibaba's CIO hopes to stand with the CIOs and CTOs of other major enterprises, as well as technical experts and developers from across the industry, to create technological solutions that help companies combat the uncertainties and dangers of the current crisis.

Li Feifei, Chief Database Scientist at Alibaba DAMO Academy, Vice President of Alibaba Group, and a distinguished ACM scientist, gave the first live broadcast in our public service training series on technologies for fighting epidemics. During the training, Li presented Alibaba Cloud's enterprise-level cloud-native distributed database system to the audience. The following content is based on his live video presentation.

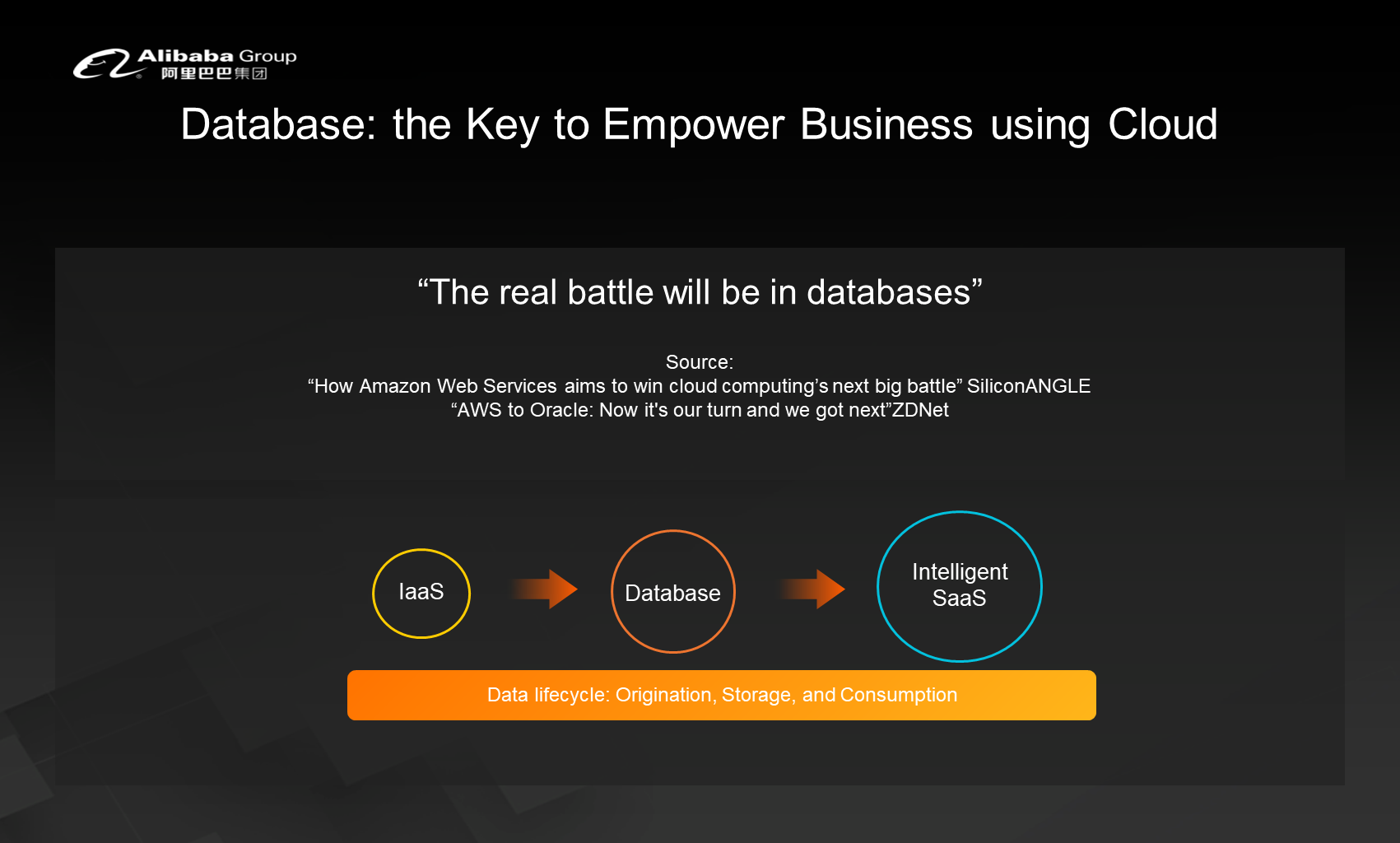

Today, cloud migration has become a prominent trend in the industry, and databases are a key component of that migration. The cloud started as an Infrastructure-as-a-Service (IaaS) offering. With the rise of various intelligent applications, databases have become an important element in the transition from IaaS to intelligent applications.

As you probably already know, databases can be divided into the following categories.

The most typical example of a database is a conventional relational OLTP database, which is a structured database that is mainly used for transaction processing. The common applications of this type of database at Alibaba include bank transfer accounting, Taobao orders, and order and commodity inventory management. The main challenge faced by this type of database is ensuring data correctness and consistency in scenarios with high concurrency that require high availability and performance.

The second database type is NoSQL databases, which are mainly used to store and process non-structured or semi-structured data, such as documents, graphs, time series, spatiotemporal, and key-value data. These databases sacrifice strong consistency in exchange for improved horizontal system scalability and throughput.

Another example is OLAP databases, which are suited to massive data volumes, complex data types, and complex analysis conditions. These databases support deep intelligent analysis. The main challenges faced by these databases are high performance, deep analysis, linkage with transaction processing databases, and linkage with NoSQL databases.

In addition to the core data engine, there are also peripheral database services and management tools, such as data transmission, database backup, and data management services and tools.

Last but not least, there is the database control and management platform, which can be deployed in a private cloud, proprietary cloud, hybrid cloud, or one's own data center. A database management system is always necessary to manage the creation and deprecation of database instances and their resource consumption. The platform makes these tasks simpler for database administrators and database developers.

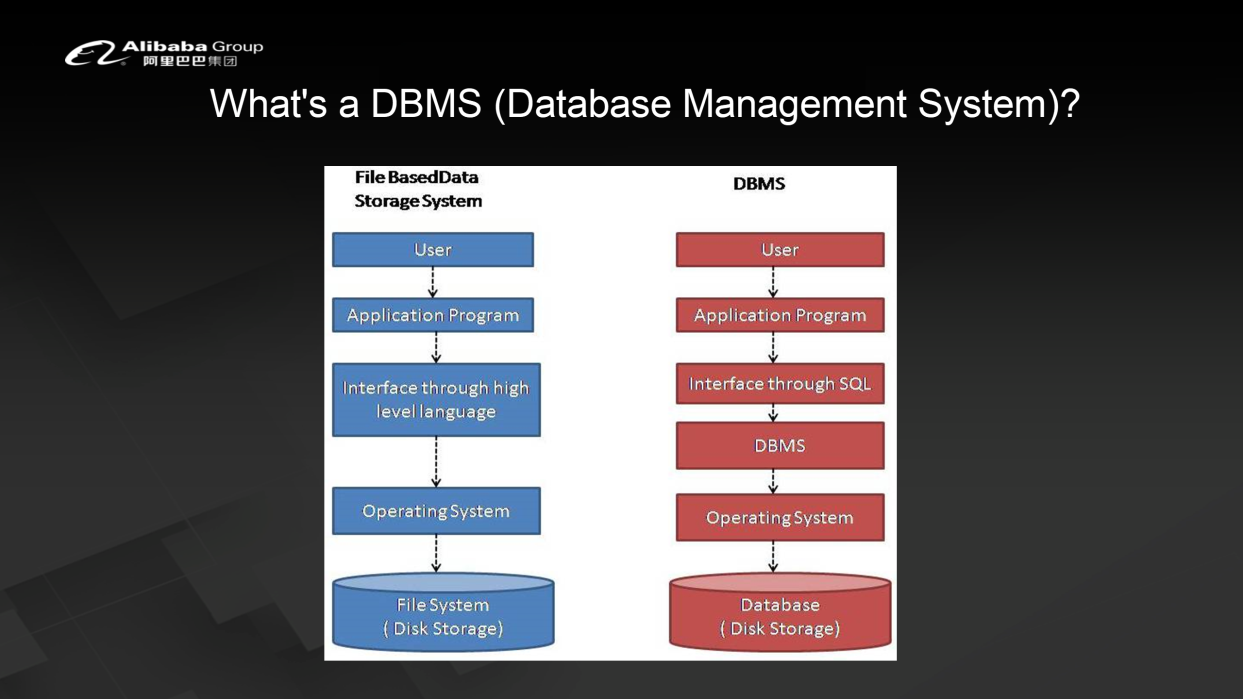

The above figure shows the differences between file-based data storage systems and database management systems. The core of a database management system is located between the operating system and the SQL interface. Simply put, a system is set up between the storage system and upper-layer abstraction to manage the data that is useful for the business. Otherwise, you need to use advanced programming languages to develop applications that interact with the operating system and manage the data. The database abstracts the management, storage, and consumption of data so that you can focus on the business logic side of things instead of having to repeatedly write the required logic in the application. The data management logic is implemented by the database system, and the data access interfaces are abstracted by using SQL.

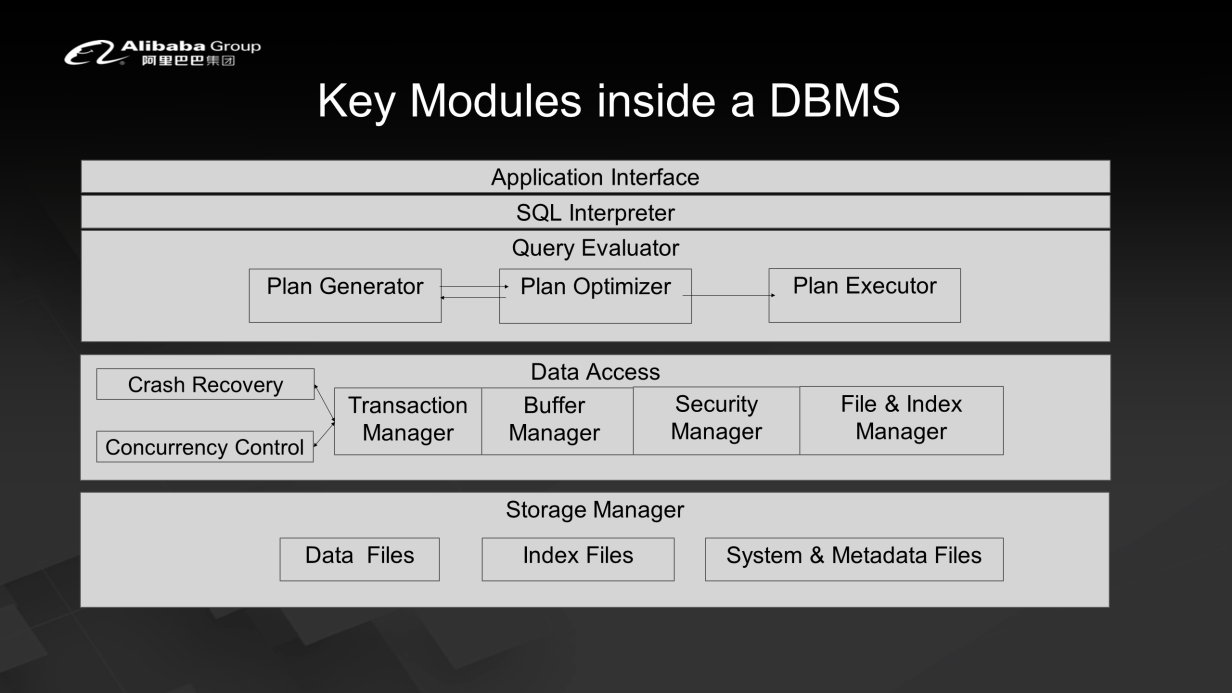

The core modules of database management systems include application interfaces, SQL interfaces, query execution engines, data access modules, and storage engines. The query execution engine can be further divided into a plan generator, plan optimizer, and plan executor. The data access module can be divided into sub-modules such as transaction processing, memory management, security management, and file and index management. Transaction processing is the core module and includes the crash recovery and concurrency control functions. The underlying storage engine hosts data files, index files, and system and metadata files.

The process of database query, analysis, and processing is as follows. First, the query task is submitted through an SQL statement or the DataFrame API of the big data system. Then, it is processed by the parser, which produces a catalog and a logical execution plan; different execution methods can be used at this stage. The logical execution plan is then optimized and turned into a physical execution plan. Next, with the help of system statistics, such as index management and memory management statistics, the system generates an optimized physical execution plan. Finally, it runs the plan and produces the final result or a resilient distributed dataset (RDD).
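
To make the effect of the planning stage concrete, here is a minimal sketch that asks a database which physical plan it chose before and after an index exists. It uses Python's built-in `sqlite3` module as a stand-in; SQLite is not the system described in the talk, and the `orders` table and `plan_for` helper are illustrative assumptions.

```python
import sqlite3

def plan_for(conn, sql, params=()):
    """Return the plan steps SQLite reports for a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # The last column of each row is the human-readable description of the step.
    return [row[-1] for row in rows]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders (customer, amount) VALUES (?, ?)",
    [(f"c{i % 50}", float(i)) for i in range(1000)],
)

query = "SELECT amount FROM orders WHERE customer = ?"

# Without an index, the only available plan is a full table scan.
plan_without_index = plan_for(conn, query, ("c7",))

# Once an index exists, the planner can pick an index search instead.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer)")
plan_with_index = plan_for(conn, query, ("c7",))

print(plan_without_index)
print(plan_with_index)
```

The SQL text is identical in both runs. Only the information available to the planner changes, which is the point of separating the logical plan from the physical plan.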

In short, the architecture of the database management system is designed for persistent data storage in the data page format. These data blocks are held in memory during the query access process. The system has memory pools, each of which can load a page. However, the size of the memory pool is limited. Therefore, if the volume of stored data is large, optimization is required. Data access optimization is also involved. This is generally done through indexes, mainly hash indexes and B-tree indexes.
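
The limited memory pool can be illustrated with a toy buffer pool. The sketch below assumes a least-recently-used (LRU) replacement policy, which the talk does not specify; the `BufferPool` class and the dictionary standing in for the disk are hypothetical.

```python
from collections import OrderedDict

class BufferPool:
    """Toy buffer pool: caches at most `capacity` pages and evicts the least recently used."""

    def __init__(self, capacity, read_page_from_disk):
        self.capacity = capacity
        self.read_page_from_disk = read_page_from_disk
        self.frames = OrderedDict()  # page_id -> page contents, oldest first
        self.hits = 0
        self.misses = 0

    def get_page(self, page_id):
        if page_id in self.frames:
            self.hits += 1
            self.frames.move_to_end(page_id)  # mark as most recently used
            return self.frames[page_id]
        self.misses += 1
        page = self.read_page_from_disk(page_id)
        self.frames[page_id] = page
        if len(self.frames) > self.capacity:
            self.frames.popitem(last=False)  # evict the least recently used page
        return page

# A dictionary stands in for the data files on disk.
disk = {page_id: f"page-{page_id}" for page_id in range(10)}
pool = BufferPool(capacity=3, read_page_from_disk=disk.__getitem__)

for page_id in [0, 1, 2, 0, 3, 0, 1]:
    pool.get_page(page_id)

print(pool.hits, pool.misses, list(pool.frames))
```

Every miss costs a disk read, so the access pattern and the replacement policy together determine how well a small pool serves a large data set.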

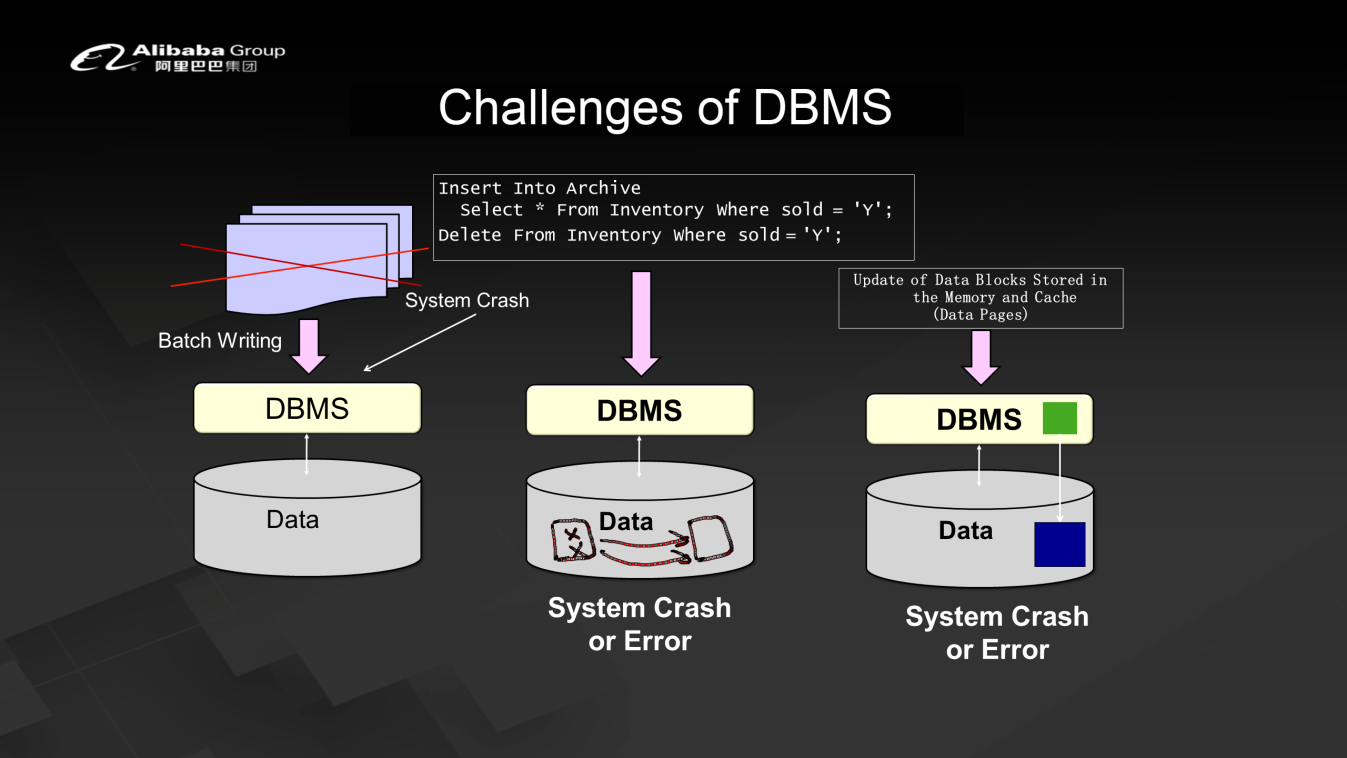

The most critical challenges that face database management systems are write-write conflicts and data consistency during concurrent access. In addition, there are conflicts between reads and writes. For example, if the system goes down when performing batch writing in the database, you need to consider how to implement automatic system recovery.

To solve the preceding problems, database management systems introduced the concept of transactions. Simply put, a transaction is a series of actions that can be considered as a whole. From the user's perspective, transactions run in an isolated manner. That is, one user's transaction has nothing to do with another user's. If an exception occurs in the system, either all or none of the operations within a transaction are executed. This lays the foundation for the core features of transactions, which are atomicity, consistency, isolation, and durability.
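
The all-or-nothing behavior can be shown with a small sketch. It uses Python's built-in `sqlite3` module as a stand-in for a transactional database; the `accounts` table and the `transfer` helper are illustrative assumptions, not part of any Alibaba product.

```python
import sqlite3

def transfer(conn, src, dst, amount):
    """Move `amount` between two accounts; both updates apply or neither does."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes the block
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src)
            )
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst)
            )
            (balance,) = conn.execute(
                "SELECT balance FROM accounts WHERE name = ?", (src,)
            ).fetchone()
            if balance < 0:
                raise ValueError("insufficient funds")
        return True
    except ValueError:
        return False

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (name TEXT PRIMARY KEY, balance INTEGER)")
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 20)])
conn.commit()

ok = transfer(conn, "alice", "bob", 30)       # both updates are committed
failed = transfer(conn, "alice", "bob", 500)  # both updates are rolled back

balances = dict(conn.execute("SELECT name, balance FROM accounts"))
print(ok, failed, balances)
```

The second transfer had already credited the destination account when the check failed, yet no trace of it remains after the rollback. That is atomicity from the application's point of view.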

Database management systems are closely related to big data systems and face many challenges in analytical database systems. For example, predicting the user return rate requires complex query and analysis procedures and complex machine learning models.

Traditional architectures rely on high-end hardware. Each database management system has a small number of servers, and the architecture is relatively simple. However, such systems cannot cope with the rapid growth of new businesses. The core logic of cloud computing vendors is to provide pooled resources through virtualization technology. Cloud-native databases adopt a distributed database architecture to enable large-scale expansion. Each database management system spans multiple servers and virtual machines, posing new challenges to system management. Among them, the main challenge is how to achieve elasticity, high availability, and pay-as-you-go billing so that resources can be efficiently used.

Cloud-native databases require that the control platform be able to manage multiple instances in a unified manner. The system is fully graphical and does not rely on the command line. In addition, the system can be installed in minutes, deployed in clusters, automatically backed up, restored to a specific point in time, and dynamically scaled up or down, and it supports performance monitoring and optimization.

From 2005 to 2009, Alibaba had the largest Oracle RAC cluster in the Asia-Pacific region. From 2010 to 2015, we used open source databases and database sharding technologies to reduce our dependence on commercial databases. Since 2016, Alibaba has worked hard to develop our own databases, including PolarDB and OceanBase for transactional processing, and AnalyticDB for analytical processing.

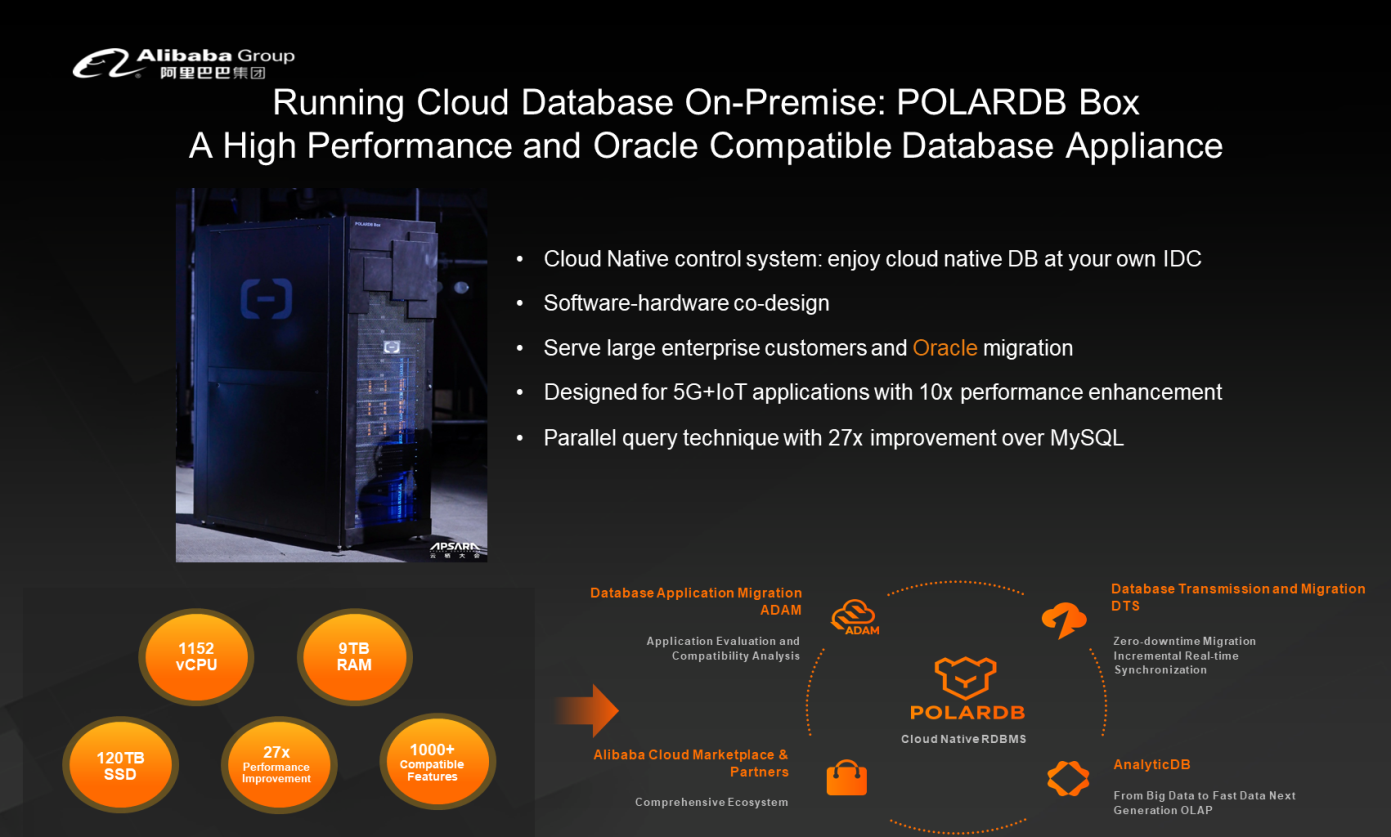

With the development of cloud computing, our database deployment methods have also undergone a significant change. Conventionally, databases were deployed in the customer's data center and bound to the customer's servers. However, in a cloud environment, databases can be deployed in multiple forms, such as a public cloud, proprietary or private cloud, hybrid cloud, an independently deployed integrated software and hardware solution, or pure software output.

Multi-model is another trend in the database management system field. Database management systems have evolved from relational OLTP databases to semi-structured data, to analytic OLAP databases and other non-structured databases, and then to the current multi-model database format. Multi-model databases have two main dimensions. The south dimension indicates that databases can support multiple storage methods, and the north dimension indicates that they can implement multiple query interfaces and standards, all integrated within one database system.

We want to integrate machine learning and AI with the database kernel to give databases greater automation and intelligence, so that they can achieve automatic perception, decision-making, recovery, and optimization.

In the future, next-generation enterprise-level databases must adopt designs that combine software and hardware instead of separating them, because only by integrating software and hardware can we take full advantage of the system's capabilities.

One of the key differences between transaction processing and analytical processing is the choice between row storage and columnar storage. Row storage stores data by row, allowing transaction processing systems to efficiently access a complete data entry to process updates. The downside is that queries touching only a few attributes still read the rest of each row as redundant data. By comparison, with columnar storage, analytical processing systems only need to read the necessary columns, but they require multiple access requests when updating different attributes of a data entry.

Hybrid transaction/analytical processing (HTAP) seeks to combine row store and column store to implement hybrid row-column storage in a single system. However, this architecture must overcome many challenges, the most important of which is data consistency.

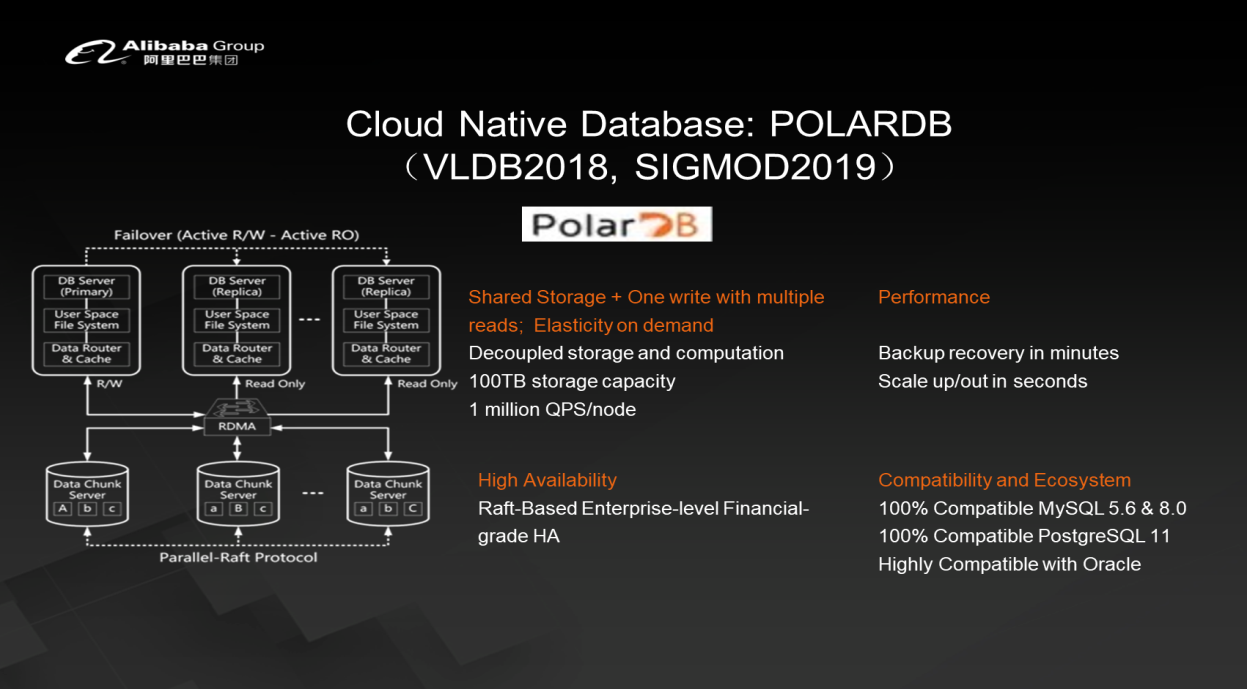

The traditional database architecture is a single-node architecture. It is easy to deploy and develop, but it is not conducive to elastic scaling. The cloud-native architecture implements distributed shared storage based on networks such as remote direct memory access (RDMA). This allows upper-layer applications to view storage as a single component and separates storage from computing at upper layers. As such, users can scale storage and computing capabilities separately for extreme elasticity. This architecture also lends itself well to management in cloud-native environments. Databases such as Alibaba Cloud PolarDB are based on such an architecture.

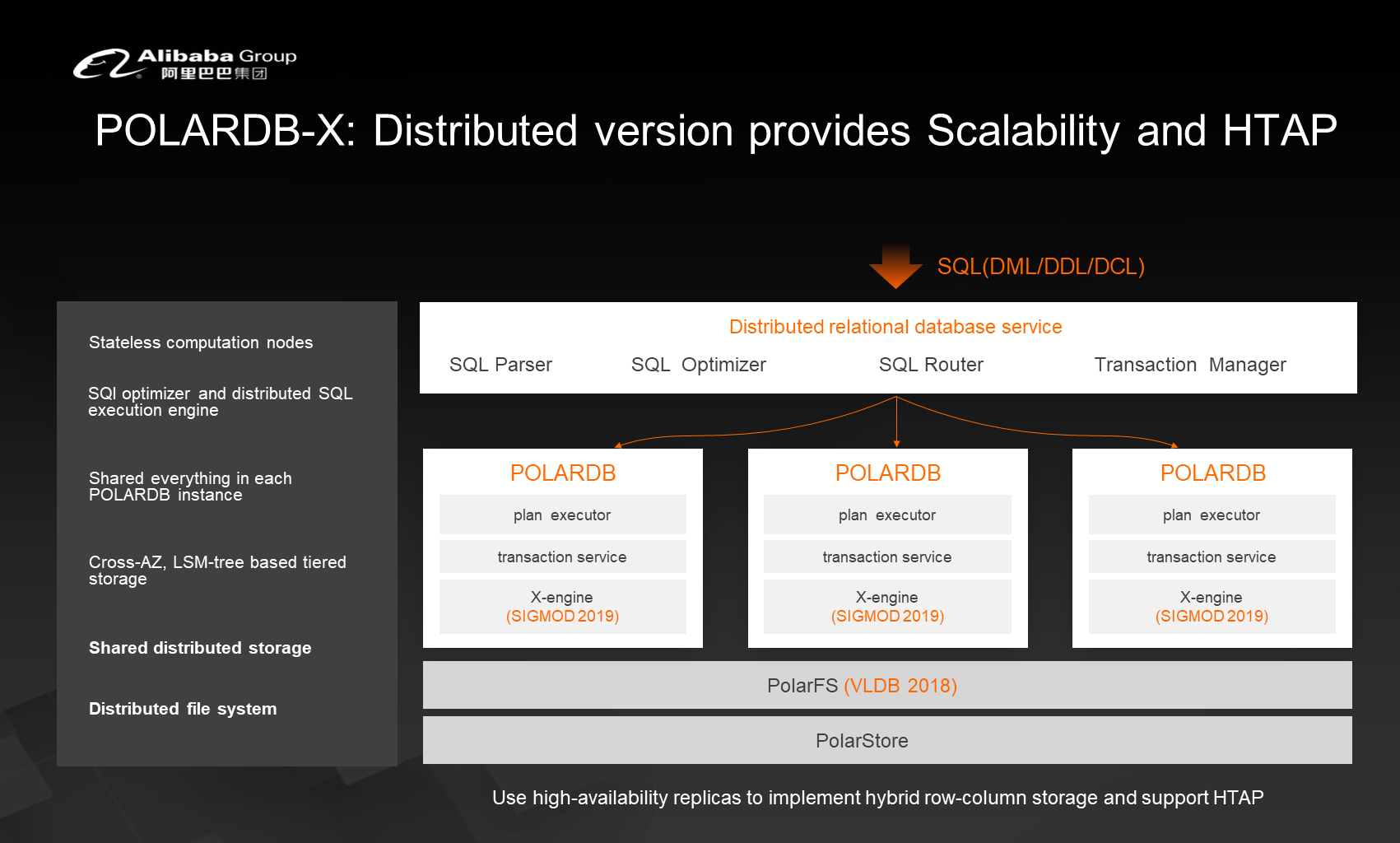

Distributed architectures are another type of database architecture. This architecture performs database sharding and features powerful horizontal scalability. When data volume and concurrency increase, you simply need to add new nodes. The drawback of this architecture is that if you do not want to modify the upper-layer business logic, you must be able to handle distributed transactions and distributed queries. Typical examples of such an architecture include Ant Financial's OceanBase, Alibaba's PolarDB-X and AnalyticDB, and Tencent's TDSQL.
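
As a rough illustration of sharding, the sketch below routes each key to a shard by hashing it. The `shard_for` function and `ShardedStore` class are hypothetical, and hash-modulo placement is just one simple option; it is not presented here as the routing scheme of any of the products named above.

```python
import hashlib

def shard_for(key, shard_count):
    """Map a key to a shard number using a stable hash of the key."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count

class ShardedStore:
    """Toy key-value store split across several in-memory shards."""

    def __init__(self, shard_count):
        self.shards = [dict() for _ in range(shard_count)]

    def put(self, key, value):
        self.shards[shard_for(key, len(self.shards))][key] = value

    def get(self, key):
        # A lookup by shard key touches exactly one shard.
        return self.shards[shard_for(key, len(self.shards))].get(key)

    def scan_all(self):
        # A query without the shard key must visit every shard: a distributed query.
        merged = {}
        for shard in self.shards:
            merged.update(shard)
        return merged

store = ShardedStore(shard_count=4)
for i in range(100):
    store.put(f"order-{i}", i)

print(store.get("order-42"), [len(shard) for shard in store.shards])
```

With plain modulo placement, changing the shard count remaps most keys, which is one reason production systems tend to use more elaborate placement and rebalancing schemes.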

Next-generation enterprise-level database architectures need to perfectly combine the features of cloud-native architectures, distributed architectures, and HTAP. The upper layer should use a Shared-Nothing architecture for database-table sharding, and the lower layer should use a cloud-native architecture with separated storage and computing. The advantage of this architecture is that it supports both horizontal scaling and high availability. In situations that require high concurrency, far fewer shards are required, which greatly reduces the complexity of distributed transactions.

To give you a better understanding of this topic, let's look into the theory behind distributed systems, the core of which is consistency, availability, and partition tolerance. Different architectures can be used to solve these problems, including the single-host and single-node database architecture and the data partitioning or sharding and middleware architecture. In addition, the best way is to implement an integrated distributed architecture. The system coordinates and processes requests, and then returns the final results to the user.

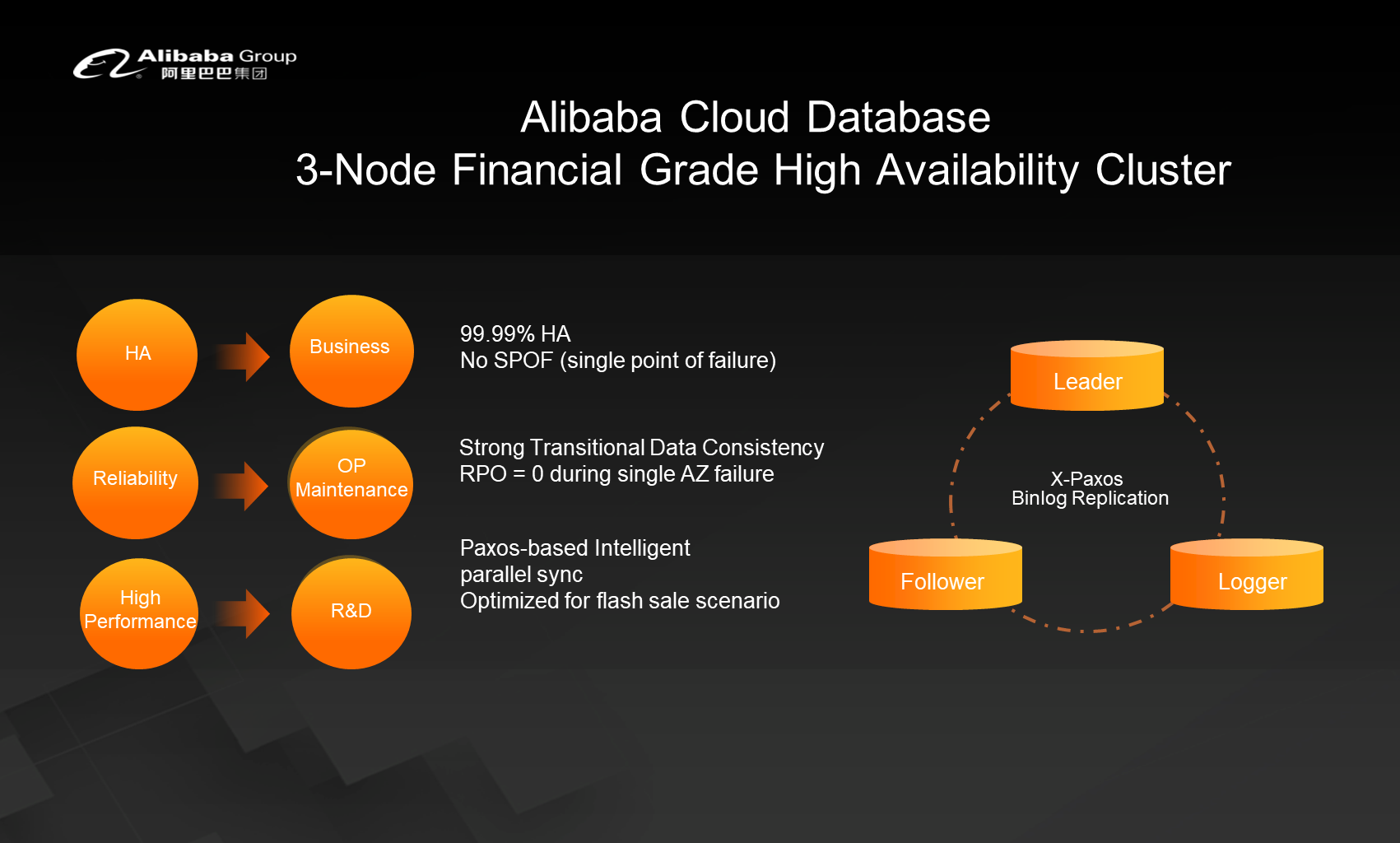

This approach involves high availability. We naturally have to ask: what happens if a database fails after database and table sharding? Distributed high-availability databases can ensure partition data consistency through data consensus protocols. Two well-known distributed consensus protocols are Paxos and Raft. The protocol mandates that an agreement on data values must be reached in the partitioning process and a possible value must be proposed for each data entry. Once the partition process reaches a consensus on the value of a data entry, all partition processes will obtain the value.
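
The core idea shared by these protocols, that a value counts as agreed once a majority of replicas accept it, can be sketched in a few lines. This is deliberately not an implementation of Paxos or Raft: leader election, terms, and log repair are all omitted, and the `Replica` class and `replicate` function are hypothetical.

```python
class Replica:
    """Toy replica that stores log entries unless it is unreachable."""

    def __init__(self, name, available=True):
        self.name = name
        self.available = available
        self.log = []

    def append(self, entry):
        """Store the entry and acknowledge it; an unreachable replica does neither."""
        if not self.available:
            return False
        self.log.append(entry)
        return True

def replicate(entry, replicas):
    """Return True if a strict majority of replicas acknowledged the entry."""
    acks = sum(1 for replica in replicas if replica.append(entry))
    return acks > len(replicas) // 2

# One of three replicas is down: two acknowledgements still form a majority.
replicas = [Replica("a"), Replica("b"), Replica("c", available=False)]
committed = replicate("x=1", replicas)

print(committed, [replica.log for replica in replicas])
```

With three replicas the group tolerates one failure. With two replicas down, no majority exists and the write cannot be confirmed, even though one replica has stored it; handling that leftover entry is part of what the real protocols add.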

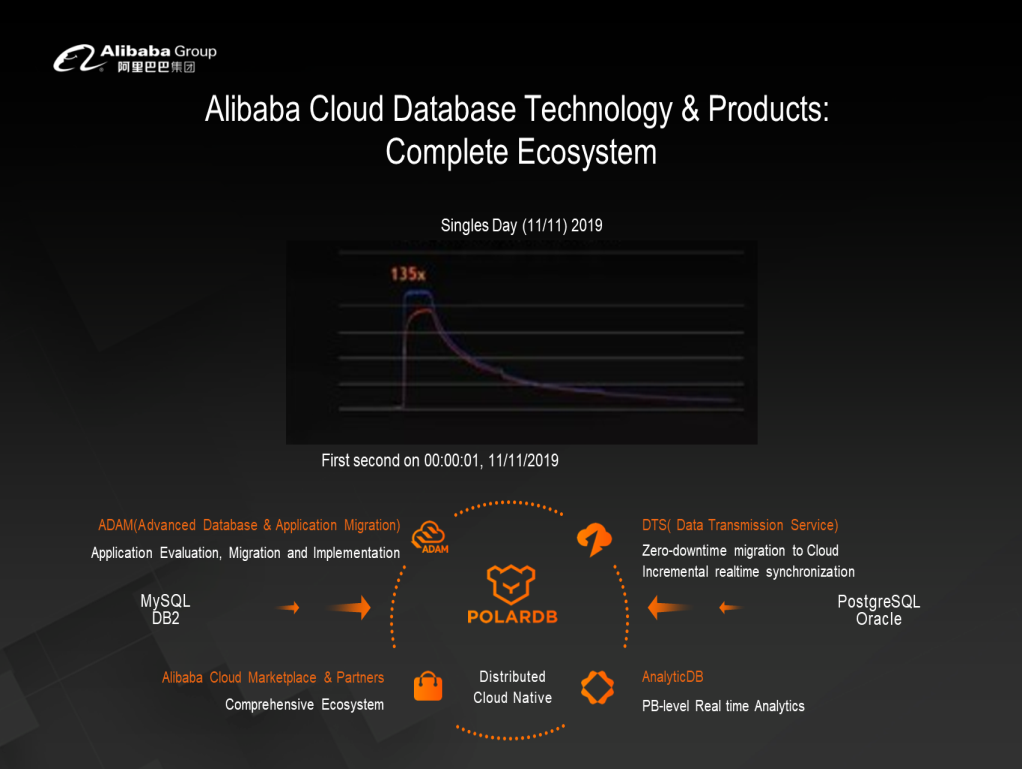

This section introduces Alibaba's database products. Alibaba Cloud databases not only provide services in the cloud, but also support all activities in the Alibaba Group's internal systems. During Double 11 in 2019, the peak processing capacity of Alibaba's database system increased by a factor of 135 at the very first second. Such performance imposes high scalability, elasticity, and availability requirements on databases. Database technology and products must build a complete ecosystem, and therefore we need tools such as PolarDB and AnalyticDB.

The bottom layer of PolarDB is the RDMA-based distributed shared storage. High availability is provided in the distributed shared storage through the Parallel Raft protocol. The upper layer implements multiple computing nodes, with one primary node and multiple read-only nodes. Since the data presented to the underlying layer is a single piece of logical data, the system has strong transaction processing performance and can be elastically scaled in a matter of minutes. In addition, this architecture does not involve compatibility transformation in database and table sharding. This means it is fully compatible with MySQL as well as PostgreSQL, and highly compatible with Oracle.

To support multi-model database deployment, Alibaba Cloud launched an all-in-one product solution in 2019.

Alibaba has integrated the capabilities of XDB, PolarDB, and DRDS in the PolarDB-X distributed database. DRDS serves as its upper layer, which mainly handles distributed transaction processing and query processing. The lower layer is a PolarDB layer that supports horizontal and elastic scaling. PolarDB-X uses the X-Engine storage engine.

To achieve three-node financial-level high availability, we use the Raft protocol to ensure data consistency among the three replicas while maintaining high availability, scalability, and performance.

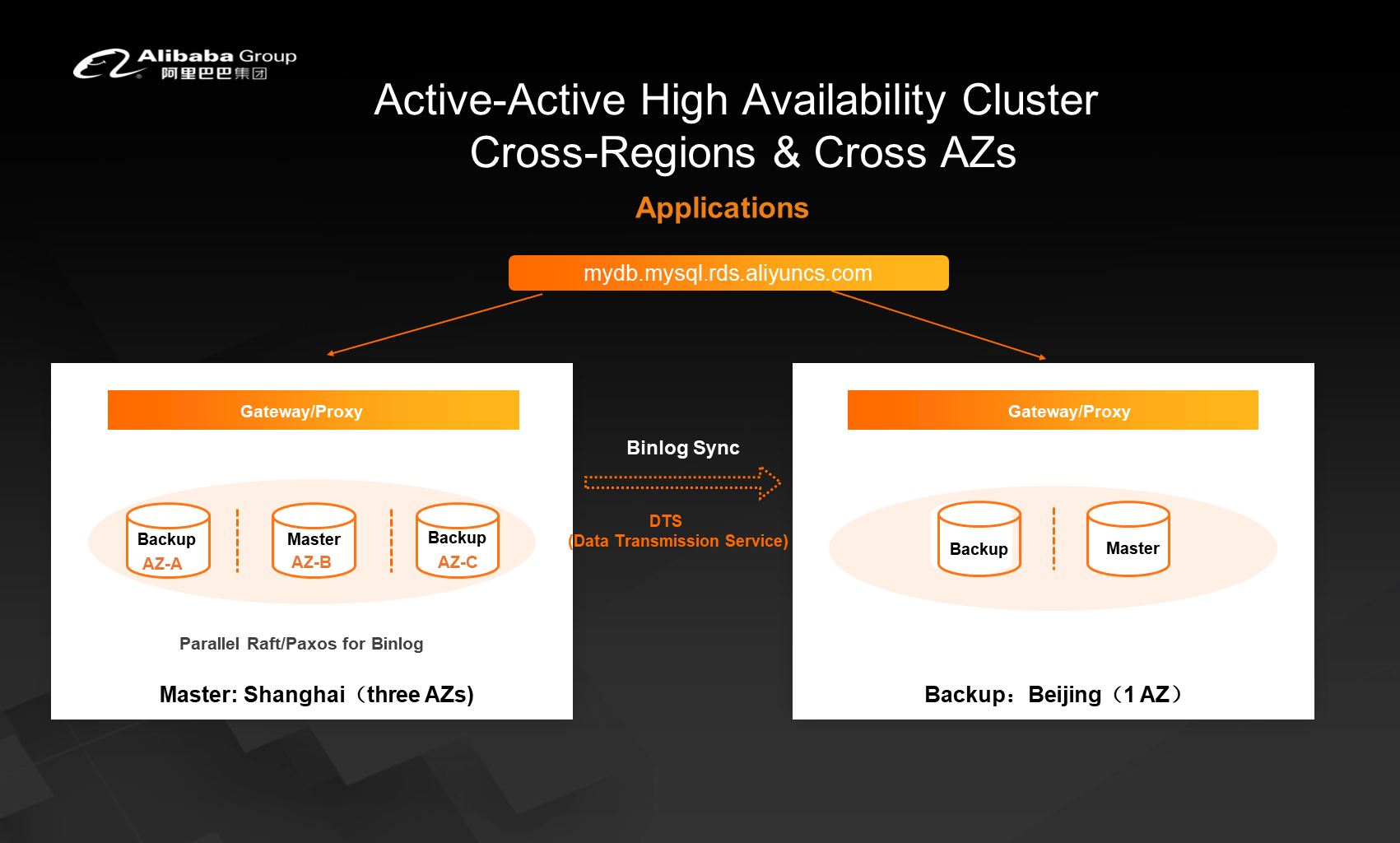

Deployment across zones poses a major challenge. Generally, the consensus protocol is run among three replicas in a single city, while log synchronization technology is used across cities and regions. For example, in Data Transmission Service, binlog parsing is used to parse logs from the source node and synchronize them to the remote node for replay.

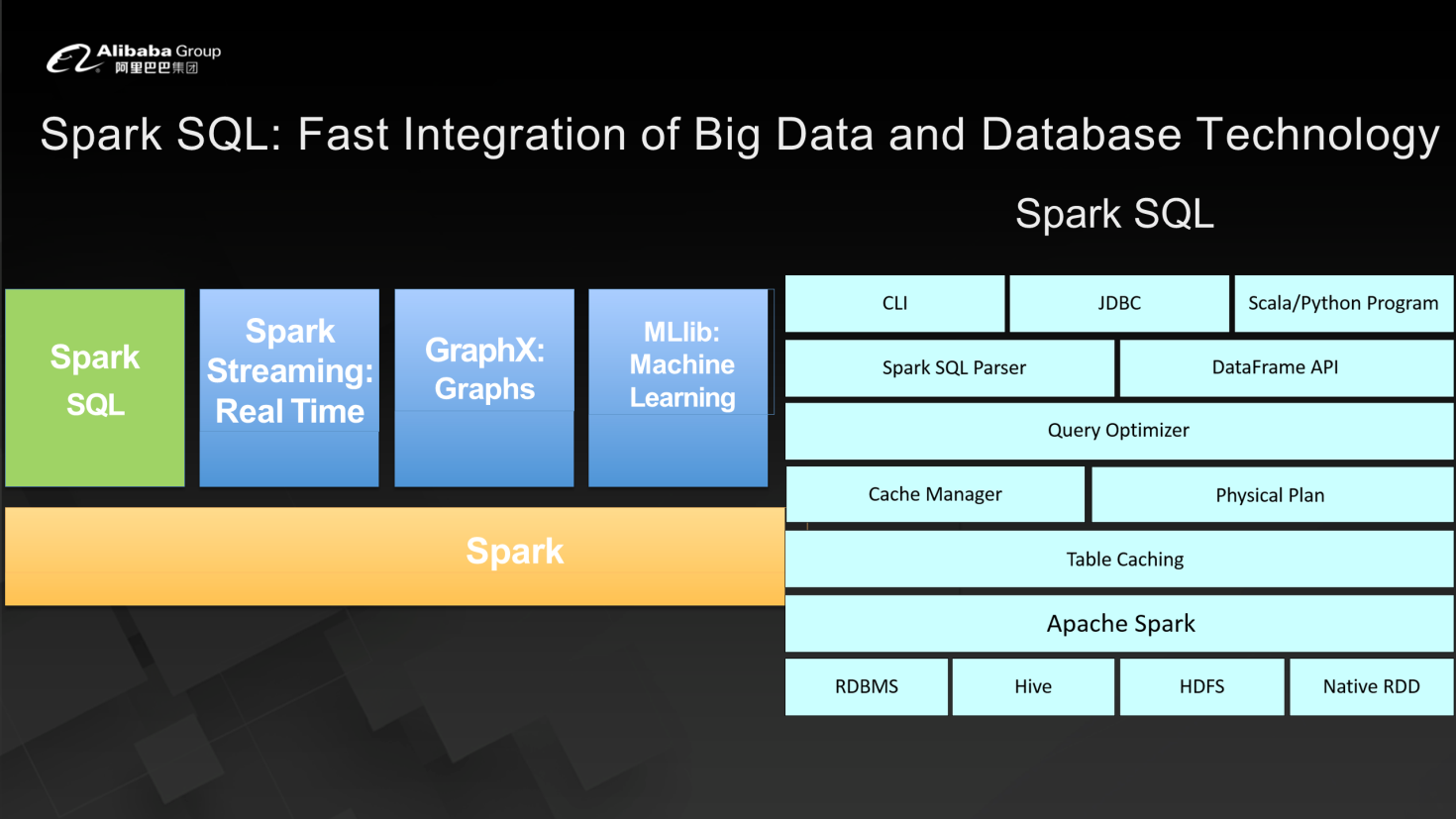

Currently, the trend in the industry is to combine big data and fast data. This means analysis and interactive computing can be performed online and in real time.

Big data systems such as MapReduce and Spark are derived from database management systems. However, Spark processes data in memory, which greatly reduces the system overhead.

Spark SQL is a very popular structured module that uses SQL for data processing and analysis. Spark SQL operates like a database kernel. The only difference is that it converts input SQL statements into Spark jobs for execution.

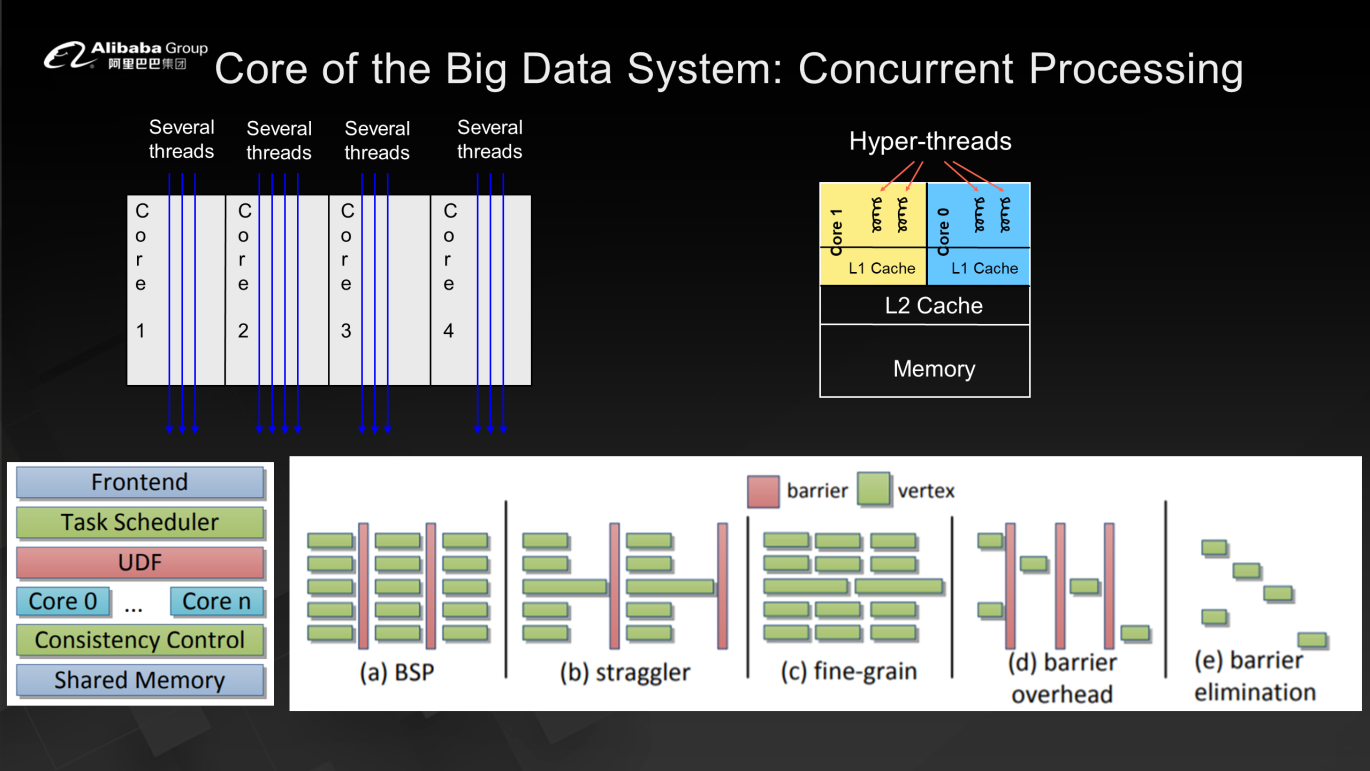

The main challenge faced by big data systems based on the BSP model is the synchronization problem caused by inconsistent task execution progress during concurrent processing. We want big data systems to achieve parallel synchronization just like database management systems.
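
The synchronization cost of the bulk synchronous parallel (BSP) model can be seen in a small threaded sketch: every worker must reach a barrier before any of them may start the next superstep, so the slowest task sets the pace. The `run_bsp` function and its partitioned input are illustrative assumptions.

```python
import threading

def run_bsp(partitions, supersteps):
    """Run workers in lockstep and return the global total seen after each superstep."""
    worker_count = len(partitions)
    barrier = threading.Barrier(worker_count)
    local = [0] * worker_count
    history = []

    def worker(index):
        for step in range(supersteps):
            # Local computation phase: each worker handles its own partition.
            local[index] = sum(partitions[index]) * (step + 1)
            # Synchronization phase: nobody proceeds until every worker has finished.
            barrier.wait()
            if index == 0:
                history.append(sum(local))  # all local values now belong to this step
            # Second barrier: fast workers must not overwrite values still being read.
            barrier.wait()

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(worker_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return history

print(run_bsp([[1, 2], [3], [4, 5, 6]], supersteps=3))
```

Removing either barrier would let a fast worker run ahead and mix values from different supersteps, which is the inconsistent-progress problem in miniature.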

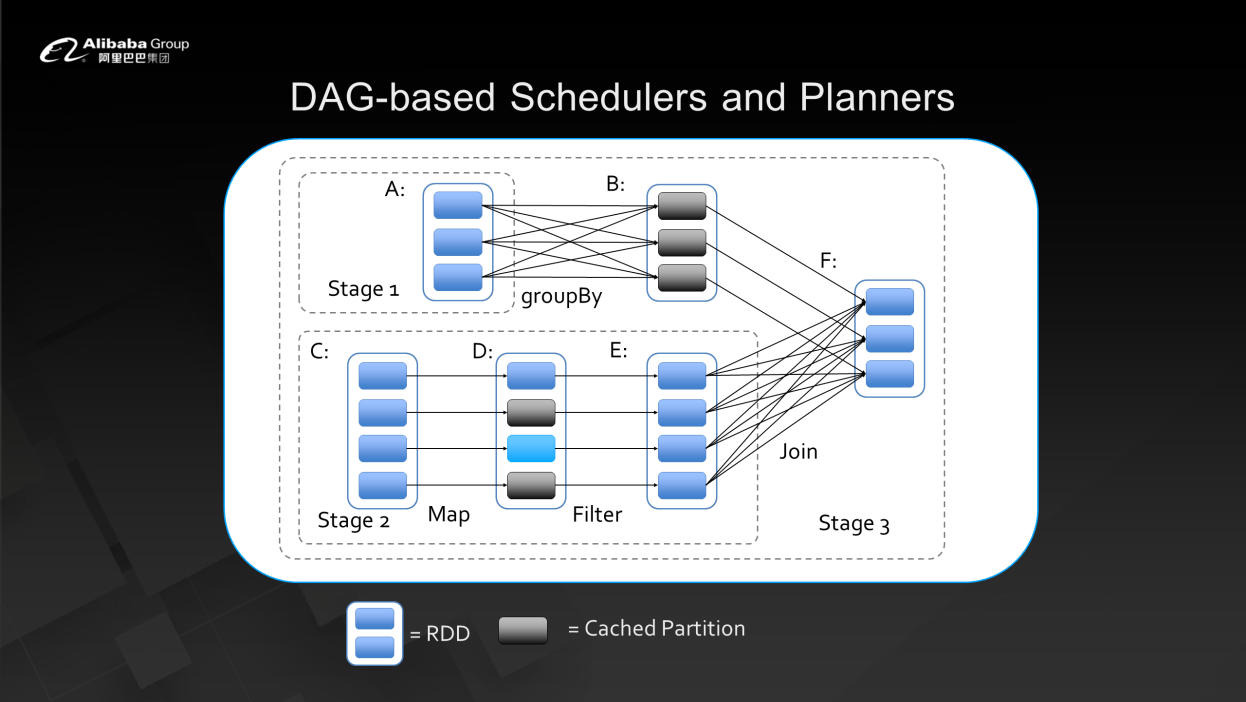

Both database management systems and big data systems use DAG-based schedulers and planners for scheduling. This means the execution plan is viewed as a directed acyclic graph for group execution. After each round of execution is completed, the execution plan is synchronized and then the next round is triggered.
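
Round-by-round execution of a DAG can be sketched with `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later). The operator names in `plan` are made up for illustration, and a real scheduler would dispatch each round to workers rather than just listing it.

```python
from graphlib import TopologicalSorter

def schedule_rounds(dependencies):
    """Group plan operators into rounds; an operator runs once all of its inputs are done."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises CycleError if the plan is not acyclic
    rounds = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every operator whose inputs are complete
        rounds.append(ready)
        sorter.done(*ready)  # synchronize, then unlock the next round
    return rounds

# Each operator maps to the set of operators it depends on.
plan = {
    "scan_orders": set(),
    "scan_customers": set(),
    "filter_orders": {"scan_orders"},
    "join": {"filter_orders", "scan_customers"},
    "aggregate": {"join"},
}

for number, operators in enumerate(schedule_rounds(plan), start=1):
    print(number, operators)
```

Operators within a round have no dependencies on each other, so they can run in parallel; the boundary between rounds is where synchronization happens.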

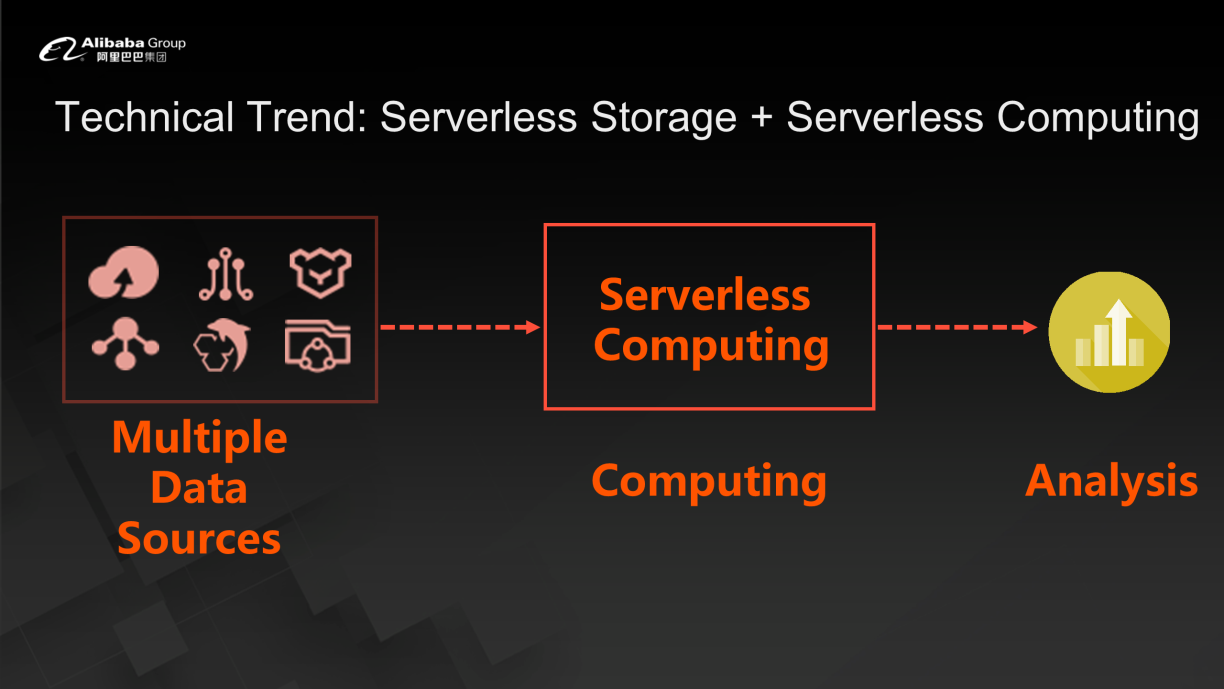

Big data systems and database management systems are merging and are moving in the direction of online real-time operations. The most important challenge in online real-time processing is the processing of multiple data sources and serverless computing.

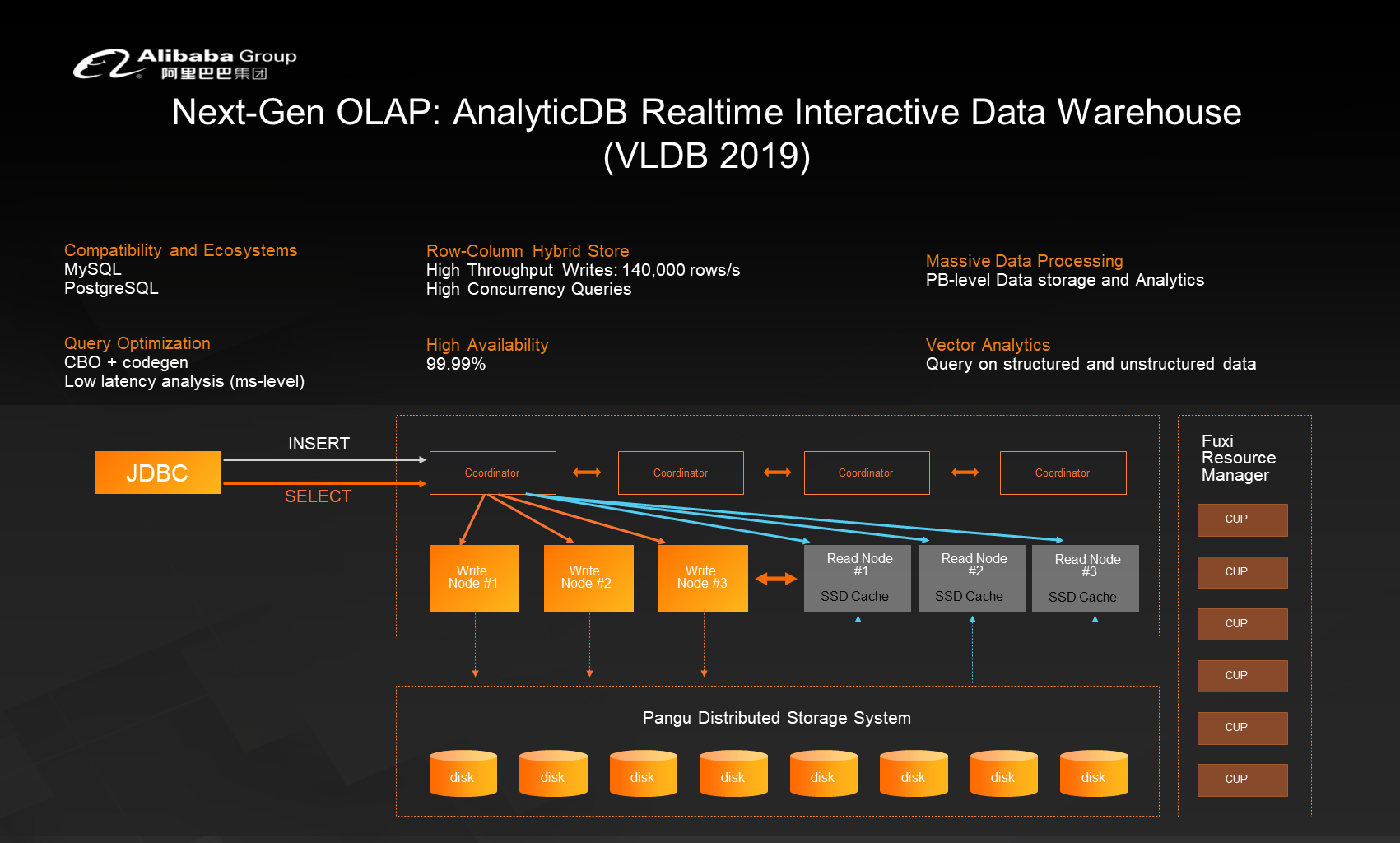

Alibaba Cloud has implemented intelligent OLAP and AnalyticDB real-time interactive data warehouses. This product is based on the BSP model, so it can perform online computing and analysis.

Another advantage of AnalyticDB is that it effectively combines non-structured and semi-structured data with structured data through a vectorized computing engine that can vectorize non-structured data. This allows it to implement joint processing.

Data lakes and data warehouses both solve the intrinsic problems of heterogeneous analysis and processing. However, data warehouses have their own metadata management capabilities and storage engines, whereas data lakes only manage metadata and do not have storage engines. Data lakes connect to different data sources, rather than converting data to their own storage engines. This is the essential difference between Data Lake Analytics (DLA) and AnalyticDB. Mainstream data lake kernels, such as the one used by Alibaba Cloud, generally incorporate a Presto or Spark kernel for interactive computing. Then, the computing results are provided to business intelligence tools. Because data lakes do not have their own storage engines, they are ideal for serverless computing architectures.

NoSQL supports the processing of non-structured and semi-structured data. Based on Redis, Alibaba Cloud has implemented Tair with support for KV caching, MongoDB that processes document data, Time Series Database (TSDB) that processes time series and spatiotemporal data, Graph Database (GDB) that processes graphs, and Cassandra that processes wide tables. In short, NoSQL does away with the requirement of traditional relational databases for ACID data consistency. In exchange, it supports horizontal expansion for complex data, such as non-structured and semi-structured data.

Alibaba's enterprise data management function matrix provides a unified portal and business report services for R&D, DBAs, internal audit, and decision-making. Enterprise-level databases require security control, change stability, and data analysis capabilities. They involve SQL task execution engines, logical database execution engines, security rule engines, and data desensitization engines.

Alibaba Cloud's database management product is called DMS. DMS provides data security in addition to the preceding services. DMS completes the entire process from auditing to proactive interception to data desensitization. It provides a built-in security rule library, rule executors, and actions (similar to triggers) to prevent data loss when a problem occurs in the primary business system.
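
To give a feel for interception and desensitization, here is a minimal sketch with one made-up security rule and one made-up masking function. Neither reflects the actual DMS rule library or its interfaces; both names and both policies are assumptions chosen for illustration.

```python
import re

def violates_rules(sql):
    """Hypothetical rule: block DELETE or UPDATE statements that have no WHERE clause."""
    statement = sql.strip().rstrip(";").lower()
    is_write = statement.startswith(("delete", "update"))
    return is_write and re.search(r"\bwhere\b", statement) is None

def mask_phone(value):
    """Hypothetical masking: keep the first three and last two digits, hide the rest."""
    return re.sub(r"^(\d{3})\d+(\d{2})$", r"\1******\2", value)

print(violates_rules("DELETE FROM orders"))                 # intercepted before execution
print(violates_rules("DELETE FROM orders WHERE id = 1"))    # allowed through
print(mask_phone("13812345678"))                            # masked before display
```

A production rule engine would parse the SQL rather than match text, since string checks like this one are easy to fool with comments or subqueries.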

Backup data has become a valuable resource. The current trend in CDM is to use backup data for analysis, query, and even business intelligence decision-making.

Following the trend in the control platform field, we want to provide the capabilities of private clouds within public clouds. Although public cloud control services provide instance management capabilities, many applications need their own capabilities for direct management. For example, users need to obtain the root and admin permissions for their own data center in the public cloud. Therefore, Alibaba Cloud has set up exclusive clusters for major accounts and adopted cloud-native control capabilities, such as Kubernetes. This makes control as transparent as possible and allows us to grant permissions to customers and applications.

In the industry, the large-scale integration of intelligence and machine learning is becoming the trend in the management and control field. The following figure shows the overall architecture of Alibaba Cloud management and control, which is a Self-Driving Database Platform (SDDP). This platform collects performance data for each instance. With the consent of the user, it collects and accesses only performance data and non-business data, such as CPU usage and disk usage. Then, it performs modeling analysis and real-time monitoring. With this optimization, we have significantly reduced the number of slow SQL statements and the memory usage of Alibaba Cloud.

Standard cloud data security covers data in transit and data in storage, for example through Transparent Data Encryption (TDE) and data-at-rest encryption. Alibaba Cloud summarizes data security along several dimensions, including encrypted data access and storage, the reduction of internal attack risks, and log data consistency verification, for example, using blockchain technology to enable users to verify the consistency of data and logs.
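
The verification idea behind blockchain-style logs is a hash chain: each entry's hash covers the previous entry's hash, so changing any earlier record invalidates everything after it. The sketch below shows only that mechanism with hypothetical helper names; it is not Alibaba Cloud's implementation and leaves out distribution and consensus entirely.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry

def entry_hash(previous_hash, payload):
    """Hash the payload together with the hash of the entry before it."""
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(previous_hash.encode("ascii") + body).hexdigest()

def append_entry(chain, payload):
    previous_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"payload": payload, "hash": entry_hash(previous_hash, payload)})

def verify_chain(chain):
    """Recompute every hash in order; any edited entry breaks the chain."""
    previous_hash = GENESIS
    for entry in chain:
        if entry["hash"] != entry_hash(previous_hash, entry["payload"]):
            return False
        previous_hash = entry["hash"]
    return True

audit_log = []
append_entry(audit_log, {"user": "dba1", "action": "ALTER TABLE orders"})
append_entry(audit_log, {"user": "app", "action": "UPDATE orders"})
append_entry(audit_log, {"user": "dba2", "action": "DROP INDEX idx_old"})

print(verify_chain(audit_log))
audit_log[1]["payload"]["user"] = "someone-else"  # tamper with a stored record
print(verify_chain(audit_log))
```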

Data is encrypted after it enters the kernel and does not need to be decrypted. The customer's key, which is invisible to others, is used to encrypt data. This ensures that data remains completely confidential throughout all processes, even in the case of internal attacks.

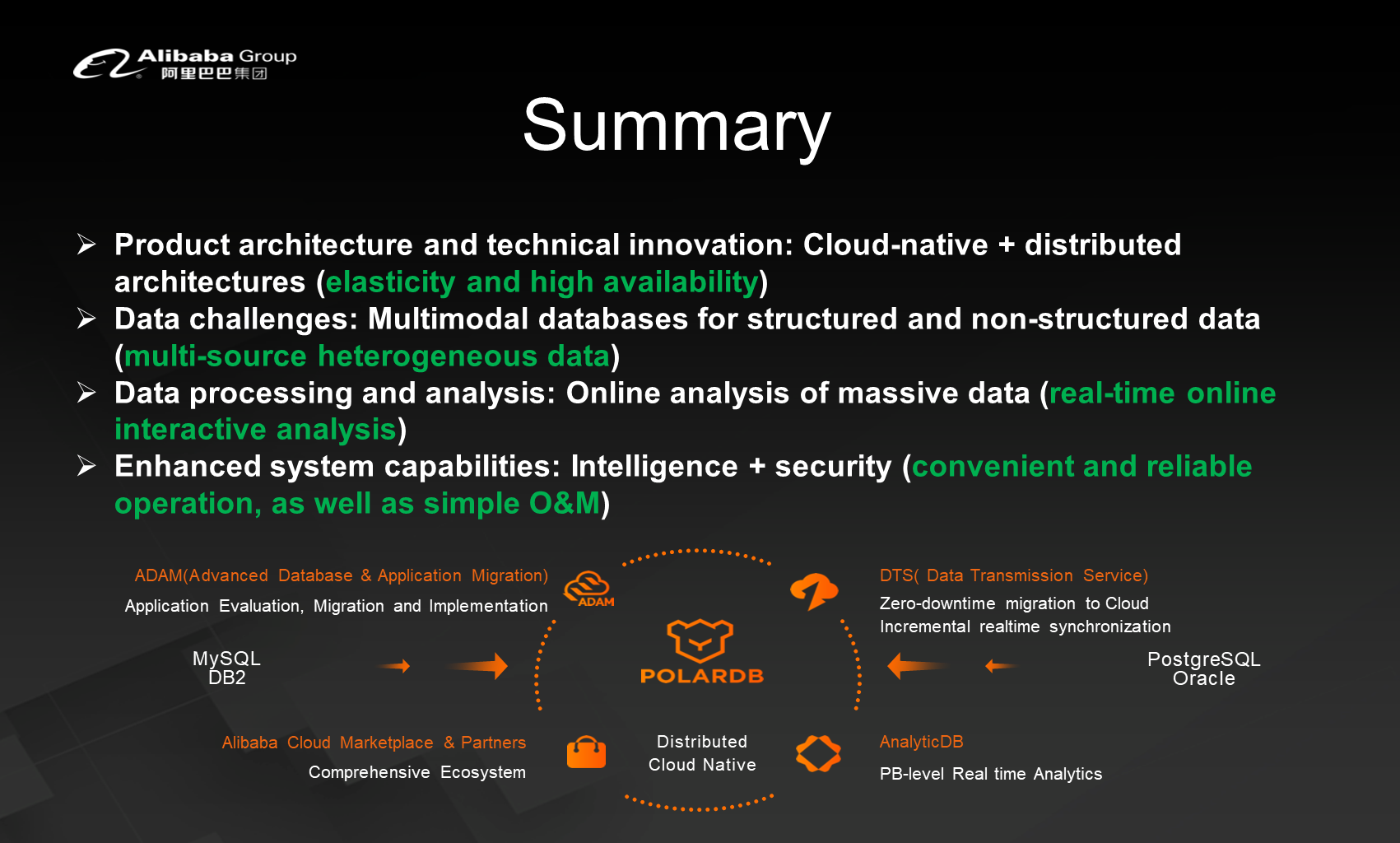

The process of migrating Oracle data to the cloud involves assessment, decision-making, implementation, and optimization. With Alibaba Cloud's Advanced Database and Application Migration (ADAM) automation tool, we can automatically generate reports to tell users which applications are compatible and which applications are incompatible when migrating from Oracle to the target database. This provides a clear process and cost estimate to users in deciding whether or not to migrate applications.

Alibaba Cloud adopts the following Oracle migration process: Use the ADAM automatic migration evaluation tool to evaluate application compatibility and consistency. Next, evaluate the transformations. Then, use Data Transmission Service to migrate databases to different target databases. This provides a standardized, process-based, and product-based Oracle migration solution.

We recommend that small- and medium-sized business systems use target databases that are highly compatible with Oracle, such as PolarDB. In the future, large core systems are likely to develop in the direction of distributed architectures. Therefore, AnalyticDB can be used for analytic systems and PolarDB-X Distributed Edition for transaction systems.

In the database field, Alibaba Cloud currently ranks first in the Asia-Pacific market and third in the global market.

Finally, let's discuss some solutions and application cases based on Alibaba Cloud products and technologies. First, Alibaba's database products support all the complex internal businesses of the Alibaba Group. Externally, they support the applications of the cloud-based commercial systems involved in key national projects. Our database products are used in a wide range of fields, including manufacturing, retail, finance, and entertainment.

To give some specific examples of Alibaba database applications, we helped an eastern bank quickly build an Internet architecture for new businesses and small- and micro-businesses based on the PolarDB Distributed Edition. We helped a Chinese third-party cross-border payment platform to build a high-concurrency and low-latency payment system based on the PolarDB Distributed Edition, while using Data Transmission Service and Data Lake Analytics for heterogeneous multi-source data processing and real-time data synchronization. We used AnalyticDB to replace the traditional Sybase IQ + Hadoop solution of a major securities firm to help them implement a financial analysis acceleration platform.

Alibaba also helped Tianhong Asset Management build a real-time query and computing platform that supports 500 TB data and 100 million users based on AnalyticDB. We helped one of the largest banks in southern China build a DTS-based geo-disaster recovery solution. We helped China Post use AnalyticDB to build a reporting platform for more than 100,000 agencies across the country. We used cloud-native database technology to help Intime Retail transform its database system and achieve high elasticity and availability, allowing it to support 20X increases in traffic during major promotions while reducing costs by more than 60%.

The future development trends in the database field can be summarized in the following points:

Database cloudification or migration is an ecosystem, not a project. Now, let's review the technologies discussed in this article. Synchronous database migration is made possible by data synchronization and transmission technologies. A distributed cloud-native system is required for elasticity and high availability. NoSQL is required to process non-structured data such as graphs, time series, and spatiotemporal data. Data synchronization and distribution are required for real-time computing and analysis in analysis systems, as well as for backup and hybrid cloud management. Finally, the DevOps development process management suite is used for enterprise database development. In short, only a complete ecosystem can support the rapid development of China's database market and allow us to keep up with global trends.
