By Dassi Orleando, Alibaba Cloud Community Blog author.

Systems that recognize people's faces in images, such as Facebook's photo-tagging feature, sound difficult to build. In reality, they are not as difficult as you may think: with the right library, most of the heavy lifting is handled by a single, powerful API.

In this article, we're going to see how you can build one, or at least understand the concept behind one, by looking at a JavaScript library that does this kind of work directly in the browser. That library is tracking.js, a framework with an API for detecting and tracking content in images and videos, among many other things.

Tracking.js is a JavaScript framework that lets developers embed computer-vision and object-detection features into their web applications without writing a lot of code or implementing complex algorithms themselves. It is relatively simple to use and, in many ways, feels similar to jQuery. It provides trackers, utility functions for various computational operations, and web components that make life that much easier.

A tracker in tracking.js is a JavaScript object that can detect and track elements in an image or a video. Such an element can be a face, a color, or an object, among other things. Tracking.js also lets you create your own custom tracker to detect whatever you want. Let's look at some specific trackers.

A color tracker can be used to track a specific set of colors inside an image or a video. By default, this tracker supports magenta, cyan, and yellow, but you can register other custom colors. Let's see the tracker in action:

Here's the code snippet, available on GitHub.

Here's a short explanation of the code. The tracker is created with the list of colors it should detect:

```javascript
var colors = new tracking.ColorTracker(['magenta', 'cyan', 'yellow']);
```

Now let's see how to add our own custom color using the `registerColor` function, which takes a color label and a callback function as parameters.

Here's the code snippet, available on GitHub.

At lines 27 and 34 of that snippet, you can see how we used this function to register two new colors. Tracking.js works with colors through their RGB representation, and by default only magenta, cyan, and yellow are registered in the library. If you want to detect more colors, you can use this function to tell the library how to recognize them.

In our case, we added green and black, whose RGB representations are (0, 255, 0) and (0, 0, 0) respectively. The second parameter is a callback containing the logic used to detect the color.

It's pretty simple: while tracking, tracking.js passes each pixel's color (in RGB format) to this callback, and the callback checks whether the values it receives correspond to the RGB code of your color. For example, absolute green has the RGB code (0, 255, 0), so we just verify that the received data matches it and return true or false. Based on that result, tracking.js knows whether it should consider that area or not.
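An exact comparison only matches pixels that are precisely (0, 255, 0), which is rare in real camera frames because lighting and compression shift the values slightly. As a hedged alternative, the sketch below builds a predicate with the same `(r, g, b) => boolean` shape as the `registerColor` callback but accepts any color within a distance threshold of the target. The helper name `makeColorPredicate` and the tolerance values are assumptions for illustration, not part of tracking.js.

```javascript
// Hypothetical helper (not part of tracking.js): returns an (r, g, b) => boolean
// predicate shaped like the callback passed to tracking.ColorTracker.registerColor.
// The tolerance is an assumption; tune it for your lighting conditions.
function makeColorPredicate(target, tolerance) {
  const [tr, tg, tb] = target;
  return function (r, g, b) {
    // Squared Euclidean distance in RGB space between the pixel and the target
    const dr = r - tr;
    const dg = g - tg;
    const db = b - tb;
    return dr * dr + dg * dg + db * db <= tolerance * tolerance;
  };
}

// "Roughly green": accepts pixels within 60 units of (0, 255, 0)
const isGreen = makeColorPredicate([0, 255, 0], 60);

console.log(isGreen(0, 255, 0));   // true
console.log(isGreen(20, 230, 15)); // true
console.log(isGreen(255, 0, 0));   // false
```

In a page that loads tracking.js, such a predicate could then be registered with `tracking.ColorTracker.registerColor('green', isGreen);`.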

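```javascript
// Register a custom "green" color: the callback receives the r, g, b values
// of a pixel and returns true when that pixel should count as green.
tracking.ColorTracker.registerColor('green', function(r, g, b) {
  // Exact match on absolute green (0, 255, 0)
  if (r === 0 && g === 255 && b === 0) {
    return true;
  }
  return false;
});
```

The last thing to do is to add the label of our color to the color list when initializing the tracker: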

```javascript
var colors = new tracking.ColorTracker(['magenta', 'cyan', 'yellow', 'green']);
```

The object tracker, on the other hand, is used to track anything else you want to follow in a series of images or a video. For this, you need to provide trained data describing what the tracker should look for. Fortunately, tracking.js comes with three datasets for this purpose: eyes, mouth, and face. Let's discover how they work.

Let's now implement an object detection example; the code is here.

Here, we include at the top of the file three datasets trained to detect faces, eyes, and mouths. These are the only datasets available in the library by default. Click this link to see how to build a custom dataset to detect new objects.

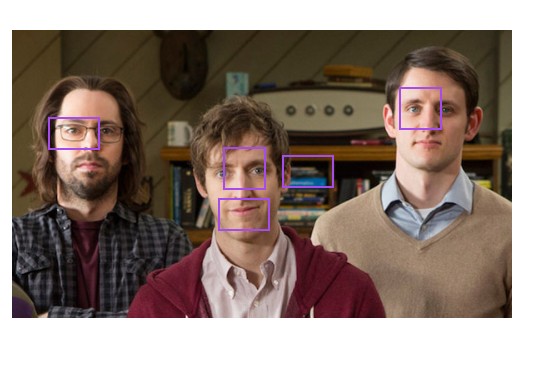

The result may look like the following:

Writing a program that lets a computer see can be hard in several ways. For example, you have to figure out how to open the user's camera and process the available pixels, and then there is detecting curves in an image and other kinds of heavy computational operations. The utilities provide a lightweight, easy-to-use API for these tasks. The available utilities include Feature Detection, Convolutions, Gray Scale, Image Blur, Integral Image, Sobel, and Viola-Jones.
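Most of these utilities take a `pixels` array together with `width` and `height`. As a minimal sketch, assuming `pixels` uses the flat RGBA layout of a canvas `ImageData.data` array (four values per pixel, stored row by row), here is how such an array is indexed, using grayscale conversion as the example. The helper `toGrayscale` is hypothetical and is not tracking.js's own implementation; the Rec. 601 luma weights are one common choice for the conversion.

```javascript
// Hypothetical helper: converts a flat RGBA pixel array (assumed layout of
// ImageData.data) into one gray value per pixel, in row-major order.
function toGrayscale(pixels, width, height) {
  const gray = new Uint8ClampedArray(width * height);
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const i = (y * width + x) * 4; // index of the red channel of pixel (x, y)
      // Rec. 601 luma weights; Uint8ClampedArray rounds and clamps to 0..255
      gray[y * width + x] =
        0.299 * pixels[i] + 0.587 * pixels[i + 1] + 0.114 * pixels[i + 2];
    }
  }
  return gray;
}

// A 2x1 image: one white pixel followed by one pure red pixel (alpha = 255)
const pixels = new Uint8ClampedArray([255, 255, 255, 255, 255, 0, 0, 255]);
const gray = toGrayscale(pixels, 2, 1);
console.log(gray[0], gray[1]); // 255 76
```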

Feature detection works by finding the corners of objects within an image. The API looks like this:

```javascript
var corners = tracking.Fast.findCorners(pixels, width, height);
```

Next, there are the convolution filters, which are useful for edge detection, blurring, sharpening, embossing, and many other effects. There are three ways to convolve an image: horizontally, vertically, or separably (applying separate horizontal and vertical weight vectors):

```javascript
tracking.Image.horizontalConvolve(pixels, width, height, weightsVector, opaque);
tracking.Image.verticalConvolve(pixels, width, height, weightsVector, opaque);
tracking.Image.separableConvolve(pixels, width, height, horizWeights, vertWeights, opaque);
```

The Gray Scale utility computes the luminance (gray tone) values of an image. Here's the syntax for it:

```javascript
tracking.Image.grayscale(pixels, width, height, fillRGBA);
```

Tracking.js implements a blurring algorithm called Gaussian blur. This type of blur is widely used in image-processing software to reduce noise or unwanted detail in an image. Here's the syntax to blur an image in a single line of code:

```javascript
tracking.Image.blur(pixels, width, height, diameter);
```

The Integral Image feature lets developers compute the sum of the values in any rectangular subset of a grid. The underlying data structure is also called a summed-area table; in the image-processing domain, it is known as an integral image. To calculate it with tracking.js, just do:

```javascript
// Each opt_* argument is an optional array that gets filled with the
// corresponding integral image (summed, squared, tilted, Sobel).
tracking.Image.computeIntegralImage(
  pixels, width, height,
  opt_integralImage, opt_integralImageSquare, opt_tiltedIntegralImage,
  opt_integralImageSobel
);
```

The Sobel feature computes the vertical and horizontal gradients of the image and combines them to find edges. Tracking.js implements the Sobel filter by first converting the image to grayscale, then taking the horizontal and vertical gradients, and finally combining the gradient images into the final image.
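The steps just described can be sketched in plain JavaScript. This is an illustration of the Sobel technique under stated assumptions, not tracking.js's internal code: it assumes the image has already been converted to grayscale (one value per pixel, row-major), uses the standard 3x3 Sobel kernels, and handles borders by clamping coordinates to the image, which real implementations may do differently. The function name `sobel` here is hypothetical.

```javascript
// Hypothetical Sobel sketch on a grayscale array (one value per pixel).
// Borders are handled by clamping coordinates to the image (an assumption).
function sobel(gray, width, height) {
  const kx = [-1, 0, 1, -2, 0, 2, -1, 0, 1]; // horizontal gradient kernel
  const ky = [-1, -2, -1, 0, 0, 0, 1, 2, 1]; // vertical gradient kernel
  const magnitude = new Float32Array(width * height);
  // Read a pixel, clamping x and y so neighbours outside the image reuse the edge
  const at = (x, y) => {
    const cx = Math.min(width - 1, Math.max(0, x));
    const cy = Math.min(height - 1, Math.max(0, y));
    return gray[cy * width + cx];
  };
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      let gx = 0;
      let gy = 0;
      // Apply both 3x3 kernels over the neighbourhood of (x, y)
      for (let j = -1; j <= 1; j++) {
        for (let i = -1; i <= 1; i++) {
          const v = at(x + i, y + j);
          const k = (j + 1) * 3 + (i + 1);
          gx += kx[k] * v;
          gy += ky[k] * v;
        }
      }
      // Combine the two gradient images into one edge-strength image
      magnitude[y * width + x] = Math.hypot(gx, gy);
    }
  }
  return magnitude;
}

// A 4x3 image whose left half is dark and right half bright: a vertical edge
const edges = sobel([0, 0, 255, 255, 0, 0, 255, 255, 0, 0, 255, 255], 4, 3);
console.log(Array.from(edges.slice(0, 4))); // [ 0, 1020, 1020, 0 ]
```

The strong responses appear only in the two columns on either side of the dark/bright boundary, which is exactly the edge the filter is meant to find.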

The API looks like this:

```javascript
tracking.Image.sobel(pixels, width, height);
```

The Viola–Jones object detection framework was the first object detection framework to provide competitive detection rates in real time. Tracking.js uses this technique internally in its tracking.ObjectTracker implementation.
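Viola–Jones gets its speed from the integral image (summed-area table) introduced earlier: once the table is built, the sum of any rectangle takes four lookups, so Haar-like features (differences between sums of adjacent rectangles) can be evaluated in constant time at any position and scale. The sketch below shows only those two building blocks in plain JavaScript, under the assumption of a grayscale row-major input; it is not tracking.js's implementation, and the names `integralImage`, `rectSum`, and `haarEdgeFeature` are hypothetical. A full detector additionally needs a trained classifier cascade, which this sketch does not include.

```javascript
// Hypothetical sketch: summed-area table with a zero first row and column,
// so rectangle sums need no special cases at the image border.
function integralImage(gray, width, height) {
  const w1 = width + 1;
  const ii = new Float64Array(w1 * (height + 1));
  for (let y = 0; y < height; y++) {
    let rowSum = 0;
    for (let x = 0; x < width; x++) {
      rowSum += gray[y * width + x];
      // Entry (x+1, y+1) holds the sum of all pixels above and to the left, inclusive
      ii[(y + 1) * w1 + (x + 1)] = ii[y * w1 + (x + 1)] + rowSum;
    }
  }
  return { ii, w1 };
}

// Sum of the w x h rectangle whose top-left corner is (x, y): four lookups
function rectSum({ ii, w1 }, x, y, w, h) {
  return (
    ii[(y + h) * w1 + (x + w)] -
    ii[y * w1 + (x + w)] -
    ii[(y + h) * w1 + x] +
    ii[y * w1 + x]
  );
}

// Two-rectangle (edge) Haar-like feature: left half minus right half of a window
function haarEdgeFeature(table, x, y, w, h) {
  const half = Math.floor(w / 2);
  return rectSum(table, x, y, half, h) - rectSum(table, x + half, y, half, h);
}

const table = integralImage([1, 2, 3, 4, 5, 6], 3, 2); // a 3x2 image
console.log(rectSum(table, 0, 0, 3, 2)); // 21
console.log(rectSum(table, 1, 1, 1, 1)); // 5
```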

To run Viola–Jones directly on an image's pixels with tracking.js, you can use the following:

```javascript
tracking.ViolaJones.detect(pixels, width, height, initialScale, scaleFactor, stepSize, edgesDensity, classifier);
```

Computer-vision programs can be tough to write: a lot of computation is involved in completing even simple tasks, and the code can become very repetitive. Since the main goal of this library is to bring all that complex processing to the web through an intuitive API, it also ships with Web Components.

Web components are, in many ways, a new concept in modern web development. Their aim is to provide a simpler model for encapsulating logic: a developer can put all the logic of a feature inside an HTML element and use it as naturally as any other DOM element. This is the same idea behind libraries like React and Angular, but unlike these, web components are natively built into the browser.

Tracking.js wraps some of its APIs, such as the ones discussed above, into web component elements to provide a simple way to bring computer vision to the web. To use them, first install them as a Bower package by running the following command:

```shell
bower install tracking-elements --save
```

This provides some basic elements, such as the Color element (used to detect colors) and the Object element (used to detect objects). The main principle behind the tracking.js web components is to extend native web elements (such as `<video />`, `<img />`, and so forth) with new tracking features. The extension is declared with the `is=""` attribute, and the target to detect (colors, objects, and so on) is defined with the `target=""` attribute.

Let's try to detect some colors: magenta, cyan, and yellow:

```html
<!-- detect colors in an image, a canvas, and a video -->
<img is="image-color-tracking" target="magenta cyan yellow" />
<canvas is="canvas-color-tracking" target="magenta cyan yellow"></canvas>
<video is="video-color-tracking" target="magenta cyan yellow"></video>
```

In the code above, `is="image-color-tracking"` specifies that we are extending the native `<img/>` element, and `target="magenta cyan yellow"` tells the tracker which colors to target. These elements also expose the tracker's events and methods.

Let's put it into practice:

Here is the code snippet.

In this snippet, we extend a native element with the `is` attribute, define the object to track with the `target` attribute, and use a `plotRectagle()` function to draw a rectangle around the detected area.

In this article, we've looked at how to install and apply computer vision on the web, and how to perform object and color detection with tracking.js.
