By Digoal

An electrical grid is a very typical and well-established system that is vital to people's livelihood and has massive amounts of data. In today's era of Internet (IoT), many aspects of an electrical grid system can be used as references and considerations for other relevant systems.

Each province may have hundreds of millions of electricity meters, and some large prefectural cities may have tens of millions of electricity meters.

How do we pay our electric bills? In our community, we pay electric bills once every two months. We consume electricity first and pay the bill later. This payment model demonstrates the State Grid's kindness, because nowadays payment usually comes before our consumption of good or services in most cases. Usually, we recharge our accounts before we actually consume goods or services, such as mobile telephone accounts, broadband network accounts, and natural gas accounts. If our account balance runs out, mobile phone or network services will stop.

However, most people here are generally good-mannered and very few people actually refuse to pay the bill after consuming electricity. The State Grid will stop providing electricity for those who refuse to pay the bill.

If electric bill payments are changed to use the recharge model, will you be able to get used to this new payment model? The job of electricity meter readers may gradually disappear (many cities no longer have electricity meter readers).

Even if electric bill payments do not change to the recharge model, an electricity grid system is responsible for many other things, such as real-time electricity consumption monitoring by household, community, district, and administrative region, acceleration monitoring, data board analysis, data prediction, and emergency response.

How should the system be designed?

The following section uses a real-time electricity meter monitoring case to show how to design this system and perform stress testing.

Electricity consumption data needs to be collected from each electricity meter every five minutes.

Record electricity consumption details (for future data board analysis) and update users' latest electricity consumption data (to determine if a user has exceeded the consumption limit or if a user has stolen electricity).

This may involve the following tables:

1. Electricity consumption details (readings uploaded each time used for purposes like reconciliation and analysis)

Meter ID, time, and reading

2. Real-time electricity consumption (real-time status, alerting rules, and response)

Meter ID, last time, current time, reading at the beginning of a day, reading at the beginning of a month, reading at the beginning of a year, last reading, current reading

3. Alerting rule table

For example, an alert is triggered when the increment within five minutes exceeds a specific value

An alert is triggered when the electricity consumption within five minutes is a negative value.

4. Abnormal electricity consumption details (details of exception alerts)

5. User account (balance, package, etc.)

Meter ID, balance, electricity plan ID, creation time, update time

6. Metadata (electricity plan information)

Electricity plan ID, electricity consumption in peak or non-peak hours, pricing, and other information

7. Users' recharge records

1. Analysis

Assume that electricity consumption data is collected every five minutes from each of 1 billion electricity meters throughout China. In this case, 288 billion data records will be created in one day and 105 trillion in one year. The data volume in this scenario is very large.

Of course, details are generally not collected in such a large range. Instead, details are collected at the prefectural city level (tens of millions of households). Thus, around 2.88 billion records are created in one day and around one trillion in one year.

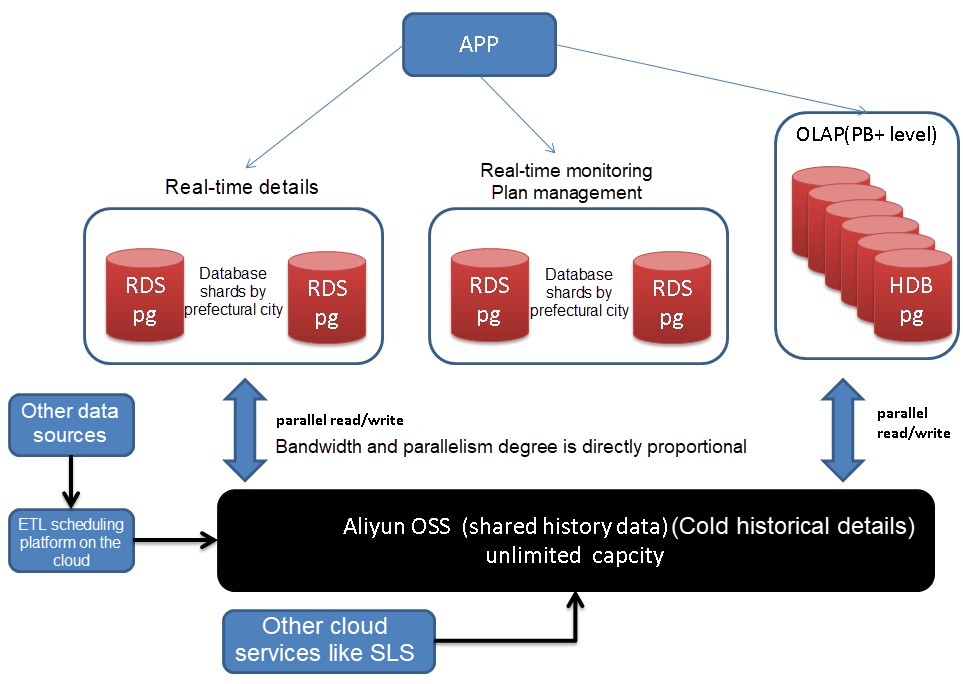

Alibaba Cloud HybridDB for PostgreSQL is a distributed MPP database targeting analytics business that may involve PB-scale data. Alibaba Cloud HybridDB for PostgreSQL provides powerful computing, storage, and OLAP features (such as GIS, JSON, analytic functions, windows, column storage, partitioning, horizontal scaling).

2. Real-time alerting

Around tens of millions of data records are created every five minutes in a single prefectural city. A total of around 2.88 billion records are created each day.

Alibaba Cloud RDS for PostgreSQL allows hundreds of billions of data records to be processed each day. Therefore, it can easily meet the need to process 2.88 billion records.

PostgreSQL is applied in many fields around the globe, ranging from military products and research projects, to commercial and civilian fields (such as astronomy, New Retail, Cainiao Network, Amap, StreamCompute, spatial–temporal search, data mining by any dimension, JSON, graph search, text search, search for similar images, and financial risk control).

3. Electricity plan management

Electricity plan management is a typical OLTP system. Alibaba Cloud RDS for RDS PostgreSQL can support electricity plan management perfectly.

Design and performance metric reference:

1. Write detailed data into RDS for PostgreSQL in real time. A single instance of RDS for PostgreSQL can write around 2 million rows per second and around 170 billion rows each day.

Create partitioned tables by hour.

RDS for PostgreSQL supports reading data from and writing data to OSS external tables, as well as writing data to OSS in parallel.

2. Use HybridDB for PostgreSQL for data analysis.

HybridDB for PostgreSQL supports analysis of massive amounts of data (PB-scale data) and allows reading/writing data from/to OSS in parallel. HybridDB for PostgreSQL supports features such as parallel computing, column storage, compression, and analysis.

In Alibaba, HybridDB for PostgreSQL is applied in many cases, most of which have hundreds of TB of data.

3. The scheduling system imports detailed data from RDS for PostgreSQL to HDB for PostgreSQL by way of OSS. Data is processed in parallel throughout the process and no performance bottlenecks are encountered.

4. Use RDS for PostgreSQL for real-time monitoring and alerting. A single instance of RDS for PostgreSQL allows around 300,000 records to be updated per second (bulk update has better performance) and around 26 billion records each day. Therefore, RDS for PostgreSQL can meet the requirements of electricity consumption monitoring in municipalities and prefectural cities perfectly.

5. Use RDS for PostgreSQL for other plan-related OLTP requirements.

6. Use OSS to store historical details, for example, details of one year ago.

If OSS is used, RDS for PostgreSQL and HybridDB for PostgreSQL can use external OSS tables to access this historical data in a transparent manner. OSS can also provide support for analyzing and querying details.

1. Scaling of detail business

Perform database sharding by prefectural city. For a city with an ultra-large number of households, you can further split data by electricity meter hash. (A single instance of RDS for PostgreSQL has already provided powerful computing power. Performance metrics are provided in a later section. The probability of horizontal scaling is very low.)

2. Scaling of analytic business

HybridDB for PostgreSQL itself is an MPP database that supports the computation of PB-scale data. With the separation of OSS and computation, nearly unlimited storage is supported.

3. Scaling of real-time monitoring business

Perform database sharding by prefectural city. For a city with a ultra-large number of households, you can further split data by electricity meter hash. (A single instance of RDS for PostgreSQL has already provided powerful computing power. Performance metrics are provided in a later section. The probability of horizontal scaling is very low.)

4. Scaling of other plan-related OLTP business

Because this business volume is very small, it is not necessary to take scaling into account.

Detail business only involves in writing data and therefore is very simple. I have written many similar use cases. The data in the following cases is provided for your reference.

1. Gateway data of service providers and financial data is very large in size, and needs to be inserted quickly into a big database for persistence.

Up to 5.06 million rows can be inserted per second (1.78 GB/s, 437.2 billion rows and 154 TB each day).

2. PostgreSQL on ECS + Disks

Up to 1.73 million rows can be inserted per second (150 billion rows each day).

Data is written from an OLTP system to an OLAP system using the scheduling system to implement data analysis in the OLAP system.

PostgreSQL 10, which will be released soon, contains the StreamCompute plug-in. In addition to traditional methods, the StreamCompute plug-in in PostgreSQL can also be used to implement real-time monitoring.

This article also describes the traditional method of implementing real-time monitoring. After all, many users still adopt the traditional method.

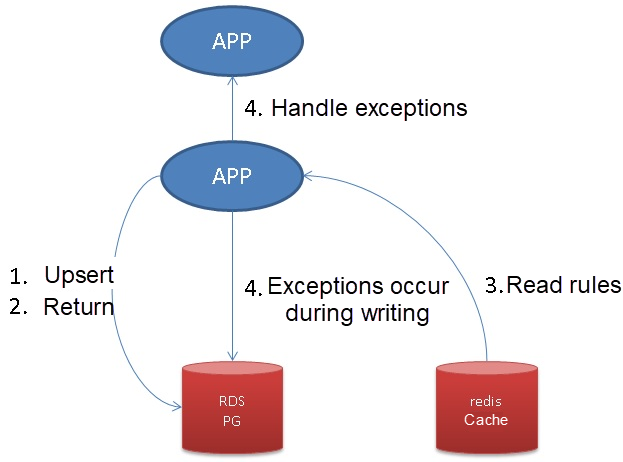

This demo involves three tables:

1. Real-time electricity consumption (real-time status, alerting rules, and response)

Meter ID, last time, current time, reading at the beginning of a day, reading at the beginning of a month, reading at the beginning of a year, last reading, current reading

insert returning

2. Alerting rule table

For example, an alert is triggered when the increment within five minutes exceeds a specific value.

An alert is triggered when the electricity consumption within five minutes is a negative value.

select, CACHE

3. Abnormal electricity consumption details (details of exception alerting)

insert

The procedure is as follows:

We take the most stressful scenario, for example — update and return electricity meter data in real time.

create table tbl_real_data (

uid int primary key, -- Electricity meter ID

last_time timestamp, -- Last update time

curr_time timestamp, -- Current time

d1 float4, -- Reading at the beginning of a day, provided by business

d2 float4, -- Reading at the beginning of a month, provided by business

d3 float4, -- Reading at the beginning of a year, provided by business

d4 float4, -- Last reading

d5 float4 -- Current reading, provided by business

); Design a query, update the data, and return data after the update

insert into tbl_real_data values ($1,$2,$3,$4,$5,$6,$7,$8)

on conflict (uid) do

update set

last_time=tbl_real_data.curr_time,

curr_time=excluded.curr_time,

d1=coalesce(excluded.d1,tbl_real_data.d1),

d2=coalesce(excluded.d2,tbl_real_data.d2),

d3=coalesce(excluded.d3,tbl_real_data.d3),

d4=tbl_real_data.d5,

d5=excluded.d5

returning *; For example, insert real-time electricity meter data and return status before and after the update respectively. The business issues real alerting and responds accordingly based on the returned data and configured rules.

insert into tbl_real_data values (

1, -- Electricity meter ID

null, -- Last update time

now(), -- Current time

2, -- Reading at the beginning of a day, provided by business at the time of flipping

3, -- Reading at the beginning of a month, provided by business at the time of flipping

4, -- Reading at the beginning of a year, provided by business at the time of flipping

null, -- Last reading

6 -- Current reading

)

on conflict (uid) do

update set

last_time=tbl_real_data.curr_time,

curr_time=excluded.curr_time,

d1=coalesce(excluded.d1,tbl_real_data.d1),

d2=coalesce(excluded.d2,tbl_real_data.d2),

d3=coalesce(excluded.d3,tbl_real_data.d3),

d4=tbl_real_data.d5,

d5=excluded.d5

returning *;

uid | last_time | curr_time | d1 | d2 | d3 | d4 | d5

-----+-----------+----------------------------+----+----+----+----+----

1 | | 2017-08-26 12:19:54.486801 | 2 | 3 | 4 | | 6

(1 row)

insert into tbl_real_data values (

1, -- Electricity meter ID

null, -- Last update time

now(), -- Current time

null, -- Reading at the beginning of a day, provided by business at the time of flipping

null, -- Reading at the beginning of a month, provided by business at the time of flipping

null, -- Reading at the beginning of a year, provided by business at the time of flipping

null, -- Last reading

8 -- Current reading

)

on conflict (uid) do

update set

last_time=tbl_real_data.curr_time,

curr_time=excluded.curr_time,

d1=coalesce(excluded.d1,tbl_real_data.d1),

d2=coalesce(excluded.d2,tbl_real_data.d2),

d3=coalesce(excluded.d3,tbl_real_data.d3),

d4=tbl_real_data.d5,

d5=excluded.d5

returning *;

uid | last_time | curr_time | d1 | d2 | d3 | d4 | d5

-----+----------------------------+----------------------------+----+----+----+----+----

1 | 2017-08-26 12:19:54.486801 | 2017-08-26 12:20:01.452364 | 2 | 3 | 4 | 6 | 8

(1 row)

The last status is returned. Business implements real-time alerting based on the returned status information and configured rules. The following stress testing script randomly performs an upsert on 1,000 households' electricity meter data and returns the final status and the last status.

vi test.sql

\set uid random(1,10000000)

\set d5 random(1,100000)

insert into tbl_real_data (uid,curr_time,d5) values (:uid, now(), :d5) on conflict (uid) do update set last_time=tbl_real_data.curr_time, curr_time=excluded.curr_time, d1=coalesce(excluded.d1,tbl_real_data.d1), d2=coalesce(excluded.d2,tbl_real_data.d2), d3=coalesce(excluded.d3,tbl_real_data.d3), d4=tbl_real_data.d5, d5=excluded.d5 returning *; Results

pgbench -M prepared -n -r -P 1 -f ./test.sql -c 64 -j 64 -T 120

tps = 291094.784574 (including connections establishing)

tps = 291123.921221 (excluding connections establishing) 1. Detail business (performance metrics for a single database)

Up to 1.73 million rows of details can be inserted per second (150 billion rows each day). This performance can meet the need to monitor electricity meter data in a large prefectural city with 470 million households.

(Electricity meter readings are uploaded every five minutes and proper methods need to be adopted to prevent broadcast storm, for example, by using a random beginning time.)

2. Analytic business

PB-scale data analysis.

3. Real-time monitoring business (performance metrics for a single database)

Up to 290,000 rows can be monitored per second (25 billion rows each day). It can meet the data monitoring requirements of a large prefectural city with 80 million households.

(Electricity meter readings are uploaded every five minutes and proper methods need to be adopted to prevent broadcast storm, for example, by using a random beginning time.)

City population statistics (from the Internet ):

Chongqing: 30 million; Beijing: 23 million; Tokyo: 37 million Even if the proportion of population to the number of electricity meters is 3:1, the total number of the electricity meters in Tokyo is over 10 million. A single instance of PostgreSQL is far from enough to meet the electricity data monitoring requirement in the most populous city in the world.

An electric grid system is vital to people's livelihood. Currently, we generally pay the electricity bill on a monthly basis. However, we may pre-charge electricity or water fees in the future, similar to how we pay our cellphone bills and natural gas bills.

In fact, pricing is not very simple, and involves many aspects such as electricity pricing in peak and non-peak hours, tiered electricity pricing, commercial electricity rate, and industrial electricity rate. If electricity plans are available in the future, electricity pricing will become more complicated.

However, you do not have to worry about these issues. Cloud products such as Alibaba Cloud RDS for PostgreSQL, HybridDB for PostgreSQL, OSS, and Redis can meet the requirements of electricity grid systems perfectly by implementing a collection of electric consumption details, real-time analysis, OLAP analysis of massive amounts of data over the Internet, and OLTP management of electricity plans.

Amuthan Nallathambi - October 12, 2023

ApsaraDB - April 28, 2020

Hologres - July 24, 2020

Alibaba Cloud Serverless - January 3, 2024

ApsaraDB - June 7, 2022

Alibaba Clouder - May 25, 2018

AnalyticDB for PostgreSQL

AnalyticDB for PostgreSQL

An online MPP warehousing service based on the Greenplum Database open source program

Learn More Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn MoreMore Posts by digoal