By Jiang Xiaowei (Liangzai), Alibaba Researcher

Depending on their focus, traditional databases can be divided into online transaction processing (OLTP) systems and online analytical processing (OLAP) systems. With the development of the Internet, data volume has increased exponentially, so standalone databases can no longer meet business needs. In analytics especially, a single query may need to process a large part or all of the data, so there was an urgent need to relieve the pressure caused by massive data processing. This contributed to the Hadoop-based big data revolution of the past decade, which addressed the need for massive data analysis. Meanwhile, many distributed database products have emerged to cope with the growth in data volume in OLTP scenarios.

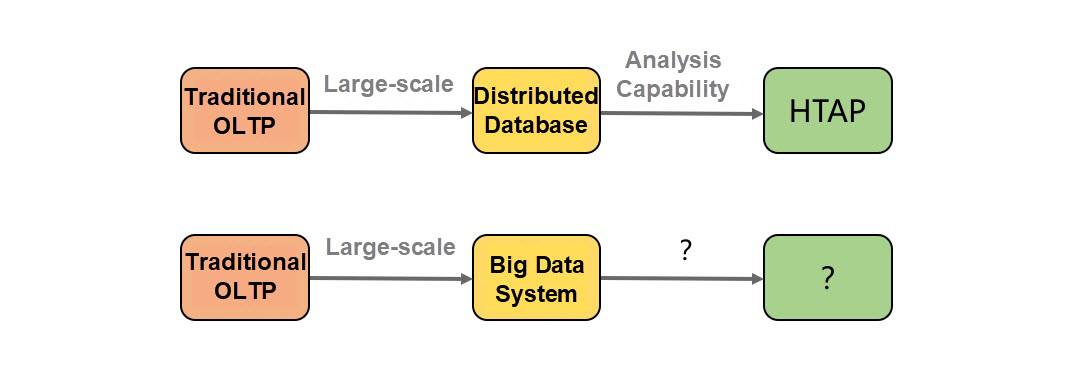

To analyze data in an OLTP system, we usually synchronize the data to an OLAP system on a regular basis. By using two separate systems, this architecture ensures that analytical queries do not affect online transactions. However, periodic synchronization means that analysis results are not based on the latest data, and this latency prevents us from making timely business decisions. To solve this problem, the Hybrid Transactional/Analytical Processing (HTAP) architecture has emerged in recent years. It allows us to analyze the data in OLTP databases directly, thereby ensuring the timeliness of data analysis. Analysis is no longer unique to traditional OLAP systems or big data systems. Naturally, you may ask, "Now that HTAP can analyze data, will it replace the big data system? What is the next stop for big data?"

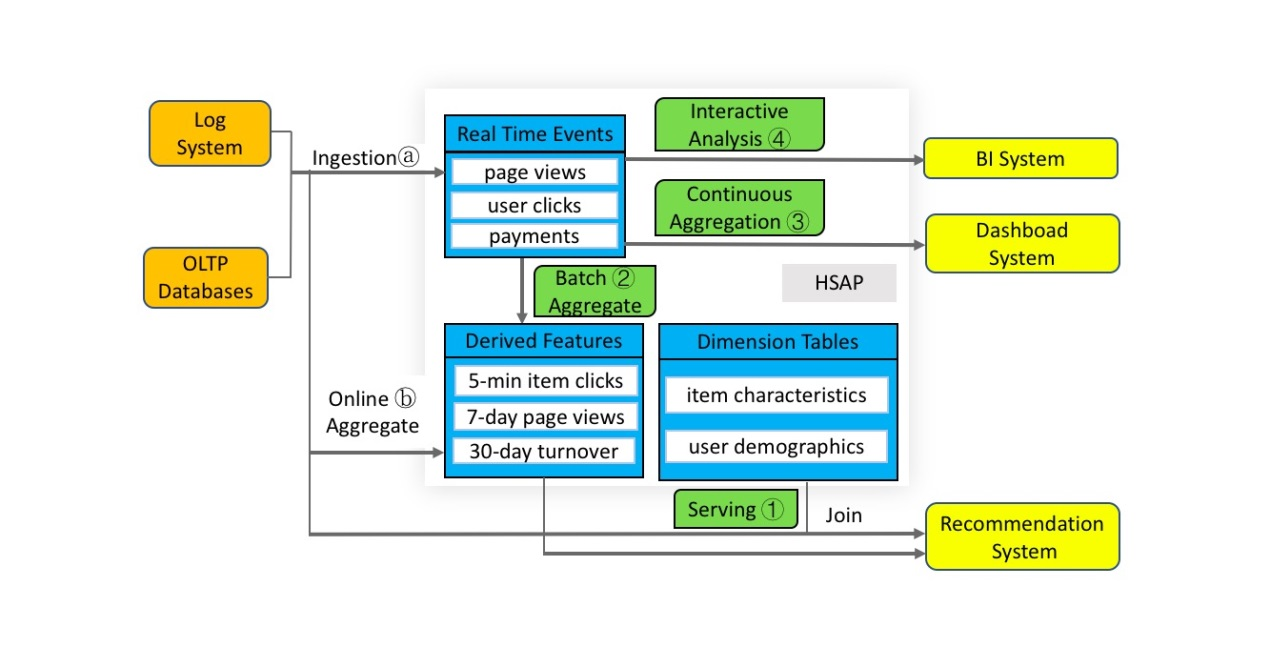

To answer this question, we analyzed typical application scenarios of big data systems by taking a recommendation system as an example. When the recommendation system works, a shopping app can show you exactly what you want to buy and a short video app can play your favorite music. An advanced recommendation system aims to make recommendations according to your real-time behaviors. Every interaction between you and the system will immediately optimize the next experience. To support this system, the back-end big data technology stack has evolved into a fairly complex and diverse system. The following figure shows a big data technology stack that supports a real-time recommendation system.

To make high-quality personalized recommendations in real-time, the recommendation system relies heavily on real-time features and continuous model updates.

Real-time features can be classified into two categories:

The data is also used to generate samples for real-time and offline machine learning. The trained models are verified and continuously updated into the recommendation system.

The preceding part describes the core of an advanced recommendation system, but this is only a small part of the whole system. A complete recommendation system also requires real-time model monitoring, verification, analysis, and tuning. This means that you must use a real-time dashboard to view the results of A/B testing (3), and use interactive analysis (4) to perform BI analysis and to refine and optimize the model. Moreover, operations staff also run various complex queries to gain insights into business progress and conduct targeted marketing through methods such as tagging people or items.

This example shows a complex but typical big data scenario, from ingestion (a) to online aggregate (b), from serving (1), continuous aggregation (3), to interactive analysis (4), and batch aggregate (2). Such complex scenarios have diverse requirements for big data systems. During the process of building these systems, we have seen two new trends.

Existing solutions address the need for real-time hybrid serving/analytical processing through a portfolio of products. For example, after data is aggregated online in real time through Apache Flink, the aggregated data is stored in products that provide multi-dimensional analysis, such as Apache Druid, and data services are provided through Apache HBase. This stovepipe development model inevitably produces data silos, which cause unnecessary data duplication. Complex data synchronization across products also makes data consistency and security a challenge. This complexity makes it difficult for application development to respond quickly to new requirements. As a result, business iteration slows down, and development and operations and maintenance (O&M) costs increase substantially.

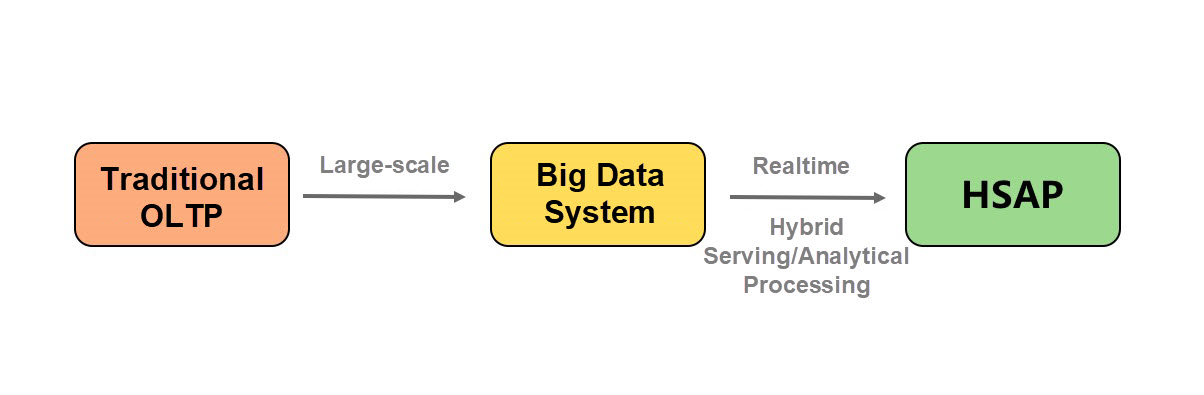

We believe that real-time serving and analysis should be integrated through a unified Hybrid Serving/Analytical Processing (HSAP) system. With such a system, applications can be developed without having to work around the problems and limitations of each individual product. In this way, we can greatly simplify the business architecture and improve development and O&M efficiency. Such a unified system avoids unnecessary data duplication, thereby saving costs. Meanwhile, this architecture allows the system to respond within seconds or less, so that business decisions can be made in real time and data can deliver greater business value.

Although a distributed HTAP system can analyze data in real-time, it cannot solve the problems of big data.

First, the data synchronized from transaction systems is only a small portion of the data that the real-time recommendation system needs to process. Most of the other data comes from non-transaction systems, such as logs (users often have dozens or even hundreds of views before each purchase), and most of the analysis is performed on this non-transaction data. The HTAP system does not hold this data and therefore cannot analyze it.

Then, can we write the non-transaction data into the HTAP system for analysis? Let's compare the data writing modes of HTAP and HSAP systems. The cornerstone and advantage of an HTAP system is its support for fine-grained distributed transactions. Transaction data is often written into the HTAP system in the form of many small distributed transactions. However, data from sources such as logs does not have the semantics of fine-grained distributed transactions, so importing non-transaction data into the HTAP system incurs unnecessary overhead.

In contrast, the HSAP system does not require high-frequency small distributed transactions. Generally, you either write a huge number of individual records into an HSAP system in real time or write distributed batch data into the system at a relatively low frequency. Therefore, the HSAP system can be designed to be more cost-effective and to avoid the unnecessary overhead of importing non-transaction data into an HTAP system. Suppose we ignore this overhead and write all the data into the HTAP system at any cost. Would that solve the problem? The answer is still "no."

Good support for OLTP scenarios is a prerequisite for HTAP systems. For this purpose, an HTAP system usually stores data in row store. However, analytical queries on row-store data are far less efficient, often by an order of magnitude, than on column-store data. Being able to analyze data is not the same as being able to analyze it efficiently. To achieve efficient analysis, an HTAP system must copy the massive non-transaction data to column store, which inevitably incurs substantial cost. It is better to copy the small amount of transaction data to an HSAP system at a lower cost, which also better avoids affecting the OLTP system. Therefore, we think that HTAP and HSAP will complement each other and lead the direction of the database and big data fields, respectively.
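To make the row-store versus column-store distinction concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions: the log records, the field names (`user_id`, `item_id`, `dwell_ms`), and the helper functions are hypothetical, and real storage engines add compression, vectorized execution, and on-disk layouts that this toy omits.

```python
from array import array

# Hypothetical log records: (user_id, item_id, dwell_ms).
rows = [(1, 10, 300), (2, 11, 450), (1, 12, 150), (3, 10, 600)]
FIELDS = ("user_id", "item_id", "dwell_ms")

# Row store: each record is kept together, which suits point reads and writes.
row_store = list(rows)

# Column store: each attribute lives in its own contiguous typed array.
column_store = {
    name: array("q", (record[i] for record in rows))
    for i, name in enumerate(FIELDS)
}


def total_dwell_row(store):
    # The scan walks every full record even though only one field is needed.
    return sum(record[2] for record in store)


def total_dwell_column(store):
    # The scan reads only the single column that the query needs.
    return sum(store["dwell_ms"])


def fetch_record_column(store, index):
    # Reassembling one record touches every column, the reverse trade-off.
    return tuple(store[name][index] for name in FIELDS)


row_total = total_dwell_row(row_store)
column_total = total_dwell_column(column_store)
second_record = fetch_record_column(column_store, 1)
```

Both layouts return the same answer. The difference is how much data each access pattern has to touch: the aggregate reads one column in the columnar layout, while fetching a whole record is cheaper in the row layout.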

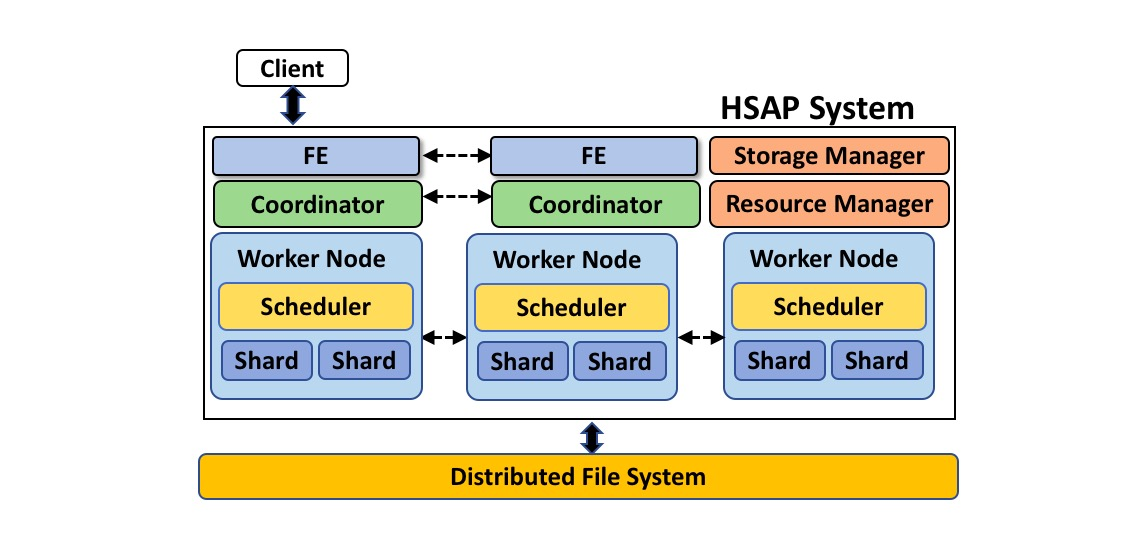

As a brand-new architecture, HSAP faces challenges that differ from those of existing big data systems and traditional OLAP systems.

To address these challenges, we believe that a typical HSAP system can adopt an architecture similar to that shown in the preceding figure.

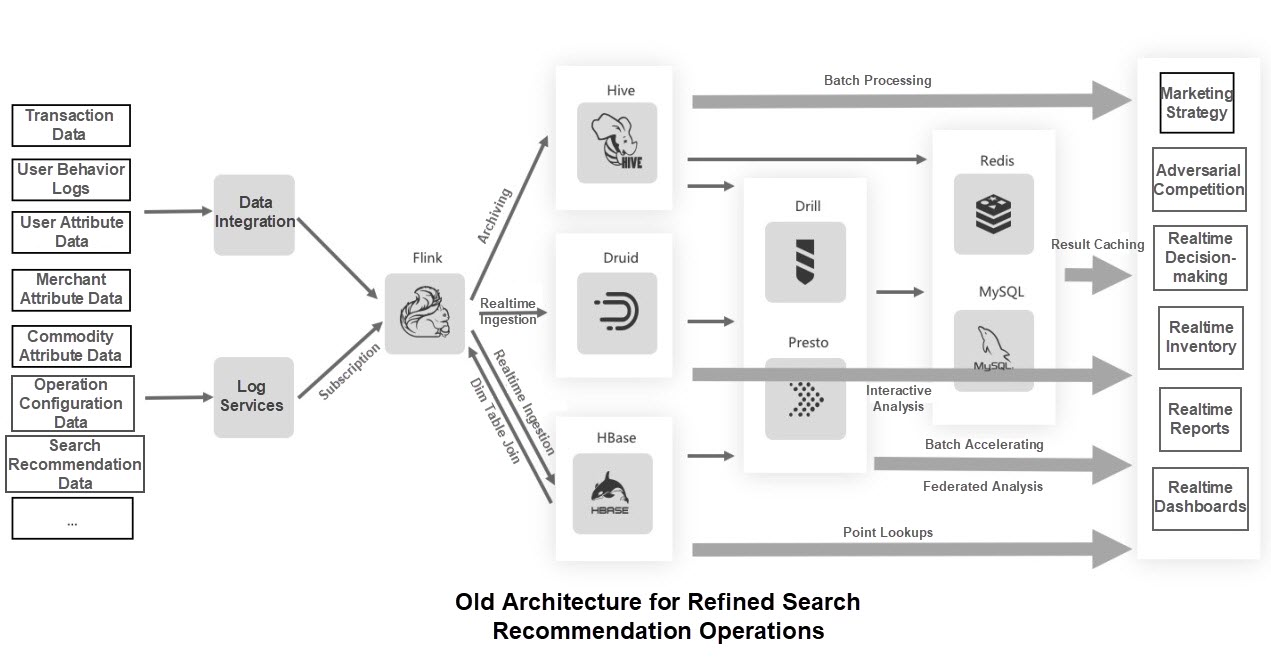

As an example, let's look at the refined operation business of Alibaba's search and recommendation. The following figure shows the system architecture before we adopted HSAP.

We must run a series of storage and computing engines (such as HBase, Druid, Hive, Drill, and Redis) to meet business needs. In addition, data synchronization tasks are required to maintain approximate synchronization between multiple storage systems. This business architecture is complex and the development is time-consuming.

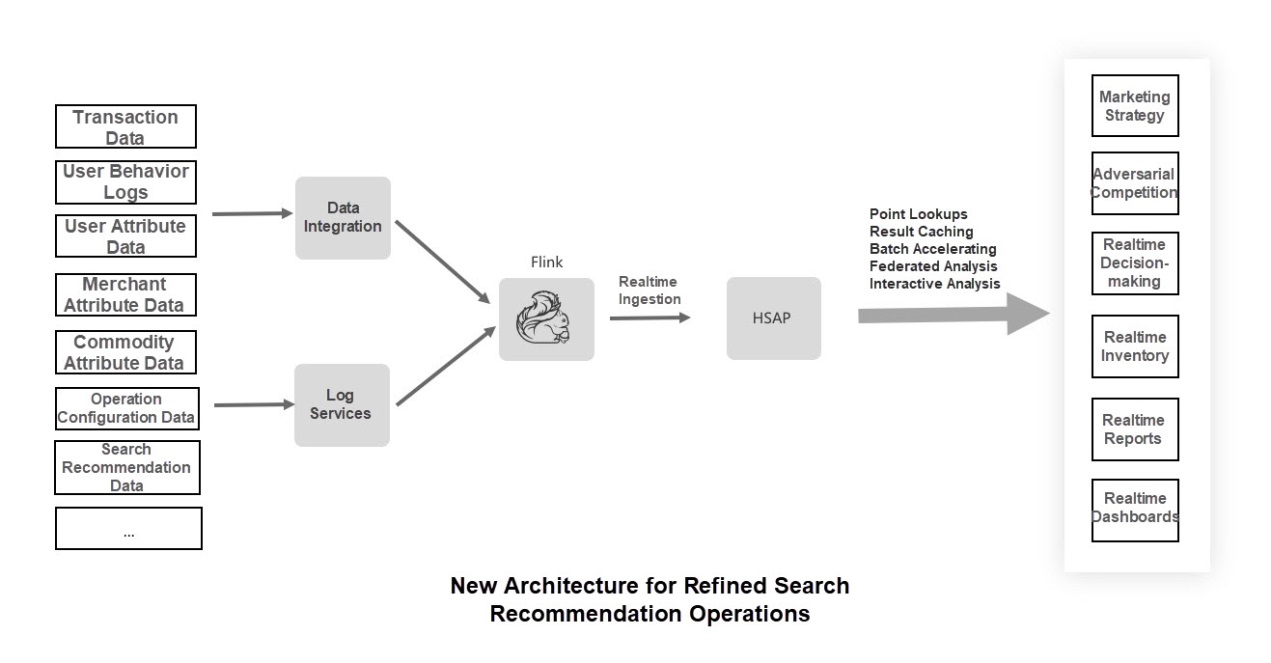

We upgraded this business by using the HSAP system during the Double 11 Global Shopping Festival in 2019, and the new architecture was greatly simplified. User, commodity, and merchant attribute data, together with massive user behavior data, are cleansed in real time and offline before they are imported into the HSAP system. The HSAP system undertakes query and analysis services such as real-time dashboards, real-time reporting, effect tracking, and real-time data applications. Real-time dashboards, real-time sales forecasts, real-time inventory monitoring, and real-time BI reports track business progress as it happens. This allows you to follow business growth and track algorithm results, and thereby make efficient decisions. Data products such as real-time tagging, real-time profiling, competition analysis, people and product selection, and benefit delivery facilitate refined operation and decision-making. The real-time data interface service supports algorithm control, inventory monitoring, and early warning services. A complete HSAP system enables reuse and data sharing across all channels and links, addressing data analysis and query requirements from different business perspectives, including operation, products, algorithms, development, analysis, and decision-making.

With unified real-time storage, the HSAP architecture provides a wide range of data query and application services without data duplication, such as simple queries, OLAP analysis, and online data services. In this way, the access requirements of data applications are met. This new architecture greatly reduces business complexity, allowing us to respond quickly to new business needs. Its response times of seconds or less enable more timely and efficient decision-making, allowing data to create greater business value.
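As a toy illustration of serving and analysis sharing one copy of data, the following Python sketch answers both a point lookup and a grouped aggregate from the same in-memory store. The `UnifiedStore` class, its methods, and the field names are hypothetical and exist only for this example. They are not the API of Hologres or of any real HSAP system, which would be distributed and use specialized storage formats.

```python
from collections import defaultdict


class UnifiedStore:
    """Hypothetical single-copy store used for both serving and analysis."""

    def __init__(self):
        self._by_key = {}

    def upsert(self, key, record):
        # Real-time write path: the latest record per key replaces the old one.
        self._by_key[key] = record

    def get(self, key):
        # Serving path: point lookup by key, None if the key is absent.
        return self._by_key.get(key)

    def aggregate(self, group_field, value_field):
        # Analysis path: grouped sum over the same data, with no second copy.
        totals = defaultdict(int)
        for record in self._by_key.values():
            totals[record[group_field]] += record[value_field]
        return dict(totals)


store = UnifiedStore()
store.upsert("u1", {"city": "Hangzhou", "clicks": 3})
store.upsert("u2", {"city": "Beijing", "clicks": 5})
store.upsert("u3", {"city": "Hangzhou", "clicks": 4})
store.upsert("u1", {"city": "Hangzhou", "clicks": 6})  # newer value for u1

profile = store.get("u1")
clicks_by_city = store.aggregate("city", "clicks")
```

Because both paths read the same records, the aggregate immediately reflects the updated value for `u1`, with no synchronization job between a serving store and an analysis store.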

For more information, please join the DingTalk group of Hologres.
