
By Wanqing

The transaction team had never had any full-time test developers; all our work was self-tested by R&D engineers. At that time, the company did not have strong automated testing capabilities, and almost all tests were manual. I believed dedicated test resources were essential at that stage, so I pushed hard to set up a test team to protect the release quality of the order system.

Some interesting things happened along the way. According to JN, the framework team had no test developers, yet they never seemed to cause any errors. With perhaps a bit too much pride, they believed that engineers should guarantee the quality of their own code and that they could do no wrong. I thought this approach was a bit idealistic. R&D engineers sometimes cannot easily spot their own mistakes, and having another group check the code from a different perspective would further improve quality assurance. After all, this system was extremely important and risky. However, I did not think we should build a test team that only served to point out errors.

After discussing the matter with JN at length, we defined the responsibilities of the test team: R&D engineers were responsible for the quality of their own code, and on that basis, test developers mainly provided tooling to reduce the cost of testing and to safeguard test results as far as possible.

In February or March 2016, the transaction team welcomed its first test developer. Around April, we had a total of four test developers and I was responsible for the whole test team.

The first thing I did was to set up a system for automated integration testing.

I selected Robot Framework as the technology stack, mainly because most team members still used Python at the time, although the test developers could code in both Python and Java. Another reason was that switching language stacks later would be cheap: Robot Framework has its own keyword-driven testing (KDT) syntax, and the system-specific libraries could be extracted from it.
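For readers unfamiliar with keyword-driven testing, here is a minimal sketch of a Robot Framework keyword library written in Python. Robot Framework exposes the public methods of a library class as keywords, so `create_order` can be called as `Create Order`. The class, its keywords, and the in-memory `_FakeOrderClient` that stands in for an SOA service client are hypothetical; the team's actual libraries were not published.

```python
class _FakeOrderClient:
    """In-memory stand-in for an SOA order service client (hypothetical)."""

    def __init__(self):
        self._orders = {}

    def create(self, user_id, amount):
        order_id = f"o{len(self._orders) + 1}"
        self._orders[order_id] = {"user_id": user_id, "amount": amount, "status": "CREATED"}
        return order_id

    def status(self, order_id):
        return self._orders[order_id]["status"]


class OrderKeywords:
    """Hypothetical Robot Framework keyword library for order actions.

    Public methods become keywords: ``create_order`` -> ``Create Order``.
    """

    ROBOT_LIBRARY_SCOPE = "SUITE"  # one library instance per test suite

    def __init__(self, client=None):
        self._client = client or _FakeOrderClient()

    def create_order(self, user_id, amount):
        """Create an order and return its id; ``amount`` may arrive as a string."""
        return self._client.create(user_id=user_id, amount=float(amount))

    def order_status_should_be(self, order_id, expected):
        """Fail the current test if the order's status differs from ``expected``."""
        actual = self._client.status(order_id)
        if actual != expected:
            raise AssertionError(f"order {order_id}: expected {expected!r}, got {actual!r}")
```

Once the library is imported with the `Library` setting, a test step could read `${id}=    Create Order    u1    25.5`. Robot passes arguments as strings, which is why `amount` is converted explicitly.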

In addition to developing test process specifications and standards, I started to build a platform to manage test cases, test statuses, and test reports.

I named this system WeBot.

The system used Robot Framework as the basis for executing test cases.

The system used Jenkins to manage execution plans and to schedule test cases to run in different environments.

We built a simple Django-based management UI to manage test cases and test reports, allowing test cases to be freely assembled as units. Engineers familiar with Java can think of each test case as an SPI implementation.

We also introduced Docker to deploy auxiliary test environments, though this was rarely used because Ele.me's production system did not run on Docker at the time; it was containerized around 2017.

I was quite happy working on the test environment at that time because I liked challenging myself.

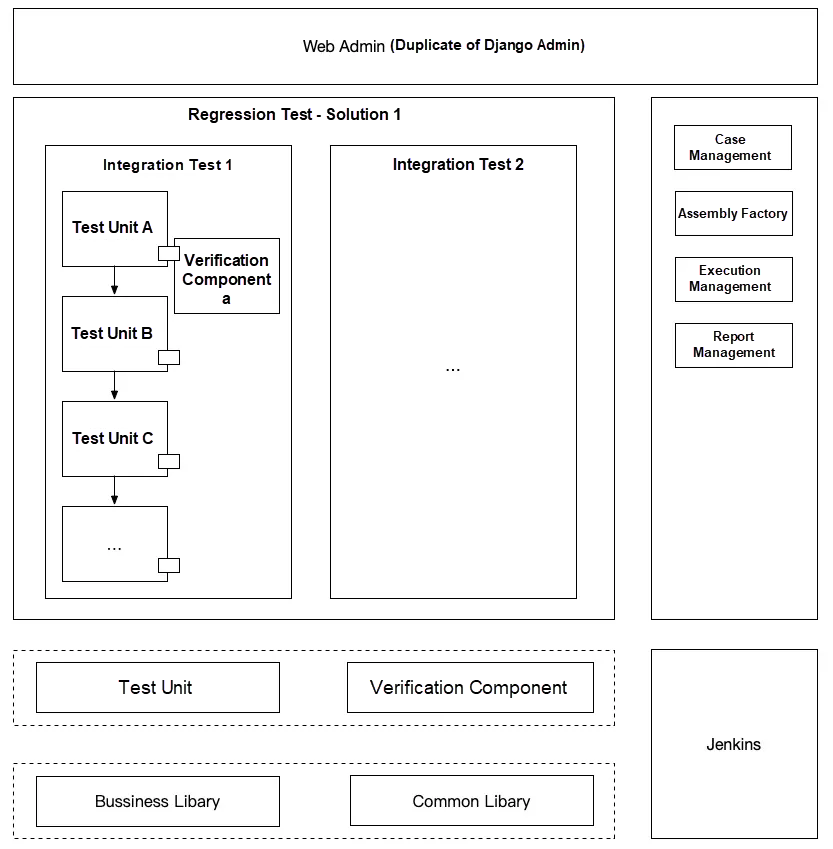

The following figure shows the general idea.

Test unit: The business library is an encapsulation layer over the SOA service APIs in Robot Framework. Each test unit calls one or more API operations to perform an atomic business action.

Verification component: The verification component verifies returned values and can additionally be configured to verify data in Redis and the database.

Integration test: An integration test case consists of multiple test units orchestrated in series. After each test unit runs, the input and output parameters of its request are available anywhere within the scope of the integration test case.

Regression test: Multiple integration tests can be selected and configured to run together as a regression test plan.
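These four layers can be modeled roughly as follows. This is a hypothetical, dependency-free sketch rather than WeBot's code: a `BusinessUnit` plays the role of a test unit with an optional verification callback, an `IntegrationTest` runs units in series and records each unit's input and output so later units can read them, and a `RegressionPlan` runs a selection of integration tests and reports pass or fail for each.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional

# Maps a unit's name to the {"input": ..., "output": ...} it recorded.
Context = Dict[str, Dict[str, Any]]


@dataclass
class BusinessUnit:
    """A "test unit": one atomic business action built on one or more API calls."""
    name: str
    action: Callable[[dict], dict]           # performs the API call(s)
    build_params: Callable[[Context], dict]  # may read earlier units' inputs/outputs
    verify: Optional[Callable[[dict], bool]] = None  # verification component


@dataclass
class IntegrationTest:
    name: str
    units: List[BusinessUnit]

    def run(self) -> Context:
        ctx: Context = {}
        for unit in self.units:  # units run in series
            params = unit.build_params(ctx)
            result = unit.action(params)
            if unit.verify is not None and not unit.verify(result):
                raise AssertionError(f"{self.name}: verification failed in {unit.name}")
            ctx[unit.name] = {"input": params, "output": result}
        return ctx


@dataclass
class RegressionPlan:
    name: str
    tests: List[IntegrationTest] = field(default_factory=list)

    def run(self) -> Dict[str, str]:
        report: Dict[str, str] = {}
        for test in self.tests:
            try:
                test.run()
                report[test.name] = "PASS"
            except AssertionError as exc:
                report[test.name] = f"FAIL: {exc}"
        return report


# Example with an in-memory fake order service (hypothetical).
orders: Dict[str, str] = {}

def create_order(params: dict) -> dict:
    order_id = f"o{len(orders) + 1}"
    orders[order_id] = "CREATED"
    return {"order_id": order_id}

def pay_order(params: dict) -> dict:
    orders[params["order_id"]] = "PAID"
    return {"order_id": params["order_id"], "status": "PAID"}

create = BusinessUnit("create", create_order, lambda ctx: {"user_id": "u1"},
                      verify=lambda r: "order_id" in r)
pay = BusinessUnit("pay", pay_order,
                   lambda ctx: {"order_id": ctx["create"]["output"]["order_id"]},
                   verify=lambda r: r["status"] == "PAID")
plan = RegressionPlan("nightly", [IntegrationTest("create_then_pay", [create, pay])])
print(plan.run())  # {'create_then_pay': 'PASS'}
```

Passing earlier results through a shared context is what lets the `pay` unit reuse the order id produced by `create` without either unit knowing about the other.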

In this way, we achieved reuse at multiple layers and granularities. From each integration test and regression test plan, the backend generated a corresponding Robot file.
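As an illustration of that generation step, the sketch below renders test cases into Robot Framework's plain-text format: a `*** Settings ***` section, a `*** Test Cases ***` section, and cells separated by two or more spaces. The function name and the keyword names in the example are hypothetical; WeBot's actual generator was not described.

```python
from typing import List, Sequence, Tuple

SEP = "    "  # Robot Framework's plain-text format separates cells with 2+ spaces

# A case is (name, steps); each step is a sequence of cells:
# an optional "${var}=" assignment, the keyword name, then its arguments.
CaseSpec = Tuple[str, List[Sequence[str]]]


def render_robot_file(libraries: List[str], cases: List[CaseSpec]) -> str:
    """Render keyword libraries and test cases as the body of a .robot file."""
    lines = ["*** Settings ***"]
    lines += [f"Library{SEP}{lib}" for lib in libraries]
    lines += ["", "*** Test Cases ***"]
    for name, steps in cases:
        lines.append(name)  # test case names start at column 0
        for cells in steps:
            if any("  " in cell for cell in cells):
                # Two consecutive spaces would be parsed as a cell separator.
                raise ValueError(f"cell contains a separator: {cells!r}")
            lines.append(SEP + SEP.join(cells))  # steps are indented
        lines.append("")
    return "\n".join(lines)


print(render_robot_file(
    ["OrderKeywords"],
    [("Create Then Pay", [
        ["${order_id}=", "Create Order", "u1", "25.5"],
        ["Pay Order", "${order_id}"],
        ["Order Status Should Be", "${order_id}", "PAID"],
    ])],
))
```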

This project ultimately failed. The main reasons were that the test developers lacked development skills and that the UI required a great deal of frontend work. At first, I reused xadmin, an extended Django admin UI, and made simple extensions to it. However, our limited manpower did not allow me to invest much effort in the UI. As a result, the built-in frontend components provided a poor user experience, which hurt efficiency, and by May we had basically given up on further development.

However, the attempt led to some other achievements. In effect, we abandoned managing test cases through the platform but kept the Jenkins + Robot Framework combination. We hosted some of our integration test cases in Git. The R&D team deployed their development branches to a designated environment and pulled the test cases every morning for execution. Each morning, the R&D team checked the automated test reports to verify that the content about to be released was correct, and they could also run the test cases manually. Wenwu and Xiaodong contributed a lot of time and energy to this work.
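A morning job like this boils down to two commands. The sketch below only builds them: `git pull --ff-only` to fetch the latest test cases, then a `robot` invocation using real Robot Framework options (`--outputdir`, `--variable`, `--include`). The repository path, the `ENV_HOST` variable name, and the `smoke` tag are hypothetical.

```python
import shlex
from datetime import date
from typing import List, Optional


def morning_job_commands(repo_dir: str, suite_dir: str, env_host: str,
                         run_date: date,
                         include_tags: Optional[List[str]] = None) -> List[List[str]]:
    """Commands a scheduled Jenkins job might run each morning (hypothetical paths)."""
    pull = ["git", "-C", repo_dir, "pull", "--ff-only"]  # fetch the latest test cases
    robot = ["robot",
             "--outputdir", f"results/{run_date.isoformat()}",  # one report dir per day
             "--variable", f"ENV_HOST:{env_host}"]  # environment the suite should target
    for tag in include_tags or []:
        robot += ["--include", tag]  # run only tests carrying these tags
    robot.append(f"{repo_dir}/{suite_dir}")
    return [pull, robot]


for cmd in morning_job_commands("integration-tests", "order", "order-test.internal",
                                date(2016, 6, 1), ["smoke"]):
    print(shlex.join(cmd))
```

Jenkins would run the commands in order; `robot` exits with a non-zero status when any test fails, which marks the build as failed and points engineers to the HTML report in the output directory.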

The automated integration and regression tests provided important support for the subsequent splitting and small-scale refactoring of the order system. They also reassured the R&D team, who felt free to pursue more innovations. The R&D team actively used this toolkit, and it helped them a lot.

The second thing I did was to build a performance testing capability.

This came about as follows:

I remember that when I first joined the order team in 2015, I had the honor of visiting XL, who had not yet joined Ele.me but later became its head of global architecture. He talked about how to optimize the order system and, importantly, also mentioned stress testing.

At that time, the system had some performance and capacity problems that were hard to predict. For example, before we finished sharding, the merchant client team released a new version of the order list feature, which used an existing coarse-grained, general-purpose API that allowed free combinations of query conditions. We could not predict when this query would hit an index with very poor performance. As we approached the noon business peak, the query API, serving several thousand QPS, suddenly crashed a secondary database (our monitoring and alerting system was not that sophisticated in 2015). Every secondary database we switched the traffic to crashed in turn. We had to throttle traffic for all APIs by 50% to withstand the load, and this lasted for nearly half an hour. After tracing the problem back to a recent change, we recovered the system by asking the merchant client team to roll it back. Subsequent troubleshooting showed that the accident was caused by slow SQL statements running at a few hundred QPS.

In fact, the company-wide performance test came before the one I had planned. It was set up for the "517 Take-out Festival" on May 17, and the company had a group of professional test developers for it. This was the first festival created by Ele.me, and the company spent a long time preparing for and running the event.

During stress testing, we constantly had to resolve problems and repeat the test, and many colleagues basically worked around the clock. Looking back, the latest I ever left work was probably on May 6: it was 5:30 a.m. when I left the building, and when I arrived home, the street lamps had just gone off.

Although the full-pipeline stress test covered all our systems, it left out some of their components. Therefore, we needed the ability to stress test individual APIs or pieces of logic independently whenever we needed to.

The setup process was as follows:

In terms of technology, we selected Locust, which is written in Python, because it let us use our Python SOA framework and components directly. The earlier company-wide full-pipeline stress test was based on JMeter. However, JMeter could not easily integrate with the Java SOA framework, so it could not use the framework's software load balancing directly and instead required a frontend HAProxy to distribute traffic, which was inconvenient at the time. Another reason was that Locust's design lets performance test cases approximate real business scenarios more closely, whereas a test that only controls QPS sometimes produces distorted results.
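To see why a QPS figure on its own can mislead, compare two hypothetical runs with identical throughput. Locust reports response-time percentiles alongside request rates; the stdlib-only helper below (its names are illustrative) computes the same kind of summary using nearest-rank percentiles.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class RunSummary:
    qps: float
    p50_ms: float
    p99_ms: float
    error_rate: float


def percentile(sorted_ms: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty, ascending list."""
    rank = max(1, math.ceil(pct * len(sorted_ms) / 100))
    return sorted_ms[rank - 1]


def summarize(latencies_ms: List[float], errors: int, duration_s: float) -> RunSummary:
    """Summarize one run from successful-request latencies plus a failure count."""
    if not latencies_ms:
        raise ValueError("no successful requests to summarize")
    values = sorted(latencies_ms)
    total = len(values) + errors
    return RunSummary(
        qps=total / duration_s,
        p50_ms=percentile(values, 50),
        p99_ms=percentile(values, 99),
        error_rate=errors / total,
    )


healthy = summarize([20.0] * 100, errors=0, duration_s=1.0)
degraded = summarize([20.0] * 98 + [2000.0] * 2, errors=0, duration_s=1.0)
print(healthy.qps, healthy.p99_ms)    # 100.0 20.0
print(degraded.qps, degraded.p99_ms)  # 100.0 2000.0
```

Both runs report 100 QPS with no errors, but the tail latency differs by two orders of magnitude, which is exactly the kind of difference a throughput-only view hides.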

While the full-pipeline performance test team was pioneering its tests, I finished developing our own performance test capability, which took a little over a month. By August or September, we were able to run performance tests for internal services, although performance testers and R&D engineers still needed some time to learn the test procedure. Soon, our team's performance testing was adopted across the whole department, including the finance team that later merged with us.

These tests enabled us to predict the upper limits of our services' load and performance when exposing APIs. This kept some APIs with potential performance defects from going online, especially merchant client APIs with complex query conditions. The tests could also simulate high-concurrency scenarios, so during the refactoring phase we were able to find problems with concurrent locks and call dependencies in advance.
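One simple way to turn step-load results into an upper limit is to take the highest load step reached before the first step that breaks a latency or error budget. The sketch below does that; the step data, the 200 ms p99 target, and the 0.5% error budget are made-up values for illustration, not figures from these tests.

```python
from typing import List, NamedTuple, Optional


class LoadStep(NamedTuple):
    target_qps: int
    p99_ms: float
    error_rate: float


def capacity_limit(steps: List[LoadStep], p99_slo_ms: float,
                   max_error_rate: float) -> Optional[int]:
    """Highest load level reached before the first step that breaks a budget.

    Returns None if even the lightest step fails.
    """
    limit = None
    for step in sorted(steps, key=lambda s: s.target_qps):
        if step.p99_ms > p99_slo_ms or step.error_rate > max_error_rate:
            break  # past the knee: ignore higher steps even if they look fine
        limit = step.target_qps
    return limit


# Made-up step-load results for illustration.
steps = [
    LoadStep(500, 40.0, 0.0),
    LoadStep(1000, 55.0, 0.0),
    LoadStep(1500, 90.0, 0.001),
    LoadStep(2000, 400.0, 0.02),
    LoadStep(2500, 120.0, 0.0),
]
print(capacity_limit(steps, p99_slo_ms=200.0, max_error_rate=0.005))  # 1500
```

Stopping at the first breach, rather than taking the highest passing step overall, avoids trusting a noisy step (2,500 QPS here) that happened to look healthy after the service had already degraded.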

The third thing I did was to organize random fault drills.

At first, the random fault drills were actually very simple. The general idea was as follows:

This model was very simple. After several rounds of governance, we had significantly reduced the external dependencies of the order system, so the model was completed within two or three days. However, this version was more like a toy and had little practical value, and its Mock Server was limited because it could not handle high concurrency.
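A minimal sketch of the kind of fault-injecting mock described here, assuming dependencies are called through a Python wrapper: each call randomly fails or is delayed according to configured rates. All names and rates are hypothetical. A seeded `random.Random` and an injectable `sleep` make a drill repeatable and easy to test.

```python
import random
import time
from typing import Any, Callable, Optional


class DependencyFault(Exception):
    """Raised instead of a real response when a fault is injected."""


class FaultyDependency:
    """Wrap a dependency call and randomly inject failures or extra latency."""

    def __init__(self, call: Callable[..., Any], error_rate: float = 0.0,
                 delay_rate: float = 0.0, delay_s: float = 0.0,
                 rng: Optional[random.Random] = None,
                 sleep: Callable[[float], None] = time.sleep):
        if error_rate < 0 or delay_rate < 0 or error_rate + delay_rate > 1:
            raise ValueError("rates must be non-negative and sum to at most 1")
        self._call = call
        self.error_rate = error_rate
        self.delay_rate = delay_rate
        self.delay_s = delay_s
        self._rng = rng or random.Random()
        self._sleep = sleep

    def __call__(self, *args: Any, **kwargs: Any) -> Any:
        roll = self._rng.random()  # one draw decides which fault, if any, applies
        if roll < self.error_rate:
            raise DependencyFault("injected dependency failure")
        if roll < self.error_rate + self.delay_rate:
            self._sleep(self.delay_s)  # slow dependency, then a normal response
        return self._call(*args, **kwargs)


# Example: 20% failures, 30% of calls delayed by 1.5 s (sleep is stubbed out).
delays = []
dep = FaultyDependency(lambda order_id: {"order_id": order_id, "ok": True},
                       error_rate=0.2, delay_rate=0.3, delay_s=1.5,
                       rng=random.Random(7), sleep=delays.append)
```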

JN gathered some colleagues to build our own tool, modeled on Netflix's Chaos Monkey. We named it "Kennel."

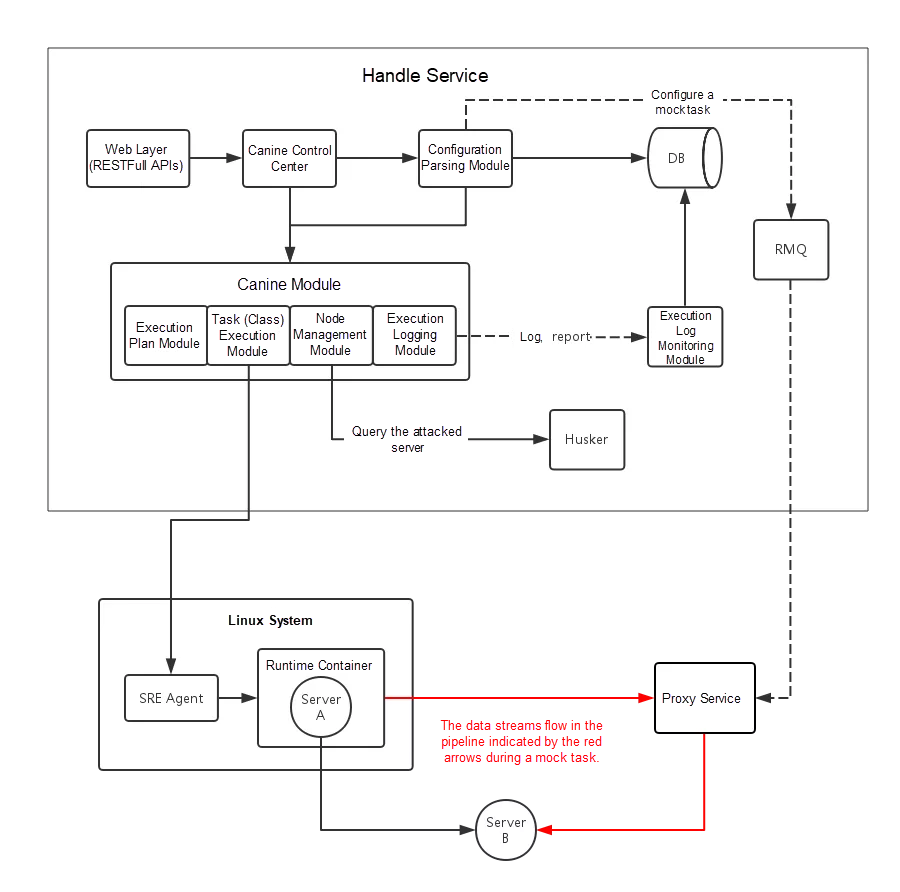

The following figure shows the design of the control center.

With the help of the special project team and the O&M team, we first made Kennel available around October 2016. The tool could simulate network packet loss, inject exceptions into interfaces, remove nodes from a cluster, and forcibly terminate service processes.
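Kennel's internals are not described here, but two of these faults map onto standard Linux tools: packet loss can be emulated with `tc` and the `netem` queueing discipline, and forcible termination corresponds to `kill -9` (SIGKILL). The helper names below are hypothetical, and they only build command lines; actually running them on a host requires root privileges. Exception injection and node removal would have to hook into the RPC framework and the service registry, which this sketch does not attempt.

```python
from typing import List, Tuple


def packet_loss_commands(interface: str, loss_pct: float) -> Tuple[List[str], List[str]]:
    """Return (apply, revert) commands for random packet loss via tc/netem."""
    if not 0 < loss_pct <= 100:
        raise ValueError("loss_pct must be in (0, 100]")
    apply = ["tc", "qdisc", "add", "dev", interface, "root", "netem",
             "loss", f"{loss_pct:g}%"]
    revert = ["tc", "qdisc", "del", "dev", interface, "root"]  # remove the netem qdisc
    return apply, revert


def force_kill_command(pid: int) -> List[str]:
    """SIGKILL cannot be caught, so the process gets no chance to deregister."""
    return ["kill", "-9", str(pid)]


apply, revert = packet_loss_commands("eth0", 10)
print(" ".join(apply))   # tc qdisc add dev eth0 root netem loss 10%
print(" ".join(revert))  # tc qdisc del dev eth0 root
print(" ".join(force_kill_command(4242)))  # kill -9 4242
```

Pairing every fault with its revert command keeps a drill reversible, which matters when faults are injected into shared environments.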

We had never used such a tool before and did not know what the results would look like. I planned the first attempt for November and designed five scenarios, each with acceptance criteria:

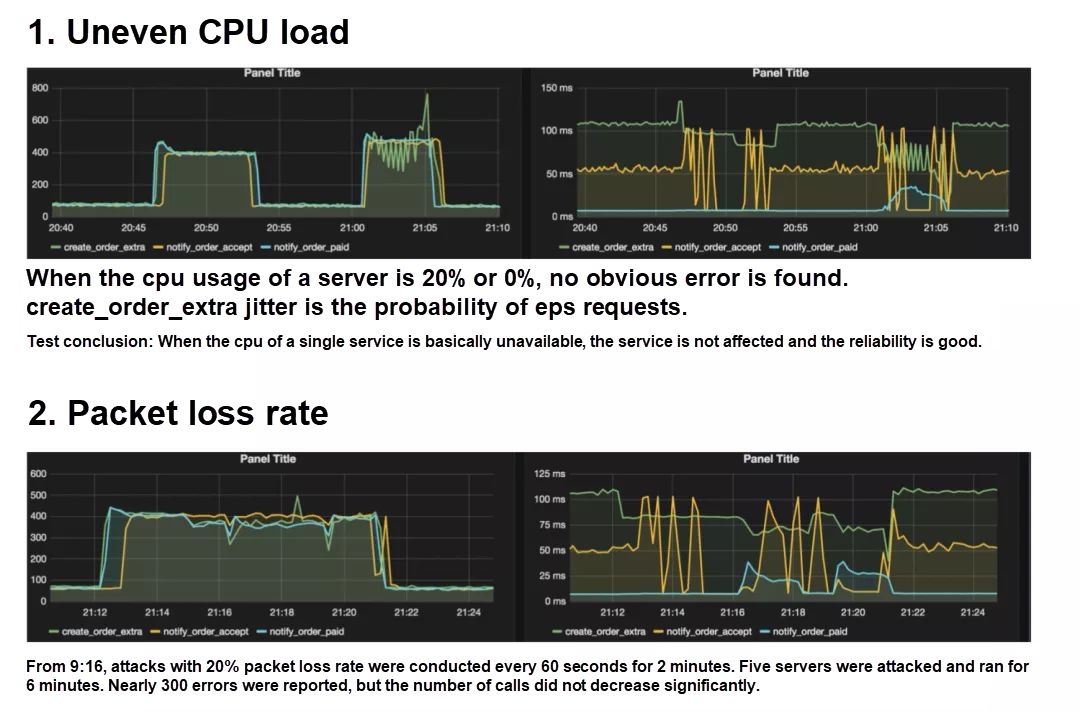

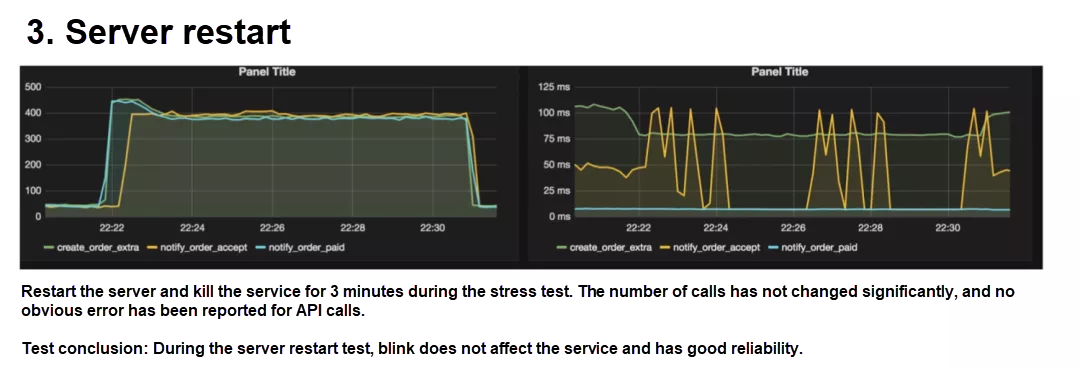

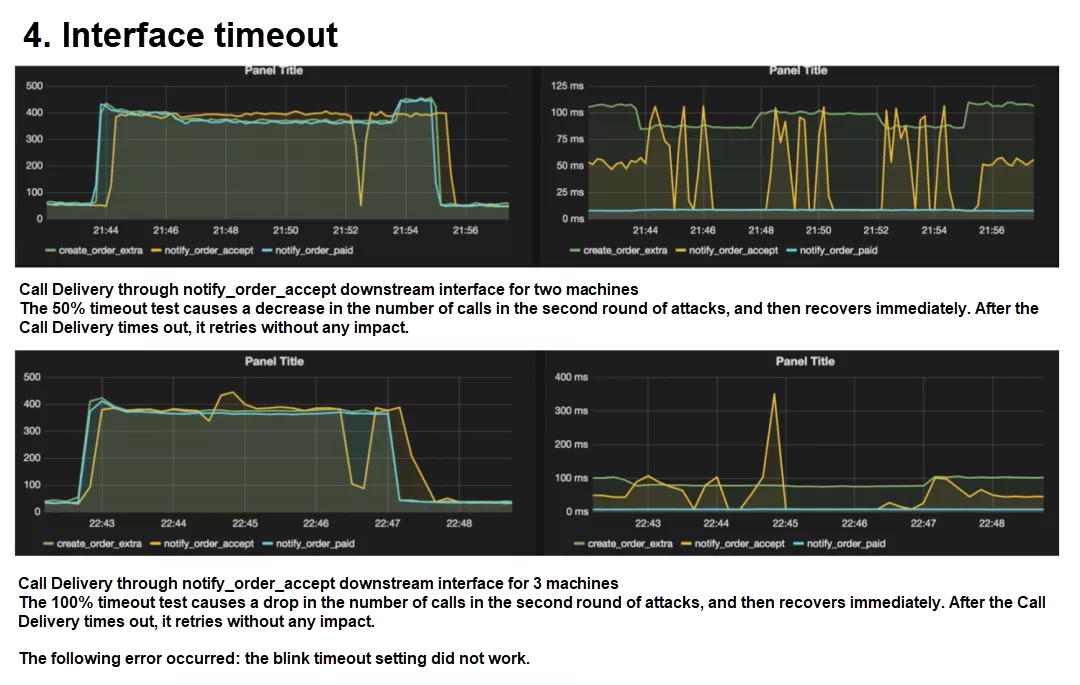

We appointed a test developer to lead the testing based on these scenarios. The test reports for different services differed slightly; the figures above include screenshots from one of them.

After testing several major transaction services, we did find some potential risks.

In some cases, the number of machines in a cluster differed from the number in the service registry: after a service node was forcibly terminated, the registry could not automatically detect and remove it. This could lead to a high-risk situation.
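A common remedy for stale registry entries is TTL-based eviction: a node counts as live only while it keeps sending heartbeats, so a SIGKILLed process, which never gets to deregister, drops out once its TTL expires. The class below is a hypothetical sketch of that idea with an injectable clock, not the registry used at Ele.me.

```python
import time
from typing import Callable, Dict, List


class HeartbeatRegistry:
    """Minimal service registry that evicts nodes whose heartbeats stop."""

    def __init__(self, ttl_s: float, clock: Callable[[], float] = time.monotonic):
        self.ttl_s = ttl_s
        self._clock = clock
        self._last_seen: Dict[str, float] = {}

    def heartbeat(self, node: str) -> None:
        self._last_seen[node] = self._clock()

    def deregister(self, node: str) -> None:
        # Graceful-shutdown path; a process killed with SIGKILL never reaches it.
        self._last_seen.pop(node, None)

    def live_nodes(self) -> List[str]:
        """Drop nodes silent for longer than the TTL, then list the rest."""
        now = self._clock()
        expired = [n for n, t in self._last_seen.items() if now - t > self.ttl_s]
        for node in expired:
            del self._last_seen[node]
        return sorted(self._last_seen)
```

The TTL trades detection speed against false evictions: a shorter TTL removes dead nodes sooner but risks evicting healthy nodes whose heartbeats are merely delayed.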

Load was imbalanced within each cluster, and the CPU utilization of some machines could get too high. These problems were related to the load balancing policies.

During automatic recovery from forced termination, the CPU utilization of certain nodes was significantly higher than that of other nodes, but it gradually evened out over a few hours. This problem was also related to the load balancing policies.

When a single node's CPU load was high, the load balancing mechanism did not shift traffic away from it, even when its request performance was far worse than that of other nodes and requests frequently timed out. This problem was related to the load balancing and circuit breaking mechanisms: the Python SOA framework performed circuit breaking on the server side, not the client side.
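Client-side circuit breaking addresses this gap: each caller tracks failures per node and stops sending traffic to a node that keeps failing or timing out. The sketch below is a hypothetical minimal version (a consecutive-failure threshold and a fixed cool-down, after which traffic is let through again), not the framework's implementation.

```python
import time
from typing import Callable, Dict, List, Optional


class CircuitBreaker:
    """Per-node breaker kept on the client side."""

    def __init__(self, failure_threshold: int = 5, reset_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_s = reset_s
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None  # None means the breaker is closed

    def allow(self) -> bool:
        if self._opened_at is None:
            return True
        # After the cool-down, traffic is allowed again (half-open); the next
        # recorded success closes the breaker and the next failure re-opens it.
        return self._clock() - self._opened_at >= self.reset_s

    def record_success(self) -> None:
        self._failures = 0
        self._opened_at = None

    def record_failure(self) -> None:
        """Count a failure or timeout; (re)open the breaker at the threshold."""
        self._failures += 1
        if self._failures >= self.failure_threshold:
            self._opened_at = self._clock()


def pick_node(nodes: List[str], breakers: Dict[str, CircuitBreaker]) -> str:
    """Return the first node, in load-balancer order, whose breaker allows traffic."""
    for node in nodes:
        if breakers[node].allow():
            return node
    raise RuntimeError("no node available: all breakers are open")
```

Because the decision is made by the caller, a node that is too overloaded to protect itself is still taken out of rotation.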

The timeout settings for many services were incorrect. The framework supported both soft and hard timeouts: a soft timeout generates an alert but does not interrupt the call, whereas a hard timeout aborts it, and the default hard timeout was a long 20 seconds. Many services set only a soft timeout, or no timeout at all. This was a severe hidden risk: a minor mistake could crash the whole system.
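The difference between the two kinds of timeout can be sketched with `concurrent.futures`: a soft timeout only logs a warning after a slow call completes, while a hard timeout stops the caller from waiting and raises. The function name and limits are illustrative assumptions, not the framework's API. One Python-specific caveat: a thread cannot be killed, so after a hard timeout the worker keeps running in the background; only the caller is freed.

```python
import logging
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout

log = logging.getLogger("rpc")
_pool = ThreadPoolExecutor(max_workers=8)


def call_with_timeouts(fn, *args, soft_s: float, hard_s: float):
    """Soft timeout: log a warning. Hard timeout: stop waiting and raise TimeoutError."""
    if not 0 < soft_s <= hard_s:
        raise ValueError("require 0 < soft_s <= hard_s")
    start = time.monotonic()
    future = _pool.submit(fn, *args)
    try:
        result = future.result(timeout=hard_s)  # blocks at most hard_s seconds
    except FutureTimeout:
        log.error("hard timeout after %.2fs calling %s",
                  hard_s, getattr(fn, "__name__", repr(fn)))
        raise TimeoutError(f"hard timeout ({hard_s}s)") from None
    elapsed = time.monotonic() - start
    if elapsed > soft_s:
        log.warning("soft timeout: call took %.2fs (limit %.2fs)", elapsed, soft_s)
    return result
```

With only a soft limit, a hung dependency holds the caller's worker indefinitely, which is how slow calls pile up until the service runs out of workers.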

In some scenarios, the timeout settings had no effect. After tracing the problem and reproducing it with the framework team, we found that in these scenarios, messages were sent through message queues. The Python framework used Gevent to support high concurrency, but it did not apply the timeout mechanism correctly in this path.
