
By Wanqing

In September or October 2015, we began to implement database and table sharding. The sharding plan had almost been finalized when I joined the project. The implementation was led by the DAL team from the CI department.

First, the original system could not support high concurrency. At that time, our order system ran on one primary MySQL database, five secondary MySQL databases, and one additional MySQL database used for master high availability (MHA). The databases could not handle highly concurrent requests and had little margin for risk. If the business department ran a promotion without telling us in advance and a secondary database went down, all we could do was switch between the remaining databases and throttle a large amount of traffic to keep the system from crashing. We also prayed every day that the Meituan Takeout order system would not crash during peak hours: if it did, its traffic would flow to Ele.me and we would start to get nervous. Likewise, if our order system crashed, Meituan Takeout would have a hard time absorbing the extra traffic. The two companies were at a similar stage of development.

Second, DDL operations were too costly while our business was fighting its decisive battle. At that time, Ele.me averaged millions of orders per day. To meet certain business requirements, we needed to add fields to the order system. However, when we asked the database administrator (DBA) to assess the risks, the DBA said that the operation would take the order system out of service for three to five hours and would require approval from the CEO. Neither our technical team nor the business team could afford that risk. As a workaround, we stored the required fields in the reserved JSON extension field. This solved the problem for a while, but it also introduced many potential risks.
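The JSON extension workaround trades a blocking `ALTER TABLE` for schema-less storage inside an existing column. A minimal sketch, assuming a hypothetical `ext` column that holds a JSON object per order row (the real column name and access layer are not given in the text):

```python
import json


def set_ext_field(order_row: dict, key: str, value) -> None:
    """Store a new attribute in the hypothetical `ext` JSON column instead of adding a real column."""
    ext = json.loads(order_row.get("ext") or "{}")
    ext[key] = value
    order_row["ext"] = json.dumps(ext, sort_keys=True)


def get_ext_field(order_row: dict, key: str, default=None):
    """Every reader must parse the JSON blob to get at a single attribute."""
    return json.loads(order_row.get("ext") or "{}").get(key, default)


row = {"id": 1, "ext": None}
set_ext_field(row, "delivery_tag", "express")
print(row["ext"])
```

The sketch also shows where the risks come from: the database cannot type-check, index, or constrain attributes hidden inside the blob, and every reader and writer has to agree on the keys by convention.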

In addition, some special business scenarios and coarse-grained open APIs generated extremely inefficient SQL statements. Any of these risks could bring the system down.

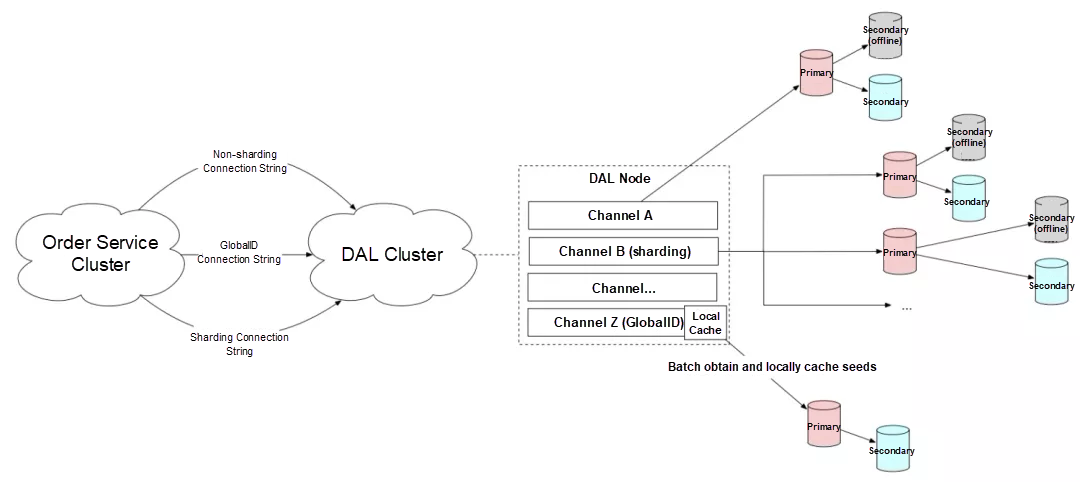

The following figure shows the physical structure after sharding.

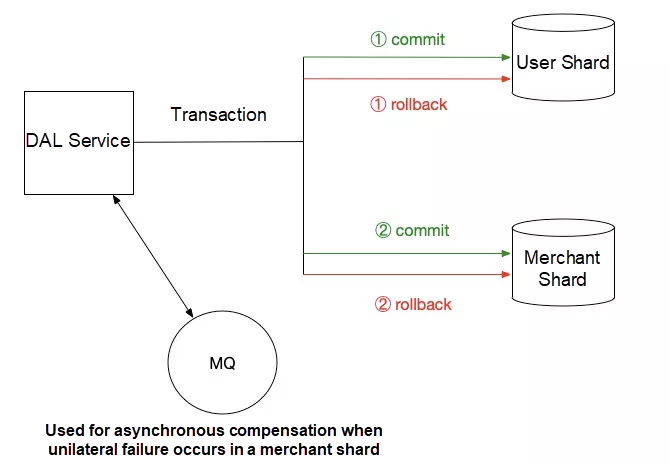

The following figure shows the logic of an update operation.

We actually implemented two-dimensional sharding, with 120 shards in each dimension. Requests could be routed by user ID, merchant ID, or order ID, and write requests were routed by user ID first. Due to resource limitations, user shards and merchant shards were co-located (deployed in hybrid mode).
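The text does not say how IDs map to shards. A minimal sketch, assuming a plain modulo over the 120 shards per dimension and a hypothetical order-ID format whose last three digits carry the user shard, so that an order ID alone can be routed to the user dimension:

```python
from typing import Tuple

SHARD_COUNT = 120   # shards per dimension, as described above
SUFFIX_BASE = 1000  # hypothetical: the last 3 digits of an order ID hold the user shard


def user_shard(user_id: int) -> int:
    return user_id % SHARD_COUNT  # assumed modulo scheme


def merchant_shard(merchant_id: int) -> int:
    return merchant_id % SHARD_COUNT


def make_order_id(sequence: int, user_id: int) -> int:
    """Embed the user shard in the order ID (hypothetical ID format)."""
    return sequence * SUFFIX_BASE + user_shard(user_id)


def order_shard(order_id: int) -> int:
    """Recover the user shard from an order ID without any lookup."""
    return order_id % SUFFIX_BASE


def route_write(user_id: int) -> Tuple[str, int]:
    # Writes go to the user dimension first; keeping the merchant dimension
    # in sync afterwards is assumed, not shown.
    return ("user", user_shard(user_id))


print(route_write(1234), order_shard(make_order_id(7, 1234)))
```

Encoding the shard in the ID is one common way to make order-ID lookups single-shard; the original design may have used a different mapping.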

I will not elaborate on the technical details of database and table sharding here. The sharding process mainly included the following stages:

During this period, as a business team, we spent most of our time on step 3 and worked until 3:00 or 4:00 a.m. several times.

Before the 2016 Spring Festival, to withstand the holiday business peak and keep the system stable, we even archived the data in the databases and retained only the orders generated within the last 15 days.

I remember the final day of the migration, probably in mid-March 2016. Several team members and I arrived at the office at about 5:00 a.m., just as dawn was breaking. The whole Ele.me service was taken offline and write requests were blocked. We applied the new database configurations and verified them. Then we re-enabled write requests, verified the business functions, gradually let frontend traffic back in, and resumed service. The critical steps took about 10 minutes, and service was fully restored half an hour after it was stopped.

On the next day, we were finally able to import historical orders generated within the last three months.

After this change, we got rid of most of the bottlenecks and pain points related to databases.

Around July 2015, influenced by some articles on architecture and by instructions from JN, we decided to implement message broadcasting in the order system to decouple it further.

After evaluating RabbitMQ, NSQ, RocketMQ, Kafka, and ActiveMQ, we ultimately chose RabbitMQ. Personally, I thought RocketMQ suited the system better at the time, especially because its support for ordered messages fit some transaction scenarios naturally. However, the O&M team had more experience maintaining RabbitMQ. The framework team and the O&M team were very confident in it, and it had not caused any problems since we built the order system. Choosing RabbitMQ meant we could count on support from the O&M team, and our business team could avoid many risks.

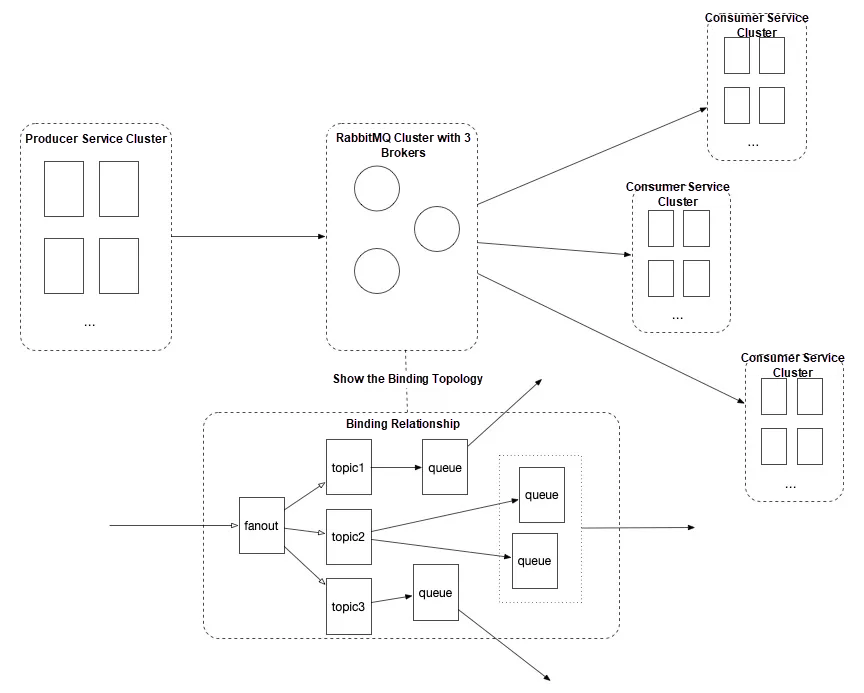

The framework team therefore ran rigorous performance tests on RabbitMQ and provided some performance metrics. Based on these tests, a three-broker cluster was built to serve the order system exclusively. Previously, the Zeus system had only one MQ node for asynchronous message tasks.

To ensure that the cluster would not affect the main transaction flow, we made a series of fault tolerance optimizations to the client side of the SOA framework. This work focused on the connection timeout for requests to the RabbitMQ cluster, handling disconnections from the cluster, asynchronous message sending, and the maximum number of retries. In the end, the new three-node RabbitMQ cluster received all order messages.
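The four fault tolerance points above (short timeouts, disconnection handling, asynchronous sending, bounded retries) can be sketched as a fire-and-forget publisher that never blocks the order transaction. This is a minimal sketch, not the SOA framework's code: `send(message, timeout)` is a hypothetical hook standing in for a real AMQP client call, and the timeout, retry, and buffer values are assumptions.

```python
import queue
import threading
import time
from typing import Callable


class SafePublisher:
    """Publisher that must never block or fail the caller's order transaction.

    `send(message, timeout)` is a hypothetical transport hook; it is expected
    to raise on timeouts or broken connections.
    """

    def __init__(self, send: Callable[[bytes, float], None], timeout: float = 0.5,
                 max_retries: int = 3, capacity: int = 10_000) -> None:
        self._send = send
        self._timeout = timeout          # short per-send timeout, not a 30 s default
        self._max_retries = max_retries  # attempts per message before giving up
        self._queue: "queue.Queue[bytes]" = queue.Queue(maxsize=capacity)
        self._lock = threading.Lock()
        self.stats = {"sent": 0, "dropped": 0}
        threading.Thread(target=self._run, daemon=True).start()

    def _count(self, name: str) -> None:
        with self._lock:  # counters are updated from two threads
            self.stats[name] += 1

    def publish(self, message: bytes) -> bool:
        """Enqueue without blocking; if the buffer is full, drop and count the message."""
        try:
            self._queue.put_nowait(message)
            return True
        except queue.Full:
            self._count("dropped")
            return False

    def _run(self) -> None:
        while True:
            message = self._queue.get()
            for attempt in range(self._max_retries):
                try:
                    self._send(message, self._timeout)
                    self._count("sent")
                    break
                except Exception:
                    if attempt + 1 < self._max_retries:
                        time.sleep(0.01 * 2 ** attempt)  # short exponential backoff
            else:
                self._count("dropped")  # give up; the order flow itself is unaffected
            self._queue.task_done()

    def flush(self) -> None:
        """Wait until every queued message has been sent or dropped."""
        self._queue.join()
```

The design choice is that a broadcast failure costs a message, never an order: the caller only pays for a non-blocking enqueue, and all network waits happen on the background thread.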

During this period, I made a small mistake. Although the framework team had already added fault tolerance for exceptional cases, the timing of broadcast messages was tightly coupled to status changes in the main order flow. Being cautious, I added a message sending switch before the initial release. The release went out at about 8:00 p.m. With what I know now, I would have spent more time on canary release and observation. Shortly after I released all the code, monitoring showed that many API calls had started to time out. (We used the framework's default timeout of 30 seconds, which was itself risky.) Clearly, something was slowing the API calls down. After the transaction curve dropped sharply, I immediately got a call from the NOC and turned off the message sending switch right away. The curve recovered instantly. I then asked the architecture team to help find the cause of the problem. In the end, the real cause was my own lack of skill.
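The message sending switch above acts as a kill switch, and adding a percentage gate to it is one way to ramp traffic gradually instead of releasing everything at once. A minimal sketch, assuming the values would come from a config center in production (here they are plain attributes) and that orders are bucketed by a hash of a hypothetical string order ID:

```python
import hashlib


class SendSwitch:
    """Kill switch for message broadcasting with an optional percentage gate."""

    def __init__(self, enabled: bool = False, percent: int = 0) -> None:
        self.enabled = enabled  # master switch: False stops all broadcasting at once
        self.percent = percent  # 0-100: share of orders allowed to broadcast

    def allows(self, order_id: str) -> bool:
        if not self.enabled:
            return False
        # Stable bucketing: the same order always lands in the same 0-99 bucket,
        # so raising the percentage only ever adds orders.
        digest = hashlib.sha256(order_id.encode()).digest()
        bucket = int.from_bytes(digest[:8], "big") % 100
        return bucket < self.percent


switch = SendSwitch(enabled=True, percent=5)
if switch.allows("order-42"):
    pass  # publish the broadcast message here
```

Hashing rather than random sampling keeps an individual order's messages consistently on or off while the ramp is in progress.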

That evening, we turned the switch on and off repeatedly and gradually increased the traffic from 5% to 10%, 30%, and beyond. After many trials and checks, we traced the problem to the HAProxy configuration. HAProxy would close its connection to the RabbitMQ cluster, while the service client kept sending requests over the old connection, and the client had no fault tolerance for this kind of timeout. The problem disappeared after we adjusted the HAProxy connection timeout setting, although the logs showed that some potential risks remained.
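The missing client-side fault tolerance can be sketched as a connection wrapper that refuses to reuse a connection the proxy has probably already closed. This is a minimal sketch under stated assumptions: `connect()` is a hypothetical factory returning an object with `send(bytes)` and `close()`, and `proxy_idle_timeout` is meant to mirror the HAProxy idle timeout, whose real value the text does not give.

```python
import time
from typing import Any, Callable


class IdleAwareConnection:
    """Reconnects before reuse if the connection idled longer than the proxy allows."""

    def __init__(self, connect: Callable[[], Any], proxy_idle_timeout: float,
                 safety_margin: float = 0.8,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._connect = connect
        self._max_idle = proxy_idle_timeout * safety_margin  # reconnect before the proxy would cut us off
        self._clock = clock
        self._conn: Any = None
        self._last_used = 0.0
        self.reconnects = 0

    def _fresh(self) -> None:
        if self._conn is not None:
            try:
                self._conn.close()
            except Exception:
                pass  # the proxy may already have dropped it
        self._conn = self._connect()
        self.reconnects += 1

    def send(self, payload: bytes) -> None:
        if self._conn is None or self._clock() - self._last_used > self._max_idle:
            self._fresh()
        try:
            self._conn.send(payload)
        except (ConnectionError, TimeoutError):
            self._fresh()  # stale despite the check: retry once on a new connection
            self._conn.send(payload)
        self._last_used = self._clock()
```

An alternative with the same effect is protocol-level heartbeats that keep the connection from ever looking idle to the proxy; which one fits depends on the client library in use.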

In this design, each connected service provider had to apply for a topic and could decide how many queues the topic needed based on its business requirements.
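The topic-and-queues model can be illustrated in memory. This is a hypothetical sketch, not a RabbitMQ API and not the original rules: it assumes every subscribing service receives its own copy of each order event (broadcast), and that within a service the order ID picks one of its queues, so a single order's events stay in sequence while several queues share the load.

```python
import hashlib
from collections import deque
from typing import Deque, Dict, List, Tuple

Event = Tuple[str, str]  # (order_id, event name)


class BroadcastTopic:
    """In-memory model: one topic, many subscribing services, each with N queues."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.subscribers: Dict[str, List[Deque[Event]]] = {}

    def subscribe(self, service: str, queue_count: int) -> None:
        if queue_count < 1:
            raise ValueError("queue_count must be at least 1")
        self.subscribers[service] = [deque() for _ in range(queue_count)]

    def publish(self, order_id: str, event: str) -> None:
        digest = hashlib.sha256(order_id.encode()).digest()
        key = int.from_bytes(digest[:8], "big")
        for queues in self.subscribers.values():  # every subscriber gets a copy
            # The same order always maps to the same queue, preserving its event order.
            queues[key % len(queues)].append((order_id, event))
```

More queues give a subscriber more parallel consumers; pinning an order to one queue is what keeps that parallelism from reordering its status changes.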

This physical architecture ran stably for less than a year before running into many problems, which are explained in the next section.

At the time, we set the following usage rules:

This logical architecture for message broadcast is still in use and has produced huge benefits in terms of decoupling.

From mid-2015 to early 2016, we were processing more than a million orders per day, and the number was growing rapidly.

During that period, we also read about many architectures, including ESB, SOA, microservices, CQRS, and Event Sourcing. We actively discussed how to rebuild the order system to support higher concurrency. At that time, the Order Fulfillment Center (OFC) of JD.com, Inc. (JD) was widely discussed. We even bought and read a book titled Secrets of Jingdong Technologies (京东技术解密), but quickly concluded that it did not fit our order system. JD's OFC was clearly shaped by the characteristics of the retail business. Many of its concepts were hard for beginners like us to grasp, and we could not see how to apply them to O2O. Still, the OFC influenced us deeply, and we gave our group a similar abbreviation: OSC, which stands for Order Service Center.

Since the order system had been in service for more than three years and most engineers working on the main language stacks had moved from Python to Java, we planned to rewrite the order system. I designed an architecture that used osc as the domain prefix for applications. Its core idea was to store snapshots of transactions, simplify the system, reduce dependencies on other parties, and reduce the system's role as a data tunnel.
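Storing transaction snapshots means copying what an order needs at creation time instead of querying other services later. A minimal sketch, assuming hypothetical data shapes for the restaurant, menu, and cart (the text does not describe the real snapshot schema):

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class ItemSnapshot:
    name: str
    unit_price_cents: int
    quantity: int


@dataclass(frozen=True)
class OrderSnapshot:
    order_id: int
    restaurant_name: str  # copied at checkout instead of looked up later
    items: Tuple[ItemSnapshot, ...]

    @property
    def total_cents(self) -> int:
        return sum(item.unit_price_cents * item.quantity for item in self.items)


def take_snapshot(order_id: int, restaurant: Dict[str, str],
                  menu: Dict[int, Dict], cart: Dict[int, int]) -> OrderSnapshot:
    """Freeze the menu data the order depends on (hypothetical input shapes)."""
    items = tuple(
        ItemSnapshot(menu[item_id]["name"], menu[item_id]["price_cents"], qty)
        for item_id, qty in sorted(cart.items())
    )
    return OrderSnapshot(order_id, restaurant["name"], items)
```

Because the snapshot is immutable and self-contained, a later menu price change does not alter an existing order, and reading an order no longer requires a call to the menu or merchant services.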

We chose Java as the language stack, which meant we planned to migrate to Java. (Unfortunately, the migration to Java was not completed until 2019.)

It was September. Coincidentally, the company had begun setting up an architecture review process for new services. My plan was probably the first or second to be reviewed, and it was quickly rejected.

In fact, looking back a year later, I was grateful for this architecture review, precisely because my plan failed it.

The review itself was quite interesting. As I vaguely recall, the reviewers were a representative of the database architect (DA) team, a representative of the basic OPS team, and an architect who had just joined the company.

The architect's questions focused on whether the architecture would last one year or three years, while the questions from the basic OPS representative were especially interesting. His first question was whether this system was on a critical path. I thought, isn't that obvious? I answered right away, "Yes. It is right in the middle of the critical path."

Then he asked whether this application could be downgraded if an exception occurred. I thought, isn't that question pointless too? Of course this pipeline could not be downgraded. It was the core and most fundamental part of the system, and transactions were central to the company's business. Apparently we were not thinking along the same lines.

He concluded that the system was on a critical path and handled orders, the company's core business, yet could not be downgraded, so if it failed, the entire company would be affected. With that, the review ended and my plan was rejected.
