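This topic describes how to submit an AutoML experiment on DLC computing resources to tune hyperparameters. The solution uses the PyTorch framework. It uses the `torchvision.datasets.MNIST` module to automatically download and load the MNIST handwritten digit dataset, trains a model on it, and searches for the best hyperparameter configuration. Three training modes are available to meet different training needs: single-machine training, distributed training, and nested-parameter training.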

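Prerequisites

Before you use AutoML for the first time, grant the AutoML-related permissions. For more information, see Cloud service dependencies and authorization: AutoML.

The DLC-related permissions have been granted. For more information, see Cloud service dependencies and authorization: DLC.

A workspace has been created and associated with the public resource group for general computing resources. For more information, see Create and manage a workspace.

OSS has been activated and an OSS bucket has been created. For more information, see Get started with the OSS console.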

Step 1: Create a dataset

Step 2: Create an experiment

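Go to the Create Experiment page and configure the key parameters as described in the following steps. For information about the other parameters, see Create an experiment. After you configure the parameters, click Submit.

Configure the execution settings.

This solution provides three training modes: single-machine training, distributed training, and nested-parameter training. Choose one of them.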

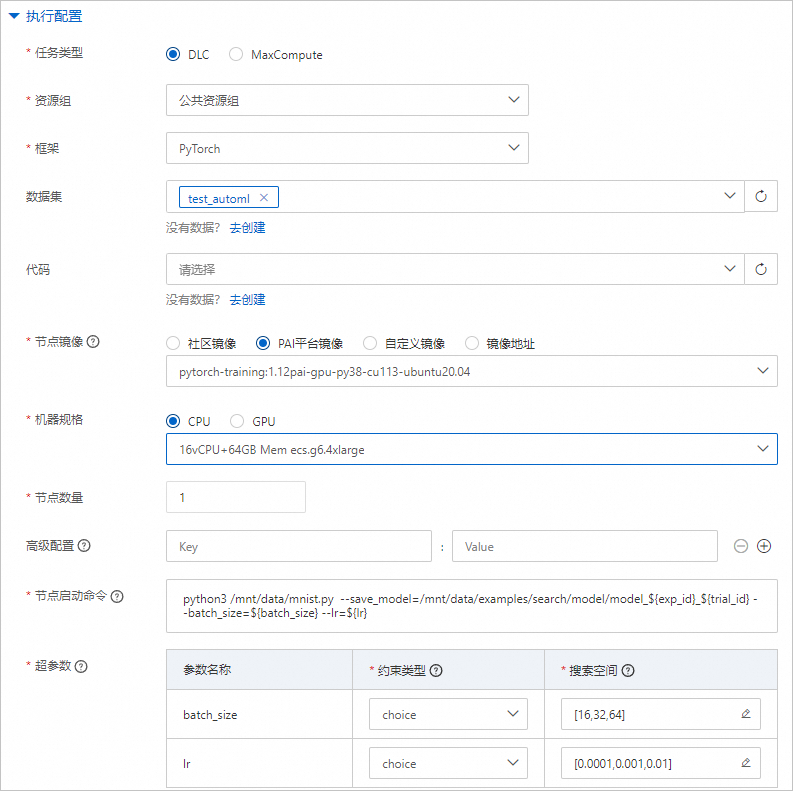

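Single-machine training parameters

| Parameter | Description |
| --- | --- |
| Task type | Select DLC. |
| Resource group | Select the public resource group. |
| Framework | Select PyTorch. |
| Dataset | Select the dataset created in Step 1. |
| Node image | Select PAI platform image > pytorch-training:1.12PAI-gpu-py38-cu113-ubuntu20.04. |
| Instance type | Select CPU > ecs.g6.4xlarge. |
| Number of nodes | Set to 1. |
| Startup command | `python3 /mnt/data/mnist.py --save_model=/mnt/data/examples/search/model/model_${exp_id}_${trial_id} --batch_size=${batch_size} --lr=${lr}` |
| Hyperparameter: batch_size | Constraint type: select choice. Search space: add three enumerated values: 16, 32, and 64. |
| Hyperparameter: lr | Constraint type: select choice. Search space: add three enumerated values: 0.0001, 0.001, and 0.01. |

This configuration yields 9 hyperparameter combinations (3 batch_size values × 3 lr values). The experiment creates Trials from these combinations, and each Trial runs the script with one combination.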
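The single-machine grid and the placeholder substitution can be sketched in Python. This is a minimal illustration, not the AutoML implementation: it assumes the console fills `${exp_id}`, `${trial_id}`, `${batch_size}`, and `${lr}` the same way Python's `string.Template` does, and the IDs `exp-demo` and `trial-1` are hypothetical. With TPE and a small maximum number of searches, the experiment samples only some of these combinations rather than running all of them.

```python
import itertools
from string import Template

# Search space from the single-machine table (both hyperparameters use "choice").
SEARCH_SPACE = {
    "batch_size": [16, 32, 64],
    "lr": [0.0001, 0.001, 0.01],
}

# Startup command from the table; ${...} are the experiment placeholders.
COMMAND = Template(
    "python3 /mnt/data/mnist.py "
    "--save_model=/mnt/data/examples/search/model/model_${exp_id}_${trial_id} "
    "--batch_size=${batch_size} --lr=${lr}"
)


def enumerate_grid(space):
    """Yield every combination of the choice values as a dict."""
    names = list(space)
    for values in itertools.product(*(space[n] for n in names)):
        yield dict(zip(names, values))


def render_command(exp_id, trial_id, params):
    """Fill all placeholders; substitute() raises KeyError if one is missing."""
    return COMMAND.substitute(exp_id=exp_id, trial_id=trial_id, **params)


combos = list(enumerate_grid(SEARCH_SPACE))
print(len(combos))  # 9
# Hypothetical IDs, for illustration only.
print(render_command("exp-demo", "trial-1", combos[0]))
```

Using `substitute` rather than `safe_substitute` here makes a typo in a placeholder name fail loudly instead of reaching the training script unexpanded.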

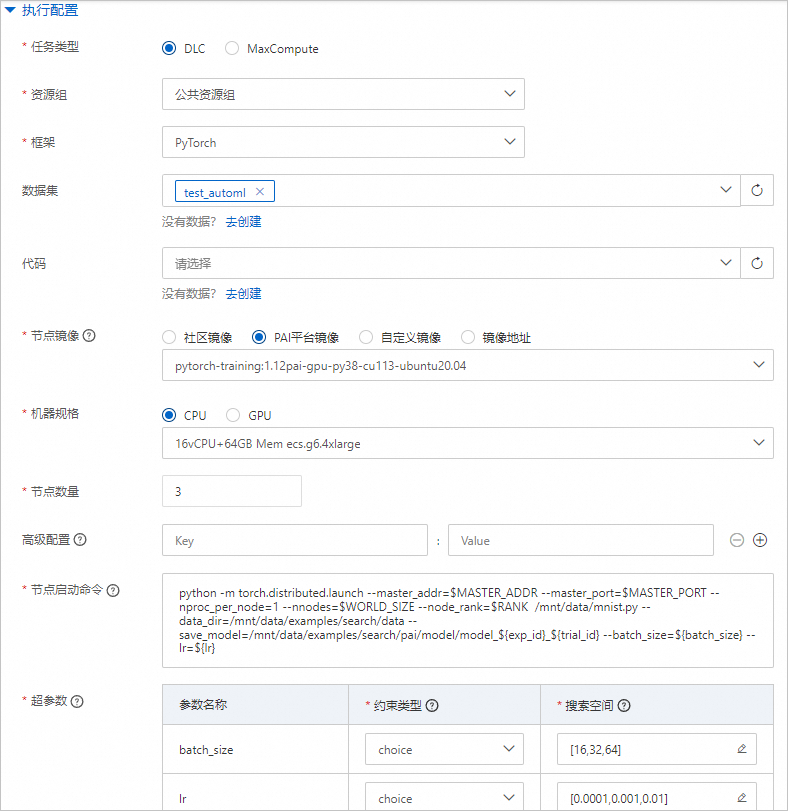

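Distributed training parameters

| Parameter | Description |
| --- | --- |
| Task type | Select DLC. |
| Resource group | Select the public resource group. |
| Framework | Select PyTorch. |
| Dataset | Select the dataset created in Step 1. |
| Node image | Select PAI platform image > pytorch-training:1.12PAI-gpu-py38-cu113-ubuntu20.04. |
| Instance type | Select CPU > ecs.g6.4xlarge. |
| Number of nodes | Set to 3. |
| Startup command | `python -m torch.distributed.launch --master_addr=$MASTER_ADDR --master_port=$MASTER_PORT --nproc_per_node=1 --nnodes=$WORLD_SIZE --node_rank=$RANK /mnt/data/mnist.py --data_dir=/mnt/data/examples/search/data --save_model=/mnt/data/examples/search/pai/model/model_${exp_id}_${trial_id} --batch_size=${batch_size} --lr=${lr}` |
| Hyperparameter: batch_size | Constraint type: select choice. Search space: add three enumerated values: 16, 32, and 64. |
| Hyperparameter: lr | Constraint type: select choice. Search space: add three enumerated values: 0.0001, 0.001, and 0.01. |

This configuration yields 9 hyperparameter combinations (3 batch_size values × 3 lr values). The experiment creates Trials from these combinations, and each Trial runs the script with one combination.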
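In the distributed command, the `${...}` placeholders are experiment variables, while `$MASTER_ADDR`, `$MASTER_PORT`, `$WORLD_SIZE`, and `$RANK` are environment variables expanded on each node. The sketch below assumes the console fills only the experiment placeholders and leaves the rest to the shell; Python's `Template.safe_substitute` models that behavior because it keeps unknown placeholders unchanged. Because `$WORLD_SIZE` is passed to `--nnodes` together with `--nproc_per_node=1`, 3 nodes start 3 training processes. The IDs and helper names are hypothetical.

```python
from string import Template

# Distributed startup command from the table.
COMMAND = Template(
    "python -m torch.distributed.launch --master_addr=$MASTER_ADDR "
    "--master_port=$MASTER_PORT --nproc_per_node=1 --nnodes=$WORLD_SIZE "
    "--node_rank=$RANK /mnt/data/mnist.py --data_dir=/mnt/data/examples/search/data "
    "--save_model=/mnt/data/examples/search/pai/model/model_${exp_id}_${trial_id} "
    "--batch_size=${batch_size} --lr=${lr}"
)


def render_trial_command(exp_id, trial_id, batch_size, lr):
    """Fill the experiment placeholders only.

    safe_substitute leaves $MASTER_ADDR, $MASTER_PORT, $WORLD_SIZE and $RANK
    untouched, so the shell on each node can expand them from its environment.
    """
    return COMMAND.safe_substitute(
        exp_id=exp_id, trial_id=trial_id, batch_size=batch_size, lr=lr
    )


def total_processes(num_nodes, nproc_per_node=1):
    """torch.distributed.launch starts nproc_per_node processes on each node."""
    return num_nodes * nproc_per_node


cmd = render_trial_command("exp-demo", "trial-2", 32, 0.001)
print(cmd)
print(total_processes(3))  # 3
```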

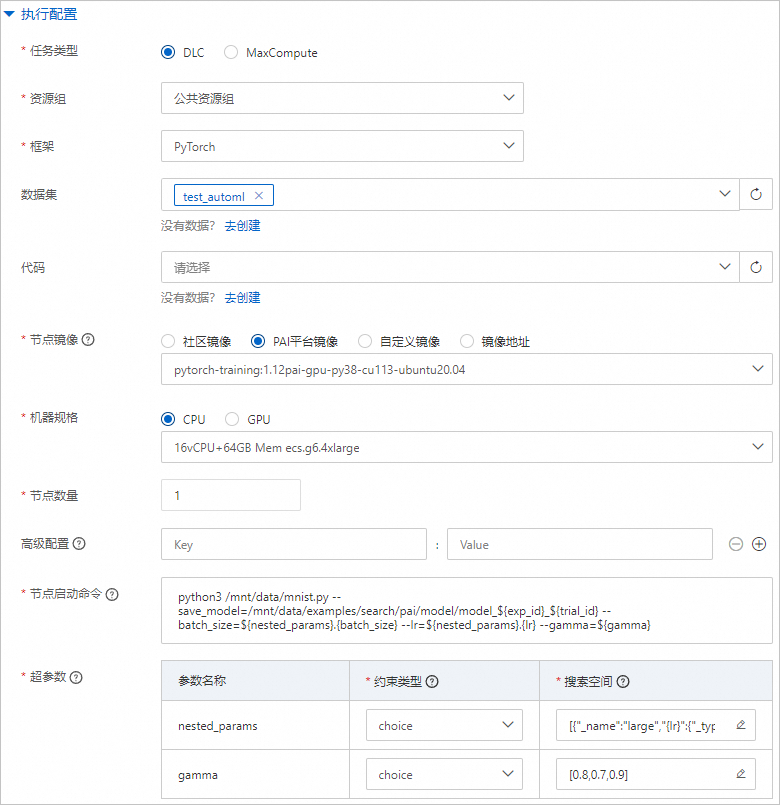

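Nested-parameter training parameters

| Parameter | Description |
| --- | --- |
| Task type | Select DLC. |
| Resource group | Select the public resource group. |
| Framework | Select PyTorch. |
| Dataset | Select the dataset created in Step 1. |
| Node image | Select PAI platform image > pytorch-training:1.12PAI-gpu-py38-cu113-ubuntu20.04. |
| Instance type | Select CPU > ecs.g6.4xlarge. |
| Number of nodes | Set to 1. |
| Startup command | `python3 /mnt/data/mnist.py --save_model=/mnt/data/examples/search/pai/model/model_${exp_id}_${trial_id} --batch_size=${nested_params}.{batch_size} --lr=${nested_params}.{lr} --gamma=${gamma}` |
| Hyperparameter: nested_params | Constraint type: select choice. Search space: add two enumerated values: `{"_name":"large","{lr}":{"_type":"choice","_value":[0.02,0.2]},"{batch_size}":{"_type":"choice","_value":[256,128]}}` and `{"_name":"small","{lr}":{"_type":"choice","_value":[0.01,0.1]},"{batch_size}":{"_type":"choice","_value":[64,32]}}`. |
| Hyperparameter: gamma | Constraint type: select choice. Search space: add three enumerated values: 0.8, 0.7, and 0.9. |

This configuration yields 24 hyperparameter combinations: each nested_params value expands to 2 × 2 = 4 (lr, batch_size) pairs, 8 in total, and each pair is combined with 3 gamma values. The experiment creates Trials from these combinations, and each Trial runs the script with one combination.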
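The nested search space can be expanded with a short Python sketch. Each nested_params value carries its own lr and batch_size choices, so the two branches never mix: 2 branches × 4 pairs × 3 gamma values = 24 combinations. How the console resolves `${nested_params}.{batch_size}` is an assumption here: the sketch replaces it with the value sampled for the `{batch_size}` key of the chosen branch. The helper names and the IDs `exp-demo` and `trial-3` are hypothetical.

```python
import itertools
import json
import re

# The two enumerated values of nested_params, copied from the table.
NESTED_PARAMS = [
    json.loads('{"_name":"large","{lr}":{"_type":"choice","_value":[0.02,0.2]},'
               '"{batch_size}":{"_type":"choice","_value":[256,128]}}'),
    json.loads('{"_name":"small","{lr}":{"_type":"choice","_value":[0.01,0.1]},'
               '"{batch_size}":{"_type":"choice","_value":[64,32]}}'),
]
GAMMA = [0.8, 0.7, 0.9]

COMMAND = ("python3 /mnt/data/mnist.py "
           "--save_model=/mnt/data/examples/search/pai/model/model_${exp_id}_${trial_id} "
           "--batch_size=${nested_params}.{batch_size} "
           "--lr=${nested_params}.{lr} --gamma=${gamma}")

# Matches ${name}.{key} (nested reference) or ${name} (plain reference).
PLACEHOLDER = re.compile(r"\$\{(\w+)\}(?:\.(\{\w+\}))?")


def expand_branch(branch):
    """Expand one nested_params value into concrete {key: value} choices."""
    keys = [k for k in branch if k != "_name"]
    for values in itertools.product(*(branch[k]["_value"] for k in keys)):
        yield {"_name": branch["_name"], **dict(zip(keys, values))}


def enumerate_space():
    """Yield every (nested_params, gamma) combination."""
    for branch in NESTED_PARAMS:
        for nested in expand_branch(branch):
            for gamma in GAMMA:
                yield {"nested_params": nested, "gamma": gamma}


def render(command, params):
    """Replace ${name} with params[name] and ${name}.{key} with params[name][key]."""
    def repl(match):
        value = params[match.group(1)]
        return str(value[match.group(2)] if match.group(2) else value)
    return PLACEHOLDER.sub(repl, command)


combos = list(enumerate_space())
print(len(combos))  # 24
example = dict(combos[0], exp_id="exp-demo", trial_id="trial-3")
print(render(COMMAND, example))
```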

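Configure the Trial settings.

| Parameter | Description |
| --- | --- |
| Optimization metric: metric type | Select stdout. The final metric is extracted from the stdout of the running job. |
| Optimization metric: calculation method | Select best. |
| Optimization metric: metric weight | key: `validation: accuracy=([0-9\\.]+)`; Value: 1. |
| Optimization metric: metric source | Set the command keyword to cmd1. |
| Optimization direction | Select Larger is better. |
| Model storage path | The OSS path where models are saved. In this solution, set it to `oss://examplebucket/examples/model/model_${exp_id}_${trial_id}`. |

Configure the search settings.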

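| Parameter | Description |
| --- | --- |
| Search algorithm | Select TPE. For details about the algorithms, see Supported search algorithms. |
| Maximum number of searches | Set to 3. The experiment runs at most 3 Trials in total. |
| Maximum concurrency | Set to 2. At most 2 Trials run at the same time. |
| Enable earlystop | Turn on the switch. If a Trial shows clearly poor results while evaluating its hyperparameter combination, its evaluation is terminated early. |
| start step | Set to 5. A Trial can be considered for early stopping only after it has completed at least 5 evaluations. |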
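The settings do not state which rule decides that a Trial is clearly poor. A common choice is the median stopping rule, sketched below as an assumption rather than the rule AutoML uses: once a Trial has reported at least `start_step` intermediate results, it is stopped if its best value so far is below the median of the running averages that completed Trials reported over the same number of steps. Larger values count as better, matching the optimization direction. The function name and arguments are hypothetical.

```python
from statistics import median


def should_stop(trial_history, completed_histories, start_step=5):
    """Median stopping rule (illustrative sketch).

    trial_history: intermediate metric values reported so far by the running Trial.
    completed_histories: intermediate values of Trials that have already finished.
    Larger values are better.
    """
    step = len(trial_history)
    if step < start_step:
        return False  # not enough evaluations yet to decide

    # Running average of each completed Trial over its first `step` results.
    averages = [sum(h[:step]) / len(h[:step]) for h in completed_histories if h]
    if not averages:
        return False  # nothing to compare against yet

    # Stop only if even the Trial's best value is worse than the median average.
    return max(trial_history) < median(averages)
```

For example, with `start_step=5`, a Trial that has reported only 3 evaluations is never stopped, however poor its values are.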

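Step 3: View experiment details and results

In the experiment list, click the name of the target experiment to open the experiment details page.

On this page, you can view the progress and status statistics of the Trials. Based on the configured search algorithm and maximum number of searches, the experiment automatically creates 3 Trials.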
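The interaction between the maximum number of searches (3) and the maximum concurrency (2) can be modeled with a thread pool. This sketch is illustrative only: `run_trial` is a hypothetical placeholder that just sleeps, and a real scheduler would ask the search algorithm (TPE) for the next hyperparameter combination each time a Trial slot frees up.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

MAX_TRIALS = 3       # maximum number of searches
MAX_CONCURRENCY = 2  # maximum concurrency

_lock = threading.Lock()
_running = 0
peak_running = 0  # highest number of Trials observed running at once


def run_trial(trial_index):
    """Hypothetical stand-in for one Trial; a real Trial runs the startup command."""
    global _running, peak_running
    with _lock:
        _running += 1
        peak_running = max(peak_running, _running)
    time.sleep(0.05)  # stand-in for training time
    with _lock:
        _running -= 1
    return trial_index


# max_workers caps concurrency; the range caps the total number of Trials.
with ThreadPoolExecutor(max_workers=MAX_CONCURRENCY) as pool:
    finished = list(pool.map(run_trial, range(MAX_TRIALS)))

print(finished, peak_running)
```

The third Trial starts only after one of the first two finishes, which is why the experiment details page can show Trials in a waiting state.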

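Click Trial List to view all Trials that the experiment generated automatically, together with the status, final metric, and hyperparameter combination of each Trial.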
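The final metric shown in the Trial list comes from the stdout regex configured in the Trial settings. The sketch below assumes that best with Larger is better means taking the largest value matched in stdout, multiplied by the metric weight. The pattern uses a single backslash because a Python raw string already passes `\.` to the regex engine; the console value is kept as documented. The sample stdout is invented for illustration, not real training output.

```python
import re

# Metric key from the Trial settings, written as a Python raw-string regex.
METRIC_PATTERN = re.compile(r"validation: accuracy=([0-9\.]+)")


def extract_metrics(stdout_text):
    """Return every accuracy value matched in the Trial's stdout, in order."""
    return [float(value) for value in METRIC_PATTERN.findall(stdout_text)]


def final_metric(stdout_text, weight=1.0):
    """'best' with 'larger is better': the maximum matched value times its weight."""
    values = extract_metrics(stdout_text)
    return weight * max(values) if values else None


# Invented sample output, for illustration only.
sample_stdout = """epoch 1
validation: accuracy=0.9512
epoch 2
validation: accuracy=0.9734
epoch 3
validation: accuracy=0.9688
"""

print(extract_metrics(sample_stdout))  # [0.9512, 0.9734, 0.9688]
print(final_metric(sample_stdout))     # 0.9734
```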

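Related documents

You can also submit hyperparameter tuning experiments that run on MaxCompute computing resources. For more information, see Best practices for MaxCompute k-means clustering.

For more information about how to use AutoML and how it works, see Automated machine learning (AutoML).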