ApsaraMQ for RocketMQ allows you to configure alert rules by using CloudMonitor. Alert rules help you monitor the status and key metrics of your instance in real time, so that you receive exception notifications as early as possible and can respond to risks in production environments.

Background information

ApsaraMQ for RocketMQ provides fully managed messaging services and a Service Level Agreement (SLA) for each instance edition. The actual metrics of each edition, such as messaging transactions per second (TPS) and message storage, match the metrics that are specified for that edition. For information about the SLAs of different instance editions, see Limits on instance specifications.

You do not need to worry about instance performance. However, you must still monitor your instance usage in production environments to make sure that it does not exceed the limits that are specified for your instance. ApsaraMQ for RocketMQ integrates with CloudMonitor to provide monitoring and alerting services that are free of charge and ready to use. You can use these services to monitor the following items:

Instance usage

If your actual instance usage exceeds the specification limit, ApsaraMQ for RocketMQ forcibly throttles the instance. To prevent faults that are caused by instance throttling, you can configure the instance usage alert in advance and upgrade your instance configurations when an excess usage risk is detected.

Business logic errors

Errors may occur when you send and receive messages. You can configure the invocation error alert to detect and fix errors and prevent negative impacts on your business.

Performance metrics

If your messaging system has requirements on performance metrics such as response time (RT) and message delay, you can configure the corresponding metric alerts in advance to prevent business risks.

Rules for configuring alerts

ApsaraMQ for RocketMQ provides various metrics and monitoring and alerting items. For more information, see Metric details and Metrics. Monitoring items can be divided into the following categories: resource usage, messaging performance, and messaging exceptions.

Based on accumulated best practices in production environments, we recommend that you follow the rules that are described in the following table to configure alerts.

The following monitoring items are basic configurations that are recommended by Alibaba Cloud. ApsaraMQ for RocketMQ also provides other monitoring items. You can configure alerts in a fine-grained manner based on your business requirements. For more information, see Monitoring and alerting.

Category | Monitoring item | Configuration timing and reason | Related personnel |
--- | --- | --- | --- |
Resource usage | | | Resource O&M engineers |
Messaging performance | | | |
Messaging exceptions | | | |

Procedure for configuring alerts

Log on to the ApsaraMQ for RocketMQ console. In the left-side navigation pane, click Instances.

In the top navigation bar, select a region, such as China (Hangzhou). On the Instances page, click the name of the instance that you want to manage.

In the left-side navigation pane, click Monitoring and Alerts. Then, click Create Alert Rule.

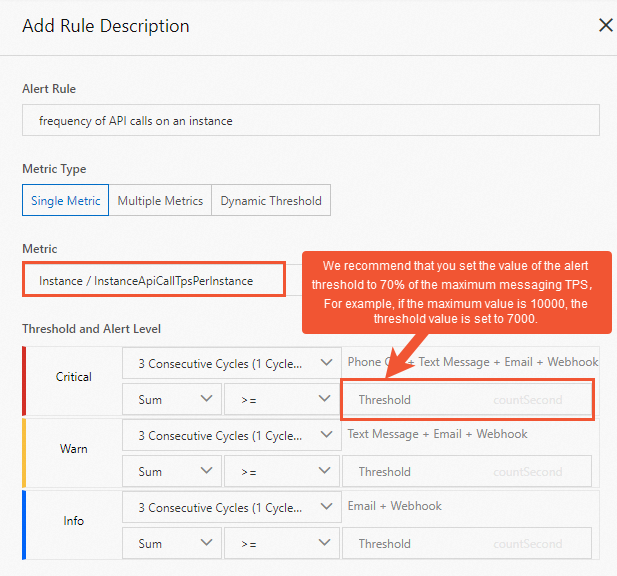

Best practices for configuring alerts about the number of API calls initiated to send and receive messages on an instance

Background: In ApsaraMQ for RocketMQ, the number of API calls initiated to send and receive messages is measured by messaging transactions per second (TPS). A peak messaging TPS is specified for each ApsaraMQ for RocketMQ 5.0 instance. For more information, see Quotas and limits. If the number of API calls that are initiated to send and receive messages on an instance exceeds the specification limit, the instance is throttled.

Risk caused by not configuring the alert: If you do not configure the alert, you are not warned before the number of API calls exceeds the specification limit. As a result, your instance may be throttled and some messages may fail to be sent or received.

Configuration timing: We recommend that you configure the alert after the instance is created and the ratio between the TPS for sending messages and the TPS for receiving messages is specified.

Recommended threshold: We recommend that you set the alert threshold to 70% of the specification limit. For example, if you purchase an instance whose maximum messaging TPS is 10,000 and set the ratio between the TPS for sending messages and the TPS for receiving messages to 1:1, the overall alert threshold is 7,000 TPS. In this case, we recommend that you set the thresholds for the number of API calls initiated for sending messages and the number of API calls initiated for receiving messages to 3,500 each.

Professional Edition and Platinum Edition instances support the elastic TPS feature. If you enable the feature, set the alert threshold to 70% of the sum of the specification limit and the maximum elastic TPS.
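The threshold arithmetic above can be sketched as a small helper. This is an illustration only, not a console or CloudMonitor feature. It assumes that the 70% overall threshold is split between sending and receiving in the configured send:receive ratio, and that elastic TPS, when enabled, is simply added to the specification limit. The function name and parameters are hypothetical, and the 5,000 elastic TPS in the second example is a made-up value.

```python
def tps_alert_thresholds(max_tps, send_ratio, receive_ratio, elastic_tps=0, alert_percent=70):
    """Split an instance-level TPS alert threshold into send and receive thresholds.

    Hypothetical helper. Assumptions:
    - max_tps is the peak messaging TPS of the instance specification.
    - send_ratio:receive_ratio is the configured TPS ratio, for example 1:1.
    - elastic_tps is the maximum elastic TPS if that feature is enabled, otherwise 0.
    """
    if max_tps <= 0 or send_ratio <= 0 or receive_ratio <= 0 or elastic_tps < 0:
        raise ValueError("max_tps and ratio parts must be positive; elastic_tps must be >= 0")
    total_limit = max_tps + elastic_tps
    parts = send_ratio + receive_ratio
    # Integer floor division keeps thresholds whole and never above alert_percent of the limit.
    send_threshold = total_limit * alert_percent * send_ratio // (100 * parts)
    receive_threshold = total_limit * alert_percent * receive_ratio // (100 * parts)
    return send_threshold, receive_threshold


# Example from the text: 10,000 TPS, 1:1 ratio, elastic TPS disabled.
print(tps_alert_thresholds(10_000, 1, 1))  # (3500, 3500)
# Hypothetical elastic case: 10,000 TPS plus 5,000 elastic TPS.
print(tps_alert_thresholds(10_000, 1, 1, elastic_tps=5_000))  # (5250, 5250)
```

Rounding down is a deliberate choice: a slightly lower threshold fires a little earlier, which is the safer direction for a capacity warning.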

Alert handling: After you receive an alert about the number of API calls, we recommend that you perform the following steps to handle the alert:

Go to the Dashboard page in the ApsaraMQ for RocketMQ console, analyze the topics and groups on which the largest number of API calls are initiated, and then determine whether the business changes are normal.

If the business changes are abnormal, contact your users for further analysis.

If the business changes are normal, the specification of your instance is insufficient to maintain normal business operations. In this case, we recommend that you upgrade your instance configurations. For more information, see Upgrade or downgrade instance configurations.

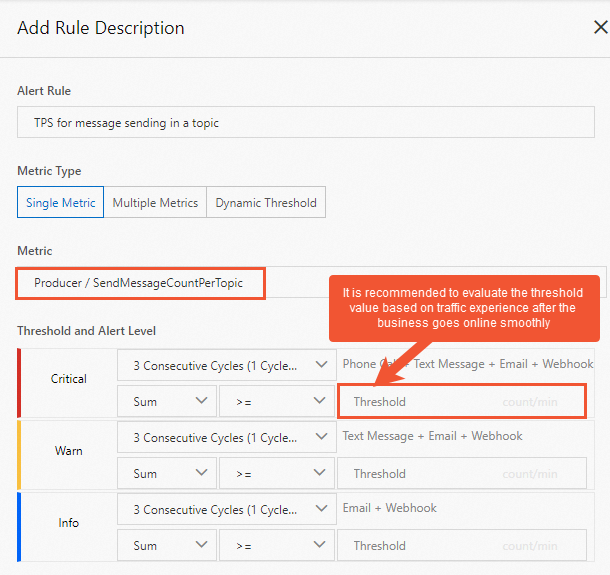

Best practices for configuring the alert about message sending TPS in a topic or message receiving TPS in a consumer group

Background: ApsaraMQ for RocketMQ provides metrics to monitor messaging TPS by topic and consumer group. You can use the metrics to monitor messaging TPS for a specific business and understand your business scale.

Risks caused by not configuring the alert: Messaging TPS in a topic indicates the number of API calls initiated to send and receive messages in the topic. If you do not configure the alert, you are not notified when traffic drops to zero or spikes, which may expose your business to unexpected risks.

Configuration timing: We recommend that you configure the alert after your business stabilizes.

Recommended threshold: We recommend that you configure the threshold based on the traffic volume after your business stabilizes.
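Because the recommended threshold depends on your stable traffic, one way to reason about it is to derive a lower and an upper bound from TPS samples collected while the business was stable. The sketch below is a hypothetical illustration: `drop_fraction` and `spike_factor` are made-up tuning knobs, not product defaults, and the sample values are invented. In CloudMonitor you would enter the resulting numbers as the thresholds of your alert rules.

```python
from dataclasses import dataclass


@dataclass
class TpsBand:
    low: float   # alert when TPS falls to or below this value (for example, traffic drops to zero)
    high: float  # alert when TPS rises to or above this value (traffic spike)


def band_from_baseline(samples, drop_fraction=0.1, spike_factor=2.0):
    """Derive alert bounds from TPS samples collected while the business was stable.

    drop_fraction and spike_factor are hypothetical tuning knobs, not product defaults.
    """
    if not samples:
        raise ValueError("need at least one baseline sample")
    return TpsBand(low=min(samples) * drop_fraction, high=max(samples) * spike_factor)


def classify(tps, band):
    """Label a TPS reading relative to the band."""
    if tps <= band.low:
        return "drop"
    if tps >= band.high:
        return "spike"
    return "normal"


baseline = [100, 120, 90, 110]  # hypothetical per-topic TPS samples
band = band_from_baseline(baseline)
print(classify(0, band), classify(105, band), classify(300, band))  # drop normal spike
```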

Alert handling: After you receive an alert about messaging TPS, we recommend that you perform the following steps to handle the alert:

Go to the Dashboard page in the ApsaraMQ for RocketMQ console and view the messaging TPS curve of the specified topic or group.

Determine whether the traffic changes are normal based on your business model.

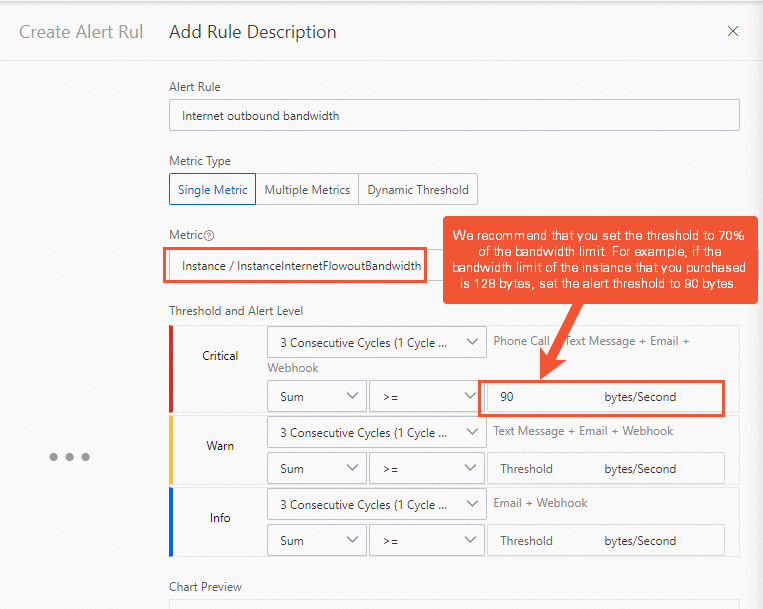

Best practices for configuring the Internet outbound bandwidth alert

Background: ApsaraMQ for RocketMQ 5.0 instances support the Internet access feature. Internet access is subject to the outbound bandwidth limit of the instance. If Internet traffic exceeds this limit, Internet access may be degraded.

Risk caused by not configuring the alert: If you do not configure the alert, you are not notified when the Internet traffic of the instance exceeds the bandwidth limit. This can cause packet loss, timeouts, or failed client invocations.

Configuration timing: We recommend that you configure the alert after the instance is created and the Internet access feature is enabled.

Recommended threshold: We recommend that you set the alert threshold to 70% of the specification limit. For example, if the bandwidth limit of the instance that you purchased is 128 Mbit/s, set the alert threshold to 90 Mbit/s (70% of 128 Mbit/s is 89.6 Mbit/s, rounded up).
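The 70% rule is a one-line calculation, but bandwidth limits and traffic metrics are not always reported in the same unit. The sketch below assumes that the limit is expressed in megabits per second (Mbit/s) and that a megabit is 1,000,000 bits. If your traffic metric is reported in bytes per second, compare it against the converted value. The function names are hypothetical.

```python
def bandwidth_threshold_mbps(limit_mbps, alert_percent=70):
    """Alert threshold in Mbit/s at alert_percent of the purchased bandwidth (hypothetical helper)."""
    return limit_mbps * alert_percent / 100


def mbps_to_bytes_per_second(mbps):
    """Convert Mbit/s to bytes/s, assuming decimal megabits (1 Mbit = 1,000,000 bits) and 8 bits per byte."""
    return mbps * 1_000_000 / 8


threshold = bandwidth_threshold_mbps(128)
print(threshold)  # 89.6, which the example above rounds to 90
print(mbps_to_bytes_per_second(threshold))  # the same threshold in bytes per second
```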

Alert handling: After you receive an alert about Internet outbound bandwidth, we recommend that you perform the following steps to handle the alert:

Go to the Dashboard page in the ApsaraMQ for RocketMQ console and check whether the business changes are normal.

If the business changes are abnormal, contact your users for further analysis.

If the business changes are normal, the specification of your instance is insufficient to maintain normal business operations. In this case, we recommend that you upgrade your instance configurations. For more information, see Upgrade or downgrade instance configurations.

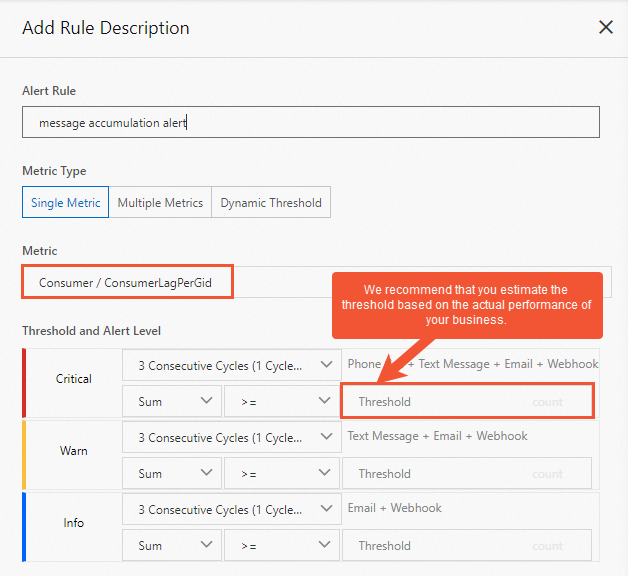

Best practices for configuring the message accumulation alert

Statistics about message accumulation may fluctuate and contain errors. We recommend that you do not set the threshold for accumulated messages to a value less than 100. If even a small number of accumulated messages affects your business, we recommend that you configure the consumption delay time alert instead to monitor message accumulation.

Background: ApsaraMQ for RocketMQ allows you to monitor message accumulation by consumer group. You can use the message accumulation alert to prevent faults that are caused by message accumulation.

Risks caused by not configuring the alert: Message accumulation is a typical scenario and capability of ApsaraMQ for RocketMQ. In scenarios in which messages must be processed in real time, you must monitor and manage the number of accumulated messages to prevent negative impacts caused by message accumulation on your business.

Configuration timing: We recommend that you configure the alert after your business stabilizes.

Recommended threshold: We recommend that you configure the threshold based on the actual performance of your business.
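The guidance in this section can be summarized as a decision: alert on accumulated messages when the backlog you can tolerate is at least 100, and otherwise alert on consumption delay time. The sketch below is hypothetical. The returned metric names are illustrative labels, not CloudMonitor metric IDs, and the tolerance values are inputs that you determine from your own business.

```python
MIN_ACCUMULATION_THRESHOLD = 100  # lower bound recommended for accumulation thresholds


def choose_accumulation_alert(tolerable_backlog, tolerable_delay_seconds):
    """Pick which alert to configure for a consumer group (hypothetical helper).

    tolerable_backlog: number of accumulated messages your business can tolerate.
    tolerable_delay_seconds: consumption delay your business can tolerate.
    Returns (illustrative metric label, threshold).
    """
    if tolerable_backlog >= MIN_ACCUMULATION_THRESHOLD:
        return ("message_accumulation", tolerable_backlog)
    # Small backlogs fall within the noise of the accumulation statistics,
    # so alert on consumption delay time instead.
    return ("consumption_delay_seconds", tolerable_delay_seconds)


print(choose_accumulation_alert(500, 60))  # ('message_accumulation', 500)
print(choose_accumulation_alert(20, 30))   # ('consumption_delay_seconds', 30)
```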

Alert handling: After you receive an alert about message accumulation, we recommend that you perform the following steps to handle the alert:

Go to the Dashboard page in the ApsaraMQ for RocketMQ console, view the change trend of the message accumulation curve of the specified consumer group, and then find the time when message accumulation started.

Analyze the cause of message accumulation based on the business changes and application logs. For information about the consumption mechanism of accumulated messages, see Consumer types.

Determine whether to scale out the consumer application or fix the consumption logic based on the cause of message accumulation.

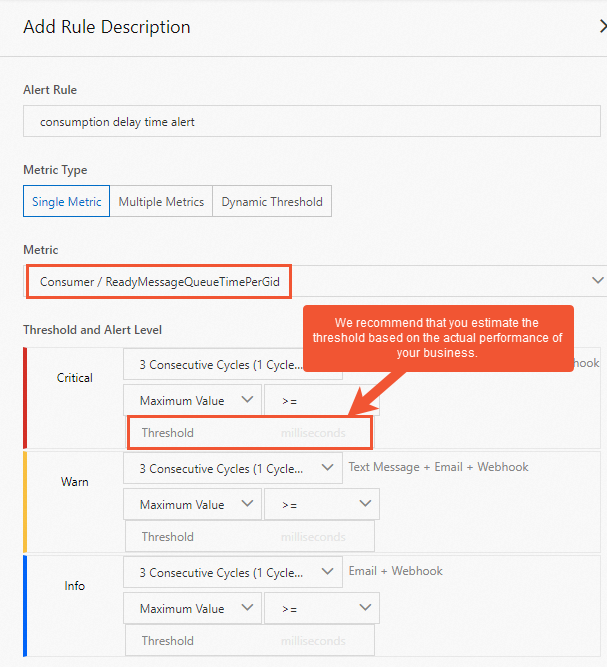

Best practices for configuring the consumption delay time alert

Consumption delay time is calculated based on the delay time of the first unconsumed message in a consumer group. Consumption delay time is cumulative and sensitive to business changes. After you receive an alert about consumption delay time, you must determine whether a small number of messages or all messages are delayed.
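To illustrate why a single stuck message can raise the group-level delay, the sketch below computes the delay from the earliest unconsumed message and, separately, the share of pending messages that are individually late. It assumes that each pending message has a hypothetical `ready_time` (epoch seconds when it became consumable). This is a model of the definition above, not how the service computes the metric internally.

```python
from dataclasses import dataclass


@dataclass
class PendingMessage:
    msg_id: str
    ready_time: float  # hypothetical: epoch seconds when the message became consumable


def consumption_delay(pending, now):
    """Delay of the earliest unconsumed message, in seconds; 0 if nothing is pending."""
    if not pending:
        return 0.0
    return now - min(m.ready_time for m in pending)


def delayed_share(pending, now, delay_threshold):
    """Fraction of pending messages whose own delay exceeds delay_threshold seconds."""
    if not pending:
        return 0.0
    late = sum(1 for m in pending if now - m.ready_time > delay_threshold)
    return late / len(pending)


pending = [
    PendingMessage("a", 0.0),    # one old message, for example stuck in retries
    PendingMessage("b", 590.0),
    PendingMessage("c", 595.0),
]
now = 600.0
print(consumption_delay(pending, now))  # 600.0
print(f"{delayed_share(pending, now, 60):.2f}")  # 0.33: only a small number of messages are late
```

A high delay together with a low late share suggests that a few messages are delayed. A high delay together with a high late share suggests that consumption as a whole is falling behind.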

Background: ApsaraMQ for RocketMQ allows you to monitor consumption delay by consumer group. The consumption delay time alert provides a detailed metric for analyzing message accumulation.

Risks caused by not configuring the alert: Message accumulation is a typical scenario and capability of ApsaraMQ for RocketMQ. In scenarios in which messages must be processed in real time, you must monitor and manage the number of accumulated messages and the consumption delay time to prevent negative impacts caused by message accumulation on your business.

Configuration timing: We recommend that you configure the alert after your business stabilizes.

Recommended threshold: We recommend that you configure the threshold based on the actual performance of your business.

Alert handling: After you receive an alert about consumption delay time, we recommend that you perform the following steps to handle the alert:

Go to the Dashboard page in the ApsaraMQ for RocketMQ console, view the change trend of the message accumulation curve of the specified group, and then find the time when message accumulation started.

Analyze the cause of message accumulation based on the business changes and application logs. For information about the consumption mechanism of accumulated messages, see Consumer types.

Determine whether to scale out the consumer application or fix the consumption logic based on the cause of message accumulation.

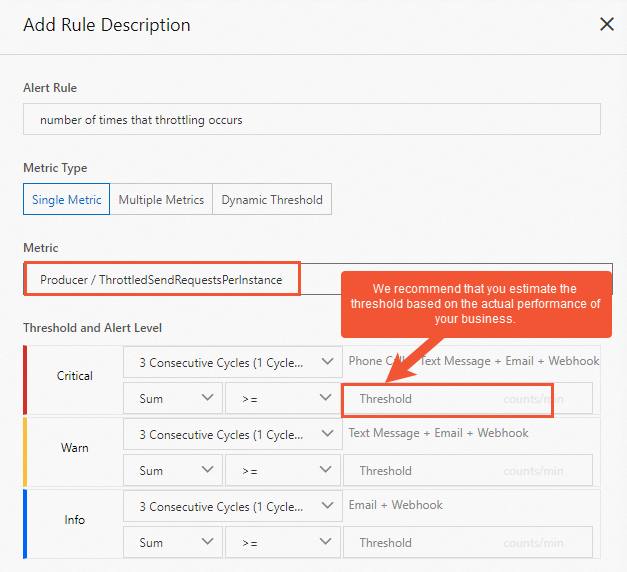

Best practices for configuring the alert about the number of times that throttling occurs

Background: ApsaraMQ for RocketMQ allows you to use throttling events on an instance as alert metrics. This helps you understand how throttling affects your business.

Risks caused by not configuring the alert: Frequent throttling indicates that your traffic often exceeds the specification limit, in which case we recommend that you upgrade your instance configurations. Without the alert, you may not detect throttling in time.

Configuration timing:

We recommend that you configure the alert about the number of times that throttling occurs on an instance after the instance is created.

We recommend that you configure the alert about the number of times that throttling occurs in a topic or consumer group after your business stabilizes.

Recommended threshold: We recommend that you configure the threshold based on the actual performance of your business.

Alert handling: After you receive an alert about the number of times that throttling occurs, we recommend that you perform the following steps to handle the alert:

Go to the Dashboard page in the ApsaraMQ for RocketMQ console, view the messaging TPS curve of the specified instance, topic, or consumer group, and then analyze the time when throttling occurred and the throttling pattern.

Based on the statistics on the dashboard, check the topics or consumer groups that use the most TPS to determine whether the traffic increase meets your business requirements.

If the traffic increase meets your business requirements, upgrade your instance configurations. Otherwise, troubleshoot the issue.
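The handling steps above can be sketched as two small checks: count throttling events per resource in a time window, and rank topics or consumer groups by TPS to see which ones consume the most capacity. Everything here is hypothetical: the event list and TPS figures are made-up inputs that you would read from the dashboard or your own metric export, and the alert is assumed to fire when the count reaches the threshold (greater than or equal to).

```python
from collections import Counter


def throttle_alert(events, threshold):
    """Return resources whose throttling count in the window reaches the threshold.

    events: iterable of resource names (instance, topic, or group), one entry per throttling event.
    """
    counts = Counter(events)
    return {name: n for name, n in counts.items() if n >= threshold}


def top_tps_consumers(tps_by_resource, n=3):
    """Rank resources by TPS, highest first, and keep the top n."""
    return sorted(tps_by_resource.items(), key=lambda kv: kv[1], reverse=True)[:n]


events = ["topic-a", "topic-a", "group-b", "topic-a"]  # hypothetical throttling events
print(throttle_alert(events, 3))  # {'topic-a': 3}

tps = {"topic-a": 4000, "topic-b": 1500, "group-c": 2500}  # hypothetical TPS readings
print(top_tps_consumers(tps, 2))  # [('topic-a', 4000), ('group-c', 2500)]
```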