Fees are incurred when you use MaxCompute for development. If daily consumption is stable in most time but the fees surge in a specific period of time, you need to identify the cause of this issue. You can perform a cost analysis to identify the projects and jobs that cause the surges, except for surges due to business growth. Then, you can optimize and adjust the jobs at the earliest opportunity to optimize costs. This topic describes how to troubleshoot an unexpected surge in the postpaid fees of MaxCompute based on bill details and MaxCompute Information Schema.

Background information

In this topic, the following general principles are used to troubleshoot an unexpected surge in the postpaid fees of MaxCompute.

Confirm the date when the cost surges in the Expenses and Costs console and identify the billable items or projects that cause unexpected surges on that day.

Analyze the reason of the unexpected cost surge.

If computing fees are higher than expected, you can use the TASKS_HISTORY data in the Information Schema view to obtain the number of jobs and the top N most costly jobs.

If storage fees are higher than expected, you can download usage records to analyze the changes in the storage fees.

If the fees for data downloads over the Internet are higher than expected, you can use the TUNNELS_HISTORY data in the Information Schema view to obtain the changes in the download fees.

Step 1: Obtain the Information Schema service

From March 1, 2024, MaxCompute no longer automatically provides the project-level Information Schema service for new projects. By default, the package of the project-level Information Schema service is not available for the projects that are created on or after March 1, 2024. If you want to query metadata, you can use the tenant-level Information Schema service to obtain more comprehensive information. For more information about how to use the tenant-level Information Schema service, see Tenant-level Information Schema.

Before you use Information Schema for an existing project, you must be granted the permissions to access the project metadata. To obtain the permissions, install the Information Schema permission package as the project owner or a RAM user that is assigned the Super_Administrator role. For more information, see the "Assign a role to a user" section in Perform access control based on project-level roles. You can use one of the following methods to install the Information Schema permission package. For more information about the features and limits of Information Schema, see Project-level Information Schema.

Log on to the MaxCompute client and run the following command:

install package Information_Schema.systables;Log on to the DataWorks console and go to the Ad Hoc Query page. For more information about ad hoc queries, see Use the ad-hoc query feature to execute SQL statements (optional). Run the following command:

install package Information_Schema.systables;

To collect metadata of multiple MaxCompute projects, you must install the Information Schema permission package for each MaxCompute project. Then, you must insert the backup metadata of each MaxCompute project into the same table for statistical analysis.

We recommend that you use the tenant-level Information Schema service to query the metadata and usage history of all projects of the current user account. For more information, see Tenant-level Information Schema.

(Optional) Step 2: Grant permissions to users except the project owner

The views provided by Information Schema contain all the user data at the project level. By default, the owner of a project can view the user data of this project. Other users or roles in the project must be granted the required permissions to view the data. For more information, see Cross-project resource access based on packages.

Syntax of the statements that are used to grant permissions to users or roles:

grant <actions> on package Information_Schema.systables to user <user_name>;

grant <actions> on package Information_Schema.systables to role <role_name>;actions: the permissions that you want to grant. Set the value to Read.

user_name: an Alibaba Cloud account or RAM user that is added to the project.

You can run the list users; command on the MaxCompute client to obtain user accounts.

role_name: a role that is added to the project.

You can run the

list roles;command on the MaxCompute client to query the name of the role.

Example:

grant read on package Information_Schema.systables to user RAM$Bob@aliyun.com:user01;(Optional) Step 3: Download and back up metadata

For historical jobs that are completed in a project, MaxCompute retains the data that was generated in the previous 14 days. If you need to frequently query data that was generated in the previous 14 days, we recommend that you back up metadata to your project on a regular basis. If you need to perform ad hoc queries only on the running history of jobs in the previous 14 days, skip this step.

Log on to the MaxCompute client and run the following commands to create metadata backup tables:

-- project_name specifies the name of the MaxCompute project. -- Create the tasks_history backup table. create table if not exists <project_name>.tasks_history ( task_catalog STRING ,task_schema STRING ,task_name STRING ,task_type STRING ,inst_id STRING ,status STRING ,owner_id STRING ,owner_name STRING ,result STRING ,start_time DATETIME ,end_time DATETIME ,input_records BIGINT ,output_records BIGINT ,input_bytes BIGINT ,output_bytes BIGINT ,input_tables STRING ,output_tables STRING ,operation_text STRING ,signature STRING ,complexity DOUBLE ,cost_cpu DOUBLE ,cost_mem DOUBLE ,settings STRING ,ds STRING ); -- Create the tunnels_history backup table. create table if not exists <project_name>.tunnels_history ( tunnel_catalog string ,tunnel_schema string ,session_id string ,operate_type string ,tunnel_type string ,request_id string ,object_name string ,partition_spec string ,data_size bigint ,block_id bigint ,offset bigint ,length bigint ,owner_id string ,owner_name string ,start_time datetime ,end_time datetime ,client_ip string ,user_agent string ,object_type string ,columns string ,ds string );Go to the DataWorks DataStudio page, create an ODPS SQL node named history_backup, and configure periodic scheduling to write data to the tasks_history and tunnels_history backup tables at regular intervals. Click the

icon in the upper-left corner to save the table data.

icon in the upper-left corner to save the table data. For more information about how to create an ODPS SQL node, see Develop a MaxCompute SQL task.

The following sample commands are used to run the ODPS SQL node.

-- project_name specifies the name of the MaxCompute project. use <project_name>; -- Insert data into the tasks_history table. insert into table <project_name>.tasks_history select task_catalog,task_schema ,task_name,task_type STRING,inst_id,`status`,owner_id,owner_name,result,start_time,end_time,input_records,output_records,input_bytes,output_bytes,input_tables,output_tables,operation_text,signature,complexity,cost_cpu,cost_mem,settings,ds from information_schema.tasks_history where ds ='${datetime1}'; -- Insert data into the tunnels_history table. insert into table <project_name>.tunnels_history select tunnel_catalog,tunnel_schema,session_id,operate_type,tunnel_type,request_id,object_name,partition_spec,data_size,block_id,offset,length,owner_id,owner_name,start_time,end_time,client_ip,user_agent,object_type,columns,ds from information_schema.tunnels_history where ds ='${datetime1}';${datetime1}is a scheduling parameter of DataWorks. To configure this parameter, perform the following operations: On the right side of the ODPS SQL node tab, click the Properties tab. In the General section of the Properties panel, add the ${datetime1} parameter and assign the${yyyymmdd}value to this parameter.NoteTo collect and analyze the metadata of multiple MaxCompute projects at the same time, you can create multiple ODPS SQL nodes and write the metadata of these MaxCompute projects to the same data backup table.

Step 4: Analyze the projects and billable items that cause unexpected cost surges in the Expenses and Costs console

Go to the Expenses and Costs console and use one of the following methods to analyze the projects and billable items that cause unexpected cost surges. For more information about how to go to the Expenses and Costs console, see View billing details.

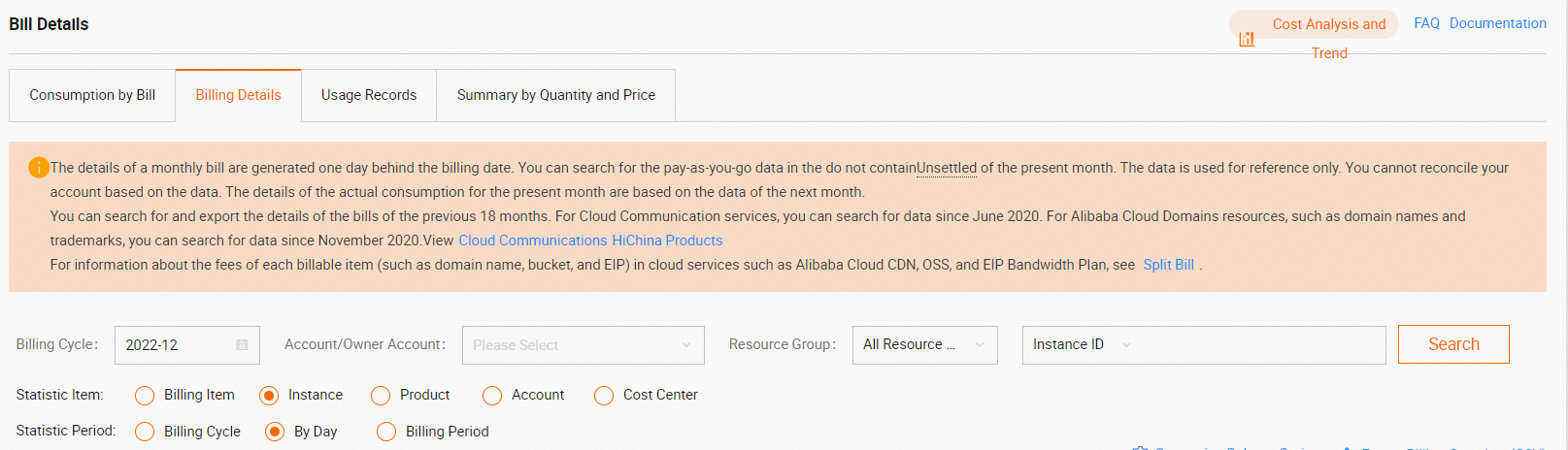

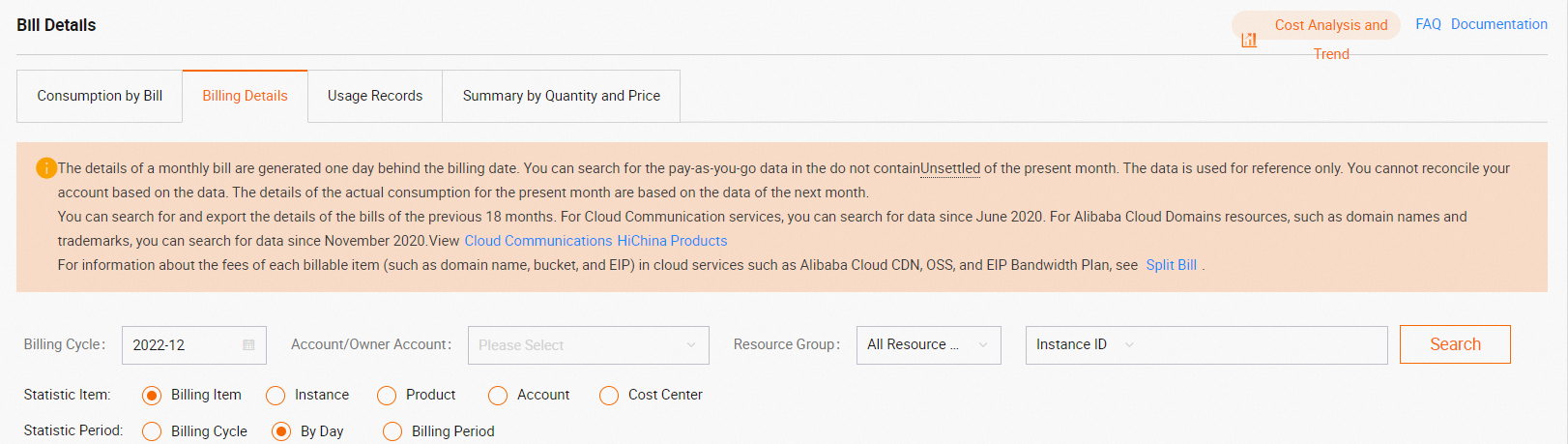

On the Billing Details tab of the Bill Details page, select Instance for Statistic Item and select By Day for Statistic Period to search for bills and identify the projects that generate high costs based on instance IDs. If the cost of a project is higher than expected, analyze the project.

On the Billing Details tab of the Bill Details page, select Billing Item for Statistic Item and select By Day for Statistic Period to search for bills and identify the billable items that generate high costs. You can search for a project based on Instance ID and analyze its billable items that generate high costs.

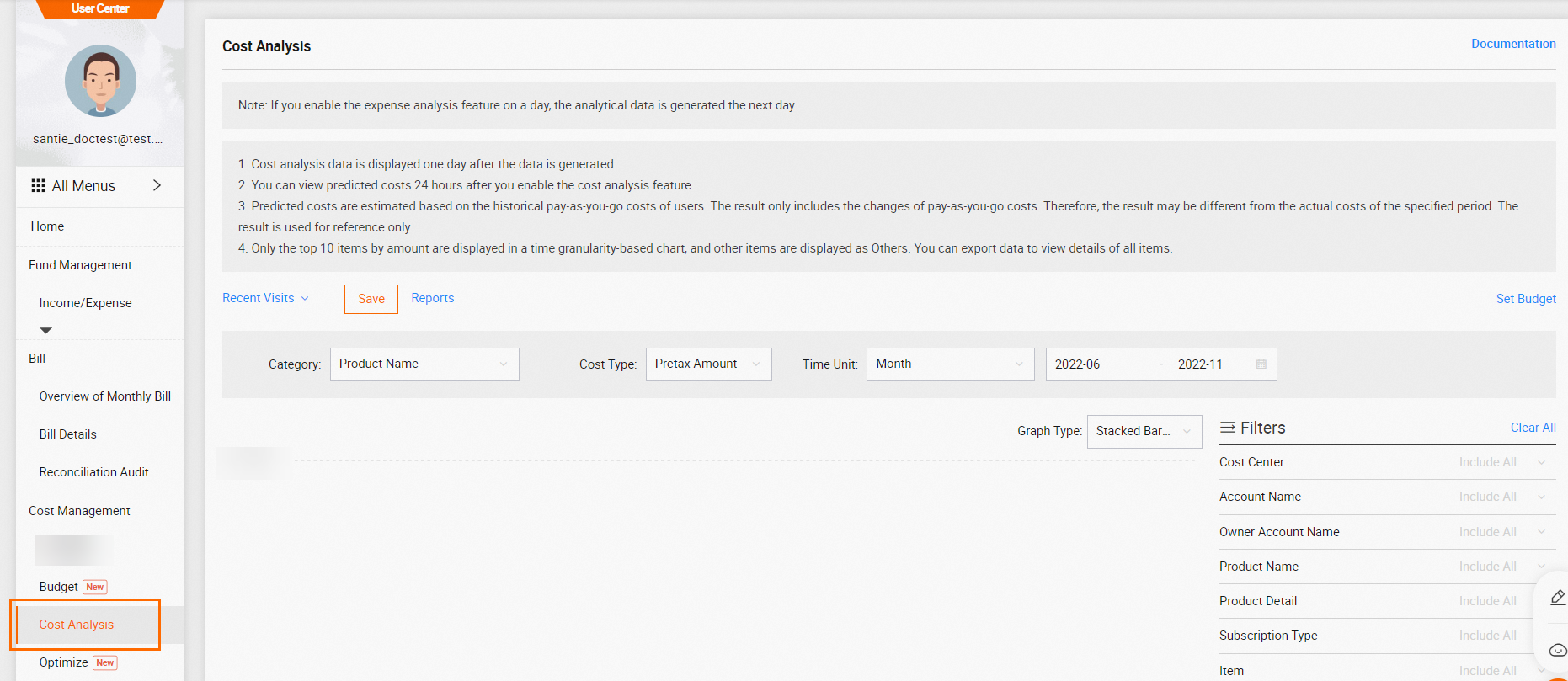

Identify the billable items that cause cost surges on the Cost Analysis page.

Step 5: Troubleshoot the cost surge issue

Analyze and troubleshoot the cost surge issue based on projects and billable items.

Troubleshoot unexpected surges in computing fees of SQL jobs

If the overall cost of your SQL jobs, including the SQL jobs that involve external tables, is high, unexpected cost surges may be caused by a single job, repeated running of jobs, or invalid configurations of scheduling properties.

Query the instance ID (inst_id) of the high-cost job and view the execution information about the job.

Log on to the MaxCompute client. Run the

usecommand to switch to the high-cost project that is identified in Step 4 and use TASKS_HISTORY to query the job fees. Sample commands:-- Enable the MaxCompute V2.0 data type edition. For more information about the data types supported by the MaxCompute V2.0 data type edition, see the "MaxCompute V2.0 data type edition" topic. set odps.sql.decimal.odps2=true; select inst_id --- Instance ID , input_bytes --- Amount of input data ( ,complexity ,cast(input_bytes/1024/1024/1024 * complexity * 0.3 as DECIMAL(18,5) ) cost_sum ,get_json_object(settings, "$.SKYNET_ID") SKYNET_ID --- ID of the DataWorks scheduling task from information_schema.tasks_history -- If you want to query metadata that was generated in the previous 14 days, you must query the table that is backed up in Step 3. The table name is in the <project_name>.tasks_history format. where task_type = 'SQL' OR task_type = 'SQLRT' AND ds = 'Date partition to be queried' ORDER BY cost_sum DESC LIMIT 10000 ;NoteComputing fees of an SQL job = Amount of input data × Complexity of SQL statements × Unit price (USD 0.0438/GB)

In this example,

task_type = 'SQL'indicates the SQL job, andtask_type = 'SQLRT'indicates the SQL query acceleration job.

View

SKYNET_IDof the high-cost SQL job. SKYNET_ID indicates the ID of the DataWorks scheduling task.If the ID is available, view the node execution details in the DataWorks console.

If no ID is available, the task is not initiated by a specific DataWorks scheduling node. You can view the execution information based on

inst_id. Sample command:select operation_text from information_schema.tasks_history where ds='<Task-mapped date partition>' and inst_id='<inst_id>';

Find the repeatedly running jobs.

Log on to the MaxCompute client. Run the use command to switch to the high-cost project that is identified in Step 4 and use TASKS_HISTORY to query the repeatedly running jobs. Sample commands:

-- Analyze the trend of the number of jobs.

SELECT signature

,ds

,COUNT(*) AS tasknum

FROM information_schema.tasks_history

-- If you want to query metadata that was generated in the previous 14 days, you must query the table that is backed up in Step 3. The table name is in the <project_name>.tasks_history format.

where task_type = 'SQL' OR task_type = 'SQLRT'

AND ds >= 'Date partition to be queried'

GROUP BY ds

,signature

ORDER BY tasknum DESC

LIMIT 10000

;

-- Confirm the abnormal signature and view the recent execution status of the SQL job.

SELECT *

FROM information_schema.tasks_history

-- If you want to query metadata that was generated in the previous 14 days, you must query the table that is backed up in Step 3. The table name is in the <project_name>.tasks_history format.

where signature = 'Abnormal signature'

AND ds >= 'Date partition to be queried'

;Troubleshoot unexpected surges in computing fees of Spark jobs

If the overall cost of your Spark jobs is high, you can query the instance ID (inst_id) of the high-cost job and view the execution information about the job.

Log on to the MaxCompute client. Run the

usecommand to switch to the high-cost project that is identified in Step 4 and use TASKS_HISTORY to query the job fees. Sample commands:-- Enable the MaxCompute V2.0 data type edition. For more information about the data types supported by the MaxCompute V2.0 data type edition, see the "MaxCompute V2.0 data type edition" topic. set odps.sql.decimal.odps2=true; select inst_id -- Instance ID ,cost_cpu -- CPU consumption of the job (100 indicates 1 core × s) For example, if 10 CPU cores run for 5 seconds, the value of cost_cpu is 5000, which is calculated by using the following formula: 10 × 100 × 5. ,cast(cost_cpu/100/3600 * 0.66 as DECIMAL(18,5) ) cost_sum from information_schema.tasks_history -- If you want to query metadata that was generated in the previous 14 days, you must query the table that is backed up in Step 3. The table name is in the <project_name>.tasks_history format. where task_type = 'CUPID' and status='Terminated' AND ds = 'Date partition to be queried' ORDER BY cost_sum DESC LIMIT 10000 ;NoteComputing fees of a Spark job on the day = Total billable hours on the day × Unit price (USD 0.1041/Hour/Task).

task_type = 'CUPID'indicates the Spark job.

View the execution information based on

inst_id. Sample command:select operation_text from information_schema.tasks_history where ds='Task-mapped date partition' and inst_id='<inst_id>';

Troubleshoot unexpected surges in computing fees of MapReduce jobs

If the overall cost of your MapReduce jobs is high, you can query the instance ID (inst_id) of the high-cost job and view the execution information about the job.

Log on to the MaxCompute client. Run the

usecommand to switch to the high-cost project that is identified in Step 4 and use TASKS_HISTORY to query the job fees. Sample commands:-- Enable the MaxCompute V2.0 data type edition. For more information about the data types supported by the MaxCompute V2.0 data type edition, see the "MaxCompute V2.0 data type edition" topic. set odps.sql.decimal.odps2=true; select inst_id -- Instance ID ,cost_cpu -- CPU consumption of the job (100 indicates 1 core × s) For example, if 10 CPU cores run for 5 seconds, the value of cost_cpu is 5000, which is calculated by using the following formula: 10 × 100 × 5. ,cast(cost_cpu/100/3600 * 0.46 as DECIMAL(18,5) ) cost_sum from information_schema.tasks_history -- If you want to query metadata that was generated in the previous 14 days, you must query the table that is backed up in Step 3. The table name is in the <project_name>.tasks_history format. where task_type = 'LOT' and status='Terminated' AND ds = 'Date partition to be queried' ORDER BY cost_sum DESC LIMIT 10000 ;View the execution information based on

inst_id. Sample command:select operation_text from information_schema.tasks_history where ds='Task-mapped date partition' and inst_id='<inst_id>';

Troubleshoot unexpected cost surges in storage

You need to query storage fees on the Usage Records tab on the Bill Details page.

Download usage records. For more information, see the "Step 1: Download the usage records of bills" section in Analyze the usage records of MaxCompute bills.

Upload usage records. For more information, see the "Step 2 (Optional): Upload the usage records to MaxCompute" section in Analyze the usage records of MaxCompute bills.

Perform SQL analysis. For more information, see the "Step 3: Analyze the usage records of bills" section in Analyze the usage records of MaxCompute bills.

Troubleshoot unexpected cost surges in data downloads over the Internet

If the overall cost of data downloads over the Internet is high, you can perform the following operations to analyze the types of high-cost downloads and the periods in which high-cost downloads occur:

Log on to the MaxCompute client. Run the use command to switch to the high-cost project that is identified in Step 4 and use TUNNELS_HISTORY to query the download fees. Sample commands:

-- Enable the MaxCompute V2.0 data type edition. For more information about the data types supported by the MaxCompute V2.0 data type edition, see the "MaxCompute V2.0 data type edition" topic.

set odps.sql.decimal.odps2=true;

SELECT ds

,operate_type

,SUM(CAST(data_size / 1024 / 1024 / 1024 * 0.8 AS DECIMAL(18,5))) download_fee

FROM information_schema.tunnels_history

WHERE operate_type = 'DOWNLOADLOG'

OR operate_type = 'DOWNLOADINSTANCELOG'

AND ds >= 'Date partition to be queried'

GROUP BY ds

,operate_type

ORDER BY download_fee DESC

;Fees of a data download over the Internet = Amount of downloaded data × Unit price (USD 0.1166/GB)

You can also analyze the trend of download fees over a specific period of time based on the execution results. You can run the tunnel show history command to view download history information. For more information, see Tunnel commands.

References

For more information about cost optimization, see Overview.