If you want to know the distribution of your MaxCompute fees and prevent the fees from exceeding your expectations when you use MaxCompute, you can obtain and analyze your MaxCompute bills to maximize resource utilization and reduce costs. This topic describes how to analyze the distribution of MaxCompute fees based on usage records of bills.

Background information

MaxCompute is a big data analytics platform. You can use the subscription and pay-as-you-go billing methods for computing resources of MaxCompute. You are charged based on MaxCompute projects on a daily basis, and daily bills are generated before 06:00 of the next day. For more information about billable items and billing methods of MaxCompute, see Overview.

Alibaba Cloud provides information about MaxCompute bill fluctuations (fee increases in most cases) during data development or before a version release of MaxCompute. You can analyze the bill fluctuations and improve the performance of jobs in your MaxCompute projects based on the analysis results. You can download the usage records of all commercial services on the Billing Management page in the Alibaba Cloud Management Console. For more information about how to obtain and download bills, see View billing details.

Step 1: Download the usage records of bills

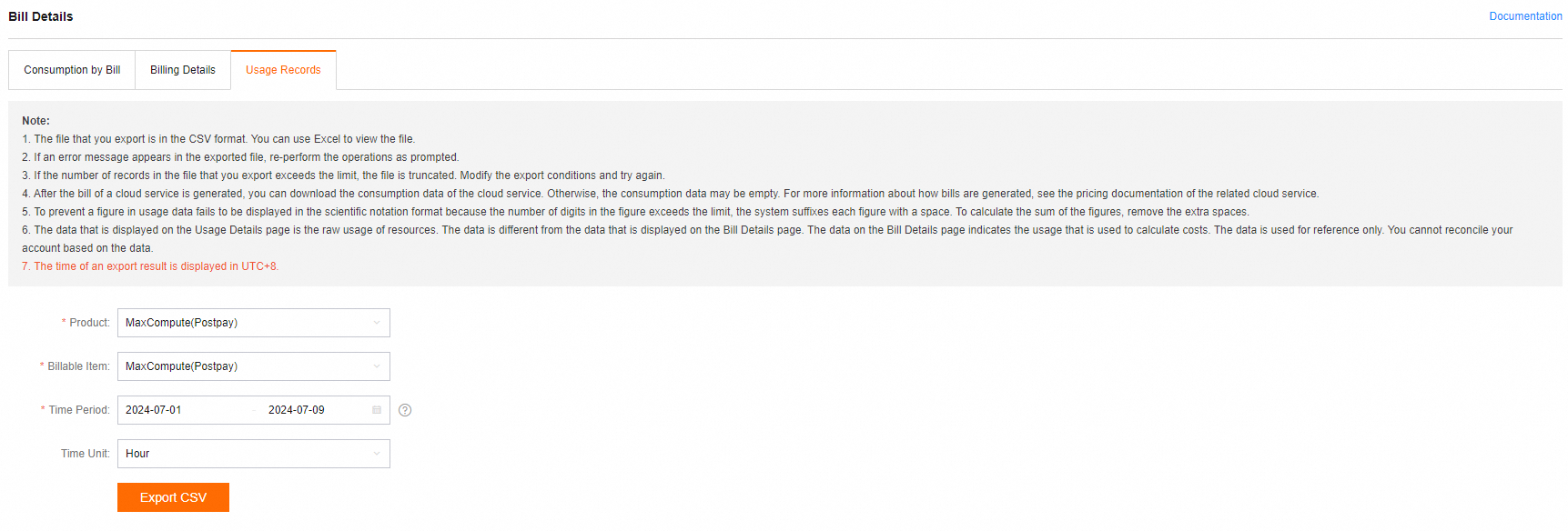

You can go to the Usage Records page to download and view the daily resource usage records and check how fees are generated. For example, you can check the storage and computing fees of each day and determine from which jobs the fees are generated.

Configure the following parameters:

Product: the service for which you want to download usage records. Set this parameter to MaxCompute(Postpay).

Billable Item:

MaxCompute(Postpay): the pay-as-you-go billing method.

ODPSDataPlus: the subscription billing method.

Time Period: the time range within which you want to query data. You can specify the start time and end time of the bills that you want to view.

If you start a job on December 1 and the job is complete on December 2, you must set the start time of the time range to December 1. Otherwise, you cannot find the resource usage of the job in the downloaded usage records. The resource consumption record of the job is displayed in the bill that is generated on December 2.

Click Export CSV. After a period of time, you can go to the Export Record page to download the usage records.

Step 2 (Optional): Upload the usage records to MaxCompute

If you want to use MaxCompute SQL to analyze the usage records of bills, perform this step to upload the usage records of bills to MaxCompute. If you only want to use Excel to analyze the usage records of bills, skip this step.

Create a MaxCompute table named

maxcomputefeeon the MaxCompute client (odpscmd). Sample statements:CREATE TABLE IF NOT EXISTS maxcomputefee ( projectid STRING COMMENT 'ProjectId' ,feeid STRING COMMENT 'MeteringId' ,meteringtime STRING COMMENT 'MeteringTime' ,type STRING COMMENT 'MeteringType, such as Storage, ComputationSQL, or DownloadEx' ,starttime STRING COMMENT 'StartTime' ,storage BIGINT COMMENT 'Storage' ,endtime STRING COMMENT 'EndTime' ,computationsqlinput BIGINT COMMENT 'SQLInput(Byte)' ,computationsqlcomplexity DOUBLE COMMENT 'SQLComplexity' ,uploadex BIGINT COMMENT 'UploadEx' ,download BIGINT COMMENT 'DownloadEx(Byte)' ,cu_usage DOUBLE COMMENT 'MRCompute(Core*Second)' ,Region STRING COMMENT 'Region' ,input_ots BIGINT COMMENT 'InputOTS(Byte)' ,input_oss BIGINT COMMENT 'InputOSS(Byte)' ,source_id STRING COMMENT 'DataWorksNodeID' ,source_type STRING COMMENT 'SpecificationType' ,RecycleBinStorage BIGINT COMMENT 'RecycleBinStorage' ,JobOwner STRING COMMENT 'JobOwner' ,Signature STRING COMMENT 'Signature' );Fields of usage records:

ProjectId: the ID or name of a MaxCompute project of your Alibaba Cloud account or a MaxCompute project of the Alibaba Cloud account to which the current RAM user belongs.

MeteringId: the billing ID. The billing ID indicates the ID of a storage task, an SQL computing task, an upload task, or a download task. The ID of an SQL computing task is specified by InstanceID, and the ID of an upload or download task is specified by Tunnel SessionId.

MeteringType: the billing type. Valid values: Storage, ComputationSql, UploadIn, UploadEx, DownloadIn, and DownloadEx. Only the items that are outlined in red are charged based on billing rules.

Storage: the volume of data that is read per hour. Unit: bytes.

StartTime or EndTime: the time when a job started to run or the time when a job stopped. Only storage data is obtained on an hourly basis.

SQLInput(Byte): the SQL computation item. This field specifies the amount of input data each time an SQL statement is executed. Unit: bytes.

SQLComplexity: the complexity of SQL statements. This field is one of the SQL billing factors.

UploadEx or DownloadEx(Byte): the amount of data that is uploaded or downloaded over the Internet. Unit: bytes.

MRCompute(Core*Second): the billable hours of a MapReduce job or a Spark job. The value is calculated by using the following formula:

Number of cores × Period of time for running a job (second). After calculation, you must convert the result into billable hours.InputOTS(Byte) or InputOSS(Byte): the amount of data that is read from Tablestore or Object Storage Service (OSS) by using Tablestore external tables or OSS external tables. Unit: bytes. These fields are used when fees for Tablestore external tables or OSS external tables are generated.

RecycleBinStorage: the amount of data that is read for backup per hour. Unit: bytes.

Region: the region where the MaxCompute project resides.

JobOwner: the user who submits the job.

Signature: the signature of an SQL job. The signatures of SQL jobs that have the same content and are repeatedly run or scheduled multiple times are the same.

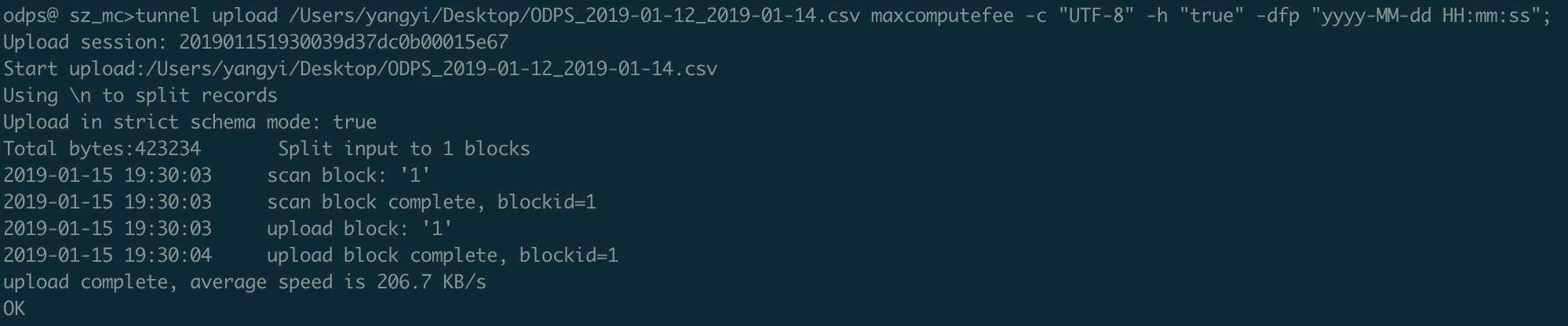

Run the following Tunnel command to upload the usage records of bills.

Before you upload a CSV file that contains the usage records of bills to MaxCompute, make sure that the number and data types of columns in the CSV file are the same as the number and data types of columns in the maxcomputefee table. If the preceding condition is not met, the data upload fails.

tunnel upload ODPS_2019-01-12_2019-01-14.csv maxcomputefee -c "UTF-8" -h "true" -dfp "yyyy-MM-dd HH:mm:ss"; Note

NoteFor more information about the configurations of Tunnel commands, see Tunnel commands.

You can also use the data import feature of DataWorks to upload the usage records of bills. For more information, see Use DataWorks (offline and real-time).

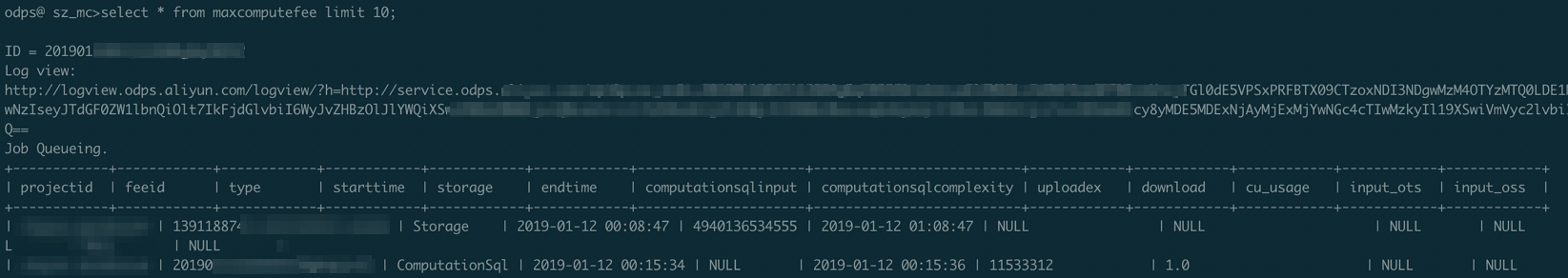

Execute the following statement to check whether all usage records are uploaded:

SELECT * FROM maxcomputefee limit 10;

Step 3: Analyze the usage records of bills

Analyze the fees of SQL jobs

MaxCompute SQL jobs meet the business requirements of 95% of cloud users. The fees of MaxCompute SQL jobs also occupy a large proportion of MaxCompute bills.

Computing fee for an SQL job = Amount of input data × SQL complexity × Price per GB (USD 0.0438)

Analyze usage records by using Excel: Analyze the records whose MeteringType is ComputationSql in the usage records. Sort the fees of SQL jobs and check whether the fees of specific SQL jobs are higher than expected or whether a large number of SQL jobs exist. You can calculate the fee of an SQL job by using the following formula:

Fee of an SQL job = SQLInput(Byte)/1024/1024/1024 × SQL complexity × SQL job unit price.Analyze usage records by using MaxCompute SQL: Before you use MaxCompute SQL to analyze usage records, make sure that the data upload described in Step 2 is complete, and the maxcomputefee table is generated.

-- Sort SQL jobs based on sqlmoney to analyze the fees of SQL jobs. SELECT to_char(endtime,'yyyymmdd') as ds,feeid as instanceid ,projectid ,computationsqlcomplexity -- SQL complexity ,SUM((computationsqlinput / 1024 / 1024 / 1024)) as computationsqlinput -- Amount of input data (GB) ,SUM((computationsqlinput / 1024 / 1024 / 1024)) * computationsqlcomplexity * 0.0438 AS sqlmoney FROM maxcomputefee WHERE TYPE = 'ComputationSql' AND to_char(endtime,'yyyymmdd') >= '20190112' GROUP BY to_char(endtime,'yyyymmdd'),feeid ,projectid ,computationsqlcomplexity ORDER BY sqlmoney DESC LIMIT 10000 ;The following conclusions can be drawn from the execution result:

To reduce the fees of large jobs, you can reduce the amount of data that you want to read and the SQL complexity.

You can summarize the amount of data that is processed on a daily basis based on the

dsfield and analyze the fee trend of SQL jobs in a specific period of time. For example, you can create a line chart in an Excel file or by using tools such as Quick BI, to display the trend.To identify the node that you want to optimize based on the analysis result, you can perform the following steps:

Obtain the LogView URL of the run logs of the instance that is specified by instanceid.

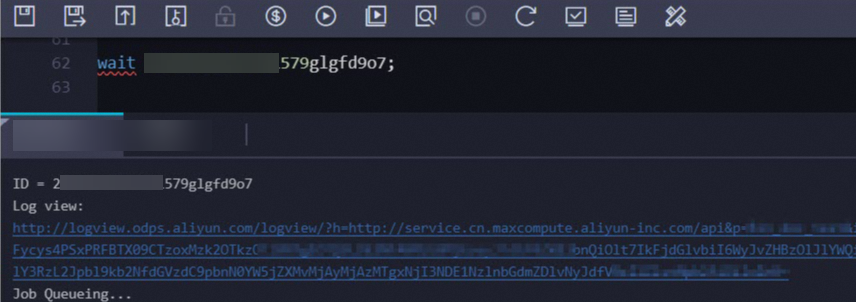

Run the

wait <instanceid>;command on the MaxCompute client (odpscmd) or in the DataWorks console to view the run logs of the instance that is specified by instanceid.

Run the following command to view the details of the job:

DESC instance 2016070102275442go3xxxxxx;The following result is returned:

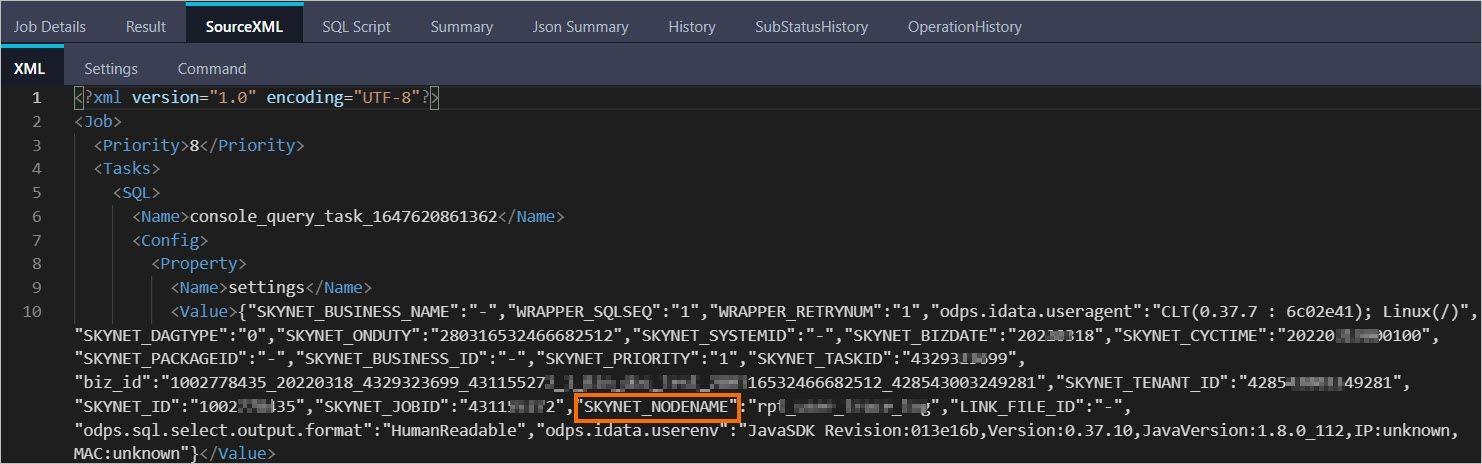

ID 2016070102275442go3xxxxxx Owner ALIYUN$***@aliyun-inner.com StartTime 2016-07-01 10:27:54 EndTime 2016-07-01 10:28:16 Status Terminated console_query_task_1467340078684 Success Query select count(*) from src where ds='20160628';Enter the LogView URL in a web browser and press Enter. In LogView, click the SourceXML tab to view the value of the SKYNET_NODENAME parameter.

Note

NoteFor more information about how to use LogView to view information about jobs, see Use LogView V2.0 to view job information.

If the value of the SKYNET_NODENAME parameter cannot be obtained or the SKYNET_NODENAME parameter is left empty, you can click the SQL Script tab to obtain the code snippet. Then, find the node that you want to optimize in the DataWorks console based on the code snippet and optimize the node. For more information, see DataWorks code search.

In the DataWorks console, search for the node based on the value of the SKYNET_NODENAME parameter and optimize the node.

Analyze the trend of the number of jobs

In most cases, the fee increases with a surge in the number of jobs due to repeated operations or invalid settings of scheduling attributes.

Analyze usage records by using Excel: Analyze the records whose MeteringType is ComputationSql in the usage records. Collect the number of jobs per day for each project and check whether the number of jobs for specific projects greatly fluctuates.

Analyze usage records by using MaxCompute SQL: Before you use MaxCompute SQL to analyze usage records, make sure that the data upload described in Step 2 is complete, and the maxcomputefee table is generated.

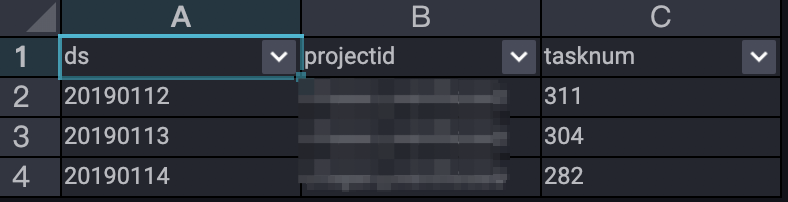

-- Analyze the trend of the number of jobs. SELECT TO_CHAR(endtime,'yyyymmdd') AS ds ,projectid ,COUNT(*) AS tasknum FROM maxcomputefee WHERE TYPE = 'ComputationSql' AND TO_CHAR(endtime,'yyyymmdd') >= '20190112' GROUP BY TO_CHAR(endtime,'yyyymmdd') ,projectid ORDER BY tasknum DESC LIMIT 10000 ;The following figure shows the execution result.

The execution result shows the trend of the number of jobs that were submitted to MaxCompute and were successfully run from January 12, 2019 to January 14, 2019.

The execution result shows the trend of the number of jobs that were submitted to MaxCompute and were successfully run from January 12, 2019 to January 14, 2019.

Analyze storage fees

Use Excel to analyze the reasons for charging CNY 0.01 for storage

This issue is caused by existing data that is stored on MaxCompute and the data volume does not exceed 0.5 GB.

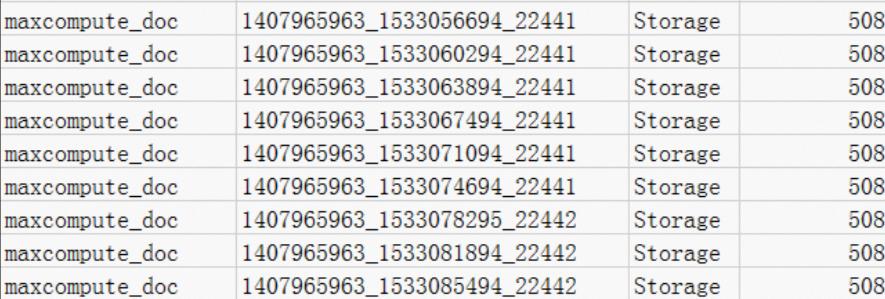

View the records whose MeteringType is Storage. 508 bytes of data is stored for the

maxcompute_docproject. The following figure shows the details. You are charged CNY 0.01 for data whose size is no more than 512 MB based on storage billing rules. If the data is only used for testing, you can perform the following operations to resolve the issue:

If the data is only used for testing, you can perform the following operations to resolve the issue: If only table data is no longer used, execute the

Drop Tablestatement to delete table data in the project.If the project is no longer used, log on to the MaxCompute console and delete the project on the Projects page.

Use Excel to analyze the storage fees of data that is stored

less than one dayView the records whose MeteringType is Storage. A total of 333,507,833,900 bytes of data is stored for the alian project. The data is uploaded at 08:00. Therefore, storage is billed from 09:07 and you are charged for 15-hour storage.

NoteIf the billing cycle is one day, the billing stops at the end of each day. As a result, the last record is not included in the bill of the day.

The average storage within 24 hours is calculated based on storage billing rules. Then, the average storage fee is calculated by using the billing formula.

-- Calculate the average storage. 333507833900 Byte×15/1024/1024/1024/24=194.127109076362103 GB The storage fee per day is calculated by using the following formula. Four decimal places are retained in the result. 194.127109076362103 GB × USD 0.0006 per GB per day = USD 0.1165 per day

Analyze usage records by using MaxCompute SQL: Before you use MaxCompute SQL to analyze usage records, make sure that the data upload described in Step 2 is complete, and the maxcomputefee table is generated.

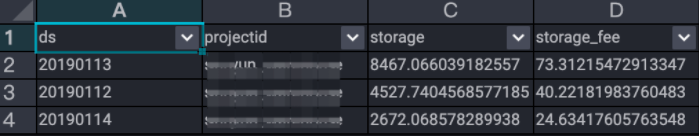

-- Analyze storage fees. SELECT t.ds ,t.projectid ,t.storage ,CASE WHEN t.storage < 0.5 THEN t.storage*0.01 --- If the actually used storage space of a project is greater than 0 MB and less than or equal to 512 MB, the unit price for the storage space is CNY 0.01 per GB per day. WHEN t.storage >= 0.5 THEN t.storage*0.004 --- If the actually used storage space of a project is greater than 512 MB, the unit price for the storage space is CNY 0.004 per GB per day. END storage_fee FROM ( SELECT to_char(starttime,'yyyymmdd') as ds ,projectid ,SUM(storage/1024/1024/1024)/24 AS storage FROM maxcomputefee WHERE TYPE = 'Storage' and to_char(starttime,'yyyymmdd') >= '20190112' GROUP BY to_char(starttime,'yyyymmdd') ,projectid ) t ORDER BY storage_fee DESC ;The following figure shows the execution result.

The following conclusions can be drawn from the execution result:

The following conclusions can be drawn from the execution result:Storage fees increased on January 12, 2019 and decreased on January 14, 2019.

To reduce storage fees, we recommend that you specify a lifecycle for tables and delete unnecessary temporary tables.

Analyze long-term storage, Infrequent Access (IA) storage, long-term storage access, and low-frequency storage access by using MaxCompute SQL: Before you use MaxCompute SQL to analyze the usage records, make sure that the data upload described in Step 2 is complete, and the maxcomputefee table is generated.

-- Analyze long-term storage fees. SELECT to_char(starttime,'yyyymmdd') as ds ,projectid ,SUM(storage/1024/1024/1024)/24*0.0011 AS longTerm_storage FROM maxcomputefee WHERE TYPE = 'ColdStorage' and to_char(starttime,'yyyymmdd') >= '20190112' GROUP BY to_char(starttime,'yyyymmdd') ,projectid; -- Analyze the IA storage fees. SELECT to_char(starttime,'yyyymmdd') as ds ,projectid ,SUM(storage/1024/1024/1024)/24*0.0011 AS lowFre_storage FROM maxcomputefee WHERE TYPE = 'LowFreqStorage' and to_char(starttime,'yyyymmdd') >= '20190112' GROUP BY to_char(starttime,'yyyymmdd') ,projectid; -- Analyze long-term storage access fees. SELECT to_char(starttime,'yyyymmdd') as ds ,projectid ,SUM(computationsqlinput/1024/1024/1024)*0.522 AS longTerm_IO FROM maxcomputefee WHERE TYPE = 'SqlLongterm' and to_char(starttime,'yyyymmdd') >= '20190112' GROUP BY to_char(starttime,'yyyymmdd') ,projectid; -- Analyze IA storage access fees. SELECT to_char(starttime,'yyyymmdd') as ds ,projectid ,SUM(computationsqlinput/1024/1024/1024)*0.522 AS lowFre_IO FROM maxcomputefee WHERE TYPE = 'SqlLowFrequency' and to_char(starttime,'yyyymmdd') >= '20190112' GROUP BY to_char(starttime,'yyyymmdd') ,projectid;

Analyze download fees

For Internet-based data downloads or cross-region data downloads in your MaxCompute project, you are charged based on the amount of data that you download.

Fees of a download job = Amount of downloaded data × Price per GB (USD 0.1166)

Analyze usage records by using Excel: Analyze the records whose MeteringType is DownloadEx.

For example, you find a record for download traffic of 0.036 GB (38,199,736 bytes). In this case, the download fee is calculated based on the billing rules that are described in Download pricing (pay-as-you-go) by using the following formula:

(38,199,736 bytes/1024/1024/1024) × USD 0.1166 per GB = USD 0.004.Optimize data downloads. You can also check whether the service configured for your tunnel is billed due to Internet access. For more information, see Endpoints. For example, you are located in Suzhou, China, which belongs to the China (Shanghai) region, and want to download large amounts of data. In this case, you can use an Elastic Compute Service (ECS) instance in the China (Shanghai) region to download data to your virtual machine (VM). Then, you can use your subscription ECS instance to download the data.

Analyze usage records by using MaxCompute SQL: Before you use MaxCompute SQL to analyze usage records, make sure that the data import described in Step 2 is complete, and the maxcomputefee table is generated.

-- Analyze download fees. SELECT TO_CHAR(starttime,'yyyymmdd') AS ds ,projectid ,SUM((download/1024/1024/1024)*0.1166) AS download_fee FROM maxcomputefee WHERE type = 'DownloadEx' AND TO_CHAR(starttime,'yyyymmdd') >= '20190112' GROUP BY TO_CHAR(starttime,'yyyymmdd') ,projectid ORDER BY download_fee DESC ;

Analyze the fees of MapReduce jobs

Computing fee for a MapReduce job on the day = Total billable hours on the day × Price per hour per task (USD 0.0690)

Analyze usage records by using Excel: Analyze the records whose MeteringType is MapReduce in the usage records. Calculate and sort the fees of MapReduce jobs based on the value that is specified by SpecificationType. The fee of a MapReduce job or a Spark job is calculated by using the following formula:

Number of cores × Period of time for running a job (second)/3600 × Unit price (USD 0.0690).Analyze usage records by using MaxCompute SQL: Before you use MaxCompute SQL to analyze usage records, make sure that the data upload described in Step 2 is complete, and the maxcomputefee table is generated.

-- Analyze the fees of MapReduce jobs. SELECT TO_CHAR(starttime,'yyyymmdd') AS ds ,projectid ,(cu_usage/3600)*0.0690 AS mr_fee FROM maxcomputefee WHERE type = 'MapReduce' AND TO_CHAR(starttime,'yyyymmdd') >= '20190112' GROUP BY TO_CHAR(starttime,'yyyymmdd') ,projectid ,cu_usage ORDER BY mr_fee DESC ;

Analyze the fees of jobs that use Tablestore external tables or OSS external tables

Computing fee for an SQL job that uses Tablestore external tables or OSS external tables = Amount of input data × Price per GB (USD 0.0044)

Analyze usage records by using Excel: Analyze the records whose MeteringType is ComputationSqlOTS or ComputationSqlOSS in the usage records. Sort the computing fees for SQL jobs that use Tablestore external tables or OSS external tables. The computing fee is calculated by using the following formula:

SQLInput(Byte)/1024/1024/1024 × Unit price (USD 0.0044).Analyze usage records by using MaxCompute SQL: Before you use MaxCompute SQL to analyze usage records, make sure that the data upload described in Step 2 is complete, and the maxcomputefee table is generated.

-- Analyze the fees of SQL jobs that use Tablestore external tables. SELECT TO_CHAR(starttime,'yyyymmdd') AS ds ,projectid ,(computationsqlinput/1024/1024/1024)*1*0.03 AS ots_fee FROM maxcomputefee WHERE type = 'ComputationSqlOTS' AND TO_CHAR(starttime,'yyyymmdd') >= '20190112' GROUP BY TO_CHAR(starttime,'yyyymmdd') ,projectid ,computationsqlinput ORDER BY ots_fee DESC ; -- Analyze the fees of SQL jobs that use OSS external tables. SELECT TO_CHAR(starttime,'yyyymmdd') AS ds ,projectid ,(computationsqlinput/1024/1024/1024)*1*0.03 AS oss_fee FROM maxcomputefee WHERE type = 'ComputationSqlOSS' AND TO_CHAR(starttime,'yyyymmdd') >= '20190112' GROUP BY TO_CHAR(starttime,'yyyymmdd') ,projectid ,computationsqlinput ORDER BY oss_fee DESC ;

Analyze the computing fees for Spark jobs

Computing fee for a Spark job on the day = Total billable hours on the day × Price per hour per task (USD 0.1041)

Analyze usage records by using Excel: Analyze the records whose MeteringType is spark in the usage records. Sort the computing fees for Spark jobs. The computing fee for a Spark job is calculated by using the following formula:

Number of cores × Period of time for running a job (second)/3600 × Unit price (USD 0.1041).Analyze usage records by using MaxCompute SQL: Before you use MaxCompute SQL to analyze usage records, make sure that the data upload described in Step 2 is complete, and the maxcomputefee table is generated.

-- Analyze the fees of Spark jobs. SELECT TO_CHAR(starttime,'yyyymmdd') AS ds ,projectid ,(cu_usage/3600)*0.1041 AS mr_fee FROM maxcomputefee WHERE type = 'spark' AND TO_CHAR(starttime,'yyyymmdd') >= '20190112' GROUP BY TO_CHAR(starttime,'yyyymmdd') ,projectid ,cu_usage ORDER BY mr_fee DESC ;

References

TO_CHAR is a date function or a string function. For more information about the TO_CHAR function, see TO_CHAR.