This topic describes how to use Change Data Capture (CDC) connectors for Apache Flink in Alibaba Cloud Realtime Compute for Apache Flink and how to change the name of a connector.

CDC connectors for Apache Flink are open source connectors released under the Apache License 2.0. The services and service-level agreements (SLAs) of CDC connectors for Apache Flink differ from those of the CDC connectors that Alibaba Cloud Realtime Compute for Apache Flink releases commercially.

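If you encounter problems such as configuration failures, deployment failures, or data loss when you use CDC connectors for Apache Flink, look for troubleshooting solutions in the open source community. Alibaba Cloud Realtime Compute for Apache Flink does not provide technical support for CDC connectors for Apache Flink.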

You are responsible for guaranteeing the SLA of CDC connectors for Apache Flink.

Available CDC connectors

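| CDC connector | Description |
| --- | --- |
| | These CDC connectors are provided by Realtime Compute for Apache Flink. You do not need to use CDC connectors for Apache Flink. |
| | Realtime Compute for Apache Flink does not release commercial versions of these CDC connectors. For more information about how to use them, see "Use CDC connectors for Apache Flink". Note: If the default name of a CDC connector for Apache Flink, or the name of a new custom connector, is the same as the name of a built-in connector or an existing custom connector in Realtime Compute for Apache Flink, change the default connector name to prevent name conflicts. For the SQL Server CDC connector and the Db2 CDC connector, you must change the default connector name in the community source code and re-package the connector. For example, you can change sqlserver-cdc to sqlserver-cdc-test. For more information, see "Change the name of a connector". |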

Version mapping between CDC connectors for Apache Flink and VVR

| VVR version | Release version of CDC connectors for Apache Flink |
| --- | --- |
| vvr-4.0.0-flink-1.13 ~ vvr-4.0.6-flink-1.13 | release-1.4 |
| vvr-4.0.7-flink-1.13 ~ vvr-4.0.9-flink-1.13 | release-2.0 |
| vvr-4.0.10-flink-1.13 ~ vvr-4.0.12-flink-1.13 | release-2.1 |
| vvr-4.0.13-flink-1.13 ~ vvr-4.0.14-flink-1.13 | release-2.2 |
| vvr-4.0.15-flink-1.13 ~ vvr-6.0.2-flink-1.15 | release-2.3 |
| vvr-6.0.2-flink-1.15 ~ vvr-8.0.5-flink-1.17 | release-2.4 |
| vvr-8.0.1-flink-1.17 ~ vvr-8.0.7-flink-1.17 | release-3.0 |
| vvr-8.0.11-flink-1.17 ~ vvr-11.1-jdk11-flink-1.20 | release-3.4 |
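As a rough aid for reading the table above, the following Java sketch returns every CDC release whose row covers a given VVR version. The class and method names are hypothetical, and the rule for extracting the numeric part of a VVR version (the text between vvr- and the next hyphen) is an assumption. Some rows overlap at their boundaries (vvr-6.0.2-flink-1.15 appears in two rows, and vvr-8.0.1 through vvr-8.0.5 fall into both release-2.4 and release-3.0), and vvr-8.0.8 through vvr-8.0.10 are not listed, so the sketch can return several releases or none. Confirm such cases against the official documentation.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: looks up CDC releases for a VVR version using the rows of the mapping table. */
public final class VvrCdcVersionMap {

    /** One table row: inclusive lower and upper VVR bounds and the CDC release. */
    private static final class Row {
        final String from;
        final String to;
        final String release;

        Row(String from, String to, String release) {
            this.from = from;
            this.to = to;
            this.release = release;
        }
    }

    // Copied from the version mapping table, including its overlapping boundaries.
    private static final List<Row> ROWS = List.of(
        new Row("vvr-4.0.0-flink-1.13", "vvr-4.0.6-flink-1.13", "release-1.4"),
        new Row("vvr-4.0.7-flink-1.13", "vvr-4.0.9-flink-1.13", "release-2.0"),
        new Row("vvr-4.0.10-flink-1.13", "vvr-4.0.12-flink-1.13", "release-2.1"),
        new Row("vvr-4.0.13-flink-1.13", "vvr-4.0.14-flink-1.13", "release-2.2"),
        new Row("vvr-4.0.15-flink-1.13", "vvr-6.0.2-flink-1.15", "release-2.3"),
        new Row("vvr-6.0.2-flink-1.15", "vvr-8.0.5-flink-1.17", "release-2.4"),
        new Row("vvr-8.0.1-flink-1.17", "vvr-8.0.7-flink-1.17", "release-3.0"),
        new Row("vvr-8.0.11-flink-1.17", "vvr-11.1-jdk11-flink-1.20", "release-3.4"));

    /** Assumed format: "vvr-11.1-jdk11-flink-1.20" yields the components [11, 1]. */
    static int[] parse(String vvr) {
        if (!vvr.startsWith("vvr-")) {
            throw new IllegalArgumentException("Not a VVR version: " + vvr);
        }
        String[] parts = vvr.substring(4).split("-", 2)[0].split("\\.");
        int[] components = new int[parts.length];
        for (int i = 0; i < parts.length; i++) {
            components[i] = Integer.parseInt(parts[i]);
        }
        return components;
    }

    /** Compares version components; missing trailing components count as 0. */
    static int compare(int[] a, int[] b) {
        for (int i = 0; i < Math.max(a.length, b.length); i++) {
            int x = i < a.length ? a[i] : 0;
            int y = i < b.length ? b[i] : 0;
            if (x != y) {
                return Integer.compare(x, y);
            }
        }
        return 0;
    }

    /** Returns every release whose row covers the version; the list may hold 0, 1, or 2 entries. */
    public static List<String> releasesFor(String vvr) {
        int[] version = parse(vvr);
        List<String> matches = new ArrayList<>();
        for (Row row : ROWS) {
            if (compare(parse(row.from), version) <= 0 && compare(version, parse(row.to)) <= 0) {
                matches.add(row.release);
            }
        }
        return matches;
    }

    public static void main(String[] args) {
        System.out.println(releasesFor("vvr-4.0.11-flink-1.13"));
        System.out.println(releasesFor("vvr-6.0.2-flink-1.15"));
    }
}
```

For example, releasesFor("vvr-6.0.2-flink-1.15") returns both release-2.3 and release-2.4, because that version appears in two rows of the table.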

Use CDC connectors for Apache Flink

SQL deployment

1. On the Apache Flink CDC Connectors page, select the required release version of CDC connectors for Apache Flink.

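   Note: We recommend that you use the latest stable version. To prevent compatibility issues, however, select the CDC release version that corresponds to the Ververica Runtime (VVR) version you use. For the version mapping, see "Version mapping between CDC connectors for Apache Flink and VVR".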

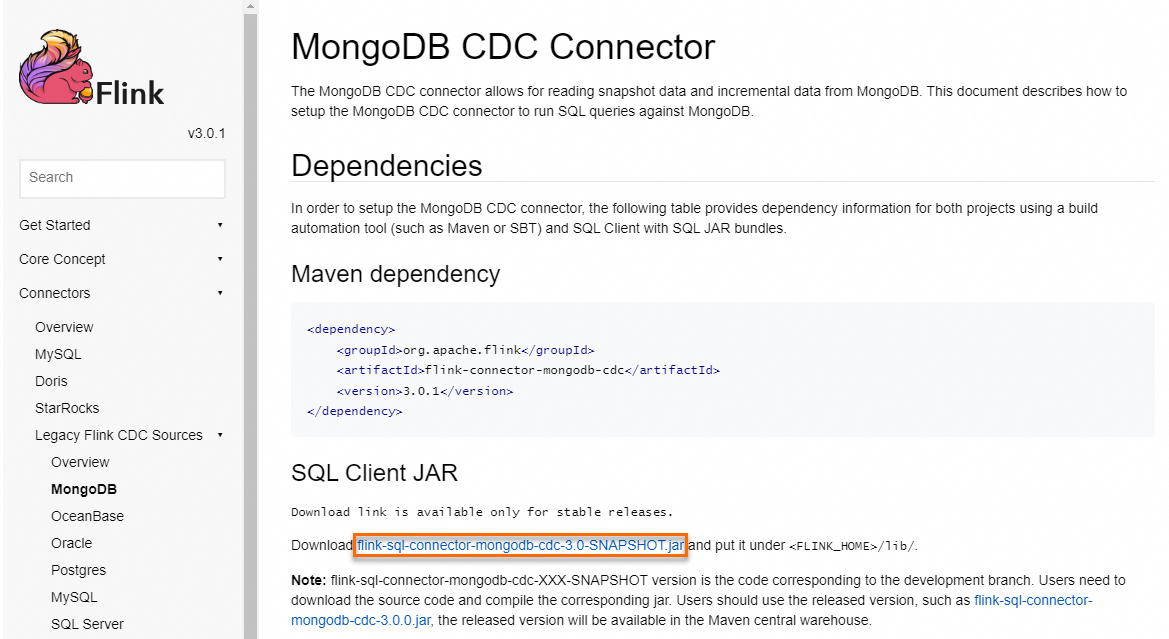

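2. In the table of contents on the left, click [Connectors] and select the desired CDC connector. In the [SQL Client JAR] section of the page that appears, click the link to download the JAR file of the CDC connector.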

   Note: You can also download the required CDC connector JAR file from the Maven repository.

3. In the [Actions] column of your workspace, click [Console]. The development console opens.

4. In the left-side navigation pane, click [Connectors].

5. On the [Connectors] page, click [Create Custom Connector].

6. In the dialog box, upload the JAR file that you downloaded in Step 2. For more information, see "Manage custom connectors".

7. Develop a job in SQL and set the connector option to the name of the CDC connector for Apache Flink. For more information, see "CDC connectors for Apache Flink".
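The SQL step above comes down to a CREATE TABLE statement whose connector option names the uploaded connector. The following Java sketch only assembles that statement as a string so that its shape is visible; CdcDdlSketch is a hypothetical helper, and every option key other than connector (hostname, port, username, password, database-name, table-name) is an assumption modeled on the community SQL Server CDC connector, so check the key names and value formats in the documentation of the release you downloaded. If you renamed the connector as described in "Change the name of a connector", pass the new name, such as sqlserver-cdc-test, instead of sqlserver-cdc.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

/** Hypothetical helper: builds Flink SQL DDL for a table backed by a CDC connector. */
public final class CdcDdlSketch {

    /** Builds a CREATE TEMPORARY TABLE statement with the connector option listed first. */
    public static String createTable(String table, String columns, String connector,
                                     Map<String, String> options) {
        StringJoiner with = new StringJoiner(",\n", "WITH (\n", "\n)");
        // Flink matches this value against the factory identifier of the uploaded connector.
        with.add("  'connector' = " + quote(connector));
        for (Map.Entry<String, String> option : options.entrySet()) {
            with.add("  " + quote(option.getKey()) + " = " + quote(option.getValue()));
        }
        return "CREATE TEMPORARY TABLE " + table + " (\n" + columns + "\n) " + with;
    }

    /** Wraps a string literal in single quotes, doubling embedded quotes as SQL requires. */
    public static String quote(String value) {
        return "'" + value.replace("'", "''") + "'";
    }

    public static void main(String[] args) {
        // Assumed option keys and placeholder values; verify them for your CDC release.
        Map<String, String> options = new LinkedHashMap<>();
        options.put("hostname", "<host>");
        options.put("port", "1433");
        options.put("username", "<user>");
        options.put("password", "<password>");
        options.put("database-name", "<database>");
        options.put("table-name", "<schema>.<table>");
        String columns = "  id INT,\n  name STRING,\n  PRIMARY KEY (id) NOT ENFORCED";
        System.out.println(createTable("orders_source", columns, "sqlserver-cdc", options));
    }
}
```

Keeping connector as a separate argument mirrors the step above: it is the one option whose value must match the name of the custom connector you uploaded.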

JAR deployment

1. To use CDC connectors for Apache Flink in a JAR deployment, declare the following dependency in the pom.xml file:

```xml
<dependency>
    <groupId>com.ververica</groupId>
    <!-- For example, flink-connector-sqlserver-cdc -->
    <artifactId>flink-connector-${Name of the desired connector}-cdc</artifactId>
    <version>${Version of the connector for Apache Flink}</version>
</dependency>
```

   The Maven repository contains only release versions, not snapshot versions. To use a snapshot version, clone the GitHub repository and compile the snapshot JAR file yourself.

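2. Use the import keyword to import the relevant implementation classes into your code, and use the classes as described in the connector documentation.

   Important: Pay attention to the difference between the two artifact IDs. flink-connector-xxx contains only the connector code; if you use it, you must also declare the connector's own dependencies in your project. flink-sql-connector-xxx packages all dependencies into a single JAR file so that it can be used directly.

   Choose the artifact that fits your business requirements. For example, when you create a custom connector in the development console of Realtime Compute for Apache Flink, you can use flink-sql-connector-xxx.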
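To make the difference between the two artifact types concrete, the following Java sketch renders the pom.xml dependency for a given connector. CdcDependencySketch and its methods are hypothetical helpers, not part of any library. The groupId is a parameter because, as far as we know, releases published after the project moved to the Apache Software Foundation (3.1 and later) use org.apache.flink instead of com.ververica; verify the coordinates of your release in the Maven repository. ${cdc.version} is a Maven property that you would define yourself.

```java
/** Hypothetical helper: chooses between the thin and the self-contained CDC artifact. */
public final class CdcDependencySketch {

    /**
     * Returns the artifactId for a connector name such as "sqlserver".
     * true  -> flink-sql-connector-*-cdc: all dependencies packaged in one JAR.
     * false -> flink-connector-*-cdc: connector code only; declare its dependencies yourself.
     */
    public static String artifactId(String connector, boolean bundleDependencies) {
        String prefix = bundleDependencies ? "flink-sql-connector-" : "flink-connector-";
        return prefix + connector + "-cdc";
    }

    /** Renders a pom.xml dependency element for the chosen artifact. */
    public static String dependencyXml(String groupId, String connector, String version,
                                       boolean bundleDependencies) {
        return String.join("\n",
            "<dependency>",
            "    <groupId>" + groupId + "</groupId>",
            "    <artifactId>" + artifactId(connector, bundleDependencies) + "</artifactId>",
            "    <version>" + version + "</version>",
            "</dependency>");
    }

    public static void main(String[] args) {
        // JAR deployment: the thin artifact, versioned through a user-defined Maven property.
        System.out.println(dependencyXml("com.ververica", "sqlserver", "${cdc.version}", false));
        // Custom connector upload in the development console: the self-contained artifact.
        System.out.println(artifactId("sqlserver", true));
    }
}
```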

Change the name of a connector

This section uses the SQL Server CDC connector for Apache Flink as an example to describe how to change the name of a connector.

1. Clone the GitHub repository and switch to the branch that corresponds to the version you want to use.

2. Change the identifier in the factory class of the SQL Server CDC connector.

```java
// com.ververica.cdc.connectors.sqlserver.table.SqlServerTableFactory
@Override
public String factoryIdentifier() {
    // New connector name; the default identifier is "sqlserver-cdc"
    return "sqlserver-cdc-test";
}
```

3. Compile and package the flink-sql-connector-sqlserver-cdc submodule.

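4. In the left-side navigation pane of the development console of Realtime Compute for Apache Flink, click [Connectors]. On the [Connectors] page, click [Create Custom Connector]. In the [Create Custom Connector] dialog box, upload the JAR file that you packaged in Step 3. For more information, see "Manage custom connectors".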

5. When you create an SQL draft by following the steps in "SQL deployment", set the connector parameter to the new connector name, sqlserver-cdc-test.
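Why the rename resolves the conflict can be illustrated with a simplified model. Flink resolves a table connector by comparing the value of the connector option with the factoryIdentifier() of the factories on the classpath, and in Flink an identifier claimed by more than one factory is rejected as ambiguous. The Java sketch below models only that matching step: FactoryLookupSketch and ConnectorFactory are hypothetical stand-ins, not Flink classes, and real Flink discovers factories through Java's ServiceLoader rather than receiving them as a list.

```java
import java.util.ArrayList;
import java.util.List;

/** Simplified model (not Flink code) of resolving the 'connector' option to one factory. */
public final class FactoryLookupSketch {

    /** Hypothetical stand-in for a table connector factory; only the identifier matters here. */
    public interface ConnectorFactory {
        String factoryIdentifier();
    }

    /** Creates a factory that reports the given identifier. */
    public static ConnectorFactory factory(String identifier) {
        return () -> identifier;
    }

    /** Returns the single factory whose identifier equals the connector option, or fails. */
    public static ConnectorFactory discover(List<ConnectorFactory> classpath, String connector) {
        List<ConnectorFactory> matches = new ArrayList<>();
        for (ConnectorFactory candidate : classpath) {
            if (candidate.factoryIdentifier().equals(connector)) {
                matches.add(candidate);
            }
        }
        if (matches.isEmpty()) {
            throw new IllegalStateException("No factory found for identifier '" + connector + "'");
        }
        if (matches.size() > 1) {
            // Two JARs claim the same name, so the lookup is ambiguous.
            throw new IllegalStateException("Multiple factories found for identifier '" + connector + "'");
        }
        return matches.get(0);
    }

    public static void main(String[] args) {
        // Hypothetical classpath: an existing "sqlserver-cdc" connector plus the re-packaged one.
        List<ConnectorFactory> classpath =
            List.of(factory("sqlserver-cdc"), factory("sqlserver-cdc-test"));
        System.out.println(discover(classpath, "sqlserver-cdc-test").factoryIdentifier());
    }
}
```

In this model, two connectors that both report sqlserver-cdc make the lookup fail, while the re-packaged connector that reports sqlserver-cdc-test is selected unambiguously.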