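Because the container engine layer is not transparent to users, troubleshooting failures in container services is difficult. To address this, Alibaba Cloud Container Service for Kubernetes (ACK) introduces SysOM. SysOM improves the observability of container memory issues by providing container monitoring data at the operating system kernel layer. This lets you view and diagnose issues at the container engine layer more transparently and migrate containerized applications efficiently. This topic describes how to use SysOM to locate container memory issues.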

Prerequisites

An ACK managed cluster or ACK Serverless cluster created in or after October 2021 is available, and the cluster runs Kubernetes 1.18.8 or later. For more information about how to create a cluster, see "Create an ACK managed cluster" and "Create an ACK Serverless cluster". For more information about how to update a cluster, see "Manually upgrade an ACK cluster".

Managed Service for Prometheus is enabled. For more information, see "Enable Managed Service for Prometheus".

ack-sysom-monitor is enabled. For more information, see "Enable ack-sysom-monitor".
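The Kubernetes version prerequisite can be checked with a few lines of code. The following is a minimal sketch, assuming the version string looks like the `gitVersion` value reported by a cluster (for example `v1.18.8-aliyun.1`). The helper names and the sample strings are hypothetical and are not part of ACK or SysOM.

```python
import re

MIN_VERSION = (1, 18, 8)  # minimum Kubernetes version stated in the prerequisites


def parse_version(version: str) -> tuple:
    """Extract (major, minor, patch) from a string such as 'v1.18.8-aliyun.1'."""
    match = re.match(r"v?(\d+)\.(\d+)\.(\d+)", version.strip())
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return tuple(int(part) for part in match.groups())


def meets_minimum_version(version: str, minimum: tuple = MIN_VERSION) -> bool:
    """Return True if the version is at least the required minimum."""
    return parse_version(version) >= minimum


if __name__ == "__main__":
    # Hypothetical sample version strings.
    for sample in ("v1.18.8-aliyun.1", "v1.16.9-aliyun.1", "1.22.3"):
        print(sample, meets_minimum_version(sample))
```

Tuples compare element by element, so `(1, 22, 3) >= (1, 18, 8)` holds without any string comparison pitfalls such as `"1.9" > "1.18"`.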

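Billing of ack-sysom-monitor

After the ack-sysom-monitor component is enabled, related components automatically send monitoring metrics to Managed Service for Prometheus. These metrics are considered custom metrics, and custom metrics incur fees.

Before you enable this feature, we recommend that you read "Billing overview" to understand the billing rules for custom metrics. Fees may vary with the cluster size and the number of applications. You can follow the steps in "View resource usage" to monitor and manage resource usage.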

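Scenarios

Containerization has become a best practice for enterprise IT architecture because of its low cost, high efficiency, flexibility, and scalability.

However, containerization reduces the transparency of the container engine layer, which can lead to excessive memory usage, or even usage beyond the memory limit, and cause out-of-memory (OOM) issues.

The Alibaba Cloud Container Service for Kubernetes (ACK) team works with the Alibaba Cloud GuestOS operating system team to implement precise memory management through container monitoring at the operating system kernel layer and to prevent OOM issues.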

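Container memory

The memory of a container consists of application memory, kernel memory, and free memory.

| Memory category | Memory subcategory | Description |
| --- | --- | --- |
| Application memory | Application memory consists of the following types. | Memory occupied by running applications. |
| Kernel memory | Kernel memory consists of the following types. | Memory used by the OS kernel. |
| Free memory | None. | Memory that is not in use. |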

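How it works

Kubernetes uses the memory working set to monitor and manage the memory usage of containers. When the memory occupied by a container exceeds the specified memory limit, or when a node is under memory pressure, Kubernetes decides whether to evict or terminate the container based on the working set. Monitoring the working set of pods through SysOM gives you more comprehensive and accurate memory monitoring and analysis. This helps O&M engineers and developers quickly locate and resolve issues caused by large working sets and improve the performance and stability of containers.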
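The relationship between the working set and the memory limit can be illustrated with a small check. The following is a minimal sketch and not the actual decision logic of Kubernetes: the function name `working_set_status`, the status labels, and the 80% warning ratio are assumptions chosen for illustration.

```python
MIB = 1024 * 1024


def working_set_status(working_set_bytes: int, limit_bytes: int,
                       warn_ratio: float = 0.8) -> str:
    """Classify a container by how close its working set is to its memory limit.

    warn_ratio is a hypothetical alerting threshold, not a Kubernetes setting.
    """
    if limit_bytes <= 0:
        raise ValueError("limit_bytes must be positive")
    ratio = working_set_bytes / limit_bytes
    if ratio >= 1.0:
        return "over-limit"
    if ratio >= warn_ratio:
        return "warning"
    return "ok"


if __name__ == "__main__":
    # Hypothetical container with a 1 GiB memory limit.
    for used_mib in (512, 900, 1024):
        print(used_mib, working_set_status(used_mib * MIB, 1024 * MIB))
```

A check like this is useful for alerting before the limit is reached, because by the time the working set equals the limit the container is already at risk of being terminated.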

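The memory working set is the memory that a container actually uses within a given time range, that is, the memory the container needs for its current operation. It is calculated as: Working set = InactiveAnon + ActiveAnon + ActiveFile. InactiveAnon and ActiveAnon together represent the total anonymous memory of the application, and ActiveFile represents the size of the application's active file cache. By monitoring and analyzing the working set, O&M engineers can manage resources more effectively and keep applications running continuously and stably.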
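The working set formula can be applied directly to the counters that the cgroup `memory.stat` file exposes as `key value` lines. The following is a minimal sketch, assuming the text of such a file has already been read into a string. The sample values are hypothetical, and the function names are illustrative rather than part of SysOM.

```python
# Hypothetical excerpt of a cgroup memory.stat file (values in bytes).
SAMPLE_MEMORY_STAT = """\
cache 314572800
rss 524288000
inactive_anon 419430400
active_anon 104857600
inactive_file 209715200
active_file 104857600
"""


def parse_memory_stat(text: str) -> dict:
    """Parse 'key value' lines into a dict of integer byte counts."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].isdigit():
            stats[parts[0]] = int(parts[1])
    return stats


def working_set(stats: dict) -> int:
    """Working set = inactive_anon + active_anon + active_file."""
    return stats["inactive_anon"] + stats["active_anon"] + stats["active_file"]


if __name__ == "__main__":
    stats = parse_memory_stat(SAMPLE_MEMORY_STAT)
    print(working_set(stats) // (1024 * 1024), "MiB")
```

Note that `inactive_file` is deliberately left out of the sum: inactive file cache is the part of memory that the formula does not count toward the working set.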

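Use SysOM

The OS kernel-level dashboards of SysOM let you view system metrics of pods and nodes in real time, including memory, network, and storage metrics. For more information about SysOM metrics, see "Kernel-level container monitoring based on SysOM".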

Log on to the ACK console. In the left-side navigation pane, click [Clusters].

On the [Clusters] page, find the cluster that you want to manage and click its name. In the left-side pane, navigate to the [Prometheus Monitoring] page.

On the [Prometheus Monitoring] page, choose [SysOM] > [SysOM - Pods] to view the pod memory data on the dashboard.

Use the following formulas to analyze and locate memory shortage issues.

Total pod memory = RSS (resident memory) + cache ≈ inactive_anon + active_anon + inactive_file + active_file

Working set = inactive_anon + active_anon + active_file

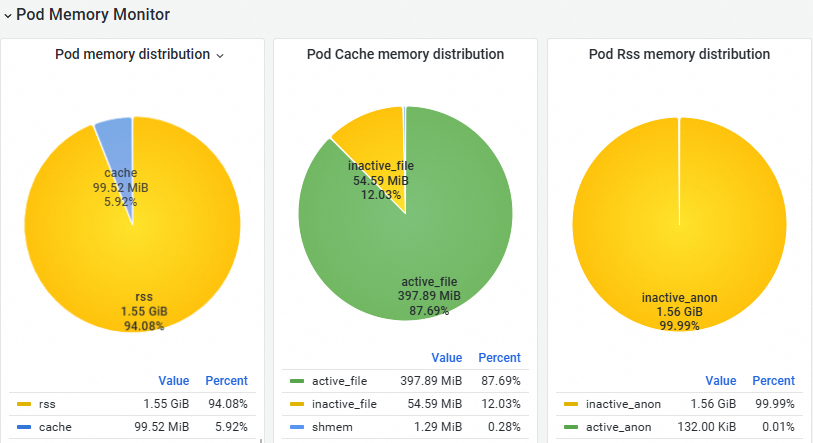
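The two formulas differ by exactly one term, which a short worked example makes explicit. The following is a minimal sketch with hypothetical sample values; the class name `PodMemory` and its properties are illustrative and do not correspond to a SysOM API.

```python
from dataclasses import dataclass

MIB = 1024 * 1024


@dataclass(frozen=True)
class PodMemory:
    """The four components used by the pod memory formulas, in bytes."""
    inactive_anon: int
    active_anon: int
    inactive_file: int
    active_file: int

    @property
    def total(self) -> int:
        # Total pod memory ≈ inactive_anon + active_anon + inactive_file + active_file
        return (self.inactive_anon + self.active_anon
                + self.inactive_file + self.active_file)

    @property
    def working_set(self) -> int:
        # Working set = inactive_anon + active_anon + active_file
        return self.inactive_anon + self.active_anon + self.active_file

    @property
    def outside_working_set(self) -> int:
        # Subtracting the two formulas leaves only inactive_file.
        return self.total - self.working_set


if __name__ == "__main__":
    pod = PodMemory(400 * MIB, 100 * MIB, 200 * MIB, 100 * MIB)  # hypothetical values
    print(pod.total // MIB, pod.working_set // MIB, pod.outside_working_set // MIB)
```

Under these formulas, only inactive file cache separates total pod memory from the working set, so a pod whose memory is mostly anonymous or active file cache has a working set close to its total usage.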

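In the [Pod Memory Monitor] section, first divide the pod memory into cache and RSS memory according to the total pod memory formula. Then divide the cache into three types, active_file, inactive_file, and shmem (shared memory), and divide the RSS memory into two types, active_anon and inactive_anon.

As shown in the following figure, inactive_anon accounts for the largest proportion.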

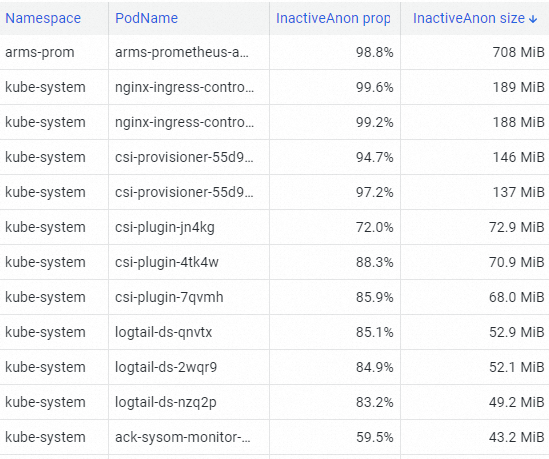
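The same breakdown can be computed from raw numbers to see which component dominates. The following is a minimal sketch that uses the four components of the total pod memory formula; the sample values and function names are hypothetical and are not read from a real dashboard.

```python
MIB = 1024 * 1024


def memory_shares(components: dict) -> dict:
    """Return each component's fraction of the summed memory."""
    total = sum(components.values())
    if total == 0:
        return {name: 0.0 for name in components}
    return {name: value / total for name, value in components.items()}


def largest_component(components: dict) -> str:
    """Return the name of the component that occupies the most memory."""
    return max(components, key=components.get)


if __name__ == "__main__":
    # Hypothetical pod memory breakdown in bytes.
    sample = {
        "inactive_anon": 400 * MIB,
        "active_anon": 100 * MIB,
        "inactive_file": 200 * MIB,
        "active_file": 100 * MIB,
    }
    for name, share in memory_shares(sample).items():
        print(f"{name}: {share:.1%}")
    print("largest:", largest_component(sample))
```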

In the [Pod Resource Analysis] section, use the top command to find the pod that occupies the most InactiveAnon memory in the cluster.

As shown in the following figure, the arms-prom pod occupies the most InactiveAnon memory.

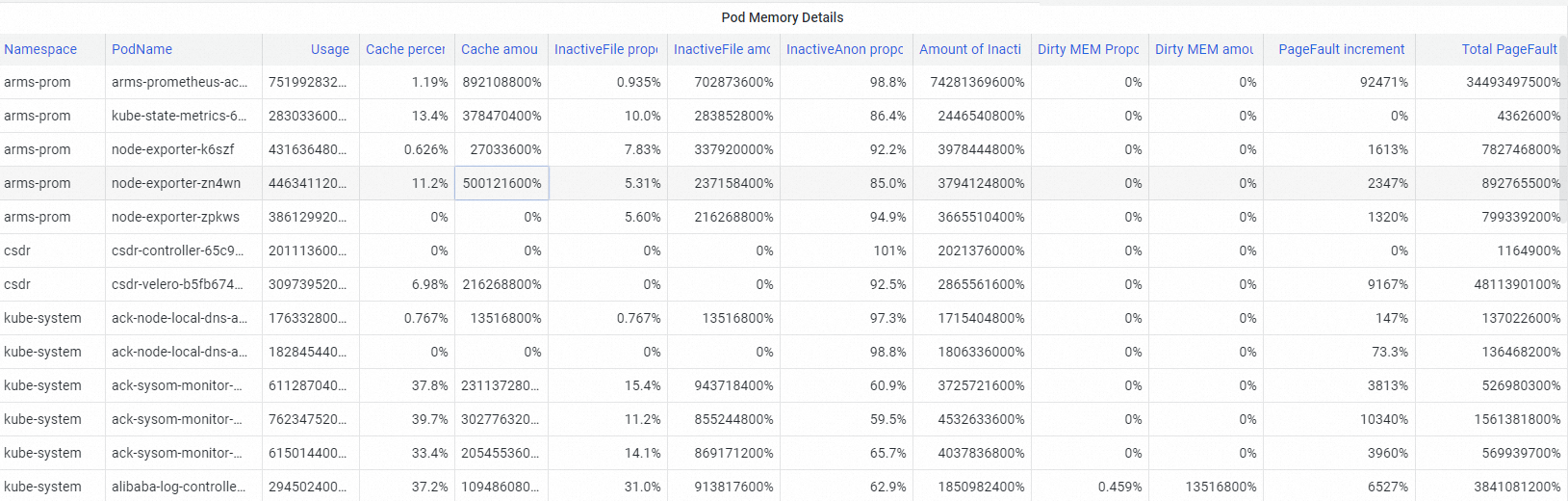
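Ranking pods by a single memory component is a simple sort. The following is a minimal sketch, assuming per-pod metrics have already been collected into a dictionary. Apart from `arms-prom`, the pod names are hypothetical, all values are made up, and `top_pods_by_metric` is an illustrative helper rather than a SysOM function.

```python
MIB = 1024 * 1024


def top_pods_by_metric(pods: dict, metric: str, n: int = 3) -> list:
    """Return the n pods with the highest value of the given metric.

    pods maps a pod name to a dict of metric name -> bytes.
    Pods that lack the metric are treated as 0.
    """
    ranked = sorted(pods.items(),
                    key=lambda item: item[1].get(metric, 0),
                    reverse=True)
    return [(name, stats.get(metric, 0)) for name, stats in ranked[:n]]


if __name__ == "__main__":
    # Hypothetical per-pod metrics in bytes.
    pods = {
        "arms-prom": {"inactive_anon": 900 * MIB, "active_file": 50 * MIB},
        "web-frontend": {"inactive_anon": 120 * MIB, "active_file": 300 * MIB},
        "batch-worker": {"inactive_anon": 340 * MIB, "active_file": 10 * MIB},
    }
    for name, value in top_pods_by_metric(pods, "inactive_anon", n=2):
        print(name, value // MIB, "MiB")
```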

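In the [Pod Memory Details] section, view the details of the pod's memory usage. Based on the pod metrics shown on the dashboard, such as pod cache, InactiveFile, InactiveAnon, and Dirty Memory, you can locate common memory shortage issues of the pod.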

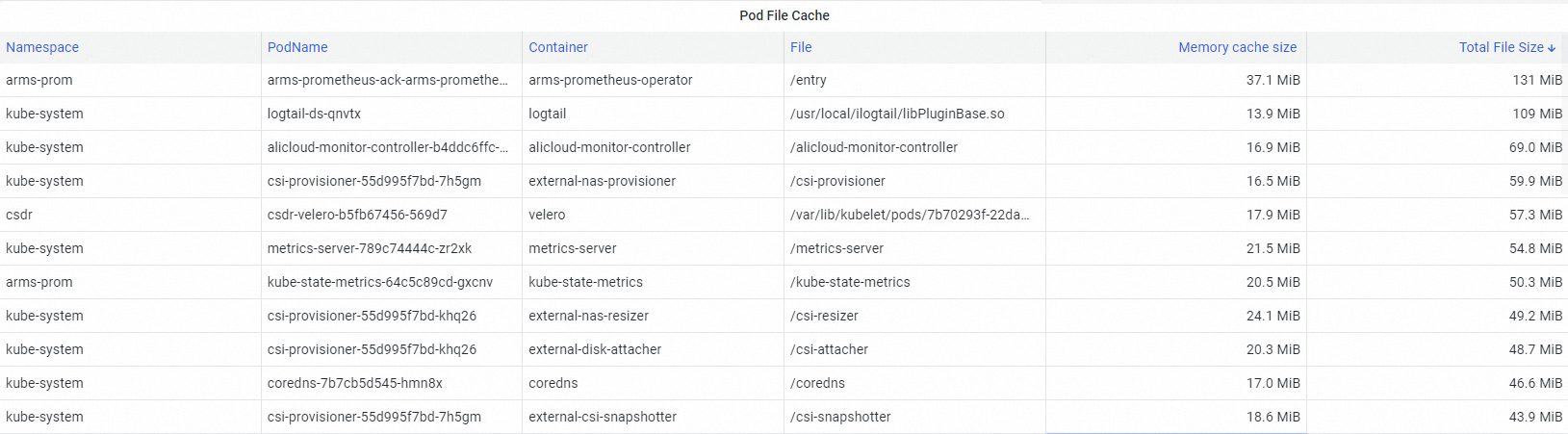

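In the [Pod File Cache] section, identify the cause of large cache memory.

If a pod occupies a large amount of memory, the memory working set of the pod may exceed the amount of memory that is actually in use. Memory shortage issues adversely affect the performance of the application to which the pod belongs.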
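Because the working set formula includes active_file, a pod with a large active file cache can show a working set well above its anonymous memory. The following is a minimal sketch that flags such pods; the 50% threshold, the function names, and the sample values are assumptions for illustration and are not SysOM defaults.

```python
MIB = 1024 * 1024


def active_file_share(stats: dict) -> float:
    """Fraction of the working set that is active file cache."""
    working_set = (stats["inactive_anon"] + stats["active_anon"]
                   + stats["active_file"])
    if working_set == 0:
        return 0.0
    return stats["active_file"] / working_set


def is_cache_heavy(stats: dict, threshold: float = 0.5) -> bool:
    """Flag a pod whose working set is dominated by active file cache.

    threshold is a hypothetical cutoff chosen for this example.
    """
    return active_file_share(stats) >= threshold


if __name__ == "__main__":
    # Hypothetical pod: 200 MiB of anonymous memory, 600 MiB of active file cache.
    pod = {
        "inactive_anon": 100 * MIB,
        "active_anon": 100 * MIB,
        "active_file": 600 * MIB,
    }
    print(f"{active_file_share(pod):.0%}", is_cache_heavy(pod))
```

A pod flagged this way is a candidate for a closer look in the file cache view, since the cache rather than the application's own allocations is driving its working set.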

Fix the memory shortage issue.

You can use the fine-grained scheduling feature provided by ACK to fix memory shortage issues. For more information, see "Enable memory QoS".

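References

For more information about SysOM metrics, see "Kernel-level container monitoring based on SysOM".

For more information about the memory Quality of Service (QoS) feature for containers, see "Overview of kernel features and interfaces".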