ACK クラスターは、デフォルトで Alibaba Cloud Prometheus とオープンソース Prometheus の両方と互換性があります。事前構成された Prometheus メトリックがビジネスニーズを満たさない場合は、カスタムの Prometheus Query Language (PromQL) を使用してアラートルールを作成できます。これらのルールは、クラスターノード、ホスト、コンテナーレプリカ、ワークロードなどのリソースの正常性を監視するのに役立ちます。アラートルールは、指定されたデータメトリックがしきい値に達したとき、または条件が満たされたときにアラートをトリガーし、通知を送信できます。

前提条件

ACK クラスターで Prometheus モニタリングが有効になっています。詳細については、「Alibaba Cloud Prometheus モニタリングを使用する」(推奨) または「オープンソース Prometheus モニタリングを使用する」をご参照ください。

カスタム PromQL を使用して Prometheus アラートルールを設定する

ACK クラスターは、デフォルトで Alibaba Cloud Prometheus とオープンソース Prometheus の両方と互換性があります。カスタム PromQL を使用して、Prometheus モニタリングデータに基づいてアラートルールを設定できます。アラートルールの条件が満たされると、システムは対応するアラートイベントを生成し、通知を送信します。

Alibaba Cloud Prometheus モニタリング

Alibaba Cloud Prometheus モニタリングでカスタム PromQL を使用してアラートルールを設定する方法の詳細については、「Prometheus アラートルールを作成する」をご参照ください。

オープンソース Prometheus モニタリング

アラート通知ポリシーを設定します。

オープンソース Prometheus モニタリングは、Webhook、DingTalk ロボット、メールなどの通知方法をサポートしています。ack-prometheus-operator アプリケーションで

receiverパラメーターを設定することで、Prometheus アラートの通知方法を設定できます。詳細については、「アラート設定」をご参照ください。アラートルールを作成します。

クラスターに PrometheusRule Custom Resource Definition (CRD) をデプロイして、アラートルールを定義します。詳細については、「Prometheus ルールのデプロイ」をご参照ください。

apiVersion: monitoring.coreos.com/v1 kind: PrometheusRule metadata: labels: # ラベルは Prometheus CRD の ruleSelector.matchLabels と一致している必要があります。 prometheus: example role: alert-rules name: prometheus-example-rules spec: groups: - name: example.rules rules: - alert: ExampleAlert # expr は PromQL クエリとトリガー条件を指定します。このパラメーターについては、このトピックのアラートルールの説明にある PromQL 設定列をご参照ください。 expr: 100 - (avg by(instance) (rate(node_cpu_seconds_total{mode="idle"}[2m])) * 100) > 90アラートルールが有効かどうかを確認します。

次のコマンドを実行して、クラスター内の Prometheus サービスをローカルマシンのポート 9090 にマッピングします。

kubectl port-forward svc/ack-prometheus-operator-prometheus 9090:9090 -n monitoringブラウザで localhost:9090 と入力して、Prometheus サーバーコンソールを表示します。

オープンソース Prometheus ページの上部で、 を選択します。

[ルール] ページで、アラートルールを表示できます。ターゲットのアラートルールが表示されている場合、そのルールは有効です。

アラートルールの説明

クラスターとアプリケーションに関する豊富な運用およびメンテナンス (O&M) の経験に基づき、ACK は以下の推奨 Prometheus アラートルール設定を提供します。これらのルールは、クラスターの安定性、ノードの異常、ノードのリソース使用量、アプリケーションコンテナーレプリカの異常、ワークロードの異常、ストレージの例外、ネットワークの例外など、さまざまな側面をカバーしています。

コンテナーレプリカの異常やワークロードの異常などの問題をカバーするアラートルールは、次の重大度レベルに分類されます。

Critical: この問題はクラスター、アプリケーション、さらにはビジネスに影響を与えます。直ちに対応が必要です。

Warning: この問題はクラスター、アプリケーション、さらにはビジネスに影響を与えます。できるだけ早く調査する必要があります。

Normal: このアラートは、重要な機能の変更に関連しています。

[ルール説明] 列に記載されている操作エントリポイントは、[アラート] ページの [アラートルール管理] タブです。アラートルールを更新するには、Container Service for Kubernetes (ACK) コンソールにログインします。[クラスター] リストで、ターゲットクラスターの名前をクリックします。左側のナビゲーションウィンドウで、 を選択します。[アラート] ページで、[アラートルール管理] タブをクリックして、対応するアラートルールを更新します。

異常なコンテナーレプリカ

説明 | 重大度 | PromQL 設定 | ルール説明 | 一般的なトラブルシューティング手順 |

Pod のステータス異常 | Critical | min_over_time(sum by (namespace, pod, phase) (kube_pod_status_phase{phase=~"Pending|Unknown|Failed"})[5m:1m]) > 0 | 過去 5 分以内に Pod のステータスが異常な場合にアラートをトリガーします。 操作エントリポイントで、[クラスターコンテナーレプリカ異常アラートルールセット] をクリックし、[Pod ステータス異常] アラートルールを設定します。詳細については、「ACK でのアラートの管理」をご参照ください。 | Pod のステータス異常のトラブルシューティング方法の詳細については、「Pod の例外のトラブルシューティング」をご参照ください。 |

Pod の起動失敗 | Critical | sum_over_time(increase(kube_pod_container_status_restarts_total{}[1m])[5m:1m]) > 3 | 過去 5 分以内に Pod の起動が 3 回以上失敗した場合にアラートをトリガーします。 操作エントリポイントで、[クラスターコンテナーレプリカ異常アラートルールセット] をクリックし、[Pod 起動失敗] アラートルールを設定します。詳細については、「ACK でのアラートの管理」をご参照ください。 | Pod の起動失敗のトラブルシューティング方法の詳細については、「Pod の例外のトラブルシューティング」をご参照ください。 |

1,000 を超える Pod のスケジューリングに失敗 | Critical | sum(sum(max_over_time(kube_pod_status_phase{ phase=~"Pending"}[5m])) by (pod)) > 1000 | 過去 5 分以内にスケジューリングの失敗により合計 1,000 個の Pod が Pending 状態になった場合にアラートをトリガーします。 | この問題は、大規模なクラスターのスケジューリングシナリオにおける過度のタスクプレッシャーが原因である可能性があります。ACK マネージドクラスター Pro 版は、クラスターのスケジューリングなどの強化されたコア機能を提供し、SLA (Service-level agreement) を提供します。ACK マネージドクラスター Pro 版を使用することをお勧めします。詳細については、「ACK マネージドクラスター Pro 版の概要」をご参照ください。 |

頻繁なコンテナー CPU スロットリング | Warning | rate(container_cpu_cfs_throttled_seconds_total[3m]) * 100 > 25 | コンテナーの CPU が頻繁にスロットリングされる場合にアラートをトリガーします。これは、スロットリングされた CPU 時間が過去 3 分以内の合計 CPU 時間の 25% を超えた場合に発生します。 | CPU スロットリングは、コンテナー内のプロセスに割り当てられる CPU タイムスライスを削減します。これにより、これらのプロセスのランタイムが増加し、コンテナー化されたアプリケーションのビジネスロジックが遅くなる可能性があります。 この場合、Pod の CPU リソース制限が低すぎないか確認してください。CPU Burst ポリシーを使用して CPU スロットリングを最適化することをお勧めします。詳細については、「CPU Burst ポリシーを有効にする」をご参照ください。クラスターノードがマルチコアサーバーの場合は、CPU トポロジー対応スケジューリングを使用して、断片化された CPU リソースを最大限に活用することをお勧めします。詳細については、「CPU トポロジー対応スケジューリングを有効にする」をご参照ください。 |

コンテナーレプリカ Pod の CPU 使用率が 85% を超える | Warning | (sum(irate(container_cpu_usage_seconds_total{pod=~"{{PodName}}.*",namespace=~"{{Namespace}}.*",container!="",container!="POD"}[1m])) by (namespace,pod) / sum(container_spec_cpu_quota{pod=~"{{PodName}}.*",namespace=~"{{Namespace}}.*",container!="",container!="POD"}/100000) by (namespace,pod) * 100 <= 100 or on() vector(0)) >= 85 | 指定された名前空間または指定された Pod で、コンテナーレプリカ Pod の CPU 使用率が Pod の制限の 85% を超えたときにアラートをトリガーします。 Pod に制限が設定されていない場合、このアラートルールは有効になりません。 デフォルトのしきい値 85% は推奨値です。必要に応じて調整できます。 特定の Pod または名前空間のデータをフィルター処理するには、 | Pod の CPU 使用率が高いと、CPU スロットリングや CPU タイムスライスの割り当て不足につながり、Pod 内のプロセスの実行に影響します。 この場合、Pod の CPU |

コンテナーレプリカ Pod のメモリ使用量が 85% を超える | Warning | ((sum(container_memory_working_set_bytes{pod=~"{{PodName}}.*",namespace=~"{{Namespace}}.*",container !="",container!="POD"}) by (pod,namespace)/ sum(container_spec_memory_limit_bytes{pod=~"{{PodName}}.*",namespace=~"{{Namespace}}.*",container !="",container!="POD"}) by (pod, namespace) * 100) <= 100 or on() vector(0)) >= 85 | コンテナーレプリカ Pod のメモリ使用量が Pod の制限の 85% を超えたときにアラートをトリガーします。 Pod に制限が設定されていない場合、このアラートルールは有効になりません。 デフォルトのしきい値 85% は推奨値です。必要に応じて調整できます。 特定の Pod または名前空間のデータをフィルター処理するには、 | Pod のメモリ使用量が高いと、out-of-memory (OOM) killer によって Pod が終了され、Pod の再起動につながる可能性があります。 この場合、Pod のメモリ |

異常なワークロード

説明 | 重大度 | PromQL 設定 | ルール説明 | 一般的なトラブルシューティング手順 |

利用可能な Deployment レプリカのステータス異常 | Critical | kube_deployment_spec_replicas{} != kube_deployment_status_replicas_available{} | Deployment の利用可能なレプリカ数が希望の数と一致しない場合にアラートをトリガーします。 操作エントリポイントで、[クラスターアプリケーションワークロード異常アラートルールセット] をクリックし、[Deployment 利用可能レプリカステータス異常] アラートルールを設定します。詳細については、「ACK でのアラートの管理」をご参照ください。 | Pod の起動に失敗した場合、またはステータスが異常な場合は、「Pod の例外のトラブルシューティング」をご参照ください。 |

DaemonSet レプリカのステータス異常 | Critical | ((100 - kube_daemonset_status_number_ready{} / kube_daemonset_status_desired_number_scheduled{} * 100) or (kube_daemonset_status_desired_number_scheduled{} - kube_daemonset_status_current_number_scheduled{})) > 0 | DaemonSet の利用可能なレプリカ数が希望の数と一致しない場合にアラートをトリガーします。 操作エントリポイントで、[クラスターアプリケーションワークロード異常アラートルールセット] をクリックし、[Deployment 利用可能レプリカステータス異常] アラートルールを設定します。詳細については、「ACK でのアラートの管理」をご参照ください。 | Pod の起動に失敗した場合、またはステータスが異常な場合は、「Pod の例外のトラブルシューティング」をご参照ください。 |

DaemonSet レプリカの異常なスケジューリング | Critical | kube_daemonset_status_number_misscheduled{job} > 0 | DaemonSet レプリカが異常にスケジューリングされた場合にアラートをトリガーします。 操作エントリポイントで、[クラスターアプリケーションワークロード異常アラートルールセット] をクリックし、[Daemonset レプリカスケジューリング異常] アラートルールを設定します。詳細については、「ACK でのアラートの管理」をご参照ください。 | Pod の起動に失敗した場合、またはステータスが異常な場合は、「Pod の例外のトラブルシューティング」をご参照ください。 |

ジョブの失敗 | Critical | kube_job_status_failed{} > 0 | ジョブの実行に失敗した場合にアラートをトリガーします。 操作エントリポイントで、[クラスターアプリケーションワークロード異常アラートルールセット] をクリックし、[ジョブ実行失敗] アラートルールを設定します。詳細については、「ACK でのアラートの管理」をご参照ください。 |

|

ストレージの例外

説明 | 重大度 | PromQL 設定 | ルール説明 | 一般的なトラブルシューティング手順 |

PersistentVolume のステータス異常 | Critical | kube_persistentvolume_status_phase{phase=~"Failed|Pending"} > 0 | 永続ボリューム (PV) のステータスが異常な場合にアラートをトリガーします。 操作エントリポイントで、[クラスターストレージ異常イベントアラートルールセット] をクリックし、[PersistentVolume ステータス異常] アラートルールを設定します。詳細については、「ACK でのアラートの管理」をご参照ください。 | PV のステータス異常のトラブルシューティング方法の詳細については、「ディスク PV に関するよくある質問」のディスクマウントセクションをご参照ください。 |

ノードのディスク領域が 10% 未満 | Critical | ((node_filesystem_avail_bytes * 100) / node_filesystem_size_bytes) < 10 | ノードのディスクブロックデバイスの空き領域が 10% 未満になった場合にアラートをトリガーします。 操作エントリポイントで、[クラスターリソース異常アラートルールセット] をクリックし、[クラスターノード - ディスク使用量 >= 85%] アラートルールを設定します。詳細については、「ACK でのアラートの管理」をご参照ください。 | ノードをスケールアウトするか、ディスクを拡張することをお勧めします。詳細については、「ディスク PV に関するよくある質問」のディスクマウントセクションをご参照ください。 |

異常なノードステータス

説明 | 重大度 | PromQL 設定 | ルール説明 | 一般的なトラブルシューティング手順 |

ノードが 3 分間 NotReady ステータスのまま | Critical | (sum(max_over_time(kube_node_status_condition{condition="Ready",status="true"}[3m]) <= 0) by (node)) or (absent(kube_node_status_condition{condition="Ready",status="true"})) > 0 | クラスターノードが 3 分間 NotReady ステータスのままである場合にアラートをトリガーします。 操作エントリポイントで、[クラスターノード異常アラートルールセット] をクリックし、[クラスターノードオフライン] アラートルールを設定します。詳細については、「ACK でのアラートの管理」をご参照ください。 |

|

異常なホストのリソース使用量

以下のセクションでは、ホストリソースメトリックとノードリソースメトリックの違いについて説明します:

ホストリソースメトリックは、ノードが実行されている物理マシンまたは仮想マシンのリソースを測定します。

使用量の数式では、分子はホスト上のすべてのプロセスのリソース使用量であり、分母はホストの最大容量です。

説明 | 重大度 | PromQL 設定 | ルール説明 | 一般的なトラブルシューティング手順 |

ホストのメモリ使用量が 85% を超える | Warning | (100 - node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes * 100) >= 85 | クラスターのホストのメモリ使用量が 85% を超えた場合にアラートをトリガーします。 操作エントリポイントで、[クラスターリソース異常アラートルールセット] をクリックし、[クラスターノード - メモリ使用量 >= 85%] アラートルールを設定します。詳細については、「ACK でのアラートの管理」をご参照ください。 説明 ACK アラート設定のルールは CloudMonitor によって提供され、そのメトリックは対応する Prometheus ルールのメトリックと一致します。 デフォルトのしきい値 85% は推奨値です。必要に応じて調整できます。 |

|

ホストのメモリ使用量が 90% を超える | Critical | (100 - node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes * 100) >= 90 | クラスター内のホストのメモリ使用量が 90% を超えています。 |

|

ホストの CPU 使用率が 85% を超える | Warning | 100 - (avg by(instance) (rate(node_cpu_seconds_total{mode="idle"}[2m])) * 100) >= 85 | クラスターのホストの CPU 使用率が 85% を超えた場合にアラートをトリガーします。 操作エントリポイントで、[クラスターリソース異常アラートルールセット] をクリックし、[クラスターノード - CPU 使用率 >= 85%] アラートルールを設定します。 説明 ACK アラート設定のルールは、CloudMonitor ECS モニタリングによって提供されます。ルール内のメトリックは、この Prometheus ルールのメトリックと同等です。 デフォルトのしきい値 85% は推奨値です。必要に応じて調整できます。 詳細については、「ACK でのアラートの管理」をご参照ください。 |

|

ホストの CPU 使用率が 90% を超える | Critical | 100 - (avg by(instance) (rate(node_cpu_seconds_total{mode="idle"}[2m])) * 100) >= 90 | クラスターのホストの CPU 使用率が 90% を超えた場合にアラートをトリガーします。 |

|

異常なノードリソース

以下のセクションでは、ノードリソースメトリックとホストリソースメトリックの違いについて説明します:

ノードリソースメトリックは、ノード上のリソースの消費を、その割り当て可能な容量に対して測定します。メトリックは、ノード上のコンテナーによって消費されるリソース (分子) と、ノード上の割り当て可能なリソース (分母) の比率です。

メモリを例にとります:

消費リソース: ノードによって使用される合計メモリリソース。これには、ノード上で実行されているすべてのコンテナーのワーキングセットメモリが含まれます。ワーキングセットメモリには、コンテナーの割り当て済みおよび使用済みメモリ、コンテナーに割り当てられたページキャッシュなどが含まれます。

割り当て可能リソース: コンテナーに割り当てることができるリソースの量。これには、ホスト上のコンテナーエンジンレイヤーによって消費されるリソースは含まれません。これは ACK のノード用に予約されたリソースです。詳細については、「ノードリソース予約ポリシー」をご参照ください。

使用量の数式では、分子はノード上のすべてのコンテナーのリソース使用量であり、分母はノードがコンテナーに割り当てることができるリソースの量 (Allocatable) です。

Pod のスケジューリングは、実際のリソース使用量ではなく、リソースリクエストに基づいています。

説明 | 重大度 | PromQL 設定 | ルール説明 | 一般的なトラブルシューティング手順 |

ノードの CPU 使用率が 85% を超える | Warning | sum(irate(container_cpu_usage_seconds_total{pod!=""}[1m])) by (node) / sum(kube_node_status_allocatable{resource="cpu"}) by (node) * 100 >= 85 | クラスターノードの CPU 使用率が 85% を超えた場合にアラートをトリガーします。 数式は次のとおりです

|

|

ノードの CPU リソース割り当て率が 85% を超える | 通常 | (sum(sum(kube_pod_container_resource_requests{resource="cpu"}) by (pod, node) * on (pod) group_left max(kube_pod_status_ready{condition="true"}) by (pod, node)) by (node)) / sum(kube_node_status_allocatable{resource="cpu"}) by (node) * 100 >= 85 | クラスターノードの CPU リソース割り当て率が 85% を超えた場合にアラートをトリガーします。 数式は |

|

ノードの CPU オーバーセル率が 300% を超える | Warning | (sum(sum(kube_pod_container_resource_limits{resource="cpu"}) by (pod, node) * on (pod) group_left max(kube_pod_status_ready{condition="true"}) by (pod, node)) by (node)) / sum(kube_node_status_allocatable{resource="cpu"}) by (node) * 100 >= 300 | クラスターノードの CPU オーバーセル率が 300% を超えた場合にアラートをトリガーします。 数式は デフォルトのしきい値 300% は推奨値です。必要に応じて調整できます。 |

|

ノードのメモリ使用量が 85% を超える | Warning | sum(container_memory_working_set_bytes{pod!=""}) by (node) / sum(kube_node_status_allocatable{resource="memory"}) by (node) * 100 >= 85 | クラスターノードのメモリ使用量が 85% を超えた場合にアラートをトリガーします。 数式は次のとおりです

|

|

ノードのメモリリソース割り当て率が 85% を超える | 通常 | (sum(sum(kube_pod_container_resource_requests{resource="memory"}) by (pod, node) * on (pod) group_left max(kube_pod_status_ready{condition="true"}) by (pod, node)) by (node)) / sum(kube_node_status_allocatable{resource="memory"}) by (node) * 100 >= 85 | クラスターノードのメモリリソース割り当て率が 85% を超えた場合にアラートをトリガーします。 数式は |

|

ノードのメモリオーバーセル率が 300% を超える | Warning | (sum(sum(kube_pod_container_resource_limits{resource="memory"}) by (pod, node) * on (pod) group_left max(kube_pod_status_ready{condition="true"}) by (pod, node)) by (node)) / sum(kube_node_status_allocatable{resource="memory"}) by (node) * 100 >= 300 | クラスターノードのメモリオーバーセル率が 300% を超えた場合にアラートをトリガーします。 数式は デフォルトのしきい値 300% は推奨値です。必要に応じて調整できます。 |

|

ネットワークの例外

説明 | 重大度 | PromQL 設定 | ルール説明 | 一般的なトラブルシューティング手順 |

クラスター CoreDNS の可用性異常 - リクエスト数がゼロに低下 | Critical | (sum(rate(coredns_dns_request_count_total{}[1m]))by(server,zone)<=0) or (sum(rate(coredns_dns_requests_total{}[1m]))by(server,zone)<=0) | この例外は、ACK マネージドクラスター (Pro 版および Basic 版) でのみ検出できます。 | クラスター内の CoreDNS Pod が正常であるか確認してください。 |

クラスター CoreDNS の可用性異常 - パニック例外 | Critical | sum(rate(coredns_panic_count_total{}[3m])) > 0 | この例外は、ACK マネージドクラスター (Pro 版および Basic 版) でのみ検出できます。 | クラスター内の CoreDNS Pod が正常であるか確認してください。 |

クラスター Ingress コントローラー証明書の有効期限が近づいています | Warning | ((nginx_ingress_controller_ssl_expire_time_seconds - time()) / 24 / 3600) < 14 | ACK Ingress コントローラーコンポーネントをインストールしてデプロイし、Ingress 機能を有効にする必要があります。 | Ingress コントローラー証明書を再発行します。 |

Auto Scaling の例外

説明 | 重大度 | PromQL 設定 | ルール説明 | 一般的なトラブルシューティング手順 |

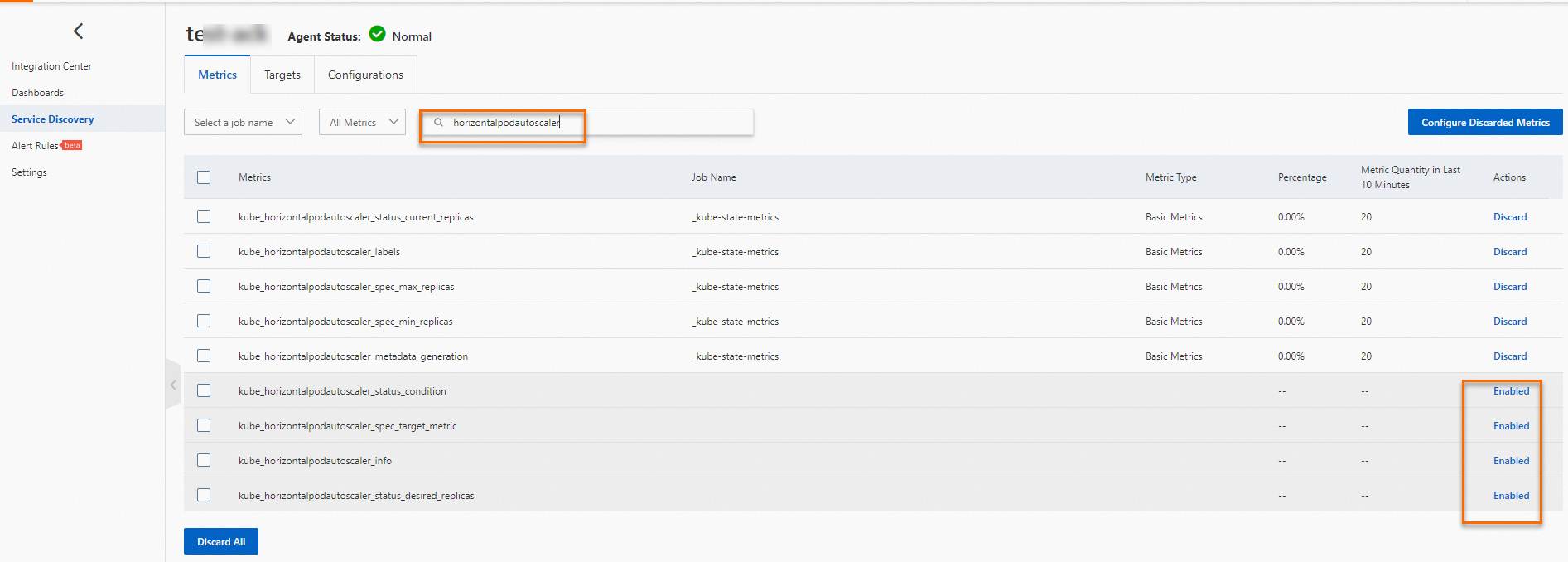

HPA の現在のレプリカ数が最大に達しました | Warning | max(kube_horizontalpodautoscaler_spec_max_replicas) by (namespace, horizontalpodautoscaler) - max(kube_horizontalpodautoscaler_status_current_replicas) by (namespace, horizontalpodautoscaler) <= 0 | Alibaba Cloud Prometheus で | Horizontal Pod Autoscaler (HPA) ポリシーが期待どおりであるか確認してください。ビジネスワークロードが高いままである場合は、HPA の maxReplicas の値を増やすか、アプリケーションのパフォーマンスを最適化する必要がある場合があります |

関連ドキュメント

コンソールから、または API を使用して Prometheus モニタリングデータをクエリする方法の詳細については、「PromQL を使用して Prometheus モニタリングデータをクエリする」をご参照ください。

ACK Net Exporter を使用して、コンテナーネットワークの問題を迅速に発見し、特定できます。詳細については、「KubeSkoop を使用してネットワークの問題を特定する」をご参照ください。

Alibaba Cloud Prometheus を使用する際の一般的な問題と解決策の詳細については、「可観測性のよくある質問」をご参照ください。