Use the data integration feature in DataWorks to export full data from Tablestore to MaxCompute for offline analysis and processing. DataWorks is a platform for big data development and governance.

Prerequisites

Before you export data, complete the following preparations:

Obtain information about the Tablestore source table. This includes the instance name, instance endpoint, and region ID.

Create a MaxCompute project to use as the data storage destination.

Create an AccessKey for your Alibaba Cloud account or a RAM user. The account or user must have access permissions for Tablestore and MaxCompute.

Activate DataWorks and create a workspace in the region where your MaxCompute or Tablestore instance is located.

Create a serverless resource group and attach it to the workspace. For more information about billing, see Serverless resource group billing.

If your DataWorks workspace and Tablestore instance are in different regions, you must create a VPC peering connection to enable cross-region network connectivity.

Procedure

Follow these steps to configure a full data export from Tablestore to MaxCompute.

Step 1: Add a Tablestore data source

Configure a Tablestore data source in DataWorks to connect to the source data table.

Log on to the DataWorks console. Switch to the destination region. In the navigation pane on the left, choose . From the drop-down list, select the workspace and click Go to Data Integration.

In the navigation pane on the left, click Data source.

On the Data Sources page, click Add Data Source.

In the Add Data Source dialog box, search for and select Tablestore as the data source type.

In the Add OTS Data Source dialog box, configure the data source parameters as described in the following table.

Parameter

Description

Data Source Name

The data source name must be a combination of letters, digits, and underscores (_). It cannot start with a digit or an underscore (_).

Data Source Description

A brief description of the data source. The description cannot exceed 80 characters in length.

Region

Select the region where the Tablestore instance resides.

Tablestore Instance Name

The name of the Tablestore instance.

Endpoint

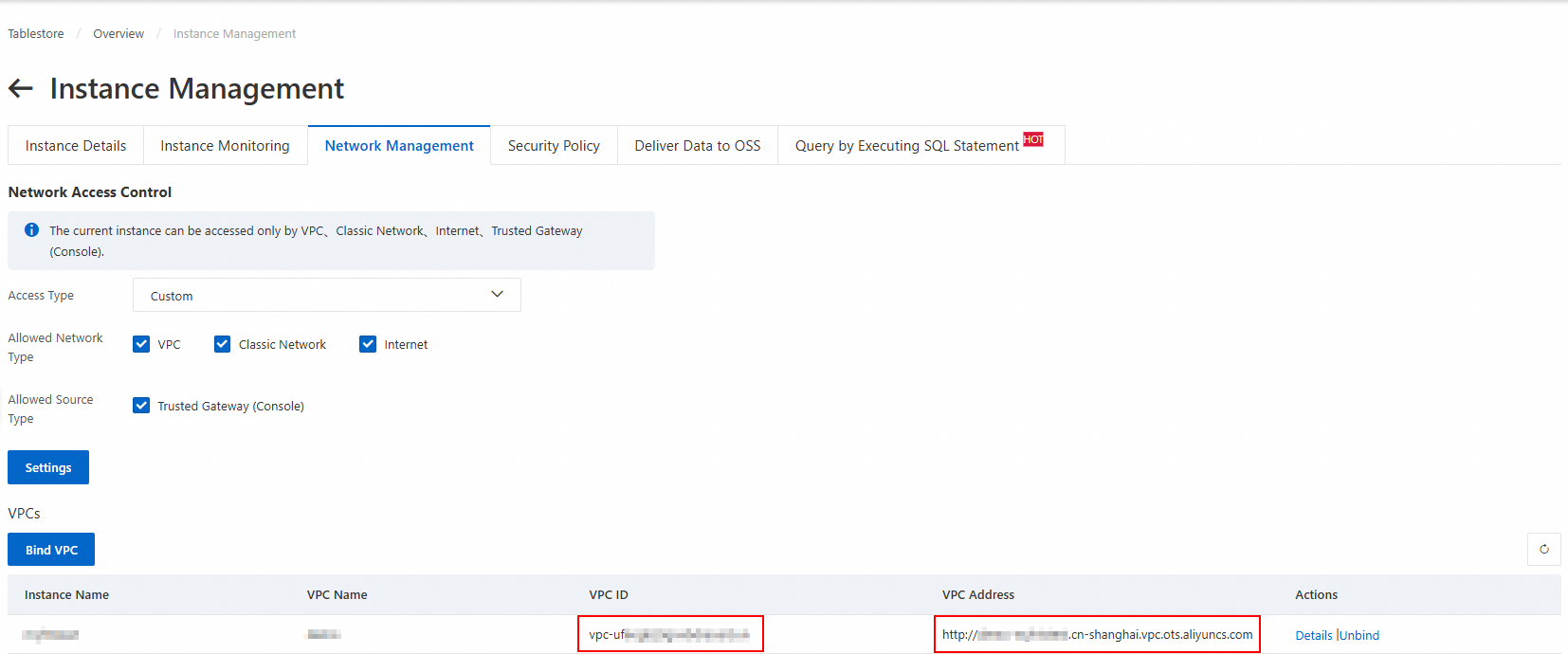

The endpoint of the Tablestore instance. Use the VPC address.

AccessKey ID

The AccessKey ID and AccessKey secret of the Alibaba Cloud account or RAM user.

AccessKey Secret

Test the resource group connectivity.

When you create a data source, you must test the connectivity of the resource group to ensure that the resource group for the sync task can connect to the data source. Otherwise, the data sync task cannot run.

In the Connection Configuration section, click Test Network Connectivity in the Connection Status column for the resource group.

After the connectivity test passes, click Complete. The new data source appears in the data source list.

If the connectivity test fails, use the Network Connectivity Diagnostic Tool to troubleshoot the issue.
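The naming and length rules for the data source fields can be checked before you open the dialog box. The following is a minimal sketch, not part of DataWorks: the function names are hypothetical, and it assumes that "letters" means ASCII letters, which the console may not strictly require.

```python
import re

# Letters, digits, and underscores only; the first character must be a letter
# because the name cannot start with a digit or an underscore.
# Assumption: "letters" means ASCII letters.
_NAME_PATTERN = re.compile(r"[A-Za-z][A-Za-z0-9_]*")
MAX_DESCRIPTION_LENGTH = 80


def is_valid_data_source_name(name: str) -> bool:
    """Return True if the name follows the stated naming rule."""
    return _NAME_PATTERN.fullmatch(name) is not None


def is_valid_description(description: str) -> bool:
    """Return True if the description does not exceed 80 characters."""
    return len(description) <= MAX_DESCRIPTION_LENGTH


if __name__ == "__main__":
    for candidate in ("source_data", "_source", "1source", "source-data"):
        print(candidate, is_valid_data_source_name(candidate))
```

The same check applies to the MaxCompute data source name, which follows the same rule.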

Step 2: Add a MaxCompute data source

Configure a MaxCompute data source to use as the destination for the data export.

Click Add Data Source again. Select MaxCompute as the data source type and configure the parameters.

| Parameter | Description |
| --- | --- |
| Data Source Name | The name must consist of letters, digits, and underscores (_). It cannot start with a digit or an underscore (_). |
| Data Source Description | A brief description of the data source. The description cannot exceed 80 characters. |
| Authentication Method | The default value is Alibaba Cloud Account And Alibaba Cloud RAM Role. You cannot change this value. |
| Alibaba Cloud Account | Current Alibaba Cloud Account: Select the MaxCompute Project Name and Default Access Identity for the current account in the specified region. Other Alibaba Cloud Account: Enter the UID of Alibaba Cloud Account, MaxCompute Project Name, and RAM Role for the other account in the specified region. |
| Region | The region where the MaxCompute project is located. |
| Endpoint | The default value is Auto Fit. You can also select Custom Configuration as needed. |

After you configure the parameters and the connectivity test succeeds, click Complete to add the data source.

Step 3: Configure a batch synchronization task

Create a data sync task to define the data transfer rules and field mappings from Tablestore to MaxCompute.

Create a task node

Go to the Data Development page.

Log on to the DataWorks console.

In the top navigation bar, select a resource group and a region.

In the navigation pane on the left, click .

Select the target workspace and click Go to Data Studio.

On the Data Studio console, click the icon to the right of Workspace Directories, and then select .

In the Create Node dialog box, select a Path. Set the data source to Tablestore and the destination to MaxCompute(ODPS). Enter a Name and click OK.

Configure the sync task

In the Workspace Directories, click the new batch synchronization task node to open it. You can configure the sync task in the codeless UI or using the code editor.

Codeless UI (default)

Configure the following items in the codeless UI:

Data Source: Select the source and destination data sources.

Runtime Resource: Select a resource group. The system automatically tests the data source connectivity after you make a selection.

Source: Configure the following items.

Table: Select the source data table from the drop-down list.

Primary Key Range (Start): Specify the start of the primary key range from which to read data. The value must be in the JSON array format. inf_min represents an infinitely small value. For example, if the primary key consists of an int column named id and a string column named name, the sample configuration is as follows:

Specific primary key range:

[ { "type": "int", "value": "000" }, { "type": "string", "value": "aaa" } ]

Full data:

[ { "type": "inf_min" }, { "type": "inf_min" } ]

Primary Key Range (End): Specify the end of the primary key range from which to read data. The value must be in the JSON array format. inf_max represents an infinitely large value. For example, if the primary key consists of an int column named id and a string column named name, the sample configuration is as follows:

Specific primary key range:

[ { "type": "int", "value": "999" }, { "type": "string", "value": "zzz" } ]

Full data:

[ { "type": "inf_max" }, { "type": "inf_max" } ]

Splitting Configuration: Specify custom split configuration information in the JSON array format. In most cases, we recommend that you do not configure this parameter. If you do not configure this parameter, set it to [].

If hot spots occur in Tablestore data storage and the automatic splitting policy of Tablestore Reader is ineffective, use a custom splitting rule. The rule specifies the split points within the start and end primary key range. You only need to configure the shard key and do not need to specify all primary keys.

Destination: Configure the following items. Keep the default values for other parameters or modify them as needed.

Production Project Name: The name of the MaxCompute project that is associated with the destination data source. This parameter is automatically populated.

Tunnel Resource Group: The default value is Common transmission resources. This resource group uses the free quota of MaxCompute. Select a dedicated Tunnel resource group as needed.

Table: Select the destination table. Click Generate Target Table Schema to automatically create the destination table.

Partition: Specify the partition where the synchronized data is saved. This parameter can be used for daily incremental synchronization.

Write Mode: Select whether to clear existing data before writing or to append data to the table.

Destination Field Mapping: Configure the field mapping from the source table to the destination table. The system automatically maps fields based on the source table. Modify the mapping as needed.

After you complete the configuration, click Save at the top of the page.
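The Primary Key Range (Start) and Primary Key Range (End) values are JSON arrays with one entry per primary key column, so they can be generated rather than typed by hand. The following is a minimal sketch under that reading. The helper names are hypothetical, and the type strings and values are copied from the examples in this topic.

```python
import json
from typing import Dict, List, Sequence, Tuple

Bound = Dict[str, str]


def full_range(primary_key_count: int) -> Tuple[List[Bound], List[Bound]]:
    """Build start and end bounds that cover the whole table."""
    begin = [{"type": "inf_min"} for _ in range(primary_key_count)]
    end = [{"type": "inf_max"} for _ in range(primary_key_count)]
    return begin, end


def bounded_range(
    start: Sequence[Tuple[str, str]], end: Sequence[Tuple[str, str]]
) -> Tuple[List[Bound], List[Bound]]:
    """Build bounds from (type, value) pairs, one pair per primary key column."""
    if len(start) != len(end):
        raise ValueError("start and end must list the same number of columns")
    begin_bounds = [{"type": t, "value": v} for t, v in start]
    end_bounds = [{"type": t, "value": v} for t, v in end]
    return begin_bounds, end_bounds


if __name__ == "__main__":
    # Full export of a table whose primary key has two columns (id, name).
    begin, end = full_range(2)
    print(json.dumps(begin))
    print(json.dumps(end))

    # The specific range used in the examples in this topic.
    begin, end = bounded_range(
        [("int", "000"), ("string", "aaa")],
        [("int", "999"), ("string", "zzz")],
    )
    print(json.dumps(begin))
    print(json.dumps(end))
```

Paste the printed arrays into the corresponding fields. For a full export, only the number of primary key columns matters.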

Code editor

Click Code Editor at the top of the page to edit the script in the editor.

The following sample script is for a source data table where the primary key consists of an int column named id and a string column named name. The attribute column is an int field named age. In your script, replace the values for the datasource and table parameters with your actual values.

{
    "type": "job",
    "version": "2.0",
    "steps": [
        {
            "stepType": "ots",
            "parameter": {
                "datasource": "source_data",
                "column": [
                    {
                        "name": "id",
                        "type": "INTEGER"
                    },
                    {
                        "name": "name",
                        "type": "STRING"
                    },
                    {
                        "name": "age",
                        "type": "INTEGER"
                    }
                ],
                "range": {
                    "begin": [
                        {
                            "type": "inf_min"
                        },
                        {
                            "type": "inf_min"
                        }
                    ],
                    "end": [
                        {
                            "type": "inf_max"
                        },
                        {
                            "type": "inf_max"
                        }
                    ],
                    "split": []
                },
                "table": "source_table",
                "newVersion": "true"
            },
            "name": "Reader",
            "category": "reader"
        },
        {
            "stepType": "odps",
            "parameter": {
                "partition": "pt=${bizdate}",
                "truncate": true,
                "datasource": "target_data",
                "tunnelQuota": "default",
                "column": [
                    "id",
                    "name",
                    "age"
                ],
                "emptyAsNull": false,
                "guid": null,
                "table": "source_table",
                "consistencyCommit": false
            },
            "name": "Writer",
            "category": "writer"
        }
    ],
    "setting": {
        "errorLimit": {
            "record": "0"
        },
        "speed": {
            "concurrent": 2,
            "throttle": false
        }
    },
    "order": {
        "hops": [
            {
                "from": "Reader",
                "to": "Writer"
            }
        ]
    }
}

After you finish editing the script, click Save at the top of the page.
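Before you paste an edited script into the code editor, a quick local check can catch structural slips. The following is a minimal sketch, not a DataWorks tool. The `check_job` function is hypothetical, the embedded script is a trimmed copy of the sample, and the column count check assumes that reader and writer columns are matched by position.

```python
import json

# Trimmed copy of the sample script; only the fields the checks read are kept.
SAMPLE_JOB = json.loads("""
{
  "steps": [
    {
      "stepType": "ots",
      "parameter": {
        "column": [
          {"name": "id", "type": "INTEGER"},
          {"name": "name", "type": "STRING"},
          {"name": "age", "type": "INTEGER"}
        ],
        "range": {
          "begin": [{"type": "inf_min"}, {"type": "inf_min"}],
          "end": [{"type": "inf_max"}, {"type": "inf_max"}],
          "split": []
        }
      },
      "name": "Reader",
      "category": "reader"
    },
    {
      "stepType": "odps",
      "parameter": {"column": ["id", "name", "age"]},
      "name": "Writer",
      "category": "writer"
    }
  ],
  "order": {"hops": [{"from": "Reader", "to": "Writer"}]}
}
""")


def check_job(job):
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    steps = {step.get("name"): step for step in job.get("steps", [])}
    readers = [s for s in steps.values() if s.get("category") == "reader"]
    writers = [s for s in steps.values() if s.get("category") == "writer"]
    if len(readers) != 1 or len(writers) != 1:
        return ["expected exactly one reader step and one writer step"]
    reader = readers[0].get("parameter", {})
    writer = writers[0].get("parameter", {})

    # begin and end each need one bound per primary key column.
    key_range = reader.get("range", {})
    if len(key_range.get("begin", [])) != len(key_range.get("end", [])):
        problems.append("range.begin and range.end have different lengths")

    # Assumption: columns are matched by position, so the counts must agree.
    if len(reader.get("column", [])) != len(writer.get("column", [])):
        problems.append("reader and writer list a different number of columns")

    # Every hop must point at a step that exists.
    for hop in job.get("order", {}).get("hops", []):
        for side in ("from", "to"):
            if hop.get(side) not in steps:
                problems.append(f"hop {side!r} references unknown step {hop.get(side)!r}")
    return problems


if __name__ == "__main__":
    print(check_job(SAMPLE_JOB) or "no problems found")
```

These checks cover structure only. They do not confirm that the data sources, tables, or column types exist.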

Run the sync task

Click Debug Configuration on the right side of the page. Select a resource group to run the task and add Script Parameters.

bizdate: The data partition of the MaxCompute destination table. For example, 20251120.

Click Run at the top of the page to start the sync task.
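The bizdate value is substituted into the partition expression pt=${bizdate} from the sample script. The following is a minimal sketch of how the value and the resulting partition can be derived locally. The helper names are hypothetical, and the sketch assumes that the business date is the day before the run date, formatted as yyyymmdd. Adjust it if your scheduling convention differs.

```python
from datetime import date, timedelta
from string import Template


def bizdate_for(run_date: date) -> str:
    """Format the day before run_date as yyyymmdd (assumed convention)."""
    return (run_date - timedelta(days=1)).strftime("%Y%m%d")


def resolve_partition(partition_template: str, bizdate: str) -> str:
    """Replace ${bizdate} in the partition expression with a concrete value."""
    return Template(partition_template).substitute(bizdate=bizdate)


if __name__ == "__main__":
    value = bizdate_for(date(2025, 11, 21))
    print(value)
    print(resolve_partition("pt=${bizdate}", value))
```

`string.Template` is used here only because its `${name}` placeholder syntax matches the one in the script. DataWorks performs the actual substitution when the task runs.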

Step 4: View the synchronization result

After the sync task is complete, view its execution status in the logs and check the synchronized data in the DataWorks console.

View the task running status and result at the bottom of the page. The following log information indicates that the sync task ran successfully.

2025-11-18 11:16:23 INFO Shell run successfully!
2025-11-18 11:16:23 INFO Current task status: FINISH
2025-11-18 11:16:23 INFO Cost time is: 77.208s

View the synchronized data in the destination table.

Go to the DataWorks console. In the navigation pane on the left, click . Then, click Go to Data Map to view the destination table and its data.
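If you save the run log to a file, the success markers shown in Step 4 can be extracted with a short script. The following is a minimal sketch. The `summarize_log` function is hypothetical, and the patterns assume that the log lines keep the exact wording of the sample log in this topic.

```python
import re

# Patterns based on the sample log lines in this topic (assumed to be stable).
STATUS_PATTERN = re.compile(r"Current task status: (\w+)")
COST_PATTERN = re.compile(r"Cost time is: ([0-9.]+)s")

SAMPLE_LOG = """\
2025-11-18 11:16:23 INFO Shell run successfully!
2025-11-18 11:16:23 INFO Current task status: FINISH
2025-11-18 11:16:23 INFO Cost time is: 77.208s
"""


def summarize_log(log_text: str):
    """Return (status, cost_seconds); either item is None if it is not found."""
    status = STATUS_PATTERN.search(log_text)
    cost = COST_PATTERN.search(log_text)
    return (
        status.group(1) if status else None,
        float(cost.group(1)) if cost else None,
    )


if __name__ == "__main__":
    status, seconds = summarize_log(SAMPLE_LOG)
    print("succeeded" if status == "FINISH" else "check the log", seconds)
```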