You can use a Logtail plug-in to extract fields from logs based on a regular expression and parse the logs into key-value pairs. This topic describes how to create a Logtail configuration in regex mode in the Simple Log Service console.

Solution overview

Consider the following raw log:

127.0.0.1 - - [16/Aug/2024:14:37:52 +0800] "GET /wp-admin/admin-ajax.php?action=rest-nonce HTTP/1.1" 200 41 "http://www.example.com/wp-admin/post-new.php?post_type=page" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/127.0.0.0 Safari/537.36 Edg/127.0.0.0"

After processing with the regex parsing plug-in, the result is as follows:

The regular expression applied is as follows:

(\S+)\s-\s(\S+)\s\[([^]]+)]\s"(\w+)\s(\S+)\s([^"]+)"\s(\d+)\s(\d+)\s"([^"]+)"\s"([^"]+).*

Prerequisites

A machine group has been created, and servers have been added to the machine group. We recommend that you create a custom identifier-based machine group. For more information, see Create a custom identifier-based machine group or Create an IP address-based machine group.

Ports 80 and 443 are open on the server on which Logtail is installed. If the server is an Elastic Compute Service (ECS) instance, you can reconfigure the related security group rules to open the ports. For more information about how to configure a security group rule, see Add a security group rule.

The server from which you want to collect logs continuously generates logs. Logtail collects only incremental logs. If a log file on your server is not updated after a Logtail configuration is delivered and applied to the server, Logtail does not collect logs from the file. For more information, see Read log files.

1. Select a project and a logstore

Log on to the Simple Log Service console.

Click Quick Data Import on the right side of the console.

On the Import Data page, click Regular Expression - Text Logs.

Select the project and logstore you want.

2. Configure a machine group

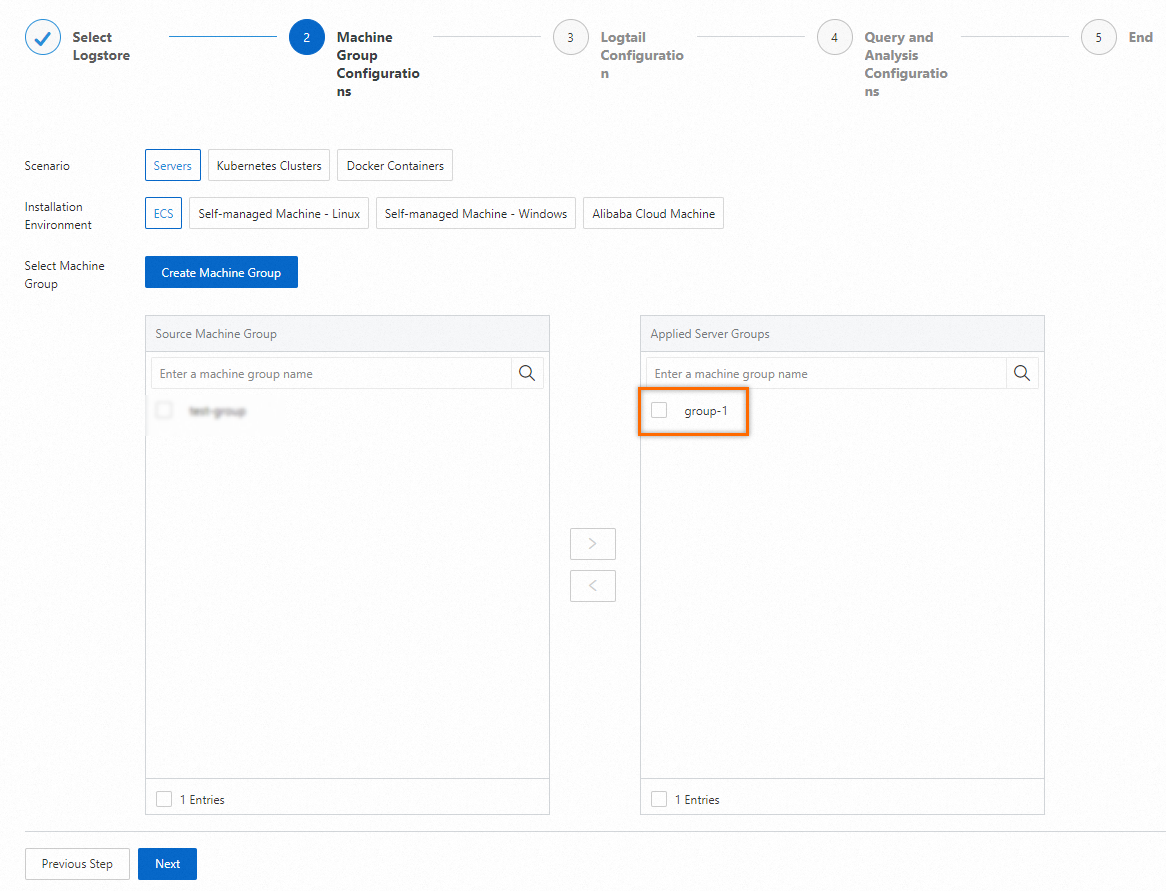

Apply the Logtail configuration to the specified machine group to collect data from the server. Choose the appropriate scenario and installation environment based on your requirements, because this will influence subsequent configurations.

Existing machine group

Select the desired machine group from the Source Machine Group list and click Next.

No available machine group

Click Create Machine Group and configure the parameters in the Create Machine Group panel. Machine group identities are categorized as either IP Address or Custom Identifier. For more information, see Create a custom identifier-based machine group and Create an IP address-based machine group.

Important: If you apply a machine group immediately after you create it, the heartbeat status may be FAIL because the connection has not yet been established. In this case, click Retry. If the problem persists, see How do I troubleshoot an error related to a Logtail machine group in a host environment?

3. Create a Logtail configuration

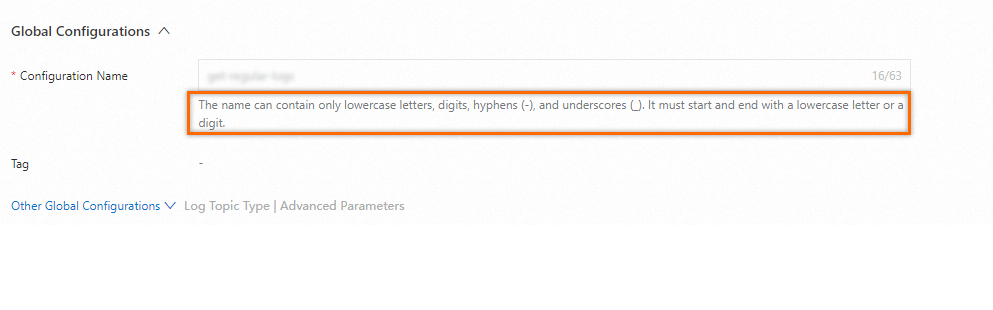

3.1 Global configurations

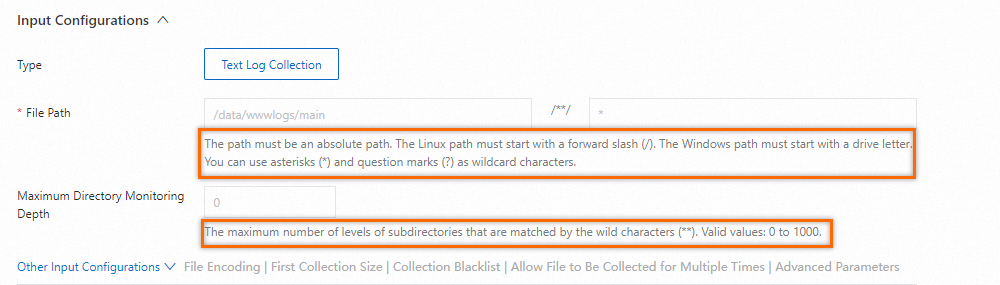

3.2 Input configurations

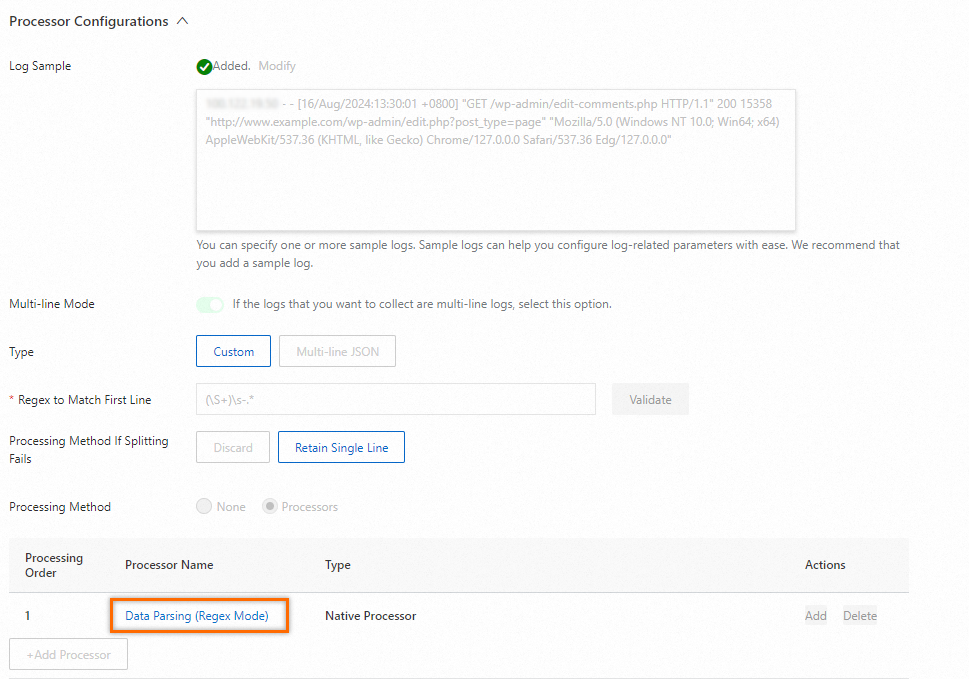

3.3 Processor configurations

Log Sample: You can add multiple sample logs. Sample logs help you configure the log processing parameters, so we recommend that you add them.

Multi-line Mode: For multi-line logs, enable this option.

Type: Select Custom. For example, the regular expression to match the beginning of a line is:

(\S+)\s-.*

Processing Method If Splitting Fails: Select Keep Single Line.
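The following Python sketch illustrates the idea behind Multi-line Mode: a line that matches the first-line regular expression starts a new log, and the lines that follow are appended to it. It is not Logtail's implementation. The function name `split_multiline`, the sample lines, the use of a whole-line match, and the handling of lines that precede the first match (each kept as its own single-line log, as one reading of Keep Single Line) are assumptions made for illustration.

```python
import re
from typing import List

# First-line regular expression from the example in this section.
FIRST_LINE = re.compile(r'(\S+)\s-.*')


def split_multiline(lines: List[str]) -> List[str]:
    """Group raw lines into logs; a line matching FIRST_LINE starts a new log."""
    groups: List[List[str]] = []
    started = False
    for line in lines:
        if FIRST_LINE.fullmatch(line):
            # Assumption: the regex must match the whole first line.
            groups.append([line])
            started = True
        elif started:
            # Continuation line: append it to the current log.
            groups[-1].append(line)
        else:
            # Assumption: with no first line seen yet, keep the line
            # as its own single-line log.
            groups.append([line])
    return ["\n".join(group) for group in groups]


# Hypothetical sample data: an access-log line followed by two trace lines.
SAMPLE_LINES = [
    '127.0.0.1 - - [16/Aug/2024:14:37:52 +0800] "GET / HTTP/1.1" 500 41',
    '    at handler (app.py:10)',
    '    at main (app.py:20)',
    '127.0.0.2 - - [16/Aug/2024:14:37:53 +0800] "GET / HTTP/1.1" 200 41',
]

if __name__ == "__main__":
    for entry in split_multiline(SAMPLE_LINES):
        print(repr(entry))
```

In this sketch the four sample lines are grouped into two logs, because only the first and last lines begin with a non-space token followed by a space and a hyphen.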

Processing Method

Use the Data Parsing (Regex Mode) plug-in. For multi-line logs, enable Multi-line Mode to automatically generate a regular expression.

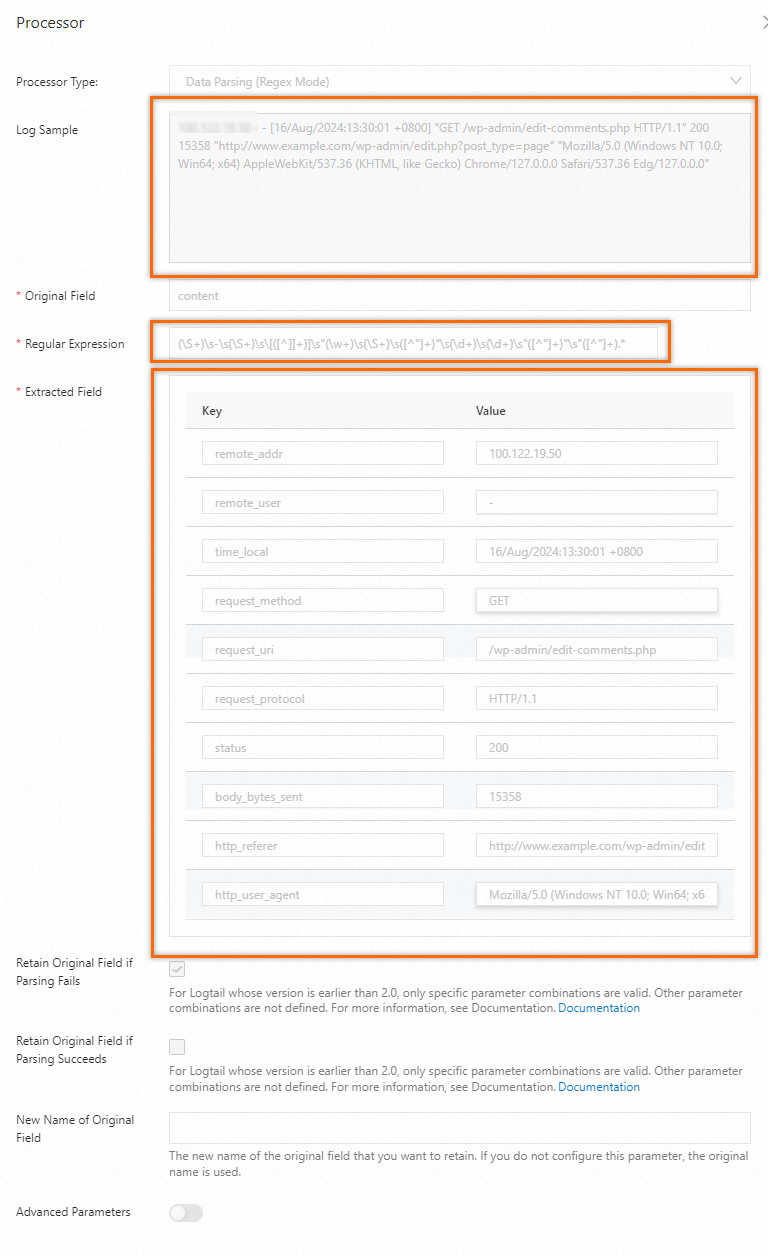

Click Data Parsing (Regex Mode) to access the detailed configuration page for the plug-in. Configure the regular expression and set key values based on the extracted data.
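Before entering the regular expression in the console, it can help to check locally how its capture groups line up with the keys you plan to set. The Python sketch below applies the regular expression from the solution overview to the raw log shown there and pairs each captured value with a key. The key names (`remote_addr`, `status`, and so on) and the function `parse_log` are hypothetical choices for illustration, and Python's `re` engine may differ in details from the engine that Logtail uses.

```python
import re
from typing import Dict, Optional

# Regular expression from the solution overview, split across two raw strings.
PATTERN = re.compile(
    r'(\S+)\s-\s(\S+)\s\[([^]]+)]\s"(\w+)\s(\S+)\s([^"]+)"'
    r'\s(\d+)\s(\d+)\s"([^"]+)"\s"([^"]+).*'
)

# Hypothetical key names, one per capture group, in order.
KEYS = [
    "remote_addr", "remote_user", "time_local", "request_method",
    "request_uri", "protocol", "status", "body_bytes_sent",
    "http_referer", "http_user_agent",
]

# Raw log from the solution overview.
RAW_LOG = (
    '127.0.0.1 - - [16/Aug/2024:14:37:52 +0800] '
    '"GET /wp-admin/admin-ajax.php?action=rest-nonce HTTP/1.1" 200 41 '
    '"http://www.example.com/wp-admin/post-new.php?post_type=page" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/127.0.0.0 Safari/537.36 Edg/127.0.0.0"'
)


def parse_log(line: str) -> Optional[Dict[str, str]]:
    """Return a key-value mapping for the line, or None if it does not match."""
    match = PATTERN.match(line)
    if match is None:
        return None
    return dict(zip(KEYS, match.groups()))


if __name__ == "__main__":
    fields = parse_log(RAW_LOG)
    if fields is not None:
        for key, value in fields.items():
            print(f"{key}: {value}")
```

All extracted values are strings. A line that does not match yields `None` here, which corresponds to the parsing-failure case that the retention parameters in the configuration description deal with.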

Configuration description

Original Field: The original field that is used to store the content of a log before the log is parsed. Default value: content.

Regular Expression: The regular expression that is used to match logs.

If you specify a sample log, Simple Log Service can automatically generate a regular expression or use a regular expression that you manually specify.

Click Generate. In the Sample Log field, select the log content that you want to extract and click Generate Regular Expression. Simple Log Service generates a regular expression based on the content that you specified.

Click Manual to specify a regular expression. After you configure the settings, click Validate to check whether the regular expression can parse and extract log content as expected. For more information, see How do I test a regular expression?

If you do not specify a sample log, you must specify a regular expression based on the actual log content.

Extracted Field: The extracted fields. Configure the Key parameter for each Value parameter. The Key parameter specifies a new field name. The Value parameter specifies the content that is extracted from logs.

Retain Original Field If Parsing Fails: If you select this parameter and parsing fails, the original field is retained.

Retain Original Field If Parsing Succeeds: If you select this parameter and parsing is successful, the original field is retained.

New Name of Original Field: If you select the Retain Original Field If Parsing Fails or Retain Original Field If Parsing Succeeds parameter, you can rename the original field that stores the original log content.
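The interaction of the three retention parameters can be sketched in Python as follows. This is only one reading of the descriptions above, not the plug-in's actual code. The function `apply_regex_parser`, its argument names, the whole-string match, and the sample regular expression are all hypothetical.

```python
import re
from typing import Dict, List, Optional


def apply_regex_parser(
    log: Dict[str, str],
    regex: str,
    keys: List[str],
    source_key: str = "content",          # Original Field
    keep_on_fail: bool = True,            # Retain Original Field If Parsing Fails
    keep_on_success: bool = False,        # Retain Original Field If Parsing Succeeds
    renamed_source: Optional[str] = None, # New Name of Original Field
) -> Dict[str, str]:
    """Parse log[source_key] with regex and apply the retention options."""
    raw = log.get(source_key, "")
    # Start from every field except the original one.
    result = {k: v for k, v in log.items() if k != source_key}

    # Assumption: the regex must match the whole field value.
    match = re.fullmatch(regex, raw)
    if match is not None:
        # Pair each capture group with its configured key.
        result.update(zip(keys, match.groups()))
        keep = keep_on_success
    else:
        keep = keep_on_fail

    if keep:
        # Store the raw text under the new name if one is configured.
        result[renamed_source or source_key] = raw
    return result


if __name__ == "__main__":
    rx, ks = r"(\w+)\s(\d+)", ["method", "status"]
    print(apply_regex_parser({"content": "GET 200"}, rx, ks))
    print(apply_regex_parser({"content": "garbled"}, rx, ks, renamed_source="raw"))
```

Under these assumptions, a log that parses successfully keeps only the extracted fields unless the success option is enabled, while a log that fails to parse keeps its raw text under either the original field name or the new name.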

A Logtail configuration requires up to three minutes to take effect.

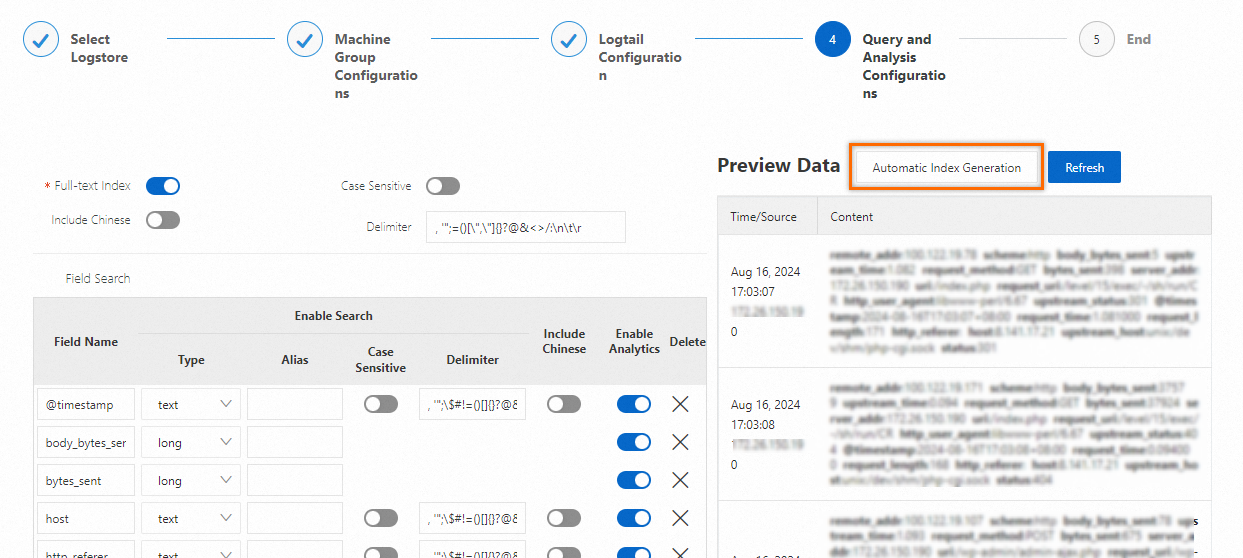

4. Configure query and analysis

By default, Simple Log Service enables full-text indexing. You can also manually create field indexes, or click Automatic Index Generation to automatically generate indexes. For more information, see Create indexes.

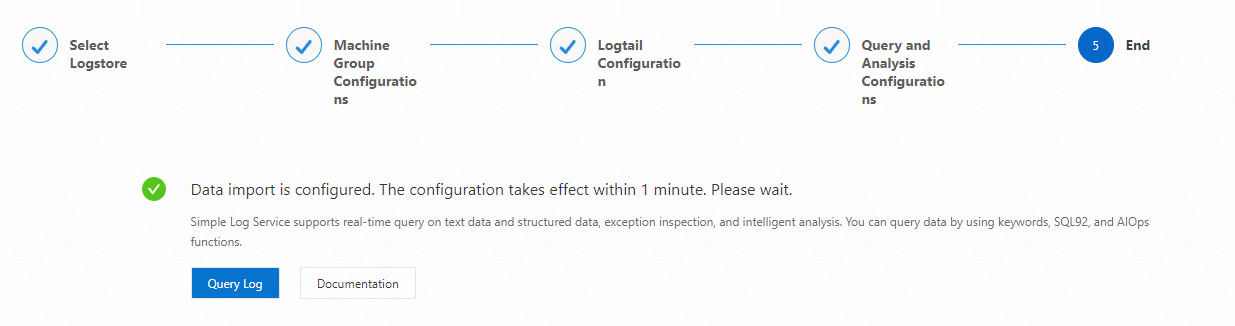

5. Query logs

Click Query Log to go to the query and analysis page.

Wait approximately one minute for the index to activate before viewing the collected logs on the Raw Logs tab. For more information, see Query and analyze logs.

To query all fields in logs, use full-text indexes. To query only specific fields, use field indexes, which helps reduce index traffic. To perform field analysis, create field indexes and include a SELECT statement in your analysis query.

What to do next

For more information about log index types, configuration examples, and index-related billing, see Create indexes.

For more information about log query syntax, see Search syntax.