You can import log files from an OSS bucket to Simple Log Service for querying, analysis, and processing. Simple Log Service supports importing OSS files of up to 5 GB each. For a compressed file, the limit applies to its size after compression.
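The size rule can be expressed as a quick pre-check on the objects you plan to import. The following Python sketch assumes that 5 GB means 5 × 1024³ bytes, that the limit is inclusive, and that the size to compare is the object's stored size in OSS, which for a compressed file is its compressed size. The function name `split_by_import_limit` is hypothetical and is not part of any Simple Log Service SDK.

```python
import gzip

# Assumption: "5 GB" is interpreted as 5 * 1024**3 bytes, inclusive.
MAX_IMPORT_BYTES = 5 * 1024**3


def split_by_import_limit(objects):
    """Split (key, stored_size_bytes) pairs into importable and too-large keys.

    stored_size_bytes is the size of the object as stored in OSS, so for a
    compressed file it is the size after compression.
    """
    importable, too_large = [], []
    for key, size in objects:
        if size <= MAX_IMPORT_BYTES:
            importable.append(key)
        else:
            too_large.append(key)
    return importable, too_large


# A compressed file is measured by its compressed size, not its original size.
raw = b"2024-01-01 INFO request handled\n" * 10_000
compressed = gzip.compress(raw)
print(len(raw), len(compressed))  # the repetitive log compresses to far fewer bytes

ok, rejected = split_by_import_limit(
    [("app.log", 1024), ("big.log.gz", MAX_IMPORT_BYTES + 1), ("edge.log", MAX_IMPORT_BYTES)]
)
print(ok, rejected)  # ['app.log', 'edge.log'] ['big.log.gz']
```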

Billing description

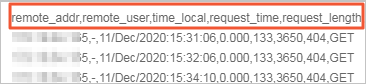

Simple Log Service does not charge for the data import feature. However, this feature accesses OSS APIs, which incurs OSS traffic fees and request fees. For more information about the pricing of billable items, see OSS Pricing. The formula to calculate the daily OSS fees for importing OSS data is as follows:


Prerequisites

Log files have been uploaded to an OSS bucket. For more information, see Upload files in the console.

A project and a Logstore have been created. For more information, see Manage a project and Create a basic Logstore.

You have completed cloud resource access authorization, which allows Simple Log Service to access your OSS resources by using the AliyunLogImportOSSRole role.

Your account has the oss:ListBuckets permission, which is required to list OSS buckets. For more information, see Grant custom policies to a RAM user.

If you use a Resource Access Management (RAM) user, you must also grant the PassRole permission to the RAM user. The following is an example of the authorization policy. For more information, see Create a custom policy and Manage RAM user permissions.

```json
{
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "ram:PassRole",
      "Resource": "acs:ram:*:*:role/aliyunlogimportossrole"
    },
    {
      "Effect": "Allow",
      "Action": "oss:GetBucketWebsite",
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": "oss:ListBuckets",
      "Resource": "*"
    }
  ],
  "Version": "1"
}
```
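If you maintain this custom policy yourself, a small script can confirm that it still allows the three actions in the example policy. This Python sketch is a simplified check, not a RAM policy evaluator: it only looks at `Allow` statements and exact action names, and it ignores `Deny` statements, wildcards such as `oss:*`, `Resource`, and `Condition`. The helper name `missing_actions` is hypothetical.

```python
import json

# The actions granted by the example policy for the import configuration.
REQUIRED_ACTIONS = {"ram:PassRole", "oss:GetBucketWebsite", "oss:ListBuckets"}


def missing_actions(policy_text):
    """Return the required actions that no Allow statement lists explicitly.

    Simplified check: Deny statements, wildcards, Resource, and Condition
    elements are not evaluated.
    """
    policy = json.loads(policy_text)
    allowed = set()
    for statement in policy.get("Statement", []):
        if statement.get("Effect") != "Allow":
            continue
        actions = statement.get("Action", [])
        # "Action" may be a single string or a list of strings.
        if isinstance(actions, str):
            actions = [actions]
        allowed.update(actions)
    return REQUIRED_ACTIONS - allowed


example_policy = """
{ "Statement": [
    { "Effect": "Allow", "Action": "ram:PassRole",
      "Resource": "acs:ram:*:*:role/aliyunlogimportossrole" },
    { "Effect": "Allow", "Action": "oss:GetBucketWebsite", "Resource": "*" },
    { "Effect": "Allow", "Action": "oss:ListBuckets", "Resource": "*" } ],
  "Version": "1" }
"""
print(missing_actions(example_policy))  # set()
```

An empty set means every required action appears in an `Allow` statement; any action the function returns still needs to be granted.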

Create a data import configuration

If an OSS file that was already imported is updated, such as by appending new content, the data import task re-imports the entire file.
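Because the whole file is re-imported, lines that were already imported from an updated file are written to the Logstore again. The following Python sketch models that behavior under stated assumptions: it tracks each object by a version tag (for example, an ETag) and treats any change as a reason to import the whole object again. It is a conceptual model, not how Simple Log Service is implemented, and the names `plan_full_imports` and `seen_versions` are hypothetical.

```python
def plan_full_imports(seen_versions, listing):
    """Return the keys that would be imported in full on this scan.

    seen_versions: dict mapping object key -> version tag from earlier scans
                   (updated in place).
    listing:       iterable of (key, version_tag) pairs from the current scan.
    A new key, or a key whose version tag changed, is imported in full.
    """
    to_import = []
    for key, version in listing:
        if seen_versions.get(key) != version:
            to_import.append(key)
            seen_versions[key] = version
    return to_import


seen = {}
print(plan_full_imports(seen, [("a.log", "v1"), ("b.log", "v1")]))  # ['a.log', 'b.log']
print(plan_full_imports(seen, [("a.log", "v1"), ("b.log", "v2")]))  # ['b.log']

# If b.log had 3 lines and 1 line was appended, the whole file (4 lines) is
# imported again, so the Logstore ends up with 3 + 4 = 7 entries from b.log.
lines_before, lines_after = 3, 4
print(lines_before + lines_after)  # 7
```

Under this model, one way to avoid duplicate entries is to write new data to new objects instead of appending to objects that have already been imported.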

1. Log on to the Simple Log Service console.

2. In the Data Ingestion area, on the Data Import tab, select OSS-Data Import.

3. Select the destination project and Logstore, and then click Next.

4. Set the import configuration.

   a. In the Import Configuration step, set the following parameters.

   b. Click Preview to view the import results.

   c. After confirming the settings, click Next.

5. Create indexes and preview data, and then click Next. By default, full-text indexing is enabled in Simple Log Service. You can also create field indexes for the collected logs manually, or click Automatic Index Generation to have Simple Log Service generate field indexes. For more information, see Create an index.

   Important: If you want to query all fields in logs, we recommend that you use full-text indexes. If you want to query only specific fields, we recommend that you use field indexes, which reduce index traffic. To analyze fields, you must create field indexes and include a SELECT statement in your query statement.

6. Click Query Logs to open the query and analysis page and confirm that the OSS data was successfully imported.

   Wait about 1 minute. The import is successful if the imported data appears in the destination Logstore.

Related operations

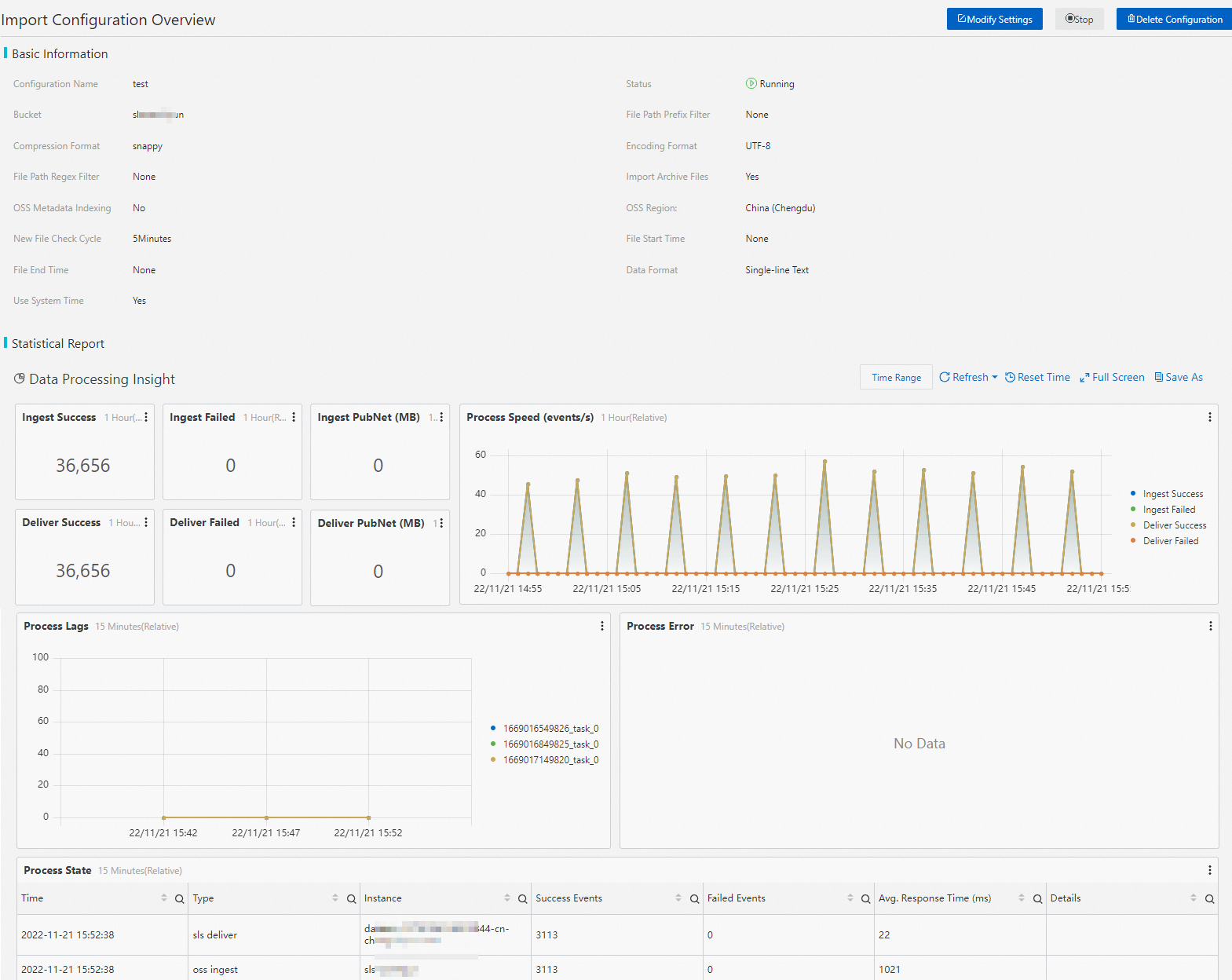

After you create an import configuration, you can view the configuration and its statistical reports in the console.

In the Projects section, click the destination project.

In the navigation pane, choose . For the destination Logstore, choose , and then click the configuration name.

View a task

On the Import Configuration Overview page, you can view basic information and statistical reports.

Modify a task

Click Modify Configuration to modify the import configuration. For more information, see Set the import configuration.

Delete a task

To delete the import configuration, click Delete Configuration.

Warning: This operation cannot be undone. Proceed with caution.

Stop a task

To stop the import task, click Stop.

Start a task

Click Start to begin the import task.

FAQ

| Problem | Possible cause | Solution |
| --- | --- | --- |
| No data is displayed in the preview when I import files from the HDFS directory of a bucket. | Importing files from the HDFS directory is not supported. | If the HDFS service is enabled for a bucket, a |
| No data is displayed in the preview. | There are no files in the OSS bucket, the files contain no data, or no files match the filter conditions. | |
| The data contains garbled text. | The data format, compression format, or encoding format is not configured correctly. | Check the actual format of the OSS files and adjust the relevant configuration items, such as Data Format, Compression Format, or Encoding Format. To fix existing garbled data, you must create a new Logstore and a new import configuration. |
| The data timestamp displayed in Simple Log Service is inconsistent with the actual time of the data. | The log time field was not specified, or the time format or time zone was set incorrectly in the import configuration. | Specify the log time field and set the correct time format and time zone. For more information, see Create a data import configuration. |
| After importing data, I cannot query and analyze the data. | | |
| The number of imported data entries is less than expected. | Some files contain single lines of data that exceed 3 MB. This data is discarded during the import process. For more information, see Collection limits. | When you write data to OSS files, make sure that no single line of data exceeds 3 MB. |
| The data import speed is slower than the expected speed of 80 MB/s, even though the number of files and total data volume are large. | The number of Logstore shards is too small. For more information, see Performance limits. | If the number of Logstore shards is small, increase the number of shards to 10 or more and monitor the latency. For more information, see Manage shards. |
| When creating an import configuration, I cannot select an OSS bucket. | The authorization for the AliyunLogImportOSSRole role is incomplete. | Complete the authorization as described in the prerequisites section of this topic. |
| Some files were not imported. | The filter conditions are set incorrectly, or some files are larger than 5 GB. For more information, see Collection limits. | |
| Archived objects were not imported. | The Import Archived Objects switch is off. For more information, see Collection limits. | |
| Multi-line text logs are parsed incorrectly. | The first line regular expression or last line regular expression is set incorrectly. | Verify that the first line regular expression or last line regular expression is correct. |
| The latency for importing new files is high. | The number of existing files that match the file path prefix filter is too large, and the Use OSS Metadata Index switch is disabled in the import configuration. | If more than 1 million files match the file path prefix, enable the Use OSS Metadata Index switch in the import configuration. Otherwise, the file discovery process will be highly inefficient. |
| An STS-related permission error is reported during creation. | The RAM user does not have sufficient permissions. | |
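Two of the causes above can be checked on your side: single lines longer than 3 MB, which are discarded, and more than 1 million files under one file path prefix, which makes file discovery slow unless Use OSS Metadata Index is enabled. The following Python sketch assumes that 3 MB means 3 × 1024 × 1024 bytes and that the trailing newline does not count toward the limit. The helper names are hypothetical, and you supply the object keys yourself, for example from your own OSS listing.

```python
import os
import tempfile

MAX_LINE_BYTES = 3 * 1024 * 1024  # assumption: 3 MB = 3 * 1024 * 1024 bytes
METADATA_INDEX_THRESHOLD = 1_000_000  # "more than 1 million files" from the FAQ


def oversized_lines(path, limit=MAX_LINE_BYTES):
    """Return (line_number, size_in_bytes) for each line longer than limit.

    Trailing \\r and \\n characters are not counted (assumption).
    """
    found = []
    with open(path, "rb") as f:
        for number, line in enumerate(f, start=1):
            size = len(line.rstrip(b"\r\n"))
            if size > limit:
                found.append((number, size))
    return found


def should_use_metadata_index(keys, prefix):
    """Return True if more than 1 million keys start with the file path prefix."""
    count = 0
    for key in keys:
        if key.startswith(prefix):
            count += 1
            if count > METADATA_INDEX_THRESHOLD:
                return True  # stop early; the threshold is already exceeded
    return False


# Usage: a small limit makes the check easy to see on a tiny file.
with tempfile.NamedTemporaryFile("wb", delete=False, suffix=".log") as tmp:
    tmp.write(b"short line\n" + b"x" * 20 + b"\n" + b"ok\n")
print(oversized_lines(tmp.name, limit=10))  # [(2, 20)]
os.unlink(tmp.name)

print(should_use_metadata_index(["logs/a.log", "other/b.log"], "logs/"))  # False
```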

Error handling mechanism

| Error | Description |
| --- | --- |
| Failed to read a file | If an incomplete-file error occurs while a file is being read (for example, because of a network exception or file corruption), the import task automatically retries. If the read still fails after three retries, the task skips the file. The retry interval is the same as the new file check interval. If the new file check interval is set to Never, the retry interval is 5 minutes. |
| Compression format parsing error | If an invalid compression format error occurs when a file is decompressed, the import task skips the file. |
| Data format parsing error | |
| The OSS bucket does not exist | The import task retries periodically, so it automatically resumes the import after the bucket is re-created. |
| Permission error | If a permission error occurs when data is read from an OSS bucket or written to a Simple Log Service Logstore, the import task retries periodically and does not skip any files. After the permission issue is fixed, the task automatically resumes and imports data from the unprocessed files in the bucket to the Logstore. |
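The retry rules in this table can be summarized as a small decision function. This Python sketch models the documented behavior and is not the service's code: the error names and the `next_action` function are hypothetical, `None` as the check interval stands for the Never setting, and the retry interval for a missing bucket or a permission error is returned as `None` because the table only says that these errors are retried periodically. Data format parsing errors are deliberately not modeled.

```python
NEVER_RETRY_INTERVAL_S = 5 * 60  # retry interval when New File Check Interval is Never
MAX_READ_RETRIES = 3


def next_action(error, retries_done, check_interval_s):
    """Return ("retry", delay_seconds) or ("skip", None) for a failed file.

    error:            "read_failure", "compression_error", "bucket_missing",
                      or "permission_error" (hypothetical names).
    retries_done:     how many retries have already failed for this file.
    check_interval_s: the new file check interval in seconds, or None for Never.
    """
    if error == "read_failure":
        if retries_done >= MAX_READ_RETRIES:
            return ("skip", None)  # still failing after three retries
        if check_interval_s is None:
            return ("retry", NEVER_RETRY_INTERVAL_S)
        return ("retry", check_interval_s)
    if error == "compression_error":
        return ("skip", None)  # invalid compression format: skipped, no retry
    if error in ("bucket_missing", "permission_error"):
        return ("retry", None)  # retried periodically; interval not specified
    raise ValueError(f"error type not modeled: {error}")


print(next_action("read_failure", 0, 60))    # ('retry', 60)
print(next_action("read_failure", 1, None))  # ('retry', 300)
print(next_action("read_failure", 3, 60))    # ('skip', None)
```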

OSS import API operations

| Operation | Interface |
| --- | --- |
| Create an OSS import task | |
| Modify an OSS import task | |
| Obtain an OSS import task | |
| Delete an OSS import task | |
| Start an OSS import task | |
| Stop an OSS import task | |