Parameter | Description |

Logtail Deployment Mode | Select the deployment mode of Logtail. In this example, Daemonset is selected. |

File Path Type | Select the type of the file path that you want to use to collect logs. Valid values: Path in Container and Host Path. If a hostPath volume is mounted to a container and you want to collect logs from files based on the mapped file path on the container host, set this parameter to Host Path. In other scenarios, set this parameter to Path in Container. |

File Path | If the required container runs on a Linux host, specify a path that starts with a forward slash (/). Example: /apsara/nuwa/**/app.Log. If the required container runs on a Windows host, specify a path that starts with a drive letter. Example: C:\Program Files\Intel\**\*.Log.

You can specify an exact directory and file name, or use wildcard characters for either. For more information, see Wildcard matching. Only asterisks (*) and question marks (?) are supported as wildcard characters. Simple Log Service scans all levels of the specified directory for log files that match the specified conditions. Examples:

- /apsara/nuwa/**/*.log: Simple Log Service collects logs from the files whose names end with .log in the /apsara/nuwa directory and its recursive subdirectories.
- /var/logs/app_*/**/*.log: Simple Log Service collects logs from the files whose names end with .log and that are stored in a subdirectory of /var/logs whose name matches app_*, or in a recursive subdirectory of such a subdirectory.
- /var/log/nginx/**/access*: Simple Log Service collects logs from the files whose names start with access in the /var/log/nginx directory and its recursive subdirectories.

|

Maximum Directory Monitoring Depth | Specify the maximum number of subdirectory levels to monitor under the log file directory that you specify. This parameter limits how many levels of subdirectories the ** wildcard in File Path can match. A value of 0 specifies that only the log file directory that you specify is monitored. Warning: We recommend that you set this parameter to the minimum value that meets your requirements. A large value may cause Logtail to consume more monitoring resources and may increase collection latency. |
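To illustrate how File Path and Maximum Directory Monitoring Depth interact, the following Python sketch checks whether a Linux file path would match a pattern that contains at most one /**/ component. It is not Logtail's implementation: the names matches_file_path and _wild are hypothetical, Windows paths are not handled, and the sketch assumes that ** can match from zero up to the configured number of subdirectory levels, as the descriptions above suggest.

```python
import re


def _wild(pattern: str, text: str) -> bool:
    """Match one path segment; only * and ? are treated as wildcards."""
    regex = "".join(
        ".*" if c == "*" else "." if c == "?" else re.escape(c) for c in pattern
    )
    return re.fullmatch(regex, text) is not None


def matches_file_path(pattern: str, path: str, max_depth: int) -> bool:
    """Return True if `path` matches `pattern` within `max_depth` levels.

    Assumption: the pattern has the form <directory>/**/<file name> or,
    without **, <directory>/<file name> (treated as depth 0).
    """
    base, sep, name_pattern = pattern.partition("/**/")
    if not sep:
        base, _, name_pattern = pattern.rpartition("/")
        max_depth = 0
    base_parts = base.strip("/").split("/")
    *dirs, filename = path.strip("/").split("/")
    if len(dirs) < len(base_parts):
        return False
    # The leading directories must match the base directory segment by segment.
    if not all(_wild(p, d) for p, d in zip(base_parts, dirs)):
        return False
    # Remaining directory levels are the ones consumed by **.
    depth = len(dirs) - len(base_parts)
    return depth <= max_depth and _wild(name_pattern, filename)
```

Under these assumptions, /apsara/nuwa/a/b/app.log matches /apsara/nuwa/**/*.log only when the depth is set to 2 or more, which is why a small depth value keeps the monitored directory tree small.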

Enable Container Metadata Preview | If you turn on Enable Container Metadata Preview, you can view the container metadata after you create the Logtail configuration, including the matched container information and full container information. |

Container Filtering | Logtail version: If the version of Logtail is earlier than 1.0.34, you can filter containers only by environment variables and container labels. If the version of Logtail is 1.0.34 or later, we recommend that you filter containers by Kubernetes-level information: K8s Pod Name Regular Matching, K8s Namespace Regular Matching, K8s Container Name Regular Matching, and Kubernetes Pod Label Whitelist.

Filter conditions

Important: Container labels are retrieved by running the docker inspect command and are different from Kubernetes labels. For more information, see Obtain labels. Environment variables are the same as the environment variables that are configured to start containers. For more information, see Obtain environment variables.

Kubernetes namespaces and container names can be mapped to container labels. The label for a namespace is io.kubernetes.pod.namespace. The label for a container name is io.kubernetes.container.name. We recommend that you use the two labels to filter containers. For example, the namespace of a pod is backend-prod, and the name of a container in the pod is worker-server. If you want to collect the logs of the worker-server container, you can specify io.kubernetes.pod.namespace : backend-prod or io.kubernetes.container.name : worker-server in the container label whitelist. If the two labels do not meet your business requirements, you can use the environment variable whitelist or the environment variable blacklist to filter containers.

- K8s Pod Name Regular Matching

Enter the pod name. The pod name specifies the containers from which text logs are collected. Regular expression matching is supported. For example, if you specify ^(nginx-log-demo.*)$, all containers in pods whose names start with nginx-log-demo are matched.

- K8s Namespace Regular Matching

Enter the namespace name. The namespace name specifies the containers from which text logs are collected. Regular expression matching is supported. For example, if you specify ^(default|nginx)$, all containers in the nginx and default namespaces are matched.

- K8s Container Name Regular Matching

Enter the container name. The container name specifies the containers from which text logs are collected. Regular expression matching is supported. Kubernetes container names are defined in spec.containers. For example, if you specify ^(container-test)$, all containers whose name is container-test are matched.

- Container Label Whitelist

Configure a container label whitelist. The whitelist specifies the containers from which text logs are collected. Note: Do not specify duplicate values for the Label Name parameter. If you specify duplicate values, only one value takes effect. If you specify a Label Name but no Label Value, containers whose container labels contain the specified label name are matched. If you specify both a Label Name and a Label Value, containers whose container labels contain the specified Label Name:Label Value pair are matched. By default, Label Value is compared by exact string matching: a container is matched only if the value of its container label is identical to the Label Value. If the Label Value starts with a caret (^) and ends with a dollar sign ($), regular expression matching is performed instead. For example, if you set Label Name to app and Label Value to ^(test1|test2)$, containers whose container labels contain app:test1 or app:test2 are matched.

Key-value pairs are evaluated by using the OR operator. If a container has a container label that consists of one of the specified key-value pairs, the container is matched.

- Container Label Blacklist

Configure a container label blacklist. The blacklist specifies the containers from which text logs are not collected. Note: Do not specify duplicate values for the Label Name parameter. If you specify duplicate values, only one value takes effect. If you specify a Label Name but no Label Value, containers whose container labels contain the specified label name are filtered out. If you specify both a Label Name and a Label Value, containers whose container labels contain the specified Label Name:Label Value pair are filtered out. By default, Label Value is compared by exact string matching: a container is filtered out only if the value of its container label is identical to the Label Value. If the Label Value starts with a caret (^) and ends with a dollar sign ($), regular expression matching is performed instead. For example, if you set Label Name to app and Label Value to ^(test1|test2)$, containers whose container labels contain app:test1 or app:test2 are filtered out.

Key-value pairs are evaluated by using the OR operator. If a container has a container label that consists of one of the specified key-value pairs, the container is filtered out.

- Environment Variable Whitelist

Configure an environment variable whitelist. The whitelist specifies the containers from which text logs are collected. If you specify an Environment Variable Name but no Environment Variable Value, containers whose environment variables contain the specified environment variable name are matched. If you specify both an Environment Variable Name and an Environment Variable Value, containers whose environment variables contain the specified Environment Variable Name:Environment Variable Value pair are matched. By default, Environment Variable Value is compared by exact string matching: a container is matched only if the value of its environment variable is identical to the Environment Variable Value. If the Environment Variable Value starts with a caret (^) and ends with a dollar sign ($), regular expression matching is performed instead. For example, if you set Environment Variable Name to NGINX_SERVICE_PORT and Environment Variable Value to ^(80|6379)$, containers whose NGINX_SERVICE_PORT environment variable is 80 or 6379 are matched.

Key-value pairs are evaluated by using the OR operator. If a container has an environment variable that consists of one of the specified key-value pairs, the container is matched.

- Environment Variable Blacklist

Configure an environment variable blacklist. The blacklist specifies the containers from which text logs are not collected. If you specify an Environment Variable Name but no Environment Variable Value, containers whose environment variables contain the specified environment variable name are filtered out. If you specify both an Environment Variable Name and an Environment Variable Value, containers whose environment variables contain the specified Environment Variable Name:Environment Variable Value pair are filtered out. By default, Environment Variable Value is compared by exact string matching: a container is filtered out only if the value of its environment variable is identical to the Environment Variable Value. If the Environment Variable Value starts with a caret (^) and ends with a dollar sign ($), regular expression matching is performed instead. For example, if you set Environment Variable Name to NGINX_SERVICE_PORT and Environment Variable Value to ^(80|6379)$, containers whose NGINX_SERVICE_PORT environment variable is 80 or 6379 are filtered out.

Key-value pairs are evaluated by using the OR operator. If a container has an environment variable that consists of one of the specified key-value pairs, the container is filtered out.

- Kubernetes Pod Label Whitelist

Configure a Kubernetes pod label whitelist. The whitelist specifies the containers from which text logs are collected. If you specify a Label Name but no Label Value, containers whose pod labels contain the specified label name are matched. If you specify both a Label Name and a Label Value, containers whose pod labels contain the specified Label Name:Label Value pair are matched. By default, Label Value is compared by exact string matching: a container is matched only if the value of its pod label is identical to the Label Value. If the Label Value starts with a caret (^) and ends with a dollar sign ($), regular expression matching is performed instead. For example, if you set Label Name to environment and Label Value to ^(dev|pre)$, containers whose pod labels contain environment:dev or environment:pre are matched.

Key-value pairs are evaluated by using the OR operator. If a container has a pod label that consists of one of the specified key-value pairs, the container is matched.

- Kubernetes Pod Label Blacklist

Configure a Kubernetes pod label blacklist. The blacklist specifies the containers from which text logs are not collected. If you specify a value for the Label Name parameter but do not specify a value for the Label Value parameter, containers whose pod labels contain the specified label name are filtered out. If you specify a value for the Label Name and Label Value parameters, containers whose pod labels contain the specified Label Name:Label Value are filtered out. By default, string matching is performed for the values of the Label Value parameter. Containers are filtered out only if the values of the pod labels are the same as the values of the Label Value parameter. If you specify a value that starts with a caret (^) and ends with a dollar sign ($) for the Label Value parameter, regular expression matching is performed. For example, if you set the Label Name parameter to environment and set the Label Value parameter to ^(dev|pre)$, containers whose pod labels contain environment:dev or environment:pre are filtered out.

Key-value pairs are evaluated by using the OR operator. If a container has a pod label that consists of one of the specified key-value pairs, the container is filtered out.

|
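The matching rules shared by the label and environment variable whitelists and blacklists can be sketched in Python as follows. This is a minimal illustration, not Logtail's code: the function names value_matches and any_pair_matches are hypothetical, an empty string stands for "no value specified", and how a whitelist and a blacklist combine when both are configured is not modeled because the descriptions above do not state it.

```python
import re


def value_matches(expected: str, actual: str) -> bool:
    """Compare one configured value against a container's actual value."""
    # A value that starts with ^ and ends with $ is treated as a regular expression.
    if expected.startswith("^") and expected.endswith("$"):
        return re.search(expected, actual) is not None
    # Otherwise the two strings must be identical.
    return expected == actual


def any_pair_matches(rules: dict[str, str], attrs: dict[str, str]) -> bool:
    """Return True if at least one configured key-value pair matches (OR logic).

    `rules` maps a label or environment variable name to the configured value;
    `attrs` holds the labels or environment variables of one container.
    """
    for name, expected in rules.items():
        if name not in attrs:
            continue
        # Name only: the presence of the name is enough.
        if expected == "" or value_matches(expected, attrs[name]):
            return True
    return False
```

For a whitelist, a container for which any_pair_matches returns True is matched; for a blacklist, the same result means that the container is filtered out.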

Log Tag Enrichment | Specify log tags by using environment variables and pod labels. |

File Encoding | Select the encoding format of log files. |

First Collection Size | Specify the size of data that Logtail can collect from a log file the first time Logtail collects logs from the file. The default value of First Collection Size is 1024. Unit: KB. If the file size is less than 1,024 KB, Logtail collects data from the beginning of the file. If the file size is greater than 1,024 KB, Logtail collects the last 1,024 KB of data in the file.

You can specify First Collection Size based on your business requirements. Valid values: 0 to 10485760. Unit: KB. |
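The effect of First Collection Size on where the first read starts can be expressed as simple arithmetic. The following Python sketch uses a hypothetical helper, first_read_offset, and assumes that 1 KB equals 1,024 bytes; it only restates the rule described above and is not taken from Logtail.

```python
def first_read_offset(file_size_bytes: int, first_collection_size_kb: int = 1024) -> int:
    """Return the byte offset at which the first collection would start.

    Files no larger than the limit are read from the beginning (offset 0);
    for larger files, only the last `first_collection_size_kb` KB are read.
    """
    limit_bytes = first_collection_size_kb * 1024
    return max(0, file_size_bytes - limit_bytes)
```

With the default of 1024 KB, a 500 KB file is read from offset 0, and a 2,048 KB file is read starting 1,024 KB into the file.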

Collection Blacklist | If you turn on Collection Blacklist, you must configure a blacklist to specify the directories or files that you want Simple Log Service to skip when it collects logs. You can specify exact directories and file names, or use wildcard characters. Only asterisks (*) and question marks (?) are supported as wildcard characters.

Important:

- If you use wildcard characters to configure File Path and you want to skip some directories in the specified directory, you must configure Collection Blacklist and enter a complete directory. For example, if you set File Path to /home/admin/app*/log/*.log and you want to skip all subdirectories in the /home/admin/app1* directory, you must select Directory Blacklist and enter /home/admin/app1*/** in the Directory Name field. If you enter /home/admin/app1*, the blacklist does not take effect.
- When a blacklist is in use, computational overhead is generated. We recommend that you add no more than 10 entries to the blacklist.
- You cannot specify a directory path that ends with a forward slash (/). For example, if you set the path to /home/admin/dir1/, the directory blacklist does not take effect.

The following types of blacklists are supported: File Path Blacklist, File Blacklist, and Directory Blacklist.

File Path Blacklist

- If you select File Path Blacklist and enter /home/admin/private*.log in the File Path Name field, all files whose names are prefixed by private and suffixed by .log in the /home/admin/ directory are skipped.
- If you select File Path Blacklist and enter /home/admin/private*/*_inner.log in the File Path Name field, all files whose names are suffixed by _inner.log in the subdirectories whose names are prefixed by private in the /home/admin/ directory are skipped. For example, the /home/admin/private/app_inner.log file is skipped, but the /home/admin/private/app.log file is not skipped.

File Blacklist

- If you select File Blacklist and enter app_inner.log in the File Name field, all files whose names are app_inner.log are skipped.

Directory Blacklist

- If you select Directory Blacklist and enter /home/admin/dir1 in the Directory Name field, all files in the /home/admin/dir1 directory are skipped.
- If you select Directory Blacklist and enter /home/admin/dir* in the Directory Name field, the files in all subdirectories whose names are prefixed by dir in the /home/admin/ directory are skipped.
- If you select Directory Blacklist and enter /home/admin/*/dir in the Directory Name field, all files in the dir subdirectory of each immediate subdirectory of the /home/admin/ directory are skipped. For example, the files in the /home/admin/a/dir directory are skipped, but the files in the /home/admin/a/b/dir directory are not skipped.

|
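The three blacklist types can be compared side by side with a short Python sketch. It is an illustration under stated assumptions rather than Logtail's behavior: the names is_skipped, _wild, and _path_matches are hypothetical, wildcards are assumed never to cross a forward slash (which is consistent with the /home/admin/*/dir example), a directory entry is checked only against the directory that directly contains the file, and the ** form mentioned in the Important note is not modeled.

```python
import re


def _wild(pattern: str, text: str) -> bool:
    """Match one path segment; only * and ? are treated as wildcards."""
    regex = "".join(
        ".*" if c == "*" else "." if c == "?" else re.escape(c) for c in pattern
    )
    return re.fullmatch(regex, text) is not None


def _path_matches(pattern: str, path: str) -> bool:
    """Match segment by segment, so a wildcard never spans a '/'."""
    p_parts, s_parts = pattern.split("/"), path.split("/")
    return len(p_parts) == len(s_parts) and all(
        _wild(p, s) for p, s in zip(p_parts, s_parts)
    )


def is_skipped(path, file_paths=(), file_names=(), directories=()):
    """Return True if `path` is excluded by any configured blacklist entry."""
    directory, _, name = path.rpartition("/")
    return (
        any(_path_matches(p, path) for p in file_paths)  # File Path Blacklist
        or any(_wild(p, name) for p in file_names)  # File Blacklist
        or any(_path_matches(p, directory) for p in directories)  # Directory Blacklist
    )
```

Each call evaluates every entry, which gives a rough sense of why the number of blacklist entries adds overhead to collection.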

Allow File to Be Collected for Multiple Times | By default, you can use only one Logtail configuration to collect logs from a log file. To use multiple Logtail configurations to collect logs from a log file, turn on Allow File to Be Collected for Multiple Times. |

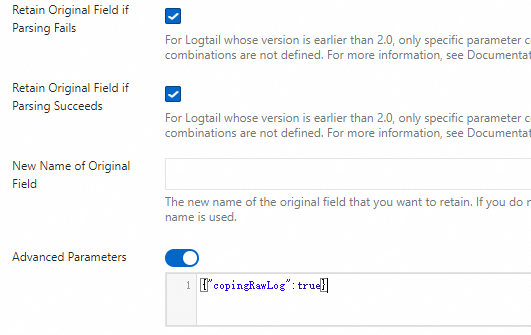

Advanced Parameters | You must manually configure specific parameters of a Logtail configuration. For more information, see Create a Logtail pipeline configuration. |

Elastic Compute Service (ECS)


Container Compute Service (ACS)


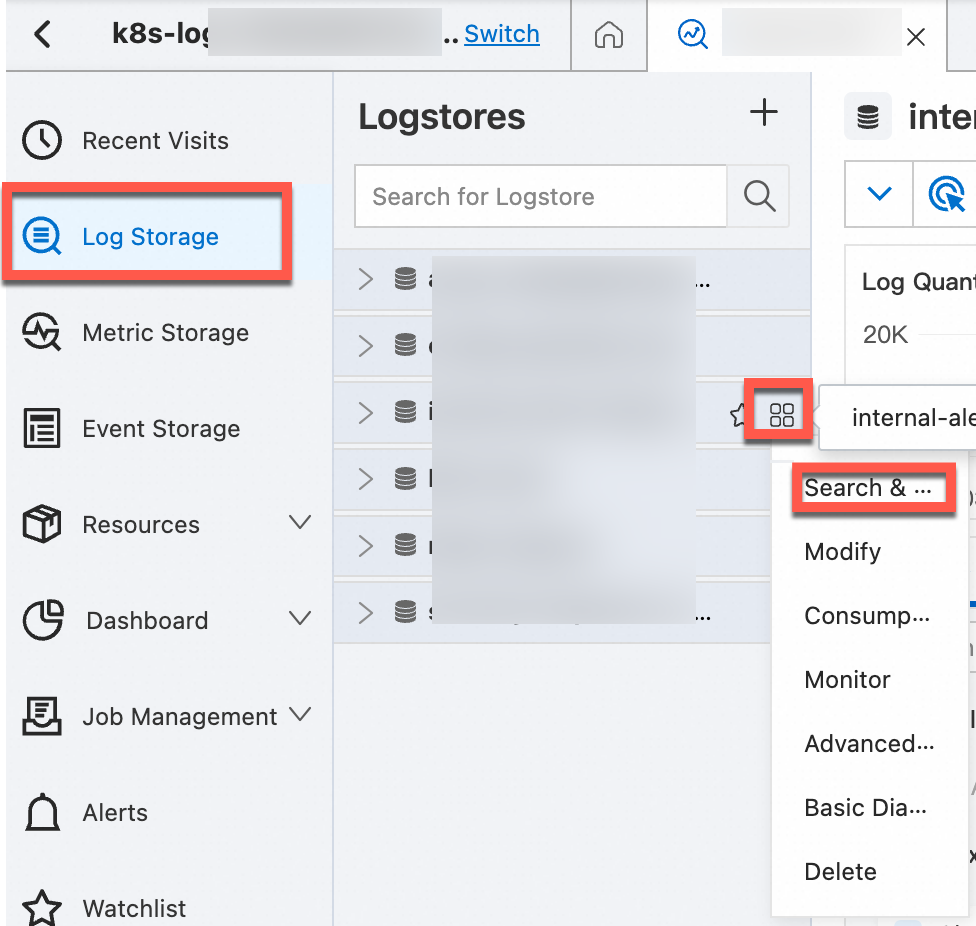

icon, and then select Search & Analysis to view the logs of your Kubernetes cluster.
