Overview

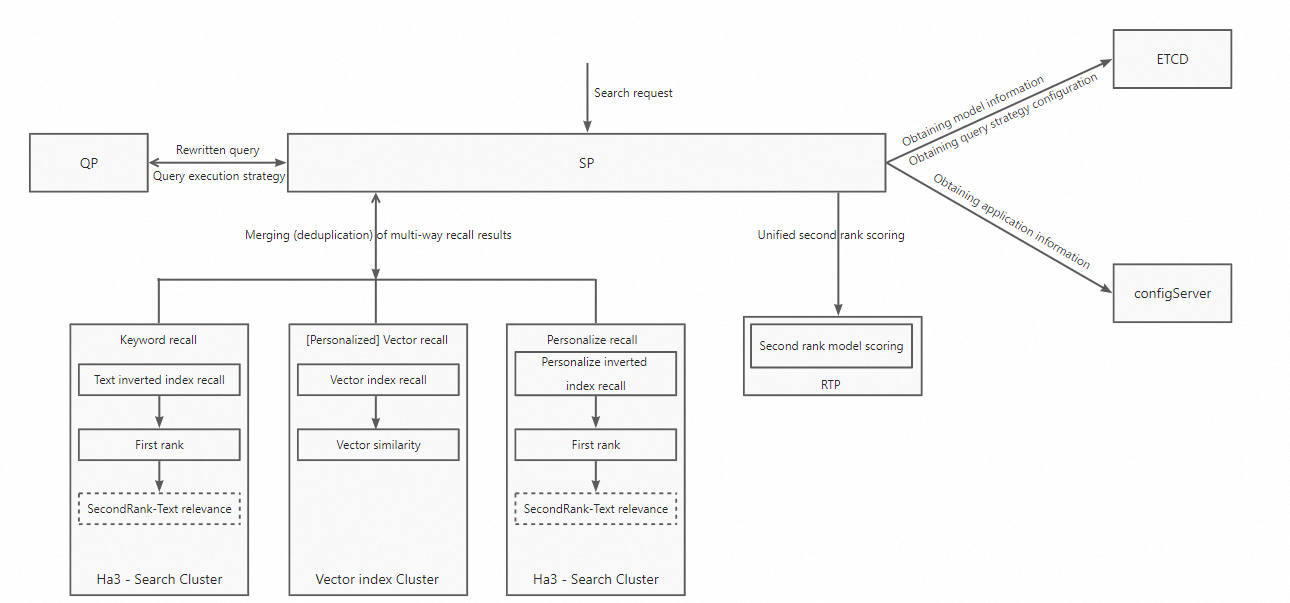

The multimodal search feature of OpenSearch combines text search and vector search. In question-answering search scenarios, multimodal searches have been verified to deliver lower search latency, lower computing resource consumption, and higher accuracy than text searches that use the OR logical operator. The multimodal retrieval architecture behind this feature can also be applied to other scenarios, such as vector-based image retrieval, expression-based retrieval, and personalized retrieval.

Comparison between the text search and multimodal search features in question-answering search scenarios

Why are multimodal searches needed in question-answering search scenarios? In the education scenario, users search for answers by taking photos of questions. Compared with text searches on websites or e-commerce platforms, these searches differ in the following ways:

Search queries are longer: a query in a regular search contains up to 30 terms, whereas a query in a question-answering search can contain up to 100 terms.

Search queries are extracted from photos by using optical character recognition (OCR). If important terms are misrecognized, the retrieval and sorting results become inaccurate.

Text search

1. Use the OR logical operator

To reduce the zero-result rate, conventional systems use the default OR logic of Elasticsearch for question-answering searches.

OpenSearch also supports the OR logic and reduces search latency by processing OR searches concurrently. However, computing resource consumption remains high even after this optimization.

2. Use the AND logical operator

Searches that use the AND logical operator and are processed by a general-purpose query analysis feature have a high zero-result rate and lower search accuracy than searches that use the OR logical operator.

OpenSearch provides a query analysis feature optimized for the education industry. Searches processed by this optimized query analysis feature achieve almost the same accuracy as searches that use the OR logical operator.
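To make the OR/AND trade-off concrete, the following toy sketch compares both logics when OCR has misrecognized one query term. It uses hypothetical documents and a simple in-memory inverted index; it is an illustration of boolean term matching, not OpenSearch internals. OR matches every document that shares any term, which keeps the zero-result rate low but enlarges the set of documents to score; AND requires every term, so a single wrong term empties the result.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/** Toy OR vs AND term matching over an in-memory inverted index (illustration only). */
final class BooleanRetrievalDemo {

    /** Maps each lowercase, whitespace-separated term to the IDs of the documents that contain it. */
    static Map<String, Set<Integer>> buildIndex(List<String> docs) {
        Map<String, Set<Integer>> index = new HashMap<>();
        for (int id = 0; id < docs.size(); id++) {
            for (String term : docs.get(id).toLowerCase().split("\\s+")) {
                index.computeIfAbsent(term, k -> new TreeSet<>()).add(id);
            }
        }
        return index;
    }

    /** OR logic: a document matches if it contains at least one query term. */
    static Set<Integer> searchOr(Map<String, Set<Integer>> index, List<String> terms) {
        Set<Integer> hits = new TreeSet<>();
        for (String term : terms) {
            hits.addAll(index.getOrDefault(term, Collections.emptySet()));
        }
        return hits;
    }

    /** AND logic: a document matches only if it contains every query term. */
    static Set<Integer> searchAnd(Map<String, Set<Integer>> index, List<String> terms) {
        if (terms.isEmpty()) {
            return new TreeSet<>();
        }
        Set<Integer> hits = new TreeSet<>(index.getOrDefault(terms.get(0), Collections.emptySet()));
        for (String term : terms.subList(1, terms.size())) {
            // Keep only documents that also contain this term.
            hits.retainAll(index.getOrDefault(term, Collections.emptySet()));
        }
        return hits;
    }
}
```

For example, with the documents "find the area of the triangle", "find the perimeter of the square", and "the area of a circle with radius r", the query terms area, of, the, triangie (an OCR error for "triangle") return documents [0, 1, 2] under searchOr and an empty set under searchAnd; with the correct term, searchAnd returns only document 0.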

Vector search

Goals: The first goal is to retrieve more documents by using the vectors generated through word embedding. The second goal is to achieve lower search latency, lower computing resource consumption, and higher search accuracy than the OR logic while using the AND logic. To achieve these goals, OpenSearch uses a BERT model for vector retrieval and optimizes the model for question-answering search scenarios in the education industry. The following optimizations are performed:

The StructBERT model developed by Alibaba DAMO Academy is used as the BERT model. This model is customized for the education industry.

The Proxima engine developed by Alibaba DAMO Academy is used as the vector search engine. The accuracy and speed of Proxima are far higher than those of open source systems.

Training data can be accumulated from search logs, so search performance improves continuously.
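At its core, vector retrieval ranks documents by the similarity between the query embedding and each document embedding. The sketch below assumes the embeddings already exist (in OpenSearch they come from the customized StructBERT model) and uses cosine similarity with an exhaustive scan. Both choices are assumptions for illustration: the metric OpenSearch uses is not stated here, Proxima relies on its own indexing rather than a brute-force loop, and the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/** Exhaustive cosine-similarity top-k retrieval (illustration only; not Proxima). */
final class VectorRetrievalSketch {

    /** Cosine similarity of two equal-length vectors; returns 0 if either vector is all zeros. */
    static double cosine(double[] a, double[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("dimension mismatch");
        }
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        if (normA == 0 || normB == 0) {
            return 0.0;
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    /** Scores every document vector against the query and returns the IDs of the k best, best first. */
    static List<Integer> topK(double[] query, List<double[]> docVectors, int k) {
        double[] scores = new double[docVectors.size()];
        List<Integer> ids = new ArrayList<>();
        for (int i = 0; i < docVectors.size(); i++) {
            scores[i] = cosine(query, docVectors.get(i));
            ids.add(i);
        }
        // Sort document IDs by descending similarity score.
        ids.sort((x, y) -> Double.compare(scores[y], scores[x]));
        int n = Math.max(0, Math.min(k, ids.size()));
        return new ArrayList<>(ids.subList(0, n));
    }
}
```

An exhaustive scan touches every document for every query; an approximate nearest-neighbor engine such as Proxima exists to avoid exactly that cost on large collections.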

Optimization results:

The recall rate matches that of the OR logic.

The accuracy is 3% to 5% higher than that of the OR logic.

The total number of retrieved documents is reduced by a factor of 40, and the search latency is reduced by a factor of more than 10.

Configure the multimodal search feature for an application of Industry-specific Enhanced Edition for education

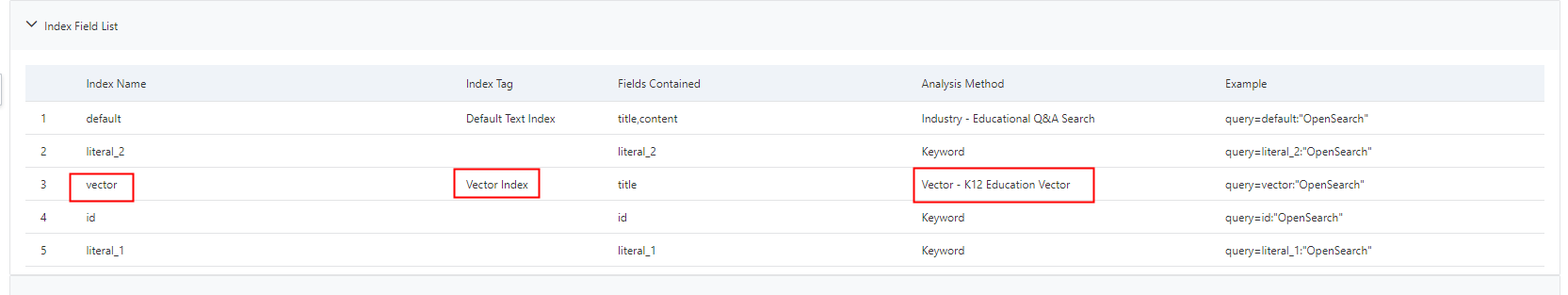

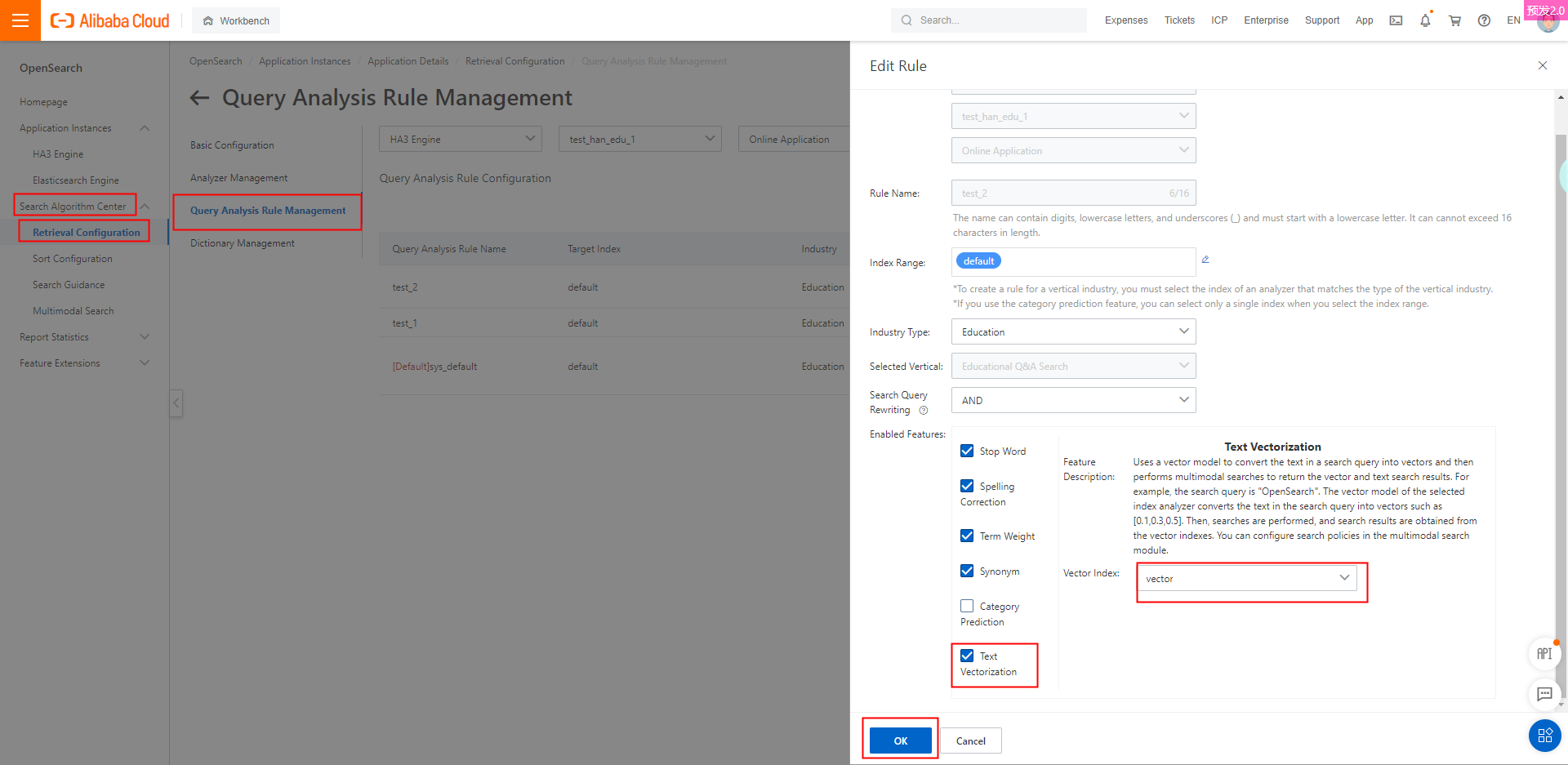

1. Create an application of Industry-specific Enhanced Edition for education. After the application is created, check whether a vector index is configured. In this example, the vector index uses the Vector - K12 Education Vector analyzer.

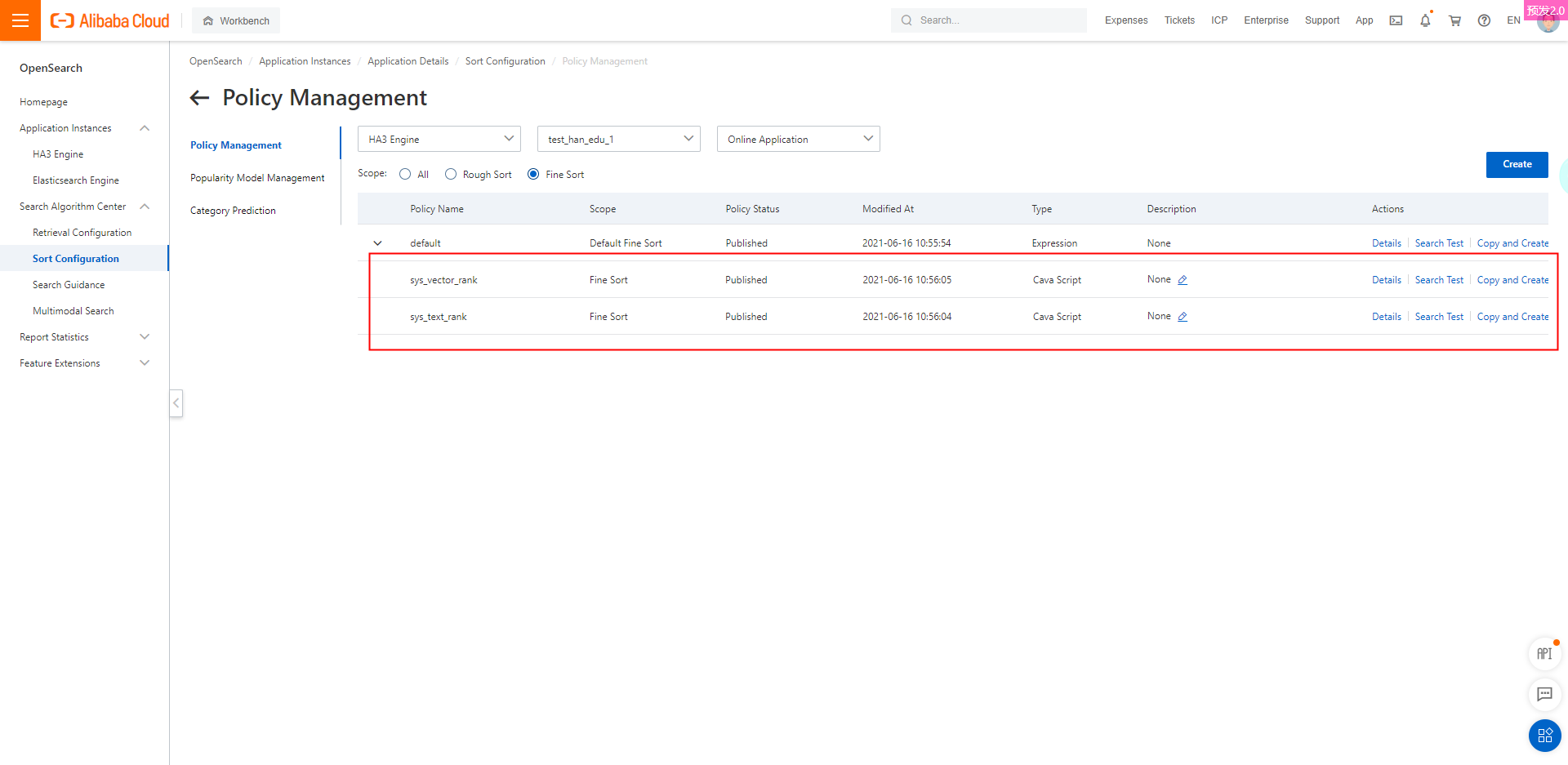

2. Create a query analysis rule. Select Text Vectorization to enable the word embedding feature and select the vector index that is configured in Step 1.

3. Configure sort policies. By default, two fine sort policies that use Cava scripts are created for an application of Industry-specific Enhanced Edition for education: sys_text_rank for text searches and sys_vector_rank for vector searches.

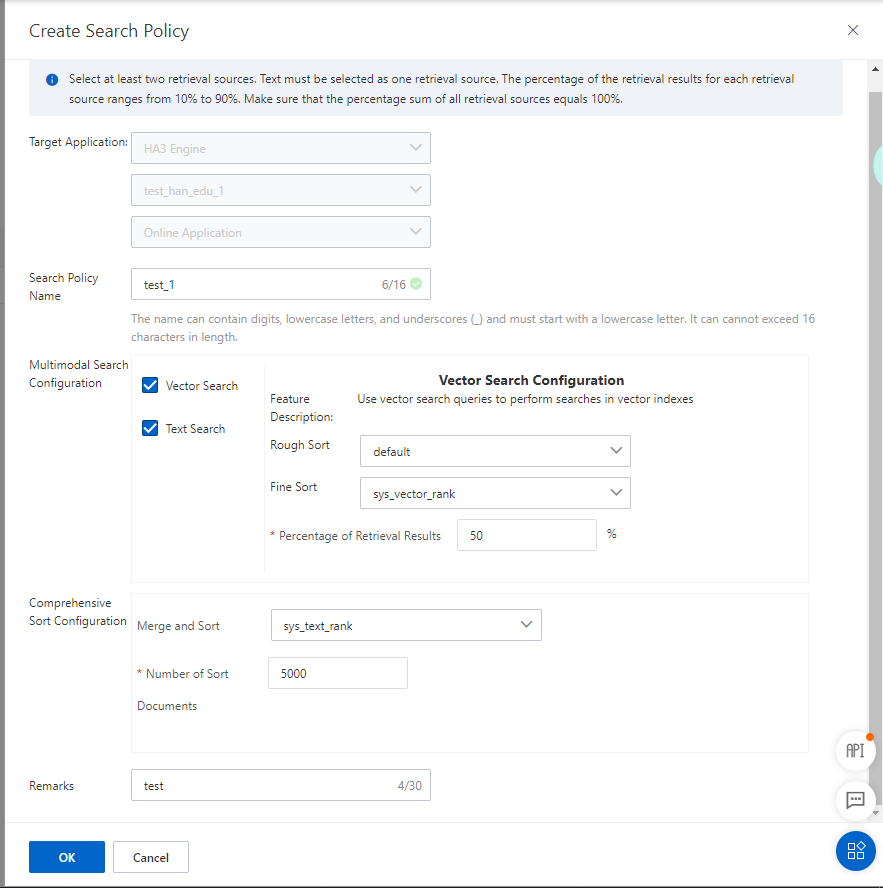

4. Create a multimodal search policy: set the policy name, configure the vector search and text search features, and then configure the merge sort feature. To configure merge sort, select a sort policy for merge sort and specify the number of documents to sort.
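Conceptually, the merge step combines the candidates from both branches and re-ranks them. The following is a hypothetical sketch, not the OpenSearch implementation. It assumes that each branch returns a ranked list of document IDs, that the text and vector proportions mentioned in the usage notes decide how many of the Number of Sort Documents slots each branch fills, and that a caller-supplied scoring function stands in for the fine sort policy selected for merge sort.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;
import java.util.function.ToDoubleFunction;

/** Hypothetical merge step for a text branch and a vector branch (illustration only). */
final class MergeSortSketch {

    /**
     * Fills sortDocs slots from the two ranked branches according to textPercent
     * (the vector branch gets the remaining 100 - textPercent), removes duplicates,
     * and re-ranks the union with mergeScore, highest score first.
     */
    static List<String> merge(List<String> textHits, List<String> vectorHits,
                              int textPercent, int sortDocs,
                              ToDoubleFunction<String> mergeScore) {
        if (textPercent < 0 || textPercent > 100) {
            throw new IllegalArgumentException("textPercent must be between 0 and 100");
        }
        if (sortDocs < 1) {
            throw new IllegalArgumentException("sortDocs must be at least 1");
        }
        int textQuota = (int) Math.round(sortDocs * textPercent / 100.0);
        int vectorQuota = sortDocs - textQuota;

        // LinkedHashSet drops documents found by both branches while keeping first-seen order.
        Set<String> candidates = new LinkedHashSet<>();
        candidates.addAll(textHits.subList(0, Math.min(textQuota, textHits.size())));
        candidates.addAll(vectorHits.subList(0, Math.min(vectorQuota, vectorHits.size())));

        // List.sort is stable, so documents with equal scores keep their candidate order.
        List<String> merged = new ArrayList<>(candidates);
        merged.sort(Comparator.comparingDouble(mergeScore).reversed());
        return merged;
    }
}
```

Because duplicates are removed, the merged list can be shorter than sortDocs when both branches retrieve the same documents.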

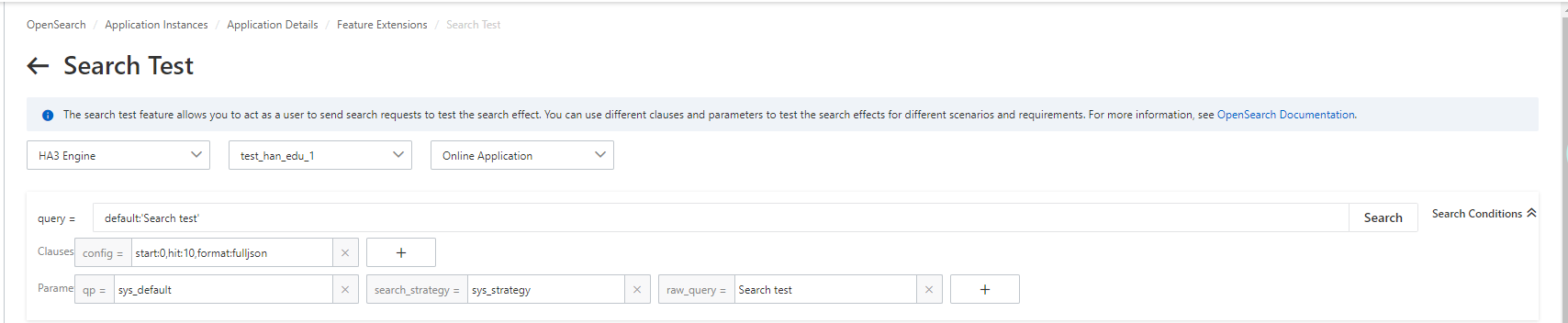

5. Perform a search test in the OpenSearch console.

In this example, the search query is "Search test", the multimodal search policy is sys_strategy, and the query analysis rule is sys_default.

Note: All three parameters shown in the preceding figure must be set. If the default query analysis rule is used, you can omit the qp parameter. The search request in this example is as follows:

query=default:'Search test'&search_strategy=sys_strategy&raw_query=Search test&qp=sys_default

Perform a search test by using OpenSearch SDK. In this example, OpenSearch SDK for Java is used.

```java
// ...

// Create a Config object to configure paging parameters and the format of returned results in the config clause.
Config config = new Config(Lists.<String>newArrayList(appName));
config.setStart(0);
config.setHits(10);
// Return results in full JSON format.
config.setSearchFormat(SearchFormat.FULLJSON);

// Create a SearchParams object.
SearchParams searchParams = new SearchParams(config);

// Set the custom input parameters of the search request.
HashMap<String, String> paraMap = new HashMap<String, String>();
// Set the raw_query parameter.
paraMap.put("raw_query", "Search test");
// Specify the multimodal search policy.
paraMap.put("search_strategy", "sys_strategy");
searchParams.setCustomParam(paraMap);

// Specify the query analysis rule.
List<String> qpName = new ArrayList<String>();
qpName.add("sys_default"); // Name of the query analysis rule.
searchParams.setQueryProcessorNames(qpName);

// ...
```

Usage notes

Only exclusive applications can use the multimodal search feature.

The multimodal search feature does not support the aggregate or distinct clause.

A maximum of 10 multimodal search policies can be created for each application.

Both the text search and vector search features must be enabled for multimodal searches. If you specify the proportions of retrieval results for the text search and vector search features, the sum of the proportions must be 100%.

When you configure the text search and vector search features, select the rough and fine sort policies that you configured in Step 3.

When you configure the merge sort feature, you can optionally select a fine sort policy for merge sort; no sort policy is selected by default. The Number of Sort Documents parameter is required. Valid values: 1 to 5000.

A vector index that is not generated by the system cannot be selected when you configure the word embedding feature.

If you want to use a custom vector index for multimodal searches, contact Alibaba Cloud.
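Several of these notes are numeric rules that can be checked before a policy is submitted. The following sketch is a hypothetical client-side validator: the Policy record and its field names are illustrative and do not correspond to an OpenSearch API. It assumes that existingPolicies counts the policies already created for the application, and that specifying only one of the two proportions counts as an error.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical client-side check of the multimodal search usage notes (records require Java 16+). */
final class MultimodalPolicyCheck {

    static final int MAX_POLICIES_PER_APP = 10;
    static final int MIN_SORT_DOCS = 1;
    static final int MAX_SORT_DOCS = 5000;

    /** Illustrative policy settings; a proportion is null when it is not specified. */
    record Policy(boolean textSearchEnabled, boolean vectorSearchEnabled,
                  Integer textPercent, Integer vectorPercent, int sortDocs) { }

    /** Returns the violated rules; an empty list means the settings pass these checks. */
    static List<String> validate(Policy p, int existingPolicies) {
        List<String> errors = new ArrayList<>();
        if (existingPolicies >= MAX_POLICIES_PER_APP) {
            errors.add("an application can have at most 10 multimodal search policies");
        }
        if (!p.textSearchEnabled() || !p.vectorSearchEnabled()) {
            errors.add("text search and vector search must both be enabled");
        }
        boolean anyProportion = p.textPercent() != null || p.vectorPercent() != null;
        if (anyProportion && (p.textPercent() == null || p.vectorPercent() == null
                || p.textPercent() + p.vectorPercent() != 100)) {
            errors.add("text and vector proportions must sum to 100%");
        }
        if (p.sortDocs() < MIN_SORT_DOCS || p.sortDocs() > MAX_SORT_DOCS) {
            errors.add("Number of Sort Documents must be between 1 and 5000");
        }
        return errors;
    }
}
```

Returning a list of messages instead of throwing on the first problem lets a form or script report every violated rule at once.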