This topic describes the parameters that are used in the ApsaraVideo Media Processing (MPS) API, including their types, descriptions, and valid values. You can configure these parameters to use MPS features such as transcoding, MPS queues, and workflows.

Input

This parameter is referenced by the SubmitJobs operation.

Parameter | Type | Required | Description |

Bucket | String | Yes | The Object Storage Service (OSS) bucket that stores the input file. For more information about OSS buckets, see Terms in OSS. |

Location | String | Yes | The region in which the OSS bucket that stores the input file resides.

|

Object | String | Yes | The OSS path of the input file. The OSS path is a full path that includes the name of the input file.

|

Referer | String | No | The configuration of hotlink protection. If you enable the hotlink protection feature for the OSS bucket to allow only specified referers in the whitelist to download files, you must specify this parameter. If you do not enable the hotlink protection feature for the OSS bucket, you do not need to specify this parameter. For more information, see Hotlink protection.

|
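The Input fields above can be assembled programmatically. The following Python sketch builds the parameter as a JSON string. It assumes that the value is passed as serialized JSON; the helper name, bucket name, region ID, and object path are placeholders for illustration and do not come from this topic.

```python
import json


def build_input(bucket, location, obj, referer=None):
    """Assemble the Input parameter as a JSON string (hypothetical helper)."""
    if not (bucket and location and obj):
        raise ValueError("Bucket, Location, and Object are required")
    payload = {"Bucket": bucket, "Location": location, "Object": obj}
    # Referer is needed only if hotlink protection is enabled for the bucket.
    if referer is not None:
        payload["Referer"] = referer
    return json.dumps(payload)


# Placeholder values for illustration only.
input_json = build_input("example-bucket", "oss-cn-hangzhou", "a/b/example.flv")
print(input_json)
```

Leaving Referer out of the payload when it is not needed mirrors the table: the parameter is optional unless the bucket restricts downloads to whitelisted referers.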

Output

This parameter is referenced by the SubmitJobs, AddMediaWorkflow, and UpdateMediaWorkflow operations.

Parameter | Type | Required | Description |

OutputObject | String | Yes | The OSS path of the output file. The OSS path is a full path that includes the name of the output file.

|

TemplateId | String | Yes | The ID of the transcoding template.

|

Container | Object | No | The container format. For more information, see the Container section of this topic.

|

Video | Object | No | The parameter related to video transcoding. For more information, see the Video section of this topic.

|

Audio | Object | No | The parameter related to audio transcoding. For more information, see the Audio section of this topic.

|

TransConfig | Object | No | The parameter related to the transcoding process. For more information, see the TransConfig section of this topic.

|

VideoStreamMap | String | No | The identifier of the video stream to be retained in the input file. Valid values:

|

AudioStreamMap | String | No | The identifier of the audio stream to be retained in the input file. Valid values:

|

Rotate | String | No | The rotation angle of the video in the clockwise direction.

|

WaterMarks | Object[] | No | The watermarks. Watermarks are images or text added to video images. If you specify this parameter, the corresponding parameter in the specified watermark template is overwritten. For more information, see the WaterMarks section of this topic.

|

DeWatermark | Object | No | The blur operation. For more information, see the DeWatermark section of this topic.

|

SubtitleConfig | Object | No | The configurations of the hard subtitle. This parameter allows you to add external subtitle files to the video. For more information, see the SubtitleConfig section of this topic.

|

Clip | Object | No | The clip. For more information, see the Clip section of this topic.

|

MergeList | Object[] | No | The merge list. You can merge multiple input files and clips in sequence to generate a new video. For more information, see the MergeList section of this topic.

|

MergeConfigUrl | String | No | The OSS path of the configuration file for merging clips.

|

OpeningList | Object[] | No | The opening parts. Opening is a special merging effect that allows you to embed opening parts at the beginning of the input video. The opening parts are displayed in Picture-in-Picture (PiP) mode. For more information, see the OpeningList section of this topic.

|

TailSlateList | Object[] | No | The ending parts. Ending is a special merging effect that allows you to add ending parts to the end of the input video. The ending parts are displayed in fade-in and fade-out mode. For more information, see the TailSlateList section of this topic.

|

Amix | Object[] | No | The audio mixing configuration. This parameter is suitable for scenarios in which you want to merge multiple audio tracks in a video or add background music. For more information, see the Amix section of this topic.

|

MuxConfig | Object | No | The packaging configurations. For more information, see the MuxConfig section of this topic.

|

M3U8NonStandardSupport | Object | No | The non-standard support for the M3U8 format. For more information, see the M3U8NonStandardSupport section of this topic.

|

Encryption | String | No | The encryption configuration. This parameter takes effect only if the container format is set to M3U8. For more information, see the Encryption section of this topic.

|

UserData | String | No | The custom data, which can be up to 1,024 bytes in size. |

Priority | String | No | The priority of the transcoding job in the MPS queue to which the transcoding job is added.

|

Metata | Map | No | The metadata of the output video container format. Specify the value as a JSON key-value object, for example:

|
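As a rough sketch of how the Output fields fit together, the following Python helper assembles one Output object and enforces the two required fields and the 1,024-byte limit on UserData. The helper name, the template ID, the `mp4` container value, and the use of UTF-8 to measure the UserData size are assumptions for illustration.

```python
import json


def build_output(output_object, template_id, user_data=None, **optional):
    """Assemble one Output object as a dict (hypothetical helper)."""
    if not output_object or not template_id:
        raise ValueError("OutputObject and TemplateId are required")
    output = {"OutputObject": output_object, "TemplateId": template_id}
    if user_data is not None:
        # UserData can be up to 1,024 bytes; measuring in UTF-8 is an assumption.
        if len(user_data.encode("utf-8")) > 1024:
            raise ValueError("UserData exceeds 1,024 bytes")
        output["UserData"] = user_data
    # Optional nested parameters such as Container, Video, or Audio.
    output.update(optional)
    return output


output = build_output(
    "a/b/c/example.mp4",
    "example-template-id",  # placeholder, not a real template ID
    user_data="order-42",
    Container={"Format": "mp4"},  # assumed container value
)
print(json.dumps(output))
```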

Container

This parameter is referenced by the Output.Container parameter.

Parameter | Type | Required | Description |

Format | String | No | The container format.

|

TransConfig

This parameter is referenced by the Output.TransConfig parameter.

Parameter | Type | Required | Description |

TransMode | String | No | The video transcoding mode. This parameter takes effect only if the Codec parameter is set to H.264, H.265, or AV1, and the Bitrate and Crf parameters are set to valid values. For more information, see the Bitrate control mode section of this topic. Valid values:

|

AdjDarMethod | String | No | The method that is used to adjust the resolution. This parameter takes effect only if both the Width and Height parameters are specified. You can use this parameter together with the LongShortMode parameter.

|

IsCheckReso | String | No | Specifies whether to check the video resolution. You can specify only one of the IsCheckReso and IsCheckResoFail parameters. The IsCheckResoFail parameter has a higher priority than the IsCheckReso parameter. Valid values:

|

IsCheckResoFail | String | No | Specifies whether to check the video resolution. You can specify only one of the IsCheckReso and IsCheckResoFail parameters. The IsCheckResoFail parameter has a higher priority than the IsCheckReso parameter. Valid values:

|

IsCheckVideoBitrate | String | No | Specifies whether to check the video bitrate. You can specify only one of the IsCheckVideoBitrate and IsCheckVideoBitrateFail parameters. The IsCheckVideoBitrateFail parameter has a higher priority than the IsCheckVideoBitrate parameter. Valid values:

|

IsCheckVideoBitrateFail | String | No | Specifies whether to check the video bitrate. You can specify only one of the IsCheckVideoBitrate and IsCheckVideoBitrateFail parameters. The IsCheckVideoBitrateFail parameter has a higher priority than the IsCheckVideoBitrate parameter. Valid values:

|

IsCheckAudioBitrate | String | No | Specifies whether to check the audio bitrate. You can specify only one of the IsCheckAudioBitrate and IsCheckAudioBitrateFail parameters. The IsCheckAudioBitrateFail parameter has a higher priority than the IsCheckAudioBitrate parameter. Valid values:

|

IsCheckAudioBitrateFail | String | No | Specifies whether to check the audio bitrate. You can specify only one of the IsCheckAudioBitrate and IsCheckAudioBitrateFail parameters. The IsCheckAudioBitrateFail parameter has a higher priority than the IsCheckAudioBitrate parameter. Valid values:

|
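Because only one parameter of each check pair can be specified, a client can catch conflicts before it submits a job. The following Python sketch is one way to do so. The helper name and the sample values are assumptions, because the valid values are not listed in this section.

```python
CHECK_PAIRS = [
    ("IsCheckReso", "IsCheckResoFail"),
    ("IsCheckVideoBitrate", "IsCheckVideoBitrateFail"),
    ("IsCheckAudioBitrate", "IsCheckAudioBitrateFail"),
]


def conflicting_checks(trans_config):
    """Return the check pairs for which both parameters are specified."""
    return [
        (check, check_fail)
        for check, check_fail in CHECK_PAIRS
        if check in trans_config and check_fail in trans_config
    ]


# Sample values are placeholders; only the presence of the keys matters here.
config = {"IsCheckReso": "true", "IsCheckResoFail": "true", "IsCheckAudioBitrate": "true"}
print(conflicting_checks(config))
```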

Bitrate control mode

The following table describes the requirements of different bitrate control modes for setting the TransMode, Bitrate, Maxrate, Bufsize, and Crf parameters.

Bitrate control mode | Setting of the TransMode parameter | Setting of the bitrate-related parameters |

Constant bitrate (CBR) | CBR | Set the Bitrate, Maxrate, and Bufsize parameters to the same value. |

Average bitrate (ABR) | Set the TransMode parameter to onepass or leave the parameter empty. | The Bitrate parameter is required. The Maxrate and Bufsize parameters are optional. You can use them to limit the bitrate range at peaks. |

Variable bitrate (VBR) | twopass | The Bitrate, Maxrate, and Bufsize parameters are required. |

Constant rate factor (CRF) | fixCRF | A CRF value is required. If the Crf parameter is not specified, the default value of the Crf parameter that corresponds to the specified Codec value takes effect. The Maxrate and Bufsize parameters are optional. You can use them to limit the bitrate range at peaks. |

Constant rate factor (CRF) | Leave the TransMode parameter empty. | Leave the Bitrate parameter empty. The default value of the Crf parameter that corresponds to the specified Codec value takes effect. |
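The rules in the preceding table can be read as a small decision procedure. The following Python sketch infers the bitrate control mode from the parameter values and rejects combinations that the table does not allow. The function name and the sample values are hypothetical, and the sketch does not reproduce how MPS itself validates a request.

```python
def bitrate_control_mode(trans_mode=None, bitrate=None, maxrate=None, bufsize=None):
    """Infer the bitrate control mode from the table above (hypothetical helper)."""
    trans_mode = trans_mode or None  # treat an empty string as "left empty"
    if trans_mode == "CBR":
        # Bitrate, Maxrate, and Bufsize must be set to the same value.
        if bitrate is None or not (bitrate == maxrate == bufsize):
            raise ValueError("CBR requires equal Bitrate, Maxrate, and Bufsize")
        return "CBR"
    if trans_mode == "twopass":
        if None in (bitrate, maxrate, bufsize):
            raise ValueError("VBR requires Bitrate, Maxrate, and Bufsize")
        return "VBR"
    if trans_mode == "fixCRF":
        # If Crf is not specified, the codec-specific default applies.
        return "CRF"
    if trans_mode == "onepass":
        if bitrate is None:
            raise ValueError("ABR requires Bitrate")
        return "ABR"
    if trans_mode is None:
        # Empty TransMode: ABR if Bitrate is set, otherwise CRF with the default Crf.
        return "ABR" if bitrate is not None else "CRF"
    raise ValueError("Unknown TransMode: " + str(trans_mode))


print(bitrate_control_mode("twopass", "1000", "2000", "3000"))
print(bitrate_control_mode(None))
```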

Video

This parameter is referenced by the Output.Video parameter.

Parameter | Type | Required | Description |

Remove | String | No | Specifies whether to delete the video stream. Valid values:

|

Codec | String | No | The video encoding format.

|

Width | String | No | The width or long side of the output video. If the LongShortMode parameter is set to false or left empty, this parameter specifies the width of the output video. If the LongShortMode parameter is set to true, this parameter specifies the long side of the output video.

|

Height | String | No | The height or short side of the output video. If the LongShortMode parameter is set to false or left empty, this parameter specifies the height of the output video. If the LongShortMode parameter is set to true, this parameter specifies the short side of the output video.

|

LongShortMode | String | No | Specifies whether to enable the auto-rotate screen feature. This parameter takes effect if at least one of the Width and Height parameters is specified. Valid values:

|

Fps | String | No | The frame rate of the video stream.

|

MaxFps | String | No | The maximum frame rate. |

Gop | String | No | The time interval or frame interval between two consecutive I frames. Note: A larger group of pictures (GOP) value results in a higher compression ratio, a lower encoding speed, longer segments of streaming media, and a longer response time to seeking. For more information, see the description of the term GOP in Terms.

|

Bitrate | String | No | The average bitrate of the output video. If you use the CBR, ABR, or VBR bitrate control mode, you must specify the Bitrate parameter and set the TransMode parameter to a valid value. For more information, see the Bitrate control mode section of this topic.

|

BitrateBnd | String | No | The average bitrate range of the output video.

|

Maxrate | String | No | The peak bitrate of the output video. For more information, see the Bitrate control mode section of this topic.

|

Bufsize | String | No | The buffer size for bitrate control. You can specify this parameter to control the bitrate fluctuation. For more information, see the Bitrate control mode section of this topic. Note The larger the value of Bufsize, the greater the bitrate fluctuation and the higher the video quality.

|

Crf | String | No | The quality control factor. To use the CRF mode, you must specify the Crf parameter and set the TransMode parameter to fixCRF. For more information, see the Bitrate control mode section of this topic. Note The larger the value of the Crf parameter, the lower the video quality and the higher the compression ratio.

|

Qscale | String | No | The value of the factor for video quality control. This parameter takes effect if you use the VBR mode. Note The larger the value of the Qscale parameter, the lower the video quality and the higher the compression ratio.

|

Profile | String | No | The encoding profile. For more information, see the description of the term encoding profile in Terms.

|

Preset | String | No | The preset mode of the H.264 encoder. Note The faster the mode you select, the lower the video quality.

|

ScanMode | String | No | The scan mode. Valid values:

Best practice: The interlaced scan mode uses less data traffic than the progressive scan mode but provides poorer image quality. Therefore, the progressive scan mode is commonly used in mainstream video production.

|

PixFmt | String | No | The pixel format.

|

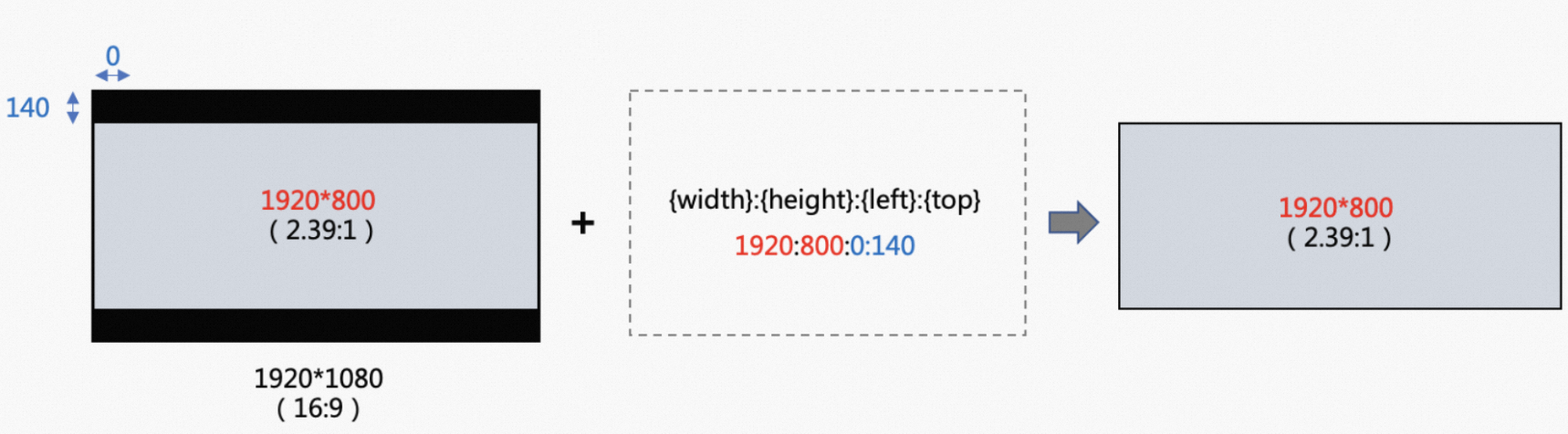

Crop | String | No | The method that is used to crop videos. The borders can be automatically detected and removed. You can also manually crop the video.

|

Pad | String | No | The information about the black bars.

|
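The interaction between the Width, Height, and LongShortMode parameters can be illustrated with a short Python sketch. It assumes that, when LongShortMode is true, the long side is applied to whichever dimension of the input video is larger. The function name and the sample resolutions are hypothetical.

```python
def resolve_output_size(width, height, long_short_mode, input_width, input_height):
    """Return (output_width, output_height) in pixels (hypothetical helper)."""
    if long_short_mode and input_height > input_width:
        # Portrait input: Width is the long side, so it becomes the output height.
        return height, width
    # LongShortMode is false or empty, or the input is landscape.
    return width, height


# A portrait 1080x1920 input with Width=1280 and Height=720.
print(resolve_output_size(1280, 720, True, 1080, 1920))
print(resolve_output_size(1280, 720, False, 1080, 1920))
```

With LongShortMode enabled, the same template settings can serve both landscape and portrait inputs without stretching portrait videos into a landscape frame.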

Audio

This parameter is referenced by the Output.Audio parameter.

Parameter | Type | Required | Description |

Remove | String | No | Specifies whether to delete the audio stream. Valid values:

|

Codec | String | No | The audio codec format.

|

Profile | String | No | The audio encoding profile.

|

Bitrate | String | No | The audio bitrate of the output file.

|

Samplerate | String | No | The sampling rate.

|

Channels | String | No | The number of sound channels.

|

Volume | String | No | The volume configuration. For more information, see the Volume section of this topic.

|

Volume

This parameter is referenced by the Output.Audio.Volume parameter.

Parameter | Type | Required | Description |

Method | String | No | The method that you want to use to adjust the volume. Valid values:

|

Level | String | No | The level of volume adjustment performed based on the volume of the input audio.

|

IntegratedLoudnessTarget | String | No | The volume of the output video.

|

TruePeak | String | No | The maximum volume.

|

LoudnessRangeTarget | String | No | The magnitude of volume adjustment performed based on the volume of the output video.

|

WaterMarks

This parameter is referenced by the Output.WaterMarks parameter.

Parameter | Type | Required | Description |

Type | String | No | The type of the watermark. Valid values:

|

TextWaterMark | Object | No | The configuration of the text watermark. For more information, see the TextWaterMark section of this topic.

|

InputFile | Object | No | The file to be used as the image watermark. You can use the Bucket, Location, and Object parameters to specify the location of the file.

Note If you add an image watermark whose type is not HDR to an HDR video, the color of the image watermark may become inaccurate. |

WaterMarkTemplateId | String | No | The ID of the image watermark template. If you do not specify this parameter, the following default configurations are used for the parameters related to the image watermark:

|

ReferPos | String | No | The location of the image watermark.

|

Dx | String | No | The horizontal offset of the image watermark relative to the output video. If you specify this parameter, the corresponding parameter in the specified watermark template is overwritten. The following value types are supported:

|

Dy | String | No | The vertical offset of the image watermark relative to the output video. The following value types are supported:

|

Width | String | No | The width of the image watermark. The following value types are supported:

|

Height | String | No | The height of the image watermark. The following value types are supported:

|

Timeline | String | No | The display time of the image watermark. For more information, see the Timeline section of this topic. |

TextWaterMark

This parameter is referenced by the Output.WaterMarks.TextWaterMark parameter.

Parameter | Type | Required | Description |

Content | String | Yes | The text to be displayed as the watermark. The text must be encoded in the Base64 format.

Note: If the text contains special characters such as emojis or single quotation marks ('), the watermark may be truncated or may fail to be added. You must escape special characters before you add them. |

FontName | String | No | The font of the text watermark.

|

FontSize | Int | No | The font size of the text watermark.

|

FontColor | String | No | The color of the text watermark.

|

FontAlpha | Float | No | The transparency of the text watermark.

|

BorderWidth | Int | No | The outline width of the text watermark.

|

BorderColor | String | No | The outline color of the text watermark.

|

Top | Int | No | The top margin of the text watermark.

|

Left | Int | No | The left margin of the text watermark.

|
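Because the Content parameter must be Base64-encoded, the watermark text has to be converted before it is sent. The following Python sketch shows one way to do this. It assumes that the text is encoded as UTF-8 before Base64 encoding, which this section does not state.

```python
import base64


def encode_watermark_text(text):
    """Base64-encode watermark text (UTF-8 is an assumption)."""
    return base64.b64encode(text.encode("utf-8")).decode("ascii")


content = encode_watermark_text("test")
print(content)
```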

Timeline

This parameter is referenced by the Output.WaterMarks.Timeline parameter.

Parameter | Type | Required | Description |

Start | String | No | The beginning of the time range in which the image watermark is displayed.

|

Duration | String | No | The time range in which the image watermark is displayed.

|

Config

This parameter is referenced by the AddWaterMarkTemplate and UpdateWaterMarkTemplate operations.

Parameter | Type | Required | Description |

Type | String | No | The type of the watermark. Valid values:

|

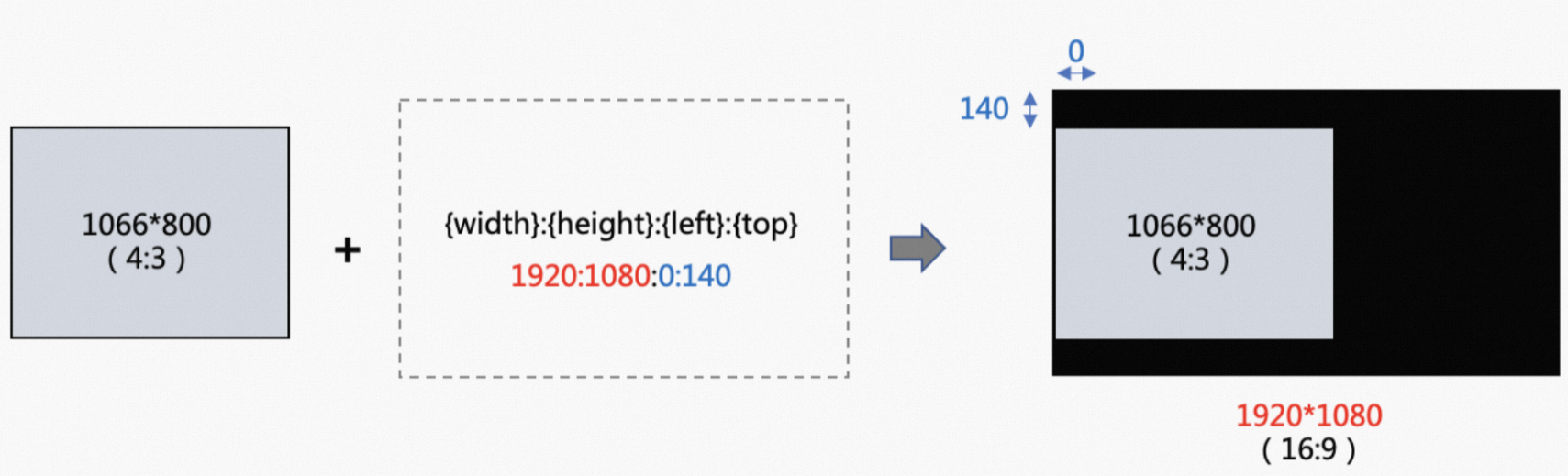

ReferPos | String | No | The location of the image watermark.

|

Dx | String | No | The horizontal offset of the image watermark relative to the output video. The following value types are supported:

|

Dy | String | No | The vertical offset of the image watermark relative to the output video. The following value types are supported:

|

Width | String | No | The width of the image watermark. The following value types are supported:

|

Height | String | No | The height of the image watermark. The following value types are supported:

|

Timeline | String | No | The timeline of the dynamic watermark. For more information, see the Timeline section of this topic. |

The following figure shows an example of how to use the ReferPos, Dx, and Dy parameters to specify the location of the image watermark.

Take note of the following items when you specify the Width and Height parameters:

If you specify neither the Width nor Height parameter, the watermark width is 0.12 times the width of the output video, and the watermark height is proportionally scaled based on the watermark width and the aspect ratio of the original image.

If you specify only the Width parameter, the watermark height is proportionally scaled based on the specified width and the aspect ratio of the original image. If you specify only the Height parameter, the watermark width is proportionally scaled based on the specified height and the aspect ratio of the original image.

If you specify both the Width and Height parameters, the watermark is displayed in the specified width and height.
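The sizing rules above can be expressed as a small calculation. The following Python sketch assumes that Width and Height are given in pixels and that results are rounded to whole pixels. The function and argument names are hypothetical.

```python
def watermark_size(video_width, image_width, image_height, width=None, height=None):
    """Return the displayed (width, height) of an image watermark in pixels."""
    aspect = image_width / image_height  # aspect ratio of the original image
    if width is None and height is None:
        # Default: 0.12 times the width of the output video.
        width = 0.12 * video_width
        height = width / aspect
    elif height is None:
        height = width / aspect
    elif width is None:
        width = height * aspect
    # If both are specified, they are used as given.
    return round(width), round(height)


print(watermark_size(1000, 200, 100))
print(watermark_size(1000, 200, 100, width=300))
```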

DeWatermark

This parameter is referenced by the Output.DeWatermark parameter.

    {
      // Blur two watermarks in the video image starting from the first frame. The first watermark is 10 × 10 pixels away from the upper-left corner of the video image and is 10 × 10 pixels in size. The second watermark is 100 pixels away from the left side of the video image and is 10 × 10 pixels in size. The distance between the top of the video image and the watermark is calculated by multiplying 0.1 by the height of the video image.
      "0": [
        {
          "l": 10,
          "t": 10,
          "w": 10,
          "h": 10
        },
        {
          "l": 100,
          "t": 0.1,
          "w": 10,
          "h": 10
        }
      ],
      // Stop blurring the logos at the 128,000th millisecond. In this case, the logos are blurred from the start of the video to the 128,000th millisecond.
      "128000": [],
      // Blur the watermark in the video image starting from the 250,000th millisecond. The watermark width is 0.01 times the width of the video image, and the watermark height is 0.05 times the height of the video image. The distance between the left side of the video image and the watermark is calculated by multiplying 0.2 by the width of the video image. The distance between the top of the video image and the watermark is calculated by multiplying 0.1 by the height of the video image.
      "250000": [
        {
          "l": 0.2,
          "t": 0.1,
          "w": 0.01,
          "h": 0.05
        }
      ]
    }

Parameters

pts: the point in time at which a frame is displayed. Unit: millisecond.

l: the left margin of the blurred area.

t: the top margin of the blurred area.

w: the width of the blurred area.

h: the height of the blurred area.

If the value of the l, t, w, or h parameter is greater than 1, the value specifies a number of pixels. Otherwise, the value specifies a ratio relative to the corresponding dimension of the video image: the width for l and w, and the height for t and h. The blurred area is determined by rounding the resulting values to the nearest integers.
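To make the pixel and ratio rule concrete, the following Python sketch converts one blurred area to pixel values for a given video resolution. The function name and the 1920 × 1080 resolution are assumptions for illustration. Python's built-in `round` is used as an approximation of rounding to the nearest integer.

```python
def blur_area_pixels(area, video_width, video_height):
    """Convert l, t, w, and h to pixels (hypothetical helper)."""
    # l and w are measured against the width, t and h against the height.
    reference = {"l": video_width, "t": video_height, "w": video_width, "h": video_height}
    return {
        key: round(value if value > 1 else value * reference[key])
        for key, value in area.items()
    }


# The second area of the "0" entry in the example above, on a 1920x1080 video.
print(blur_area_pixels({"l": 100, "t": 0.1, "w": 10, "h": 10}, 1920, 1080))
```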

SubtitleConfig

This parameter is referenced by the Output.SubtitleConfig parameter.

Parameter | Type | Required | Description |

ExtSubtitleList | Object[] | No | The external subtitles. For more information, see the ExtSubtitle section of this topic.

|

ExtSubtitle

This parameter is referenced by the Output.SubtitleConfig.ExtSubtitle parameter.

Parameter | Type | Required | Description |

Input | String | Yes | The external subtitle file. You can use the Bucket, Location, and Object parameters to specify the location of the file.

Note If the length of a subtitle file exceeds the length of a video, the video length prevails. If the characters of a subtitle are excessive and cannot be displayed in one line, the subtitle is truncated. |

CharEnc | String | No | The encoding format of the external subtitles.

Note If you set this parameter to auto, the detected character set may not be the actual character set. We recommend that you set this parameter to another value. |

FontName | String | No | The font of the subtitle.

|

FontSize | Int | No | The font size of the subtitle.

|

Clip

This parameter is referenced by the Output.Clip parameter.

Parameter | Type | Required | Description |

TimeSpan | String | No | The time span of cropping a clip from the input file. For more information, see the TimeSpan section of this topic.

|

ConfigToClipFirstPart | Boolean | No | Specifies whether to crop the first part of the file into a clip before clip merging. Valid values:

|

TimeSpan

This parameter is referenced by the Output.Clip.TimeSpan parameter.

Parameter | Type | Required | Description |

Seek | String | No | The start point in time of the clip. The default start point in time is the beginning of the video.

|

Duration | String | No | The length of the clip, relative to the point in time specified by the Seek parameter. By default, the clip lasts from the point in time specified by the Seek parameter to the end of the video. You can specify only one of the Duration and End parameters. If you specify the End parameter, the Duration parameter does not take effect.

|

End | String | No | The length of the ending part of the original video to be cropped out. You can specify only one of the Duration and End parameters. If you specify the End parameter, the Duration parameter does not take effect.

|
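The way Seek, Duration, and End combine can be sketched as follows. The Python function below computes the start and stop of the clip for a video of known length. It assumes that all values are expressed in seconds, which this section does not specify, and the function name is hypothetical.

```python
def clip_range(video_length, seek=0.0, duration=None, end=None):
    """Return (start, stop) of the clip in seconds (hypothetical helper)."""
    if end is not None:
        # End takes precedence: crop this much off the end of the video.
        stop = video_length - end
    elif duration is not None:
        stop = min(seek + duration, video_length)
    else:
        # Default: from Seek to the end of the video.
        stop = video_length
    return seek, stop


print(clip_range(100, seek=10, duration=20))
print(clip_range(100, seek=10, duration=20, end=5))
```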

MergeList

This parameter is referenced by the Output.MergeList parameter.

Parameter | Type | Required | Description |

MergeURL | String | Yes | The OSS path of the clip to be merged.

|

Start | String | No | The point in time at which the output clip is cut from the original clip. Specify this parameter if you want to merge only part of the video into the output file. The default start point in time is the beginning of the video.

|

Duration | String | No | The length of the mixed video. The length is relative to the start point in time specified by the Start parameter. Specify this parameter if you want to merge only part of the video into the output file. By default, the length is the period from the start point in time specified by the Start parameter to the end of the video.

|

OpeningList

This parameter is referenced by the Output.OpeningList parameter.

Parameter | Type | Required | Description |

OpenUrl | String | Yes | The OSS path of the opening part.

|

Start | String | No | The amount of time after the input video starts to play at which the opening part starts to play. The value starts from 0.

|

Width | String | No | The width of the output opening part. Valid values:

Note The output opening part is center-aligned based on the central point of the main part. The width of the opening part must be equal to or less than the width of the main part. Otherwise, the result is unknown. |

Height | String | No | The height of the output opening part. Valid values:

Note The output opening part is center-aligned based on the central point of the main part. The height of the opening part must be equal to or less than the height of the main part. Otherwise, the result is unknown. |

TailSlateList

This parameter is referenced by the Output.TailSlateList parameter.

Parameter | Type | Required | Description |

TailUrl | String | Yes | The OSS path of the ending part of the video.

|

BlendDuration | String | No | The amount of time between the end of the main part and the beginning of the ending part. During the transition, the last frame of the main part fades out, and the first frame of the ending part fades in.

|

Width | String | No | The width of the output ending part. Valid values:

Note The output ending part is center-aligned based on the central point of the main part. The width of the ending part must be equal to or less than the width of the main part. Otherwise, the result is unknown. |

Height | String | No | The height of the output ending part. Valid values:

Note The output ending part is center-aligned based on the central point of the main part. The height of the ending part must be equal to or less than the height of the main part. Otherwise, the result is unknown. |

IsMergeAudio | Boolean | No | Specifies whether to merge the audio content of the ending part. Valid values:

|

BgColor | String | No | The color of the margin if the width and height of the ending part are less than those of the main part.

|

Amix

This parameter is referenced by the Output.Amix parameter.

Parameter | Type | Required | Description |

AmixURL | String | Yes | The audio stream to be mixed. Valid values:

|

Map | String | No | The serial number of the audio stream in the input file. After you specify an audio stream by using the AmixURL parameter, you must use the Map parameter to specify an audio stream of the input file.

|

MixDurMode | String | No | The mode for determining the length of the output file after mixing. Valid values:

|

Start | String | No | The start point in time of the audio stream. Specify this parameter if you want to mix only part of the audio into the output file. The default start point in time is the beginning of the audio.

|

Duration | String | No | The length of the mixed audio. The length is relative to the start point in time specified by the Start parameter. Specify this parameter if you want to mix only part of the audio into the output file. By default, the length is the period from the start point in time specified by the Start parameter to the end of the audio.

|

MuxConfig

This parameter is referenced by the Output.MuxConfig parameter.

Parameter | Type | Required | Description |

Segment | String | No | The segment configuration. For more information, see the Segment section of this topic.

|

Segment

This parameter is referenced by the Output.MuxConfig.Segment parameter.

Parameter | Type | Required | Description |

Duration | Int | No | The segment duration.

|

ForceSegTime | String | No | The points in time at which the video is forcibly segmented. Separate points in time with commas (,). You can specify up to 10 points in time.

|
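A Segment object with forced segmentation points could be built as in the following Python sketch. The helper name and the sample values are hypothetical, and the units of Duration and ForceSegTime are not assumed here.

```python
def build_segment(duration=None, force_seg_times=()):
    """Assemble a Segment object as a dict (hypothetical helper)."""
    if len(force_seg_times) > 10:
        raise ValueError("ForceSegTime accepts up to 10 points in time")
    segment = {}
    if duration is not None:
        segment["Duration"] = int(duration)
    if force_seg_times:
        # Points in time are separated with commas (,).
        segment["ForceSegTime"] = ",".join(str(t) for t in force_seg_times)
    return segment


print(build_segment(duration=10, force_seg_times=[23, 55, 60]))
```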

M3U8NonStandardSupport

This parameter is referenced by the Output.M3U8NonStandardSupport parameter.

Parameter | Type | Required | Description |

TS | Object | No | The non-standard support for TS files. For more information, see the TS section of this topic. |

TS

This parameter is referenced by the Output.M3U8NonStandardSupport.TS parameter.

Parameter | Type | Required | Description |

Md5Support | Boolean | No | Specifies whether to include the MD5 value of each TS file in the output M3U8 video. |

SizeSupport | Boolean | No | Specifies whether to include the size of each TS file in the output M3U8 video. |

Encryption

This parameter is referenced by the Output.Encryption parameter.

Parameter | Type | Required | Description |

Type | String | Yes | The encryption method of the video. Valid values:

|

KeyType | String | Yes | The method in which the key is encrypted. Valid values:

|

Key | String | Yes | The ciphertext key that is used to encrypt the video. Specify this parameter based on the value of the KeyType parameter. Valid values:

Note: Alibaba Cloud provides the customer master key (CMK). To obtain the CMK, submit a ticket. |

KeyUri | String | Yes | The URL of the key. You must construct the URL.

|

SkipCnt | String | No | The number of clips that are not encrypted at the beginning of the video. This ensures a shorter loading time during startup.

|

Placeholder replacement rules

The following placeholders can be used in file paths.

For example, the path of the input file is a/b/example.flv and you want to set the path of the output file to a/b/c/example+test.mp4. In this case, you can use the {ObjectPrefix} and {FileName} placeholders to specify the path of the output file. After URL encoding, the path is %7BObjectPrefix%7D/c/%7BFileName%7D%2Btest.mp4.

Placeholder | Description | Transcoding output file (workflow) | Transcoding output file (transcoding job) | Input subtitle file | Output snapshot file (workflow) | Output snapshot file (snapshot job) |

{ObjectPrefix} | The prefix of the input file. | Supported | Supported | Supported | Supported | Supported |

{FileName} | The name of the input file. | Supported | Supported | Supported | Supported | Supported |

{ExtName} | The file name extension of the input file. | Supported | Supported | Supported | Supported | Supported |

{DestMd5} | The MD5 value of the output file. | Supported | Supported | Not supported | Not supported | Not supported |

{DestAvgBitrate} | The average bitrate of the output file. | Supported | Supported | Not supported | Not supported | Not supported |

{SnapshotTime} | The point in time of the snapshot. | Not supported | Not supported | Not supported | Supported | Supported |

{Count} | The serial number of a snapshot in multiple snapshots that are captured at a time. | Not supported | Not supported | Not supported | Supported | Supported |

{RunId} | The ID of the execution instance of the workflow. | Supported | Not supported | Not supported | Not supported | Not supported |

{MediaId} | The ID of the media file in the workflow. | Supported | Not supported | Not supported | Not supported | Not supported |
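The URL-encoded path in the example above can be reproduced with Python's standard library. In the following sketch, `encode_object_path` performs the URL encoding, and `preview_placeholders` is a purely local illustration of how {ObjectPrefix}, {FileName}, and {ExtName} would expand for a given input path. Both function names are hypothetical; the actual placeholder replacement is performed by MPS.

```python
import posixpath
from urllib.parse import quote


def encode_object_path(template):
    """URL-encode an output path template and keep slashes unescaped."""
    return quote(template, safe="/")


def preview_placeholders(template, input_object):
    """Locally preview how three placeholders expand (illustration only)."""
    prefix, name = posixpath.split(input_object)
    stem, ext = posixpath.splitext(name)
    values = {"ObjectPrefix": prefix, "FileName": stem, "ExtName": ext.lstrip(".")}
    for key, value in values.items():
        template = template.replace("{" + key + "}", value)
    return template


template = "{ObjectPrefix}/c/{FileName}+test.mp4"
print(encode_object_path(template))
print(preview_placeholders(template, "a/b/example.flv"))
```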

SnapshotConfig

This parameter is referenced by the SubmitSnapshotJob operation.

You can capture snapshots in synchronous or asynchronous mode. In asynchronous mode, the snapshot job is submitted to and scheduled in an MPS queue, so the job may be queued and the snapshot may not yet be generated when the response to the SubmitSnapshotJob operation is returned. After you submit a snapshot job, call the QuerySnapshotJobList operation to query the result of the snapshot job. Alternatively, you can configure Simple Message Queue (SMQ, formerly MNS) callbacks for the queue to obtain the results. For more information, see Notifications and monitoring. If you specify either the Interval or the Num parameter, the asynchronous mode is used by default.

Parameter | Type | Required | Description |

Num | String | No | The number of snapshots to be captured.

|

Time | String | No | The point in time at which the system starts to capture snapshots in the input video.

|

Interval | String | No | The interval at which snapshots are captured.

|

TimeArray | Array | No | The array of specific points in time. This parameter is required if you capture snapshots at specific points in time.

Important

|

FrameType | String | No | The snapshot type. Valid values:

Note: Only snapshots of the normal type can be captured at specific points in time. |

Width | String | No | The width of snapshots.

|

Height | String | No | The height of snapshots.

|

BlackLevel | String | No | The upper limit of black pixels in a snapshot. If the black pixels in a snapshot exceed this value, the system determines that the image is a black screen. For more information about black pixels, see the description of the PixelBlackThreshold parameter. This parameter takes effect if the following conditions are met:

Parameter description:

|

PixelBlackThreshold | String | No | The color value threshold for pixels. If the color value of a pixel is less than the threshold, the system determines that the pixel is a black pixel.

|

Format | String | No | The format of the output file.

|

SubOut | Object | No | The configurations of the WebVTT file. For more information, see the SubOut Webvtt section of this topic.

|

TileOut | Object | No | The image sprite configurations. For more information, see the TileOut section of this topic.

|

OutputFile | Object | Yes | The original snapshots. You must specify the storage path of the objects in OSS. For more information, see the OutputFile section of this topic.

|

TileOutputFile | Object | No | The output image sprite. You must specify the storage path of the object in OSS. The value of this parameter is similar to that of the OutputFile parameter.

Note

|

SubOut Webvtt

This parameter is referenced by the SnapshotConfig.SubOut parameter.

Parameter | Type | Required | Description |

IsSptFrag | String | No | Specifies whether to generate WebVTT index files. Valid values:

|

TileOut

This parameter is referenced by the SnapshotConfig.TileOut parameter.

Parameter | Type | Required | Description |

Lines | Int | No | The number of rows that the tiled snapshot contains.

|

Columns | Int | No | The number of columns that the tiled snapshot contains.

|

CellWidth | String | No | The width of a single snapshot before tiling.

|

CellHeight | String | No | The height of a single snapshot before tiling.

|

Padding | String | No | The distance between two snapshots.

|

Margin | String | No | The margin width of the tiled snapshot.

|

Color | String | No | The background color, which is used to fill the margins, the padding between snapshots, and any area in which no snapshot is displayed.

|

IsKeepCellPic | String | No | Specifies whether to store the original snapshots. Valid values:

|
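
The TileOut table above mixes types: Lines and Columns are of the Int type, and the other parameters are of the String type. The following sketch assembles a TileOut value that respects these types. The `build_tile_out` helper and all sample values are hypothetical. The valid ranges of each parameter are not restated here, so the sketch does not validate them.

```python
import json


def build_tile_out(lines: int, columns: int, **string_options) -> str:
    """Serialize a TileOut object with Int rows/columns and String options."""
    tile_out = {"Lines": int(lines), "Columns": int(columns)}
    # CellWidth, CellHeight, Padding, Margin, Color, and IsKeepCellPic
    # are documented as String, so convert every extra option to str.
    tile_out.update({name: str(value) for name, value in string_options.items()})
    return json.dumps(tile_out)


# Hypothetical values used only for illustration.
tile_out_json = build_tile_out(10, 10, CellWidth=100, CellHeight=100, Padding=2)
```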

OutputFile

Parameter | Type | Required | Description |

Bucket | String | Yes | The OSS bucket in which the original snapshots are stored.

|

Location | String | Yes | The region in which the OSS bucket resides.

|

Object | String | Yes | The path in which the output snapshots are stored in OSS.

Note

|
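
As a rough illustration of how the SnapshotConfig and OutputFile tables fit together, the following sketch builds a SnapshotConfig value and checks the three required OutputFile parameters. The `build_snapshot_config` helper, the bucket name, the region ID, the object path, and the Time value are all placeholders chosen for this example.

```python
import json

# OutputFile parameters that the table above marks as required.
REQUIRED_OUTPUT_FILE_KEYS = ("Bucket", "Location", "Object")


def build_snapshot_config(output_file: dict, **options: str) -> str:
    """Serialize a SnapshotConfig object after checking OutputFile."""
    missing = [key for key in REQUIRED_OUTPUT_FILE_KEYS if not output_file.get(key)]
    if missing:
        raise ValueError(f"OutputFile is missing required parameters: {missing}")
    config = {"OutputFile": dict(output_file)}
    config.update(options)  # Optional parameters such as Time, Num, or Format.
    return json.dumps(config)


# Placeholder values used only for illustration.
snapshot_config_json = build_snapshot_config(
    {"Bucket": "example-bucket", "Location": "oss-cn-hangzhou", "Object": "snapshots/example.jpg"},
    Time="5",
)
```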

NotifyConfig

This parameter is referenced by the AddPipeline and UpdatePipeline operations.

Parameter | Type | Required | Description |

QueueName | String | No | The SMQ queue in which you want to receive notifications. After the job is complete in the MPS queue, the job results are pushed to the SMQ queue. For more information about receiving notifications, see Receive notifications.

|

Topic | String | No | The SMQ topic in which you want to receive notifications. After the job is complete, the job results are pushed to the SMQ topic. Then, the SMQ topic pushes the message to multiple queues or URLs that subscribe to the topic. For more information about receiving notifications, see Receive notifications.

|

Parameters related to transcoding input files

Parameter | Type | Required | Description |

Bucket | String | Yes | The OSS bucket that stores the input file.

|

Location | String | Yes | The region in which the OSS bucket resides. For more information about the term region, see Terms. |

Object | String | Yes | The OSS object that is used as the input file.

|

Audio | String | No | The audio configuration of the input file. The value must be a JSON object. Note: This parameter is required if the input file is in the ADPCM or PCM format.

|

Container | String | No | The container configuration of the input file. The value must be a JSON object. Note: This parameter is required if the input file is in the ADPCM or PCM format.

|

InputContainer

Parameter | Type | Required | Description |

Format | String | Yes | The audio format of the input file. Valid values: alaw, f32be, f32le, f64be, f64le, mulaw, s16be, s16le, s24be, s24le, s32be, s32le, s8, u16be, u16le, u24be, u24le, u32be, u32le, and u8. |

InputAudio

Parameter | Type | Required | Description |

Channels | String | Yes | The number of sound channels in the input file. Valid values: [1,8]. |

Samplerate | String | Yes | The audio sampling rate of the input file.

|
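
For an input file in the ADPCM or PCM format, the Audio and Container parameters must both be present. The following sketch builds such an input value and validates Format and Channels against the values listed in the InputContainer and InputAudio tables. The `build_pcm_input` helper and the sample values are hypothetical. Nesting Audio and Container as JSON objects is an assumption, and Samplerate is not validated because its valid values are not restated here.

```python
import json

# Valid Format values listed in the InputContainer table.
VALID_INPUT_FORMATS = frozenset({
    "alaw", "f32be", "f32le", "f64be", "f64le", "mulaw",
    "s16be", "s16le", "s24be", "s24le", "s32be", "s32le", "s8",
    "u16be", "u16le", "u24be", "u24le", "u32be", "u32le", "u8",
})


def build_pcm_input(bucket, location, obj, audio_format, channels, samplerate):
    """Serialize an input object that carries Audio and Container settings."""
    if audio_format not in VALID_INPUT_FORMATS:
        raise ValueError(f"Unsupported Format: {audio_format}")
    if not 1 <= channels <= 8:
        raise ValueError("Channels must be in the range [1,8]")
    return json.dumps({
        "Bucket": bucket,
        "Location": location,
        "Object": obj,
        # Channels and Samplerate are documented as String.
        "Audio": {"Channels": str(channels), "Samplerate": str(samplerate)},
        "Container": {"Format": audio_format},
    })


# Placeholder values used only for illustration.
pcm_input_json = build_pcm_input(
    "example-bucket", "oss-cn-hangzhou", "audio/example.pcm", "s16le", 2, 44100
)
```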

AnalysisConfig

Parameter | Type | Required | Description |

QualityControl | String | No | The configuration of the output file quality. The value must be a JSON object. For more information, see the QualityControl section of this topic. |

PropertiesControl | String | No | The property configuration. The value must be a JSON object. For more information, see the PropertiesControl section of this topic. |

QualityControl

Parameter | Type | Required | Description |

RateQuality | String | No | The quality level of the output file.

|

MethodStreaming | String | No | The playback mode. Valid values: network and local. Default value: network. |
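
The QualityControl table above can be expressed as a small builder that applies the documented default for MethodStreaming. This is a sketch under stated assumptions: the `build_quality_control` helper is hypothetical, and RateQuality is passed through without validation because its valid values are not restated here.

```python
import json
from typing import Optional

# Valid values of MethodStreaming listed in the table above.
VALID_METHOD_STREAMING = ("network", "local")


def build_quality_control(
    method_streaming: str = "network", rate_quality: Optional[str] = None
) -> str:
    """Serialize a QualityControl object; MethodStreaming defaults to network."""
    if method_streaming not in VALID_METHOD_STREAMING:
        raise ValueError(f"Unsupported MethodStreaming: {method_streaming}")
    quality_control = {"MethodStreaming": method_streaming}
    if rate_quality is not None:
        quality_control["RateQuality"] = rate_quality
    return json.dumps(quality_control)


default_quality_control = build_quality_control()
```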

PropertiesControl

Parameter | Type | Required | Description |

Deinterlace | String | No | Specifies whether to force deinterlacing. Valid values:

|

Crop | String | No | The cropping configuration of the video image.

|

Crop

Parameter | Type | Required | Description |

Mode | String | No | This parameter is required if the value of the Crop parameter is not an empty JSON object. Valid values:

|

Width | Integer | No | The width of the video image after the margins are cropped out.

|

Height | Integer | No | The height of the video image after the margins are cropped out.

|

Top | Integer | No | The top margin to be cropped out.

|

Left | Integer | No | The left margin to be cropped out.

|

TransFeatures

Parameter | Type | Required | Description |

MergeList | String | No | The URLs of the clips to be merged.

|

Parameters related to the output in the SubmitJobs operation

Parameter | Type | Required | Description |

URL | String | No | The OSS path of the output file.

|

Bucket | String | No |

|

Location | String | No |

|

Object | String | No |

|

MultiBitrateVideoStream

Parameter | Type | Required | Description |

URI | String | No | The name of the output video stream, which must end with .m3u8. Example: a/b/test.m3u8. Format: ^[a-z]{1}[a-z0-9./-]+$. |

RefActivityName | String | Yes | The name of the associated activity. |

ExtXStreamInfo | Json | Yes | The information about the stream. Example: |

ExtXMedia

Parameter | Type | Required | Description |

Name | String | Yes | The name of the resource. The name can be up to 64 bytes in length and must be encoded in UTF-8. This parameter corresponds to NAME in the HTTP Live Streaming (HLS) V5 protocol. |

Language | String | No | The language of the resource, which must comply with RFC 5646. This parameter corresponds to LANGUAGE in the HLS V5 protocol. |

URI | String | Yes | The path of the resource. Format: ^[a-z]{1}[a-z0-9./-]+$. Example: a/b/c/d/audio-1.m3u8. |
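
The URI format used in the tables above, ^[a-z]{1}[a-z0-9./-]+$, can be checked on the client before a request is submitted. The following sketch uses the pattern exactly as documented. The `is_valid_uri` helper and its optional suffix check are hypothetical conveniences.

```python
import re

# Pattern copied from the Format description of the URI parameter.
URI_PATTERN = re.compile(r"^[a-z]{1}[a-z0-9./-]+$")


def is_valid_uri(uri: str, required_suffix: str = "") -> bool:
    """Check a URI against the documented format and an optional suffix."""
    # fullmatch rejects a trailing newline, which "$" alone would tolerate.
    return URI_PATTERN.fullmatch(uri) is not None and uri.endswith(required_suffix)


# Examples taken from the tables above.
stream_ok = is_valid_uri("a/b/test.m3u8", ".m3u8")
media_ok = is_valid_uri("a/b/c/d/audio-1.m3u8")
```

A URI that starts with an uppercase letter or a digit, such as A/b/test.m3u8, fails the check because the pattern requires a lowercase first character.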

MasterPlayList

Parameter | Type | Required | Description |

MultiBitrateVideoStreams | JsonArray | Yes | The array of multiple streams. Example: |

ExtXStreamInfo

Parameter | Type | Required | Description |

BandWidth | String | Yes | The bandwidth. This parameter specifies the upper limit of the total bitrate and corresponds to BANDWIDTH in the HLS V5 protocol. |

Audio | String | No | The ID of the audio stream group. This parameter corresponds to AUDIO in the HLS V5 protocol. |

Subtitles | String | No | The ID of the subtitle stream group. This parameter corresponds to SUBTITLES in the HLS V5 protocol. |
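
To show how ExtXStreamInfo nests inside a MultiBitrateVideoStream entry, the following sketch builds one entry from the parameters in the two tables. The `build_multi_bitrate_stream` helper, the activity name, the bandwidth, and the group IDs are hypothetical values chosen for illustration.

```python
from typing import Optional


def build_multi_bitrate_stream(
    uri: str,
    ref_activity_name: str,
    bandwidth: str,
    audio: Optional[str] = None,
    subtitles: Optional[str] = None,
) -> dict:
    """Build one MultiBitrateVideoStream entry with its ExtXStreamInfo."""
    if not uri.endswith(".m3u8"):
        raise ValueError("URI must end with .m3u8")
    stream_info = {"BandWidth": bandwidth}  # Required; documented as String.
    if audio is not None:
        stream_info["Audio"] = audio
    if subtitles is not None:
        stream_info["Subtitles"] = subtitles
    return {
        "URI": uri,
        "RefActivityName": ref_activity_name,
        "ExtXStreamInfo": stream_info,
    }


# Hypothetical values used only for illustration.
stream = build_multi_bitrate_stream(
    "a/b/test.m3u8", "video-activity", "1110000", audio="auds"
)
```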

AdaptationSet

Parameter | Type | Required | Description |

Group | String | Yes | The name of the group. Example: |

Lang | String | No | The language of the resource. You can specify this parameter for audio and subtitle resources. |

Representation

Parameter | Type | Required | Description |

Id | String | Yes | The ID of the stream. Example: |

URI | String | Yes | The path of the resource. Format: ^[a-z]{1}[a-z0-9./-]+$. Example: a/b/c/d/video-1.mpd. |

InputConfig

Parameter | Type | Required | Description |

Format | String | Yes | The format of the input subtitle file. Valid values: stl, ttml, and vtt. |

InputFile | String | Yes | |