This topic describes how to integrate Real-time Conversational AI audio and video agents on iOS.

Source Code Description

Source Code Download

For download links, see the GitHub open source project.

Source Code Structure

├── iOS // Root directory for the iOS platform

│ ├── AUIAICall.podspec // Pod description file

│ ├── Source // Source code files

│ ├── Resources // Resource files

│ ├── Example // Demo code

│ ├── AUIBaseKits // Basic UI components

│ ├── README.md // Readme

Environment Requirements

Xcode 16.0 or later. We recommend using the latest official version.

CocoaPods 1.9.3 or later.

A physical iOS device running iOS 11.0 or later.

Prerequisites

Implement the required API operations on your server, or deploy the server source code provided by Alibaba Cloud. For more information, see Deploy a project.

Run the Demo

After downloading the source code, go to the `Example` directory.

In the `Example` directory, run `pod install --repo-update` to automatically install the dependent SDKs.

Open the `AUIAICallExample.xcworkspace` project file and modify the bundle ID.

Open the `AUIAICallAgentConfig.swift` file and configure the agent IDs and region.

```swift
// AUIAICallAgentConfig.swift
// Configure agent IDs
let VoiceAgentId = "Your voice call agent ID"
let AvatarAgentId = "Your digital human call agent ID"
let VisionAgentId = "Your visual understanding call agent ID"
let ChatAgentId = "Your message-based chat agent ID"
// Configure region
let Region = "cn-shanghai"
```

Set `Region` to the ID of the region where your agents are located:

| Region name | Region ID |
| --- | --- |
| China (Hangzhou) | cn-hangzhou |
| China (Shanghai) | cn-shanghai |
| China (Beijing) | cn-beijing |
| China (Shenzhen) | cn-shenzhen |
| Singapore | ap-southeast-1 |

After configuring the agent, you can start it in one of the following ways:

AppServer deployed: If you have deployed the AppServer source code provided by Alibaba Cloud on your server, open the `AUIAICallAppServer.swift` file and change the server domain name.

```swift
// AUIAICallAppServer.swift
public let AICallServerDomain = "Your application server domain name"
```

AppServer not deployed: If you have not deployed the AppServer source code and want to quickly run the demo and try the agent, open the `AUIAICallAuthTokenHelper.swift` file, set the `EnableDevelopToken` parameter, copy the App ID and App Key of the ARTC application used by the agent from the console, and generate the authentication token on the app side.

Note: This method embeds your AppKey and other sensitive information in your application. Use it only for testing and development, and never in a production environment. Exposing your AppKey on the client side creates a serious security risk.

```swift
// AUIAICallAuthTokenHelper.swift
@objcMembers public class AUIAICallAuthTokenHelper: NSObject {
    // Set to true to enable Develop mode
    private static let EnableDevelopToken: Bool = true
    // Copy the RTCAppId from the console
    private static let RTCDevelopAppId: String = "The AppId of the ARTC instance used by the agent"
    // Copy the RTCAppKey from the console
    private static let RTCDevelopAppKey: String = "The AppKey of the ARTC instance used by the agent"
    // ...
}
```

To obtain the AppID and AppKey of the ARTC application:

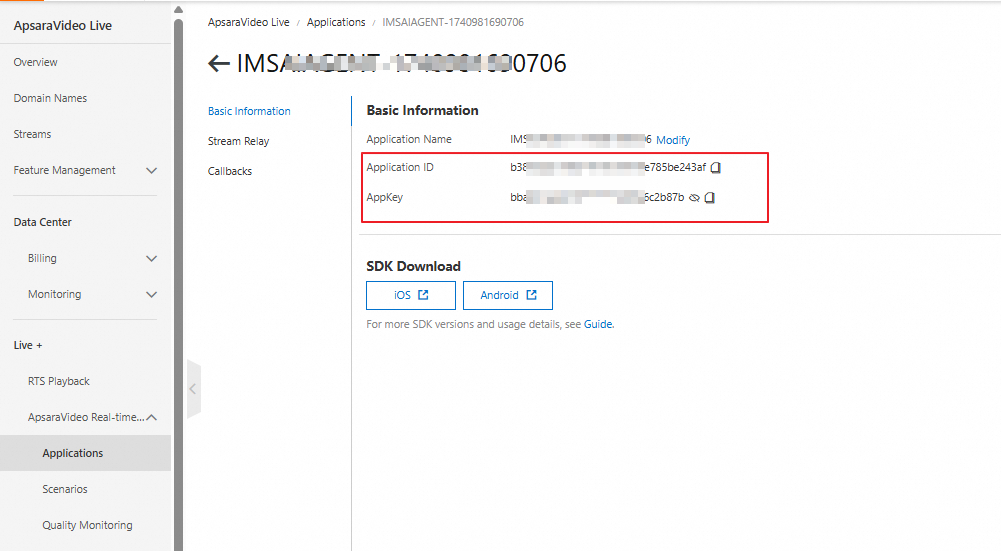

Go to the AI Agents page in the IMS console. Click an agent to go to the agent details page.

Click the ARTC application ID. You are redirected to the ApsaraVideo Live console, which displays the AppID and AppKey.

Select the `Example` target, then build and run the project.
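Because the app-side token method is for development only, one way to keep it out of production builds is to compile the switch and the ARTC credentials into Debug builds only. The following is a minimal sketch, assuming your Debug configuration defines the `DEBUG` compilation condition (Xcode typically sets this for new projects). `DevelopTokenConfig` is a hypothetical name, not part of AUIAICall; in practice you would apply the same `#if DEBUG` pattern to the properties in `AUIAICallAuthTokenHelper`.

```swift
// Hypothetical sketch, not part of AUIAICall: the development token switch
// and the ARTC credentials exist only in Debug builds, so Release binaries
// never contain the AppKey.
enum DevelopTokenConfig {
    #if DEBUG
    // Debug builds: allow app-side token generation for quick testing.
    static let enableDevelopToken = true
    static let rtcDevelopAppId = "The AppId of the ARTC instance used by the agent"
    static let rtcDevelopAppKey = "The AppKey of the ARTC instance used by the agent"
    #else
    // Release builds: tokens must come from your AppServer.
    static let enableDevelopToken = false
    static let rtcDevelopAppId = ""
    static let rtcDevelopAppKey = ""
    #endif
}
```

With this layout a Release build fails closed: any code that reads `enableDevelopToken` gets `false`, and the credential strings are empty.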

Quickly Develop Your Own AI Call Feature

Follow these steps to integrate AUIAICall into your app and enable audio and video calls with agents.

Integrate Source Code

Import AUIAICall: After downloading the repository code, copy the `iOS` folder to your app's code directory and rename it to `AUIAICall`. Make sure it is at the same level as your `Podfile`. You can delete the `Example` and `AICallKit` directories.

Modify the Podfile to import:

AliVCSDK_ARTC: An audio and video client SDK for real-time interaction. You can also use AliVCSDK_Standard or AliVCSDK_InteractiveLive. For specific integration methods, see iOS Client.

ARTCAICallKit: An SDK for Real-time Conversational AI call scenarios.

AUIFoundation: Basic UI components.

AUIAICall: UI component source code for AI call scenarios.

```ruby
# Requires iOS 11.0 or later
platform :ios, '11.0'

target 'Your App target' do
  # Integrate the appropriate audio and video client SDK based on your business scenario
  pod 'AliVCSDK_ARTC', '~> x.x.x'
  # Real-time Conversational AI call scenario SDK
  pod 'ARTCAICallKit', '~> x.x.x'
  # Basic UI component source code
  pod 'AUIFoundation', :path => "./AUIAICall/AUIBaseKits/AUIFoundation/", :modular_headers => true
  # UI component source code for AI call scenarios
  pod 'AUIAICall', :path => "./AUIAICall/"
end
```

Note: The latest ARTC SDK version is 7.10.0, and the latest AICallKit SDK version is 2.11.0.

Run `pod install --repo-update`. Source code integration is complete.

Project Configuration

Add the required permissions: open the project's `Info.plist` file and add the microphone permission (`NSMicrophoneUsageDescription`), camera permission (`NSCameraUsageDescription`), and photo library permission (`NSPhotoLibraryUsageDescription`).

Open Project Settings and enable Background Modes on the Signing & Capabilities tab. If you do not enable background modes, you must end calls yourself when the app enters the background.
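If you do not enable background modes, you need to end the call yourself when the app moves to the background. The following minimal sketch shows one way to do that with `NotificationCenter`. `BackgroundCallTerminator` is a hypothetical helper, not part of AUIAICall, and the `endCall` closure is where you would call whatever hang-up method your call controller exposes. It uses only Foundation so the logic can be tested anywhere; on iOS you would pass `UIApplication.didEnterBackgroundNotification`.

```swift
import Foundation

/// Hypothetical helper, not part of AUIAICall: runs `endCall` once, the first
/// time `notification` is posted, then stops observing.
final class BackgroundCallTerminator {
    private let center: NotificationCenter
    private var token: NSObjectProtocol?

    init(center: NotificationCenter = .default,
         notification: Notification.Name,
         endCall: @escaping () -> Void) {
        self.center = center
        // queue: nil runs the block synchronously on the posting thread.
        token = center.addObserver(forName: notification, object: nil, queue: nil) { [weak self] _ in
            endCall()
            self?.stop() // Remove the observer so endCall runs at most once.
        }
    }

    /// Removes the observer. Call this when the call ends normally.
    func stop() {
        if let token = token {
            center.removeObserver(token)
            self.token = nil
        }
    }

    deinit {
        stop()
    }
}
```

For example, when the call starts, create `BackgroundCallTerminator(notification: UIApplication.didEnterBackgroundNotification) { /* end the call */ }`, keep a strong reference to it for the duration of the call, and call `stop()` when the call ends normally.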

Source Code Configuration

Make sure that you have completed the steps in Prerequisites.

Open the `AUIAICallAppServer.swift` file and change the server domain name.

```swift
// AUIAICallAppServer.swift
public let AICallServerDomain = "Your application server domain name"
```

Note: If you have not deployed AppServer, you can generate the authentication token on the app side to quickly test and run the demo. For details, see AppServer not deployed.
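A misconfigured domain usually surfaces only as failed requests at call time. The following minimal sketch fails fast instead. It assumes `AICallServerDomain` holds a full HTTPS URL such as `https://example.com`; that format is an assumption, so check it against your AppServer deployment. `isValidServerDomain` is a hypothetical helper, not part of AUIAICall. It requires HTTPS because App Transport Security blocks plain HTTP on iOS by default.

```swift
import Foundation

// Hypothetical helper, not part of AUIAICall.
// Returns true only when `domain` parses as an absolute HTTPS URL with a
// non-empty host, which also rejects the unedited placeholder string.
func isValidServerDomain(_ domain: String) -> Bool {
    guard let url = URL(string: domain),
          url.scheme?.lowercased() == "https",
          let host = url.host, !host.isEmpty else {
        return false
    }
    return true
}
```

For example, call `assert(isValidServerDomain(AICallServerDomain))` early in app launch during development.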

Call API

After you complete the preceding steps, start AI calls by calling the component interfaces from your app's homepage or other modules, based on your business scenarios and interactions. You can also modify the source code as needed.

```swift
// Import components (place these imports at the top of the file)
import AUIAICall
import ARTCAICallKit
import AUIFoundation

// Run the following inside a UIViewController, for example in a button action.
// Check whether microphone permission is granted
AVDeviceAuth.checkMicAuth { auth in
    guard auth else {
        return
    }
    // We recommend using your app's user ID after logon as userId
    let userId = "xxx"
    // Build the controller using userId
    let controller = AUIAICallController(userId: userId)
    // Set the agent ID. It cannot be nil.
    controller.config.agentId = "xxx"
    // Set the call type (voice, digital human, or visual understanding). It must match the agent ID type.
    // agentType is defined by your app, for example based on the call the user selected.
    controller.config.agentType = agentType
    // The region where the agent is located, for example "cn-shanghai". It cannot be nil.
    controller.config.region = "xx-xxx"
    // Create the call view controller
    let vc = AUIAICallViewController(controller)
    // Present the call interface in full screen
    vc.modalPresentationStyle = .fullScreen
    vc.modalTransitionStyle = .coverVertical
    vc.modalPresentationCapturesStatusBarAppearance = true
    self.present(vc, animated: true)
}
```
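Because `agentType` must match the type of the agent that `agentId` refers to, it can help to derive both values from a single app-side choice. The following minimal sketch assumes the agent ID constants from `AUIAICallAgentConfig.swift`, repeated here as placeholders so the block is self-contained. `CallKind` is a hypothetical enum, not part of AUIAICall; you would still map each case to the corresponding ARTCAICallKit agent type value when setting `controller.config.agentType`.

```swift
// Placeholders mirroring AUIAICallAgentConfig.swift.
let VoiceAgentId = "Your voice call agent ID"
let AvatarAgentId = "Your digital human call agent ID"
let VisionAgentId = "Your visual understanding call agent ID"

/// Hypothetical app-side enum: one case per call type, so the agent ID and
/// the agent type are always chosen together.
enum CallKind: CaseIterable {
    case voice   // voice call
    case avatar  // digital human call
    case vision  // visual understanding call

    /// The agent ID to assign to controller.config.agentId for this call type.
    var agentId: String {
        switch self {
        case .voice: return VoiceAgentId
        case .avatar: return AvatarAgentId
        case .vision: return VisionAgentId
        }
    }
}
```

For example, a button for visual understanding calls would pass `CallKind.vision`, and the code that builds the controller would set `controller.config.agentId = kind.agentId` together with the matching agent type.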