You can write data from Simple Log Service to Hologres in multiple ways. This topic describes how to synchronize data from Simple Log Service to Hologres in real-time by using Realtime Compute for Apache Flink or DataWorks.

Prerequisites

Log Service is activated, and a project and a Logstore are created. For more information, see Getting Started.

A Hologres instance is purchased and is connected to a development tool. For more information, see Procedure to use Hologres.

Realtime Compute for Apache Flink is activated, and a project is created if you want to write data from Simple Log Service to Hologres by using Realtime Compute for Apache Flink. For more information, see Activate fully managed Flink and Create and manage a namespace.

DataWorks is activated, and a workspace is created if you want to write data from Log Service to Hologres by using DataWorks. For more information, see Activate DataWorks and Create a workspace.

Background information

Simple Log Service is a cloud-native observability and analytics platform that provides large-scale, low-cost, and real-time services to process multiple types of data such as logs, metrics, and traces. Simple Log Service allows you to collect, process, query, analyze, visualize, consume, and deliver data. You can configure alerts in the Simple Log Service console. Simple Log Service helps enterprises improve their digital capabilities in terms of R&D, O&M, and data security.

Hologres is designed to provide a real-time compute engine that features high performance, high reliability, cost efficiency, and high scalability. Hologres provides real-time data warehouse solutions that help manage large amounts of data and interactive query services that can respond in sub-seconds. Hologres is widely used in scenarios such as the construction of real-time data mid-ends, fine-grained analysis, self-service analysis, marketing profile setup, audience grouping, and real-time risk control. You can write data from Simple Log Service to Hologres in a quick manner for real-time analysis and query. This helps exploit data for your business.

Write data from Simple Log Service to Hologres by using Realtime Compute for Apache Flink

Prepare data in Simple Log Service.

Simulated data provided by Simple Log Service is used as source data in this example. The data is generated by simulating gaming platform logons and consumption logs. You can also use your business data.

Log on to the Simple Log Service console.

In the Import Data section, click the Simulated Data Import tab.

On the Simulated Data Import tab, click Simulation in the Game Operation Logs section.

In the Specify Logstore step, select the project and Logstore, and click Next.

In the Simulated Data Import step, configure the parameters related to the time range and frequency, and click Import.

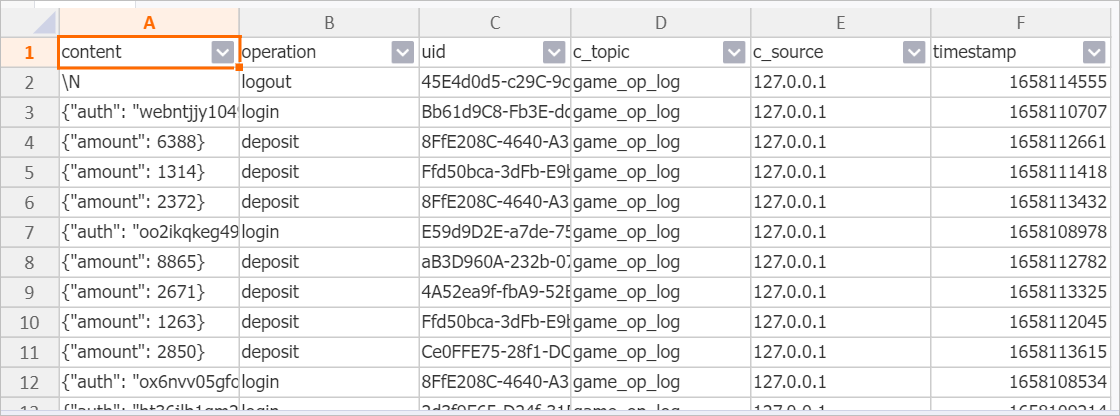

The following figure shows the simulation fields and data that you can query. For more information, see Query and analyze logs.

The

contentfield is of the JSON data type.

Create a table in Hologres.

Create a table that is used to receive data in Hologres. You can create indexes for fields in the table based on your query requirements. This helps improve query efficiency. For more information about indexes, see Overview. The following DDL statement is used to create the table:

CREATE TABLE sls_flink_holo ( content JSONB , operation TEXT, uid TEXT, topic TEXT , source TEXT , c__timestamp TIMESTAMPTZ, receive_time BIGINT, PRIMARY KEY (uid) );Use Realtime Compute for Apache Flink to write data to Hologres.

Perform the following steps to write data from Simple Log Service to Hologres by using Realtime Compute for Apache Flink:

Read data from Simple Log Service by using Realtime Compute for Apache Flink. For more information, see Create a Simple Log Service source table.

Write data to Hologres by using Realtime Compute for Apache Flink. For more information, see Create a Hologres result table.

The following sample SQL job provides an example on how to write data from Simple Log Service to Hologres by using Realtime Compute for Apache Flink. JSON-formatted fields are directly written as the JSON data type in the Hologres table. The JSON data type corresponds to the VARCHAR type in Realtime Compute for Apache Flink.

NoteFor more information about how to create and run SQL jobs in Realtime Compute for Apache Flink, see Develop an SQL draft and Start a deployment.

If data that is read from Simple Log Service contains JSON-formatted data, you can parse such data before you write the data to Realtime Compute for Apache Flink. You can also directly write the JSON-formatted data from Simple Log Service to Hologres.

CREATE TEMPORARY TABLE sls_input ( content STRING, operation STRING, uid STRING, `__topic__` STRING METADATA VIRTUAL, `__source__` STRING METADATA VIRTUAL, `__timestamp__` BIGINT METADATA VIRTUAL, `__tag__` MAP<VARCHAR, VARCHAR> METADATA VIRTUAL ) WITH ( 'connector' = 'sls', 'endpoint' = 'Internal endpoint of the Simple Log Service project',-- The internal endpoint that is used to access the Simple Log Service project. 'accessid'='AccessKey ID of your Alibaba Cloud account',-- The AccessKey ID of your Alibaba Cloud account. 'accesskey'='AccessKey secret of your Alibaba Cloud account',-- The AccessKey secret of your Alibaba Cloud account. 'starttime' = '2024-08-30 00:00:00',-- The time at which logs start to be consumed. 'project'='Project name',-- The name of the Simple Log Service project. 'logstore'='Logstore name'-- The name of the Logstore. ); CREATE TEMPORARY TABLE hologres_sink ( content VARCHAR, operation VARCHAR, uid VARCHAR, topic STRING , source STRING , c__timestamp TIMESTAMP , receive_time BIGINT ) WITH ( 'connector' = 'hologres', 'dbname'='Name of the Hologres database',-- The name of the Hologres database. 'tablename'='Name of the Hologres table',-- The name of the Hologres table to which you write data. 'username'='AccessKey ID of your Alibaba Cloud account',-- The AccessKey ID of your Alibaba Cloud account. 'password'='AccessKey secret of your Alibaba Cloud account',-- The AccessKey secret of your Alibaba Cloud account 'endpoint'='VPC endpoint of your Hologres instance.'-- The VPC endpoint of your Hologres instance. ); INSERT INTO hologres_sink SELECT content, operation, uid, `__topic__` , `__source__` , CAST ( FROM_UNIXTIME (`__timestamp__`) AS TIMESTAMP ), CAST (__tag__['__receive_time__'] AS BIGINT) AS receive_time FROM sls_input;Query data in Hologres.

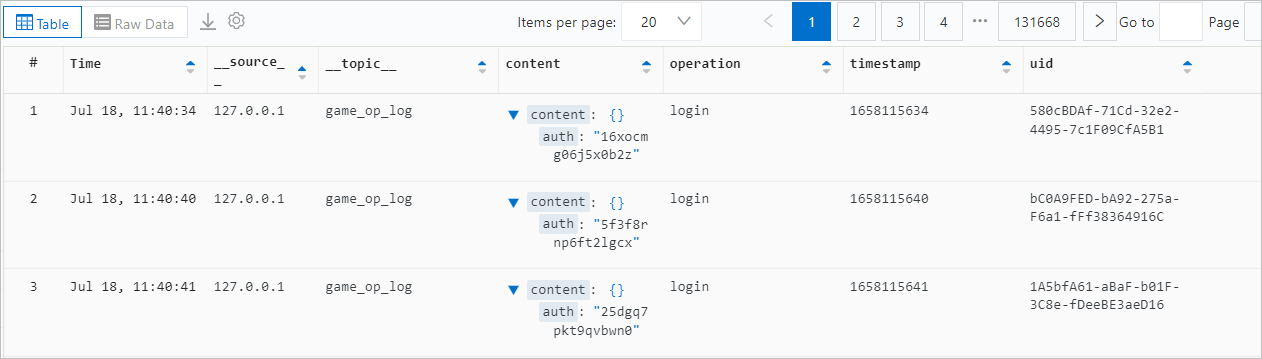

You can query the data that is written from Simple Log Service to Hologres by using Realtime Compute for Apache Flink. You can also develop the data based on your business requirements.

Write data to Hologres by using DataWorks

Prepare data in Simple Log Service.

Simulated data provided by Simple Log Service is used as source data in this example. The data is generated by simulating gaming platform logons and consumption logs. You can also use your business data.

Log on to the Simple Log Service console.

In the Import Data section, click the Simulated Data Import tab.

On the Simulated Data Import tab, click Simulation in the Game Operation Logs section.

In the Specify Logstore step, select the project and Logstore, and click Next.

In the Simulated Data Import step, configure the parameters related to the time range and frequency, and click Import.

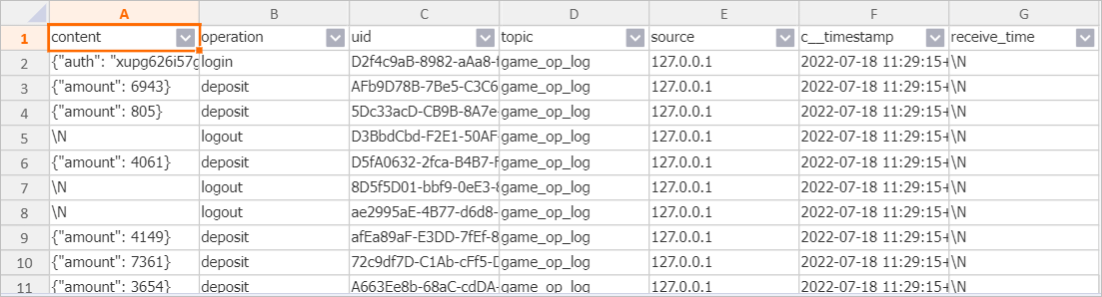

The following figure shows the simulation fields and data that you can query. For more information, see Query and analyze logs.

The

contentfield is of the JSON data type.

Create a table in Hologres.

Create a table that is used to receive data in Hologres. You can create indexes for fields in the table based on your query requirements. This helps improve query efficiency. For more information about indexes, see Overview. The following DDL statement is used to create the table:

NoteIn this example, the

uidfield is configured as the primary key that is used to uniquely identify data. You can configure the primary key based on your business requirements.The

uidfield is also configured as the distribution key. This way, data records that have the same value of theuidfield are written to the same shard. This helps improve query performance.

BEGIN; CREATE TABLE sls_dw_holo ( content JSONB , operation TEXT, uid TEXT, C_Topic TEXT , C_Source TEXT , timestamp BIGINT, PRIMARY KEY (uid) ); CALL set_table_property('sls_dw_holo', 'distribution_key', 'uid'); CALL set_table_property('sls_dw_holo', 'event_time_column', 'timestamp'); COMMIT;Configure the data source.

Before you write data to Hologres by using the Data Integration service of DataWorks, you must add the Simple Log Service data source and Hologres data source in the specified DataWorks workspace.

Configure the Log Service LogHub data source. For more information, see Add a LogHub (SLS) data source.

Configure the Hologres data source. For more information, see Add a Hologres data source.

Synchronize data from Simple Log Service to Hologres in real time.

Create a real-time synchronization node and run the node in Data Integration. For more information, see Create a real-time synchronization node to synchronize incremental data from a single table and O&M for real-time synchronization nodes.

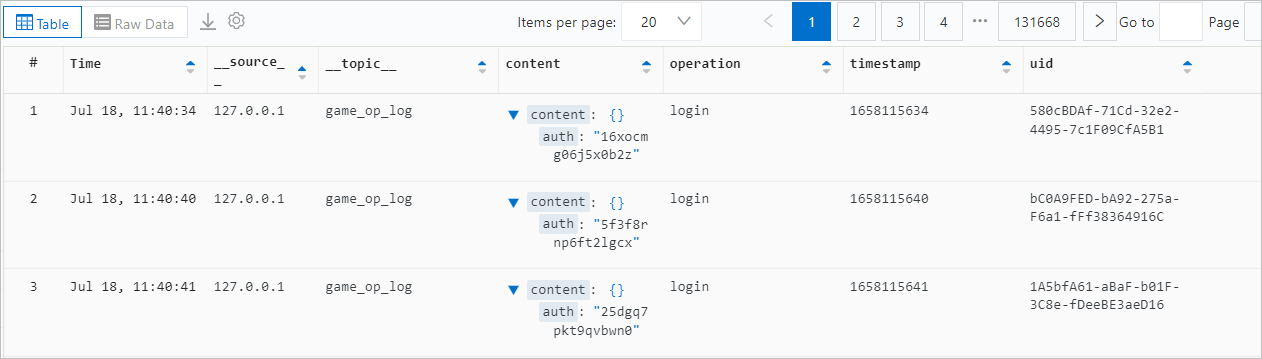

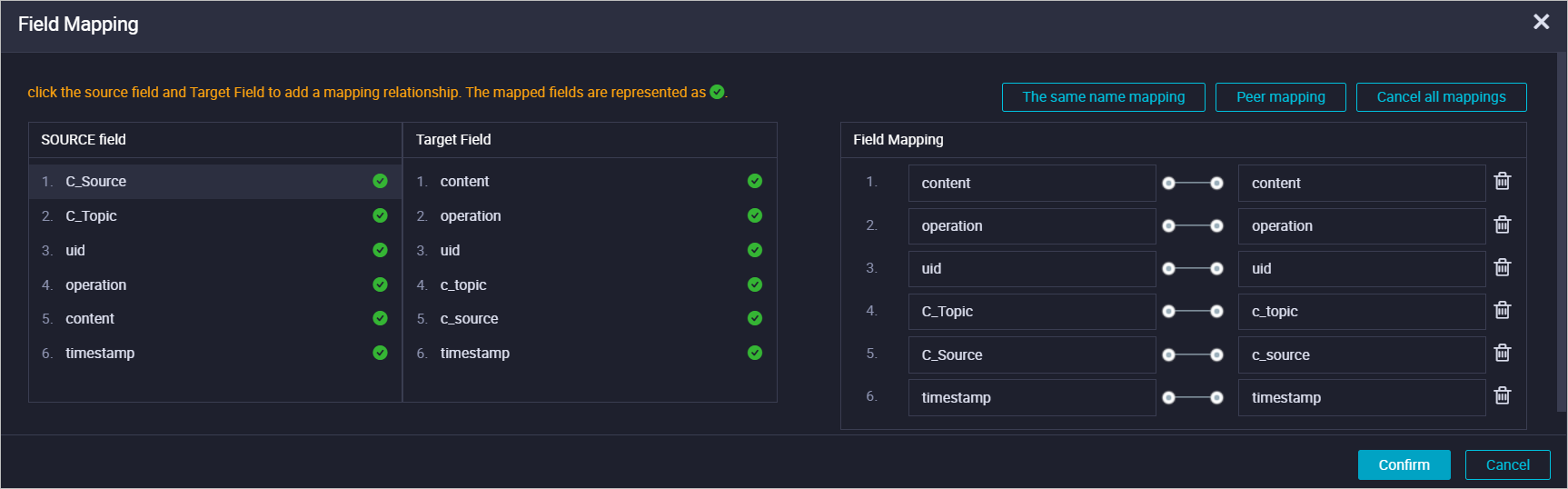

In this example, Simple Log Service LogHub is configured as the source data source and Hologres is configured as the destination data source for the real-time synchronization node. Field mappings are configured to synchronize data from the source to the destination. The following figure shows the example of field mappings.

Query data in Hologres.

After the real-time synchronization node is run, you can query the data that is written to Hologres by using the Data Integration service of DataWorks in Hologres.