This topic demonstrates a best practice for GPU-accelerated instances: using code in the Function Compute console to transcode a video from .mp4 to .flv. Python is used as the example language.

Scenarios and benefits

With the emergence of highly interactive scenarios such as social live streaming, online classrooms, and telemedicine, real-time and quasi-real-time Internet traffic is becoming mainstream. In most cases, a video platform must transcode each source video into multiple distribution formats (1:N) based on factors such as bitrate, resolution, channel patch, and playback platform. This lets the platform serve viewers on different playback platforms and with different network qualities. Video transcoding is therefore a key step in video production and distribution. An ideal video transcoding solution must be efficient in terms of both cost (RMB per stream) and power consumption (watts per stream).

This section describes the benefits of GPU-accelerated instances over instances without GPU acceleration in Function Compute:

Real-time and quasi-real-time application scenarios

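GPU-accelerated instances transcode videos many times faster than instances without GPU acceleration. In the 1:1 performance test in this topic, 1080p output is about 21 times faster. As a result, produced content reaches users sooner.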

Cost-prioritized GPU application scenarios

GPU-accelerated instances can be elastically provisioned based on your business requirements, and are more cost-effective than self-purchased virtual machines (VMs).

Efficiency-prioritized GPU application scenarios

You can focus on code development and business objectives without operating and maintaining GPU clusters. For example, you do not need to manage driver and CUDA versions, operate machines, or handle faulty GPUs.

For more information about GPU-accelerated instances, see Instance types and usage modes.

Performance comparison

GPU-accelerated instances of Function Compute are based on the Turing architecture and support the following encoding and decoding formats:

Encoding formats

H.264 (AVCHD) YUV 4:2:0

H.264 (AVCHD) YUV 4:4:4

H.264 (AVCHD) Lossless

H.265 (HEVC) 4K YUV 4:2:0

H.265 (HEVC) 4K YUV 4:4:4

H.265 (HEVC) 4K Lossless

H.265 (HEVC) 8k

HEVC 10-bit support

HEVC B-frame support

Decoding formats

MPEG-1

MPEG-2

VC-1

VP8

VP9 (8 bit, 10 bit, and 12 bit)

H.264 (AVCHD)

H.265 (HEVC) 4:2:0 (8 bit, 10 bit, and 12 bit)

H.265 (HEVC) 4:4:4 (8 bit, 10 bit, and 12 bit)

The following table describes the source video.

Item | Data |

Duration | 2 minutes and 5 seconds |

Bitrate | 4085 kb/s |

Video stream information | h264 (High), yuv420p (progressive), 1920x1080 [SAR 1:1 DAR 16:9], 25 fps, 25 tbr, 1k tbn, 50 tbc |

Audio stream information | aac (LC), 44100 Hz, stereo, fltp |

The following table describes the test machines with and without GPU acceleration.

Item | Instances with no GPU acceleration | GPU-accelerated instances |

CPU | Intel Xeon® Platinum 8163, 4 cores | Intel Xeon® Platinum 8163, 4 cores |

RAM | 16 GB | 16 GB |

GPU | N/A | NVIDIA T4 |

FFmpeg | git-2020-08-12-1201687 | git-2020-08-12-1201687 |

Video transcoding (1:1)

Performance test: 1 input stream and 1 output stream

Resolution | Transcoding duration without GPU acceleration | Transcoding duration with GPU acceleration |

H264: 1920x1080 (1080p) (Full HD) | 3 minutes and 19.331 seconds | 9.399 seconds |

H264: 1280x720 (720p) (Half HD) | 2 minutes and 3.708 seconds | 5.791 seconds |

H264: 640x480 (480p) | 1 minute and 1.018 seconds | 5.753 seconds |

H264: 480x360 (360p) | 44.376 seconds | 5.749 seconds |
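The table can be summarized as a speedup ratio: the duration without GPU acceleration divided by the duration with GPU acceleration. The following Python snippet is a minimal sketch that only recomputes that ratio from the table values. The `DURATIONS` dictionary and the `speedups` helper are illustrative names and are not part of any Function Compute API.

```python
# Transcoding durations in seconds from the 1:1 table: (without GPU, with GPU).
DURATIONS = {
    "1080p": (3 * 60 + 19.331, 9.399),
    "720p": (2 * 60 + 3.708, 5.791),
    "480p": (1 * 60 + 1.018, 5.753),
    "360p": (44.376, 5.749),
}

def speedups(durations: dict) -> dict:
    """Return {resolution: duration without GPU / duration with GPU}, to one decimal place."""
    return {res: round(cpu / gpu, 1) for res, (cpu, gpu) in durations.items()}

for res, ratio in speedups(DURATIONS).items():
    print(f"{res}: {ratio}x faster with GPU acceleration")
```

On these values, the snippet reports about 21.2x for 1080p, 21.4x for 720p, 10.6x for 480p, and 7.7x for 360p.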

Video transcoding (1:N)

Performance test: 1 input stream and 3 output streams

Output resolutions | Transcoding duration without GPU acceleration | Transcoding duration with GPU acceleration |

H264: 1920x1080 (1080p) (Full HD), 1280x720 (720p) (Half HD), and 640x480 (480p), produced in a single run | 5 minutes and 58.696 seconds | 45.268 seconds |
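A real-time factor estimates whether a pipeline can keep up with playback. It measures the seconds of source video processed per second of wall-clock time. The following back-of-the-envelope sketch applies this factor to the 1:N results. It uses the source duration of 2 minutes and 5 seconds from the source video table. It is not a benchmark, and the names are illustrative.

```python
SOURCE_SECONDS = 2 * 60 + 5            # source video duration from the source video table
WITHOUT_GPU_SECONDS = 5 * 60 + 58.696  # 1:N (1 input, 3 outputs) without GPU acceleration
WITH_GPU_SECONDS = 45.268              # 1:N (1 input, 3 outputs) with GPU acceleration

def realtime_factor(media_seconds: float, wall_seconds: float) -> float:
    """Seconds of source video processed per second of wall-clock time.
    A value of 1 or more means the job keeps pace with real-time playback."""
    return media_seconds / wall_seconds

print(round(realtime_factor(SOURCE_SECONDS, WITHOUT_GPU_SECONDS), 2))
print(round(realtime_factor(SOURCE_SECONDS, WITH_GPU_SECONDS), 2))
print(round(WITHOUT_GPU_SECONDS / WITH_GPU_SECONDS, 1))
```

For the 1:N run, this gives about 0.35 without GPU acceleration (slower than real time) and about 2.76 with GPU acceleration. The overall speedup is about 7.9x.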

Transcoding commands

Commands for transcoding without GPU acceleration

Single-stream transcoding (1:1)

docker run --rm -it --volume $PWD:/workspace --runtime=nvidia willprice/nvidia-ffmpeg -y -i input.mp4 -c:v h264 -vf scale=1920:1080 -b:v 5M output.mp4

Multiple-stream transcoding (1:N)

docker run --rm -it --volume $PWD:/workspace --runtime=nvidia willprice/nvidia-ffmpeg \
  -y -i input.mp4 \
  -c:a copy -c:v h264 -vf scale=1920:1080 -b:v 5M output_1080.mp4 \
  -c:a copy -c:v h264 -vf scale=1280:720 -b:v 5M output_720.mp4 \
  -c:a copy -c:v h264 -vf scale=640:480 -b:v 5M output_480.mp4

Table 1. Parameters

Parameter | Description |

-c:a copy | Copy the audio stream without re-encoding it. |

-c:v h264 | Use a software H.264 encoder for the output video stream. |

-b:v 5M | Set the output video bitrate to 5 Mbit/s. |
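When a platform needs more renditions, you can generate the 1:N command from a list of target resolutions instead of writing it by hand. The following Python sketch rebuilds the argument list of the 1:N command without the Docker wrapper. It assumes that an `ffmpeg` binary is on `PATH`. The `LADDER` list and the `build_cpu_1_to_n` function are hypothetical names.

```python
from typing import List, Tuple

# Hypothetical output ladder mirroring the 1:N command: (width, height, output file).
LADDER: List[Tuple[int, int, str]] = [
    (1920, 1080, "output_1080.mp4"),
    (1280, 720, "output_720.mp4"),
    (640, 480, "output_480.mp4"),
]

def build_cpu_1_to_n(src: str, ladder=LADDER, bitrate: str = "5M") -> List[str]:
    """Build FFmpeg arguments that read `src` once and write one
    software-encoded H.264 output per ladder entry (1:N transcoding)."""
    args = ["ffmpeg", "-y", "-i", src]
    for width, height, out in ladder:
        # Options placed before an output file apply only to that output.
        args += ["-c:a", "copy", "-c:v", "h264",
                 "-vf", f"scale={width}:{height}", "-b:v", bitrate, out]
    return args
```

You could run it with `subprocess.run(build_cpu_1_to_n("input.mp4"), check=True)`. Passing a list instead of a shell string avoids quoting problems with file names.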

Commands for transcoding with GPU acceleration

Single-stream transcoding (1:1)

docker run --rm -it --volume $PWD:/workspace --runtime=nvidia willprice/nvidia-ffmpeg -y -hwaccel cuda -hwaccel_output_format cuda -i input.mp4 -c:v h264_nvenc -vf scale_cuda=1920:1080:1:4 -b:v 5M output.mp4

Multiple-stream transcoding (1:N)

docker run --rm -it --volume $PWD:/workspace --runtime=nvidia willprice/nvidia-ffmpeg \
  -y -hwaccel cuda -hwaccel_output_format cuda -i input.mp4 \
  -c:a copy -c:v h264_nvenc -vf scale_npp=1920:1080 -b:v 5M output_1080.mp4 \
  -c:a copy -c:v h264_nvenc -vf scale_npp=1280:720 -b:v 5M output_720.mp4 \
  -c:a copy -c:v h264_nvenc -vf scale_npp=640:480 -b:v 5M output_480.mp4

Table 2. Parameters

Parameter | Description |

-hwaccel cuda | Use CUDA for hardware-accelerated decoding. |

-hwaccel_output_format cuda | Keep the decoded frames in GPU memory. |

-c:v h264_nvenc | Use the NVIDIA hardware H.264 encoder (NVENC). |
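The CPU and GPU commands differ in only a few options. The GPU variant adds the decoding options from Table 2, replaces the `h264` encoder with `h264_nvenc`, and scales on the GPU. The following Python sketch shows that switch. It assumes an FFmpeg build with CUDA, NVENC, and NPP support. It uses `scale_npp` as in the 1:N GPU command and adds `-c:a copy` from the 1:N commands. `build_transcode_args` is a hypothetical helper.

```python
from typing import List

def build_transcode_args(mode: str, src: str, dst: str, width: int = 1920,
                         height: int = 1080, bitrate: str = "5M") -> List[str]:
    """Return FFmpeg arguments for a 1:1 transcode on the CPU or on the GPU."""
    if mode == "cpu":
        # Software decoding, software scaling, and a software H.264 encoder.
        return ["ffmpeg", "-y", "-i", src,
                "-c:a", "copy", "-c:v", "h264",
                "-vf", f"scale={width}:{height}", "-b:v", bitrate, dst]
    if mode == "gpu":
        # CUDA decoding with frames kept in GPU memory, NPP scaling on the GPU,
        # and NVENC encoding, so video frames are not copied back to host memory.
        return ["ffmpeg", "-y", "-hwaccel", "cuda", "-hwaccel_output_format", "cuda",
                "-i", src,
                "-c:a", "copy", "-c:v", "h264_nvenc",
                "-vf", f"scale_npp={width}:{height}", "-b:v", bitrate, dst]
    raise ValueError(f"unsupported mode: {mode!r}")
```

The `-hwaccel` options must appear before `-i` because they apply to the input. Encoder and filter options follow the input because they apply to the output.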

Preparations

GPU-accelerated instances can be deployed only by using container images.

Perform the following operations in the region where the GPU-accelerated instance resides:

Create a Container Registry Enterprise Edition instance or Personal Edition instance. We recommend that you create an Enterprise Edition instance. For more information, see Step 1: Create a Container Registry Enterprise Edition instance.

Create a namespace and an image repository. For more information, see Step 2: Create a namespace and Step 3: Create an image repository.

Upload the audio and video resources that you want to process to an Object Storage Service (OSS) bucket in the region where the GPU-accelerated instances are located. Make sure that you have the read and write permissions on objects in the bucket. For more information, see Upload objects. For more information about permissions, see Modify the ACL of a bucket.

Use the Function Compute console to deploy a GPU application

Deploy an image.

Create a Container Registry Enterprise Edition instance or Container Registry Personal Edition instance.

We recommend that you create an Enterprise Edition instance. For more information, see Create a Container Registry Enterprise Edition instance.

Create a namespace and an image repository.

For more information, see the "Step 2: Create a namespace" and "Step 3: Create an image repository" sections of the "Use Container Registry Enterprise Edition instances to build images" topic.

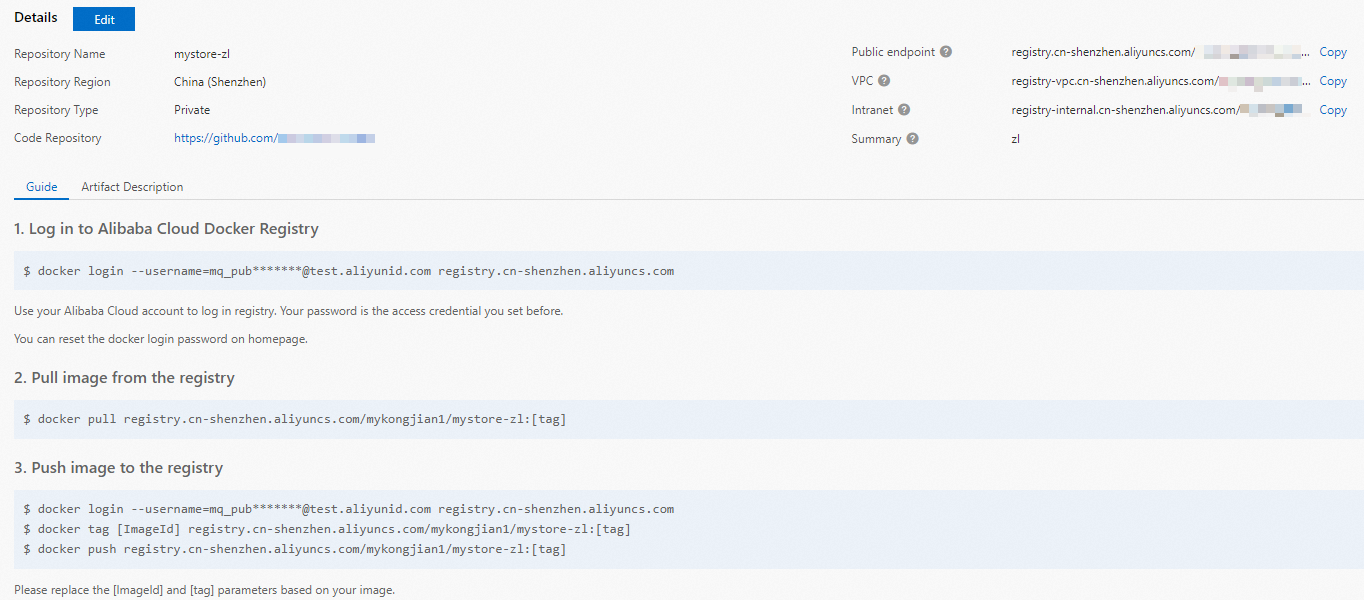

Follow the Docker instructions in the Container Registry console to push the sample app.py and Dockerfile to the image repository of the instance. For more information about these files, see app.py and Dockerfile in the /code directory of the topic about deploying a GPU application by using Serverless Devs.
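The actual app.py comes with the Serverless Devs sample. For orientation only, the following minimal sketch shows what such a handler might look like. It assumes a plain HTTP server in the custom container that listens on port 9000 and selects the transcoding mode from the `TRANS-MODE` request header used in the cURL test later in this topic. The OSS download and upload steps are omitted. `choose_mode`, `ffmpeg_args`, `serve`, and the `/tmp` paths are hypothetical.

```python
import json
import subprocess
import time
from http.server import BaseHTTPRequestHandler, HTTPServer

FUNCTION_NAME = "trans_gpu"  # value returned by the sample request without TRANS-MODE
SRC, DST = "/tmp/input.mp4", "/tmp/output.flv"  # hypothetical local paths

def choose_mode(headers) -> str:
    """Read the TRANS-MODE header ('cpu' or 'gpu'); return '' if it is absent."""
    return (headers.get("TRANS-MODE") or "").strip().lower()

def ffmpeg_args(mode: str, src: str, dst: str) -> list:
    """Build a 1:1 FFmpeg command; GPU mode decodes with CUDA and encodes with NVENC."""
    if mode == "gpu":
        return ["ffmpeg", "-y", "-hwaccel", "cuda", "-hwaccel_output_format", "cuda",
                "-i", src, "-c:a", "copy", "-c:v", "h264_nvenc", "-b:v", "5M", dst]
    return ["ffmpeg", "-y", "-i", src, "-c:a", "copy", "-c:v", "h264", "-b:v", "5M", dst]

class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        mode = choose_mode(self.headers)
        status, body = 200, {"function": FUNCTION_NAME}
        if mode in ("cpu", "gpu"):
            # The real sample also downloads SRC from OSS and uploads DST to OSS,
            # and reports those steps as download_time and upload_time.
            start = time.perf_counter()
            try:
                subprocess.run(ffmpeg_args(mode, SRC, DST), check=True)
                body = {"result": "ok", "trans_time": time.perf_counter() - start}
            except (OSError, subprocess.CalledProcessError) as exc:
                status, body = 500, {"result": "error", "message": str(exc)}
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

def serve(port: int = 9000) -> None:
    """Start the server; port 9000 is an assumption about the container's listening port."""
    HTTPServer(("0.0.0.0", port), Handler).serve_forever()
```

In the container, the entry point would call `serve()`. The header lookup on `self.headers` is case-insensitive, so `trans-mode` also works.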

Create a GPU function. For more information, see Create a custom container function.

Change the execution timeout period of the function.

Click the name of the function that you want to manage. In the navigation bar of the details page, click the Configurations tab. In the Environment Information section, click Modify.

In the panel that appears, configure the Execution Timeout Period parameter and click OK.

Note: CPU transcoding takes longer than the default execution timeout of 60 seconds. Therefore, we recommend that you set the Execution Timeout Period parameter to a larger value.
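You can check this note with simple arithmetic on the CPU sample response in the cURL test later in this topic. In that response, download, transcoding, and upload together take about 119 seconds. The following sketch sums the measured phases and applies a safety margin. The `suggested_timeout` helper and the factor of 2 are assumptions for illustration, not a Function Compute recommendation.

```python
import math

def suggested_timeout(download_s: float, trans_s: float, upload_s: float,
                      margin: float = 2.0) -> int:
    """Sum the measured phases, apply a safety margin (assumed 2x), and round up."""
    return math.ceil((download_s + trans_s + upload_s) * margin)

# Phase durations from the CPU and GPU sample responses of the cURL test.
cpu = suggested_timeout(4.910430669784546, 105.37688875198364, 8.75510573387146)
gpu = suggested_timeout(5.096682548522949, 8.72346019744873, 8.313958644866943)
print(cpu, gpu)
```

With the CPU numbers, the sketch suggests 239 seconds. With the GPU numbers, it suggests 45 seconds, which fits within the 60-second default.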

Configure a provisioned GPU instance. For more information about how to configure provisioned instances, see Configure provisioned instances and auto scaling rules.

After the configuration is complete, check whether the provisioned GPU-accelerated instances are ready in the rule list. Specifically, check whether the value of the Current Reserved Instances parameter equals the number of provisioned instances that you specified.

Use cURL to test the function.

On the function details page, click the Triggers tab to view trigger configurations and obtain the trigger endpoint.

Run the following commands to invoke the GPU-accelerated function:

Check the online function version

curl -v "https://tgpu-ff-console-tgpu-ff-console-ajezot****.cn-shenzhen.fcapp.run"

Sample response:

{"function": "trans_gpu"}

Use CPUs for transcoding

curl "https://tgpu-ff-console-tgpu-ff-console-ajezot****.cn-shenzhen.fcapp.run" -H 'TRANS-MODE: cpu'

Sample response:

{"result": "ok", "upload_time": 8.75510573387146, "download_time": 4.910430669784546, "trans_time": 105.37688875198364}

Use GPUs for transcoding

curl "https://tgpu-ff-console-tgpu-ff-console-ajezot****.cn-shenzhen.fcapp.run" -H 'TRANS-MODE: gpu'

Sample response:

{"result": "ok", "upload_time": 8.313958644866943, "download_time": 5.096682548522949, "trans_time": 8.72346019744873}
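To call the trigger from a script, you can send the same requests with the Python standard library. The following sketch is a rough equivalent of the cURL commands. It sets the `TRANS-MODE` header and adds up the three reported phases. `build_request`, `fetch`, and `total_time` are hypothetical helpers, the 300-second client timeout is an arbitrary choice, and you must replace `ENDPOINT` with your own trigger URL.

```python
import json
import urllib.request

# Replace with the trigger endpoint shown on the Triggers tab of your function.
ENDPOINT = "https://tgpu-ff-console-tgpu-ff-console-ajezot****.cn-shenzhen.fcapp.run"

def build_request(endpoint: str, mode: str = "") -> urllib.request.Request:
    """Build a GET request; mode 'cpu' or 'gpu' is sent in the TRANS-MODE header."""
    req = urllib.request.Request(endpoint, method="GET")
    if mode:
        req.add_header("TRANS-MODE", mode)
    return req

def fetch(endpoint: str, mode: str = "", timeout: float = 300) -> dict:
    """Invoke the function and decode its JSON response."""
    with urllib.request.urlopen(build_request(endpoint, mode), timeout=timeout) as resp:
        return json.loads(resp.read())

def total_time(result: dict) -> float:
    """Sum the download, transcoding, and upload phases reported by the function."""
    return sum(result[key] for key in ("download_time", "trans_time", "upload_time"))
```

For example, `print(total_time(fetch(ENDPOINT, "gpu")))`. Applied to the GPU sample response, `total_time` gives about 22.13 seconds.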

View the execution result

You can view the transcoded video by opening the following URL in your browser:

https://cri-zbtsehbrr8******-registry.oss-cn-shenzhen.aliyuncs.com/output.flv

This URL is only an example. Use the actual URL of the output object in your bucket.