A session is a Spark Session available in an EMR Serverless Spark workspace. You can use notebook sessions for notebook development. This topic describes how to create a notebook session.

Create a notebook session

After you create a notebook session, you can select this session for notebook development.

1. Go to the Notebook Sessions page.

   a. Log on to the EMR console.

   b. In the navigation pane on the left, choose .

   c. On the Spark page, click the name of the target workspace.

   d. On the EMR Serverless Spark page, choose Sessions in the navigation pane on the left.

   e. Click the Notebook Session tab.

2. On the Notebook Session page, click Create Notebook Session.

3. On the Create Notebook Session page, configure the parameters and click Create.

Important: Set the maximum concurrency of the selected deployment queue to a value that is greater than or equal to the resources required by the notebook session. The required value is displayed in the console.

Parameter

Description

Name

The name of the new notebook session.

The name must be 1 to 64 characters in length and can contain only letters, digits, hyphens (-), underscores (_), and spaces.
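The naming rule can be checked before you submit the form. The following is a minimal sketch, assuming that "letters" means ASCII letters only; the function name `is_valid_session_name` is hypothetical and is not part of any EMR API.

```python
import re

# Assumption: "letters" means ASCII A-Z and a-z. Allowed characters are
# letters, digits, hyphens, underscores, and spaces; length is 1 to 64.
_NAME_PATTERN = re.compile(r"[A-Za-z0-9_\- ]{1,64}")


def is_valid_session_name(name: str) -> bool:
    """Return True if the whole name matches the documented naming rule."""
    # fullmatch requires the entire string to match, not just a prefix.
    return _NAME_PATTERN.fullmatch(name) is not None


print(is_valid_session_name("dev session_01"))  # True
print(is_valid_session_name("bad/name"))        # False
```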

Resource Queue

Select a queue to deploy the session. You can select only a development queue or a queue shared by development and production environments.

For more information about queues, see Manage resource queues.

Engine Version

The engine version for the current session. For more information about engine versions, see Engine versions.

Use Fusion Acceleration

Fusion Engine can accelerate Spark workloads and reduce the total cost of tasks. For billing information, see Billing. For more information about Fusion Engine, see Fusion Engine.

Runtime Environment

Select a custom environment created on the Runtime Environments page. When the notebook session starts, the system pre-installs related libraries based on the selected environment.

Note: You can select only a runtime environment that is in the Ready state.

Automatic Stop

This feature is enabled by default. You can set a custom idle period after which an inactive notebook session automatically stops.
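The automatic stop rule can be modeled as a simple idle-timeout check. This is a hypothetical sketch: it assumes "inactive" means no activity since a recorded last-activity time, which this topic does not define precisely, and `should_auto_stop` is an illustrative name only.

```python
from datetime import datetime, timedelta


def should_auto_stop(last_activity: datetime, now: datetime, idle_timeout: timedelta) -> bool:
    """Hypothetical model: stop once the session has been idle for at least idle_timeout."""
    return now - last_activity >= idle_timeout


# Idle for 45 minutes with a 30-minute timeout: the session would be stopped.
print(should_auto_stop(datetime(2024, 1, 1, 10, 0), datetime(2024, 1, 1, 10, 45), timedelta(minutes=30)))  # True
```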

Network Connection

Select a created network connection to directly access data sources or external services within a VPC. For more information about how to create a network connection, see Establish network connectivity between EMR Serverless Spark and other VPCs.

Mount Integrated File Directory

This feature is disabled by default. To use this feature, first add a file directory on the Integrated File Directory tab of the Files page. For more information, see Integrated file directory.

When enabled, the system mounts the integrated file directory to the session resources. This lets you read from and write to files in the directory directly within the notebook session.

The mount operation consumes some of the driver's compute resources. CPU and memory are evaluated separately, and for each one the larger of the following two values is consumed:

Fixed resources: 0.3 CPU core + 1 GB memory.

Dynamic resources: 10% of the driver resources, that is, 0.1 × spark.driver.cores and 0.1 × spark.driver.memory.

For example, if the driver is configured with 4 CPU cores and 8 GB of memory, the dynamic resources are 0.4 CPU core + 0.8 GB memory. In this case, the actual consumed resources are max(0.3 core, 0.4 core) + max(1 GB, 0.8 GB), which is 0.4 CPU core + 1 GB memory.

Note: After you enable mounting, the directory is mounted only to the driver by default. To also mount the directory to executors, enable Mount to Executor.

Important: After you mount an integrated NAS file directory, you must configure a network connection. The VPC of the network connection must be the same as the VPC in which the NAS mount target resides.
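The rule for driver-side mount overhead can be sketched as a small calculation. This sketch assumes, as the 4-core/8 GB example implies, that CPU and memory are compared independently; the function and constant names are hypothetical.

```python
# Hypothetical helper: estimate the driver resources consumed by mounting an
# integrated file directory. CPU and memory are each the larger of a fixed
# floor and 10% of the corresponding driver setting.
FIXED_CORES = 0.3
FIXED_MEMORY_GB = 1.0
DYNAMIC_RATIO = 0.1


def mount_driver_overhead(driver_cores: float, driver_memory_gb: float) -> tuple[float, float]:
    """Return (cores, memory_gb) consumed on the driver by the mount."""
    cores = max(FIXED_CORES, DYNAMIC_RATIO * driver_cores)
    memory_gb = max(FIXED_MEMORY_GB, DYNAMIC_RATIO * driver_memory_gb)
    return cores, memory_gb


print(mount_driver_overhead(4, 8))  # (0.4, 1.0), matching the worked example
```

With the default driver size (1 core, 3.5 GB), both fixed floors apply and the result is 0.3 core + 1 GB.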

Mount to Executor

When enabled, the system mounts the integrated file directory to the session executors. This lets you read from and write to files in the directory directly from the notebook session executors.

The mount operation consumes executor resources. The percentage of resources consumed varies based on file usage in the mounted directory.

spark.driver.cores

The number of CPU cores used by the driver process of the Spark application. The default value is 1 CPU core.

spark.driver.memory

The amount of memory that the driver process in the Spark application can use. The default value is 3.5 GB.

spark.executor.cores

The number of CPU cores that each executor process can use. The default value is 1 CPU core.

spark.executor.memory

The amount of memory that each executor process can use. The default value is 3.5 GB.

spark.executor.instances

The number of executors allocated by Spark. The default value is 2.
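To relate these settings to the queue concurrency requirement, a rough static estimate is the driver resources plus the executor resources multiplied by the number of executors. This is an assumption for illustration only: it ignores memory overhead, off-heap memory, and mount overhead, and the value shown in the console is authoritative. The `SessionResources` class is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SessionResources:
    """Hypothetical container for the session sizing parameters (defaults from this topic)."""
    driver_cores: float = 1
    driver_memory_gb: float = 3.5
    executor_cores: float = 1
    executor_memory_gb: float = 3.5
    executor_instances: int = 2

    def total_cores(self) -> float:
        # Driver cores plus cores across all statically allocated executors.
        return self.driver_cores + self.executor_cores * self.executor_instances

    def total_memory_gb(self) -> float:
        # Heap memory only; overheads are deliberately excluded from this estimate.
        return self.driver_memory_gb + self.executor_memory_gb * self.executor_instances


defaults = SessionResources()
print(defaults.total_cores(), defaults.total_memory_gb())  # 3 10.5
```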

Dynamic Resource Allocation

This feature is disabled by default. After you enable this feature, configure the following parameters:

Minimum Number of Executors: The default value is 2.

Maximum Number of Executors: If spark.executor.instances is not set, the default value is 10.
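In open-source Apache Spark, dynamic allocation is controlled by the `spark.dynamicAllocation.enabled`, `spark.dynamicAllocation.minExecutors`, and `spark.dynamicAllocation.maxExecutors` properties. The sketch below assumes, without confirmation from this topic, that the console fields correspond to these properties; the helper name is hypothetical, and it does not model how the maximum is derived when spark.executor.instances is set.

```python
def dynamic_allocation_conf(min_executors: int = 2, max_executors: int = 10) -> dict[str, str]:
    """Hypothetical helper: build open-source Spark dynamic allocation properties."""
    # Spark requires the executor count bounds to be ordered and non-negative.
    if min_executors < 0 or max_executors < min_executors:
        raise ValueError("require 0 <= min_executors <= max_executors")
    return {
        "spark.dynamicAllocation.enabled": "true",
        "spark.dynamicAllocation.minExecutors": str(min_executors),
        "spark.dynamicAllocation.maxExecutors": str(max_executors),
    }


for key, value in dynamic_allocation_conf().items():
    print(key, value)
```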

More memory configurations

spark.driver.memoryOverhead: The non-heap memory available to the driver. If this parameter is not set, Spark automatically allocates a value based on the default formula max(384 MB, 10% × spark.driver.memory).

spark.executor.memoryOverhead: The non-heap memory available to each executor. If this parameter is not set, Spark automatically allocates a value based on the default formula max(384 MB, 10% × spark.executor.memory).

spark.memory.offHeap.size: The size of off-heap memory available to Spark. The default value is 1 GB. This parameter takes effect only when spark.memory.offHeap.enabled is set to true. By default, when you use Fusion Engine, this feature is enabled and the off-heap memory is set to 1 GB.
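The default overhead formula max(384 MB, 10% × memory) can be checked with a short calculation. This is a minimal sketch that works in MB and returns the unrounded value; the function name is hypothetical.

```python
MIN_OVERHEAD_MB = 384
OVERHEAD_FACTOR = 0.10


def default_memory_overhead_mb(memory_mb: float) -> float:
    """Overhead used when memoryOverhead is not set: max(384 MB, 10% of memory)."""
    return max(MIN_OVERHEAD_MB, OVERHEAD_FACTOR * memory_mb)


# Default 3.5 GB: 10% is about 358 MB, which is below the 384 MB floor.
print(default_memory_overhead_mb(3.5 * 1024))  # 384
# 8 GB: 10% (about 819 MB) exceeds the floor, so the percentage applies.
print(default_memory_overhead_mb(8 * 1024))
```

The 384 MB floor only matters for small processes: any memory setting above 3,840 MB yields an overhead of 10%.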

Spark configuration

Enter the Spark configuration information. Separate each configuration key from its value with a space. Example:

spark.sql.catalog.paimon.metastore dlf
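The key-value format can be illustrated with a small parser. This sketch assumes one configuration per line, with the key and value separated by the first space; the function name and the second example line (`spark.executor.memory 8g`) are hypothetical.

```python
def parse_spark_conf(text: str) -> dict[str, str]:
    """Parse 'key value' lines into a dict (assumes one configuration per line)."""
    conf: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line:
            continue  # skip blank lines
        # Split on the first space only, so values may themselves contain spaces.
        key, sep, value = line.partition(" ")
        if not sep:
            raise ValueError(f"missing value for {key!r}")
        conf[key] = value.strip()
    return conf


example = """spark.sql.catalog.paimon.metastore dlf
spark.executor.memory 8g"""
print(parse_spark_conf(example))
# {'spark.sql.catalog.paimon.metastore': 'dlf', 'spark.executor.memory': '8g'}
```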

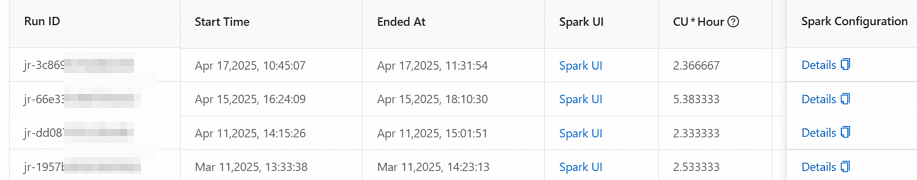

View run records

After a data development task is complete, you can view its run records on the session management page. The procedure is as follows:

On the session list page, click the session name.

Click the Run Records tab.

On this page, you can view detailed run information for the task, such as the run ID, start time, and Spark UI.

References

For more information about queue operations, see Manage resource queues.

For more information about session roles and permissions, see Manage users and roles.

For a complete example of the notebook development process, see Quick Start for notebook development.