The Image Moderation V2.0 API of Content Moderation provides preset configurations that define fine-grained risk detection scopes. These presets are based on extensive content governance experience and common industry practices.

When you first use the service, you can view the default detection scope configurations on the Rules page of the console.

Scenarios

If your business has the following requirements, you can use the Alibaba Cloud Image Moderation V2.0 console to customize detection rules and scopes. You can also use the console to query detection results and view usage statistics.

| Application scenario | Description |
| --- | --- |
| Adjust the risk detection scope for images | You can adjust the detection scope and specific items for risk detection based on common industry practices or your business needs. For Common Baseline Moderation (baselineCheck_global), the adjustable scope includes pornographic content, suggestive content, terrorist content, prohibited content, flag content, undesirable content, and abusive content. For example, if your business displays many images of swimwear and you do not want these images to be flagged as risky, you can turn off detection for the related items in the console. |
| Set different risk detection scopes for multiple business scenarios | If you have multiple business scenarios that use the same service but require different risk detection scopes, you can copy the service for each scenario. For example, you may have three business scenarios (A, B, and C) that all require the Common Baseline Moderation (baselineCheck) service, but each has different standards. You can copy the baselineCheck service in the console to create baselineCheck_01 and baselineCheck_02, and then set a different risk detection scope for each of the three services. |

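If you have the following business requirements for image moderation, you can use the console and API of Image Moderation V2.0 to perform specialized moderation.

| Application scenario | Description |
| --- | --- |
| Perform special or emergency moderation on specific known images | You can configure a custom image library for images that may carry risks. If a user-uploaded image matches an image in the library, a risk label is returned. |
| Exempt trusted images from risk detection | You can designate certain images as trusted based on their source or purpose. To prevent Content Moderation's algorithms from flagging these images as risky, you can exempt trusted image libraries from risk detection. Examples of such images include marketing materials created by your business or platform, official images, and manually reviewed official profile pictures. |
| Customize detection settings for text in images | For text within images, you can configure custom vocabularies to either ignore or flag specific keywords. |
| Test image moderation effects online | You can visually test the moderation performance of a specific image service in the console. |
| Query detailed detection results for each image | You can view or search for recently detected images on the Detection Results page to analyze their details. |
| View statistics on recent image detections | You can view statistics for recent image detections on the Usage Statistics page. |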

Prerequisites

Go to the Content Moderation V2.0 page and activate the Image Moderation V2.0 service.

Before you activate the Image Moderation V2.0 service, make sure that you are familiar with its billing rules. For more information, see Introduction to Image Moderation Enhanced Edition 2.0 and its billing information.

Adjust the risk detection scope for images

You can adjust the detection scopes and specific items for risk detection based on common industry practices or your business needs.

Log on to the Content Moderation console.

In the navigation pane on the left, choose .

On the Rules Management tab, find the service that you want to manage. This example uses the Common Baseline Moderation (baselineCheck_global) service. Click Settings in the Actions column.

On the Detection Scope page, select a detection type to adjust. This example uses Prohibited Content Detection.

On the Prohibited Content Detection tab, view the default configurations in the Detection Scope Configuration section. For example, the following figure shows that four items are detected by default. If an item is hit, the corresponding label is returned.

Click Edit to enter edit mode. Change the Detection Status as needed. For example, the following figure shows that the detection switch for the third item is turned off.

You can also adjust the Medium-risk Score and High-risk Score for labels to determine the returned risk level.

Note: Risk level rules:

If a risk label is detected and the confidence score is within the high-risk score range, the risk level is high.

If a risk label is detected and the confidence score is within the medium-risk score range, the risk level is medium.

If a risk label is detected and the confidence score is below the medium-risk score range, the risk level is low.

If multiple labels with different risk levels are hit, the highest risk level is returned.

If no risk labels are hit, the risk level is safe.

If a custom blocklist is hit, the risk level is high.
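The rules above can be summarized in a short function, which may help when you choose Medium-risk Score and High-risk Score values for a label. This is a minimal sketch for reasoning about the thresholds, not the service's implementation: the `Hit` type, the 0 to 100 score scale, and the choice that a score equal to a threshold falls into the higher band are all assumptions.

```python
from dataclasses import dataclass

# Order used to pick the highest level when several labels are hit.
LEVEL_ORDER = {"safe": 0, "low": 1, "medium": 2, "high": 3}


@dataclass
class Hit:
    """One returned risk label (hypothetical shape for illustration)."""
    label: str
    confidence: float             # assumed to be on a 0-100 scale
    from_blocklist: bool = False  # True if the hit came from a custom blocklist


def label_level(confidence: float, medium_score: float, high_score: float) -> str:
    """Risk level for a single hit, given that label's two thresholds."""
    # Assumption: a score equal to a threshold counts as the higher band.
    if confidence >= high_score:
        return "high"
    if confidence >= medium_score:
        return "medium"
    return "low"


def overall_level(hits: list[Hit], thresholds: dict[str, tuple[float, float]]) -> str:
    """Highest risk level across all hits; 'safe' when nothing is hit.

    thresholds maps a label to its (Medium-risk Score, High-risk Score).
    """
    levels = []
    for hit in hits:
        if hit.from_blocklist:
            levels.append("high")  # a custom blocklist hit is always high risk
        else:
            medium, high = thresholds[hit.label]
            levels.append(label_level(hit.confidence, medium, high))
    return max(levels, key=LEVEL_ORDER.__getitem__, default="safe")
```

For example, with illustrative thresholds of 60 and 90, `overall_level([Hit("contraband_drug", 75)], {"contraband_drug": (60, 90)})` returns `"medium"`.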

Click Save to save the new detection scope. The new configuration takes effect and is applied to the production environment in about 2 to 5 minutes.

Set different risk detection scopes for multiple business scenarios

You can copy a service and set different risk detection scopes to meet the different moderation requirements of your business scenarios.

Log on to the Content Moderation console.

In the navigation pane on the left, choose .

On the Rules Management tab, find the service that you want to copy. This example uses the Common Baseline Moderation (baselineCheck_global) service.

On the service list page, click Copy in the Actions column for the Common Baseline Moderation service.

In the Copy Service panel, enter a Service Name and Service Description.

Click Create to save the copied service information. The new service can be called 1 to 2 minutes after it is created.

After the service is created, you can configure and edit the copied service, which is named baselineCheck_global_01 in this example. You can call the baselineCheck_global_01 service and the baselineCheck_global service separately to meet the needs of business scenarios that require different risk detection scopes.
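On the calling side, using separate services usually comes down to choosing a service code for each request. The sketch below assumes hypothetical scenario names; only the two service codes come from this example, and how the code is passed in the moderation request should be checked against the Image Moderation API reference.

```python
# Hypothetical scenario names mapped to the service codes used in this example.
SERVICE_BY_SCENARIO = {
    "community_posts": "baselineCheck_global",
    "profile_pictures": "baselineCheck_global_01",
}


def service_for(scenario: str) -> str:
    """Return the service code to call for a business scenario.

    Unknown scenarios raise an error instead of silently falling back,
    so a typo cannot route images through the wrong detection scope.
    """
    try:
        return SERVICE_BY_SCENARIO[scenario]
    except KeyError:
        raise ValueError(f"no moderation service configured for {scenario!r}") from None
```

Raising on an unknown scenario is a design choice: a silent default would make a misconfigured caller look healthy while applying the wrong rules. Keep in mind that a newly copied service can be called only 1 to 2 minutes after it is created.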

Perform special or emergency moderation on specific known images

You can configure a custom image library for images that may have risks. If a user-uploaded image matches an image in the configured library, a risk label is returned.

Log on to the Content Moderation console.

Before you configure a custom image library, you must create and maintain the library. If an existing library meets your business requirements, you can skip this step.

Note: Each account can create up to 10 image libraries. The total number of images in all libraries cannot exceed 100,000.

Create an image library and upload images

In the navigation pane on the left, choose .

Click Create Image Library. In the Create Image Library dialog box, enter a library name and description, and then click OK.

Find the library that you created and click Image Detail in the Actions column.

Click Add Image. In the Add Image dialog box, click Select Image and follow the instructions to upload images.

You can upload a maximum of 10 images at a time. Each image must be 4 MB or smaller, and we recommend a resolution of at least 256 × 256 pixels. The upload list shows the upload status of up to 10 images. To upload more images, clear the list and continue uploading.
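If you prepare many images for a library, a local pre-check can catch files that the upload would reject. This is a minimal sketch built only on the limits stated in this section and the account-wide limit of 100,000 images; the `LocalImage` type is hypothetical, and reading "4 MB" as 4 MiB is an assumption.

```python
from dataclasses import dataclass

MAX_IMAGES_PER_UPLOAD = 10
MAX_IMAGE_BYTES = 4 * 1024 * 1024   # assumption: "4 MB" read as 4 MiB
MIN_RECOMMENDED_SIDE = 256          # recommended, not required
MAX_IMAGES_PER_ACCOUNT = 100_000    # total across all image libraries


@dataclass
class LocalImage:
    """Hypothetical description of a file you plan to upload."""
    name: str
    size_bytes: int
    width: int
    height: int


def check_batch(images: list[LocalImage]) -> tuple[list[str], list[str]]:
    """Return (errors, warnings) for one upload batch."""
    errors: list[str] = []
    warnings: list[str] = []
    if len(images) > MAX_IMAGES_PER_UPLOAD:
        errors.append(f"{len(images)} images in one batch; the limit is {MAX_IMAGES_PER_UPLOAD}")
    for img in images:
        if img.size_bytes > MAX_IMAGE_BYTES:
            errors.append(f"{img.name}: larger than 4 MB")
        if min(img.width, img.height) < MIN_RECOMMENDED_SIDE:
            warnings.append(f"{img.name}: below the recommended 256 x 256 pixels")
    return errors, warnings


def split_into_batches(images: list[LocalImage]) -> list[list[LocalImage]]:
    """Split a large set of images into upload-sized batches of at most 10."""
    step = MAX_IMAGES_PER_UPLOAD
    return [images[i:i + step] for i in range(0, len(images), step)]


def fits_account_quota(images_already_stored: int, new_images: int) -> bool:
    """True if adding new_images keeps all libraries within the account limit."""
    return images_already_stored + new_images <= MAX_IMAGES_PER_ACCOUNT
```

Low resolution is reported as a warning rather than an error because the section only recommends, and does not require, 256 × 256 pixels.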

On the details page of the image library, you can view the list of uploaded images. You can also query and delete images.

Query images: You can search for images by Image ID or Add Time.

Delete images: You can delete images from the library individually or in batches.

Maintain an existing image library

In the navigation pane on the left, choose .

Find the library that you want to maintain. Click Edit in the Actions column to modify the library name and description. Click Image Detail in the Actions column to upload or delete images.

In the navigation pane on the left, choose .

On the Rules Management tab, find the service that you want to manage. This example uses the Common Baseline Moderation (baselineCheck_global) service. Click Settings in the Actions column.

On the Detection Scope page, select a detection type to adjust. This example uses Prohibited Content Detection.

In the Set Labels by Customized Libraries section of the Prohibited Content Detection tab, view the information about the configured custom image library.

Click Edit to enter edit mode and select the custom image library that you want to configure.

Click Save to save the new custom image library configuration.

The new configuration takes effect and is applied to the production environment in about 2 to 5 minutes. If a user-uploaded image matches an image in the configured library, the "contraband_drug_lib" label is returned.

Exempt trusted images from risk detection

You can exempt trusted image libraries from risk detection to prevent images from being flagged as risky by Content Moderation's algorithms.

Log on to the Content Moderation console.

In the navigation pane on the left, choose .

On the Rules Management tab, find the service that you want to manage and click Settings in the Actions column.

Click the Exemption Configuration tab and modify the configuration.

On the Exemption Configuration tab, view the list of custom image libraries and their exemption status.

For example, the following figure shows that the exemption switches for all image libraries are turned off.

Click Edit and turn on the exemption switch for the image library that you require.

Click Save to save the new configuration for exempted images.

The exemption library configuration takes effect in about 2 to 5 minutes. Alibaba Cloud Content Moderation compares the input image with each image in the selected libraries for similarity. For images that the algorithm considers identical, the Content Moderation system returns the "nonLabel_lib" label and no other risk labels.
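In your own code you may want to treat an exempted match differently from an ordinary no-risk result, for example to log it for audit. A minimal sketch, assuming you already have the labels returned for one image as a list of strings (the input shape is an assumption). Treating `nonLabel` as the no-hit label is inferred from the `!=nonLabel` search example on the Detection Results page.

```python
EXEMPT_LABEL = "nonLabel_lib"  # returned when an image matches an exempted library
NO_HIT_LABEL = "nonLabel"      # assumed to mean that no risk label was hit


def classify_result(labels: list[str]) -> str:
    """Classify one image's returned labels as 'exempted', 'risk', or 'no_risk'."""
    if EXEMPT_LABEL in labels:
        # An exempted match comes back without any other risk labels.
        return "exempted"
    risk_labels = [label for label in labels if label != NO_HIT_LABEL]
    return "risk" if risk_labels else "no_risk"
```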

Customize detection settings for text in images

For text within images, you can configure custom vocabularies to either ignore or flag specific keywords.

Log on to the Content Moderation console.

Before you configure a custom vocabulary, you must create and maintain the vocabulary. If an existing vocabulary meets your business requirements, you can skip this step.

On the page, configure a vocabulary as follows.

On the Keyword Library Management tab, click Create Library.

In the Create Library panel, enter the vocabulary information as required.

Note: You can also create a vocabulary without adding keywords and add them later as needed. A single account can have up to 20 vocabularies with a total of 100,000 keywords. A single keyword cannot exceed 20 characters. Special characters are not supported.
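Checking keywords locally before you paste them into the console can save a round of error messages. A minimal sketch of the limits in the note above; the section does not define "special characters", so treating anything other than letters, digits, and spaces as special is an assumption (Python's `str.isalnum` also accepts CJK characters).

```python
MAX_VOCABULARIES = 20
MAX_TOTAL_KEYWORDS = 100_000
MAX_KEYWORD_LENGTH = 20


def keyword_problems(keyword: str) -> list[str]:
    """List the reasons a single keyword would be rejected."""
    problems = []
    if not keyword.strip():
        problems.append("empty keyword")
    if len(keyword) > MAX_KEYWORD_LENGTH:
        problems.append("longer than 20 characters")
    # Assumption: "special characters" = anything that is not a letter, digit, or space.
    if any(not (ch.isalnum() or ch == " ") for ch in keyword):
        problems.append("contains special characters")
    return problems


def check_vocabularies(vocabularies: dict[str, list[str]]) -> list[str]:
    """Validate a mapping of vocabulary name -> keywords against the account limits."""
    errors = []
    if len(vocabularies) > MAX_VOCABULARIES:
        errors.append(f"{len(vocabularies)} vocabularies; the limit is {MAX_VOCABULARIES}")
    total = sum(len(keywords) for keywords in vocabularies.values())
    if total > MAX_TOTAL_KEYWORDS:
        errors.append(f"{total} keywords in total; the limit is {MAX_TOTAL_KEYWORDS}")
    for name, keywords in vocabularies.items():  # an empty vocabulary is allowed
        for keyword in keywords:
            for problem in keyword_problems(keyword):
                errors.append(f"{name}: {keyword!r}: {problem}")
    return errors
```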

Click Create Library.

If the vocabulary fails to be created, a specific error message is displayed. You can try to create it again based on the message.

In the navigation pane on the left, choose .

On the Rules Management tab, find the service that you want to manage. This example uses the Common Baseline Moderation (baselineCheck) service. Click Settings in the Actions column.

Configure ignored keywords for text in images.

On the Ignoring vocabulary configuration tab, view the list of custom vocabularies and their configuration status.

For example, the following figure shows that the switches for all vocabularies are turned off.

Click Edit and turn on the switch for the vocabulary that you want to ignore.

Click Save to save the new ignored vocabulary configuration.

Note: The ignored vocabulary configuration takes effect in about 2 to 5 minutes. Alibaba Cloud Content Moderation ignores the keywords in the vocabulary before performing further risk detection. For example, if the text in an image is "Here is a small cat" and you select a vocabulary containing "is" and "a" to be ignored, risk detection will only be performed on "Here small cat".
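The example in the note can be reproduced with a few lines of Python, which may help you predict what text remains after ignoring keywords. This sketch assumes whitespace-separated words matched exactly and case-sensitively; the service's actual matching rules (for example, for languages written without spaces) are not described in this section.

```python
def apply_ignored_keywords(text: str, ignored: list[str]) -> str:
    """Drop whole words that appear in the ignored vocabulary.

    Assumption: words are separated by whitespace and matched exactly
    (case-sensitive). This only illustrates the idea in the note above.
    """
    ignored_set = set(ignored)
    return " ".join(word for word in text.split() if word not in ignored_set)


print(apply_ignored_keywords("Here is a small cat", ["is", "a"]))  # Here small cat
```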

Configure flagged keywords for text in images.

On the Detection Scope page, select a detection type to adjust. This example uses Prohibited Content Detection.

In the Set Labels by Customized Libraries section of the Prohibited Content Detection tab, view the information about the configured custom vocabulary.

Note: In the Set Labels by Customized Libraries section, you can set a custom vocabulary for all labels that end with "tii". The "tii" suffix indicates that a risk was detected in the text of an image.

Click Edit to enter edit mode and select the custom vocabulary that you want to configure.

Click Save to save the new custom vocabulary configuration.

The new configuration takes effect and is applied to the production environment in about 2 to 5 minutes. If the text in a user-uploaded image matches a keyword in the configured vocabulary, the "contraband_drug_tii_lib" label is returned.
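When you process results, it can help to tell a custom-library hit apart from a model hit. The two examples in this section (`contraband_drug_lib` for the image library and `contraband_drug_tii_lib` for the vocabulary) suggest that a custom-library hit appends `_lib` to the base label. The sketch below relies on that inferred pattern, which is an assumption, and excludes the exemption label `nonLabel_lib`.

```python
TEXT_IN_IMAGE_SUFFIX = "tii"   # documented: a risk found in the text of an image
LIBRARY_SUFFIX = "_lib"        # inferred from contraband_drug_lib / contraband_drug_tii_lib
EXEMPT_LABEL = "nonLabel_lib"  # exemption match, not a custom-library risk hit


def describe_label(label: str) -> dict:
    """Split a returned label into its base label and where the hit came from."""
    library_hit = label.endswith(LIBRARY_SUFFIX) and label != EXEMPT_LABEL
    base = label[: -len(LIBRARY_SUFFIX)] if library_hit else label
    return {
        "base": base,
        "custom_library_hit": library_hit,
        "text_in_image": base.endswith(TEXT_IN_IMAGE_SUFFIX),
    }


def accepts_custom_vocabulary(label: str) -> bool:
    """Only labels that end with 'tii' can be given a custom vocabulary."""
    return label.endswith(TEXT_IN_IMAGE_SUFFIX)
```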

Test image moderation effects online

In the Content Moderation console, you can directly test the moderation effects of your image URLs or local image files.

Log on to the Content Moderation console.

In the navigation pane on the left, choose .

On the Online Test page, click the Image tab.

Test the image moderation.

From the Service drop-down list, select the service that you want to test.

Note: Before testing, we recommend that you adjust the rules for the service on the rules configuration page. For more information, see Adjust the risk detection scope for images.

The DataId and Auxiliary Information parameters are optional. You can enter or select them as needed. For parameter descriptions, see the Image moderation API documentation.

You can provide images by entering an Image URL or by uploading a local image. You can input up to 100 images at a time.

Click Test to test the input images. The moderation results are displayed in the moderation result area.

Query detailed detection results for each image

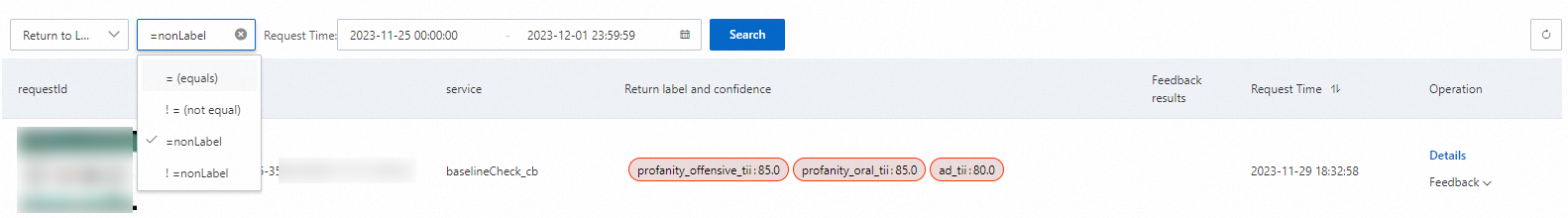

For detected images, you can query detailed detection results by request ID, data ID, or returned label.

Log on to the Content Moderation console.

In the navigation pane on the left, choose .

On the Detection Results page, enter query conditions to search for detection results.

Supported query conditions include request ID, data ID, service, and returned label.

Note: By default, results are displayed in reverse chronological order, and a maximum of 50,000 entries are shown. The Content Moderation console provides query results from the last 30 days. We recommend that you store the data or logs from each API call so that you can analyze data and compile statistics over a longer period.
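Because the console keeps only 30 days of results, one simple way to follow the recommendation above is to append each result to a JSON Lines file (one JSON object per line). This is a minimal sketch; the field names are hypothetical and should be mapped from whatever your API client actually returns.

```python
import json
import time
from pathlib import Path
from typing import Optional


def record_result(log_path: str, request_id: str, data_id: str, service: str,
                  labels: list[str], timestamp: Optional[float] = None) -> None:
    """Append one moderation result to a JSON Lines file."""
    entry = {
        "requestId": request_id,  # hypothetical field names
        "dataId": data_id,
        "service": service,
        "labels": labels,
        "timestamp": time.time() if timestamp is None else timestamp,
    }
    with Path(log_path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(entry, ensure_ascii=False) + "\n")


def load_results(log_path: str, since: float = 0.0) -> list[dict]:
    """Read back stored results recorded at or after `since` (a Unix timestamp)."""
    results = []
    with Path(log_path).open(encoding="utf-8") as f:
        for line in f:
            entry = json.loads(line)
            if entry["timestamp"] >= since:
                results.append(entry)
    return results
```

JSON Lines keeps each write an independent append, so a crash mid-run loses at most the last line rather than corrupting the whole file.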

If you disagree with a moderation result, you can submit feedback by selecting False Positive or Missed Violation from the Feedback drop-down list in the Actions column for that result.

The Returned Label search item lets you search by label. You can enter multiple labels separated by commas (,).

For example, to search for all records that hit at least one risk label, set Returned Label to !=nonLabel.
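If you store results yourself, you can apply the same kind of label filter to data older than 30 days. This sketch is an approximation of the console search, not its documented behavior: it assumes each record is a dictionary with a `labels` list, that comma-separated labels are combined with OR, and that a `!=` term excludes records containing that label.

```python
def parse_label_filter(expr: str) -> tuple[set[str], set[str]]:
    """Split 'a, b, !=c' into labels to include ({a, b}) and to exclude ({c})."""
    include: set[str] = set()
    exclude: set[str] = set()
    for term in expr.split(","):
        term = term.strip()
        if not term:
            continue
        if term.startswith("!="):
            exclude.add(term[2:].strip())
        else:
            include.add(term)
    return include, exclude


def matches(labels: list[str], include: set[str], exclude: set[str]) -> bool:
    label_set = set(labels)
    if label_set & exclude:
        return False
    # No include terms means "everything that was not excluded".
    return not include or bool(label_set & include)


def filter_records(records: list[dict], expr: str) -> list[dict]:
    include, exclude = parse_label_filter(expr)
    return [r for r in records if matches(r["labels"], include, exclude)]
```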

You can click a specific image or click Details in the Actions column to view detailed information.

View statistics on recent image detections

You can view statistics on the volume of recent image detections to formulate further moderation or governance measures for specific image content.

Log on to the Content Moderation console.

In the navigation pane on the left, choose .

On the Usage Statistics page, select a time range to query or export usage data.

Query Usage: You can view usage statistics by day or by month. The statistical data is stored for one year. You can query data spanning up to two months.
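If you automate usage queries or exports, a pre-check of the date range against these two limits avoids rejected queries. A minimal sketch; interpreting "one year" as twelve calendar months back from today and "two months" as two calendar months from the start date are assumptions.

```python
import calendar
from datetime import date


def add_months(d: date, months: int) -> date:
    """Shift a date by whole calendar months, clamping to the month's last day."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def check_query_range(start: date, end: date, today: date) -> list[str]:
    """List reasons a usage query from start to end might be rejected."""
    problems = []
    if start > end:
        problems.append("start is after end")
    if start < add_months(today, -12):
        problems.append("start is older than the one-year retention")
    if end > add_months(start, 2):
        problems.append("range spans more than two months")
    return problems
```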

Export Usage: Click the export icon in the upper-right corner to export usage data by day or by month. The exported report is in Excel format and includes only entries that have call volume. The following table describes the fields in the exported report.

| Name | Description | Unit |
| --- | --- | --- |
| Account UID | The UID of the account that exported the data. | None |
| Service | Information about the called detection service. | None |
| Usage | The total number of calls. | Count |
| Date | The date the statistics were collected. | Day/Month |
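To compare services across an exported report, you can total the Usage column per service. The report is an Excel file; this sketch assumes you have saved the sheet as CSV and that its header row uses the names in the table above, both of which are assumptions about the file layout.

```python
import csv
import io
from collections import defaultdict


def usage_by_service(csv_text: str) -> dict[str, int]:
    """Sum the Usage column for each Service in an exported report saved as CSV."""
    totals: dict[str, int] = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        totals[row["Service"]] += int(row["Usage"])
    return dict(totals)
```

To read a file on disk, pass the result of `Path("report.csv").read_text(encoding="utf-8")`, where `report.csv` is a placeholder name.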

View service hit details: After the usage statistics are generated, the label hit details for each called service are displayed. The details are shown as a column chart of daily call volumes and a treemap chart of label proportions.

Column chart of daily call volumes: Shows the number of daily requests that hit risk labels and the number of requests that did not hit risk labels.

Treemap chart of label proportions: Shows the overall label hit situation, arranged in descending order of label proportion. Labels with the same prefix have the same background color.
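If you keep your own per-request labels, you can reproduce the idea behind the treemap locally, for example for periods the console no longer covers. A minimal sketch; pass only the labels that were hit, and note that treating the text before the first underscore as a label's prefix is an assumption, since the console's exact grouping rule is not stated.

```python
from collections import Counter


def label_proportions(hit_labels: list[str]) -> list[tuple[str, float]]:
    """Share of each label among all hits, in descending order of proportion."""
    counts = Counter(hit_labels)
    total = sum(counts.values())
    return sorted(((label, n / total) for label, n in counts.items()),
                  key=lambda item: item[1], reverse=True)


def prefix_of(label: str) -> str:
    """Assumed grouping key: the part of the label before the first underscore."""
    return label.split("_", 1)[0]


def proportions_by_prefix(hit_labels: list[str]) -> dict[str, float]:
    """Total share per label prefix, mirroring the treemap's color groups."""
    grouped: Counter = Counter()
    for label, share in label_proportions(hit_labels):
        grouped[prefix_of(label)] += share
    return dict(grouped)
```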