After you install an Application Real-Time Monitoring Service (ARMS) agent for a Java application, ARMS starts to monitor the application. You can view information about application instances, such as basic metrics, garbage collection (GC), and Java virtual machine (VM) memory on the Instance Monitoring tab of the application details page.

Prerequisites

Application Monitoring provides a new application details page for users who have enabled the new billing mode. For more information, see Billing (new).

If you have not enabled the new billing mode, you can click Switch to New Version on the Application List page to view the new application details page.

An ARMS agent is installed for the application. For more information, see Application Monitoring overview.

Procedure

Log on to the ARMS console. In the left-side navigation pane, choose .

On the Application List page, select a region in the top navigation bar and click the name of the application that you want to manage.

Note: Icons displayed in the Language column indicate the language in which the application is written:

Java application

Go application

Python application

Hyphen (-): application monitored in Managed Service for OpenTelemetry.

In the top navigation bar, click the Instance Monitoring tab.

Instance Monitoring tab

The Instance Monitoring tab provides dashboard data based on whether the application is deployed in an Elastic Compute Service (ECS) instance or a container cluster, and whether the environment is monitored in Managed Service for Prometheus.

If your application is deployed in a container cluster that is monitored in Managed Service for Prometheus, the data of Managed Service for Prometheus is displayed in the dashboard. For information about how to use Managed Service for Prometheus to monitor a container cluster, see Monitor an ACK cluster.

If the container cluster is not monitored in Managed Service for Prometheus, make sure that the ARMS agent version is 4.1.0 or later. Then, the data of Application Monitoring is displayed. For information about the ARMS agent for Java, see Release notes of the ARMS agent for Java.

ECS instance

In the Quick Filter section (icon 1), you can query charts and instances by host IP address.

In the trend charts section (icon 2), you can view the time series of basic metrics, GCs, and JVM memory.

Instance base monitoring: shows the trend charts of CPU utilization, memory usage, and disk usage in the specified time period. Switch between the average and maximum values from the drop-down list.

Instance GC: shows the trend charts of full GCs and young GCs in the specified time period. Switch between the number of GCs and the average duration.

JVM memory: shows the trend charts of used and maximum heap memory in the specified time period. Switch between heap memory and non-heap memory from the drop-down list.

Note: The data collected by Application Monitoring comes from JMX and excludes some non-heap memory areas of the Java process. Therefore, the sum of heap and non-heap memory displayed in Application Monitoring can differ greatly from the RES value reported by the top command. For more information, see JVM memory details.

Click the icon. In the dialog box that appears, you can view the metric data in a specific period of time or compare the metric data in the same period of time on different dates. You can click the icon to display the data in a column chart or a trend chart.

In the instance list section (icon 3), you can view instance information, such as the IP address, CPU utilization, memory usage, disk usage, load, number of full GCs, number of young GCs, heap memory usage, non-heap memory usage, and key metrics of each instance defined by the RED Method, including rate, errors, and duration.

In the instance list, you can perform the following operations:

Click an instance IP address to view the instance details. For more information, see the Instance details section.

Click Traces in the Actions column to view the trace details of an instance. For more information, see Trace Explorer.

Container cluster monitored in Managed Service for Prometheus

In the Quick Filter section (icon 1), you can query charts and instances by cluster ID or host IP address.

In the trend charts section (icon 2), you can view the time series of basic metrics, GCs, and JVM memory.

Instance base monitoring: shows the trend charts of CPU utilization and memory usage in the specified time period.

Instance GC: shows the trend charts of full GCs and young GCs in the specified time period. Switch between the number of GCs and the average duration.

JVM memory: shows the trend charts of used and maximum heap memory in the specified time period. Switch between heap memory and non-heap memory from the drop-down list.

Note: The data collected by Application Monitoring comes from JMX and excludes some non-heap memory areas of the Java process. Therefore, the sum of heap and non-heap memory displayed in Application Monitoring can differ greatly from the RES value reported by the top command. For more information, see JVM memory details.

Click the icon. In the dialog box that appears, you can view the metric data in a specific period of time or compare the metric data in the same period of time on different dates. You can click the icon to display the data in a column chart or a trend chart.

In the instance list section (icon 3), you can view instance information, such as the IP address, used CPU, requested CPU, maximum CPU, CPU utilization (%), used memory, requested memory, maximum memory, memory usage (%), used disk size, maximum disk size, disk usage (%), load, number of full GCs, number of young GCs, heap memory usage, non-heap memory usage, and key metrics of each instance defined by the RED Method, including rate, errors, and duration. Note that if the maximum CPU, memory, or disk size is not configured, a hyphen (-) is displayed in place of the CPU utilization, memory usage, or disk usage.

In the instance list, you can perform the following operations:

Click an instance IP address or click Details in the Actions column to view the instance details. For more information, see the Instance details section.

Click Traces in the Actions column to view the trace details of an instance. For more information, see Trace Explorer.

Container cluster (custom data collection)

In the Quick Filter section (icon 1), you can query charts and instances by host IP address.

In the trend charts section (icon 2), you can view the time series of basic metrics, GCs, and JVM memory.

Instance base monitoring: shows the trend charts of CPU utilization and memory usage in the specified time period.

Instance GC: shows the trend charts of full GCs and young GCs in the specified time period. Switch between the number of GCs and the average duration.

JVM memory: shows the trend charts of used and maximum heap memory in the specified time period. Switch between heap memory and non-heap memory from the drop-down list.

Note: The data collected by Application Monitoring comes from JMX and excludes some non-heap memory areas of the Java process. Therefore, the sum of heap and non-heap memory displayed in Application Monitoring can differ greatly from the RES value reported by the top command. For more information, see JVM memory details.

Click the icon. In the dialog box that appears, you can view the metric data in a specific period of time or compare the metric data in the same period of time on different dates. You can click the icon to display the data in a column chart or a trend chart.

In the instance list section (icon 3), you can view instance information, such as the IP address, CPU utilization, memory usage, load, number of full GCs, number of young GCs, heap memory usage, non-heap memory usage, and key metrics of each instance defined by the RED Method, including rate, errors, and duration.

In the instance list, you can perform the following operations:

Click an instance IP address or click Details in the Actions column to view the instance details. For more information, see the Instance details section.

Click Traces in the Actions column to view the trace details of an instance. For more information, see Trace Explorer.

Instance details

Overview

On the Overview tab, you can view the number of requests, number of errors, average duration, and slow calls.

JVM Monitoring

On the JVM Monitoring tab, you can view the GCs, memory, threads, and files of the instance.

Pooling Monitoring

On the Pooling Monitoring tab, you can view the metrics of the thread pool or connection pool used by the application, including the number of core threads, number of existing threads, maximum number of allowed threads, number of active threads, and maximum number of tasks allowed in the task queue.
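For readers who want to relate these columns to the JDK, the following is a minimal sketch that reads the same five values from a standard java.util.concurrent.ThreadPoolExecutor. The class name PoolMetricsSketch, the pool sizes, and the queue capacity are hypothetical, and this is not necessarily how the ARMS agent collects the data.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical helper: maps the pool metrics named above to JDK getters.
final class PoolMetricsSketch {

    /** Returns {core, existing, max, active, queueCapacity} for the given pool. */
    static long[] snapshot(ThreadPoolExecutor pool, int queueCapacity) {
        return new long[] {
            pool.getCorePoolSize(),    // number of core threads
            pool.getPoolSize(),        // number of existing threads
            pool.getMaximumPoolSize(), // maximum number of allowed threads
            pool.getActiveCount(),     // number of active threads (approximate)
            queueCapacity              // maximum number of tasks allowed in the queue
        };
    }

    public static void main(String[] args) {
        int queueCapacity = 100; // assumed value for illustration
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                2, 8, 60, TimeUnit.SECONDS, new ArrayBlockingQueue<>(queueCapacity));
        try {
            long[] m = snapshot(pool, queueCapacity);
            System.out.printf("core=%d existing=%d max=%d active=%d queueCapacity=%d%n",
                    m[0], m[1], m[2], m[3], m[4]);
        } finally {
            pool.shutdown();
        }
    }
}
```

The queue capacity is passed in explicitly because ThreadPoolExecutor does not expose it directly; only the remaining capacity of the queue can be queried.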

Host Monitoring

On the Host Monitoring tab, you can view the metrics about CPU utilization, memory usage, disk usage, load, traffic, and packets.

Container monitoring

Container cluster monitored in Managed Service for Prometheus

For information about how to connect your container cluster to Managed Service for Prometheus, see Create a Prometheus instance to monitor an ACK cluster.

On the Container monitoring tab, you can view the metrics about CPU utilization, memory usage, disk usage, load, traffic, and packets.

Container cluster (custom data collection)

If the container cluster is not monitored in Managed Service for Prometheus, make sure that the ARMS agent version is 4.1.0 or later. For information about ARMS agent for Java, see Release notes of the ARMS agent for Java.

On the Container monitoring tab, you can view the time series of CPU, memory, and network traffic.

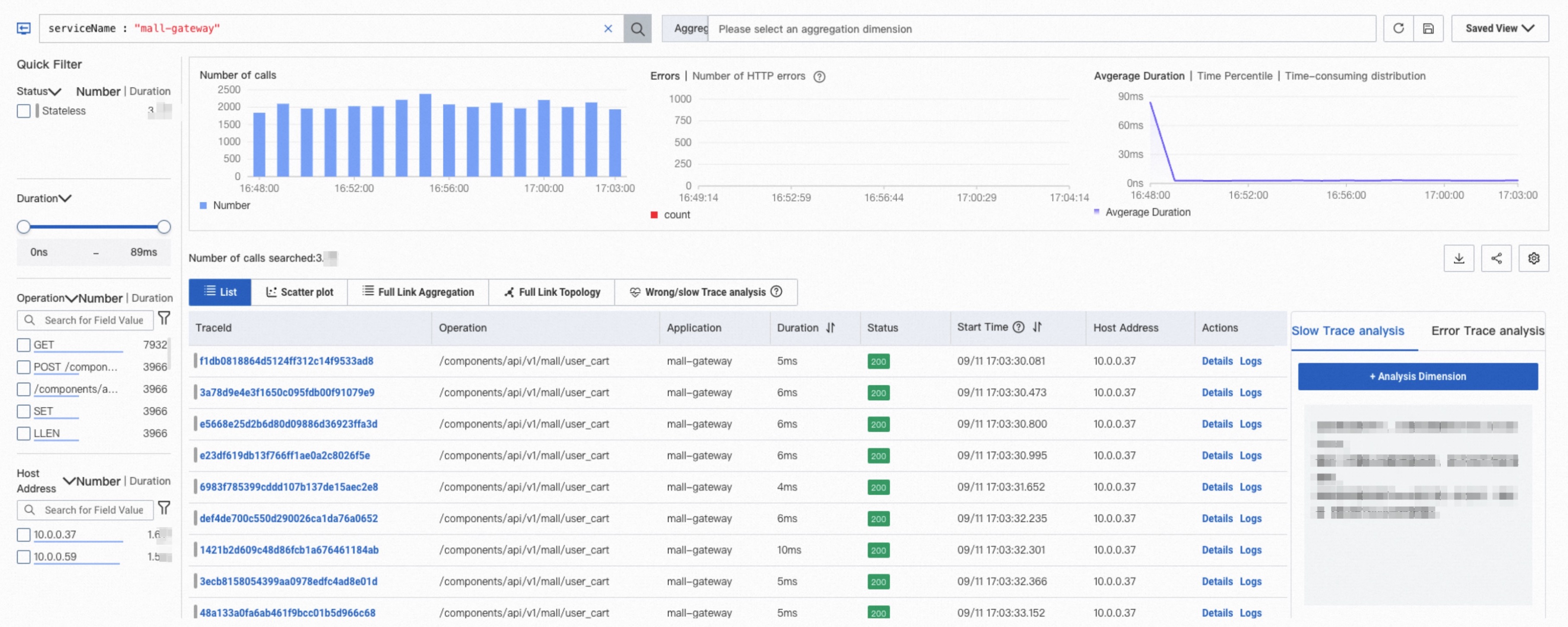

Trace Explorer

Trace Explorer allows you to combine filter conditions and aggregation dimensions for real-time analysis based on the stored full trace data. This can meet the custom diagnostics requirements in various scenarios. For more information, see Trace Explorer.

References

For information about Application Monitoring metrics, see Application Monitoring metrics.

FAQ

What are the relationships between application-level metrics and single-machine metrics?

RED metrics (rate, errors, and duration):

Request count, slow call count, and HTTP status code frequency: The application-level metrics are aggregated sums of the corresponding single-machine metrics.

Response time: The application-level metric represents the average response time across all machines.

JVM metrics:

GC frequency and duration: The application-level metrics are the sum of the single-machine metrics.

Heap memory usage and thread count: The application-level metrics take the maximum value observed among the single machines.

Thread pool and connection pool metrics

For all metrics in this category, the application-level data is the average of the single-machine metrics.

System metrics

For all system metrics, the application-level data takes the maximum value observed among the single machines.

SQL and NoSQL calls: Similar to RED metrics, frequency metrics are aggregated sums of the single-machine metrics. Other metrics represent the average values across all machines.

Exception metrics: All exception-related metrics at the application level are aggregated sums of the single-machine metrics.
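The three aggregation rules described in this answer (sum, average, and maximum) can be illustrated with a short sketch. The class name, method names, and sample values are hypothetical. The average shown is a simple unweighted mean, which is an assumption; the text does not state whether ARMS weights the average by request count.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical illustration of how single-machine values roll up to one application-level value.
final class AppLevelAggregation {

    /** Sum rule: request count, GC frequency and duration, exception counts. */
    static long sum(List<Long> perInstance) {
        return perInstance.stream().mapToLong(Long::longValue).sum();
    }

    /** Average rule: response time, thread pool and connection pool metrics. */
    static double average(List<Double> perInstance) {
        return perInstance.stream().mapToDouble(Double::doubleValue).average().orElse(0.0);
    }

    /** Maximum rule: heap memory usage, thread count, system metrics. */
    static double max(List<Double> perInstance) {
        return perInstance.stream().mapToDouble(Double::doubleValue).max().orElse(0.0);
    }

    public static void main(String[] args) {
        // Made-up values for three instances of one application.
        System.out.println("requests = " + sum(Arrays.asList(120L, 80L, 100L)));
        System.out.println("avg response time (ms) = " + average(Arrays.asList(10.0, 20.0, 30.0)));
        System.out.println("max CPU utilization (%) = " + max(Arrays.asList(35.5, 72.0, 41.2)));
    }
}
```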

Why is traffic between instances uneven?

The ARMS agent v3.x may miss some metric data if memory optimization is enabled. The agent v4.x has fixed the issue.

Why was an Undertow request counted twice?

ARMS agent versions earlier than v3.2.x execute implementation methods twice when DeferredResult is used. This issue is fixed in v3.2.x and later.

Why do the CPU/memory quotas of the container not match the actual settings of the pod?

Check whether your pod defines multiple containers. The CPU/memory quotas aggregate the total quotas of all containers within the pod.

Why are some system metrics missing or inaccurate? Why is CPU utilization 100%?

The ARMS agent earlier than v4.x does not support the collection of system metrics in Windows environments. The ARMS agent v4.x and later has fixed the issue.

Why was a Full GC triggered when the application was started?

This may be because the metaspace size is not configured. The default metaspace size is 20 MB. As the application loads classes during startup, the metaspace may need to expand beyond this size, and the expansion can trigger a Full GC. You can set the initial and maximum metaspace sizes through the -XX:MetaspaceSize and -XX:MaxMetaspaceSize parameters.
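To check whether a running JVM was actually started with these parameters, you can inspect its input arguments. The following is a minimal sketch that uses the standard RuntimeMXBean; the class and method names are hypothetical.

```java
import java.lang.management.ManagementFactory;
import java.util.List;

// Hypothetical helper: reports whether the metaspace flags were passed to this JVM.
final class MetaspaceFlagCheck {

    /** Returns true if any JVM argument starts with the given prefix. */
    static boolean hasFlag(List<String> jvmArgs, String prefix) {
        return jvmArgs.stream().anyMatch(arg -> arg.startsWith(prefix));
    }

    public static void main(String[] args) {
        // Arguments passed to the JVM itself, not to the main method.
        List<String> jvmArgs = ManagementFactory.getRuntimeMXBean().getInputArguments();
        System.out.println("-XX:MetaspaceSize set:    " + hasFlag(jvmArgs, "-XX:MetaspaceSize="));
        System.out.println("-XX:MaxMetaspaceSize set: " + hasFlag(jvmArgs, "-XX:MaxMetaspaceSize="));
    }
}
```

If both lines print false, the JVM is running with the default metaspace settings.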

How is the total VM stack size calculated?

If the default thread stack size (1 MB) has been modified through the -Xss parameter, the total VM stack size is calculated by multiplying the number of threads by the thread stack size. Otherwise, the total VM stack size is calculated by multiplying the number of threads by 1 MB.
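The calculation above amounts to a single multiplication. The following sketch reproduces it with the live thread count reported by the JDK; the class name and the 1 MB constant are taken from the description above, and the code is an illustration rather than the agent's implementation.

```java
import java.lang.management.ManagementFactory;

// Hypothetical illustration of: total VM stack size = thread count x thread stack size.
final class StackSizeEstimate {

    /** Default thread stack size assumed in the text (1 MB). */
    static final long DEFAULT_STACK_BYTES = 1024L * 1024L;

    static long totalStackBytes(int threadCount, long stackBytesPerThread) {
        return (long) threadCount * stackBytesPerThread;
    }

    public static void main(String[] args) {
        int threads = ManagementFactory.getThreadMXBean().getThreadCount();
        // Replace DEFAULT_STACK_BYTES with the -Xss value if one is configured.
        long total = totalStackBytes(threads, DEFAULT_STACK_BYTES);
        System.out.println(threads + " threads x 1 MB = " + total / (1024 * 1024) + " MB");
    }
}
```

For example, 200 threads with -Xss512k give 200 x 512 KB = 100 MB.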

How are JVM metrics obtained?

The JVM metrics of ARMS are obtained through the following standard JDK interfaces:

Memory metrics

ManagementFactory.getMemoryPoolMXBeans

java.lang.management.MemoryPoolMXBean#getUsage

GC metrics

ManagementFactory.getGarbageCollectorMXBeans

java.lang.management.GarbageCollectorMXBean#getCollectionCount

java.lang.management.GarbageCollectorMXBean#getCollectionTime
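To compare the values in the console with what the JVM itself reports, you can call the same interfaces directly. The following is a minimal sketch; the class and method names are hypothetical, and how the agent aggregates or labels these values is not shown here.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryUsage;

// Hypothetical reader for the JDK interfaces listed above.
final class JvmMetricsSketch {

    /** Sums used bytes over all memory pools (heap and non-heap). */
    static long totalUsedBytes() {
        long total = 0;
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            MemoryUsage usage = pool.getUsage(); // null if the pool is no longer valid
            if (usage != null) {
                total += usage.getUsed();
            }
        }
        return total;
    }

    /** Sums collection counts over all collectors; -1 means "undefined" and is skipped. */
    static long totalGcCount() {
        long total = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long count = gc.getCollectionCount();
            if (count > 0) {
                total += count;
            }
        }
        return total;
    }

    public static void main(String[] args) {
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            System.out.println(pool.getName() + " (" + pool.getType() + "): " + pool.getUsage());
        }
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            System.out.println(gc.getName() + ": count=" + gc.getCollectionCount()
                    + ", timeMs=" + gc.getCollectionTime());
        }
    }
}
```

The pool and collector names that are printed depend on the JDK version and the garbage collector in use.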

Why is the maximum JVM heap memory -1?

-1 indicates that the maximum heap memory size is not set.

Why is the used JVM heap memory not equal to the maximum heap memory?

In the context of the JVM memory allocation mechanism, the -Xms parameter sets the initial size of the heap memory. With the application running and the remaining heap memory becoming insufficient, the JVM will increase the heap size incrementally until it reaches the maximum heap size specified by the -Xmx parameter. If the total allocated memory is less than the maximum value, this indicates that the heap has not yet expanded to its full capacity. The used memory represents the actual amount of heap memory currently being utilized by the application.
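The difference between used, currently allocated (committed), and maximum heap memory can be observed with the standard MemoryMXBean. The following is a minimal sketch; the class and helper names are hypothetical. It also shows the -1 case, which the JDK uses to report an undefined maximum.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryUsage;

// Hypothetical illustration of used vs. committed vs. maximum heap memory.
final class HeapUsageSketch {

    /** Formats a byte count in MB; the JDK reports -1 when the value is undefined. */
    static String describe(long bytes) {
        return bytes < 0 ? "undefined" : (bytes / (1024L * 1024L)) + " MB";
    }

    public static void main(String[] args) {
        MemoryUsage heap = ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
        System.out.println("init      (-Xms): " + describe(heap.getInit()));
        System.out.println("used            : " + describe(heap.getUsed()));
        System.out.println("committed       : " + describe(heap.getCommitted()));
        System.out.println("max       (-Xmx): " + describe(heap.getMax()));
    }
}
```

Within one MemoryUsage snapshot, used never exceeds committed, and committed never exceeds max when max is defined.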

Why does JVM GC frequency increase?

The increasing frequency of JVM GC may be due to the use of the default GC algorithm in JDK 8, Parallel GC. This algorithm enables -XX:+UseAdaptiveSizePolicy by default, which automatically adjusts heap size parameters, including the young generation size and SurvivorRatio, to meet GC pause time targets. When Young GC occurs frequently, it may dynamically reduce the size of the Survivor spaces. As a result, objects in the Survivor spaces can easily be promoted to the Old generation, leading to rapid growth in Old generation space and, consequently, an increased frequency of Full GCs. For more information, see Java documentation.

Why is thread pool or connection pool monitoring missing data?

In the Advanced Settings section of the Custom Configuration tab, check whether thread pool or connection pool monitoring is enabled.

Check whether the framework of the application is supported. For more information, see Thread pool and connection pool monitoring.

Why is the maximum number of connections obtained from the HikariCP connection pool incorrect?

This is caused by a bug in ARMS agent versions earlier than v3.2.x. The issue is fixed in v3.2.x and later.

Why are the values in the pool monitoring metrics displayed as decimals?

As the ARMS agent collects data every 15 seconds, it gathers four data points per minute. The ARMS console displays the average value over a specified time period based on this collected data. Suppose that the four data points collected in one minute are 0, 0, 1, and 0. The theoretical average is 0.25.
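The arithmetic in this example can be reproduced with a few lines of code. The class and method names are hypothetical.

```java
// Hypothetical illustration: averaging four 15-second samples collected in one minute.
final class PoolAverage {

    static double average(int[] samples) {
        if (samples.length == 0) {
            return 0.0;
        }
        long sum = 0;
        for (int sample : samples) {
            sum += sample;
        }
        return (double) sum / samples.length;
    }

    public static void main(String[] args) {
        int[] activeThreads = {0, 0, 1, 0}; // the four data points from the example above
        System.out.println(average(activeThreads)); // prints 0.25
    }
}
```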

Why do thread pool or connection pool metrics not increase when the thread pool or connection pool is fully utilized as shown in the logs or other records?

It may be because of a mismatch between the metric sampling time and the time when the pool was fully utilized. As ARMS automatically collects thread pool and connection pool status metrics every 15 seconds, instantaneous spikes that occur within this interval may not be captured.
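A small simulation makes the effect concrete. In the following sketch, the per-second values and the spike are made up, and the sampler is assumed to read the pool at seconds 0, 15, 30, and 45; the actual sampling offsets of the agent are not documented here.

```java
import java.util.Arrays;

// Hypothetical simulation: a short spike between two 15-second samples goes unnoticed.
final class SamplingMiss {

    /** Highest value seen when reading only every intervalSeconds-th second. */
    static int maxSampled(int[] perSecond, int intervalSeconds) {
        int max = 0;
        for (int t = 0; t < perSecond.length; t += intervalSeconds) {
            max = Math.max(max, perSecond[t]);
        }
        return max;
    }

    /** Highest value over every second. */
    static int trueMax(int[] perSecond) {
        return Arrays.stream(perSecond).max().orElse(0);
    }

    /** One minute of active-thread counts: baseline 2, spike of 50 during seconds 20 to 24. */
    static int[] exampleMinute() {
        int[] active = new int[60];
        Arrays.fill(active, 2);
        for (int t = 20; t < 25; t++) {
            active[t] = 50;
        }
        return active;
    }

    public static void main(String[] args) {
        int[] active = exampleMinute();
        System.out.println("true max    = " + trueMax(active));        // 50
        System.out.println("sampled max = " + maxSampled(active, 15)); // 2
    }
}
```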

Why is the maximum number of threads of the thread pool not as expected? Why is the maximum number of threads 2.1 billion?

The maximum thread pool size is obtained by directly calling the method to get the maximum number of threads from the thread pool object, which is generally correct. If it does not meet your expectations, it may be because the user-defined maximum thread count has not taken effect.

If the maximum thread count is 2.1 billion, this usually indicates a scheduled thread pool. In scheduled thread pools, the default maximum thread count is set to Integer.MAX_VALUE, as shown in the following figure.
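The JDK behavior described above can be verified with a few lines of code. The class and method names in this sketch are hypothetical; ScheduledThreadPoolExecutor and getMaximumPoolSize are standard JDK APIs.

```java
import java.util.concurrent.ScheduledThreadPoolExecutor;

// Hypothetical check: a scheduled thread pool reports Integer.MAX_VALUE as its maximum size.
final class ScheduledPoolMax {

    static int maxThreadsOfScheduledPool(int corePoolSize) {
        ScheduledThreadPoolExecutor pool = new ScheduledThreadPoolExecutor(corePoolSize);
        try {
            return pool.getMaximumPoolSize();
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        // Prints 2147483647 (about 2.1 billion), regardless of the core pool size.
        System.out.println(maxThreadsOfScheduledPool(4));
    }
}
```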

What do I do if the Tomcat thread pool metrics do not meet expectations?

ARMS thread pool metrics are obtained directly by calling the corresponding methods of the thread pool object, which generally does not result in errors. If several dimensions do not match (such as maximum thread count, active thread count, or core thread count), first confirm whether your application provides Tomcat services through multiple ports (for example, components like spring-actuator also open a port to expose metrics). In such cases, due to the dimension convergence mechanism, the ARMS agent may combine data from multiple thread pools during statistics. If the data is combined, you can upgrade the agent version to 4.1.10 or later and modify the Thread Pool Thread Name Pattern Extraction Policy parameter to Replace ending numeric characters * in the Pooled Monitoring Configuration section of the Custom Configurations tab.

Why does a particular thread pool or connection pool have no data before a certain point in time?

Data for the thread pool or connection pool was generated when a scheduled task, triggered by the business logic, initialized. Before the scheduled task was triggered, it had no corresponding thread pool or connection pool data. This issue similarly applies to traffic-related data; for example, the number of requests to an interface would only show data after the first request has been made. If no activity or initialization has occurred prior to that point, no data will be recorded.

Why does the HTTPClient connection pool have no data?

The ARMS agent V4.x and later no longer supports connection pool monitoring for frameworks such as okHttp3 and Apache HTTPClient. This decision was made considering that these frameworks create a connection pool object for each external domain name accessed by the current application. When accessing many external domains, this can lead to significant overhead and pose stability risks. Therefore, support for monitoring these connection pools has been discontinued.

Why can container monitoring data not be seen after integrating an application in the ACK environment?

This may be because the Alibaba Cloud account used to create the ACK cluster and the Alibaba Cloud account used to integrate ARMS are different. Currently, ARMS only supports displaying container monitoring data within the same Alibaba Cloud account.

What do I do if the handle open rate is not 0, but the number of file handles is 0?

Confirm whether your application environment is JDK 9+ and the ARMS agent version is 3.x. If so, this issue arose due to compatibility problems with the metric collection logic in this environment. This problem has been fixed in the agent V4.2.2 and later. We recommend that you upgrade the agent.

Why are the actual physical memory usage of the JVM process and the heap memory usage shown in JVM monitoring significantly different?

This may be because the JVM process has a large amount of off-heap memory usage. Currently, ARMS can only monitor the in-heap memory of the JVM process and some portions of off-heap memory usage. For information about which parts of the JVM memory usage can be monitored by ARMS and which cannot, see JVM memory details. If the off-heap memory usage is high, you can refer to the "Non-heap memory" section to perform your own analysis.