This topic describes the memory metrics of a Java virtual machine (JVM) that are supported by the Application Monitoring sub-service of Application Real-Time Monitoring Service (ARMS).

Memory areas of a Java process

The following figure shows the memory areas of a Java process.

Because the JVM is complex, the figure shows only the main memory areas.

How ARMS obtains JVM memory details

The ARMS agent uses the MemoryMXBean interface provided by the Java Development Kit (JDK) to obtain memory details while the JVM is running. Because of the limitations of MemoryMXBean, ARMS JVM memory monitoring cannot cover all memory areas occupied by a Java process. For more information, see Interface MemoryMXBean.
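The following is a minimal sketch of reading the same kind of data through the standard java.lang.management API. It is not the ARMS agent's code; the class name MemoryMXBeanDemo and the output format are hypothetical. It prints the used, committed, and maximum sizes that MemoryMXBean reports for heap and non-heap memory.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;
import java.lang.management.MemoryUsage;

public class MemoryMXBeanDemo {
    private static final long MIB = 1024L * 1024L;

    /** Formats one MemoryUsage snapshot in MiB; getMax() returns -1 when no maximum is defined. */
    static String describe(String label, MemoryUsage usage) {
        String max = usage.getMax() < 0 ? "undefined" : (usage.getMax() / MIB) + " MiB";
        return String.format("%s: used=%d MiB, committed=%d MiB, max=%s",
                label, usage.getUsed() / MIB, usage.getCommitted() / MIB, max);
    }

    public static void main(String[] args) {
        MemoryMXBean memory = ManagementFactory.getMemoryMXBean();
        // Heap: memory for Java objects.
        System.out.println(describe("Heap", memory.getHeapMemoryUsage()));
        // Non-heap: the JVM-managed non-heap pools (for example metaspace and code cache).
        System.out.println(describe("Non-heap", memory.getNonHeapMemoryUsage()));
    }
}
```

Memory that MemoryMXBean does not track, such as thread stacks, never appears in either line of this output.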

Heap memory

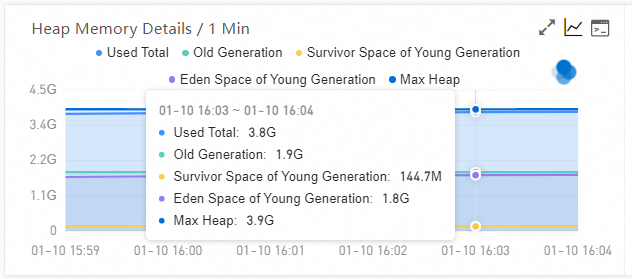

The Java heap is the core memory area of a Java process: objects are allocated in it, and garbage collection (GC) reclaims memory from it. Depending on the garbage collector in use, the maximum heap size reported by ARMS may be slightly smaller than the upper limit that you configure. Take the Parallel Garbage Collector (ParallelGC) as an example. If you set the -XX:+UseParallelGC -Xms4096m -Xmx4096m parameters, the reported maximum heap size (3.8 GB) is slightly smaller than the configured size (4 GB), as shown in the following figure. This is because MemoryMXBean does not include data from the From Space and To Space (survivor) areas.
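To compare the configured limit with what MemoryMXBean reports in your own environment, a sketch like the following can help. It assumes the limit is passed as an explicit -Xmx option (it does not evaluate -XX:MaxRAMPercentage), and the class HeapMaxCheck and its helper parseXmx are hypothetical.

```java
import java.lang.management.ManagementFactory;
import java.util.List;

public class HeapMaxCheck {
    /** Parses an option such as "-Xmx4096m" or "-Xmx4g" into bytes; returns -1 for anything else. */
    static long parseXmx(String arg) {
        if (!arg.startsWith("-Xmx") || arg.length() == 4) {
            return -1;
        }
        String value = arg.substring(4).toLowerCase();
        char unit = value.charAt(value.length() - 1);
        long multiplier;
        switch (unit) {
            case 'k': multiplier = 1L << 10; break;
            case 'm': multiplier = 1L << 20; break;
            case 'g': multiplier = 1L << 30; break;
            case 't': multiplier = 1L << 40; break;
            default:  multiplier = 1L; // no suffix: the value is in bytes
        }
        String digits = multiplier == 1L ? value : value.substring(0, value.length() - 1);
        return Long.parseLong(digits) * multiplier;
    }

    public static void main(String[] args) {
        List<String> jvmArgs = ManagementFactory.getRuntimeMXBean().getInputArguments();
        long configured = -1;
        for (String arg : jvmArgs) {
            long parsed = parseXmx(arg);
            if (parsed > 0) {
                configured = parsed; // keep the last -Xmx on the command line
            }
        }
        long reported = ManagementFactory.getMemoryMXBean().getHeapMemoryUsage().getMax();
        System.out.printf("Configured -Xmx:       %d bytes%n", configured);
        System.out.printf("MemoryMXBean heap max: %d bytes%n", reported);
        if (configured > 0 && reported > 0) {
            System.out.printf("Difference: %.1f MiB%n", (configured - reported) / (1024.0 * 1024.0));
        }
    }
}
```

For example, start it with java -XX:+UseParallelGC -Xms4096m -Xmx4096m HeapMaxCheck. The exact difference depends on the JDK version and collector settings, so treat the output as indicative only.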

In general, if the Garbage-First (G1) garbage collector is used, the maximum heap size reported by ARMS matches the -Xmx or -XX:MaxRAMPercentage value that you set. If ParallelGC, the Concurrent Mark Sweep (CMS) collector, or the Serial garbage collector is used, the reported value deviates slightly.
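Because the deviation depends on the collector, it can help to confirm which collector a running JVM uses. The sketch below maps the names returned by GarbageCollectorMXBean to collector families. The name-to-collector mapping reflects common HotSpot bean names and is an assumption that may vary across JDK versions and vendors; CollectorCheck and its helpers are hypothetical.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

public class CollectorCheck {
    /** Maps a HotSpot GarbageCollectorMXBean name to the collector it belongs to (assumed names). */
    static String collectorFamily(String beanName) {
        if (beanName.startsWith("G1 ")) {
            return "G1"; // for example "G1 Young Generation" and "G1 Old Generation"
        }
        switch (beanName) {
            case "PS Scavenge":
            case "PS MarkSweep":
                return "ParallelGC";
            case "ParNew":
            case "ConcurrentMarkSweep":
                return "CMS";
            case "Copy":
            case "MarkSweepCompact":
                return "SerialGC";
            default:
                return "Other"; // collectors not discussed in this topic
        }
    }

    /** Per the text above: ParallelGC, CMS, and SerialGC show a slight deviation; G1 does not. */
    static boolean heapMaxMayDeviate(String family) {
        return family.equals("ParallelGC") || family.equals("CMS") || family.equals("SerialGC");
    }

    public static void main(String[] args) {
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            String family = collectorFamily(gc.getName());
            System.out.printf("%-22s -> %-10s heap max may deviate from -Xmx: %b%n",
                    gc.getName(), family, heapMaxMayDeviate(family));
        }
    }
}
```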

Metaspace

Metaspace stores class metadata, such as class structures, methods, and fields. Metaspace usage is generally stable.

Non-heap memory

The non-heap memory supported by ARMS includes the following areas: metaspace, compressed class space, and code cache. Because of the limitations of MemoryMXBean, ARMS does not cover all non-heap memory areas. For example, memory used by VM thread stacks and by Java Native Interface (JNI) code is not covered.

Metaspace: stores class metadata. In JDK 8 and later, you can set the initial metaspace threshold (the usage at which the first metaspace-triggered GC occurs) and the maximum metaspace size by configuring -XX:MetaspaceSize=N and -XX:MaxMetaspaceSize=N.

Compressed class space: stores the metadata of loaded classes. As a special JVM memory area, the compressed class space reduces the memory usage of Java applications by letting objects reference their class metadata through 32-bit compressed class pointers instead of 64-bit pointers. You can set its size with the JVM startup parameter -XX:CompressedClassSpaceSize. In JDK 11, the default compressed class space size is 1 GB.

Code cache: stores native code generated by the JVM. Native code is generated for several purposes, including the interpreter loop, JNI stubs, and Java methods compiled by the just-in-time (JIT) compiler. Code generated by JIT compilation occupies most of the code cache. You can set the initial size and maximum size of the code cache with the JVM startup parameters -XX:InitialCodeCacheSize and -XX:ReservedCodeCacheSize.
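The three areas above are exposed as separate non-heap memory pools through MemoryPoolMXBean. The sketch below lists them. The pool names it matches (Metaspace, Compressed Class Space, Code Cache, and the CodeHeap pools that JDK 9 and later can use for a segmented code cache) are HotSpot naming conventions, assumed here rather than guaranteed by the API; the class NonHeapPools is hypothetical.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;

public class NonHeapPools {
    /** Maps a HotSpot non-heap memory pool name to one of the areas described above. */
    static String area(String poolName) {
        if (poolName.equals("Metaspace")) {
            return "Metaspace";
        }
        if (poolName.equals("Compressed Class Space")) {
            return "Compressed class space";
        }
        // JDK 8 has a single "Code Cache" pool; JDK 9+ may split it into "CodeHeap '...'" pools.
        if (poolName.equals("Code Cache") || poolName.startsWith("CodeHeap")) {
            return "Code cache";
        }
        return "Other";
    }

    public static void main(String[] args) {
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            if (pool.getType() != MemoryType.NON_HEAP) {
                continue; // skip heap pools such as Eden and Old Gen
            }
            MemoryUsage usage = pool.getUsage(); // null if the pool is no longer valid
            if (usage == null) {
                continue;
            }
            String max = usage.getMax() < 0 ? "undefined" : (usage.getMax() / 1024) + " KiB";
            System.out.printf("%-24s %-34s used=%d KiB max=%s%n",
                    area(pool.getName()), pool.getName(), usage.getUsed() / 1024, max);
        }
    }
}
```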

Direct buffer

The Java direct buffer is a special buffer that is allocated directly in operating system memory rather than in the JVM heap. Direct buffers can speed up I/O operations because they avoid copying data between the Java heap and native memory, which makes them efficient for processing large amounts of data. Applications that perform a large number of I/O operations therefore tend to use more direct buffer memory.
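The JDK reports direct buffer usage through BufferPoolMXBean, in a pool named direct. The following sketch, with a hypothetical class name, reads that pool before and after allocating a direct buffer with ByteBuffer.allocateDirect, so you can watch direct memory grow outside the heap.

```java
import java.lang.management.BufferPoolMXBean;
import java.lang.management.ManagementFactory;
import java.nio.ByteBuffer;

public class DirectBufferDemo {
    /** Returns the bytes used by the "direct" buffer pool, or -1 if the pool is not found. */
    static long directMemoryUsed() {
        for (BufferPoolMXBean pool : ManagementFactory.getPlatformMXBeans(BufferPoolMXBean.class)) {
            if ("direct".equals(pool.getName())) {
                return pool.getMemoryUsed();
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        long before = directMemoryUsed();
        // Allocates 16 MiB in operating system memory, not in the Java heap.
        ByteBuffer buffer = ByteBuffer.allocateDirect(16 * 1024 * 1024);
        long after = directMemoryUsed();
        System.out.printf("direct pool used: before=%d bytes, after=%d bytes (buffer capacity %d)%n",
                before, after, buffer.capacity());
    }
}
```

The heap usage reported by MemoryMXBean barely changes in this example, which is why direct buffers are tracked separately.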

Heap memory leak analysis

ARMS provides analysis capabilities for heap memory leaks. Use the JVM heap memory monitoring feature to check whether heap memory usage grows slowly over time. If heap memory usage keeps increasing over a long period, use the memory snapshot or continuous profiling feature provided by ARMS to troubleshoot the heap memory leak.
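ARMS performs this trend analysis in the console. As a rough local illustration only, the sketch below samples heap usage measured right after the most recent GC (MemoryPoolMXBean.getCollectionUsage) and flags a steady rise. The leak heuristic in looksLikeLeak, its 10% threshold, and the sampling interval are hypothetical simplifications, not ARMS rules; real heap usage fluctuates, so a production check would sample over hours and tolerate noise.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;

public class HeapGrowthCheck {
    /** Sums the heap usage each heap pool had right after its most recent GC. */
    static long heapUsedAfterLastGc() {
        long total = 0;
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            if (pool.getType() == MemoryType.HEAP) {
                MemoryUsage usage = pool.getCollectionUsage(); // null if not supported
                if (usage != null) {
                    total += usage.getUsed();
                }
            }
        }
        return total;
    }

    /**
     * Hypothetical heuristic: flags a leak suspect when post-GC usage never drops
     * between samples and the last sample is at least minGrowth above the first.
     */
    static boolean looksLikeLeak(long[] samples, double minGrowth) {
        if (samples.length < 2 || samples[0] <= 0) {
            return false; // not enough data, or no GC has happened yet
        }
        for (int i = 1; i < samples.length; i++) {
            if (samples[i] < samples[i - 1]) {
                return false;
            }
        }
        return samples[samples.length - 1] >= samples[0] * (1.0 + minGrowth);
    }

    public static void main(String[] args) throws InterruptedException {
        long[] samples = new long[5];
        for (int i = 0; i < samples.length; i++) {
            samples[i] = heapUsedAfterLastGc();
            Thread.sleep(1000); // a real check would sample over hours, not seconds
        }
        System.out.println("Leak suspect: " + looksLikeLeak(samples, 0.10));
    }
}
```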

Non-heap memory leak analysis

If heap memory usage is relatively stable but the overall memory usage of the application keeps increasing, non-heap memory may be leaking. ARMS does not provide non-heap memory analysis. You can use the Native Memory Tracking (NMT) tool to track the native memory that the JVM allocates. For more information, see the Oracle documentation on Native Memory Tracking.

NMT requires familiarity with JVM internals and incurs a performance overhead of 5% to 10% on applications. We recommend that you evaluate the impact on online applications before you use the tool.
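NMT must be enabled at JVM startup with -XX:NativeMemoryTracking=summary (or detail), and its report is then retrieved with jcmd <pid> VM.native_memory summary. A quick first step is therefore to check whether the running JVM has NMT turned on. The sketch below reads the NativeMemoryTracking option through HotSpotDiagnosticMXBean, a HotSpot-specific interface that may be absent on other JVMs; the class NmtStatus is hypothetical.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.ManagementFactory;

public class NmtStatus {
    /** Returns the NativeMemoryTracking mode ("off", "summary", or "detail"), or "unknown". */
    static String nmtMode() {
        try {
            HotSpotDiagnosticMXBean diagnostic =
                    ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
            if (diagnostic == null) {
                return "unknown";
            }
            return diagnostic.getVMOption("NativeMemoryTracking").getValue();
        } catch (IllegalArgumentException e) {
            // The bean or the option is not available on this JVM.
            return "unknown";
        }
    }

    public static void main(String[] args) {
        String mode = nmtMode();
        System.out.println("NativeMemoryTracking: " + mode);
        if ("off".equals(mode)) {
            System.out.println("Restart the JVM with -XX:NativeMemoryTracking=summary to enable NMT.");
        }
    }
}
```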

Memory FAQ

Q: Why does the sum of heap and non-heap memory displayed in the Application Monitoring module of the ARMS console differ greatly from the resident memory size (RES, in KiB) returned by the top command?

A: The data collected by Application Monitoring comes from Java Management Extensions (JMX), which excludes VM thread stacks, native thread stacks, and memory outside the JVM. Therefore, the JVM memory data provided by Application Monitoring differs from the RES returned by the top command.

Q: Why does the sum of heap and non-heap memory displayed in the Application Monitoring module of the ARMS console differ greatly from the memory data provided by Managed Service for Prometheus and Managed Service for Grafana?

A: The data collected by Application Monitoring comes from JMX, whereas the memory data provided by Managed Service for Grafana comes from container metrics queried by using Prometheus Query Language (PromQL). These metrics are generally named container_memory_working_set_bytes, which counts the sum of the Resident Set Size (RSS) and the active page cache of the container's memory cgroup.
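The two answers above come down to the same gap: JMX sees only part of the process. The following Linux-only sketch makes that gap visible by reading the process RSS from /proc/self/status (the same figure that top shows as RES) and comparing it with the committed heap and non-heap memory reported through JMX. The class RssVsJmx is hypothetical, and because committed memory is not necessarily resident, the difference is only indicative.

```java
import java.io.IOException;
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.List;

public class RssVsJmx {
    /** Extracts VmRSS (in KiB) from the lines of a /proc/<pid>/status file, or -1 if absent. */
    static long parseVmRssKiB(List<String> statusLines) {
        for (String line : statusLines) {
            if (line.startsWith("VmRSS:")) {
                // Line format: "VmRSS:    123456 kB"
                String[] parts = line.substring("VmRSS:".length()).trim().split("\\s+");
                return Long.parseLong(parts[0]);
            }
        }
        return -1;
    }

    public static void main(String[] args) throws IOException {
        // Linux only: /proc/self/status describes the current process.
        long rssKiB = parseVmRssKiB(Files.readAllLines(Paths.get("/proc/self/status")));
        MemoryMXBean memory = ManagementFactory.getMemoryMXBean();
        long jmxKiB = (memory.getHeapMemoryUsage().getCommitted()
                + memory.getNonHeapMemoryUsage().getCommitted()) / 1024;
        System.out.printf("Process RSS (top RES):         %d KiB%n", rssKiB);
        System.out.printf("JMX heap + non-heap committed: %d KiB%n", jmxKiB);
        System.out.printf("RSS minus JMX committed:       %d KiB%n", rssKiB - jmxKiB);
    }
}
```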

Q: A pod is restarted by the out-of-memory (OOM) killer. How do I use Application Monitoring to troubleshoot the problem?

A: Application Monitoring makes it easy to troubleshoot capacity planning issues for heap memory and direct buffers. However, the memory data that Application Monitoring obtains from JMX does not include the full RSS of the JVM process. Therefore, use the Prometheus monitoring ecosystem of Kubernetes to troubleshoot OOM kills. In addition, check the following two aspects:

Does the pod run only a single process?

Check whether other processes in the pod consume memory.

Does memory leak outside the memory managed by the JVM?

For example, glibc may cause memory leaks.
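For the first aspect, the following Linux-only sketch lists the RSS of every process visible in /proc. It assumes the container has its own PID namespace with /proc mounted for it, as is typical for Kubernetes pods, so the list covers only the processes in the pod. The class ProcessRssList and its helper statusField are hypothetical.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;

public class ProcessRssList {
    /** Returns the trimmed value of a "Key:\tvalue" line in /proc/<pid>/status, or null if absent. */
    static String statusField(List<String> lines, String key) {
        String prefix = key + ":";
        for (String line : lines) {
            if (line.startsWith(prefix)) {
                return line.substring(prefix.length()).trim();
            }
        }
        return null;
    }

    public static void main(String[] args) throws IOException {
        // Directories in /proc whose names start with a digit correspond to process IDs.
        try (DirectoryStream<Path> pids = Files.newDirectoryStream(Paths.get("/proc"), "[0-9]*")) {
            for (Path pidDir : pids) {
                try {
                    List<String> status = Files.readAllLines(pidDir.resolve("status"));
                    String rss = statusField(status, "VmRSS"); // absent for kernel threads
                    if (rss != null) {
                        System.out.printf("%-8s %-20s %s%n",
                                pidDir.getFileName(), statusField(status, "Name"), rss);
                    }
                } catch (IOException e) {
                    // The process may have exited after it was listed; skip it.
                }
            }
        }
    }
}
```

If the JVM is not the only process with a large RSS, the pod's memory limit must account for the others as well.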