This topic provides answers to some frequently asked questions about the backup center.

Index

General-purpose operation

If you use kubectl to work with the backup center, upgrade the migrate-controller component to the latest version before you troubleshoot issues. The upgrade does not affect existing backups. For more information about how to upgrade the component, see Manage components.

When the status of a backup task, StorageClass conversion task, or restore task is Failed or PartiallyFailed, you can use one of the following methods to obtain error messages.

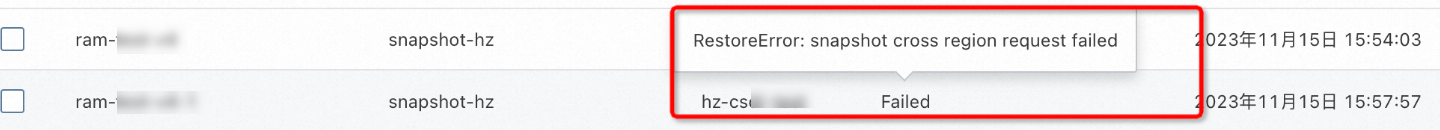

Move the pointer over Failed or PartiallyFailed in the Status column to view a brief error message, such as:

RestoreError: snapshot cross region request failed.

To obtain a more detailed error message, run the command that matches the task type to query the events of the task. The events contain messages such as:

RestoreError: process advancedvolumesnapshot failed avs: snapshot-hz, err: transition canceled with error: the ECS-snapshot related ram policy is missing.

Backup task:

kubectl -n csdr describe applicationbackup <backup-name>

StorageClass conversion task:

kubectl -n csdr describe converttosnapshot <backup-name>

Restore task:

kubectl -n csdr describe applicationrestore <restore-name>
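The three describe commands above differ only in the resource kind. The following shell functions are a minimal sketch that wraps them. The function names and the KUBECTL override variable are hypothetical conveniences and are not part of the backup center. The resource kinds come from the commands above.

```shell
# Hypothetical helper: set KUBECTL to another command for a dry run.
KUBECTL=${KUBECTL:-kubectl}

# task_kind <backup|convert|restore>: print the csdr resource kind that
# stores a task of the given type (kinds taken from the commands above).
task_kind() {
  case "$1" in
    backup) echo applicationbackup ;;
    convert) echo converttosnapshot ;;
    restore) echo applicationrestore ;;
    *) echo "unknown task type: $1" >&2; return 1 ;;
  esac
}

# describe_task <backup|convert|restore> <task-name>: show the task events.
describe_task() {
  local kind
  kind=$(task_kind "$1") || return 1
  "$KUBECTL" -n csdr describe "$kind" "$2"
}

# Usage: describe_task restore <restore-name>
```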

The console displays "The working component is abnormal" or "Failed to retrieve data"

Issue description

The console displays "The working component is abnormal" or "Failed to retrieve data".

Causes

The installation of the backup center component is abnormal.

Solution

Check whether nodes that belong to the cluster exist. If cluster nodes do not exist, the backup center component cannot be installed.

Check whether the cluster uses FlexVolume. If the cluster uses FlexVolume, switch to CSI. For more information, see What do I do if the migrate-controller component in a cluster that uses FlexVolume cannot be launched?.

If you use kubectl to work with the backup center, check whether the YAML configurations are correct. For more information, see Back up and restore cluster applications by using kubectl.

If your cluster is an ACK dedicated cluster or a registered cluster, check whether the required permissions are configured. For more information, see ACK dedicated clusters and Registered clusters.

Check whether the csdr-controller and csdr-velero Deployments in the csdr namespace fail to be deployed due to resource or scheduling limits. If they do, resolve the resource shortage or scheduling constraint that blocks them.
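To see which of the two Deployments is affected, the following sketch parses the table printed by kubectl -n csdr get deploy csdr-controller csdr-velero. It lists any Deployment whose READY count is below the desired count. The function name is hypothetical. For an affected Deployment, describe its pods and look for FailedScheduling or insufficient-resource events.

```shell
# unready_deployments: read `kubectl get deploy` table output on stdin and
# print each Deployment whose READY column (ready/desired) is not complete.
unready_deployments() {
  awk 'NR > 1 { split($2, r, "/"); if (r[1] != r[2]) print $1 }'
}

# Usage: kubectl -n csdr get deploy csdr-controller csdr-velero | unready_deployments
```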

The console displays the following error: The name is already used. Change the name and try again

Issue description

When you create or delete a backup task, StorageClass conversion task, or restore task, the console displays "The name is already used. Change the name and try again".

Causes

When you delete a task in the console, a deleterequest resource is created in the cluster. The working component performs a series of deletion operations instead of deleting only the corresponding backup resource. The same applies to command-line operations. For more information, see Back up and restore by using the command-line tool.

If the deletion is performed incorrectly or an error occurs while the deleterequest resource is processed, some resources in the cluster are not deleted. In this case, an error is returned that indicates that a resource with the same name already exists.

Solution

Delete the resources with the same name as prompted. For example, if the error message deleterequests.csdr.alibabacloud.com "xxxxx-dbr" already exists is returned, run the following command to delete the resource:

kubectl -n csdr delete deleterequests xxxxx-dbr

Then, create a task with a new name.
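The following sketch extracts the resource type and name from an error message like this one. It prints the matching delete command for you to review and does not delete anything. The function names are hypothetical, and the parsing assumes the message format shown above.

```shell
# conflicting_resource: read an "already exists" error on stdin and print
# "<resource> <name>", for example "deleterequests xxxxx-dbr".
conflicting_resource() {
  grep -o '[a-z0-9-]*\.[a-z0-9.-]* "[^"]*" already exists' |
    sed 's/^\([a-z0-9-]*\)\.[^ ]* "\([^"]*\)".*/\1 \2/'
}

# delete_command_for: print (not run) the kubectl command that deletes the
# leftover resource, so that you can check it before running it.
delete_command_for() {
  conflicting_resource | while read -r res name; do
    printf 'kubectl -n csdr delete %s %s\n' "$res" "$name"
  done
}
```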

When you restore an application across clusters, you cannot select an existing backup

Issue description

When you restore an application across clusters, you cannot select a backup task for restoration.

Causes

Cause 1: The current cluster is not associated with the backup vault that stores the backup, which means that the backup vault is not initialized.

The system initializes the backup vault and synchronizes the basic information about the backup vault, including the OSS bucket information, to the cluster. Then, the system initializes the backup files from the backup vault in the cluster. Only after the initialization is complete can you select backup files from the backup vault for restoration in the current cluster.

Cause 2: The initialization of the backup vault fails. The status of the backuplocation resource in the current cluster is Unavailable.

Cause 3: The backup task is not complete or the backup task fails.

Solution

Solution 1:

On the Create Restore Task page, click Initialize Vault on the right side of Backup Vault. After the backup vault is initialized, select the task that you want to restore.

Solution 2:

Run the following command to check the status of the backuplocation resource:

kubectl get -n csdr backuplocation <backuplocation-name>

Expected results:

NAME                    PHASE       LAST VALIDATED   AGE
<backuplocation-name>   Available   3m36s            38m

If the status is Unavailable, see the solution in What do I do if the status of a task is Failed and the "VaultError: xxx" error is returned?.
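When several backup vaults exist, the following sketch filters the output of kubectl get -n csdr backuplocation for vaults that are not Available. It assumes the column layout of the expected results above. The function name is hypothetical.

```shell
# unavailable_locations: read `kubectl get -n csdr backuplocation` output on
# stdin and print every backup vault whose PHASE column is not Available.
unavailable_locations() {
  awk 'NR > 1 && $2 != "Available" { print $1 }'
}

# Usage: kubectl get -n csdr backuplocation | unavailable_locations
```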

Solution 3:

In the console of the backup cluster, check whether the backup task succeeded, that is, whether its status is Completed. If the status of the backup task is abnormal, troubleshoot the issue. For more information, see Index.

The console displays the following error: The service role required by the component is not authorized

Issue description

When you access the application backup console, the console displays the following error: The service role required by the component is not authorized. The error code is AddonRoleNotAuthorized.

Causes

The cloud resource authentication logic of the migrate-controller component in ACK managed clusters is optimized in migrate-controller 1.8.0. When you install or upgrade the component to this version for the first time, the Alibaba Cloud account must complete cloud resource authorization.

Solution

If you are logged on with an Alibaba Cloud account, click Authorize to complete the authorization.

If you are logged on with a RAM user, click Copy Authorization Link and send the link to the Alibaba Cloud account to complete the authorization.

The console displays the following error: Your account does not have the required cluster RBAC permissions for this operation

Issue description

When you access the application backup console, the console displays the following error: Your account does not have the required cluster RBAC permissions for this operation. Contact the Alibaba Cloud account or permission administrator for authorization. The error code is APISERVER.403.

Causes

The console interacts with the API server to create backup and restore tasks and obtain task status in real time. The default permission lists for cluster O&M engineers and developers do not include some permissions required by the backup center component. The Alibaba Cloud account or permission administrator must grant the required permissions.

Solution

For more information about how to use custom RBAC to restrict operations on cluster resources, see Use custom RBAC to restrict operations on cluster resources. Grant the following ClusterRole permissions to the backup center operator:

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: csdr-console
rules:
- apiGroups: ["csdr.alibabacloud.com","velero.io"]
  resources: ['*']
  verbs: ["get","create","delete","update","patch","watch","list","deletecollection"]
- apiGroups: [""]
  resources: ["namespaces"]
  verbs: ["get","list"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses"]
  verbs: ["get","list"]

The backup center component fails to be upgraded or uninstalled

Issue description

The backup center component fails to be upgraded or uninstalled, and the csdr namespace remains in the Terminating state.

Causes

The backup center component exits abnormally during runtime, which leaves tasks in the InProgress state in the csdr namespace. The finalizers field of the cluster resources corresponding to these tasks may prevent the resources from being deleted. As a result, the csdr namespace remains in the Terminating state.

Solution

Run the following command to check why the csdr namespace is in the Terminating state:

kubectl describe ns csdr

Confirm that the stuck tasks are no longer needed and delete their finalizers.

After you confirm that the csdr namespace is deleted:

For component upgrade, reinstall the migrate-controller component of the backup center.

For component uninstallation, no further action is required. The component is uninstalled.
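The finalizer step above can be sketched as follows. The sketch uses a JSON merge patch that sets metadata.finalizers to null, which is a common Kubernetes way to drop finalizers. Pass one of the task kinds used in this topic (applicationbackup, converttosnapshot, or applicationrestore). The namespace conditions shown by kubectl describe ns csdr usually indicate which resources still hold finalizers. The function name and the KUBECTL override are hypothetical. Removing finalizers skips any cleanup that the component would otherwise perform, so use this sketch only for tasks that you have confirmed are no longer needed.

```shell
# Hypothetical helper: set KUBECTL to another command for a dry run.
KUBECTL=${KUBECTL:-kubectl}

# clear_finalizers <kind> <name>: remove every finalizer from one stuck task
# in the csdr namespace by applying a JSON merge patch.
clear_finalizers() {
  "$KUBECTL" -n csdr patch "$1" "$2" --type=merge \
    -p '{"metadata":{"finalizers":null}}'
}

# Usage: clear_finalizers applicationbackup <backup-name>
```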

What do I do if the status of a task is Failed and the "internal error" error is returned?

Issue description

The status of a task is Failed and the "internal error" error is returned.

Causes

The component or the underlying cloud service encounters an unexpected error. For example, the cloud service is not available in the current region.

Solution

If the error message is "HBR backup/restore internal error", log on to the Cloud Backup console to check whether container backup is available.

For other internal errors, submit a ticket.

What do I do if the status of a task is Failed and the "create cluster resources timeout" error is returned?

Issue description

The status of a task is Failed and the "create cluster resources timeout" error is returned.

Causes

During StorageClass conversion or restoration, temporary pods, PVCs, and PVs may be created. If these resources remain unavailable for a long period of time after they are created, the "create cluster resources timeout" error is returned.

Solution

Run the following command to locate the abnormal resource and find the cause based on the events:

kubectl -n csdr describe <applicationbackup/converttosnapshot/applicationrestore> <task-name>

Sample output:

……wait for created tmp pvc default/demo-pvc-for-convert202311151045 for convertion bound time out

The output indicates that the PVC used for StorageClass conversion has remained in a state other than Bound for a long period of time. The PVC is in the default namespace and is named demo-pvc-for-convert202311151045.

Run the following command to check the status of the PVC and identify the cause of the anomaly:

kubectl -n default describe pvc demo-pvc-for-convert202311151045

The following list describes common causes of this issue in the backup center. For more information, see Troubleshoot storage issues.

The cluster or node resources are insufficient or abnormal.

The restore cluster does not have the corresponding StorageClass. Use the StorageClass conversion feature to select an existing StorageClass in the restore cluster for restoration.

The underlying storage associated with the StorageClass is unavailable. For example, the specified disk type is not supported in the current zone.

The CNFS associated with alibabacloud-cnfs-nas is abnormal. For more information, see Manage NAS file systems by using CNFS (recommended).

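When you restore resources in a multi-zone cluster, you select a StorageClass whose volumeBindingMode is set to Immediate. In this case, the volume can be provisioned in a zone other than the zone of the node where the pod is scheduled. Select a StorageClass whose volumeBindingMode is set to WaitForFirstConsumer instead.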
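To go directly from the task events to the temporary PVC, the following sketch extracts the namespace and name from a "wait for created tmp pvc ... time out" event and describes that PVC. It assumes that the event text keeps the format shown in the sample output above. The function names and the KUBECTL override are hypothetical.

```shell
# Hypothetical helper: set KUBECTL to another command for a dry run.
KUBECTL=${KUBECTL:-kubectl}

# tmp_pvc_from_event: read task events on stdin and print "<namespace> <name>"
# for the temporary PVC named in a "wait for created tmp pvc ..." message.
tmp_pvc_from_event() {
  sed -n 's|.*tmp pvc \([^/ ]*\)/\([^ ]*\) .*|\1 \2|p'
}

# describe_tmp_pvcs: describe each temporary PVC found in the events.
describe_tmp_pvcs() {
  tmp_pvc_from_event | while read -r ns name; do
    "$KUBECTL" -n "$ns" describe pvc "$name"
  done
}

# Usage: kubectl -n csdr describe converttosnapshot <task-name> | describe_tmp_pvcs
```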

What do I do if the status of a task is Failed and the "addon status is abnormal" error is returned?

Issue description

The status of a task is Failed and the "addon status is abnormal" error is returned.

Causes

The components in the csdr namespace are abnormal.

Solution

See Cause 1 and solution: The components in the csdr namespace are abnormal.

What do I do if the status of a task is Failed and the "VaultError: xxx" error is returned?

Issue description

The status of a backup, restore, or StorageClass conversion task is Failed and the VaultError: backup vault is unavailable: xxx error is returned.

Causes

The specified OSS bucket does not exist.

The cluster does not have permissions to access OSS.

The network of the OSS bucket is unreachable.

Solution

Log on to the OSS console and check whether the OSS bucket associated with the backup vault exists.

If the OSS bucket does not exist, create a bucket and rebind it. For more information, see Create buckets.

Check whether the cluster has permissions to access OSS.

ACK Pro clusters: You do not need to configure OSS permissions. Make sure that the name of the OSS bucket associated with the backup vault in the cluster starts with cnfs-oss-.

ACK dedicated clusters and registered clusters: You must configure OSS permissions. For more information, see Install the backup service component and configure permissions.

For ACK managed clusters for which the component is installed or upgraded to v1.8.0 or later without using the console, OSS-related permissions may be missing. You can run the following command to check whether the cluster has permissions to access OSS:

kubectl get secret -n kube-system | grep addon.aliyuncsmanagedbackuprestorerole.token

Expected results:

addon.aliyuncsmanagedbackuprestorerole.token   Opaque   1      62d

If the secret is returned as in the preceding expected output, the cluster has permissions to access OSS. You only need to specify an OSS bucket whose name starts with cnfs-oss- for the cluster.

If the returned content is different from the expected output, use one of the following methods to grant permissions:

Configure OSS permissions for ACK dedicated clusters and registered clusters. For more information, see Install the backup service component and configure permissions.

Use an Alibaba Cloud account to click Authorize to complete the authorization. You need to perform this operation only once for an Alibaba Cloud account.

Note: You cannot modify a backup vault, and you cannot create backup vaults that use the same name. You cannot associate a backup vault with an OSS bucket whose name does not start with cnfs-oss-. If you have associated a backup vault with an OSS bucket whose name does not start with cnfs-oss-, create a backup vault with a different name and associate it with an OSS bucket whose name meets the naming requirement.
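The two checks above, the bucket name prefix and the presence of the addon.aliyuncsmanagedbackuprestorerole.token secret, can be scripted as follows. This is a sketch. The function names are hypothetical, and the prefix check covers only the naming rule stated in this topic. It does not check whether the bucket exists or is reachable.

```shell
# valid_vault_bucket <bucket-name>: succeed only if the bucket name starts with
# the cnfs-oss- prefix required for backup vaults in this topic.
valid_vault_bucket() {
  case "$1" in
    cnfs-oss-*) return 0 ;;
    *) return 1 ;;
  esac
}

# has_backup_role_token: read `kubectl get secret -n kube-system` output on
# stdin and succeed if the addon.aliyuncsmanagedbackuprestorerole.token secret
# is listed.
has_backup_role_token() {
  awk '$1 == "addon.aliyuncsmanagedbackuprestorerole.token" { found = 1 }
    END { exit !found }'
}

# Usage:
#   valid_vault_bucket cnfs-oss-my-backup && echo "name OK"
#   kubectl get secret -n kube-system | has_backup_role_token && echo "token present"
```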

Run the following command to check the network configuration of the cluster:

kubectl get backuplocation <backuplocation-name> -n csdr -o yaml | grep network

The output is similar to the following content:

network: internal

If the value of network is internal, the backup vault accesses the OSS bucket over the internal network. If the value of network is public, the backup vault accesses the OSS bucket over the Internet. If the backup vault accesses the OSS bucket over the Internet and the specific error is a timeout error, check whether the cluster can access the Internet. For more information about how to enable Internet access for a cluster, see Enable Internet access for a cluster.

In the following scenarios, the backup vault must access the OSS bucket over the Internet:

The cluster and the OSS bucket are deployed in different regions.

The current cluster is an ACK Edge cluster.

The current cluster is a registered cluster and is not connected to a VPC by using Cloud Enterprise Network (CEN), Express Connect, or VPN Gateway. Alternatively, the current cluster is connected to a VPC but no route is configured to point to the OSS internal endpoint of the region. You must configure a route to point to the OSS internal endpoint of the region.

For more information about how to connect an on-premises data center to a VPC, see Connection methods.

For more information about the mapping between OSS internal endpoints and virtual IP address (VIP) CIDR blocks, see Mapping between OSS internal endpoints and VIP CIDR blocks.

If the backup vault must access the OSS bucket over the Internet, run the following commands to change the access method of the OSS bucket to Internet access. In the commands, <backuplocation-name> specifies the name of the backup vault, and <region-id> specifies the region where the OSS bucket is deployed, such as cn-hangzhou.

kubectl patch -n csdr backuplocation/<backuplocation-name> --type='json' -p '[{"op":"add","path":"/spec/config","value":{"network":"public","region":"<region-id>"}}]'
kubectl patch -n csdr backupstoragelocation/<backuplocation-name> --type='json' -p '[{"op":"add","path":"/spec/config","value":{"network":"public","region":"<region-id>"}}]'
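The network check and the two patch commands can be combined as shown in the following sketch. The JSON patch is the one shown above. The function names and the KUBECTL override are hypothetical. Run use_public_endpoint only if vault_network prints internal and one of the Internet-access scenarios listed above applies.

```shell
# Hypothetical helper: set KUBECTL to another command for a dry run.
KUBECTL=${KUBECTL:-kubectl}

# vault_network: read `kubectl get backuplocation <name> -n csdr -o yaml` on
# stdin and print the value of the first network field.
vault_network() {
  awk '$1 == "network:" { print $2; exit }'
}

# use_public_endpoint <backuplocation-name> <region-id>: apply the patch shown
# above to both the backuplocation and the backupstoragelocation resource.
use_public_endpoint() {
  local patch kind
  patch="[{\"op\":\"add\",\"path\":\"/spec/config\",\"value\":{\"network\":\"public\",\"region\":\"$2\"}}]"
  for kind in backuplocation backupstoragelocation; do
    "$KUBECTL" patch -n csdr "$kind/$1" --type=json -p "$patch" || return 1
  done
}

# Usage:
#   kubectl get backuplocation <backuplocation-name> -n csdr -o yaml | vault_network
#   use_public_endpoint <backuplocation-name> cn-hangzhou
```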

What do I do if the status of a task is Failed and the "HBRError: check HBR vault error" error is returned?

Issue description

The status of a backup, restore, or StorageClass conversion task is Failed and the "HBRError: check HBR vault error" error is returned.

Causes

Cloud Backup is not activated or the authorization is abnormal.

Solution

Make sure that Cloud Backup is activated. For more information, see Activate Cloud Backup.

If your cluster is deployed in China (Ulanqab), China (Heyuan), or China (Guangzhou), you must grant API Gateway permissions after you activate Cloud Backup. For more information, see (Optional) Step 3: Grant API Gateway permissions to Cloud Backup.

If your cluster is an ACK dedicated cluster or a registered cluster, make sure that the required RAM permissions are granted to Cloud Backup. For more information about the permissions and authorization methods, see Install the migrate-controller backup service component and configure permissions.

What do I do if the status of a task is Failed and the "hbr task finished with unexpected status: FAILED, errMsg ClientNotExist" error is returned?

Issue description

The status of a backup, restore, or StorageClass conversion task is Failed and the hbr task finished with unexpected status: FAILED, errMsg ClientNotExist error is returned.

Causes

The Cloud Backup client is not deployed correctly on the corresponding node: the pod of the hbr-client DaemonSet on that node in the csdr namespace is abnormal.

Solution

Run the following command to check whether hbr-client is abnormal in the cluster:

kubectl -n csdr get pod -l app=hbr-client

If a pod is in an abnormal state, first check whether the pod IP addresses, memory, or CPU resources are insufficient. If the pod is in the CrashLoopBackOff state, run the following command to query its logs:

kubectl -n csdr logs -p <hbr-client-pod-name>

If the output contains "SDKError:\n StatusCode: 403\n Code: MagpieBridgeSlrNotExist\n Message: code: 403, AliyunServiceRoleForHbrMagpieBridge doesn't exist, please create this role. ", grant permissions to Cloud Backup as described in (Optional) Step 3: Grant API Gateway permissions to Cloud Backup.

If the log output contains other types of SDK errors, submit a ticket.
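The log check above can be repeated for every hbr-client pod with the following sketch. It searches the previous container logs of each pod for the MagpieBridgeSlrNotExist code quoted above. The function names and the KUBECTL override are hypothetical.

```shell
# Hypothetical helper: set KUBECTL to another command for a dry run.
KUBECTL=${KUBECTL:-kubectl}

# needs_magpie_bridge_role: succeed if the logs on stdin contain the
# MagpieBridgeSlrNotExist error code quoted above.
needs_magpie_bridge_role() {
  grep -q 'MagpieBridgeSlrNotExist'
}

# check_hbr_client_logs: scan the previous-container logs of every hbr-client
# pod and report the pods that hit the missing service-linked role error.
check_hbr_client_logs() {
  local pod
  for pod in $("$KUBECTL" -n csdr get pod -l app=hbr-client -o name); do
    if "$KUBECTL" -n csdr logs -p "$pod" 2>/dev/null | needs_magpie_bridge_role; then
      echo "$pod: AliyunServiceRoleForHbrMagpieBridge is missing"
    fi
  done
}
```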

What do I do if a task remains in the InProgress state for a long period of time?

Cause 1 and solution: The components in the csdr namespace are abnormal

Check the status of the components and identify the cause of the anomaly.

Run the following command to check whether the components in the csdr namespace are restarting or cannot be started:

kubectl get pod -n csdr

Run the following command to identify the cause of the restart or startup failure:

kubectl describe pod <pod-name> -n csdr
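The following sketch narrows the output of kubectl get pod -n csdr to the pods that are worth describing. It prints pods that are not Running or Completed, or that have restarted. It also checks kubectl describe pod output for OOMKilled, the termination reason that Kubernetes reports for out-of-memory restarts. The column positions assume the default kubectl get pod table. The function names are hypothetical.

```shell
# abnormal_pods: read `kubectl get pod -n csdr` output on stdin and print each
# pod that is not Running or Completed, or that has restarted at least once.
abnormal_pods() {
  awk 'NR > 1 && (($3 != "Running" && $3 != "Completed") || $4 + 0 > 0) { print $1 }'
}

# was_oom_killed: succeed if `kubectl describe pod` output on stdin reports
# that a container was terminated with the OOMKilled reason.
was_oom_killed() {
  grep -q 'Reason:[[:space:]]*OOMKilled'
}

# Usage:
#   kubectl get pod -n csdr | abnormal_pods
#   kubectl describe pod <pod-name> -n csdr | was_oom_killed && echo "OOM restart"
```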

If the cause is an OOM restart

The OOM error may occur during restoration in the csdr-velero-*** pod when many applications run in the restore cluster, for example, dozens of production namespaces. By default, Velero uses an informer cache to accelerate restoration, and this cache consumes memory.

If the number of cluster resources to be restored is small, or if you can accept some performance impact during restoration, run the following command to disable the informer cache:

kubectl -n kube-system edit deploy migrate-controller

Add the --disable-informer-cache=true parameter to the args of the migrate-controller container:

- name: migrate-controller
  args:
  - --disable-informer-cache=true

In other cases, or if you do not want to reduce the speed of cluster resource restoration, run the following command to raise the memory limit of the corresponding Deployment in the csdr namespace. For a pod named csdr-controller-***, <deploy-name> is csdr-controller. For a pod named csdr-velero-***, <deploy-name> is csdr-velero. Replace <container-name> with the name of the container whose limit you want to change, and replace <new-limit-memory> with the new limit in Kubernetes quantity format, such as 2Gi.

kubectl -n csdr patch deploy <deploy-name> -p '{"spec":{"template":{"spec":{"containers":[{"name":"<container-name>","resources":{"limits":{"memory":"<new-limit-memory>"}}}]}}}}'
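As an alternative to the patch command, kubectl set resources can change the memory limit without hand-written JSON. The following sketch maps a pod name to its Deployment by using the naming rule above. kubectl set resources changes every container in the pod template unless you add -c <container-name>. The function names, the KUBECTL override, and the 2Gi value in the usage line are illustrative assumptions and are not recommended settings.

```shell
# Hypothetical helper: set KUBECTL to another command for a dry run.
KUBECTL=${KUBECTL:-kubectl}

# deploy_for_pod <pod-name>: map a csdr pod name to the Deployment that owns it,
# following the naming rule above (csdr-controller-*** -> csdr-controller).
deploy_for_pod() {
  case "$1" in
    csdr-controller-*) echo csdr-controller ;;
    csdr-velero-*) echo csdr-velero ;;
    *) return 1 ;;
  esac
}

# raise_memory_limit <pod-name> <new-limit>: set the memory limit on the owning
# Deployment in the csdr namespace (applies to all of its containers).
raise_memory_limit() {
  local deploy
  deploy=$(deploy_for_pod "$1") || return 1
  "$KUBECTL" -n csdr set resources deployment "$deploy" --limits=memory="$2"
}

# Usage: raise_memory_limit csdr-velero-<suffix> 2Gi
```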

If the cause is a startup failure because Cloud Backup (HBR) permissions are not configured

Confirm that Cloud Backup is activated for the cluster.

If Cloud Backup is not activated, activate it. For more information, see Cloud Backup.

If Cloud Backup is activated, proceed with the next step.

For ACK dedicated clusters and registered clusters, confirm that Cloud Backup permissions are configured.

If the permissions are not configured, configure them. For more information, see Install backup service components and configure permissions.

If Cloud Backup permissions are configured, proceed with the next step.

Run the following command to check whether the token required by the Cloud Backup client exists:

kubectl -n csdr describe pod <hbr-client-***>

If the event error couldn't find key HBR_TOKEN is returned, the token is missing. Perform the following steps:

Run the following command to query the node where hbr-client-*** is deployed:

kubectl get pod <hbr-client-***> -n csdr -o wide

Run the following command to change the csdr.alibabacloud.com/agent-enable label of the corresponding node from true to false:

kubectl label node <node-name> csdr.alibabacloud.com/agent-enable=false --overwrite

Important: When you perform backup and restoration again, the token is automatically created and hbr-client is started.

If you copy a token from another cluster to the current cluster, the hbr-client started with this token does not become active. Delete the copied token and the hbr-client-*** pod started with it, and then perform the preceding steps.
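The token check and the node relabeling can be sketched as follows. The node name is read from the pod's spec.nodeName field, which is the value shown in the NODE column of kubectl get pod -o wide. The function names and the KUBECTL override are hypothetical.

```shell
# Hypothetical helper: set KUBECTL to another command for a dry run.
KUBECTL=${KUBECTL:-kubectl}

# hbr_token_missing: succeed if `kubectl describe pod` output on stdin
# contains the "couldn't find key HBR_TOKEN" event error.
hbr_token_missing() {
  grep -q "couldn't find key HBR_TOKEN"
}

# disable_agent_on_pod_node <hbr-client-pod-name>: look up the node that runs
# the pod and set csdr.alibabacloud.com/agent-enable=false on that node.
disable_agent_on_pod_node() {
  local node
  node=$("$KUBECTL" get pod "$1" -n csdr -o jsonpath='{.spec.nodeName}') || return 1
  [ -n "$node" ] || return 1
  "$KUBECTL" label node "$node" csdr.alibabacloud.com/agent-enable=false --overwrite
}

# Usage:
#   kubectl -n csdr describe pod <hbr-client-pod> | hbr_token_missing &&
#     disable_agent_on_pod_node <hbr-client-pod>
```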

Cause 2 and solution: Cluster snapshot permissions are not configured during disk data backup

When you back up the disk volume that is mounted to your application, if the backup task remains in the InProgress state for a long period of time, run the following command to query the newly created VolumeSnapshot resources in the cluster:

kubectl get volumesnapshot -n <backup-namespace>

Sample output:

NAME READYTOUSE SOURCEPVC SOURCESNAPSHOTCONTENT ...
<volumesnapshot-name> true <volumesnapshotcontent-name> ...

If all VolumeSnapshot resources remain in the READYTOUSE false state for a long time, perform the following steps to resolve the issue.

Log on to the ECS console and check whether the disk snapshot service is enabled.

If the service is not enabled: Enable the disk snapshot service in the corresponding region. For more information, see Enable snapshots.

If the feature is enabled, proceed with the next step.

Check whether the CSI component of the cluster runs as normal:

kubectl -n kube-system get pod -l app=csi-provisioner

Check whether the permissions for using disk snapshots are configured.

ACK managed clusters

Log on to the RAM console as a RAM user who has administrative rights.

In the left-side navigation pane, go to the Roles page.

On the Roles page, search for AliyunCSManagedBackupRestoreRole in the search box and check whether the authorization policy of this role includes the following policy content.

{
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "hbr:CreateVault", "hbr:CreateBackupJob", "hbr:DescribeVaults", "hbr:DescribeBackupJobs2",
        "hbr:DescribeRestoreJobs", "hbr:SearchHistoricalSnapshots", "hbr:CreateRestoreJob",
        "hbr:AddContainerCluster", "hbr:DescribeContainerCluster", "hbr:CancelBackupJob",
        "hbr:CancelRestoreJob", "hbr:DescribeRestoreJobs2"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "ecs:CreateSnapshot", "ecs:DeleteSnapshot", "ecs:DescribeSnapshotGroups",
        "ecs:CreateAutoSnapshotPolicy", "ecs:ApplyAutoSnapshotPolicy", "ecs:CancelAutoSnapshotPolicy",
        "ecs:DeleteAutoSnapshotPolicy", "ecs:DescribeAutoSnapshotPolicyEX", "ecs:ModifyAutoSnapshotPolicyEx",
        "ecs:DescribeSnapshots", "ecs:DescribeInstances", "ecs:CopySnapshot",
        "ecs:CreateSnapshotGroup", "ecs:DeleteSnapshotGroup"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "oss:PutObject", "oss:GetObject", "oss:DeleteObject", "oss:GetBucket",
        "oss:ListObjects", "oss:ListBuckets", "oss:GetBucketStat"
      ],
      "Resource": "acs:oss:*:*:cnfs-oss*"
    }
  ],
  "Version": "1"
}

If the AliyunCSManagedBackupRestoreRole role is missing, go to the Resource Access Management quick authorization page to authorize the AliyunCSManagedBackupRestoreRole role.

If the AliyunCSManagedBackupRestoreRole role exists but the policy content is incomplete, grant the above permissions to this role. For more information, see Create a custom policy and Grant permissions to a RAM role.

ACK dedicated clusters

Log on to the Container Service console. In the left-side navigation pane, click Clusters.

On the Clusters page, find the cluster that you want to manage and click its name. In the left-side pane, click Cluster Information.

On the Cluster Information page, find the Master RAM Role parameter, and then click the link on the right.

On the Permission Management tab, check whether the disk snapshot permissions are properly configured.

If the k8sMasterRolePolicy-Csi-*** permission policy does not exist or does not include the following permissions, grant the following disk snapshot permission policy to the Master RAM role. For more information, see Create a custom policy and Grant permissions to a RAM role.

{
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "hbr:CreateVault", "hbr:CreateBackupJob", "hbr:DescribeVaults", "hbr:DescribeBackupJobs2",
        "hbr:DescribeRestoreJobs", "hbr:SearchHistoricalSnapshots", "hbr:CreateRestoreJob",
        "hbr:AddContainerCluster", "hbr:DescribeContainerCluster", "hbr:CancelBackupJob",
        "hbr:CancelRestoreJob", "hbr:DescribeRestoreJobs2"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "ecs:CreateSnapshot", "ecs:DeleteSnapshot", "ecs:DescribeSnapshotGroups",
        "ecs:CreateAutoSnapshotPolicy", "ecs:ApplyAutoSnapshotPolicy", "ecs:CancelAutoSnapshotPolicy",
        "ecs:DeleteAutoSnapshotPolicy", "ecs:DescribeAutoSnapshotPolicyEX", "ecs:ModifyAutoSnapshotPolicyEx",
        "ecs:DescribeSnapshots", "ecs:DescribeInstances", "ecs:CopySnapshot",
        "ecs:CreateSnapshotGroup", "ecs:DeleteSnapshotGroup"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "oss:PutObject", "oss:GetObject", "oss:DeleteObject", "oss:GetBucket",
        "oss:ListObjects", "oss:ListBuckets", "oss:GetBucketStat"
      ],
      "Resource": "acs:oss:*:*:cnfs-oss*"
    }
  ],
  "Version": "1"
}

If the issue persists after the permissions are configured, submit a ticket for assistance.

Registered clusters

The disk snapshot feature can be used only for registered clusters where all nodes are Alibaba Cloud ECS instances. Check whether the relevant permissions were granted when the CSI storage plugin was installed. For more information, see Configure RAM permissions for CSI components.
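To see which VolumeSnapshot resources are still not ready, the following sketch uses a jsonpath template to print the status.readyToUse field of each snapshot and filters out the ready ones. Reading the status field avoids relying on column positions, because READYTOUSE can be empty before the snapshot controller sets the status. The function names and the KUBECTL override are hypothetical.

```shell
# Hypothetical helper: set KUBECTL to another command for a dry run.
KUBECTL=${KUBECTL:-kubectl}

# list_snapshot_readiness <namespace>: print "<name><TAB><readyToUse>" for
# every VolumeSnapshot in the namespace (readyToUse is empty if not set yet).
list_snapshot_readiness() {
  "$KUBECTL" get volumesnapshot -n "$1" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.readyToUse}{"\n"}{end}'
}

# pending_snapshots: read those lines on stdin and print the snapshots whose
# readyToUse value is anything other than true (false or still empty).
pending_snapshots() {
  awk 'NF && $2 != "true" { print $1 }'
}

# Usage: list_snapshot_readiness <backup-namespace> | pending_snapshots
```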

Cause 3 and solution: Using storage volume types other than disks

Starting from v1.7.7, the migrate-controller component of the backup center supports cross-region restoration of disk data backups. Cross-region restoration of other types of data is not supported. If you use a storage service that supports Internet access, such as Alibaba Cloud OSS, you can create a statically provisioned persistent volume claim (PVC) and persistent volume (PV) before you restore the application. For more information, see Use ossfs 1.0 static persistent volumes.

Backup status is Failed, and the prompt contains "backup already exists in OSS bucket"

Issue description

The backup status is Failed, and the prompt contains "backup already exists in OSS bucket".

Causes

A backup with the same name is stored in the OSS bucket associated with the backup vault.

Reasons why the backup is invisible in the current cluster:

Backups in ongoing backup tasks and failed backup tasks are not synchronized to other clusters.

If you delete a backup in a cluster other than the backup cluster, the backup file in the OSS bucket is labeled but not deleted. The labeled backup file will not be synchronized to newly associated clusters.

The current cluster is not associated with the backup vault that stores the backup, which means that the backup vault is not initialized.

Solution

Recreate a backup vault with another name.

What do I do if the status of a backup task is Failed and the "get target namespace failed" error is returned?

Issue description

The backup status is Failed, and the error message contains "get target namespace failed".

Possible causes

In most cases, this error occurs in backup tasks that run on a schedule. The cause depends on how you select namespaces.

When you select the Include method, all selected namespaces have been deleted.

When you select the Exclude method, no namespaces other than the selected ones exist in the cluster.

Solution

You can edit the backup plan to adjust the method for selecting namespaces and the corresponding namespaces.
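Before you save the edited plan, you can model the selection rule above with the following sketch. It reads the current namespaces and prints the namespaces that an Include or Exclude selection would cover. Empty output corresponds to the failure case. This is a hypothetical pre-check and not how the component itself evaluates the plan. It also does not account for any namespaces that the component may skip on its own.

```shell
# target_namespaces <include|exclude> <comma-separated-selection>
# Reads the namespaces that currently exist (one per line) on stdin and prints
# the namespaces that the selection would cover; empty output means none.
target_namespaces() {
  awk -v mode="$1" -v sel="$2" '
    BEGIN { n = split(sel, s, ","); for (i = 1; i <= n; i++) picked[s[i]] = 1 }
    NF {
      if (mode == "include" && ($1 in picked)) print $1
      if (mode == "exclude" && !($1 in picked)) print $1
    }'
}

# Usage: kubectl get ns -o name | sed 's|^namespace/||' | target_namespaces include app1,app2
```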

What do I do if the status of a backup task is Failed and the "velero backup process timeout" error is returned?

Issue description

The backup status is Failed, and the error message contains "velero backup process timeout".

Possible causes

Cause 1: The subtask of the application backup times out. The duration of a subtask varies based on the amount of cluster resources, the response latency of the API server, and other factors. In migrate-controller 1.7.7 and later, the default timeout period of subtasks is 60 minutes.

Cause 2: The storage class of the bucket used by the backup repository is Archive Storage, Cold Archive, or Deep Cold Archive. To ensure data consistency during the backup process, the component must update the files that record metadata in the OSS bucket, and it cannot update archived files that have not been restored.

Solutions

Solution 1: Modify the global configuration of the backup subtask timeout period in the backup cluster.

Run the following command and add the velero_timeout_minutes configuration item to applicationBackup. The unit is minutes.

kubectl edit -n csdr cm csdr-config

For example, the following code block sets the timeout period to 100 minutes:

apiVersion: v1
data:
  applicationBackup: |
    ... #Details not shown.
    velero_timeout_minutes: 100

After you modify the timeout period, run the following command to restart csdr-controller for the modification to take effect:

kubectl -n csdr delete pod -l control-plane=csdr-controller

Solution 2: Change the storage class of the bucket used by the backup repository to Standard.

If you want to store backup data in Archive Storage, you can configure a lifecycle rule to automatically transform the storage class and restore the data before recovery. For more information, see Transform storage classes.
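To check the timeout that is currently configured, the following sketch reads the applicationBackup value of csdr-config and prints velero_timeout_minutes. If the item is absent, it prints 60 minutes, which is the default stated above for migrate-controller 1.7.7 and later. The sketch assumes that the item is written as a key: value line, as in the example above. The function name is hypothetical.

```shell
# subtask_timeout: read the applicationBackup value of the csdr-config
# ConfigMap on stdin and print velero_timeout_minutes, or 60 if it is unset
# (the default stated for migrate-controller 1.7.7 and later).
subtask_timeout() {
  awk -F': *' '$1 ~ /^[ \t]*velero_timeout_minutes$/ { print $2; found = 1; exit }
    END { if (!found) print 60 }'
}

# Usage: kubectl -n csdr get cm csdr-config -o jsonpath='{.data.applicationBackup}' | subtask_timeout
```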

Backup status is Failed, with a prompt containing "HBR backup request failed"

Issue description

The backup job status is Failed, with a prompt containing "HBR backup request failed".

Possible causes

Cause 1: The storage plugin used by the cluster is not currently compatible.

Cause 2: Cloud Backup does not currently support backing up persistent volumes with volumeMode set to Block mode. For more information, see volumeMode introduction.

Cause 3: The Cloud Backup client is abnormal, which causes backup or restore tasks for file system data (such as OSS, NAS, CPFS, or local storage volume data) to time out or fail.

Solutions

Solution 1: If your cluster uses a non-Alibaba Cloud CSI storage plugin, or the persistent volume is not a general K8s storage volume such as NFS or LocalVolume, and you encounter compatibility issues, submit a ticket.

Solution 2: Submit a ticket.

Solution 3: Perform the following steps:

Log on to the Cloud Backup console.

In the left-side navigation pane, select Backup > Container Backup, and then click the Backup Jobs tab.

In the top navigation bar, select a region.

On the Backup Jobs tab, select Job Name from the drop-down list in front of the search box, and search for <backup-name>-hbr to query the status of the backup job and the corresponding reason. For more information, see ACK Backup.

Note: To query StorageClass conversion or backup jobs, search by the corresponding backup name.

Backup status is Failed, with a prompt containing "HBR get empty backup info"

Issue description

Backup status is Failed, with a prompt containing "HBR get empty backup info".

Possible causes

In hybrid cloud scenarios, Backup Center uses the standard K8s persistent volume mount path as the data backup path by default. For standard CSI storage drivers, the default mount path is /var/lib/kubelet/pods/<pod-uid>/volumes/kubernetes.io~csi/<pv-name>/mount. The same applies to NFS, FlexVolume, and other storage drivers officially supported by K8s.

Here, /var/lib/kubelet is the default kubelet root path. If you have modified this path in your K8s cluster, Cloud Backup may not be able to access the data to be backed up.

Solution

Log on to the node where the persistent volume is mounted and follow these steps to troubleshoot:

Check whether the kubelet root path on the node has been changed.

Run the following command to query the kubelet startup command:

ps -elf | grep kubelet

If the startup command includes the --root-dir parameter, the parameter value is the kubelet root path.

If the startup command includes the --config parameter, the parameter value is the kubelet configuration file. Check the configuration file. If it contains the root-dir field, the field value is the kubelet root path.

If the startup command does not contain root path information, check the kubelet service startup file /etc/systemd/system/kubelet.service. If it contains the EnvironmentFile field, such as:

EnvironmentFile=-/etc/kubernetes/kubelet

then /etc/kubernetes/kubelet is the environment variable configuration file. Check the content of this file. If it contains the following:

ROOT_DIR="--root-dir=/xxx"

then /xxx is the kubelet root path.

If no related changes are found, the kubelet root path is the default /var/lib/kubelet.

Run the following command to check whether the kubelet root path is a symbolic link to another path:

ls -al <root-dir>

If the output is similar to the following:

lrwxrwxrwx 1 root root 26 Dec 4 10:51 kubelet -> /var/lib/container/kubelet

then the actual root path is /var/lib/container/kubelet.

Verify that the data of the persistent volume to back up exists under the root path.

Confirm that the persistent volume mount path <root-dir>/pods/<pod-uid>/volumes exists and contains subpaths for the target volume type, such as kubernetes.io~csi and kubernetes.io~nfs.

Add the environment variable KUBELET_ROOT_PATH=/var/lib/container/kubelet/pods to the csdr/csdr-controller Deployment. Here, /var/lib/container/kubelet is the actual kubelet root path obtained from the configuration and symbolic link checks in the previous steps.
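The lookup order above can be summarized in code. The following Python sketch is a hypothetical helper that applies the same checks to text you have already collected from the node: the kubelet command line, and file contents returned by a read_file callback. The root-dir syntax assumed for the --config file ("root-dir: /x" or "root-dir=/x") is an assumption, because the format depends on how the node was set up.

```python
import os
import re
import shlex
from typing import Callable, List, Optional

DEFAULT_ROOT = "/var/lib/kubelet"
UNIT_FILE = "/etc/systemd/system/kubelet.service"


def flag_value(args: List[str], flag: str) -> Optional[str]:
    """Return the value of `--flag=value` or `--flag value`, if present."""
    for i, arg in enumerate(args):
        if arg.startswith(flag + "="):
            return arg.split("=", 1)[1]
        if arg == flag and i + 1 < len(args):
            return args[i + 1]
    return None


def find_kubelet_root(cmdline: str, read_file: Callable[[str], Optional[str]]) -> str:
    """Apply the lookup order described above to the kubelet command line."""
    args = shlex.split(cmdline)
    # 1. --root-dir on the command line.
    root = flag_value(args, "--root-dir")
    if root:
        return root
    # 2. A root-dir field in the --config file (assumed "root-dir: /x" or "root-dir=/x").
    config = flag_value(args, "--config")
    if config:
        m = re.search(r'root-dir\s*[:=]\s*"?([^\s"]+)', read_file(config) or "")
        if m:
            return m.group(1)
    # 3. ROOT_DIR="--root-dir=/x" in an EnvironmentFile referenced by the systemd unit.
    unit = read_file(UNIT_FILE) or ""
    for env_file in re.findall(r"^EnvironmentFile=-?(\S+)", unit, re.MULTILINE):
        m = re.search(r'--root-dir=([^\s"]+)', read_file(env_file) or "")
        if m:
            return m.group(1)
    # 4. No customization found.
    return DEFAULT_ROOT


def kubelet_root_path_env(root: str) -> str:
    """Resolve symbolic links (as checked with ls -al) and append /pods."""
    return os.path.join(os.path.realpath(root), "pods")


if __name__ == "__main__":
    # Hypothetical file contents, looked up by path.
    files = {
        UNIT_FILE: "[Service]\nEnvironmentFile=-/etc/kubernetes/kubelet\n",
        "/etc/kubernetes/kubelet": 'ROOT_DIR="--root-dir=/data/kubelet"\n',
    }
    print(find_kubelet_root("/usr/bin/kubelet --v=2", files.get))  # /data/kubelet
```

Running kubelet_root_path_env on the node with the path that find_kubelet_root returns follows any symbolic link and appends /pods, which gives the value to use for KUBELET_ROOT_PATH.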

Backup status is Failed, and the prompt contains "check backup files in OSS bucket failed" or "upload backup files to OSS bucket failed" or "download backup files from OSS bucket failed"

Issue description

The backup status is Failed, and the prompt contains "check backup files in OSS bucket failed", "upload backup files to OSS bucket failed", or "download backup files from OSS bucket failed".

Causes

The component fails to access the OSS bucket that is associated with the backup vault. Possible reasons:

Reason 1: Data encryption is enabled for the OSS bucket, but the related KMS permissions are not granted.

Reason 2: When you installed the component and configured permissions for an ACK dedicated cluster or a registered cluster, some required access policies were missing.

Reason 3: The credentials of the RAM user that were used to configure permissions for the ACK dedicated cluster or registered cluster have been revoked.

Solution

Solution 2: Check the access policies of the RAM user that was used to configure permissions. For the access policies required by the component, see Step 1: Configure related permissions.

Solution 3: Check whether the credentials of the RAM user that was used to configure permissions are still enabled. If they have been revoked, obtain new credentials, update the content of the alibaba-addon-secret Secret in the csdr namespace, and then run the following command to restart the component:

kubectl -n kube-system delete pod -l app=migrate-controller

Backup status is PartiallyFailed, and the prompt contains "PROCESS velero partially completed"

Issue description

The backup status is PartiallyFailed, and the prompt contains "PROCESS velero partially completed".

Cause

When you use the velero component to back up applications (resources in the cluster), the component fails to back up some resources.

Solution

Run the following command to identify the failed resources and the causes of failure.

kubectl -n csdr exec -it $(kubectl -n csdr get pod -l component=csdr | tail -n 1 | cut -d ' ' -f1) -- ./velero describe backup <backup-name>

Fix the issue according to the prompts in the Errors and Warnings fields in the output.

If the cause of failure is not displayed directly, run the following command to obtain the related error logs.

kubectl -n csdr exec -it $(kubectl -n csdr get pod -l component=csdr | tail -n 1 | cut -d ' ' -f1) -- ./velero backup logs <backup-name>

If you cannot fix the issue based on the cause of failure or the error logs, submit a ticket.
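The pod-selection part of these commands, `kubectl -n csdr get pod -l component=csdr | tail -n 1 | cut -d ' ' -f1`, takes the first column of the last line of the pod list. If you script these checks, the following Python sketch approximates the same selection and builds the exec argument list. The function names and the sample pod name are hypothetical.

```python
from typing import List


def pick_csdr_pod(get_pod_output: str) -> str:
    """Approximate `tail -n 1 | cut -d ' ' -f1` on the output of `kubectl get pod`."""
    lines = [line for line in get_pod_output.splitlines() if line.strip()]
    if not lines:
        return ""  # no matching pod: the shell pipeline also yields an empty string
    return lines[-1].split(" ")[0]  # last row, text before the first space


def velero_command(pod: str, *velero_args: str) -> List[str]:
    """Argument list for running the velero CLI inside the selected pod."""
    return ["kubectl", "-n", "csdr", "exec", "-it", pod, "--", "./velero", *velero_args]


if __name__ == "__main__":
    sample = (
        "NAME                           READY   STATUS    RESTARTS   AGE\n"
        "csdr-velero-6d8f7c9b4d-abcde   1/1     Running   0          2d\n"
    )
    pod = pick_csdr_pod(sample)
    print(velero_command(pod, "backup", "logs", "<backup-name>"))
```

The argument list can be passed to subprocess.run to execute the command.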

Backup status is PartiallyFailed, and the prompt contains "PROCESS hbr partially completed"

Issue description

The backup status is PartiallyFailed, and the prompt contains "PROCESS hbr partially completed".

Causes

When you use Cloud Backup to back up file system volumes, such as OSS volumes, NAS volumes, CPFS volumes, or local volumes, Cloud Backup fails to back up some resources. The issue may occur due to one of the following reasons:

Reason 1: The storage plugin used by some volumes is not supported.

Reason 2: Cloud Backup does not guarantee data consistency. If files are deleted during backup, the backup may fail.

Solution

Log on to the Cloud Backup console.

In the left-side navigation pane, select Backup > Container Backup, and then click the Backup Jobs tab.

In the top navigation bar, select a region.

On the Backup Jobs tab, click the drop-down list in front of the search box, select Job Name, and then search for <backup-name>-hbr to query why the volume backup failed or partially failed. For more information, see ACK backup.

Storage class conversion status is Failed, with a prompt containing "storageclass xxx not exists"

Issue description

The storage class conversion status is Failed, with a prompt containing "storageclass xxx not exists".

Possible causes

The target StorageClass selected during the storage class conversion does not exist in the current cluster.

Solution

Execute the following command to reset the storage class conversion job.

cat << EOF | kubectl apply -f -
apiVersion: csdr.alibabacloud.com/v1beta1
kind: DeleteRequest
metadata:
  name: reset-convert
  namespace: csdr
spec:
  deleteObjectName: "<backup-name>"
  deleteObjectType: "Convert"
EOF

Create the desired StorageClass in the current cluster.

Run the restore job again and configure the storage class conversion.
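If you reset conversions from a script, you can generate the same DeleteRequest instead of editing the heredoc by hand. The following Python sketch copies the fields from the manifest above. The function reset_convert_request and the backup name my-backup are hypothetical. kubectl apply also accepts JSON, so the output can be piped to `kubectl apply -f -`.

```python
import json


def reset_convert_request(backup_name: str, request_name: str = "reset-convert") -> dict:
    """Build the DeleteRequest that resets the StorageClass conversion of a backup."""
    return {
        "apiVersion": "csdr.alibabacloud.com/v1beta1",
        "kind": "DeleteRequest",
        "metadata": {"name": request_name, "namespace": "csdr"},
        "spec": {"deleteObjectName": backup_name, "deleteObjectType": "Convert"},
    }


if __name__ == "__main__":
    # Pipe the output to `kubectl apply -f -`.
    print(json.dumps(reset_convert_request("my-backup"), indent=2))
```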

Storage class conversion status is Failed, with a prompt containing "only support convert to storageclass with CSI diskplugin or nasplugin provisioner"

Issue description

The storage class conversion status is Failed, with a prompt containing "only support convert to storageclass with CSI diskplugin or nasplugin provisioner".

Causes

During storage class conversion, the selected target storage class is not an Alibaba Cloud CSI disk type or NAS type.

Solution

The current version supports snapshot creation and restoration only for disk and NAS volumes by default. If you have other restore requirements, submit a ticket.

If you use a storage service that supports Internet access, such as Alibaba Cloud OSS, you can create statically provisioned PVCs and PVs and then restore the application directly, without the storage class conversion step. For more information, see Use ossfs 1.0 static persistent volume.

StorageClass conversion status is Failed, and the prompt contains "current cluster is multi-zoned"

Issue description

The StorageClass conversion status is Failed, and the prompt contains "current cluster is multi-zoned".

Causes

The current cluster is a multi-zone cluster, and the target disk StorageClass has volumeBindingMode set to Immediate. With this setting, disks are created as soon as the PVCs are created, before the pods are scheduled. In a multi-zone cluster, a disk may therefore be created in a zone to which the pod cannot be scheduled, and the pod remains in the Pending state. For more information about the volumeBindingMode field, see StorageClass.

Solution

Execute the following command to reset the StorageClass conversion job.

cat << EOF | kubectl apply -f -
apiVersion: csdr.alibabacloud.com/v1beta1
kind: DeleteRequest
metadata:
  name: reset-convert
  namespace: csdr
spec:
  deleteObjectName: "<backup-name>"
  deleteObjectType: "Convert"
EOF

If you need to convert to a disk StorageClass:

When using the console, select alicloud-disk. alicloud-disk uses alicloud-disk-topology-alltype StorageClass by default.

When using the command line, select the alicloud-disk-topology-alltype type. The alicloud-disk-topology-alltype type is the default StorageClass provided by the CSI storage plugin. You can also customize a StorageClass with volumeBindingMode set to WaitForFirstConsumer.

Execute the restore job again and configure the StorageClass conversion.
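For a custom disk StorageClass, the key setting is volumeBindingMode: WaitForFirstConsumer. This setting delays disk creation until a pod that uses the PVC is scheduled, so the disk is created in that pod's zone. The following Python sketch checks the setting and builds such a StorageClass. The provisioner name diskplugin.csi.alibabacloud.com appears elsewhere in this topic. The disk type list in parameters is an assumption, so check the CSI documentation for the values that your cluster supports. The function names are hypothetical.

```python
from typing import Any, Dict


def is_multi_zone_safe(storage_class: Dict[str, Any]) -> bool:
    """True if volumes are provisioned only after a pod is scheduled.

    Kubernetes treats an omitted volumeBindingMode as Immediate.
    """
    return storage_class.get("volumeBindingMode", "Immediate") == "WaitForFirstConsumer"


def topology_aware_disk_class(
    name: str, disk_types: str = "cloud_essd,cloud_ssd,cloud_efficiency"
) -> Dict[str, Any]:
    """Build a custom disk StorageClass that waits for the first consumer."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "diskplugin.csi.alibabacloud.com",
        "parameters": {"type": disk_types},  # assumed parameter value; check the CSI docs
        "volumeBindingMode": "WaitForFirstConsumer",
    }
```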

Restore job status is Failed, and the prompt contains "multi-node writing is only supported for block volume"

Issue description

The restore or storage class transform job status is Failed, and the prompt contains "multi-node writing is only supported for block volume. For Kubernetes users, if unsure, use ReadWriteOnce access mode in PersistentVolumeClaim for disk volume".

Possible causes

A disk can be forcibly detached if another node mounts it. To avoid this risk, CSI checks the AccessModes of disk volumes during mounting and rejects the ReadWriteMany and ReadOnlyMany settings.

The backed-up application mounts a persistent volume whose AccessMode is ReadWriteMany or ReadOnlyMany. Such volumes are usually network storage that supports multiple mounts, such as OSS or NAS. When the volume is restored to Alibaba Cloud disk storage, which does not support multiple mounts by default, CSI may report this error.

Specifically, the following three scenarios may cause this error:

Scenario 1: The CSI version in the backup cluster is older (or uses the FlexVolume storage plugin). Earlier CSI versions do not verify the AccessModes field of Alibaba Cloud disk persistent volumes during mounting, causing the original disk persistent volume to report an error when restored to a cluster with a newer CSI version.

Scenario 2: The custom storage class used by the backed-up persistent volume does not exist in the restore cluster. Based on matching rules, the volume is restored as an Alibaba Cloud disk volume by default in the new cluster.

Scenario 3: During restoration, you manually specify that the backed-up persistent volume should be restored to an Alibaba Cloud disk type persistent volume using the storage class transform feature.

Solution

Scenario 1: Starting from version v1.8.4, the backup component supports automatic conversion of the AccessModes field of disk persistent volumes to ReadWriteOnce. Please upgrade the backup center component and then restore again.

Scenario 2: Automatic restoration of the storage class by the component in the target cluster may risk data inaccessibility or data overwriting. Please create a storage class with the same name in the target cluster before restoring, or specify the storage class to be used during restoration through the storage class transform feature.

Scenario 3: When restoring a network storage type persistent volume to disk storage, configure the convertToAccessModes parameter to convert AccessModes to ReadWriteOnce. For more information, see convertToAccessModes: target AccessModes list.
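The Scenario 3 fix amounts to replacing multi-node access modes with ReadWriteOnce when the restore target is a disk. The following Python sketch models that effect on a PVC manifest. It is a hypothetical helper that illustrates the conversion and does not show how the backup center implements convertToAccessModes.

```python
from typing import Any, Dict, List

MULTI_NODE_MODES = {"ReadWriteMany", "ReadOnlyMany"}


def access_modes_for_disk_restore(pvc: Dict[str, Any]) -> List[str]:
    """Return the accessModes a PVC should carry when it is restored as a disk volume."""
    modes = pvc.get("spec", {}).get("accessModes", [])
    if MULTI_NODE_MODES.intersection(modes):
        # The disk CSI plugin rejects multi-node modes, so fall back to ReadWriteOnce.
        return ["ReadWriteOnce"]
    return list(modes)
```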

Restore job status is Failed, with the message "only disk type PVs support cross-region restore in current version"

Issue description

The restore job status is Failed, with the message "only disk type PVs support cross-region restore in current version".

Causes

In migrate-controller 1.7.7 and later versions, backups of disk volumes can be restored across regions. Backups of other volume types cannot be restored across regions.

Solution

If you are using a storage service that supports Internet access, such as OSS, you can create a statically provisioned PV and PVC and then restore the application. For more information, see Use ossfs 1.0 static volumes.

If you need to restore backups of other volume types across regions, submit a ticket.

Restore job status is Failed, and the prompt contains "ECS snapshot cross region request failed"

Issue description

The restore job status is Failed, and the prompt contains "ECS snapshot cross region request failed".

Causes

Starting from v1.7.7, the migrate-controller component of the backup center supports restoring disk backups across regions. This requires permissions on ECS disk snapshots, and these permissions have not been granted.

Solution

If your cluster is an ACK dedicated cluster, or a registered cluster that connects a self-managed Kubernetes cluster on ECS, add the access policy required for ECS disk snapshots. For more information, see Cluster registration.

What do I do if the status of the restore task is Failed and the "accessMode of PVC xxx is xxx" error is returned?

Issue description

The status of the restore task is Failed and the "accessMode of PVC xxx is xxx" error is returned.

Causes

The AccessMode of the disk volume that you want to restore is set to ReadOnlyMany (read-only multi-mount) or ReadWriteMany (read-write multi-mount).

When you restore the disk volume, the new volume is mounted by using CSI. Take note of the following items when you use the current version of CSI:

CSI supports multi-instance mounting only for volumes that have the multiAttach attribute enabled.

Volumes whose VolumeMode is set to Filesystem (mounted by using file systems such as ext4 and xfs) support only read-only multi-mounting.

For more information about disk storage, see Use dynamic disk volumes.
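The two CSI rules above can be written as a small predicate. The following Python sketch is a simplified, hypothetical model of the checks that you can use to review manifests before a restore. The real CSI plugin may check additional conditions.

```python
from typing import Iterable

MULTI_NODE_MODES = {"ReadWriteMany", "ReadOnlyMany"}


def disk_mount_allowed(access_modes: Iterable[str], volume_mode: str = "Filesystem",
                       multi_attach: bool = False) -> bool:
    """Simplified model of the two CSI rules for disk volumes described above."""
    requested = MULTI_NODE_MODES.intersection(access_modes)
    if not requested:
        return True   # single-node access modes such as ReadWriteOnce are accepted
    if not multi_attach:
        return False  # multi-instance mounting requires the multiAttach attribute
    if volume_mode == "Filesystem" and "ReadWriteMany" in requested:
        return False  # ext4/xfs volumes support only read-only multi-mounting
    return True
```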

Solution

If you used the storage class transform feature to convert volumes that support multi-mounting, such as OSS and NAS volumes, into disk volumes, we recommend that you create a new restore task and set the destination storage class to alibabacloud-cnfs-nas instead. This way, you can use NAS volumes that are managed by Container Network File System (CNFS), so that different replicas of your workloads can share data on the volumes. For more information, see Manage NAS file systems by using CNFS (recommended).

If the disk volume was created with an outdated CSI version (which does not verify the AccessMode) and the backed-up volume does not meet the requirements of the current CSI version, we recommend that you modify your workloads to use dynamic disk volumes. This prevents disks from being forcibly detached when the workloads are scheduled to other nodes. If you have more questions or requirements about multi-mounting scenarios, submit a ticket.

Restore job status is Completed, but some resources are not created in the restored cluster

Issue description

The restore job status is Completed, but some resources are not created in the restored cluster.

Possible causes

Cause 1: The resource was not backed up.

Cause 2: The resource was excluded during restoration according to configuration items.

Cause 3: The application restore sub-job partially failed.

Cause 4: The resource was restored but was then deleted because of its ownerReferences configuration or business logic.

Solutions

Solution 1:

Run the following command to query backup details.

kubectl -n csdr exec -it $(kubectl -n csdr get pod -l component=csdr | tail -n 1 | cut -d ' ' -f1) -- ./velero describe backup <backup-name> --details

Check whether the target resource has been backed up. If it has not, check whether it was excluded by the namespaces, resources, or other items that are specified or excluded in the backup job, and then perform a new backup. By default, cluster-level resources used by applications (pods) that run in unselected namespaces are not backed up. If you need to back up all cluster-level resources, see Cluster-level backup.

Solution 2:

If the target resource was not restored, check whether it was excluded by the namespaces, resources, or other items that are specified or excluded in the restore job, and then perform a new restore.

Solution 3:

Run the following command to identify the failed resources and the causes of failure.

kubectl -n csdr exec -it $(kubectl -n csdr get pod -l component=csdr | tail -n 1 | cut -d ' ' -f1) -- ./velero describe restore <restore-name>

Fix the issues according to the prompts in the Errors and Warnings fields in the output. If you cannot fix the problem based on the cause of failure, submit a ticket.

Solution 4:

Query the audit logs for the corresponding resource to confirm whether it was abnormally deleted after creation.

What do I do if the migrate-controller component in a cluster that uses FlexVolume cannot be launched?

The migrate-controller component of backup center does not support clusters that use FlexVolume. To use the backup center feature, use one of the following methods to migrate from FlexVolume to CSI:

Migrate from FlexVolume to CSI by using the csi-compatible-controller component

Migrate static NAS persistent volumes (PVs) from FlexVolume to CSI

Migrate static OSS persistent volumes (PVs) from FlexVolume to CSI

For other scenarios, join the DingTalk group (Group ID: 35532895) for consultation.

If you need to back up applications in a cluster that uses FlexVolume and recover the applications in a cluster that uses CSI during the migration, see Migrate applications from a low-version Kubernetes cluster by using backup center.

Can I modify the backup vault?

You cannot modify the backup vault of the backup center. You can only delete the current one and create a backup vault with another name.

Because backup vaults are shared, an existing backup vault may be in use by a backup or restore task at any time. If you modify a parameter of the backup vault, the system may fail to find the required data when it backs up or restores an application. Therefore, you cannot modify a backup vault or create backup vaults that have the same name.

Can a backup vault be associated with an OSS bucket that is not named in the "cnfs-oss-*" format

For clusters other than ACK dedicated clusters and registered clusters, the backup center component has read and write permissions for OSS buckets named with the cnfs-oss-* pattern by default. To prevent backups from overwriting existing data in the bucket, we recommend that you create a dedicated OSS bucket that follows the cnfs-oss-* naming convention for the backup center.

If you need to associate a backup vault with an OSS bucket that is not named in the "cnfs-oss-*" format, you must configure permissions for the component. For more information, see ACK dedicated cluster.

After you grant permissions, run the following command to restart the backup service component.

kubectl -n csdr delete pod -l control-plane=csdr-controller
kubectl -n csdr delete pod -l component=csdr

If you have already created a backup vault that is associated with an OSS bucket not named in the "cnfs-oss-*" format, wait until the connectivity check completes and the status changes to Available before you back up or restore. Connectivity checks run approximately every five minutes. You can run the following command to query the status of the backup vault.

kubectl -n csdr get backuplocation

Expected output:

NAME                    PHASE       LAST VALIDATED   AGE
a-test-backuplocation   Available   7s               6d1h
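If you script the wait for a new backup vault, you can parse this output. The following Python sketch assumes the column layout shown in the expected output above, with the vault name in the first column and PHASE in the second. A row with an empty PHASE column would be misread, so treat this as a sketch. The function names are hypothetical.

```python
from typing import Dict


def backup_location_phases(output: str) -> Dict[str, str]:
    """Map backup vault name to PHASE from `kubectl -n csdr get backuplocation` output."""
    phases = {}
    for line in output.strip().splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) >= 2:
            phases[fields[0]] = fields[1]
    return phases


def is_available(output: str, name: str) -> bool:
    """True once the connectivity check has marked the vault as Available."""
    return backup_location_phases(output).get(name) == "Available"


if __name__ == "__main__":
    sample = (
        "NAME                    PHASE       LAST VALIDATED   AGE\n"
        "a-test-backuplocation   Available   7s               6d1h\n"
    )
    print(is_available(sample, "a-test-backuplocation"))  # True
```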

How to fill in the backup cycle when creating a backup plan

The backup cycle accepts a Crontab expression (such as 1 4 * * *) or an interval (such as 6h30m). An interval of 6h30m runs a backup every 6 hours and 30 minutes.

A Crontab expression consists of five fields, as shown in the following diagram. The valid values of each field are listed in parentheses, and * matches any valid value of the field. Sample Crontab expressions:

1 4 * * *: runs a backup once a day at 4:01 AM.

0 2 15 * *: runs a backup once a month on the 15th at 2:00 AM.

* * * * *
| | | | |
| | | | ·----- day of week (0 - 6) (Sun to Sat)
| | | ·-------- month (1 - 12)
| | ·----------- day of month (1 - 31)
| ·-------------- hour (0 - 23)
·----------------- minute (0 - 59)
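You can check both formats before you save a backup plan. The following Python sketch is a hypothetical validator, not the parser that the backup center uses. cron_matches supports *, single values, ranges, steps, and comma lists. parse_interval assumes that intervals are written with hours and/or minutes, as in 6h30m. The sketch follows the usual cron convention that, when both the day-of-month and day-of-week fields are restricted, a time matches if either field matches. Whether the backup center's scheduler behaves the same way is an assumption.

```python
import re
from datetime import datetime, timedelta

# Valid ranges of the five fields: minute, hour, day of month, month, day of week (0 = Sunday).
RANGES = [(0, 59), (0, 23), (1, 31), (1, 12), (0, 6)]


def expand_field(field: str, low: int, high: int) -> set:
    """Expand "*", "5", "1-5", "*/15", "5/10", or comma lists into matching values."""
    values = set()
    for part in field.split(","):
        base, _, step_text = part.partition("/")
        step = int(step_text) if step_text else 1
        if step < 1:
            raise ValueError(f"invalid step in {part!r}")
        if base == "*":
            start, end = low, high
        elif "-" in base:
            start, end = (int(x) for x in base.split("-", 1))
        else:
            start = int(base)
            end = high if step_text else start  # "5/10" means 5, 15, 25, ...
        if not low <= start <= end <= high:
            raise ValueError(f"{part!r} is outside {low}-{high}")
        values.update(range(start, end + 1, step))
    return values


def cron_matches(expr: str, when: datetime) -> bool:
    """Check whether `when` (to the minute) matches a five-field Crontab expression."""
    fields = expr.split()
    if len(fields) != 5:
        raise ValueError("a Crontab expression has exactly five fields")
    minute, hour, dom, month, dow = (
        expand_field(f, lo, hi) for f, (lo, hi) in zip(fields, RANGES))
    weekday = (when.weekday() + 1) % 7  # Python counts Monday as 0; cron counts Sunday as 0
    dom_ok, dow_ok = when.day in dom, weekday in dow
    # Usual cron convention: if both day fields are restricted, matching either is enough.
    if fields[2] != "*" and fields[4] != "*":
        day_ok = dom_ok or dow_ok
    else:
        day_ok = dom_ok and dow_ok
    return when.minute in minute and when.hour in hour and when.month in month and day_ok


def parse_interval(text: str) -> timedelta:
    """Parse an interval such as "6h30m" (hours and/or minutes) into a timedelta."""
    m = re.fullmatch(r"(?:(\d+)h)?(?:(\d+)m)?", text)
    if not text or m is None:
        raise ValueError(f"invalid interval: {text!r}")
    hours, minutes = (int(g or 0) for g in m.groups())
    return timedelta(hours=hours, minutes=minutes)


if __name__ == "__main__":
    print(cron_matches("1 4 * * *", datetime(2024, 5, 10, 4, 1)))  # True
    print(parse_interval("6h30m"))  # 6:30:00
```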

What default adjustments are made to the YAML resources during restore jobs?

When you restore resources, the following changes are made to the YAML files of resources:

Adjustment 1:

If the size of a disk volume is less than 20 GiB, the volume size is changed to 20 GiB.
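The adjustment amounts to taking the larger of the requested size and 20 GiB. The following Python sketch applies the adjustment to a PVC storage request. It is a hypothetical helper and only understands the binary suffixes Mi, Gi, and Ti.

```python
import re

BYTES_PER_UNIT = {"Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
MIN_DISK_BYTES = 20 * 2**30  # the 20 GiB minimum described above


def restored_disk_request(quantity: str) -> str:
    """Return the storage request of a restored disk volume (at least 20Gi)."""
    m = re.fullmatch(r"(\d+)(Mi|Gi|Ti)", quantity)
    if m is None:
        raise ValueError(f"unsupported quantity in this sketch: {quantity!r}")
    size = int(m.group(1)) * BYTES_PER_UNIT[m.group(2)]
    return "20Gi" if size < MIN_DISK_BYTES else quantity


if __name__ == "__main__":
    print(restored_disk_request("10Gi"), restored_disk_request("100Gi"))  # 20Gi 100Gi
```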

Adjustment 2:

Services are restored based on Service types:

NodePort Services: The ports of NodePort Services are retained by default during cross-cluster restoration.

LoadBalancer Services: When ExternalTrafficPolicy is set to Local, HealthCheckNodePort uses a random port by default. If you want to retain the port number, set spec.preserveNodePorts: true when you create a restore job.

If you restore a Service that uses an existing SLB instance in the backup cluster, the restored Service uses the same SLB instance and disables the listeners by default. You need to log on to the SLB console to configure the listeners.

If you restore a Service that uses an SLB instance managed by CCM in the backup cluster, CCM creates a new SLB instance. For more information, see Considerations for load balancing configuration of Services.

How to view specific backup resources?

Cluster application backup resources

The YAML files in the cluster are stored in the OSS bucket associated with the backup vault. You can use one of the following methods to view backup resources.

Run the following command in a cluster to which backup files are synchronized to view backup resources:

kubectl -n csdr get pod -l component=csdr | tail -n 1 | cut -d ' ' -f1
kubectl -n csdr exec -it csdr-velero-xxx -c velero -- ./velero describe backup <backup-name> --details

Replace csdr-velero-xxx with the pod name returned by the first command.

View backup resources in the ACK console.

Log on to the ACK console. In the left-side navigation pane, click Clusters.

On the Clusters page, find the cluster that you want to manage and click its name. In the left-side pane, choose .

On the Application Backup page, click the Backup Records tab. In the Backup Records column, click the target backup record that you want to view.

Disk volume backup resources

Log on to the ECS console.

In the left-side navigation pane, choose .

In the top navigation bar, select the region and resource group of the resource that you want to manage.

On the Snapshots page, query snapshots based on the disk ID.

Non-disk volume backup resources

Log on to the Cloud Backup console.

In the left-side navigation pane, choose .

In the top navigation bar, select a region.

View the basic information of cluster backups.

Clusters: The list of clusters that have been backed up and protected. Click ACK Cluster ID to view the protected persistent volume claims (PVCs). For more information about PVCs, see Persistent volume claim (PVC).

If Client Status is abnormal, Cloud Backup is not running as expected in the ACK cluster. Go to the DaemonSets page in the ACK console to troubleshoot the issue.

Backup Jobs: The status of backup jobs.

Does the backup center support restoring backups from clusters of earlier Kubernetes versions to clusters of later Kubernetes versions?

Yes, the backup center supports restoring backups from clusters of earlier Kubernetes versions to clusters of later Kubernetes versions.

By default, when you back up resources, all API versions supported by the resources are backed up. For example, a Deployment in a cluster that runs Kubernetes 1.16 supports extensions/v1beta1, apps/v1beta1, apps/v1beta2, and apps/v1. When you back up the Deployment, the backup vault stores all four API versions regardless of which version you use when you create the Deployment. The KubernetesConvert feature is used for API version conversion.

When you restore resources, the API version recommended by the restore cluster is used to restore the resources. For example, if you restore the preceding Deployment in a cluster that runs Kubernetes 1.28 and the recommended API version is apps/v1, the restored Deployment uses apps/v1.

If no API version is supported by both clusters, you must manually deploy the resource. For example, Ingresses in clusters that run Kubernetes 1.16 support extensions/v1beta1 and networking.k8s.io/v1beta1. You cannot restore the Ingresses in clusters that run Kubernetes 1.22 or later because Ingresses in these clusters support only networking.k8s.io/v1. For more information about the migration of Kubernetes API versions, see official documentation. Due to API version compatibility issues, we recommend that you do not use the backup center to migrate applications from clusters of later Kubernetes versions to clusters of earlier Kubernetes versions. We also recommend that you do not migrate applications from clusters of Kubernetes versions earlier than 1.16 to clusters of later Kubernetes versions.
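The selection rule described above can be modeled as follows. This Python sketch is a simplification and does not reproduce the backup center's code. The fallback to any other version supported by both clusters is an assumption, and the function name is hypothetical.

```python
from typing import List, Optional


def pick_restore_version(backed_up: List[str], target_supported: List[str],
                         target_preferred: str) -> Optional[str]:
    """Return the API version to restore with, or None if the clusters share no version."""
    # The restore cluster's recommended version wins if the backup contains it.
    if target_preferred in backed_up:
        return target_preferred
    # Assumed fallback: any other version supported by both clusters.
    for version in target_supported:
        if version in backed_up:
            return version
    return None  # the resource must be deployed manually


if __name__ == "__main__":
    deployment = ["extensions/v1beta1", "apps/v1beta1", "apps/v1beta2", "apps/v1"]
    print(pick_restore_version(deployment, ["apps/v1"], "apps/v1"))  # apps/v1
    ingress = ["extensions/v1beta1", "networking.k8s.io/v1beta1"]
    print(pick_restore_version(ingress, ["networking.k8s.io/v1"], "networking.k8s.io/v1"))  # None
```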

Is load balancing traffic automatically switched during restoration?

No, load balancing traffic is not automatically switched during restoration.

Services are restored based on Service types:

NodePort Services: The ports of NodePort Services are retained by default during cross-cluster restoration.

LoadBalancer Services: When ExternalTrafficPolicy is set to Local, HealthCheckNodePort uses a random port by default. If you want to retain the port number, set spec.preserveNodePorts: true when you create a restore job.

If you restore a Service that uses an existing SLB instance in the backup cluster, the restored Service uses the same SLB instance and disables the listeners by default. You need to log on to the SLB console to configure the listeners.

If you restore a Service that uses an SLB instance managed by CCM in the backup cluster, CCM creates a new SLB instance. For more information, see Considerations for load balancing configuration of Services.

By default, after listeners are disabled or new SLB instances are used, traffic is not automatically switched to the new SLB instances. If you use service discovery from other cloud products or third-party services and do not want automated service discovery to switch traffic to new SLB instances, you can exclude Service resources during backup. When you need to switch traffic, you can manually deploy the Services.

Why is backup not supported by default for resources in namespaces such as csdr, ack-csi-fuse, kube-system, kube-public, and kube-node-lease?

csdr is the namespace of the backup center. If you directly back up and restore the namespace, components fail to work in the restore cluster. In addition, the backup center has backup synchronization logic, so you do not need to manually migrate backups to a new cluster.

ack-csi-fuse is the working namespace of the CSI storage component, which is used to run FUSE client pods maintained by CSI. When you restore storage in a new cluster, the CSI of the new cluster automatically synchronizes to the corresponding client. You do not need to manually back up and restore it.

kube-system, kube-public, and kube-node-lease are the default system namespaces of Kubernetes clusters. Due to the differences in cluster parameters and configurations, you cannot restore the namespaces across clusters. The backup center is used to back up and restore applications. Before you run a restore task, you must install and configure system components in the restore cluster. For example:

Container Registry password-free image pulling component: You need to grant permissions to and configure acr-configuration in the restore cluster.

ALB Ingresses: You need to configure ALBConfigs.

Directly backing up system components in kube-system to a new cluster may cause the system components to run abnormally.

Does Backup Center use ECS disk snapshots to back up disk data? What is the default type of snapshot?

In the following scenarios, Backup Center uses ECS disk snapshots by default to back up disk data.

The cluster is an ACK managed cluster or ACK dedicated cluster.

The cluster version is 1.18 or later, and uses the CSI storage plugin of version 1.18 or later.

In other cases, Backup Center uses Cloud Backup by default to back up disk data.
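The conditions above can be expressed as a small decision function. In this Python sketch, the cluster type labels managed and dedicated and the function names are hypothetical. Both version conditions are assumed to apply together.

```python
import re
from typing import Tuple


def major_minor(version: str) -> Tuple[int, int]:
    """Parse "1.28.3-aliyun.1" or "v1.30.1" into (major, minor)."""
    m = re.match(r"v?(\d+)\.(\d+)", version)
    if m is None:
        raise ValueError(f"cannot parse version: {version!r}")
    return int(m.group(1)), int(m.group(2))


def default_disk_backup_method(cluster_type: str, k8s_version: str, csi_version: str) -> str:
    """Return "ecs-snapshot" or "cloud-backup" according to the conditions above."""
    # "managed" and "dedicated" are hypothetical labels for the two ACK cluster types.
    supported_cluster = cluster_type in ("managed", "dedicated")
    recent_enough = (major_minor(k8s_version) >= (1, 18)
                     and major_minor(csi_version) >= (1, 18))
    return "ecs-snapshot" if supported_cluster and recent_enough else "cloud-backup"
```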

Disk snapshots created by Backup Center have the instant access feature enabled by default, and the snapshot retention period is consistent with the retention period configured for the backup by default. Starting from 11:00 on October 12, 2023, Alibaba Cloud ECS no longer charges for snapshot instant access storage fees and snapshot instant access usage fees in all regions. For more information, see Snapshot instant access capability.

Why is the validity period of ECS disk snapshots created by backups inconsistent with the backup configuration?

The creation of disk snapshots depends on the csi-provisioner component (or managed-csiprovisioner component) of a cluster. If the version of the csi-provisioner component is earlier than 1.20.6, you cannot specify the validity period or enable the instant access feature when you create VolumeSnapshots. In this case, the validity period in the backup configuration does not take effect on disk snapshots.

Therefore, when you use the persistent volume data backup feature for disks, you need to update the csi-provisioner component to version 1.20.6 or later.

If csi-provisioner cannot be updated to this version, you can configure the default snapshot validity period in the following ways:


Run the following command to check whether the csdr-disk-snapshot-with-default-ttl VolumeSnapshotClass exists in the cluster.

kubectl get volumesnapshotclass csdr-disk-snapshot-with-default-ttl

If the VolumeSnapshotClass does not exist, use the following YAML to create a VolumeSnapshotClass named csdr-disk-snapshot-with-default-ttl:

apiVersion: snapshot.storage.k8s.io/v1
deletionPolicy: Retain
driver: diskplugin.csi.alibabacloud.com
kind: VolumeSnapshotClass
metadata:
  name: csdr-disk-snapshot-with-default-ttl
parameters:
  retentionDays: "30"

If the VolumeSnapshotClass already exists, set the retentionDays field in the csdr-disk-snapshot-with-default-ttl VolumeSnapshotClass to 30.

After the configuration, all backups in this cluster that involve disk persistent volumes create disk snapshots whose validity period is the retentionDays value specified above.

Important: If you want the validity period of ECS disk snapshots created by backups to always be consistent with the backup configuration, we recommend that you update the csi-provisioner component to version 1.20.6 or later.
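The following Python sketch expresses the version check and the VolumeSnapshotClass above as data. It assumes that the component version string starts with major.minor.patch, optionally prefixed with v, and it ignores any suffix after the patch number. The function names and the sample version string are hypothetical.

```python
import re
from typing import Tuple


def version_tuple(version: str) -> Tuple[int, ...]:
    """Extract (major, minor, patch) from a string such as "v1.20.7-abc1234-aliyun"."""
    m = re.match(r"v?(\d+)\.(\d+)\.(\d+)", version)
    if m is None:
        raise ValueError(f"cannot parse version: {version!r}")
    return tuple(int(part) for part in m.groups())


def honors_backup_retention(csi_provisioner_version: str) -> bool:
    """Per this topic, csi-provisioner 1.20.6 or later applies the backup validity period."""
    return version_tuple(csi_provisioner_version) >= (1, 20, 6)


def default_ttl_snapshot_class(retention_days: int = 30) -> dict:
    """Same content as the csdr-disk-snapshot-with-default-ttl YAML above."""
    return {
        "apiVersion": "snapshot.storage.k8s.io/v1",
        "kind": "VolumeSnapshotClass",
        "metadata": {"name": "csdr-disk-snapshot-with-default-ttl"},
        "driver": "diskplugin.csi.alibabacloud.com",
        "deletionPolicy": "Retain",
        # VolumeSnapshotClass parameters are string-to-string, so the number is quoted.
        "parameters": {"retentionDays": str(retention_days)},
    }


if __name__ == "__main__":
    print(honors_backup_retention("v1.20.5-abc1234-aliyun"))  # False
```

If the check returns False, you can apply the VolumeSnapshotClass as a fallback, for example by serializing default_ttl_snapshot_class() with json.dumps and piping it to kubectl apply -f -.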

In application backup, which scenarios are suitable for backing up persistent volume data?

What is backing up persistent volume data?

Through ECS disk snapshots or Cloud Backup service, the data in the persistent volume is stored in cloud storage. During recovery, the data is stored in new disks or NAS for use by the recovered application. The recovered application does not share data sources with the original application, and they do not affect each other.

If you do not need to copy data, or if the restored application must share a data source with the original application, you can choose not to back up volume data. In this case, make sure that PVC and PV resources are not in the list of excluded resources of the backup. During restoration, the PVs and PVCs are deployed to the new cluster directly from their original YAML.

Which scenarios are suitable for backing up persistent volume data?

Data disaster recovery and version recording.

When the volumes are disks, because basic disks can be mounted to only a single node.

Cross-region backup and restoration, because storage types other than OSS generally cannot be accessed across regions.

When the data of the backup application and the recovered application needs to be isolated.

When the storage plug-ins or their versions differ significantly between the backup cluster and the restore cluster, so that the original YAML cannot be restored directly.

For stateful applications, what risks might exist if persistent volume data is not backed up?

If a backup includes stateful applications but not their persistent volume data, restoration behaves as follows:

For persistent volumes with reclaim policy set to Delete:

As when a PVC is deployed for the first time, if the restore cluster contains the corresponding StorageClass, CSI automatically creates a new PV. For example, for disk storage, a newly created empty disk is mounted to the restored application. For statically provisioned volumes that do not specify a StorageClass, or if the restore cluster does not contain the corresponding StorageClass, the restored PVC and pods remain in the Pending state until you manually create the corresponding PV or StorageClass.

For persistent volumes with reclaim policy set to Retain:

During restoration, resources are restored from their original YAML files, PVs first and then PVCs. For storage types that support multiple mounts, such as NAS and OSS, the original file system or bucket can be reused directly. For disks, there is a risk that the disk is forcibly detached.

Run the following command to query the reclaim policies of persistent volumes:

kubectl get pv -o=custom-columns=CLAIM:.spec.claimRef.name,NAMESPACE:.spec.claimRef.namespace,NAME:.metadata.name,RECLAIMPOLICY:.spec.persistentVolumeReclaimPolicy

Expected output:

CLAIM        NAMESPACE   NAME                                       RECLAIMPOLICY
www-web-0    default     d-2ze53mvwvrt4o3xxxxxx                     Delete
essd-pvc-0   default     d-2ze5o2kq5yg4kdxxxxxx                     Delete
www-web-1    default     d-2ze7plpd4247c5xxxxxx                     Delete
pvc-oss      default     oss-e5923d5a-10c1-xxxx-xxxx-7fdf82xxxxxx   Retain

How do I select nodes available for file system backup operations in data protection?

By default, volumes other than Alibaba Cloud disks are backed up and restored by using Cloud Backup, which runs Cloud Backup jobs on cluster nodes. These jobs follow the default scheduling policy of ACK Scheduler, which is the same as that of the open source Kubernetes scheduler. You can also configure the jobs to be scheduled only to specific nodes based on your business requirements.

Currently, Cloud Backup jobs cannot be scheduled to virtual nodes.

By default, backup jobs are low-priority tasks. For the same backup job, a node can execute a backup task for only one persistent volume (PV) at most.

Backup center node scheduling policies

exclude policy (default): All nodes are available for backup and restoration. If you do not want Cloud Backup jobs to be scheduled to certain nodes, add the csdr.alibabacloud.com/agent-excluded="true" label to those nodes:

kubectl label node <node-name-1> <node-name-2> csdr.alibabacloud.com/agent-excluded="true"

include policy: Only nodes that have the csdr.alibabacloud.com/agent-included="true" label are available for backup and restoration. Add this label to the nodes that are allowed to run Cloud Backup jobs:

kubectl label node <node-name-1> <node-name-2> csdr.alibabacloud.com/agent-included="true"

prefer policy: All nodes are available for backup and restoration, with the following scheduling priority:

Nodes with the csdr.alibabacloud.com/agent-included="true" label are preferred.

Nodes without either label come next.

Nodes with the csdr.alibabacloud.com/agent-excluded="true" label are considered last.
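To check how your current node labels map onto the prefer policy, you can list both labels for every node and sort the nodes by the priority described above. The following is a minimal sketch under stated assumptions: `prefer_rank` is a hypothetical helper that only reproduces the documented ordering, not the actual weighting used by ACK Scheduler, and it treats a node that carries both labels as included, a case this topic does not specify.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rank nodes by the documented "prefer" policy order.
# Input (stdin): a header line, then "NAME INCLUDED EXCLUDED" per node, for example from
#   kubectl get nodes -o custom-columns='NAME:.metadata.name,INCLUDED:.metadata.labels.csdr\.alibabacloud\.com/agent-included,EXCLUDED:.metadata.labels.csdr\.alibabacloud\.com/agent-excluded'
# (kubectl prints <none> for a missing label.)
# Output: "PRIORITY NAME" lines, highest priority (1) first.
prefer_rank() {
  awk 'NR > 1 {
    # Assumption: a node labeled both ways is treated as included.
    if ($2 == "true") p = 1        # agent-included=true: preferred
    else if ($3 == "true") p = 3   # agent-excluded=true: considered last
    else p = 2                     # no special label
    print p, $1
  }' | sort -k1,1n -k2,2
}
```

Nodes that end up with priority 3 are the ones you labeled for exclusion; under the exclude policy they would receive no Cloud Backup jobs at all.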

Switch node selection policy

Run the following command to edit the csdr-config ConfigMap:

kubectl -n csdr edit cm csdr-config

Append the node_schedule_policy configuration to the applicationBackup configuration.

Run the following command to restart the pods of the csdr-controller Deployment so that the configuration takes effect:

kubectl -n csdr delete pod -l app=csdr-controller

What are the scenarios for application backup and data protection?

Application backup:

You want to back up your business in your cluster, including applications, Services, and configuration files.

Optional: When you back up an application, you want to also back up the volumes mounted to the application.

Note: The application backup feature does not back up volumes that are not mounted to pods.

If you want to back up applications and all volumes, you can create data protection backup tasks.

You want to migrate applications between clusters and quickly restore applications for disaster recovery.

Data protection:

You want to back up volumes only, that is, only persistent volume claims (PVCs) and persistent volumes (PVs).

You want to restore PVCs independently of the backup data. When you use the backup center to restore a deleted PVC, a new disk is created with data identical to the backup file. The mount parameters of the new PVC remain unchanged, allowing it to be directly mounted to applications.

You want to implement data replication and disaster recovery.

Does Backup Center support data encryption for associated OSS buckets? How do I grant the required permissions when enabling KMS server-side encryption?

OSS supports two types of bucket data encryption: server-side encryption and client-side encryption. Currently, Backup Center supports only server-side encryption for OSS buckets. You can enable server-side encryption for the associated bucket and configure the encryption method in the OSS console. For more information about OSS server-side encryption and the procedure, see Server-side encryption.

If you use KMS-managed keys for encryption and decryption with Bring Your Own Key (BYOK), that is, you have configured a specific CMK ID, you must grant Backup Center permissions to access KMS. Perform the following steps:

Create the following custom access policy. For more information, see Create a custom access policy.

{
  "Version": "1",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "kms:List*",
        "kms:DescribeKey",
        "kms:GenerateDataKey",
        "kms:Decrypt"
      ],
      "Resource": [
        "acs:kms:*:141661496593****:*"
      ]
    }
  ]
}

The preceding access policy allows calls to all KMS keys that belong to this Alibaba Cloud account ID. If you need a more precise Resource configuration, see Authorization information.

For ACK dedicated clusters and registered clusters, grant permissions to the RAM user used during installation. For more information, see Grant permissions to a RAM user. For other clusters, grant permissions to the AliyunCSManagedBackupRestoreRole role. For more information, see Grant permissions to a RAM role.

If you use the default KMS key managed by OSS, or keys fully managed by OSS, for encryption and decryption, no additional authorization is required.

How do I change the image used by an application during restoration?

In the following examples, assume that the application in the backup uses the image docker.io/library/app1:v1.

Change the image repository address

In hybrid cloud scenarios, you might need to deploy an application across the clouds of multiple cloud service providers or migrate an application from the data center to the cloud. In these cases, you must upload the image used by the application to an image repository on Container Registry.

You must use the imageRegistryMapping field to specify the image repository address. For example, the following configuration changes the image to registry.cn-beijing.aliyuncs.com/my-registry/app1:v1:

docker.io/library/: registry.cn-beijing.aliyuncs.com/my-registry/

Change the image repository and version

Changing the image repository and version is an advanced feature. Before you create a restore task, you must specify the change details in a ConfigMap.

Assume that you want to change the image app1:v1 to app2:v2. You can create the following ConfigMap:

apiVersion: v1
kind: ConfigMap
metadata:
  name: <ConfigMap name>
  namespace: csdr
  labels:
    velero.io/plugin-config: ""
    velero.io/change-image-name: RestoreItemAction
data:
  "case1": "app1:v1,app2:v2"
  # If you want to change only the image repository, use the following setting.
  # "case1": "app1,app2"
  # If you want to change only the image version, use the following setting.
  # "case1": "v1,v2"
  # If you want to change only an image in a specific image repository, specify the full image addresses.
  # "case1": "docker.io/library/app1:v1,registry.cn-beijing.aliyuncs.com/my-registry/app2:v2"

If you need to make multiple changes, add case2, case3, and so on to the data field. After you create the ConfigMap, create a restore task as usual and leave the imageRegistryMapping field empty.

Note: The changes take effect on all restore tasks in the cluster. We recommend that you configure fine-grained changes as described above, for example, changes to images within a single repository. If the ConfigMap is no longer required, delete it.
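When you need several image changes, writing case1, case2, and so on by hand is error-prone. The following is a minimal sketch that generates the ConfigMap described above from a list of "old,new" pairs. `render_image_change_cm` and the ConfigMap name `my-image-change` are hypothetical; the labels, namespace, and data format are taken from the example in this topic.

```shell
#!/usr/bin/env bash
# Hypothetical helper: render the image-change ConfigMap.
# Arguments: <ConfigMap name> then one or more "old,new" pairs.
# Each pair becomes case1, case2, ... in the data field.
# Usage (assumption, requires kubectl access to the cluster):
#   render_image_change_cm my-image-change 'app1:v1,app2:v2' 'app3,app4' | kubectl apply -f -
render_image_change_cm() {
  local name="$1"; shift
  cat <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: ${name}
  namespace: csdr
  labels:
    velero.io/plugin-config: ""
    velero.io/change-image-name: RestoreItemAction
data:
EOF
  local i=1 pair
  for pair in "$@"; do
    # Quote both key and value so YAML keeps them as strings.
    printf '  "case%d": "%s"\n' "$i" "$pair"
    i=$((i + 1))
  done
}
```

Because the ConfigMap affects every restore task in the cluster, delete it after the restore finishes, for example with `kubectl -n csdr delete cm my-image-change`.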