Container Service for Kubernetes (ACK) provides GPU sharing, which allows multiple models to share one GPU and supports GPU memory isolation based on the NVIDIA kernel mode driver. If the GPU sharing component is installed in your cluster but the GPU driver version or operating system version on a node is incompatible with the cGPU version in the cluster, you must update the GPU sharing component to the latest version. This topic describes how to install and update the GPU sharing component on GPU-accelerated nodes to implement GPU scheduling and isolation.

Prerequisites

The cloud-native AI suite is activated. GPU sharing requires the cloud-native AI suite. For more information about billing, see Billing of the cloud-native AI suite.

An ACK managed cluster is created, and the architecture for the instance type is set to GPU-accelerated.

Limits

Do not set the CPU policy to static for nodes on which GPU sharing is enabled.

To specify a custom path for the kubeconfig file, run the export KUBECONFIG=<kubeconfig> command. The kubectl inspect cgpu command does not support the --kubeconfig parameter.

If you use cGPU to isolate GPU resources, you cannot request GPU memory by using Unified Virtual Memory (UVM). This means that you cannot request GPU memory by calling cudaMallocManaged() of the Compute Unified Device Architecture (CUDA) API. Use another method instead, such as cudaMalloc(). For more information, see Unified Memory for CUDA Beginners.

The pods managed by the DaemonSets of the shared GPU do not have the highest priority. As a result, resources may be allocated to pods with higher priority, and the node may evict the pods managed by these DaemonSets. To prevent this issue, modify the DaemonSet of the shared GPU. For example, modify the gpushare-device-plugin-ds DaemonSet, which is used to share GPU memory, and set priorityClassName: system-node-critical to ensure the priority of its pods.

For performance reasons, a maximum of 20 pods can be created per GPU when you use cGPU. If the number of created pods exceeds this limit, subsequent pods scheduled to the same GPU fail to run and return the following error:

Error occurs when creating cGPU instance: unknown

You can install the GPU sharing component in any region. However, GPU memory isolation is supported only in the regions described in the following table. Make sure that your ACK cluster is deployed in one of these regions.
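Two of the limits above can be handled from a shell. The following is a minimal Bash sketch, not an official tool: gpushare_priority_patch and cgpu_pods_per_gpu are hypothetical helper names, the kube-system namespace in the usage comment is an assumption (check where gpushare-device-plugin-ds runs in your cluster), and the pods-per-GPU arithmetic assumes that every pod requests the same whole number of GiB. Changing a DaemonSet's pod template re-creates its pods under the default RollingUpdate strategy.

```shell
#!/usr/bin/env bash

# Print a JSON merge patch that sets priorityClassName on the DaemonSet's
# pod template (spec.template.spec.priorityClassName).
gpushare_priority_patch() {
  printf '%s' '{"spec":{"template":{"spec":{"priorityClassName":"system-node-critical"}}}}'
}

# cgpu_pods_per_gpu TOTAL_GIB REQUEST_GIB
# How many pods that each request REQUEST_GIB of GPU memory fit on one GPU
# with TOTAL_GIB of memory, capped at the 20-pods-per-GPU cGPU limit.
cgpu_pods_per_gpu() {
  local total=$1 request=$2 n
  n=$(( total / request ))        # integer division: whole pods only
  if [ "$n" -gt 20 ]; then n=20; fi
  echo "$n"
}

# Usage (requires cluster access; namespace is an assumption):
#   kubectl -n kube-system patch daemonset gpushare-device-plugin-ds \
#     --type merge -p "$(gpushare_priority_patch)"
#   cgpu_pods_per_gpu 15 4
```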

Version requirements:

| Configuration | Version requirement |
| --- | --- |
| Kubernetes version | If the ack-ai-installer component version is earlier than 1.12.0, Kubernetes 1.18.8 or later is supported. If the ack-ai-installer component version is 1.12.0 or later, only Kubernetes 1.20 or later is supported. |
| NVIDIA driver version | ≥ 418.87.01 |
| Container runtime version | Docker: ≥ 19.03.5; containerd: ≥ 1.4.3 |
| Operating system | Alibaba Cloud Linux 3.x (Container-optimized OS requires ack-ai-installer 1.12.6 or later), Alibaba Cloud Linux 2.x, CentOS 7.6, CentOS 7.7, CentOS 7.9, Ubuntu 22.04 |
| GPU model | NVIDIA P, NVIDIA T, NVIDIA V, NVIDIA A, and NVIDIA H series |
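To check a node against the version table from a shell, you can compare dotted version strings with sort -V. This is a minimal Bash sketch under stated assumptions: it relies on GNU coreutils sort (for -V) and on nvidia-smi being installed on the node, and version_ge, min_k8s_for_installer, and check_driver are hypothetical helper names.

```shell
#!/usr/bin/env bash

# version_ge A B: succeed if version A >= version B.
# sort -V orders version strings; if B sorts first (or A == B), A >= B.
version_ge() {
  [ "$(printf '%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

# min_k8s_for_installer V: minimum Kubernetes version for ack-ai-installer V,
# following the table (1.12.0 or later needs 1.20, older accepts 1.18.8).
min_k8s_for_installer() {
  if version_ge "$1" 1.12.0; then echo 1.20; else echo 1.18.8; fi
}

# check_driver: compare the first GPU's NVIDIA driver with 418.87.01.
check_driver() {
  local v
  v="$(nvidia-smi --query-gpu=driver_version --format=csv,noheader | head -n1)"
  if version_ge "$v" 418.87.01; then
    echo "driver $v OK"
  else
    echo "driver $v is older than 418.87.01" >&2
    return 1
  fi
}

# Usage on a GPU-accelerated node:
#   check_driver
#   min_k8s_for_installer 1.12.6
```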

Install the GPU sharing component

Step 1: Install the GPU sharing component

The cloud-native AI suite is not deployed

Log on to the ACK console. In the left navigation pane, click Clusters.

On the Clusters page, find the cluster you want and click its name. In the left-side navigation pane, choose .

On the Cloud-native AI Suite page, click Deploy.

On the Deploy Cloud-native AI Suite page, select Scheduling Policy Extension (Batch Task Scheduling, GPU Sharing, Topology-aware GPU Scheduling).

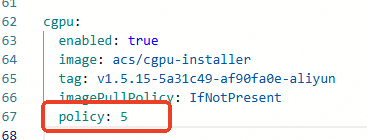

(Optional) Click Advanced to the right of Scheduling Policy Extension (Batch Task Scheduling, GPU Sharing, Topology-aware GPU Scheduling). In the Parameters panel, modify the policy parameter of cGPU, and then click OK. If you do not need the computing power sharing feature provided by cGPU, we recommend that you keep the default setting policy: 5, which is native scheduling. For more information about the policies supported by cGPU, see Install and use cGPU.

In the lower part of the Cloud-native AI Suite page, click Deploy Cloud-native AI Suite.

After the component is installed, you can find the installed GPU sharing component ack-ai-installer in the component list on the Cloud-native AI Suite page.

The cloud-native AI suite is deployed

Log on to the ACK console. In the left navigation pane, click Clusters.

On the Clusters page, find the cluster you want and click its name. In the left-side navigation pane, choose .

Find the ack-ai-installer component and click Deploy in the Actions column.

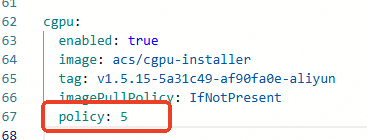

(Optional) In the Parameters panel, modify the policy parameter of cGPU. If you do not need the computing power sharing feature provided by cGPU, we recommend that you keep the default setting policy: 5, which is native scheduling. For more information about the policies supported by cGPU, see Install and use cGPU.

After you complete the modification, click OK.

After the component is installed, the Status of ack-ai-installer changes to Deployed.

Step 2: Enable GPU sharing and GPU memory isolation

On the Clusters page, find the cluster to manage and click its name. In the left navigation pane, choose .

On the Node Pools page, click Create Node Pool. For more information about how to configure the node pool, see Create and manage a node pool.

On the Create Node Pool page, configure the parameters to create a node pool and click Confirm. The following table describes the key parameters:

| Parameter | Description |
| --- | --- |
| Expected Nodes | The initial number of nodes in the node pool. If you do not want to create nodes in the node pool, set this parameter to 0. |
| Node Labels | Add labels based on your business requirements. For more information about node labels, see GPU node model specifications and scheduling label guidelines. In this example, click the icon next to Node Label, and set Key to ack.node.gpu.schedule and Value to cgpu. The value cgpu indicates that GPU sharing is enabled on the node: pods on the node need to request only GPU memory, and multiple pods can share the same GPU to implement GPU memory isolation and computing power sharing. |

Important: For more information about common issues that may occur when you use the memory isolation capability provided by cGPU, see Usage notes for the memory isolation capability of cGPU.

After you add the GPU sharing label to a node, do not change the label value by running the kubectl label nodes command or by using the label management feature on the Nodes page in the ACK console. Otherwise, issues may occur. For more information about these issues, see Issues that may occur if you use the kubectl label nodes command or use the label management feature to change label values in the ACK console. We recommend that you configure GPU sharing based on node pools.
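After a node pool carries the ack.node.gpu.schedule=cgpu label, workloads request GPU memory instead of whole GPUs. The following Bash sketch prints such a Pod manifest. It assumes that the extended resource is named aliyun.com/gpu-mem and is counted in GiB; verify the resource name in the Allocatable section of kubectl describe node for one of your cGPU nodes. The helper render_cgpu_pod and the image registry.example.com/my-gpu-app:latest are hypothetical placeholders, and the nodeSelector is optional.

```shell
#!/usr/bin/env bash

# render_cgpu_pod NAME GIB [IMAGE]
# Print a Pod manifest that requests GIB of GPU memory on a cGPU node.
# ASSUMPTION: the extended resource name aliyun.com/gpu-mem (unit: GiB).
render_cgpu_pod() {
  local name=$1 gib=$2 image=${3:-registry.example.com/my-gpu-app:latest}
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${name}
spec:
  nodeSelector:
    ack.node.gpu.schedule: cgpu   # optional: pin to the cGPU node pool
  containers:
  - name: app
    image: ${image}               # hypothetical placeholder image
    resources:
      limits:
        aliyun.com/gpu-mem: ${gib}   # assumed resource name, in GiB
EOF
}

# Usage (requires cluster access):
#   render_cgpu_pod cgpu-demo 3 | kubectl apply -f -
```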

Step 3: Add GPU-accelerated nodes

If you have already added GPU-accelerated nodes to the node pool when you created the node pool, skip this step.

After the node pool is created, you can add GPU-accelerated nodes to the node pool. To add GPU-accelerated nodes, you need to set the architecture for the instance type to GPU-accelerated. For more information, see Add existing ECS instances or Create and manage a node pool.

Step 4: Install and use the GPU inspection tool

Download kubectl-inspect-cgpu to a directory that is included in the PATH environment variable. In this example, /usr/local/bin/ is used.

If you use Linux, run the following command to download kubectl-inspect-cgpu:

wget http://aliacs-k8s-cn-beijing.oss-cn-beijing.aliyuncs.com/gpushare/kubectl-inspect-cgpu-linux -O /usr/local/bin/kubectl-inspect-cgpu

If you use macOS, run the following command to download kubectl-inspect-cgpu:

wget http://aliacs-k8s-cn-beijing.oss-cn-beijing.aliyuncs.com/gpushare/kubectl-inspect-cgpu-darwin -O /usr/local/bin/kubectl-inspect-cgpu

Run the following command to grant execute permissions to kubectl-inspect-cgpu:

chmod +x /usr/local/bin/kubectl-inspect-cgpu

Run the following command to query the GPU usage of the cluster:

kubectl inspect cgpu

The system displays information similar to the following output:

    NAME                       IPADDRESS      GPU0(Allocated/Total)  GPU Memory(GiB)
    cn-shanghai.192.168.6.104  192.168.6.104  0/15                   0/15
    ----------------------------------------------------------------------
    Allocated/Total GPU Memory In Cluster:
    0/15 (0%)
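The download and query steps above can be wrapped in a small script. The following Bash sketch picks the binary URL from the output of uname -s and extracts the cluster-wide allocated and total GPU memory from kubectl inspect cgpu output. The helper names cgpu_inspect_url and cgpu_cluster_usage are hypothetical, and the parser assumes that the summary uses the allocated/total (percent) form shown in the sample output; the tool's output may differ between versions.

```shell
#!/usr/bin/env bash

# cgpu_inspect_url OS: print the kubectl-inspect-cgpu download URL for the
# value of `uname -s` (Linux or Darwin). URLs are the ones listed above.
cgpu_inspect_url() {
  local base="http://aliacs-k8s-cn-beijing.oss-cn-beijing.aliyuncs.com/gpushare"
  case "$1" in
    Linux)  echo "$base/kubectl-inspect-cgpu-linux" ;;
    Darwin) echo "$base/kubectl-inspect-cgpu-darwin" ;;
    *)      echo "unsupported OS: $1" >&2; return 1 ;;
  esac
}

# cgpu_cluster_usage: read `kubectl inspect cgpu` output on stdin and print
# "<allocated> <total>" from the last "A/T (P%)" summary it finds.
# Per-node columns such as "0/15 0/15" do not match because the pattern
# requires " (" right after the fraction. Exits 1 if nothing matches.
cgpu_cluster_usage() {
  awk '
    match($0, "[0-9]+/[0-9]+ [(][0-9]+%[)]") {
      s = substr($0, RSTART, RLENGTH)
      split(s, parts, "[/ ]")
      alloc = parts[1]; total = parts[2]; found = 1
    }
    END { if (found) print alloc, total; else exit 1 }
  '
}

# Usage:
#   url="$(cgpu_inspect_url "$(uname -s)")" &&
#     wget "$url" -O /usr/local/bin/kubectl-inspect-cgpu &&
#     chmod +x /usr/local/bin/kubectl-inspect-cgpu
#   kubectl inspect cgpu | cgpu_cluster_usage
```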

Update the GPU sharing component

Step 1: Determine the update method for the GPU sharing component

You must select an update method based on how the GPU sharing component (ack-ai-installer) was installed in your cluster. There are two ways to install the GPU sharing component.

Use the cloud-native AI suite (recommended): Install the GPU sharing component ack-ai-installer on the Cloud-native AI Suite page.

Use Helm (no longer available): Install the GPU sharing component ack-ai-installer on the Helm page. This installation method is no longer available. However, components that were already installed by using this method can still be updated by using Helm.

Important: If you uninstall a component that was installed by using Helm, you must activate the cloud-native AI suite and install the component through the suite when you reinstall it.

Step 2: Update the component

Update through the cloud-native AI suite

Log on to the ACK console. In the left navigation pane, click Clusters.

On the Clusters page, find the cluster you want and click its name. In the left-side navigation pane, choose .

In the Components section, find the ack-ai-installer component and click Upgrade in the Actions column.

Update through the App Catalog

Log on to the ACK console. In the left navigation pane, click Clusters.

On the Clusters page, find the cluster you want and click its name. In the left-side navigation pane, choose .

In the Helm list, find the ack-ai-installer component and click Update in the Actions column. Follow the page instructions to select the latest chart version and complete the component update.

Important: If you want to customize the chart configuration, modify the configuration before you confirm the component update.

After the update, check the Helm list to confirm that the chart version of the ack-ai-installer component is the latest version.
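To check the chart version from the command line instead of the console, helm list can filter releases by name. The following Bash sketch assumes Helm 3, access to the cluster, and a release named ack-ai-installer; chart_version is a hypothetical helper that strips the chart name from a CHART column value such as ack-ai-installer-1.12.6.

```shell
#!/usr/bin/env bash

# chart_version CHART [NAME]: print the version part of a Helm CHART value
# of the form "<NAME>-<version>". NAME defaults to ack-ai-installer.
chart_version() {
  local chart=$1 name=${2:-ack-ai-installer}
  case "$chart" in
    "$name"-*) echo "${chart#"$name"-}" ;;   # drop the "<NAME>-" prefix
    *) echo "unexpected chart: $chart" >&2; return 1 ;;
  esac
}

# Usage (assumes Helm 3 and a release named ack-ai-installer):
#   helm list --all-namespaces --filter '^ack-ai-installer$'
# Then pass the CHART column value, for example:
#   chart_version ack-ai-installer-1.12.6   # prints 1.12.6
```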

Step 3: Update existing nodes

After the ack-ai-installer component is updated, the cGPU version on existing nodes is not automatically updated. Refer to the following instructions to determine whether nodes have cGPU isolation enabled.

If your cluster contains GPU-accelerated nodes with cGPU isolation enabled, you must update the cGPU version on these existing nodes. For more information, see Update the cGPU version on a node.

If your cluster does not contain nodes with cGPU isolation enabled, skip this step.

Note: If a node has the ack.node.gpu.schedule=cgpu or ack.node.gpu.schedule=core_mem label, cGPU isolation is enabled on the node.

Updating the cGPU version on existing nodes requires stopping all application pods on the nodes. Perform this operation during off-peak hours for your business.
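To find the nodes whose cGPU version must be updated, you can filter nodes by the labels in the note above. This Bash sketch is illustrative: cgpu_isolated_nodes is a hypothetical helper that reads "NAME LABEL" pairs on stdin, and the commands in the usage comments assume kubectl access to the cluster.

```shell
#!/usr/bin/env bash

# cgpu_isolated_nodes: read "NAME LABEL" lines on stdin and print the names
# of nodes whose ack.node.gpu.schedule value enables cGPU isolation
# (cgpu or core_mem). Other values, including <none>, are skipped.
cgpu_isolated_nodes() {
  awk '$2 == "cgpu" || $2 == "core_mem" { print $1 }'
}

# Usage (requires cluster access):
#   kubectl get nodes --no-headers \
#     -o custom-columns='NAME:.metadata.name,LABEL:.metadata.labels.ack\.node\.gpu\.schedule' \
#     | cgpu_isolated_nodes
# A set-based label selector returns the same nodes directly:
#   kubectl get nodes -l 'ack.node.gpu.schedule in (cgpu,core_mem)'
```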