Deploying multiple instances improves application stability but can lead to idle resources and higher cluster costs. Manual scaling is labor-intensive and often delayed. You can use Nginx Ingress to implement Horizontal Pod Autoscaler (HPA) for multiple applications. HPA dynamically adjusts the number of pod replicas based on the workload. This ensures application stability and fast responses. It also optimizes resource utilization and reduces costs. This topic describes how to use Nginx Ingress to implement HPA for multiple applications.

An Ingress forwards external requests to a Service in the cluster. The Service then sends the requests to a pod. In a production environment, you can configure automatic scaling based on request volume. This volume is exposed by the nginx_ingress_controller_requests metric. You can use this built-in metric from the Nginx Ingress Controller to implement HPA. The Nginx Ingress Controller in ACK clusters is an enhanced version of the community edition and is easier to use.

Preparations

Before you use Nginx Ingress to implement Horizontal Pod Autoscaling (HPA) for multiple applications, you must transform Alibaba Cloud Prometheus metrics into HPA-compatible metrics.

Deploy the Alibaba Cloud Prometheus monitoring component. For more information, see Use Alibaba Cloud Prometheus for monitoring.

Deploy the ack-alibaba-cloud-metrics-adapter component and configure its

prometheus.urlfield.Install the Apache Benchmark stress testing tool.

In this tutorial, you will create two Deployments and their corresponding Services, and configure an Ingress with different access paths to route external traffic. Then, you will configure an HPA for the application based on the nginx_ingress_controller_requests metric and use the selector.matchLabels.service field to filter the metric. This enables pods to scale in and out in response to traffic changes.

Step 1: Create applications and services

Use the following YAML files to create the application Deployments and their corresponding Services.

Create a file named nginx1.yaml and copy the following content into it.

Run the following command to create the test-app application and its corresponding Service.

kubectl apply -f nginx1.yamlCreate a file named nginx2.yaml and copy the following content into it.

Run the following command to create the sample-app application and its corresponding Service.

kubectl apply -f nginx2.yaml

Step 2: Create an Ingress

Create a file named ingress.yaml and copy the following content into it.

Run the following command to deploy the Ingress resource.

kubectl apply -f ingress.yamlRun the following command to retrieve the Ingress resource.

kubectl get ingress -o wideExpected output:

NAME CLASS HOSTS ADDRESS PORTS AGE test-ingress nginx test.example.com 10.XX.XX.10 80 55sAfter the deployment is successful, you can access the host using the

/and/homepaths. The NGINX Ingress controller automatically routes your requests to the sample-app and test-app applications based on the request paths. You can query thenginx_ingress_controller_requestsmetric in Alibaba Cloud Prometheus to retrieve information about requests to each application.

Step 3: Convert Prometheus metrics to HPA-compatible metrics

Modify the adapter-config file

Log on to the ACK console. In the left navigation pane, click Clusters.

On the Clusters page, find the cluster you want and click its name. In the left-side navigation pane, choose .

On the Helm page, click ack-alibaba-cloud-metrics-adapter. In the Resource section, click adapter-config, and then click Edit YAML in the upper-right corner of the page.

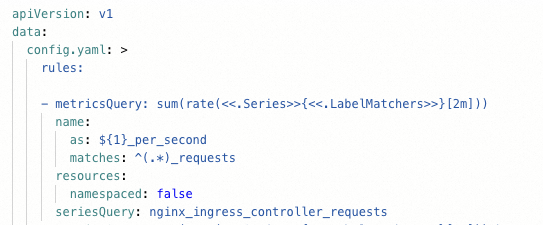

Replace the values of the corresponding fields with the values in the following code. Then, click OK at the bottom of the page.

For more information, see Horizontal pod autoscaling based on Alibaba Cloud Prometheus metrics.

rules: - metricsQuery: sum(rate(<<.Series>>{<<.LabelMatchers>>}[2m])) name: as: ${1}_per_second matches: ^(.*)_requests resources: namespaced: false seriesQuery: nginx_ingress_controller_requests

View the metric output

Run the following command to view the metric output.

kubectl get --raw "/apis/external.metrics.k8s.io/v1beta1/namespaces/*/nginx_ingress_controller_per_second" | jq .The query result is as follows:

{

"kind": "ExternalMetricValueList",

"apiVersion": "external.metrics.k8s.io/v1beta1",

"metadata": {},

"items": [

{

"metricName": "nginx_ingress_controller_per_second",

"metricLabels": {},

"timestamp": "2025-07-25T07:56:04Z",

"value": "0"

}

]

}Step 4: Create HPAs

Create a file named hpa.yaml and copy the following content into it.

Run the following command to deploy an HPA for the sample-app and test-app applications.

kubectl apply -f hpa.yamlRun the following command to check the HPA deployment status.

kubectl get hpaExpected output:

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE sample-hpa Deployment/sample-app 0/30 (avg) 1 10 1 74s test-hpa Deployment/test-app 0/30 (avg) 1 10 1 59m

Step 5: Verify the results

After the HPAs are deployed, use the Apache Benchmark tool to run a stress test. Observe whether the applications scale out as the number of requests increases.

Run the following command to stress test the

/homepath of the host.ab -c 50 -n 5000 test.example.com/homeRun the following command to check the HPA status.

kubectl get hpaExpected output:

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE sample-hpa Deployment/sample-app 0/30 (avg) 1 10 1 22m test-hpa Deployment/test-app 22096m/30 (avg) 1 10 3 80mRun the following command to stress test the root path of the host.

ab -c 50 -n 5000 test.example.com/Run the following command to check the HPA status.

kubectl get hpaExpected output:

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE sample-hpa Deployment/sample-app 27778m/30 (avg) 1 10 2 38m test-hpa Deployment/test-app 0/30 (avg) 1 10 1 96mThe results show that the applications successfully scaled out when the request volume exceeded the threshold.

References

Multi-zone balancing is a common deployment method for data-intensive services in high-availability scenarios. When the workload increases, applications that use a multi-zone balanced scheduling policy must automatically scale out instances across multiple zones to meet the scheduling demands of the cluster. For more information, see Implement rapid and simultaneous elastic scaling across multiple zones.

You can build custom operating system images to simplify elastic scaling in complex scenarios. For more information, see Elastic optimization with custom images.