The GPU sharing feature is available in ACK Pro clusters. You can select different computing power allocation policies by setting the policy of the cGPU component. This topic describes how to configure a computing power allocation policy for GPU sharing.

For more information about cGPU, see What is cGPU?

Prerequisites

An ACK Pro cluster is created and the Kubernetes version of the cluster is 1.18.8 or later. For more information about how to update the Kubernetes version, see Manually update ACK clusters.

cGPU 1.0.6 or later is used. For more information about how to update cGPU, see Update the cGPU version on a node.
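The two version requirements above can be checked with a small comparison helper. This is a minimal sketch, not part of the ACK tooling: the function name version_ge and the sample version strings are hypothetical, and it assumes GNU sort, whose -V option sorts version numbers.

```shell
#!/bin/sh
# Return success when version $1 is greater than or equal to version $2.
# Relies on the -V (version sort) option of GNU sort.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Hypothetical values: substitute the versions reported by your cluster and nodes.
k8s_version="1.20.4"
cgpu_version="1.0.5"

if version_ge "$k8s_version" "1.18.8"; then
    echo "Kubernetes $k8s_version meets the 1.18.8 minimum"
else
    echo "Kubernetes $k8s_version is older than 1.18.8; update the cluster first"
fi

if version_ge "$cgpu_version" "1.0.6"; then
    echo "cGPU $cgpu_version meets the 1.0.6 minimum"
else
    echo "cGPU $cgpu_version is older than 1.0.6; update cGPU on the node first"
fi
```

A plain string comparison would rank 1.9 above 1.18, which is why the sketch uses a version-aware sort.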

Precautions

If the cGPU isolation module is installed on the node before you install the cGPU component, you must restart the node to make the cGPU policy take effect. For more information, see Restart an instance.

Note: To check whether the cGPU isolation module is installed on a node, log on to the node and run the cat /proc/cgpu_km/version command. If the system returns a cGPU version number, the cGPU isolation module is installed.

If the cGPU isolation module is not installed or the module has been uninstalled, you must install the module to make the cGPU policy take effect.

The nodes that have GPU sharing enabled in a cluster use the same cGPU policy.
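The check described in the note can be wrapped in a small script that is run on the node. This is a sketch only: the function name cgpu_module_version is hypothetical, and the optional path argument exists purely so that the function can be exercised without a GPU node.

```shell
#!/bin/sh
# Print the cGPU isolation module version, or fail if the version file is absent.
# The optional first argument overrides the version file path (useful for testing).
cgpu_module_version() {
    version_file="${1:-/proc/cgpu_km/version}"
    if [ -r "$version_file" ]; then
        cat "$version_file"
    else
        echo "cGPU isolation module not found at $version_file" >&2
        return 1
    fi
}

if version="$(cgpu_module_version 2>/dev/null)"; then
    echo "cGPU isolation module is installed, version: $version"
else
    echo "cGPU isolation module is not installed on this node"
fi
```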

Step 1: Check whether the cGPU component is installed

The operations that are required for configuring a computing power allocation policy vary based on whether the cGPU component is installed. You must check whether the cGPU component is installed before you configure a computing power allocation policy.

Log on to the ACK console. In the left-side navigation pane, click Clusters.

On the Clusters page, find the cluster that you want to manage and click its name. In the left-side navigation pane, go to the Helm page.

On the Helm page, check whether the ack-ai-installer component exists.

If ack-ai-installer exists, the cGPU component is installed. If it does not exist, the component is not installed.
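The same check can likely be made from a terminal instead of the console. The sketch below assumes that Helm 3 is installed, that it is configured for the cluster, and that the release shown on the Helm page is visible to helm list under the name ack-ai-installer. None of this is confirmed by this topic, and the helper name has_release is hypothetical.

```shell
#!/bin/sh
# Succeed if the release name in $1 appears as a whole line on standard input.
has_release() {
    grep -qx -- "$1"
}

# helm list -A -q prints one release name per line across all namespaces.
if releases="$(helm list -A -q 2>/dev/null)"; then
    if printf '%s\n' "$releases" | has_release "ack-ai-installer"; then
        echo "ack-ai-installer found: the cGPU component is installed"
    else
        echo "ack-ai-installer not found: the cGPU component is not installed"
    fi
else
    echo "Could not query Helm releases; check that helm and cluster access are configured"
fi
```

Matching the whole line avoids a false positive from a release whose name merely contains ack-ai-installer.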

Step 2: Configure a computing power allocation policy

The following section describes how to configure a computing power allocation policy for GPU sharing when the cGPU component is installed and when the component is not installed.

The cGPU component is not installed

Log on to the ACK console. In the left-side navigation pane, click Clusters.

On the Clusters page, find the cluster that you want to manage and click its name. In the left-side navigation pane, go to the Cloud-native AI Suite page.

On the Cloud-native AI Suite page, click Deploy.

In the Scheduling section, select Scheduling Policy Extension (Batch Task Scheduling, GPU Sharing, Topology-aware GPU Scheduling), and click Advanced.

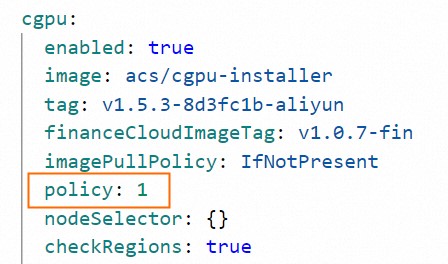

On the Parameters page, modify the policy field, and click OK. The following table describes the valid values. For more information, see Usage examples of cGPU.

| Value | Description |
| --- | --- |
| 0 | Fair-share scheduling. Each container occupies a fixed time slice. The proportion of the time slice is 1/max_inst. |
| 1 | Preemptive scheduling. Each container occupies as many time slices as possible. The proportion of the time slices is 1/Number of containers. |
| 2 | Weight-based preemptive scheduling. When ALIYUN_COM_GPU_SCHD_WEIGHT is set to a value greater than 1, weight-based preemptive scheduling is used. |
| 3 | Fixed percentage scheduling. Computing power is scheduled at a fixed percentage. |
| 4 | Soft scheduling. Compared with preemptive scheduling, soft scheduling isolates GPU resources in a softer manner. |
| 5 | Built-in scheduling. The built-in scheduling policy for the GPU driver. |

In the lower part of the page, click Deploy Cloud-native AI Suite.
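To make the difference between the first two policy values concrete, the following sketch maps each value listed above to its name and computes the nominal time-slice share for policies 0 and 1. The function names and the sample values for max_inst and the container count are hypothetical. The percentages use integer division and only illustrate the 1/max_inst and 1/Number of containers proportions; they are not measured results.

```shell
#!/bin/sh
# Map a cGPU policy value (0-5) to the scheduling mode it selects.
policy_name() {
    case "$1" in
        0) echo "fair-share" ;;
        1) echo "preemptive" ;;
        2) echo "weight-based preemptive" ;;
        3) echo "fixed percentage" ;;
        4) echo "soft" ;;
        5) echo "built-in" ;;
        *) echo "unknown policy: $1" >&2; return 1 ;;
    esac
}

# Nominal share of GPU time per container as a whole percentage (integer division).
# Pass max_inst for policy 0, or the number of running containers for policy 1.
share_percent() {
    echo $((100 / $1))
}

max_inst=4      # hypothetical value
containers=2    # hypothetical value
echo "policy 0 ($(policy_name 0)): $(share_percent "$max_inst")% per container"
echo "policy 1 ($(policy_name 1)): $(share_percent "$containers")% per container"
```

With these sample values, fair-share scheduling holds each container to a quarter of the time slices even though only two containers run, whereas preemptive scheduling lets the two containers split the time slices evenly.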

The cGPU component is installed

Run the following command to modify the DaemonSet in which the cGPU isolation module of the cGPU component runs:

    kubectl edit daemonset cgpu-installer -n kube-system

Modify the DaemonSet in which the cGPU isolation module runs and save the changes.

View the image version of the DaemonSet in the image field. Make sure that the image version is 1.0.6 or later. Example of the image field:

    image: registry-vpc.cn-hongkong.aliyuncs.com/acs/cgpu-installer:<Image version>

Modify the value field. In the containers.env parameter, set the value field for the POLICY key.

    # Other fields are omitted.
    spec:
      containers:
      - env:
        - name: POLICY
          value: "1"
    # Other fields are omitted.

The following table describes the values of the value field.

| Value | Description |
| --- | --- |
| 0 | Fair-share scheduling. Each container occupies a fixed time slice. The proportion of the time slice is 1/max_inst. |
| 1 | Preemptive scheduling. Each container occupies as many time slices as possible. The proportion of the time slices is 1/Number of containers. |
| 2 | Weight-based preemptive scheduling. When ALIYUN_COM_GPU_SCHD_WEIGHT is set to a value greater than 1, weight-based preemptive scheduling is used. |
| 3 | Fixed percentage scheduling. Computing power is scheduled at a fixed percentage. |
| 4 | Soft scheduling. Compared with preemptive scheduling, soft scheduling isolates GPU resources in a softer manner. |
| 5 | Built-in scheduling. The built-in scheduling policy for the GPU driver. |

Restart the node that has GPU sharing enabled.

For more information, see Restart an instance.
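As a non-interactive alternative to kubectl edit, the POLICY variable can probably be set and read back with standard kubectl subcommands. The following is a sketch under assumptions: the function names are hypothetical, the POLICY variable is assumed to live in the pod template of the cgpu-installer DaemonSet, and kubectl set env applies the variable to every container in the pod template unless a container is selected with -c.

```shell
#!/bin/sh
# Succeed only for the documented policy values (0-5).
valid_policy() {
    case "$1" in
        0|1|2|3|4|5) return 0 ;;
        *) return 1 ;;
    esac
}

# Set POLICY on the cgpu-installer DaemonSet without opening an editor.
set_cgpu_policy() {
    if ! valid_policy "$1"; then
        echo "invalid policy: $1 (expected 0-5)" >&2
        return 1
    fi
    kubectl set env daemonset/cgpu-installer -n kube-system "POLICY=$1"
}

# Print the POLICY value currently stored in the DaemonSet pod template.
get_cgpu_policy() {
    kubectl get daemonset cgpu-installer -n kube-system \
        -o jsonpath='{.spec.template.spec.containers[*].env[?(@.name=="POLICY")].value}'
}

# Example usage, commented out so that nothing changes by accident:
# set_cgpu_policy 1 && get_cgpu_policy
```

Validating the value before calling kubectl keeps a typo from reaching the cluster. The node restart described above is still required after the change.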