By Sun Jincheng

Many popular open-source projects, such as Beam, Spark, and Kafka, support Python. As a popular project itself, Flink naturally needs to support Python for better usability. Why do these projects add Python support? What makes Python a preferred language for them? The answer lies in the statistics. Although the Apache Flink runtime is written in Java, the upcoming Apache Flink 1.9.0 will include new machine learning interfaces and flink-python modules. Let's take a closer look at this addition.

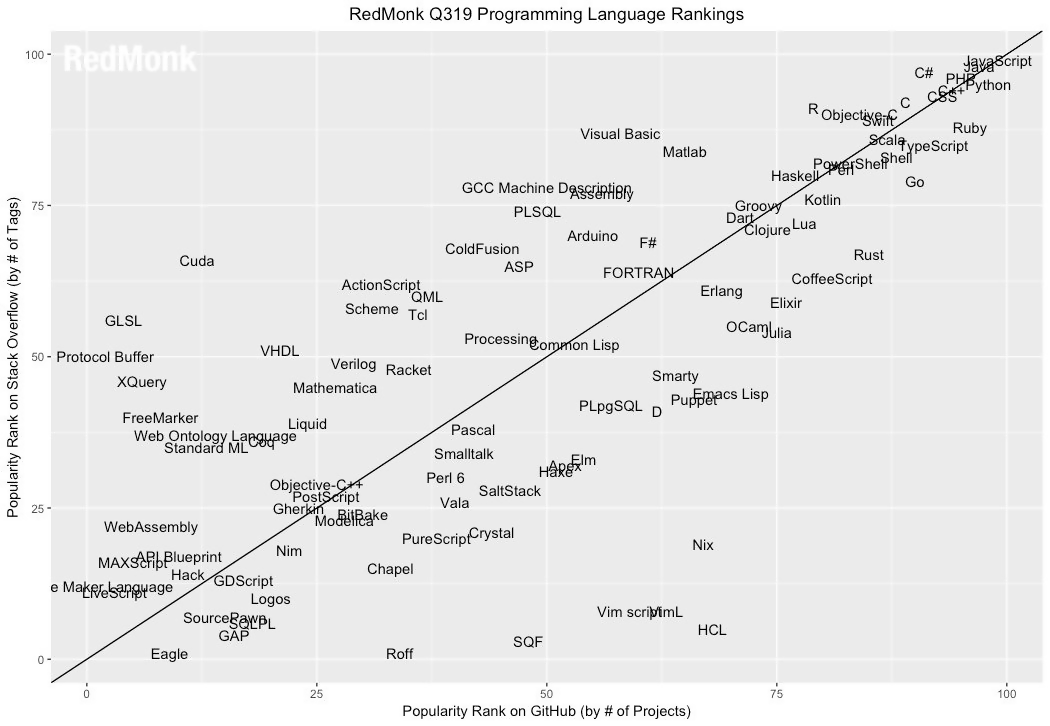

The image below shows the RedMonk Programming Language Rankings:

The top 10 rankings shown in the image above are based on their popularity on GitHub and Stack Overflow:

Python stands in third place. R and Go, two other popular programming languages, rank 15th and 16th, respectively. This ranking shows how large the Python user base is. Adding Python support to a project is an effective way to expand that project's audience.

Big data computing is one of the most popular fields in the Internet industry today. The era of standalone computing is gone: the processing capability of a single machine has fallen far behind data growth. Let's see why big data computing has become one of the most important aspects of the Internet industry.

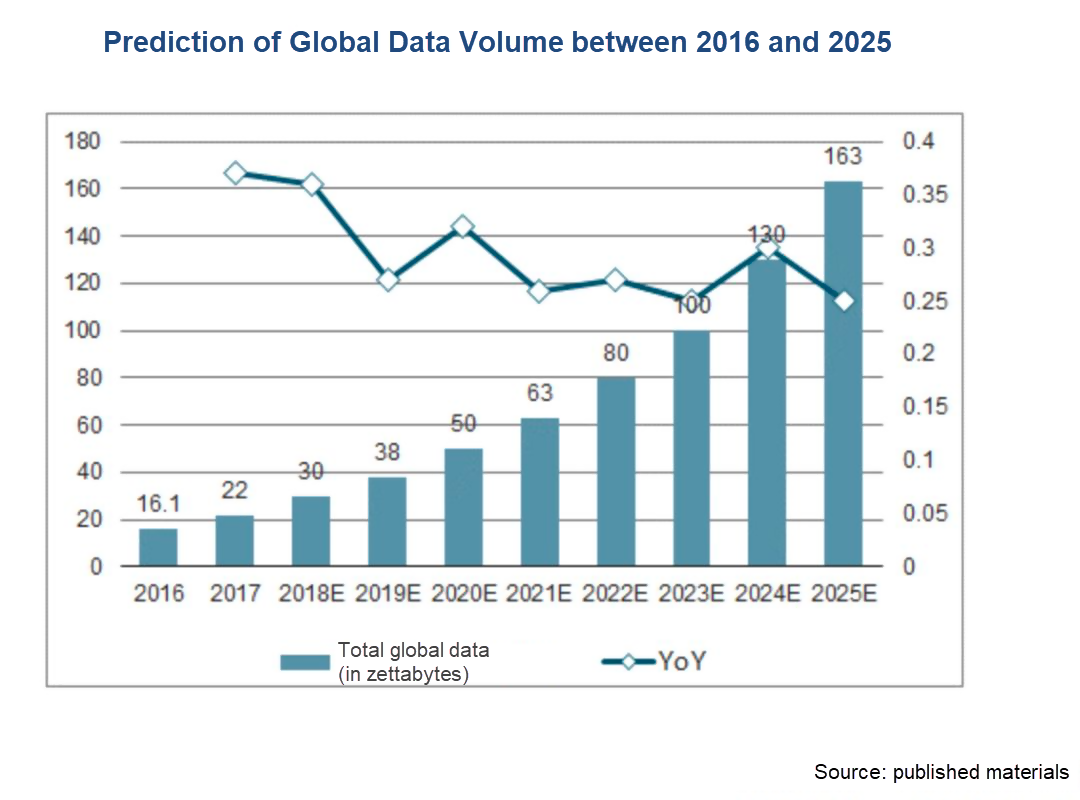

Rapid developments in IT, such as cloud computing, IoT, and artificial intelligence, are increasing data volumes significantly. The image below shows a projection in which the total amount of global data grows from 16.1 ZB to 163 ZB in just 10 years, an increase so large that standalone servers can no longer meet the data storage and processing requirements.

The data in the preceding picture is given in ZB. Here I want to briefly list the units of data in ascending order: Bit, Byte, KB, MB, GB, TB, PB, EB, ZB, YB, BB, NB, and DB. These units are converted as follows:

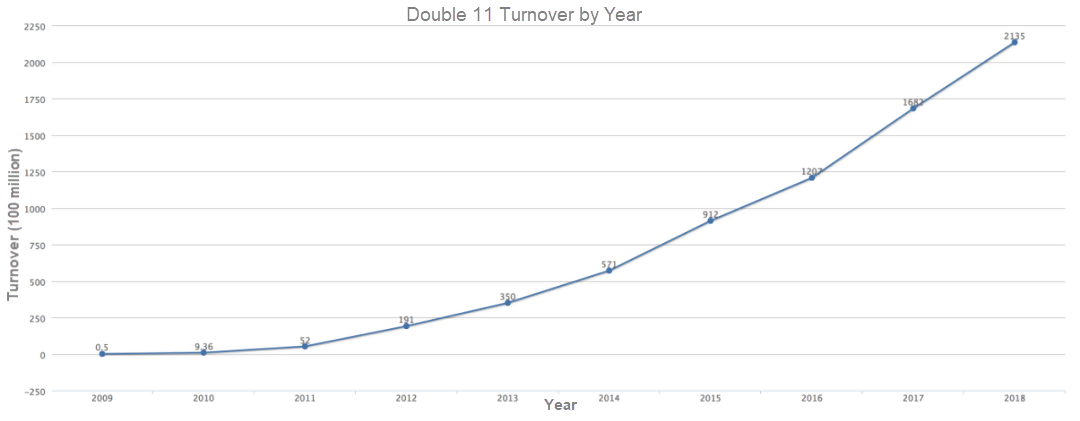
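The conversion can also be worked through in a few lines of Python. This is a minimal sketch, assuming each step up the ladder is a factor of 1024 (the binary convention) and 8 bits per byte; if the table above uses powers of 1000, only `STEP` changes. The helper names `to_bytes` and `convert` are made up for this illustration.

```python
# Unit ladder as listed in the article. BB, NB, and DB are informal names
# beyond the standardized prefixes; they are kept only to mirror the text.
UNITS = ["Byte", "KB", "MB", "GB", "TB", "PB", "EB", "ZB", "YB", "BB", "NB", "DB"]

# Assumption: every step up the ladder multiplies by 1024.
STEP = 1024
BITS_PER_BYTE = 8


def to_bytes(value, unit):
    """Convert a value expressed in `unit` to bytes."""
    return value * STEP ** UNITS.index(unit)


def convert(value, from_unit, to_unit):
    """Convert a value between any two units on the ladder."""
    return to_bytes(value, from_unit) / to_bytes(1, to_unit)


if __name__ == "__main__":
    # How many terabytes fit into one zettabyte under the 1024 assumption?
    print(convert(1, "ZB", "TB"))
    # The projected 163 ZB expressed in bits.
    print(to_bytes(163, "ZB") * BITS_PER_BYTE)
```

Under these assumptions, one ZB is 1024 to the third power TB, which gives a sense of why a single server with a few terabytes of disk cannot keep up.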

It is only natural to question the size of the global data and look into the causes. In fact, I was also skeptical when I saw the statistics. After gathering and reviewing information, I found that global data is indeed increasing rapidly. For example, millions of pictures are posted on Facebook every day, and the New York Stock Exchange creates terabytes of transaction data every day. The Double 11 promo event last year saw a record-setting transaction amount of 213.5 billion yuan, and behind this successful event is a data processing capability of 162 GB/s for Alibaba's internal monitoring logs alone. Internet companies like Alibaba also contribute to rapid data growth, as evidenced by the Double 11 transaction amounts over the last 10 years:

How can we explore the value of big data? Undoubtedly, big data statistical analysis can help us make informed decisions. For example, in a recommendation system, we can analyze a buyer's long-term buying habits and purchases to find that buyer's preferred items and further provide more appropriate recommendations. As mentioned before, a standalone server cannot process such a large amount of data. So, how can we perform a statistical analysis of all the data within a limited period? In this regard, we need to thank Google for these three useful papers:

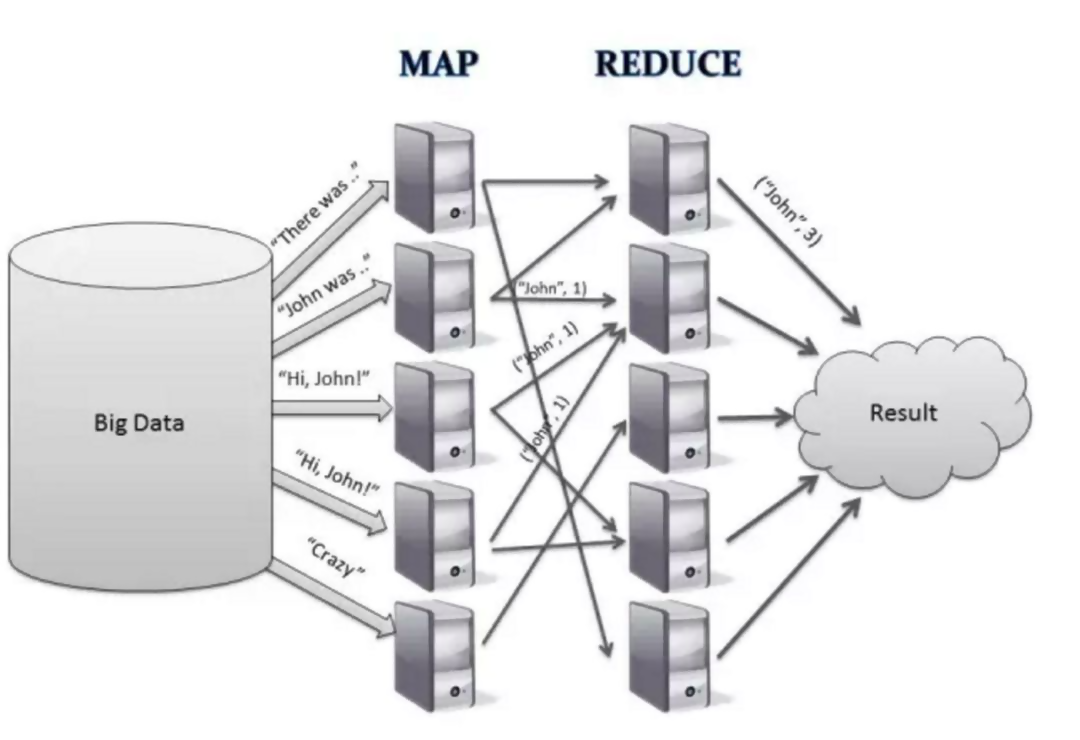

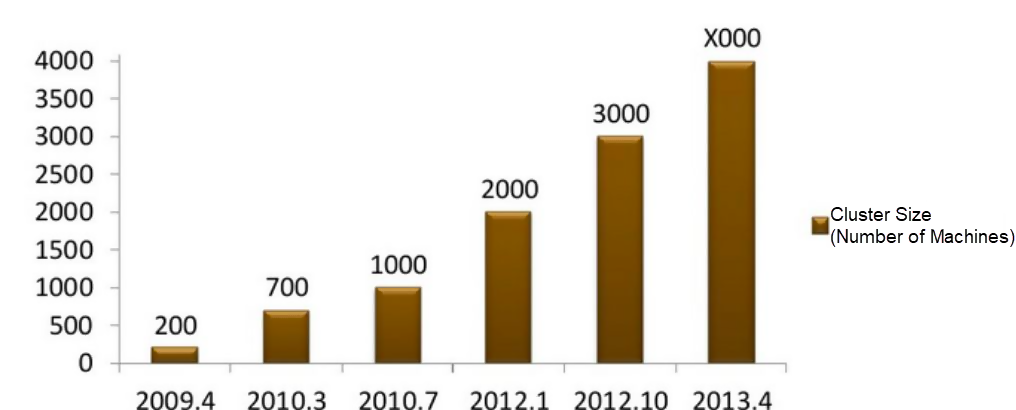

Thanks to the three Google papers, the open-source Apache community quickly built the Hadoop ecosystem: HDFS, MapReduce (a programming model), and HBase (a NoSQL database). The Hadoop ecosystem drew a lot of attention from both academia and industry, soon gained popularity, and was adopted around the world. In 2008, Alibaba launched the Hadoop-based YARN project, making Hadoop the core technical system of Alibaba's distributed computing. The project had a cluster of one thousand machines in 2010. The following picture shows the development of the Hadoop clusters at Alibaba:

However, to develop MapReduce applications on Hadoop, developers need to be proficient in Java and have a good understanding of how MapReduce works. This raises the barrier to MapReduce development. To make MapReduce development easier, the open-source community created several frameworks, of which Hive is a typical example. Hive's SQL-like query language (HiveQL) lets you define and describe MapReduce computations in a way similar to SQL. For example, the Word Count operation that once required tens or hundreds of lines of code can now be implemented with a single SQL statement, which significantly lowers the barrier to using MapReduce. As the Hadoop ecosystem matured, Hadoop-based distributed computing for big data became more popular industry-wide.
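To make the contrast concrete, here is a small sketch of Word Count written twice: once as explicit map, shuffle, and reduce phases, and once as a single `GROUP BY` query. It runs on one machine and uses Python's built-in `sqlite3` purely as a stand-in for a SQL engine; it is not Hive and not a Hadoop job, and the sample lines and function names are invented for the illustration.

```python
import sqlite3
from collections import defaultdict

# Hypothetical sample input.
LINES = ["flink supports python", "python is popular", "flink is fast"]


def map_phase(lines):
    """Map: emit a (word, 1) pair for every word in every line."""
    for line in lines:
        for word in line.split():
            yield word, 1


def shuffle_phase(pairs):
    """Shuffle: group the emitted values by key (the word)."""
    groups = defaultdict(list)
    for word, count in pairs:
        groups[word].append(count)
    return groups


def reduce_phase(groups):
    """Reduce: sum the grouped values for each word."""
    return {word: sum(counts) for word, counts in groups.items()}


def word_count_mapreduce(lines):
    """Word Count as three hand-written phases."""
    return reduce_phase(shuffle_phase(map_phase(lines)))


def word_count_sql(lines):
    """The same result from one declarative statement.

    SQLite is only a stand-in; the words are split in Python before loading.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE words (word TEXT)")
    conn.executemany(
        "INSERT INTO words (word) VALUES (?)",
        [(word,) for line in lines for word in line.split()],
    )
    rows = conn.execute(
        "SELECT word, COUNT(*) FROM words GROUP BY word"
    ).fetchall()
    conn.close()
    return dict(rows)


if __name__ == "__main__":
    print(word_count_mapreduce(LINES))
    print(word_count_sql(LINES))
```

Both functions return the same counts. The difference is that the SQL version states what to compute and leaves the grouping and aggregation to the engine, which is the convenience Hive brought to MapReduce users.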

Each data entry carries certain information. The timeliness of information is measured by the interval between the moment the information leaves its source and the moment it has been received, processed, transferred, and put to use. The shorter the interval, the higher the timeliness. In general, higher timeliness brings higher value. For example, in a preference recommendation scenario, if a special offer for an oven is recommended to a buyer seconds after the buyer purchased a steamer, the chances that the buyer will also buy the oven are good. If the oven recommendation is displayed a day later, after a batch analysis of the steamer purchase, the buyer is less likely to buy it. This exposes one disadvantage of Hadoop batch computations: low timeliness. To meet the requirements of the big data era, several real-time computing platforms were developed. In 2009, Spark was born in the AMPLab at the University of California, Berkeley. In 2010, Nathan Marz of BackType proposed the core concept of Storm. In 2010, Flink was also launched as a research project in Berlin, Germany.
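The gap between batch and per-event processing can be put into numbers with a small calculation. The sketch below is a simplified model, not a measurement of any real system: it assumes a batch result becomes available exactly when its window closes (ignoring the job's own run time) and that a streaming system has a fixed per-event latency. The function names and sample times are hypothetical.

```python
from datetime import datetime, timedelta


def batch_delay(event_time, batch_interval, epoch):
    """Time from an event until the batch window containing it closes.

    Assumption: results are available the instant the window ends.
    """
    elapsed = event_time - epoch
    windows_done = elapsed // batch_interval  # full windows before the event
    window_end = epoch + (windows_done + 1) * batch_interval
    return window_end - event_time


def streaming_delay(per_event_latency):
    """Time from an event to its result when events are processed on arrival."""
    return per_event_latency


if __name__ == "__main__":
    # A steamer is bought at 09:30; the batch job covers one calendar day.
    purchase = datetime(2019, 1, 1, 9, 30)
    day_start = datetime(2019, 1, 1)
    print("batch:", batch_delay(purchase, timedelta(days=1), day_start))
    print("stream:", streaming_delay(timedelta(seconds=2)))
```

In this model the daily batch cannot recommend the oven until the day's window has closed, while the per-event path reacts within its processing latency.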

In a Go match held in 2016, Google's AlphaGo defeated Lee Sedol, a ninth-dan Go player and world Go champion, 4:1. This led more people to see deep learning from a new perspective and sparked a wave of interest in AI. According to the definition given in the Baidu Encyclopedia, artificial intelligence (AI) is a new branch of computer science that studies and develops theories, methods, techniques, applications, and systems that simulate, extend, and expand human intelligence.

Machine learning is a method or tool for exploring artificial intelligence. Machine learning is a high priority for big data platforms such as Spark and Flink. Spark has invested heavily in machine learning in recent years. PySpark has integrated many excellent libraries used in ML work (for example, Pandas) and provides much more comprehensive support than Flink. Therefore, Flink 1.9 will introduce new ML interfaces and flink-python modules to close this gap.

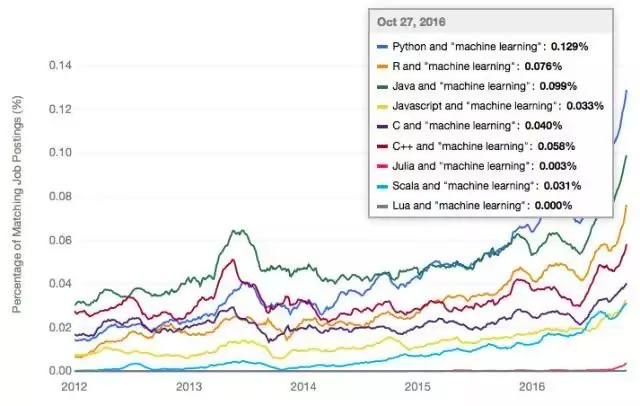

What does machine learning have to do with Python? Let's look at some statistics to see which language is the most popular for machine learning.

Jean-Francois Puget, a data scientist at IBM, once performed an interesting analysis. He gathered information about changes in job requirements from a well-known job-hunting website to find out the most popular programming language at the time. Narrowing the search to machine learning led him to a similar conclusion.

He found that Python was the most popular programming language for machine learning at the time. Although this survey was conducted in 2016, it shows that Python plays an important role in machine learning, which the RedMonk statistics mentioned earlier also support.

Beyond surveys, the features of Python and its existing ecosystem also show why Python is the best language for machine learning.

Python is an interpreted, object-oriented programming language created by the Dutch programmer Guido van Rossum in 1989 and first released in 1991. Although interpreted languages tend to run slowly, Python's design philosophy favors clarity: "There should be one, and preferably only one, obvious way to do it." When developing new Python syntax with many choices available, Python developers usually choose clear syntax that has little or no ambiguity. Thanks to this simplicity, Python has a large user base. Many libraries used in machine learning are also available in Python, such as NumPy, SciPy, and Pandas (for processing structured data). Since the rich Python ecosystem makes machine learning work much more convenient, it is no surprise that Python has become the most popular programming language for machine learning.

In this article, we tried to understand the reasons behind Apache Flink adding support for a Python API. Concrete statistics indicate that we are now in the era of big data. Exploring the value of data requires big data analysis. The need for data timeliness gave birth to the well-known stream computing platform Apache Flink.

In the era of big data computing, AI is a major development trend, and machine learning is one important aspect of AI. Because of the features of the Python language and the advantages of its ecosystem, Python is the best language for machine learning. This is one important reason why Apache Flink plans to support a Python API. Python API support in Apache Flink is an inevitable step toward meeting the requirements of the big data era.
