By Yi Li

Knative is a technical framework for Serverless computing based on Kubernetes. It can simplify the development and O&M of Kubernetes applications. It became a CNCF incubation project in March 2022. Knative consists of Knative Serving, which supports HTTP online applications, and Knative Eventing, which supports CloudEvents and event-driven applications.

Knative can support various containerized runtime environments. Today, let's explore the use of WebAssembly technology as a new serverless runtime.

WebAssembly (WASM) is an emerging W3C specification. It is a virtual instruction set architecture (ISA). Its initial goal was to let programs written in C/C++ and other languages run safely and efficiently in the browser. In December 2019, W3C announced that the core specification of WebAssembly had become a Web standard, which promoted the adoption of WASM technology. Today, WebAssembly is supported by mainstream browsers (such as Google Chrome, Microsoft Edge, Apple Safari, and Mozilla Firefox). More importantly, as a secure, portable, and efficient virtual machine sandbox, WebAssembly can safely run applications anywhere, on any operating system and any CPU architecture.

Mozilla proposed the WebAssembly System Interface (WASI) in 2019, which provides standard APIs (like POSIX) to standardize the interaction of WebAssembly applications with file systems, memory management, and other system resources. The emergence of WASI expands the application scenarios of WASM, allowing it to run various types of server applications as a virtual machine. In order to continue promoting the development of the WebAssembly ecosystem, Mozilla, Fastly, Intel, and Red Hat jointly established the Bytecode Alliance to lead WASI standards, WebAssembly runtime, tools, and other work. Microsoft, Google, ARM, and other companies are also members of the alliance.

WebAssembly technology is still evolving rapidly. In April 2022, W3C published the first public working drafts of WebAssembly 2.0, an important sign of its maturity and development.

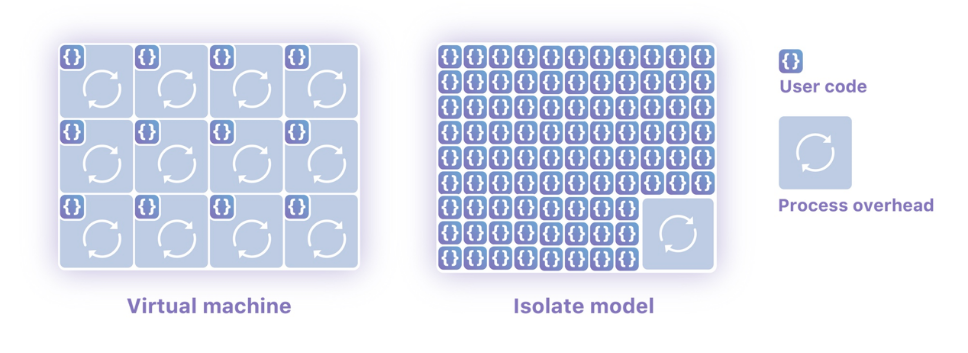

As an emerging backend technology, WASM/WASI has the characteristics of native security, portability, high performance, and lightweight, which is suitable for running as a distributed application environment. Unlike containers, which are independent and isolated operating system processes, WASM applications can implement security isolation within a process and support millisecond-level cold start time and extremely low resource consumption. The following figure shows the difference:

Image Source: Cloudflare

Currently, WASM/WASI is in the early stage of development. There are still many technical limitations, such as the lack of support for threads and low-level socket networking, which limit the application scenarios of WASM on the server. The community is exploring an application development model that plays to WASM's strengths and avoids its weaknesses. Engineers at Microsoft's DeisLabs drew inspiration from the history of HTTP server development and came up with the WAGI (WebAssembly Gateway Interface) project [1]. The concept of WAGI comes from CGI.

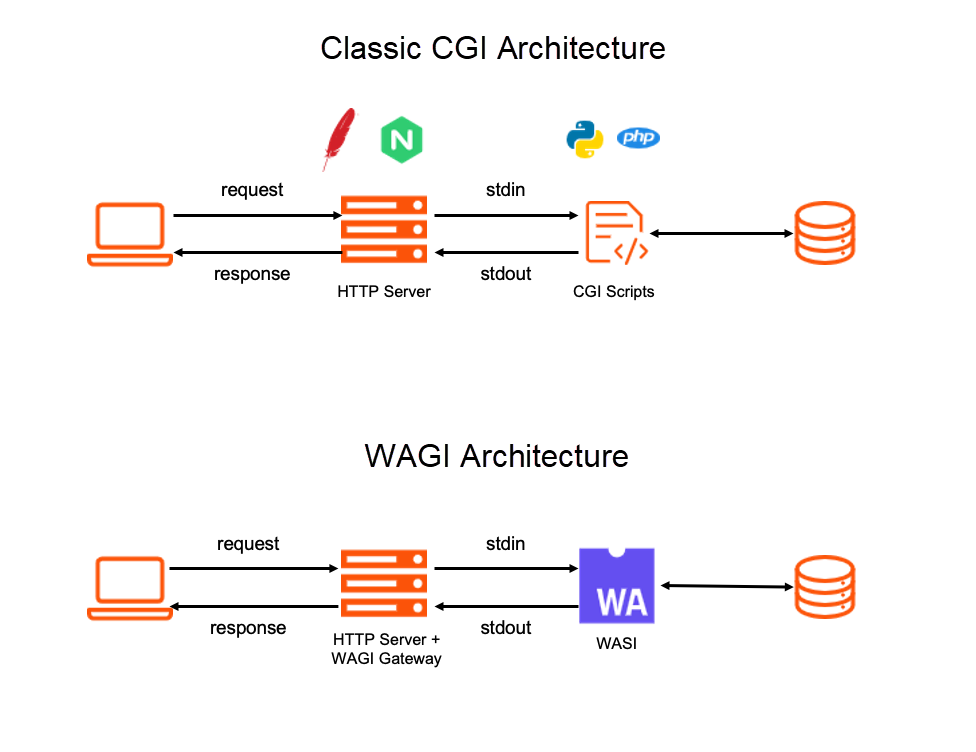

Common Gateway Interface (CGI) is a specification for the HTTP server to interact with other programs. The HTTP server communicates with the CGI scripting language through standard input and output interfaces. Developers can use Python, PHP, Perl, and other implementations to process HTTP requests.

If we can call WASI applications through CGI specifications, developers can easily use WebAssembly to write Web APIs or micro-applications without handling many network implementation details in WASM. The following figure compares the conceptual architecture diagrams of CGI and WAGI.

The two architectures are very similar, but they also differ. In the traditional CGI architecture, each HTTP request creates an OS process, and the operating system's process mechanism provides the security isolation. In WAGI, the WASI application is called in a separate thread for each HTTP request, and the WebAssembly virtual machine provides the security isolation between applications. In theory, WAGI can have lower resource overhead and shorter response times than CGI.
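To make the CGI contract concrete, here is a minimal sketch in Python. It is not taken from WAGI. It uses the standard CGI variable names (`REQUEST_METHOD`, `PATH_INFO`, `CONTENT_LENGTH`), while `handle_request` and `demo` are hypothetical names chosen for illustration. The handler contains no networking code: it reads metadata from the environment, reads the body from standard input, and writes headers and body to standard output.

```python
import io


def handle_request(environ, stdin, stdout):
    """Handle one request in the CGI style: metadata in, headers and body out."""
    # CGI passes request metadata through environment variables.
    method = environ.get("REQUEST_METHOD", "GET")
    path = environ.get("PATH_INFO", "/")
    # A request body, if any, arrives on standard input.
    length = int(environ.get("CONTENT_LENGTH") or 0)
    body = stdin.read(length) if length else ""

    # The response is a set of header lines, a blank line, then the body.
    stdout.write("Content-Type: text/plain\n")
    stdout.write("\n")
    stdout.write(f"{method} {path} received {len(body)} characters\n")


def demo():
    """Run the handler against an in-memory request instead of a real server."""
    out = io.StringIO()
    env = {"REQUEST_METHOD": "POST", "PATH_INFO": "/hello", "CONTENT_LENGTH": "5"}
    handle_request(env, io.StringIO("world"), out)
    return out.getvalue()
```

A real CGI script would call `handle_request(os.environ, sys.stdin, sys.stdout)`. A WAGI module follows the same pattern when compiled to WASM, although the exact set of variables WAGI provides should be checked against the project documentation.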

This article does not analyze WAGI's architecture or WAGI application development in detail. You can read the project documentation for more information.

Furthermore, if we can use WAGI as a Knative Serving runtime, we can build a bridge to apply WebAssembly to Serverless.

Cold start performance is a key metric in Serverless scenarios. To better understand WAGI's execution efficiency, we can run a simple stress test with ab (ApacheBench):

    $ ab -k -n 10000 -c 100 http://127.0.0.1:3000/
    ...
    Server Software:
    Server Hostname:        127.0.0.1
    Server Port:            3000

    Document Path:          /
    Document Length:        12 bytes

    Concurrency Level:      100
    Time taken for tests:   7.632 seconds
    Complete requests:      10000
    Failed requests:        0
    Keep-Alive requests:    10000
    Total transferred:      1510000 bytes
    HTML transferred:       120000 bytes
    Requests per second:    1310.31 [#/sec] (mean)
    Time per request:       76.318 [ms] (mean)
    Time per request:       0.763 [ms] (mean, across all concurrent requests)
    Transfer rate:          193.22 [Kbytes/sec] received

    Connection Times (ms)
                  min  mean[+/-sd] median   max
    Connect:        0    0   0.6      0       9
    Processing:     8   76  29.6     74     214
    Waiting:        1   76  29.6     74     214
    Total:          8   76  29.5     74     214

    Percentage of the requests served within a certain time (ms)
      50%     74
      66%     88
      75%     95
      80%    100
      90%    115
      95%    125
      98%    139
      99%    150
     100%    214 (longest request)

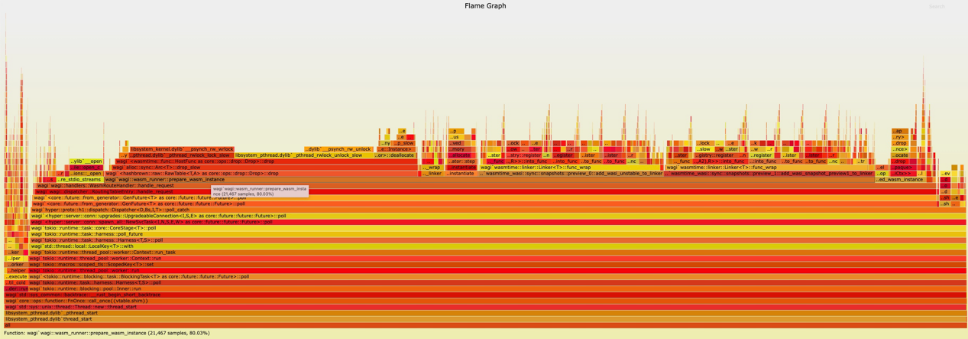

The P90 response time is 115 ms, which does not match our expectation that WASM is lightweight. The flame graph quickly points to the problem: the prepare_wasm_instance function accounts for 80% of the application's total running time.

After analyzing the code, we found that for every HTTP request, WAGI has to link the WASI and wasi-http extensions again and configure the environment for the compiled WASM application. This consumes a lot of time. Once the problem is located, the solution is simple: restructure the execution logic so that these preparations run only once during initialization instead of being repeated for each HTTP request. Please refer to the Optimized Implementation [2] for details.
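The idea behind this optimization can be illustrated with a small Python model. This is not the WAGI code, which is written in Rust (see [2] for the real change). `Runtime`, `PerRequestHandler`, `CachedHandler`, and `count_prepare_calls` are hypothetical names, and the "preparation" here is only a counter standing in for the expensive linking and environment setup.

```python
class Runtime:
    """Toy stand-in for a WASM host; it only counts how often setup runs."""

    def __init__(self):
        self.prepare_calls = 0

    def prepare_instance(self, module_name):
        # Hypothetical expensive step: link host functions, configure the environment.
        self.prepare_calls += 1
        return {"module": module_name, "linked": ["wasi", "wasi-http"]}


class PerRequestHandler:
    """Before: every request pays the preparation cost."""

    def __init__(self, runtime, module_name):
        self.runtime = runtime
        self.module_name = module_name

    def handle(self, path):
        prepared = self.runtime.prepare_instance(self.module_name)
        return f"{prepared['module']} served {path}"


class CachedHandler:
    """After: preparation runs once at startup and the result is reused."""

    def __init__(self, runtime, module_name):
        self.prepared = runtime.prepare_instance(module_name)

    def handle(self, path):
        return f"{self.prepared['module']} served {path}"


def count_prepare_calls(handler_cls, requests=100):
    """Serve a number of requests and report how many times setup ran."""
    runtime = Runtime()
    handler = handler_cls(runtime, "hello.wasm")
    for i in range(requests):
        handler.handle(f"/item/{i}")
    return runtime.prepare_calls
```

With 100 requests, the first handler prepares 100 times and the second prepares once. Which parts of the setup can safely be shared between requests without weakening isolation is a decision for the real runtime, and this sketch does not model it.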

We rerun the stress test.

    $ ab -k -n 10000 -c 100 http://127.0.0.1:3000/
    ...
    Server Software:
    Server Hostname:        127.0.0.1
    Server Port:            3000

    Document Path:          /
    Document Length:        12 bytes

    Concurrency Level:      100
    Time taken for tests:   1.328 seconds
    Complete requests:      10000
    Failed requests:        0
    Keep-Alive requests:    10000
    Total transferred:      1510000 bytes
    HTML transferred:       120000 bytes
    Requests per second:    7532.13 [#/sec] (mean)
    Time per request:       13.276 [ms] (mean)
    Time per request:       0.133 [ms] (mean, across all concurrent requests)
    Transfer rate:          1110.70 [Kbytes/sec] received

    Connection Times (ms)
                  min  mean[+/-sd] median   max
    Connect:        0    0   0.6      0       9
    Processing:     1   13   5.7     13      37
    Waiting:        1   13   5.7     13      37
    Total:          1   13   5.6     13      37

    Percentage of the requests served within a certain time (ms)
      50%     13
      66%     15
      75%     17
      80%     18
      90%     21
      95%     23
      98%     25
      99%     27
     100%     37 (longest request)

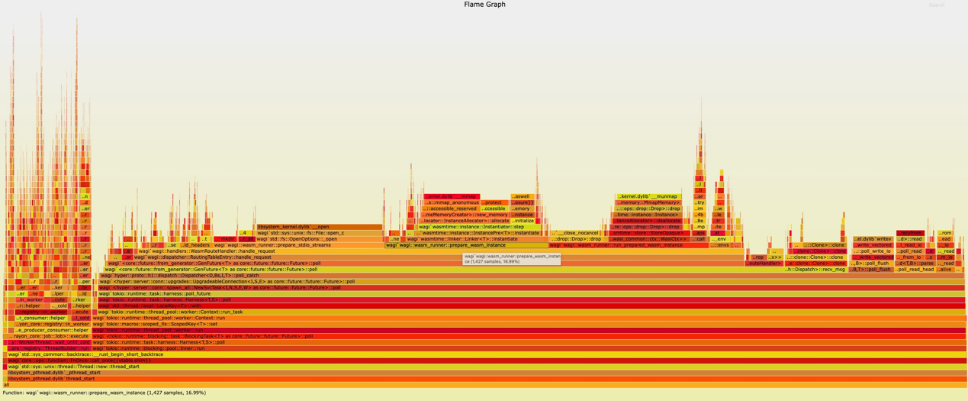

In the optimized implementation, the P90 response time has dropped to 21 ms, and the share of time spent in prepare_wasm_instance has dropped to 17%. The overall cold start efficiency has improved.
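As a quick sanity check on the two ab reports, the short Python sketch below recomputes the mean time per request from the concurrency level and throughput, and compares the two runs. The figures are copied from the outputs in this article, and the helper name `mean_latency_ms` is only illustrative.

```python
def mean_latency_ms(concurrency, requests_per_second):
    """Mean time per request as ab reports it: concurrency / throughput."""
    return concurrency / requests_per_second * 1000.0


# Figures copied from the two ab runs in this article.
before = {"rps": 1310.31, "p90_ms": 115, "concurrency": 100}
after = {"rps": 7532.13, "p90_ms": 21, "concurrency": 100}

throughput_gain = after["rps"] / before["rps"]
p90_reduction = 1 - after["p90_ms"] / before["p90_ms"]

print(f"mean latency before: {mean_latency_ms(before['concurrency'], before['rps']):.3f} ms")
print(f"mean latency after:  {mean_latency_ms(after['concurrency'], after['rps']):.3f} ms")
print(f"throughput gain:     {throughput_gain:.2f}x")
print(f"P90 reduction:       {p90_reduction:.0%}")
```

The recomputed means agree with the "Time per request" lines in both reports. Throughput is between five and six times higher after the change, and the P90 latency is roughly 80% lower.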

Note: The performance analysis in this article was done with Flamegraph [3].

To run WAGI as a Knative application, we need to add SIGTERM handling to WAGI so that WAGI containers can shut down gracefully. The details are omitted here.
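The general pattern for graceful shutdown on SIGTERM looks like the following Python sketch, built on the standard library `http.server` module. It is not the WAGI change itself. `Hello` and `serve_until_sigterm` are hypothetical names, and a production server would also need to decide how long to wait for in-flight requests.

```python
import signal
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class Hello(BaseHTTPRequestHandler):
    """Tiny handler so the sketch has something to serve."""

    def do_GET(self):
        body = b"Oh hi world\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, fmt, *args):
        pass  # keep the example quiet


def serve_until_sigterm(server):
    """Serve requests until SIGTERM arrives, then stop accepting and clean up."""
    stop = threading.Event()
    # Must be called from the main thread; the handler only flips a flag.
    signal.signal(signal.SIGTERM, lambda signum, frame: stop.set())

    worker = threading.Thread(target=server.serve_forever, daemon=True)
    worker.start()

    # Wake up periodically so a pending signal handler gets a chance to run.
    while not stop.wait(0.5):
        pass

    server.shutdown()      # ask serve_forever() to return
    server.server_close()  # release the listening socket
    worker.join()
```

For example, `serve_until_sigterm(HTTPServer(("0.0.0.0", 3000), Hello))` would keep serving until the process receives SIGTERM, which Kubernetes sends when it terminates a pod.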

You can use Minikube to create a local test environment by referring to the Knative Installation Documentation [4].

Note: If you are in mainland China, you need a way to access the Knative images hosted on gcr.io.

A simpler way is to use Alibaba Cloud Serverless Container Service for Kubernetes (ASK) [5] to create a serverless Kubernetes cluster. ASK has built-in Knative support [6], so you can develop and use Knative applications without complicated configuration and installation.

First, we use WAGI to define a Knative service:

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: autoscale-wagi
      namespace: default
    spec:
      template:
        metadata:
          annotations:
            # Knative concurrency-based autoscaling (default).
            autoscaling.knative.dev/class: kpa.autoscaling.knative.dev
            autoscaling.knative.dev/metric: concurrency
            # Target 10 requests in-flight per pod.
            autoscaling.knative.dev/target: "10"
            # Disable scale to zero with a min scale of 1.
            autoscaling.knative.dev/min-scale: "1"
            # Limit scaling to 10 pods.
            autoscaling.knative.dev/max-scale: "10"
        spec:
          containers:
          - image: registry.cn-hangzhou.aliyuncs.com/denverdino/knative-wagi:0.8.1-with-cache

Then we deploy the service and verify that it responds:

    $ kubectl apply -f knative_test.yaml
    $ kubectl get ksvc autoscale-wagi
    NAME             URL                                                LATESTCREATED          LATESTREADY            READY   REASON
    autoscale-wagi   http://autoscale-wagi.default.127.0.0.1.sslip.io   autoscale-wagi-00002   autoscale-wagi-00002   True
    $ curl http://autoscale-wagi.default.127.0.0.1.sslip.io
    Oh hi world
    $ curl http://autoscale-wagi.default.127.0.0.1.sslip.io/hello
    hello world

You can also run some stress tests to see Knative's autoscaling in action.
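Before running a stress test, it can help to have a rough mental model of what the annotations above ask for. The Python sketch below is a deliberately simplified approximation, not Knative's actual autoscaler algorithm: it ignores target utilization, the stable and panic windows, and metric averaging. The function name `desired_pods` is hypothetical, and its defaults mirror the annotation values in the service definition.

```python
import math


def desired_pods(in_flight_requests, target=10, min_scale=1, max_scale=10):
    """Simplified concurrency-based sizing: ceil(load / target), then clamp."""
    wanted = math.ceil(in_flight_requests / target)
    return max(min_scale, min(max_scale, wanted))


# Rough expectation for a few load levels under the settings above.
for load in (0, 35, 100, 500):
    print(f"{load:>4} in-flight requests -> about {desired_pods(load)} pod(s)")
```

Under this model, the service never drops below one pod and never grows beyond ten, however high the concurrency goes. The numbers you observe in a real test will differ because of the factors this sketch leaves out.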

This article introduces the WAGI project, which decouples the network processing details of the HTTP server from the WASM application logic. This makes it easy to combine WASM and WASI applications with Serverless frameworks (like Knative). On the one hand, we can reuse the elasticity and large-scale resource scheduling capabilities of Knative and Kubernetes. On the other hand, we can take advantage of the security isolation, portability, and lightweight nature of WebAssembly.

In the previous article (WebAssembly + Dapr = Next-Generation Cloud-Native Runtime?), I introduced an idea to decouple WASM applications from external service dependencies through Dapr to solve the contradictions between portability and diversified service capabilities.

These experiments explore the possible boundaries of the technology. Their main purpose is to hear everyone's thoughts about the next generation of distributed application frameworks and runtime environments.


[1] WAGI (WebAssembly Gateway Interface) project: https://github.com/deislabs/wagi

[2] Optimized Implementation: https://github.com/denverdino/wagi/tree/with_cache

[3] Flamegraph: https://github.com/flamegraph-rs/flamegraph

[4] Knative Installation Documentation: https://knative.dev/docs/install/

[5] Serverless Kubernetes Clusters: https://www.alibabacloud.com/help/en/container-service-for-kubernetes/latest/ask-overview

[7] Project: https://github.com/denverdino/knative-wagi
