By Lu Hao

DataX is an offline data synchronization tool and platform widely used within Alibaba Group to synchronize data between heterogeneous data sources. The Alibaba Cloud Cassandra team recently provided Cassandra reader and writer plug-ins for DataX, extending the set of supported data sources so that users can synchronize data between Cassandra and other data sources. This article describes how to use DataX to synchronize Cassandra data. It provides configuration file examples for common scenarios, suggestions for improving synchronization performance, and measured performance data.

Synchronizing data with DataX takes three steps:

1. Deploy DataX.
2. Write the configuration file of the synchronization task.
3. Run DataX and wait until the synchronization task is completed.

Deploying and running DataX is simple. Download the DataX toolkit from the official website, decompress it to a local directory, and enter the bin directory to run the synchronization task:

```
$ cd {YOUR_DATAX_HOME}/bin
$ python datax.py {YOUR_JOB.json}
```

See this blog for more information about the configuration format of synchronization tasks.
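For scheduled or repeated runs, the launch commands above can be wrapped in a small script. The following is a minimal sketch, not part of DataX: `datax_command` and `run_job` are hypothetical helper names, the install path is whatever directory you decompressed the toolkit into, and the sketch assumes that `datax.py` can be started by its full path and exits with a non-zero status when a job fails.

```python
import os
import subprocess


def datax_command(datax_home, job_file):
    """Build the same command line as `python datax.py {YOUR_JOB.json}`."""
    launcher = os.path.join(datax_home, "bin", "datax.py")
    return ["python", launcher, job_file]


def run_job(datax_home, job_file):
    """Run one synchronization task and wait until it finishes.

    Assumption: a failed job makes datax.py exit with a non-zero status,
    in which case subprocess raises CalledProcessError.
    """
    subprocess.run(datax_command(datax_home, job_file), check=True)
```

Calling `run_job("{YOUR_DATAX_HOME}", "{YOUR_JOB.json}")` with your own paths blocks until the task is completed.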

The following code shows a typical configuration file:

```json
{
  "job": {
    "content": [
      {
        "reader": {
          "name": "streamreader",
          "parameter": {
            "sliceRecordCount": 10,
            "column": [
              {
                "type": "long",
                "value": "10"
              },
              {
                "type": "string",
                "value": "hello,你好,世界-DataX"
              }
            ]
          }
        },
        "writer": {
          "name": "streamwriter",
          "parameter": {
            "encoding": "UTF-8",
            "print": true
          }
        }
      }
    ],
    "setting": {
      "speed": {
        "channel": 5
      }
    }
  }
}
```

The configuration file of a synchronization task includes two sections: setting and content. The setting section contains task scheduling configurations. The content section describes the task itself, including the configuration of the reader and writer plug-ins. For example, to synchronize data from MySQL to Cassandra, set the reader to mysqlreader and the writer to cassandrawriter, and provide the corresponding plug-in configuration.
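Because every job file has the same two-part layout, it can also be generated instead of written by hand. The following is a minimal sketch under stated assumptions: `build_job` is a hypothetical helper, not part of DataX, and the plug-in parameters are copied from the MySQL and Cassandra examples in this article rather than from a real deployment.

```python
import json


def build_job(reader_name, reader_params, writer_name, writer_params, channel=1):
    """Assemble a job: `setting` holds scheduling, `content` holds the plug-ins."""
    return {
        "job": {
            "setting": {"speed": {"channel": channel}},
            "content": [
                {
                    "reader": {"name": reader_name, "parameter": reader_params},
                    "writer": {"name": writer_name, "parameter": writer_params},
                }
            ],
        }
    }


# Example: MySQL as the source, Cassandra as the target.
mysql_params = {
    "username": "root",
    "password": "root",
    "column": ["id", "name"],
    "connection": [
        {"table": ["table"], "jdbcUrl": ["jdbc:mysql://127.0.0.1:3306/database"]}
    ],
}
cassandra_params = {
    "host": "localhost",
    "port": 9042,
    "useSSL": False,
    "keyspace": "test",
    "table": "datax_dst",
    "column": ["id", "name"],
}
job = build_job("mysqlreader", mysql_params, "cassandrawriter", cassandra_params, channel=3)

if __name__ == "__main__":
    # Save this output to a file and pass that file to datax.py.
    print(json.dumps(job, indent=2))
```

Keeping the plug-in parameters in separate dictionaries makes it easy to swap the reader or writer without touching the rest of the job.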

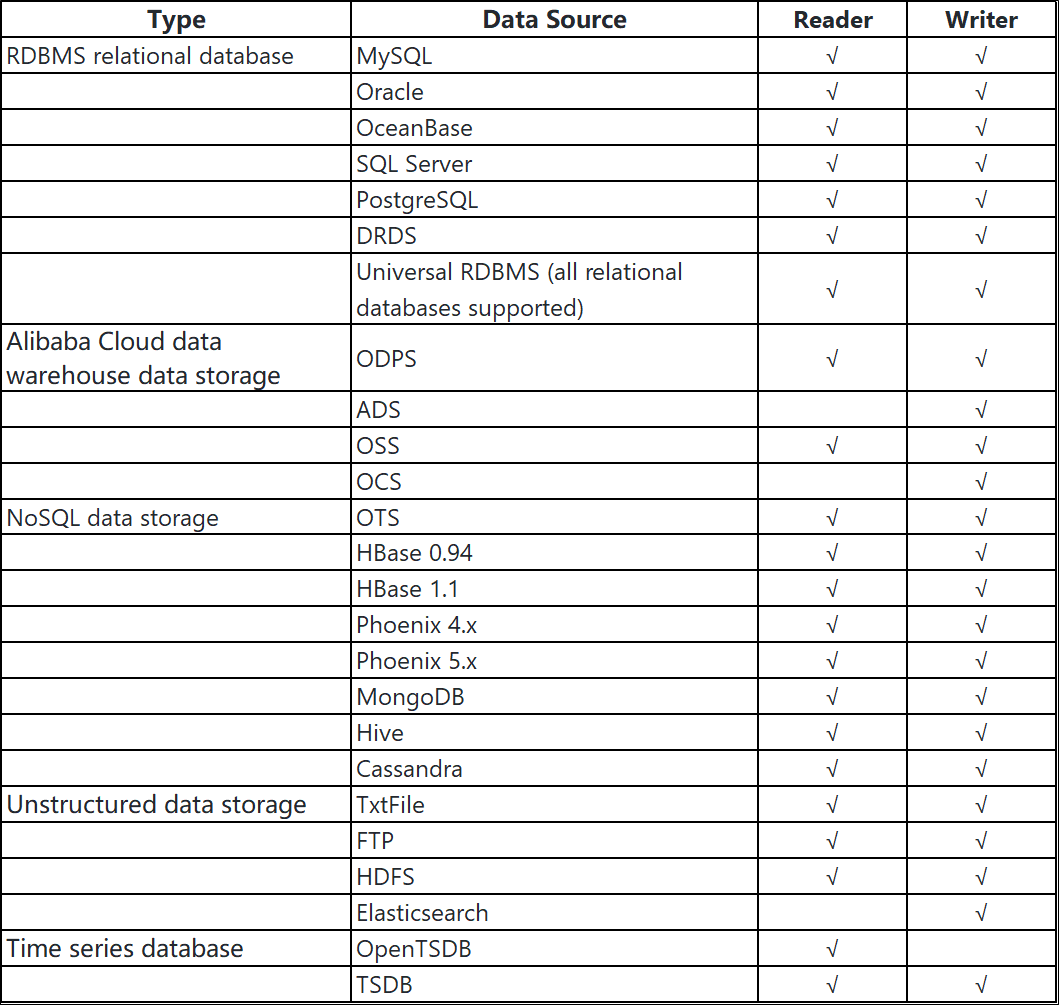

Currently, DataX has a comprehensive plug-in system, and the mainstream RDBMS database, NoSQL, and big data computing systems are all connected. The following figure shows the supported data sources.

The following section introduces several common scenarios.

The most common scenario is data synchronization from one cluster to another, such as overall IDC migration and cloud migration. You need to create the keyspace and schema of the table in the target cluster manually and then use DataX for synchronization. For example, the following configuration file synchronizes data from one table to another table in Cassandra:

```json
{
  "job": {
    "setting": {
      "speed": {
        "channel": 3
      }
    },
    "content": [
      {
        "reader": {
          "name": "cassandrareader",
          "parameter": {
            "host": "localhost",
            "port": 9042,
            "useSSL": false,
            "keyspace": "test",
            "table": "datax_src",
            "column": [
              "id",
              "name"
            ]
          }
        },
        "writer": {
          "name": "cassandrawriter",
          "parameter": {
            "host": "localhost",
            "port": 9042,
            "useSSL": false,
            "keyspace": "test",
            "table": "datax_dst",
            "column": [
              "id",
              "name"
            ]
          }
        }
      }
    ]
  }
}
```

DataX supports multiple data sources and allows you to synchronize data between Cassandra and other data sources. The following configuration file synchronizes data from MySQL to Cassandra:

```json
{
  "job": {
    "setting": {
      "speed": {
        "channel": 3
      }
    },
    "content": [
      {
        "reader": {
          "name": "mysqlreader",
          "parameter": {
            "username": "root",
            "password": "root",
            "column": [
              "id",
              "name"
            ],
            "splitPk": "db_id",
            "connection": [
              {
                "table": [
                  "table"
                ],
                "jdbcUrl": [
                  "jdbc:mysql://127.0.0.1:3306/database"
                ]
              }
            ]
          }
        },
        "writer": {
          "name": "cassandrawriter",
          "parameter": {
            "host": "localhost",
            "port": 9042,
            "useSSL": false,
            "keyspace": "test",
            "table": "datax_dst",
            "column": [
              "id",
              "name"
            ]
          }
        }
      }
    ]
  }
}
```

The reader plug-in provides the where keyword, which lets you synchronize only part of the data. For example, when you regularly synchronize time-series data, you can add a where condition to synchronize only the incremental data. The format of the where condition is the same as in CQL. For example, "where":"textcol='a'" has an effect similar to querying with select * from table_name where textcol = 'a'. In addition, the allowFiltering keyword is used together with where, and its function is the same as the ALLOW FILTERING keyword in CQL. Here is an example:

```json
{
  "job": {
    "setting": {
      "speed": {
        "channel": 1
      }
    },
    "content": [
      {
        "reader": {
          "name": "cassandrareader",
          "parameter": {
            "host": "localhost",
            "port": 9042,
            "useSSL": false,
            "keyspace": "test",
            "table": "datax_src",
            "column": [
              "deviceId",
              "time",
              "log"
            ],
            "where": "time > '2019-09-25'",
            "allowFiltering": true
          }
        },
        "writer": {
          "name": "cassandrawriter",
          "parameter": {
            "host": "localhost",
            "port": 9042,
            "useSSL": false,
            "keyspace": "test",
            "table": "datax_dst",
            "column": [
              "deviceId",
              "time",
              "log"
            ]
          }
        }
      }
    ]
  }
}
```

Take Cassandra-to-Cassandra data synchronization as an example. The following configurations affect the performance of data synchronization tasks:

1) Parallelism

You can increase the synchronization speed by increasing the parallelism of the task with the job.setting.speed.channel parameter. For example, the following configuration runs the synchronization task with ten parallel threads:

```
"job": {
  "setting": {
    "speed": {
      "channel": 10
    }
  },
  ...
```

The Cassandra reader plug-in splits a task by adding token range conditions to the CQL statement, so only clusters that use RandomPartitioner or Murmur3Partitioner can be split correctly. If your cluster uses another partitioner, the Cassandra reader ignores the channel configuration and uses one thread for synchronization.
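To picture what splitting by token range means, consider the sketch below. It is an illustration only, not the plug-in's actual code: it assumes Murmur3Partitioner, whose tokens span -2^63 to 2^63 - 1, and the helper name `split_token_ranges` and the partition key `id` are hypothetical.

```python
MIN_TOKEN = -(2 ** 63)      # smallest Murmur3Partitioner token
MAX_TOKEN = 2 ** 63 - 1     # largest Murmur3Partitioner token


def split_token_ranges(partition_key, slices):
    """Return one CQL token-range condition per slice, covering the whole ring."""
    if slices < 1:
        raise ValueError("slices must be at least 1")
    total = MAX_TOKEN - MIN_TOKEN + 1
    # Evenly spaced lower bounds, closed off by the largest token.
    bounds = [MIN_TOKEN + total * i // slices for i in range(slices)]
    bounds.append(MAX_TOKEN)
    conditions = []
    for i in range(slices):
        low, high = bounds[i], bounds[i + 1]
        # The first slice includes the smallest token; later slices start
        # just above the previous upper bound so no row is read twice.
        low_op = ">=" if i == 0 else ">"
        conditions.append(
            f"token({partition_key}) {low_op} {low} "
            f"AND token({partition_key}) <= {high}"
        )
    return conditions


if __name__ == "__main__":
    for condition in split_token_ranges("id", 3):
        print(f"SELECT * FROM datax_src WHERE {condition}")
```

Each condition can be read by a separate thread, which is why the approach depends on a partitioner with a known, ordered token range.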

2) Batch

You can use UNLOGGED batch in the Cassandra writer plug-in by configuring the batchSize keyword to improve the write speed. Note: There are some restrictions on the use of batch in Cassandra.

3) Connection Pool Configuration

The writer plug-in also provides connection pool-related configuration parameters: connectionsPerHost and maxPendingPerConnection. Please refer to the Java Driver documentation for the specific meaning of these two parameters.

4) Consistency Configuration

The reader and writer plug-ins both provide the consistancyLevel keyword. The default read/write consistency level is LOCAL_QUORUM. If your business scenario tolerates a small amount of inconsistent data between the two clusters, you can use a weaker consistency level than the default to improve read/write performance. For example, you can read data at the ONE level.
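The settings above can be applied to an existing job in one place. The following is a minimal sketch: `apply_tuning` is a hypothetical helper, not part of DataX, and it only sets keywords named in this article. The defaults of 10 channels and a batchSize of 6 mirror the test configuration used later in this article and are not general recommendations. The connection pool parameters are left out because this article gives no values for them.

```python
import copy


def apply_tuning(job, channel=10, batch_size=6, consistency="LOCAL_QUORUM"):
    """Return a copy of `job` with parallelism, batch, and consistency settings applied."""
    tuned = copy.deepcopy(job)
    # 1) Parallelism: job.setting.speed.channel
    tuned["job"].setdefault("setting", {}).setdefault("speed", {})["channel"] = channel
    for item in tuned["job"]["content"]:
        reader, writer = item["reader"], item["writer"]
        if reader["name"] == "cassandrareader":
            # 4) Consistency level for reads
            reader["parameter"]["consistancyLevel"] = consistency
        if writer["name"] == "cassandrawriter":
            # 2) Batch size for UNLOGGED batches
            writer["parameter"]["batchSize"] = batch_size
            # 4) Consistency level for writes
            writer["parameter"]["consistancyLevel"] = consistency
    return tuned
```

For example, `apply_tuning(job, consistency="ONE")` trades some consistency for speed. Whether that trade is acceptable depends on your business scenario.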

The following test measures the performance of data synchronization in DataX.

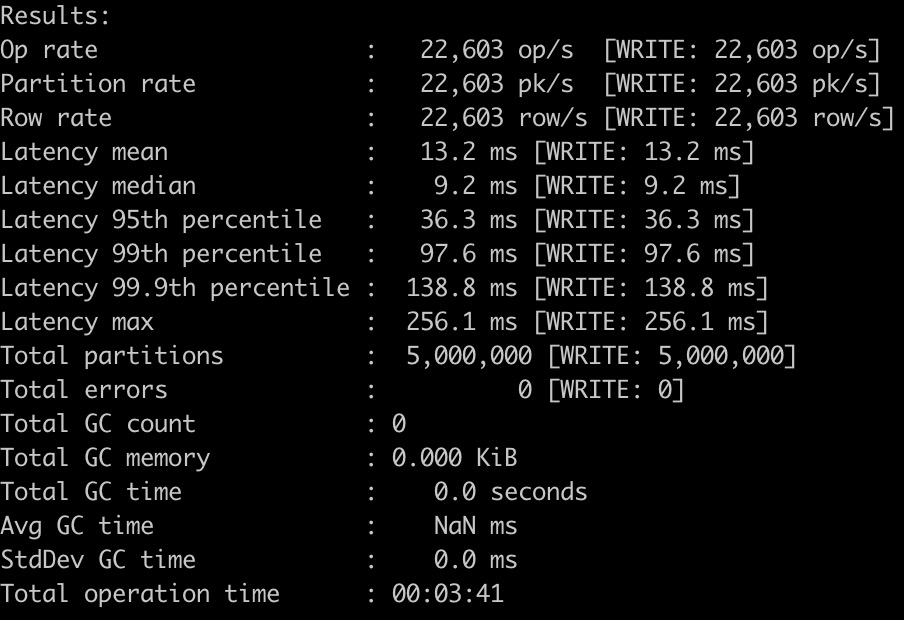

The server uses Alibaba Cloud Cassandra. The source cluster and the target cluster have three nodes, and the specification is 4 CPUs and 8-GB of memory. The client uses one ECS instance, and the specification is 4 CPUs and 16-GB of memory. First, use cassandra-stress to write five million rows of data to the source cluster:

cassandra-stress write cl=QUORUM n=5000000 -schema "replication(factor=3) keyspace=test" -rate "threads=300" -col "n=FIXED(10) size=FIXED(64)" -errors "retries=32" -mode "native cql3 user=$USER password=$PWD" -node "$NODE"The following figure shows the statistics of the write process:

{

"job": {

"setting": {

"speed": {

"channel": 10

}

},

"content": [

{

"reader": {

"name": "cassandrareader",

"parameter": {

"host": "<source cluster NODE>",

"port": 9042,

"username":"<USER>",

"password":"<PWD>",

"useSSL": false,

"keyspace": "test",

"table": "standard1",

"column": [

"key",

"\"C0\"",

"\"C1\"",

"\"C2\"",

"\"C3\"",

"\"C4\"",

"\"C5\"",

"\"C6\"",

"\"C7\"",

"\"C8\"",

"\"C9\""

]

}

},

"writer": {

"name": "cassandrawriter",

"parameter": {

"host": "<target cluster NODE>",

"port": 9042,

"username":"<USER>",

"password":"<PWD>",

"useSSL": false,

"keyspace": "test",

"table": "standard1",

"batchSize":6,

"column": [

"key",

"\"C0\"",

"\"C1\"",

"\"C2\"",

"\"C3\"",

"\"C4\"",

"\"C5\"",

"\"C6\"",

"\"C7\"",

"\"C8\"",

"\"C9\""

]

}

}

}

]

}

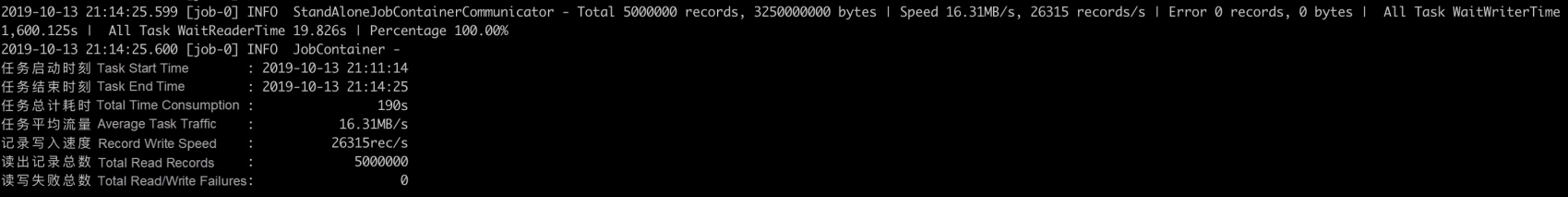

}The following figure shows the statistics of the synchronization process:

In this test, the data synchronization performance of DataX is comparable to (or even better than) that of cassandra-stress.
