By Jiangnan Bao

In April 2021, I was lucky enough to learn about Sealer and its related work shortly after the project launched, and I soon joined its development as one of the initial developers.

This article reviews how I came to participate in the Sealer open-source project, the challenges I faced along the way, and the lessons I took from them. I hope it helps newcomers to open source and inspires developers who want to get involved in open-source work.

My name is Jiangnan Bao, and I am one of the Sealer [6] maintainers. After graduating from the Turing Class of Central South University, I went on to Zhejiang University, where I am a postgraduate in the SEL Laboratory. My research focuses on mixed cluster scheduling.

GitHub: https://github.com/justadogistaken

At the beginning of my postgraduate studies, while some classmates were full of ambition and had clear goals, I was anxious and confused. My research at the SEL Laboratory of Zhejiang University focused on cloud computing, so many of our alumni worked in the cloud computing industry. At that time, I joined Harmony Cloud Technology, a high-tech cloud computing company, as an intern on cloud-native projects. Harmony Cloud Technology and Alibaba Cloud collaborated on many cutting-edge cloud-native projects, and by coincidence I ended up on their joint Cloud-Native Project Team.

There I met Sun Hongliang, a developer on the Alibaba Cloud Cloud-Native Team, and through him Fang Haitao, the initiator of Sealer. Both had extensive experience with open-source projects. When I learned in conversation that Sun Hongliang had once been a Docker maintainer, I took him as my role model; I suspect this is how most people settle on their goals. One moment stuck with me: they asked what I most wanted to accomplish that year (2021), and I answered that I wanted to leave my mark on open-source projects. At the time, I did not really understand why I wanted to contribute to open source, and in retrospect my goal was not clear enough. Still, once you have a goal in mind, you have to make it happen.

Around April 2021, I joined the Sealer Open-Source Team and worked with several other developers to build Sealer's core capabilities (the project was originally known as the cluster image).

Sealer is an open-source cloud-native tool from Alibaba Cloud that helps package, distribute, and run distributed software. Thanks to the novelty of its concepts and its growing user base in the industry, Sealer has since been donated to the CNCF as a sandbox project and is moving toward becoming a broader industry standard.

Early in a project's life, hope usually arrives hand in hand with chaos. Behind its ambitious goal, the Sealer team had to solve numerous technical problems, including the user interface, image format, distribution mode, runtime efficiency, and software architecture. In the initial division of work, I was mainly responsible for the image module, which covered cluster image caching, cluster-dependent container image caching, and cluster image sharing.

Cluster Image Caching: How to significantly improve image build efficiency by reusing cluster image layers. Take docker build as an example: each build first looks for previously cached build content locally and reuses that build cache to reduce disk usage and speed up the build.

Cluster-Dependent Container Image Caching: How to cache all the container images a cluster depends on without users being aware of it. In the early days, building a cluster image with Sealer required actually bringing up the cluster; once all workloads were running normally, the cluster image could be packaged. A key part of this was caching every container image the cluster depends on so that those images could be packaged along with it.

Cluster Image Sharing: Anyone can share a cluster image with Sealer just as they would with Docker, by pushing, pulling, saving, and loading it.
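To make the cluster image caching idea above concrete, here is a minimal sketch of a layer build cache in the spirit of docker build. It is a simplified model, not Docker's or Sealer's actual code: the cache key (parent layer ID plus instruction text plus a digest of copied files), the `Step`/`BuildCache` types, and the Kubefile-like instructions are all illustrative assumptions.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// cacheKey derives a cache key for one build step from the layer it builds
// on, the instruction text, and a digest of any files the step copies in.
func cacheKey(parentID, instruction, contentDigest string) string {
	h := sha256.New()
	for _, part := range []string{parentID, instruction, contentDigest} {
		h.Write([]byte(part))
		h.Write([]byte{0}) // separator so "ab"+"c" hashes differently from "a"+"bc"
	}
	return hex.EncodeToString(h.Sum(nil))
}

// Step is one instruction in a hypothetical Kubefile-like build file.
type Step struct {
	Instruction   string
	ContentDigest string // empty for steps that copy no files
}

// BuildCache maps cache keys to the IDs of layers built earlier.
type BuildCache struct {
	layers map[string]string
}

func NewBuildCache() *BuildCache {
	return &BuildCache{layers: make(map[string]string)}
}

// Build runs the steps in order and returns the final layer ID and the number
// of cache hits. Because each key includes the parent layer ID, changing one
// step changes the parent of every later step, so those steps normally miss too.
func (c *BuildCache) Build(base string, steps []Step) (string, int) {
	parent, hits := base, 0
	for _, s := range steps {
		key := cacheKey(parent, s.Instruction, s.ContentDigest)
		if id, ok := c.layers[key]; ok {
			parent = id // reuse the cached layer instead of rebuilding it
			hits++
			continue
		}
		// Cache miss: a real builder would execute the step here; this
		// sketch only derives a new layer ID from the key.
		id := "layer-" + key[:12]
		c.layers[key] = id
		parent = id
	}
	return parent, hits
}

func main() {
	cache := NewBuildCache()
	steps := []Step{
		{Instruction: "FROM kubernetes:v1.19.8"},
		{Instruction: "COPY dashboard.yaml manifests", ContentDigest: "sha256:aaa"},
		{Instruction: "CMD kubectl apply -f manifests/dashboard.yaml"},
	}
	_, hits := cache.Build("scratch", steps)
	fmt.Println("first build, cache hits:", hits) // 0
	_, hits = cache.Build("scratch", steps)
	fmt.Println("unchanged rebuild, cache hits:", hits) // 3
	steps[1].ContentDigest = "sha256:bbb" // simulate editing dashboard.yaml
	_, hits = cache.Build("scratch", steps)
	fmt.Println("after edit, cache hits:", hits) // 1
}
```

Keying on the parent layer is what makes reuse safe: a layer is only reused when everything beneath it is identical, which is also why reordering rarely-changing steps to the top of a build file pays off.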

Of all the challenges in Sealer, the two that left the deepest impression on me were cluster-dependent container image caching and container image proxying for private registries. I remember Haitao coming to me and saying he needed me to own one of Sealer's core features: how can Sealer cache all the container images pulled during the build process without users having to provide extra information, or even being aware of it? Here is a brief introduction to the background.

A Docker container image packages the file system and configuration an application needs, so docker run can start it in any environment (with the help of virtualization technology), even one isolated from the external network, provided the application itself has no logic that accesses the external network. Sealer aims to define the standard for cluster delivery, so it must also solve the problem of pulling images in environments isolated from the external network, which is indispensable in private cloud delivery scenarios. The application container images are part of the file system a Sealer cluster image requires. There are many ways to solve this problem. The simplest is to have users list the images, then pull and package them in one pass. However, Haitao and I felt this was neither user-friendly nor elegant enough. Sealer users do not want to deal with such trivial chores, so we decided to handle the complexity for them.

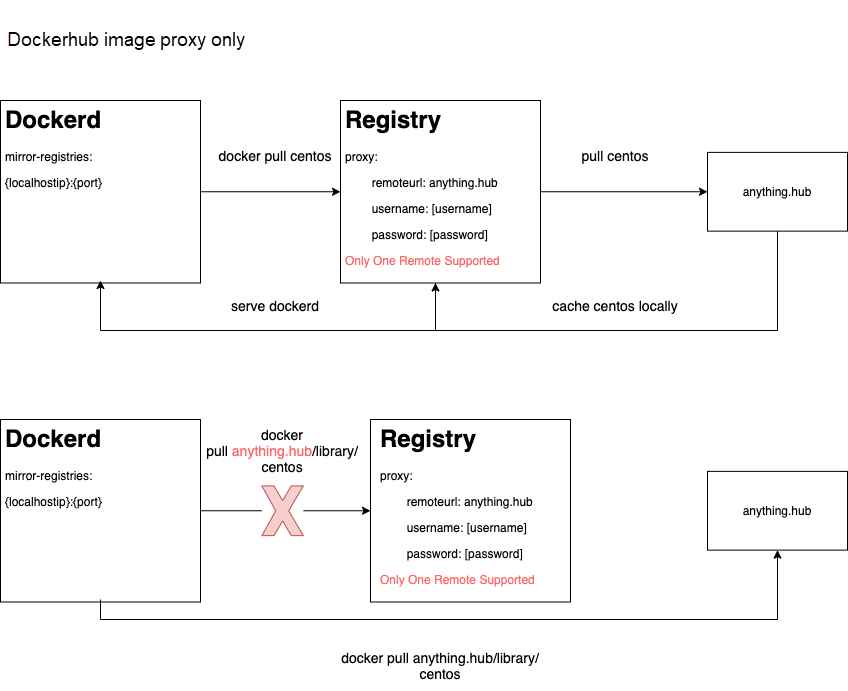

An issue [3] opened in the Docker community in 2015 requests support for configuring mirrors of private registries in the Docker daemon, and it remained unresolved at the time of writing. Figure 1 illustrates the problem. During the image build phase, Sealer caches all the container images the cluster requires in a local registry, so that after the cluster starts, the container runtime behind the Container Runtime Interface (CRI) can pull them from that registry. However, because Docker only applies its configured mirrors to Docker Hub and not to private registries, an image such as example.hub/library/centos (where example.hub stands for any registry other than Docker Hub) is pulled directly from example.hub rather than through the mirror address configured in the Docker daemon. This is not what we want: the images cached during the build phase are meant for use at startup, and in private cloud delivery scenarios the cluster network is isolated from the outside world. Our first candidate solution was to use a Kubernetes webhook to rewrite or prefix every image reference with the local registry address before pods are created. However, after discussing it, Haitao and I felt this would intrude on the application YAML written by users, and we held out for a more elegant solution.

Figure 1: Image Proxy Logic of Native Docker

To solve these two problems (cluster-dependent container image caching and container image proxying for private registries), I started studying the source code of Docker [1] and Registry [2]. I soon located the mirror configuration logic in the Docker source code, and learned from the official documentation that Registry can act as a pull-through cache, configured as shown in Code Block 1. However, Registry supports only a single remoteurl, while user images come from multiple remote registries, so Registry's stock configuration could not be used directly.

```yaml
proxy:
  remoteurl: https://registry-1.docker.io
  username: [username]
  password: [password]
```

Code Block 1: Configuration Items of Registry Pull-Through Cache

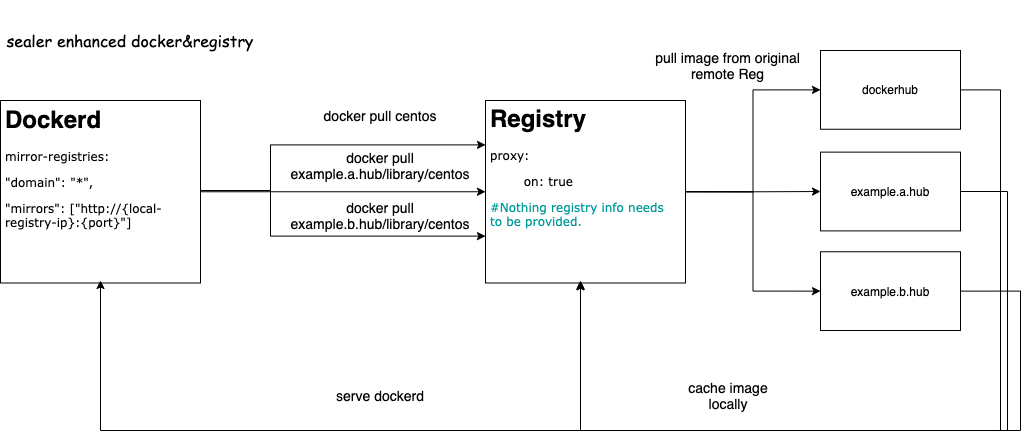

Since the community did not support this and Docker was slow to move on mirroring private registries, I enhanced Docker and Registry directly on top of their existing capabilities. I left the rest of Docker's logic untouched and only added incremental configuration items to deliver the features we needed. The resulting capabilities of the enhanced Docker and Registry are shown in Figure 2.
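The difference between stock Docker and the enhanced behavior described above can be sketched as endpoint selection. This is a simplified model under stated assumptions: `registryDomain` follows a reduced version of Docker's reference-normalization rule, the per-domain mirror map with a `"*"` catch-all is a hypothetical configuration shape (not Sealer's actual format), and `http://sea.hub:5000` is only an example local registry address.

```go
package main

import (
	"fmt"
	"strings"
)

// registryDomain returns the registry host of an image reference, using a
// simplified form of Docker's rule: the first path component is a registry
// only if it contains "." or ":" or is "localhost"; otherwise it is Docker Hub.
func registryDomain(ref string) string {
	i := strings.IndexRune(ref, '/')
	if i == -1 {
		return "docker.io"
	}
	first := ref[:i]
	if strings.ContainsAny(first, ".:") || first == "localhost" {
		return first
	}
	return "docker.io"
}

// origin returns the address of the registry that actually hosts the image.
func origin(domain string) string {
	if domain == "docker.io" {
		return "https://registry-1.docker.io"
	}
	return "https://" + domain
}

// nativeEndpoints models stock Docker: registry mirrors apply only to
// Docker Hub, so images from any other registry are pulled directly.
func nativeEndpoints(ref string, hubMirrors []string) []string {
	domain := registryDomain(ref)
	if domain != "docker.io" {
		return []string{origin(domain)}
	}
	return append(append([]string{}, hubMirrors...), origin(domain))
}

// enhancedEndpoints models per-domain mirrors (hypothetical config shape):
// mirrors are looked up by domain, "*" is a catch-all, and the original
// registry is tried last.
func enhancedEndpoints(ref string, mirrors map[string][]string) []string {
	domain := registryDomain(ref)
	list, ok := mirrors[domain]
	if !ok {
		list = mirrors["*"]
	}
	return append(append([]string{}, list...), origin(domain))
}

func main() {
	ref := "example.hub/library/centos"
	local := "http://sea.hub:5000" // example address of the local registry
	fmt.Println(nativeEndpoints(ref, []string{local}))
	// [https://example.hub]
	fmt.Println(enhancedEndpoints(ref, map[string][]string{"*": {local}}))
	// [http://sea.hub:5000 https://example.hub]
}
```

With the catch-all entry pointing at the local registry, every pull in an isolated cluster is served locally first, while the user's image references stay exactly as written.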

The main features are listed below:

Figure 2: Diagram of Docker and Registry Capabilities Enhanced by Sealer

Roughly two or three months after being open-sourced, Sealer gained its first production user: Government Procurement Cloud.

I have had several exciting moments, such as landing an internship at TikTok in Beijing in my senior year, which gave me my first taste of working at a large company, and being admitted to Zhejiang University's master's program without an entrance examination. The most recent one was when our open-source tool gained its first customer willing to run it in a production environment. It showed that others recognized our work, and since I had poured a great deal of effort into a large part of the tool, it gave me a special sense of accomplishment.

However, as Sealer attracted more outside attention, I had to shoulder more responsibility. In the past, when writing software, I only cared about features and whether the code ran. Working on Sealer as an open-source project, there was much more to care about: how to evaluate and implement open-source users' requests elegantly, how to design an architecture that supports Sealer's sustainable development, and how to allocate effort to ensure Sealer's software quality.

The most direct way to improve in these areas is to learn from excellent projects, and this became my most productive learning period. I read a great deal of Docker and Registry source code, and one lesson I took from Docker was how it modularizes features. Only a handful of developers worked on Sealer initially, and to iterate quickly we wrote a shared utility class for the underlying files and metadata. However, each of us owned different modules, and the underlying files and metadata were likely to change as we kept iterating, which created many hidden risks. After studying Docker's code for a while, I decided, starting from a particular release, to consolidate all image-related operations into the image module, expose interfaces to other modules, and abstract operations on the underlying files into a separate file system layer. This refactoring made our code cleaner and reduced the risk of other developers mishandling the underlying files.
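The refactoring described above can be illustrated with a small sketch: other modules see only an image-service interface, and only the image module touches the on-disk layout through a file system abstraction. All names here (`ImageService`, `FileSystem`, `memFS`, the `metadata/<name>.json` layout, the root path) are hypothetical and do not reflect Sealer's actual interfaces.

```go
package main

import (
	"errors"
	"fmt"
	"path"
)

// FileSystem is a hypothetical abstraction over the on-disk layout.
// Only the image module talks to it.
type FileSystem interface {
	ReadFile(name string) ([]byte, error)
	WriteFile(name string, data []byte) error
}

// ImageService is the hypothetical interface the image module exposes;
// other modules never read or write metadata files directly.
type ImageService interface {
	SaveMetadata(imageName string, metadata []byte) error
	GetMetadata(imageName string) ([]byte, error)
}

// memFS is an in-memory FileSystem used for illustration and tests.
type memFS map[string][]byte

func (m memFS) ReadFile(name string) ([]byte, error) {
	data, ok := m[name]
	if !ok {
		return nil, errors.New("not found: " + name)
	}
	return data, nil
}

func (m memFS) WriteFile(name string, data []byte) error {
	m[name] = data
	return nil
}

// imageService owns the metadata layout; if the layout changes,
// only this type needs to change.
type imageService struct {
	fs   FileSystem
	root string
}

func NewImageService(fs FileSystem, root string) ImageService {
	return &imageService{fs: fs, root: root}
}

func (s *imageService) metadataPath(imageName string) string {
	return path.Join(s.root, "metadata", imageName+".json")
}

func (s *imageService) SaveMetadata(imageName string, metadata []byte) error {
	return s.fs.WriteFile(s.metadataPath(imageName), metadata)
}

func (s *imageService) GetMetadata(imageName string) ([]byte, error) {
	return s.fs.ReadFile(s.metadataPath(imageName))
}

func main() {
	svc := NewImageService(memFS{}, "/image-root") // hypothetical root directory
	if err := svc.SaveMetadata("kubernetes-v1.19.8", []byte(`{"arch":"amd64"}`)); err != nil {
		fmt.Println("save failed:", err)
		return
	}
	data, err := svc.GetMetadata("kubernetes-v1.19.8")
	fmt.Println(string(data), err)
}
```

Swapping `memFS` for a disk-backed implementation would not affect any caller, which is the point of the boundary: callers depend on what the image module does, not on where its files live.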

Self-Confidence: I believe most people ask themselves "What am I doing?" when they run into setbacks and difficulties. Don't falter; stick with it.

Openness and Communication: While developing Sealer, we based many designs on Docker, but questions still came up. During that time, I communicated frequently with developers in the Sealer and wider open-source communities. Early on, I ran into some problems with image compression that I could not resolve even after thinking about them for a long time, so I emailed Vbatts, the author of tar-split, and the issues were eventually resolved. I think communication is one of the most important skills at work: thorough communication often solves many problems and avoids a lot of wasted effort.

Avoiding Blind Pursuit of Open Source: Earlier in this article, I mentioned that my goal for 2021 was to leave a mark on open source, though at the time I was not clear why I wanted to. Perhaps it was for the so-called reproduction. After taking part in an open-source project from start to finish, I found it meaningless to blindly chase open-source contributions for the sake of that so-called reproduction. Make sure you do something valuable that you believe in; the rest is not important.

Learning New Technologies and Correcting Past Defects: The main challenge described in this article is container image proxying for private registries. Solving it was very fulfilling and taught me a lot at the time, but in hindsight the solution still has some defects. For example, users must run the specially built Docker provided by Sealer, which is a heavyweight requirement. Although shipping this component as part of Sealer's rootFS seems reasonable, we still have to provide most Docker versions and promptly track changes in the upstream community, so the approach now looks less than elegant. Recently, while browsing the community, I came across Intel's cri-resource-manager [4], a plug-in that sits between the kubelet and the CRI. Because support for RDT [5] and similar technologies lands in the Docker and Kubernetes communities too slowly, the tool delivers its powerful features at that intermediate layer instead. After studying its structure, I realized that Sealer's container image proxy for private registries could be solved in the same way.
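As a rough illustration of the plug-in idea above, here is a minimal sketch of a proxy that sits between the kubelet and the container runtime and redirects image pulls to a local registry, leaving the user's YAML untouched. The `ImagePuller` interface is a hypothetical simplification; the real CRI is a gRPC API with richer request types, and this is neither cri-resource-manager's code nor a Sealer design. The reference-parsing rule is the same simplified Docker rule used earlier, and `sea.hub:5000` is only an example address.

```go
package main

import (
	"fmt"
	"strings"
)

// ImagePuller is a hypothetical, simplified stand-in for the image side of the CRI.
type ImagePuller interface {
	PullImage(ref string) (string, error)
}

// rewritingProxy forwards pulls to the next ImagePuller (the real runtime),
// rewriting each image reference to point at a local registry first.
type rewritingProxy struct {
	next          ImagePuller
	localRegistry string
}

// rewrite drops the original registry domain (simplified Docker rule) and
// prefixes the local registry, e.g. "nginx" -> "<local>/library/nginx".
func (p rewritingProxy) rewrite(ref string) string {
	domain, rest := "docker.io", ref
	if i := strings.IndexRune(ref, '/'); i != -1 {
		first := ref[:i]
		if strings.ContainsAny(first, ".:") || first == "localhost" {
			domain, rest = first, ref[i+1:]
		}
	}
	if domain == "docker.io" && !strings.Contains(rest, "/") {
		rest = "library/" + rest // official Docker Hub images live under library/
	}
	return p.localRegistry + "/" + rest
}

func (p rewritingProxy) PullImage(ref string) (string, error) {
	return p.next.PullImage(p.rewrite(ref))
}

// fakeRuntime records the references it is asked to pull.
type fakeRuntime struct{ pulled []string }

func (f *fakeRuntime) PullImage(ref string) (string, error) {
	f.pulled = append(f.pulled, ref)
	return ref, nil
}

func main() {
	rt := &fakeRuntime{}
	proxy := rewritingProxy{next: rt, localRegistry: "sea.hub:5000"}
	for _, ref := range []string{"example.hub/library/centos:7", "nginx", "bitnami/redis"} {
		if _, err := proxy.PullImage(ref); err != nil {
			fmt.Println("pull failed:", err)
		}
	}
	fmt.Println(rt.pulled)
	// [sea.hub:5000/library/centos:7 sea.hub:5000/library/nginx sea.hub:5000/bitnami/redis]
}
```

One design caveat this sketch exposes: dropping the original domain means example.hub/library/centos and docker.io/library/centos map to the same local path, so a real implementation would need to keep the source registry in the local repository name or otherwise handle collisions.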

Improve Performance: I will continue to optimize Sealer to improve its delivery efficiency and stability, so that Sealer first excels at cluster delivery.

Architecture Optimization: Sealer's submodules are currently fairly tightly coupled, which makes it hard for beginners to get started and hampers the growth of a developer ecosystem. We will work on abstracting the functional modules so community members can focus on specific areas, such as the runtime module or k0s/k3s.

Expand the Ecosystem: The community will provide more cluster images for users.

Attract More Developers: The community needs to attract more developers to grow, and it needs a simpler quick start to lower the barrier to contributing.

Multi-Community Cooperation: The Sealer community is working to establish cooperation with other open-source communities (such as OpenYurt [7] and Sealos [8]) to the benefit of all parties.

[1] Moby: https://github.com/moby/moby

[2] Distribution: https://github.com/distribution/distribution

[3] Enable engine to mirror private registry: https://github.com/moby/moby/issues/18818

[4] cri-resource-manager: https://github.com/intel/cri-resource-manager.git

[5] Intel Resource Director Technology: https://www.intel.com/content/www/us/en/architecture-and-technology/resource-director-technology.html

[6] Sealer: https://github.com/sealerio/sealer

[7] OpenYurt: https://github.com/openyurtio/openyurt

[8] Sealos: https://github.com/labring/sealos
