By Yuedong Xi (Xiwen)

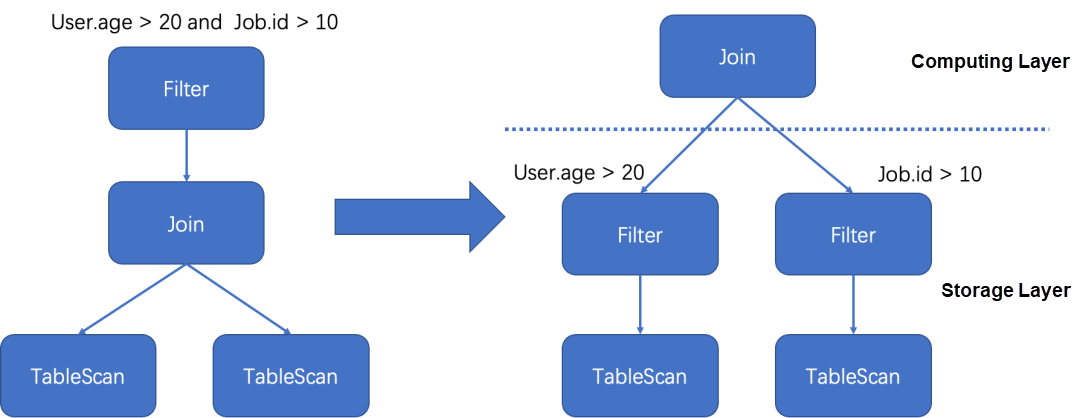

It is commonly believed that filter conditions must be pushed down as close to the bottom as possible to bring as many filter conditions closer to the data source, so the irrelevant data will not be queried. In AnalyticDB for MySQL, due to the separation of storage and computing, predicate pushdown aims to push all predicates that can be pushed to the storage node. As shown in the following figure, all filter conditions are pushed to the storage node. This reduces the computation of subsequent operators and the amount of data transmitted on the intermediate network. Predicate pushdown brings many benefits, and all databases use predicate pushdown as an important optimization feature.

Will pushing filter conditions down to the storage layer necessarily speed up queries?

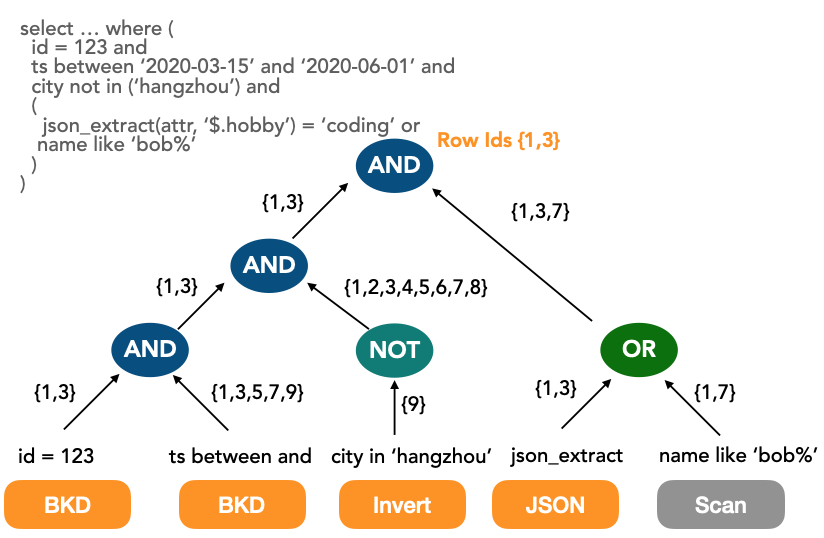

Let's take a look at the index structure of the storage node of AnalyticDB for MySQL to answer this question. AnalyticDB for MySQL creates indexes for all columns by default and allows you to use indexes to filter data based on multiple filter conditions, so you can find the result sets that meet the conditions in milliseconds. The following figure shows the query process using indexes based on multiple filter conditions in a table. As you can see, an index lookup is performed for each column, and RowIds are computed using intersection, union, and difference operations.

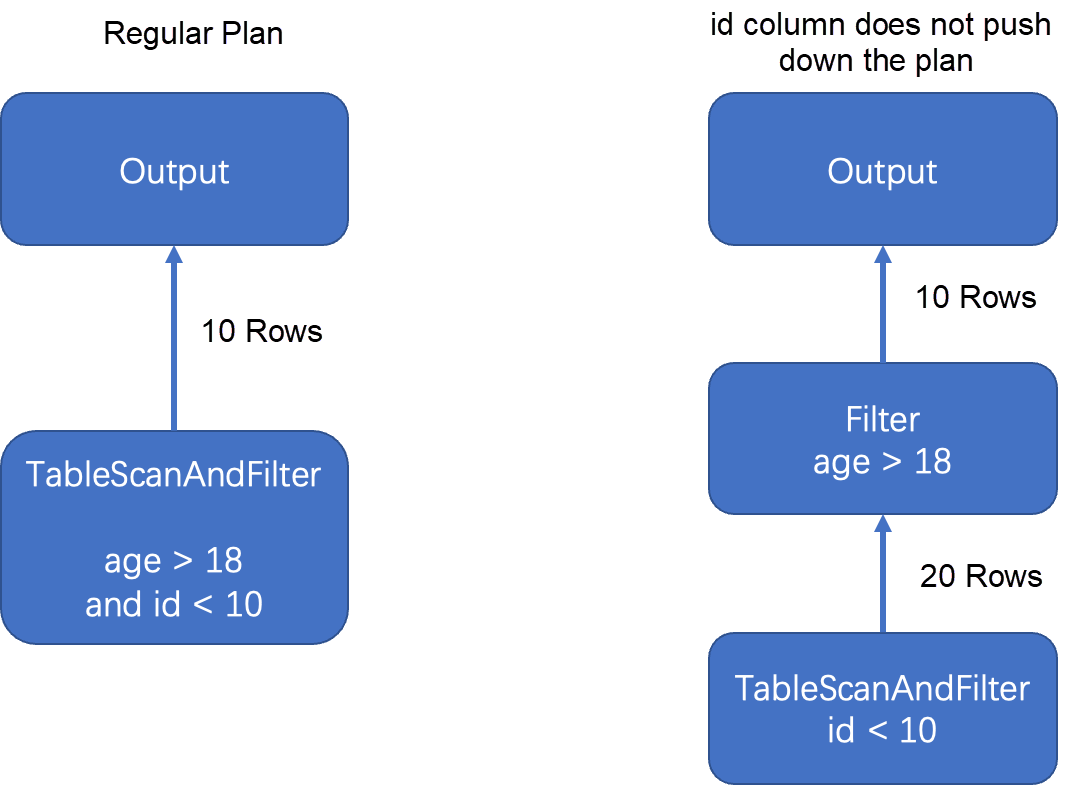

After talking about the index structure, a simple example is used to illustrate the case where the filter condition is pushed down to the storage layer, but data filtering becomes slower. Let's assume a simple cost model where the cost of scanning indexes for each row is A, the cost of obtaining details and data transmission for each row is B, and the cost of filtering for each row in the computing layer is C. Let’s consider the following SQL statements:

create table user (age int, id int);

select count(*) from user; -- The result is 10,000;

select count(*) from user where age > 18; -- The result is 9,000.

select count(*) from user where id < 10; -- The result is 20

-- Consider the following SQL statements.

select * from user where age > 18 and id < 10 -- The result is 10It can be seen that if 10B + 20C is less than 10,000A, the plan that does not push down the Id column is better.

The optimizer pushes down all indexed columns to the storage layer by default. This reduces the amount of data read to the compute engine. However, we recommend not using indexes to filter data in the following scenarios:

AnalyticDB for MySQL provides the intelligent optimization feature to optimize the performance of AnalyticDB for MySQL in the scenarios above and prevent AnalyticDB for MySQL developers and users from consuming too much effort to use hints to optimize SQL statements. The optimizer intelligently controls whether filter conditions are pushed down to storage nodes based on accurate statistics. SQL tuning allows users to no longer struggle with whether to push down filter conditions, speeding up queries by users with ease.

We define the unit after the filter condition is split according to the outermost AND as a conjunction. For example, ((A & B) | C) & D & E is composed of three conjunctions, ((A & B) | C), D, and E. The reason for this definition is that the conjunction is the smallest unit pushed down to the storage layer. In conjunction, either all conditions are pushed down, or none are pushed down.

If there are 100 rows of data and 10 rows of data satisfying the condition of A>10, the selectivity of A>10 is 10%.

AnalyticDB for MySQL optimizer supports multiple connectors. The optimizer supports the AnalyticDB for MySQL storage engine and OSS foreign tables. Different connectors require processing differently.

Here are the original rules of AnalyticDB for MySQL optimizer for filter pushdown. The filter is pushed to the top of the table and the place closest to the storage node. Then, the following work is handed over to the intelligent pushdown module. The intelligent pushdown module determines what predicates can be pushed to the storage node.

Short-Circuit Optimization: The module will roughly estimate the overhead of the entire table scan. If the table is small, this step is quickly skipped to reduce the additional overhead of subsequent processes.

Expression Conversion: Based on the operational rule of Boolean algebra, convert the filter conditions to AND connections as much as possible. For example, ( A & B ) | ( C & D ) cannot be pushed down partially, but after it is converted to ( A | C ) & ( A | D ) & ( B | C ) & ( B | D ), it can be partially pushed down. This step is limited and blind conversion is not permitted because the expression after conversion becomes longer, which may cause codegen overrun.

The Cardinality Estimation module of AnalyticDB for MySQL optimizer is called to calculate the selectivity and the corresponding reliability for each conjunction based on accurate statistics (such as histograms). This module relies on accurate statistics and cardinality estimation in the AnalyticDB for MySQL optimizer to provide high-quality selectivity and accurate input for subsequent cost calculation and pushdown scheme selection.

The custom cost model at the storage layer defines that filter conditions that meet certain conditions based on the cost calculated by selectivity will not be pushed down. This separation mode makes other storage layers connected to AnalyticDB for MySQL realize intelligent pushdown by simply implementing the connector cost model.

This module inputs all the conjunction, selectivity and reliability to the corresponding connector cost model, enumerates the pushdown combinations with a conjunction as the smallest unit, calculates the cost, and selects the pushdown scheme with the lowest cost. This way, the filtering method of AnalyticDB for MySQL has evolved from the previous full pushdown to the current intelligent pushdown.

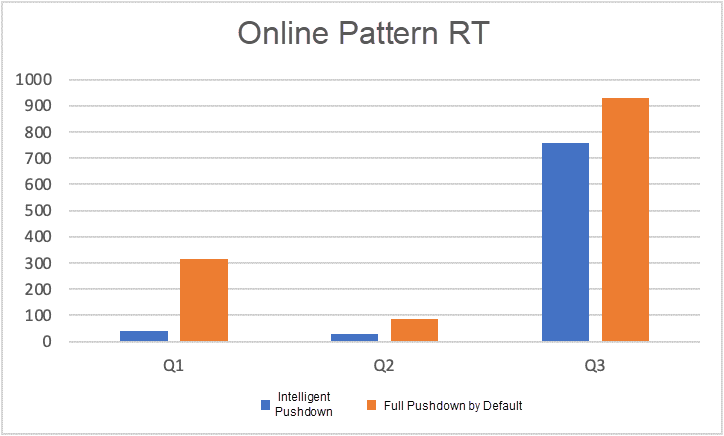

The following figure shows the acceleration effect of using intelligent pushdown for some queries with long index scan times. The data comes from the average RT change of online SQL statements before and after intelligent pushdown is enabled for internal grayscale clients. You can see a significant performance improvement.

The problems in actual production are far more complicated than those mentioned in this article. As space is limited, more technical details are not explored in depth. People interested in technology are welcome to join the AnalyticDB community for further discussion.

Hands-On Lab | Quick Backup and Restore ApsaraDB for MongoDB

Paper Interpretation | PolarDB Cost-Based Query Transformation

ApsaraDB - July 26, 2023

ApsaraDB - November 26, 2024

chenyucatcat - April 21, 2021

ApsaraDB - October 30, 2023

ApsaraDB - October 21, 2020

ApsaraDB - October 20, 2023

AnalyticDB for MySQL

AnalyticDB for MySQL

AnalyticDB for MySQL is a real-time data warehousing service that can process petabytes of data with high concurrency and low latency.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More AnalyticDB for PostgreSQL

AnalyticDB for PostgreSQL

An online MPP warehousing service based on the Greenplum Database open source program

Learn More ApsaraDB RDS for MySQL

ApsaraDB RDS for MySQL

An on-demand database hosting service for MySQL with automated monitoring, backup and disaster recovery capabilities

Learn MoreMore Posts by ApsaraDB