On October 24, the 2020 China Open Source Annual Conference and Apache China Roadshow were held in Changsha. Jin Yuntong, a senior technical operation expert at Alibaba Cloud Intelligence, was invited to give a speech titled "The Initiation and Development of OpenAnolis Open Source Community" at the operating system sub-forum. This article is a summary of his speech.

The following content is based on his speech:

In recent years, open source has become popular in China and has been recognized by more and more developers and companies. I have worked with open source for almost my entire career. Here, I would like to share my journey with open source.

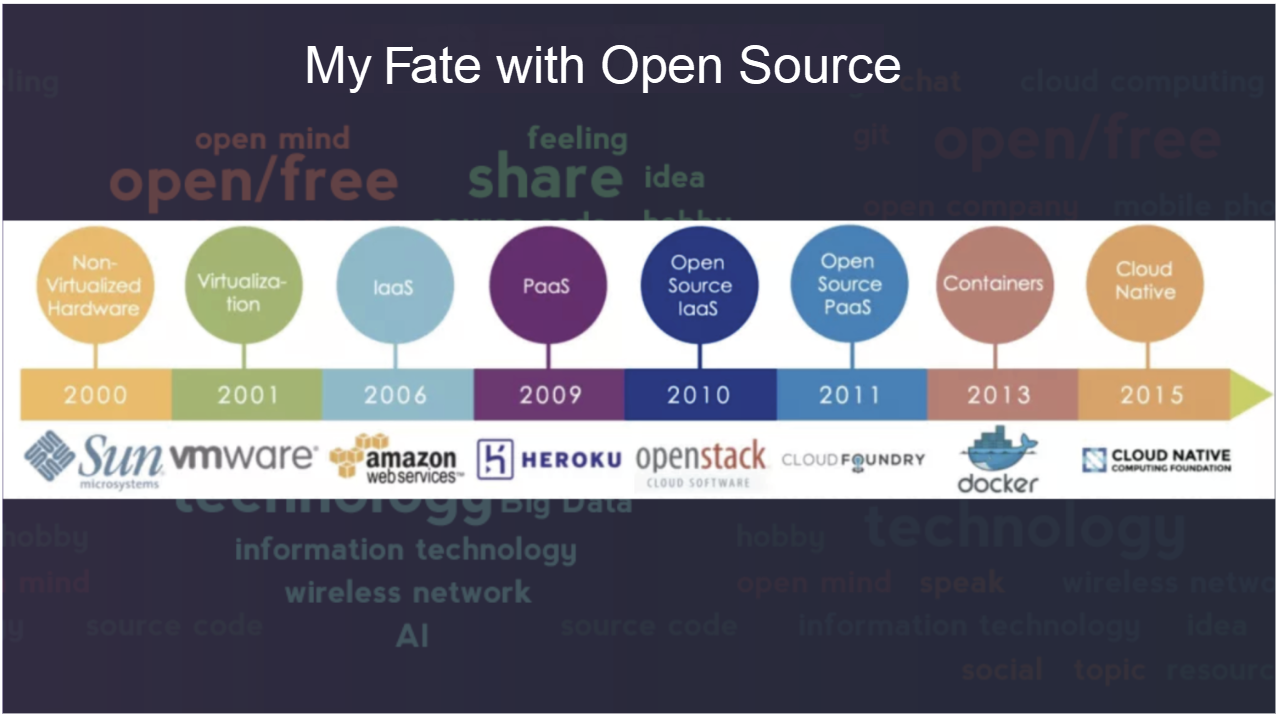

I believe many of you here have seen this picture. It shows the technological development of cloud computing. My first contribution to an open source project was in the 2000s, at SUN. At that time, open source contributors in China were just a small group. I was involved in developing the desktop system in the OpenSolaris open source community, including work on the open-source Firefox browser. I remember that when Firefox 3.0 was released in 2008, it was downloaded more than 20,000,000 times in one week, which let me witness the power of open source for the first time.

Later, SUN was acquired and faded into the long history of IT development. Its decline was closely related to open source: although the company adopted a comprehensive open-source strategy in its later years and was one of the largest open source companies in the industry, the strategy came too late to catch up with the direction in which the industry was moving.

So I think open source is not only a new way of development and collaboration but also a new business strategy, one through which companies build a new kind of relationship with the industry.

In this picture, the first stage, with SUN, is also known as the single-machine stage. SUN had a big impact on the IT world through its strong technical insight. It not only invented the Java language but also put forward the concept that "the network is the computer" very early, which laid the foundation for the development of cloud computing.

Later, VMware started virtualization work in 2001, and AWS started cloud computing in 2006. I joined the IBM KVM open source virtualization team in 2011. KVM had just come out, and not many people around me talked about cloud computing, so I did not realize at the time that open source virtualization would become a strong driving force for cloud computing development and the cornerstone of the entire cloud computing technology stack.

Later, OpenStack was born and set off a wave of open source cloud computing. I had the honor of witnessing the vigorous development of OpenStack while at Intel. Take the open source hackathon at this conference as an example. I participated in the first OpenStack hackathon in 2015. Back then, only 3 companies and a little more than a dozen people took part. Now, nearly 30 companies and nearly 100 people participate. This shows that open source has been recognized by domestic developers and companies.

Later, with the development of container technology and the rise of cloud native, I also participated in the Kata Containers project and met many domestic container developers. Looking back on my career, it is as if I caught every wave of technology in the development of cloud computing. It is open source, rather than me, that has good judgment about technology development. Open source puts one at the forefront of technology development.

Now I have returned to the traditional field of operating systems. I used to work at system manufacturers, developing open-source system software that served hardware system platforms. Now I work on open source system software at Alibaba Cloud. What is the difference between a cloud computing company and a hardware system manufacturer in developing open-source system software?

To answer this question, let's first talk about the original intention of the community, then introduce the community, and finally, discuss the future development of the community.

When I joined the Alibaba Cloud Operating System Team, I found that the operating system field is facing new challenges in the cloud computing era. An old field is putting out new growth. For example, large-scale cloud deployment brings stricter stability requirements, and as the scale of cloud computing expands, every optimization and performance improvement brings significant economic benefits. Therefore, full-stack integration and optimization have become one of the core competitive strengths of a cloud platform. In addition, cloud computing aims to provide plug-and-play computing resources for business applications, like water and electricity, and to free businesses from infrastructure so that users can focus on business innovation. As the layer nearest to business applications, the operating system needs to provide long-term stable support and SLA guarantees like other cloud products. This is an important part of the capabilities of the cloud platform.

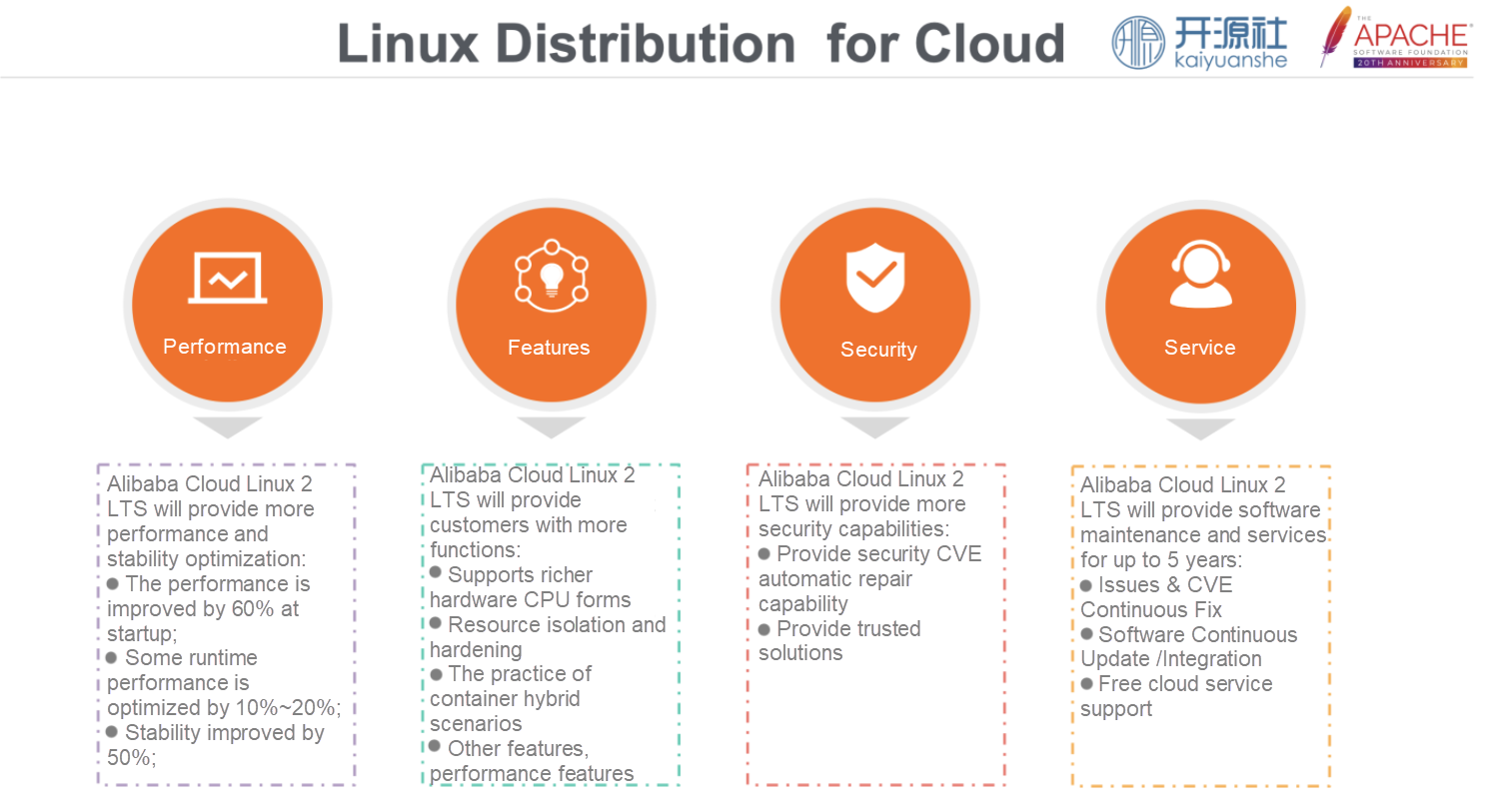

Over the past 10 years of Alibaba Cloud's development, the operating system team has kept exploring and practicing, and has built Alibaba Cloud Linux to meet the needs of cloud computing scenarios, such as stability enhancement, startup speed and performance improvement, support for multiple architectures and online business applications, resource isolation, security reinforcement, and SLA.

But we found that these problems are common challenges faced by everyone in the cloud computing era, and many cannot be solved by one department or one company. For example, production support for heterogeneous platforms, the rapid introduction of hardware capabilities, and the integration and optimization of software and hardware all require close cooperation with various hardware ecosystems. As another example, to meet the needs of cloud computing and Internet business scenarios, more cloud service providers and system manufacturers are customizing their own distributions, which fragments the distribution ecosystem and leaves each distribution diverging from, and distant from, the upstream community. These problems need to be solved across the entire cloud computing ecosystem.

And this led to the development of the OpenAnolis operating system open source community.

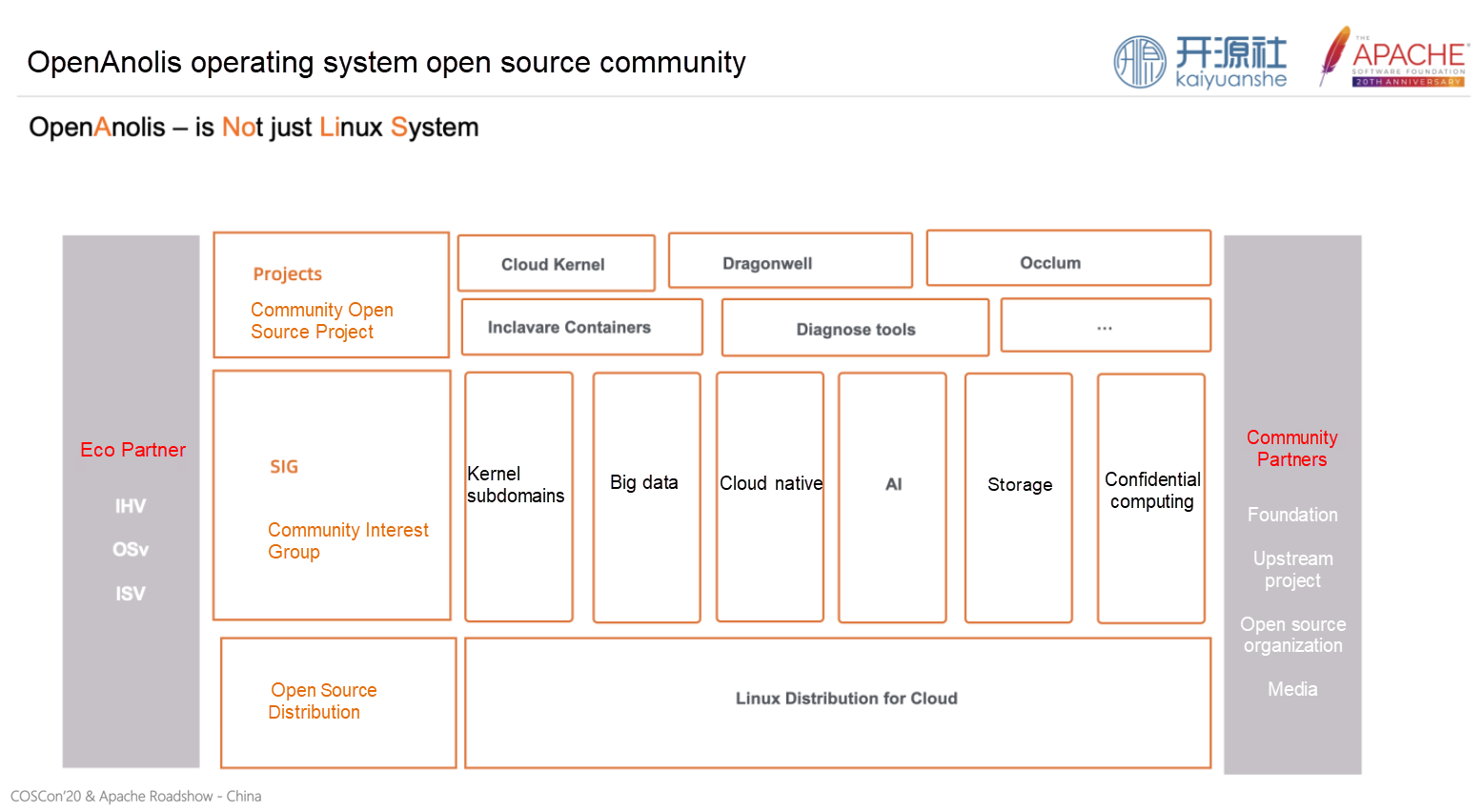

Anolis stands for "Anolis is Not just Linux System". The community's name is a recursive abbreviation inspired by GNU, and as its name implies, the OpenAnolis community is not just a single operating system distribution community. To meet the development needs of cloud computing scenarios, the entire community consists of three parts: open source projects, special interest groups (SIGs), and an open-source distribution.

The community is establishing cooperative relationships with many ecosystem partners. For IHVs (independent hardware vendors), system software and hardware platforms form a natural double-helix ecosystem. The community hopes to work with IHVs to bring new hardware platform capabilities to applications, tap platform performance, and promote rapid evolution and iteration of hardware platforms.

For OSVs (operating system vendors), building an OS suited to cloud computing is a shared responsibility. There is a lot to learn from each other and to work on together. The community hopes to work with OSVs to provide a better operating system suited to cloud computing.

For ISVs (independent software vendors), the operating system is the layer closest to business applications. The community hopes to work with ISVs to help applications grow better on the cloud, improve end-to-end performance, and drive business agility.

Next, let's look at the community's projects and SIGs.

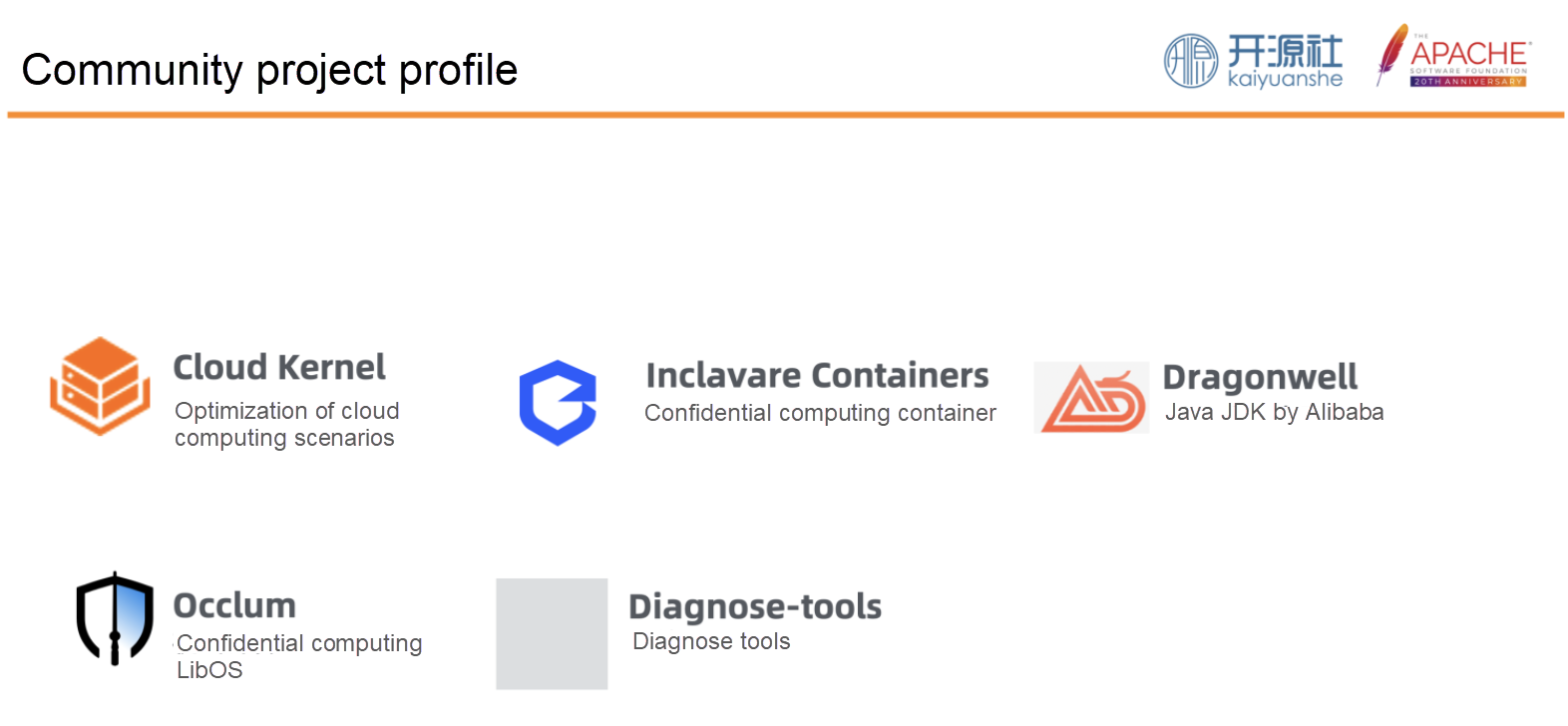

Cloud Kernel is an open-source kernel for cloud computing scenarios, based on the upstream LTS kernel. Targeting cloud infrastructure and working closely with all kinds of cloud applications, it has developed and optimized many features, such as stability enhancement, system resource isolation, cloud configuration, and optimization for integrated applications.

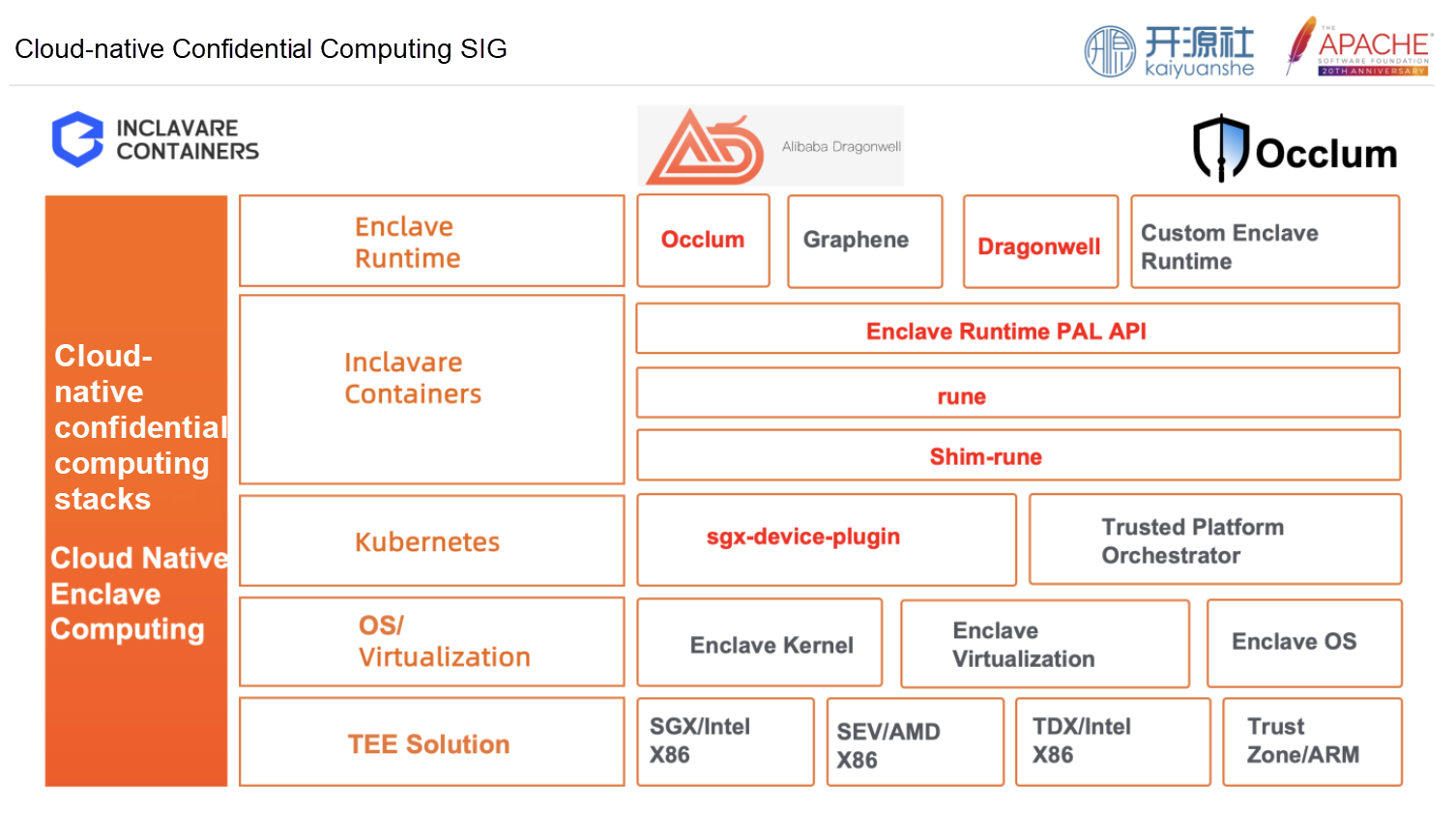

Inclavare Containers is an open-source container runtime for confidential computing scenarios. It is dedicated to bringing confidential computing into the cloud-native era. It has also been accepted by the Open Container Initiative (OCI), the container specification organization, as an implementation of the OCI container standard. This means that Inclavare Containers, as a confidential computing container runtime, has joined runC and Kata Containers as one of the OCI standard implementations. It was initiated by the Alibaba Cloud operating system team, the Container Service team, and the Ant Trusted Native team, and it is co-built with Intel and others.

Dragonwell is an open-source, production-ready distribution of OpenJDK. It is widely used by internal Alibaba e-commerce businesses and external cloud customers. It focuses on reshaping the Java language for the cloud. For example, it provides Java static compilation, which compiles Java programs into native code in a separate compilation stage. At runtime, no traditional Java virtual machine or runtime environment is required, as long as the OS libraries provide the needed support. The startup time of an application is reduced from 60 seconds to 3.8 seconds, which suits cloud applications, especially function computing scenarios. It also supports coroutines, so that blocking thread scheduling becomes lighter-weight coroutine switching. People like this concurrency mechanism in the Go language, and Dragonwell now brings coroutines to the Java language.
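To make the coroutine idea concrete, here is a minimal sketch in Python's `asyncio`. It is an analogy only and does not use or represent Dragonwell's API. The names `handle_request` and `serve` are hypothetical. It shows many logical tasks that each "wait" without blocking an OS thread, all scheduled on a single thread.

```python
import asyncio
import threading


async def handle_request(request_id: int, seen_threads: set) -> int:
    # Record which OS thread runs this coroutine.
    seen_threads.add(threading.get_ident())
    # Awaiting hands control back to the scheduler instead of blocking a thread.
    await asyncio.sleep(0.01)
    return request_id * 2


async def serve(count: int):
    seen_threads = set()
    # Start all coroutines concurrently and collect results in submission order.
    results = await asyncio.gather(
        *(handle_request(i, seen_threads) for i in range(count))
    )
    return results, seen_threads


results, seen_threads = asyncio.run(serve(1000))
print(len(results), "requests handled on", len(seen_threads), "thread(s)")
```

With one thread per request, the same workload would need a thousand OS threads. Here, each wait is only a switch between coroutines.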

Occlum is an open source LibOS project for confidential computing. By abstracting the confidential computing capabilities of the hardware, it lowers the threshold for developing confidential computing applications. It is an important part of the confidential computing ecosystem and the default confidential computing application framework for Inclavare Containers.

Diagnose tools is an open-source system diagnosis tool, also from the Alibaba Cloud operating system team. It probes system runtime data to quickly locate various performance anomalies and jitter problems in Linux operating systems.

Currently, there are four initial SIGs in the community about the kernel, including:

Core service SIG: The core service is the key system layer between the kernel and the application. It provides high-reliability and high-performance core services on the cloud by deeply optimizing the core user-mode components. For example, the systemd system and service manager is modified to solve the cluster jitter and mount leak problems caused by the expansion of cgroup, which improves the stability of the system.

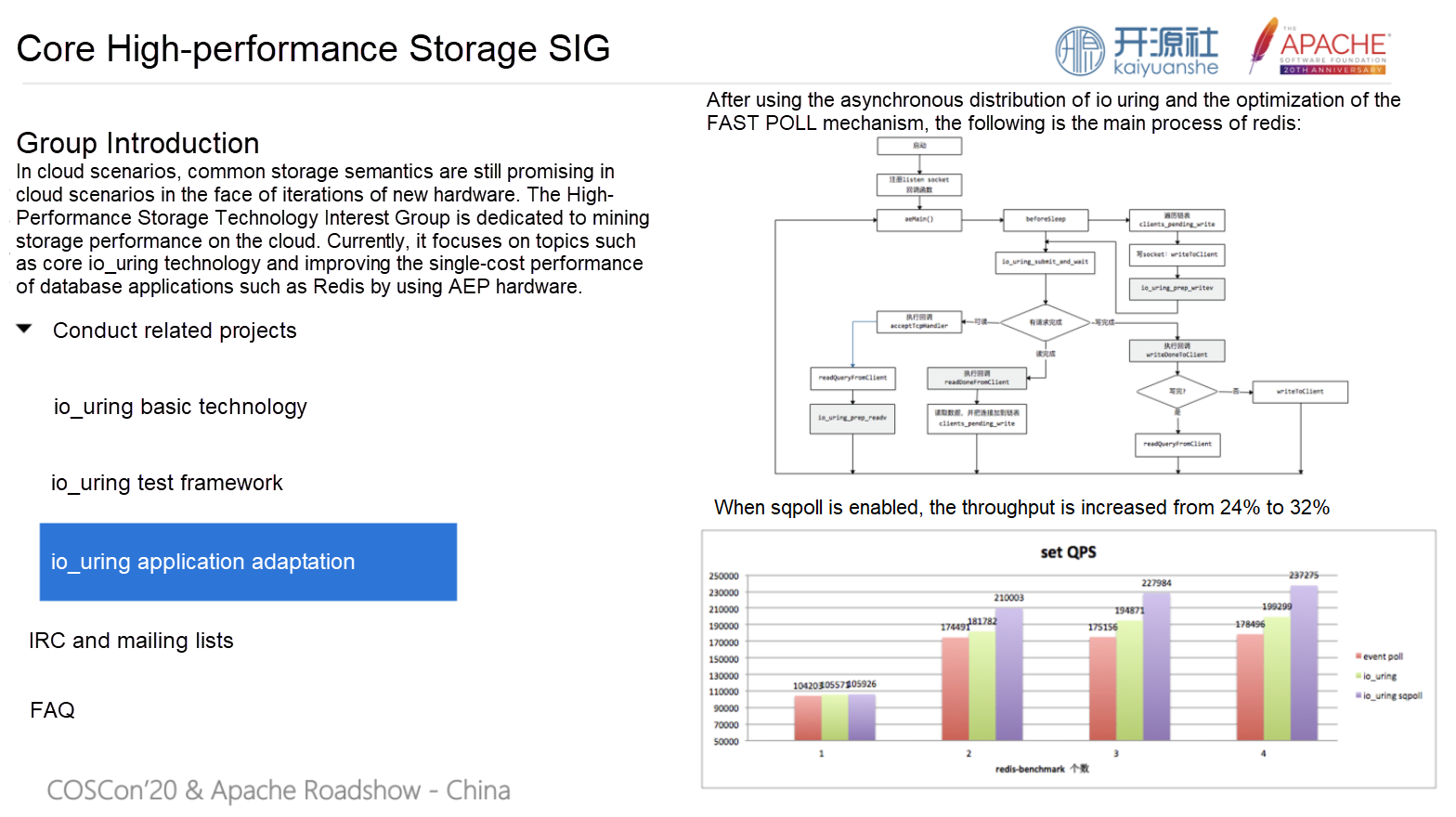

High-performance storage SIG: In cloud scenarios, the network storage technology stack grows longer, and new storage hardware is rapidly updated and iterated, which promotes innovation in storage stacks. For example, Intel is interested in building a high-performance storage software stack around Persistent Memory (PMEM) in the community, providing complete support for PMEM's protocols. It pairs with upper-layer applications, such as Redis solutions that support Persistent Memory, to build end-to-end high-performance storage application solutions.

Trace diagnosis technology SIG: Trace diagnosis is an essential basic capability of the operating system. The large-scale deployment of cloud computing and the increasing complexity of software stacks have brought challenges to system diagnosis and to operation and maintenance (O&M). Traditional manual O&M no longer works. Therefore, cloud platforms need to build an automated, intelligent O&M middle platform that automatically collects online system data, provides intelligent health diagnosis and problem discovery, and supports intelligent customer service that responds to problems. At the same time, automatic, non-stop hot repair and active fault isolation are needed to repair problems automatically.

The community has open-sourced Diagnose tools, which can probe system runtime data in depth, and it strives to build an automated, intelligent tracing and diagnosis platform through open source.

Resource isolation SIG: Resource isolation is a supporting technology for containers. The community has enhanced the isolation of host memory, CPU, network, and IO resources at the kernel layer. For example, it was the first to improve support for cgroup v2, which solves the long-standing problem that IO resources could not be completely limited. It also uses Intel RDT to isolate the CPU LLC (Last Level Cache) and avoid performance interference from noisy neighbors, protecting the SLA of virtual cloud resources.

Through long-term practice in the e-commerce business, the enhanced resource isolation has been applied to workload co-location (resource mixing) scenarios, where it improves the utilization of CPU and other resources. Our community is also exploring how to combine co-location with Kubernetes and resource scheduling. Developers who are interested in this field are welcome to explore it with us.
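As a small illustration of the cgroup v2 IO limiting mentioned above, the sketch below builds a line in the format that the cgroup v2 `io.max` interface file accepts: a `MAJOR:MINOR` device number followed by `rbps`, `wbps`, `riops`, or `wiops` keys, where `max` removes a limit. The helper name `format_io_max` and the device numbers are assumptions for illustration. The sketch only formats the string and does not represent the community's kernel changes.

```python
from typing import Optional, Union

Limit = Optional[Union[int, str]]


def format_io_max(major: int, minor: int, rbps: Limit = None, wbps: Limit = None,
                  riops: Limit = None, wiops: Limit = None) -> str:
    """Build one io.max line; keys left as None are omitted from the line."""
    limits = {"rbps": rbps, "wbps": wbps, "riops": riops, "wiops": wiops}
    parts = [f"{major}:{minor}"]
    for key, value in limits.items():
        if value is None:
            continue
        if value == "max":
            parts.append(f"{key}=max")  # "max" means no limit for this key
        elif isinstance(value, int) and value >= 0:
            parts.append(f"{key}={value}")
        else:
            raise ValueError(f"invalid limit for {key}: {value!r}")
    return " ".join(parts)


# Hypothetical device 8:16, capped at 2 MiB/s reads and 120 write IOPS.
line = format_io_max(8, 16, rbps=2 * 1024 * 1024, wiops=120)
print(line)
```

On a host with cgroup v2 mounted, such a line would be written to the `io.max` file of the target cgroup directory. The exact path depends on how the system lays out its cgroup hierarchy.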

To better understand the work of the SIGs in the community, let's take a project of the high-performance storage SIG as an example:

io_uring is the new asynchronous IO framework introduced in kernel 5.1. It is designed to avoid memory copies when submitting IO requests and reaping completion events by using a pair of shared ring buffers for communication between the application and the kernel. The community tested it with FIO and found that in polling mode, its performance is comparable to SPDK.
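The pair-of-rings idea can be sketched with a toy model in Python. This is not the io_uring API: real io_uring maps the rings into memory shared with the kernel and is normally used from C. The names `Sqe`, `Cqe`, `Ring`, and `kernel_poll` are hypothetical, and the "kernel" here is just a function. The sketch only shows how a submission ring and a completion ring, each indexed by a head and a tail, let two sides exchange requests and results.

```python
from dataclasses import dataclass


@dataclass
class Sqe:
    """Submission entry: a request the application places in the ring."""
    op: str
    offset: int
    length: int
    user_data: int  # opaque tag echoed back in the completion


@dataclass
class Cqe:
    """Completion entry: the result the consumer side posts back."""
    user_data: int
    result: int


class Ring:
    """Fixed-size single-producer, single-consumer ring with head and tail."""

    def __init__(self, entries: int):
        if entries <= 0 or entries & (entries - 1):
            raise ValueError("entries must be a power of two")
        self.slots = [None] * entries
        self.mask = entries - 1
        self.head = 0  # next slot the consumer reads
        self.tail = 0  # next slot the producer writes

    def push(self, item) -> bool:
        if self.tail - self.head == len(self.slots):
            return False  # ring is full
        self.slots[self.tail & self.mask] = item
        self.tail += 1
        return True

    def pop(self):
        if self.head == self.tail:
            return None  # ring is empty
        item = self.slots[self.head & self.mask]
        self.head += 1
        return item


def kernel_poll(sq: Ring, cq: Ring, storage: bytes) -> int:
    """Stand-in for the kernel side: drain submissions, post completions."""
    done = 0
    while cq.tail - cq.head < len(cq.slots):  # stop if completions have no room
        sqe = sq.pop()
        if sqe is None:
            break
        if sqe.op == "read":
            result = len(storage[sqe.offset:sqe.offset + sqe.length])
        else:
            result = -1  # unsupported operation in this toy model
        cq.push(Cqe(sqe.user_data, result))
        done += 1
    return done


sq, cq = Ring(4), Ring(8)
storage = b"hello io_uring"
sq.push(Sqe("read", 0, 5, user_data=1))
sq.push(Sqe("read", 6, 100, user_data=2))
kernel_poll(sq, cq, storage)

completions = []
while (cqe := cq.pop()) is not None:
    completions.append((cqe.user_data, cqe.result))
print(completions)
```

Because each ring has exactly one producer and one consumer, each side only advances its own index, which is what allows the real mechanism to avoid copying request and completion data between the application and the kernel.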

Community developers focus on the io_uring mechanism. On the one hand, they contribute to the upstream kernel community and provide comprehensive support in Cloud Kernel. On the other hand, to put this new mechanism to use, they have explored how to adapt upper-layer applications to it. For example, they used io_uring to rework Redis's IO path, which improves Redis performance by 30%. Another example is the adaptation of the Nginx web server, which improves Nginx's long-connection performance by over 20%. However, upper-layer applications are numerous and varied. How can IO be maximized in different application scenarios? We look forward to more discussions in the community.

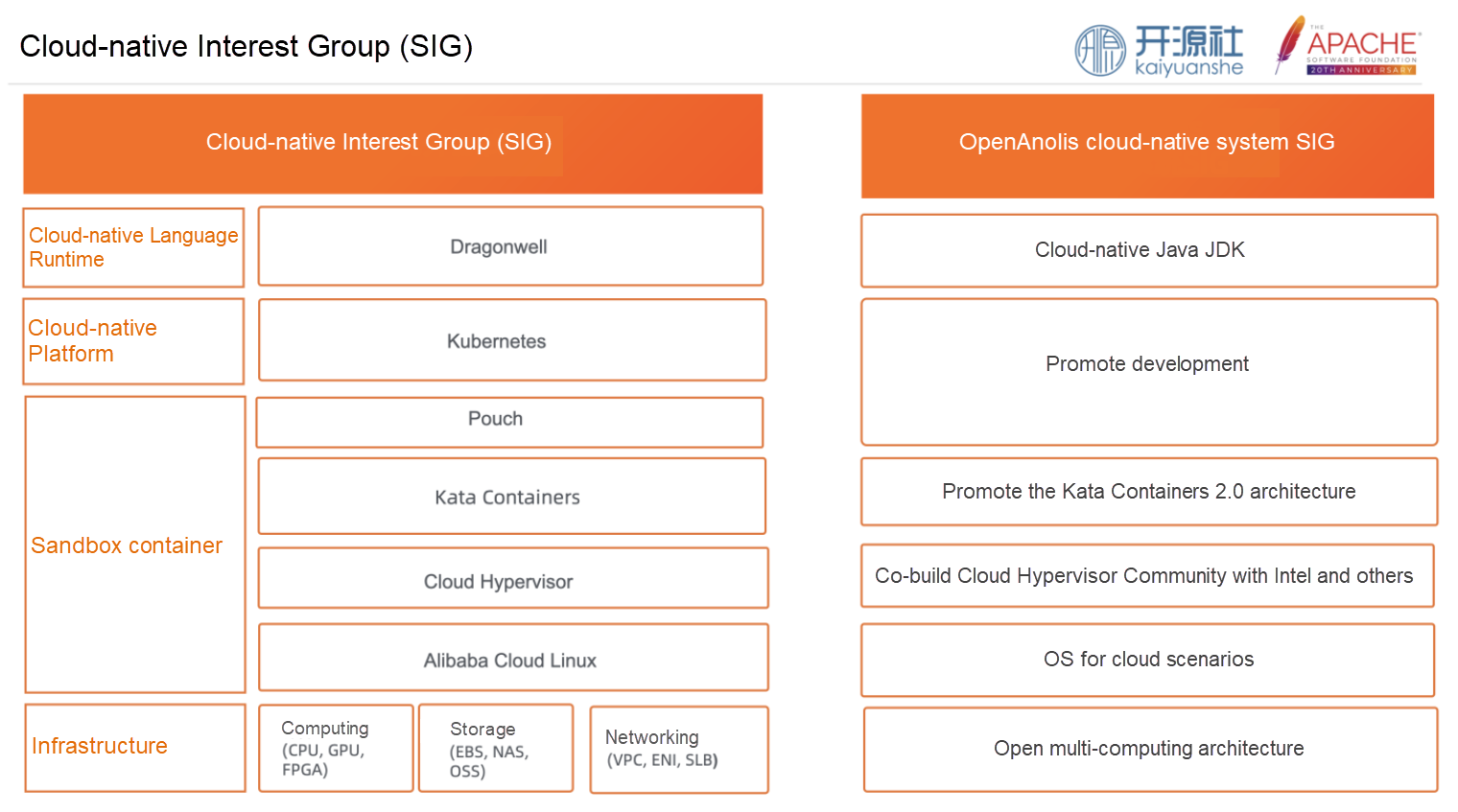

With the development of cloud native, the decoupling of applications from infrastructure is accelerating, and the elasticity of the cloud is being released. Cloud native helps enterprises optimize their cloud architecture and maximize the value of the cloud. The complexity of the system layer sinks into the infrastructure, which moves the service boundary of the cloud-native system layer upward. This brings new challenges to the system layer and gives it more room for optimization and innovation.

This is an architecture diagram of the cloud-native open-source software stack. Sandbox containers provide more secure isolation in serverless and other cloud-native scenarios. In function computing scenarios, language runtimes such as Java also sink to the system layer. For example, the Alibaba Cloud sandbox container 2.0 is vertically optimized together with the Redis database and the Java language, and its overall performance is higher than that of common containers.

The cloud-native SIG of our community is committed to developing full-stack open-source technology for the cloud-native system layer. Its work spans open multi-architecture hardware support and operating systems for cloud scenarios, co-building the cloud hypervisor with the industry, promoting the design and implementation of the Kata Containers 2.0 architecture and its next-step evolution toward 3.0, and Kubernetes integration and cloud-native support for the Java language. In this way, it promotes the evolution of the entire cloud-native system layer and realizes full-stack optimization from hardware to applications.

The cloud-native confidential computing SIG is committed to bringing confidential computing capabilities to cloud-native applications. Confidential computing protects sensitive code and data on the cloud from being stolen or destroyed by malicious third parties. "Even for organizations that are most reluctant to adopt the cloud, there are now some technologies such as confidential computing that can solve their security concerns without worrying about whether cloud providers can be trusted." Therefore, confidential computing is considered a necessary technology for cloud computing in the coming 5 to 10 years.

However, a cloud-native confidential computing environment involves the full system stack: from hardware solutions and the TEE to the operating system and virtualization, from Kubernetes resource management and orchestration to the container runtime, and from LibOS to the language runtime. The OpenAnolis cloud-native confidential computing SIG hopes to incubate projects such as Inclavare Containers and Dragonwell, connect with other open source communities, and work with the industry to advance this goal through open source and collaboration.

We now have an overall understanding of the OpenAnolis community. In both its vision and its organization and structure, OpenAnolis has distinctive features that focus on addressing the needs of cloud computing for system software development. So what kind of new model will the development of such a cloud-oriented OS community bring to Linux distributions, and what kind of new open source ecosystem will be built around the community?

Business closed loop: Compared with a traditional distribution, a distribution for cloud computing scenarios has a closed business loop. Traditional distribution communities or vendors are responsible only for the OS itself. However, there is a long path from the release of the OS to serving the business, including validation by hardware OEMs, infrastructure construction by various system integrators, application solution integration, and finally delivery. In the cloud computing scenario, the entire process is completed by the cloud computing provider, because the customer needs the cloud platform to host the business application. This forms a closed loop from the OS to the business. The effect on the OS is that it can be better integrated and optimized with the entire cloud computing platform stack. In addition, the cloud platform provider is closer to application scenarios and can obtain business requirements and feedback on the OS more conveniently and quickly.

End-to-end application solution: In the cloud-native era, business applications are born and grow on the cloud. The system layer, the basic application layer, and even the language runtime sink into the infrastructure. Therefore, business requirements, whether they concern hardware architectures such as x86 and ARM CPUs, GPUs, and XPUs, cloud platform resource capabilities such as network, computing, storage, and security, or applications such as AI and big data, call for the cloud platform to provide an end-to-end application solution. This is also a problem that the community SIGs want to solve.

Rapid iteration and evolution: The demand for a closed loop and for end-to-end application solutions drives the rapid iteration and evolution of the OS for cloud computing platforms. As a result, the OS can support new computing architectures faster, make the most of hardware capabilities, and adopt new technologies to meet business requirements for new solutions and promote the development of software and hardware.

A new open-source ecosystem will be built in the OpenAnolis community.

Full-stack open-source ecosystem: As the cloud computing software stack becomes more complex, the application service boundary moves up, and the demand for full-stack integration and optimization increases. The community uses SIGs to build full-stack open-source technical capabilities, each starting from a specific cloud computing requirement or technology. From new hardware platforms and features, to the optimal architecture of the system layer, to adaptation at the application layer, this promotes hardware-software synergy and a prosperous application ecosystem.

All-round open source: The community has built a multi-dimensional, all-round open source ecosystem through open source projects, special interest groups (SIGs), and an open-source distribution.

Open source enabling industry: The community is also developing a complete ecosystem of IHVs, OSVs, ISVs, and other partners. Cooperation between the community and its ecosystem partners will take new forms and open up new areas. We work with active developers and users toward shared success, aiming to become the strongest driving force for cloud computing development.

Cloud computing is providing power for the digital transformation of enterprises, which in turn enables the rapid development of related technologies. The operating system is in the middle layer of the entire cloud infrastructure. It manages and abstracts hardware resources downward, supports the cloud platform and business applications on the cloud upward, and plays a key role in the entire cloud computing architecture. How can we build an operating system that meets the development needs of cloud computing? This remains a common problem faced in the cloud era. You are welcome to participate in the OpenAnolis community to build the cloud platform system technology base and promote the development of cloud computing.

Community official website: https://openanolis.cn/?lang=en

Code base: https://github.com/openanolis
