On November 9, 2015, Google released TensorFlow, an open source machine learning framework. Since TensorFlow's launch, AI and machine learning have grown enormously. Machine learning, a branch of AI, enables software to make predictions about future events based on large volumes of data. Today, leading technology companies, including Facebook, Apple, Microsoft, and even China's leading search engine Baidu, are all investing heavily in machine learning.

In 2016, Google DeepMind's AlphaGo defeated South Korean Go master Lee Se-dol. The media used the terms AI, machine learning, and deep learning to explain DeepMind's victory, which caused widespread public confusion about what these terms mean.

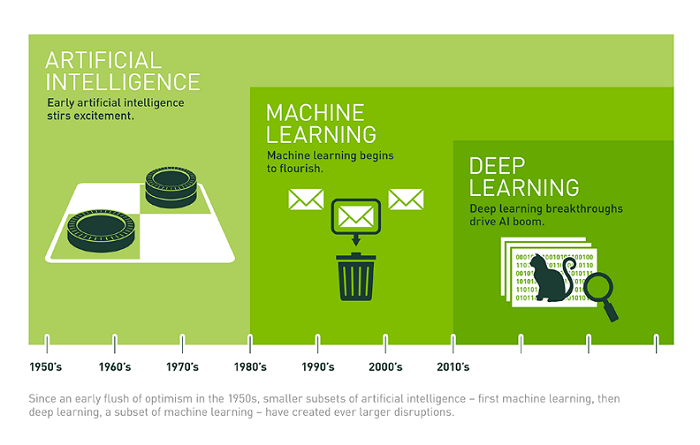

Although related, the terms AI, machine learning, and deep learning are not interchangeable. Drawing on the explanations of Michael Copeland of NVIDIA, this article clarifies what AI, machine learning, and deep learning each mean. To understand how the three relate, let us look at the figure below:

Figure 1

As shown in the figure, machine learning is a subset of AI, and deep learning is a subset of machine learning. The concept of AI dates back to the 1950s, while machine learning and deep learning came to prominence later.

Since 1956, when computer scientists coined the term AI at the Dartmouth Conference, there has been an endless stream of creative ideas about AI. AI became one of the hottest research topics, as many saw it as the key to a bright future for human civilization. However, the field soon fell out of favor, dismissed by many as overambitious and fanciful.

In the past few years, especially since 2015, AI has experienced a resurgence. A major contributor is the widespread use of graphics processing units (GPUs), which make parallel processing faster, cheaper, and more powerful. The emergence of virtually unlimited storage and massive amounts of data (the big data movement) has also driven AI's development, making it possible to store and access all kinds of data, including images, text, transaction data, and map data.

Next, we look at AI, machine learning, and deep learning in turn, following how each one developed.

Figure 2

When the AI pioneers met at Dartmouth College, they dreamed of using the newly emerging computers to build complex machines with human-level intelligence. This is the concept we now call "General AI": a machine that can reason and perceive the world through all five human senses. Such machines are a recurrent theme in films, from the human-friendly C-3PO to humanity's enemy, the Terminator. However, general AI machines have remained fiction for a simple reason: so far, we cannot build them.

One of the main challenges of general AI is its enormous scope. Instead of building an all-purpose machine, we can narrow our requirements to specific goals. This task-specific form of AI is known as narrow AI. There are many real-world examples of narrow AI, but how are they created? Where does their intelligence come from? The answer lies in the next topic: machine learning.

Figure 3

Machine learning grew directly out of early AI research and is one approach to achieving AI. Over the years, researchers developed algorithmic approaches that include decision tree learning, inductive logic programming, reinforcement learning, and Bayesian networks. In simple terms, machine learning uses algorithms to analyze data, learn from it, and then make inferences or predictions. Unlike traditional software, which is hand-coded with a fixed set of instructions for a specific task, a machine learning system is "trained" with data and algorithms that let it learn how to perform the task.
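The difference between hand-coded rules and learning from data can be sketched in a few lines. The example below is a minimal sketch that fits a decision stump (a one-level decision tree, the simplest case of decision tree learning) by trying each candidate threshold and keeping the one that classifies the training examples best. The function names `fit_stump` and `predict` and the toy data are hypothetical, chosen only for illustration.

```python
def fit_stump(xs, ys):
    """Learn the threshold t for the rule 'predict 1 if x >= t' from labeled data.

    Tries every observed value as a candidate threshold and keeps the one with
    the highest training accuracy (ties go to the smallest threshold).
    """
    best_t, best_acc = None, -1.0
    for t in sorted(set(xs)):
        preds = [1 if x >= t else 0 for x in xs]
        acc = sum(p == y for p, y in zip(preds, ys)) / len(ys)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t, best_acc


def predict(threshold, x):
    """Apply the learned rule to a new input."""
    return 1 if x >= threshold else 0


# Hypothetical training data: one numeric feature per example and a 0/1 label.
xs = [1.0, 2.0, 3.0, 6.0, 7.0, 8.0]
ys = [0, 0, 0, 1, 1, 1]

threshold, acc = fit_stump(xs, ys)
print(threshold, acc)                                     # 6.0 1.0
print(predict(threshold, 4.5), predict(threshold, 7.5))  # 0 1
```

Nobody wrote the value 6.0 into the program; it was derived from the examples, which is the sense in which such a system "trains" itself.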

Computer vision has long been one of the best-known applications of machine learning, but it still required a great deal of manual coding. Researchers wrote classifiers by hand, such as edge detection filters that help a program identify where an object starts and ends. Building on these hand-written classifiers, researchers could then develop algorithms that let machines analyze, identify, and understand images.

Nevertheless, this approach was brittle and error-prone, given the limitations of the technology at the time.
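To make "hand-written classifier" concrete, here is a minimal sketch of an edge detection filter of the kind described above. It slides the standard 3x3 Sobel kernel over a tiny grayscale image (a nested list of intensities) and reports how strongly brightness changes from left to right. The function name `edge_strength` and the toy image are illustrative assumptions; the point is that a person designed the kernel, not the machine.

```python
# Standard Sobel kernel that responds to left-to-right changes in brightness,
# i.e. vertical edges. A person designed these numbers; nothing is learned.
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]


def edge_strength(image, kernel=SOBEL_X):
    """Slide a 3x3 kernel over a grayscale image (no padding) and return |response|."""
    h, w = len(image), len(image[0])
    out = []
    for r in range(1, h - 1):
        row = []
        for c in range(1, w - 1):
            s = sum(
                kernel[i][j] * image[r - 1 + i][c - 1 + j]
                for i in range(3)
                for j in range(3)
            )
            row.append(abs(s))
        out.append(row)
    return out


# Toy 4x5 image: dark left region (0) next to a bright right region (9).
image = [
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
]

for row in edge_strength(image):
    print(row)  # [36, 36, 0] on both rows
```

The large responses appear only where the dark and bright regions meet. Any change in the pixel values that the designer did not anticipate changes these responses too, which illustrates why such hand-built pipelines were brittle.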

Deep learning is a technique for implementing machine learning. Artificial neural networks, the basis of deep learning, came out of early machine learning research, but they were largely overlooked for decades after their invention. The idea of interconnected processing units was inspired by the human brain, but the implementation differs significantly from biological systems. In the brain, a neuron can connect to any other neuron within a certain physical distance. An artificial neural network, by contrast, has discrete layers, fixed connections, and a fixed direction in which data propagates.

For example, you can cut an image into smaller tiles and feed them into the first layer of the neural network. The neurons in the first layer perform an initial calculation and pass the results to the second layer, whose neurons perform their own calculations in turn. Every layer follows the same pattern until the final layer produces the output.
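The layer-by-layer flow described above can be sketched as a forward pass through a tiny fully connected network. In this minimal sketch, each image tile is assumed to be summarized by a single number, and the weights, biases, layer sizes, and the ReLU activation are hypothetical choices made only for illustration; a real network would learn its weights and be far larger.

```python
def relu(values):
    """Rectified linear activation: negative values become 0."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: each neuron sums its weighted inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def forward(tiles, layers):
    """Pass data through the layers in order; each layer's output feeds the next."""
    activations = tiles
    for i, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if i < len(layers) - 1:  # hidden layers apply ReLU; the last layer stays linear
            activations = relu(activations)
    return activations


# Hypothetical input: four image tiles, each reduced to one intensity value.
tiles = [1.0, 0.0, 1.0, 0.0]

# Hypothetical weights: 4 inputs -> 3 hidden neurons -> 2 output scores.
layers = [
    ([[1.0, 0.0, 1.0, 0.0],
      [0.0, 1.0, 0.0, 1.0],
      [-1.0, 1.0, -1.0, 1.0]], [0.0, 0.0, 0.0]),
    ([[1.5, -1.0, 0.5],
      [-1.0, 1.0, 0.5]], [0.0, 0.5]),
]

print(forward(tiles, layers))  # [3.0, -1.5]
```

Data only moves forward, from one layer to the next, which is the fixed direction of propagation that distinguishes these networks from biological neurons.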

Each neuron assigns a weight to each of its inputs, reflecting how correct or incorrect that input is relative to the task being performed, and the final output is determined by these weights. Consider a system that analyzes an image of a stop sign. The neurons break the image down and "check" its attributes, such as its shape, color, characters, size, and motion. The neural network then produces a probability vector, which is essentially an educated guess based on the weights. In this example, the system may be 86 percent confident that the image is a stop sign. During training, the network is then told whether this judgment is correct.
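The step from weighted evidence to a probability vector can be sketched as follows. Here, hypothetical feature detectors report whether the image looks octagonal, is red, carries the letters "STOP", and has a typical sign size; each class combines those signals with its own weights, and a softmax turns the resulting scores into probabilities that sum to 1. The class labels, weights, and the use of softmax are illustrative assumptions, so the numbers will not match the 86 percent in the text.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that are positive and sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(features, class_weights):
    """Score each class as a weighted sum of the features, then apply softmax."""
    labels = list(class_weights)
    scores = [
        sum(w * f for w, f in zip(class_weights[label], features))
        for label in labels
    ]
    return dict(zip(labels, softmax(scores)))


# Hypothetical detector outputs: octagonal, red, "STOP" letters, typical sign size.
features = [1.0, 1.0, 1.0, 0.0]

# Hypothetical per-class weights over the same four features.
CLASS_WEIGHTS = {
    "stop sign":        [1.0, 0.5, 0.5, 0.0],
    "speed limit sign": [0.0, 0.0, 0.0, 1.0],
    "other":            [-0.5, 0.0, -0.5, 0.0],
}

probs = classify(features, CLASS_WEIGHTS)
for label, p in probs.items():
    print(f"{label}: {p:.0%}")  # stop sign: 84%, speed limit sign: 11%, other: 4%
```

Changing any weight shifts the scores and therefore the whole probability vector, which is why training focuses on adjusting weights.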

However, even the most basic neural networks are computationally intensive, which made them impractical at the time. A small group of dedicated researchers led by Professor Geoffrey Hinton of the University of Toronto persisted with the method, eventually parallelizing the algorithms to run on supercomputers and proving that the concept was viable.

Returning to the stop sign example, the accuracy of the network's predictions depends heavily on how much training it receives, so extensive training is necessary. Tens of thousands or even millions of images may be needed to train the network. With sufficient training, the neurons' input weights are tuned precisely enough for the network to give consistently accurate answers.
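How training adjusts weights can be sketched with a single sigmoid neuron trained by gradient descent. The sketch below assumes a hypothetical one-dimensional "redness" score per image and a label of 1 for stop signs; each epoch nudges the weight and bias in the direction that reduces the average cross-entropy loss. The learning rate, epoch count, and data are illustrative assumptions, and real networks apply the same idea to millions of weights via backpropagation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def mean_loss(w, b, xs, ys):
    """Average cross-entropy loss of the neuron on the data."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(w * x + b)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(xs)


def train(xs, ys, lr=1.0, epochs=1000):
    """Full-batch gradient descent on a single neuron's weight and bias."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # For sigmoid + cross-entropy, dLoss/dz = p - y for each example.
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical data: a "redness" score per image; label 1 means "stop sign".
xs = [0.0, 0.1, 0.2, 0.8, 0.9, 1.0]
ys = [0, 0, 0, 1, 1, 1]

w, b = train(xs, ys)
print(f"loss before: {mean_loss(0.0, 0.0, xs, ys):.3f}")  # 0.693 (= ln 2)
print(f"loss after:  {mean_loss(w, b, xs, ys):.3f}")
print([1 if sigmoid(w * x + b) >= 0.5 else 0 for x in xs])  # [0, 0, 0, 1, 1, 1]
```

More epochs keep lowering the loss on this toy data, a small-scale version of the point that more training yields more precisely tuned weights.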

Today, machines trained with deep learning can outperform humans at image recognition in some scenarios, including challenging and critical tasks such as identifying indicators of cancer in blood. Facebook uses a similar type of neural network to recognize faces in photos, and Google's AlphaGo became capable of beating the world's best Go players by training its neural network intensively.

AI refers broadly to intelligence exhibited by machines, while machine learning refers specifically to the computational methods used to achieve it. Simply put, AI is the science, and machine learning is the set of methods that make AI possible; to a large extent, machine learning is what makes AI work today. We hope this article has helped explain the differences and relationships among AI, machine learning, and deep learning.
