By Alberto Roura, Alibaba Cloud Tech Share Author. Tech Share is Alibaba Cloud's incentive program to encourage the sharing of technical knowledge and best practices within the cloud community.

From a DevOps point of view, a well-architected solution with proper separation of responsibilities is fundamental to the long-term success of any application. The case presented today is a deliberately simplified example, made to showcase the concept and to be easily understood, but it lays the foundation for you to scale it on your own as you gain confidence in this topic. We will make use of Elastic Compute Service (ECS), Server Load Balancer (SLB) and Virtual Private Cloud (VPC), all very common Alibaba Cloud services that you should be familiar with.

When using Docker, as in our case today, one should never run more than one function per container. Running more than one defeats the whole purpose of using containers, as adding them doesn't cost much in terms of resources. In my experience as a DevOps lead engineer, I have seen too many projects made by others with supervisord managing multiple functions in a single container. This is considered an anti-pattern, as it makes them very hard to track, debug and scale horizontally. Please notice that I'm using the word function, not process. The official Docker documentation has moved away from saying one "process" to instead recommending one "concern" or "function" per container.

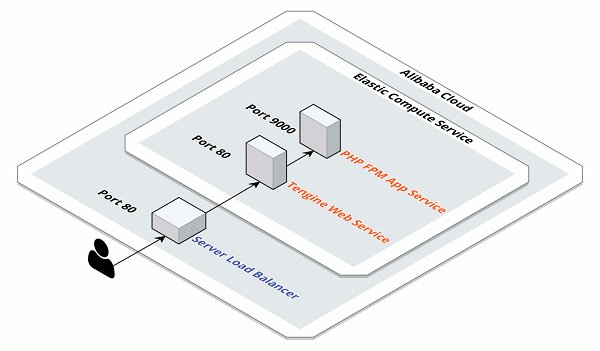

In today's small example we are showing how to separate the responsibilities using a web service and an app service, as shown below:

With PHP applications we have to choose a web server, and Apache is the one that fits most cases. Apache is great in terms of modules, as you can use mod_php so that Apache itself executes the PHP code directly. This means that you only need one container and are still able to track and debug the application properly. It also reduces the complexity of the deployment for websites that don't experience a high concurrency of connections.

Our goal today is to build a highly scalable project, so we need to separate the web server from the application codebase itself. Tengine is here to save us all, as this web server makes that separation very simple.

Tengine is, in a nutshell, Nginx with superpowers. This web server is everything you love about Nginx, plus some neat extra features such as dynamic module loading (loading modules without recompiling), unbuffered request proxy forwarding (saving precious disk I/O), support for a dynamic scripting language (Lua) in config files, and much more. You can have a look at the full list of features on the official Tengine site.

Tengine has been an open source project since December 2011. It was created by the team at Taobao, an Alibaba Group company, and since then it has been used on sites like Taobao and Tmall, as well as internally in Alibaba Cloud as a key part of its CDN load balancers.

As you can see, Tengine has been tested in some of the busiest websites in the world.

As we mentioned, we are planning to build a very simple but scalable project in which we separate the web server from the application codebase. This is achieved by running 2 containers: the web server listening for user requests and the PHP-FPM interpreter as the backend. Because this is a "Best Practices" type of article, we are also setting up a Server Load Balancer, leaving you a playground to scale the whole thing afterwards. At the end, we will deploy everything using Terraform, a tool used to create, change and improve infrastructure in a predictable way. Learn how to set up your workstation to work with Alibaba Cloud by watching this video webinar about it.

Given the maturity of the Docker Compose project, we are going to deploy this application by writing a docker-compose.yml file as shown below:

```yaml
version: '3.7'

networks:
  webappnet:
    driver: bridge

services:
  # PHP-FPM backend: executes the PHP code
  app:
    image: php:7.3-fpm
    container_name: fpm
    networks:
      - webappnet
    restart: on-failure
    volumes:
      - ./index.php:/var/www/html/index.php

  # Tengine web server: the only service published to the host
  web:
    image: roura/php:tengine-fpm
    container_name: tengine
    networks:
      - webappnet
    restart: on-failure
    ports:
      - "80:80"
    volumes:
      - ./index.php:/var/www/html/index.php
```

As you can see, there are 2 services running in the network, app and web. The web service passes all requests to the app service on port 9000, which is the port FPM listens on by default. In the future, you can scale those 2 services independently and horizontally as needed.
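To make the hand-off on port 9000 more concrete, here is a minimal sketch of the kind of server block a Tengine (or Nginx) container needs in order to forward PHP requests to FPM. The configuration actually shipped in the roura/php:tengine-fpm image is not shown in this article, so treat everything here as an assumption made for illustration: the upstream host name `fpm`, the `FPM_HOST`, `FPM_PORT` and `CONF_FILE` variables, and the temporary output file.

```shell
#!/usr/bin/env bash
# Sketch only: writes a hypothetical Tengine/Nginx server block that proxies
# PHP requests to an FPM container. This is NOT the config of the real image.

# Assumption: the FPM container is reachable as "fpm" on the compose network.
FPM_HOST="${FPM_HOST:-fpm}"
FPM_PORT="${FPM_PORT:-9000}"
# Assumption: write to a throwaway file unless CONF_FILE is provided.
CONF_FILE="${CONF_FILE:-$(mktemp)}"

# Unquoted heredoc: the shell fills in FPM_HOST and FPM_PORT, while \$ keeps
# the Nginx variables literal.
cat > "$CONF_FILE" <<EOF
server {
    listen 80;
    root /var/www/html;
    index index.php;

    location ~ \.php\$ {
        include fastcgi_params;
        fastcgi_param SCRIPT_FILENAME \$document_root\$fastcgi_script_name;
        fastcgi_pass ${FPM_HOST}:${FPM_PORT};
    }
}
EOF

echo "Wrote $CONF_FILE"
```

With a block like this, the web server only sends the script path to FPM in SCRIPT_FILENAME, and FPM reads the file from its own filesystem. That is presumably why the compose file mounts index.php at the same path in both containers.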

Did I mention the app was going to be simple? It has only one file, but that is enough to test that a PHP script is being rendered in the backend. Create index.php next to docker-compose.yml with the following content:

```php
<?php phpinfo();
```

The next file, user-data.sh, will serve as the main template to bootstrap all services in the ECS instance. It installs all dependencies and creates the minimal files needed to start the containers.
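Before the script itself, a note on the heredoc pattern it relies on. Terraform fills in the `${docker_compose}` and `${index_php}` placeholders when it renders the template, and the quoted `'EOF'` delimiter then stops the shell on the instance from expanding any `$` that appears in the embedded files. The following sketch shows the difference between the two delimiter styles; the `NAME` variable is hypothetical and exists only for the demonstration.

```shell
#!/usr/bin/env bash
# Demonstrates why a bootstrap script would quote its heredoc delimiter.
NAME="expanded-by-shell"   # hypothetical variable, for illustration only

# Unquoted delimiter: the shell substitutes $NAME inside the body.
unquoted=$(cat <<EOF
value=$NAME
EOF
)

# Quoted delimiter: the body is copied verbatim, dollar signs included.
quoted=$(cat <<'EOF'
value=$NAME
EOF
)

echo "unquoted: $unquoted"
echo "quoted:   $quoted"
```

The bootstrap script uses the quoted form for both files, so their contents reach the disk exactly as Terraform rendered them.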

```bash
#!/usr/bin/env bash

# Write the files rendered by Terraform into a working directory.
mkdir -p /var/docker

cat <<- 'EOF' > /var/docker/docker-compose.yml
${docker_compose}
EOF

cat <<- 'EOF' > /var/docker/index.php
${index_php}
EOF

# Install Docker CE from Docker's official apt repository.
apt-get update && apt-get install -y apt-transport-https ca-certificates curl software-properties-common
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | apt-key add -
add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"
apt-get update && apt-get install -y docker-ce docker-compose

# The packaged docker-compose can be too old for compose file format 3.7,
# so overwrite it with release 1.23.2 and make sure it is executable.
curl -L "https://github.com/docker/compose/releases/download/1.23.2/docker-compose-$(uname -s)-$(uname -m)" -o /usr/bin/docker-compose
chmod +x /usr/bin/docker-compose

# Start both containers in the background.
cd /var/docker && docker-compose up -d
```

This is the file that will orchestrate it all. It creates a new VPC, a Server Load Balancer, an ECS instance without a public IP and a Security Group locking it all down to port 80 only. The file, named main.tf, needs to sit next to the others we just created and should look like the following:

```hcl
# Credentials and region are not set here, so the provider has to obtain
# them elsewhere (for example, from environment variables).
provider "alicloud" {}

variable "app_name" {
  default = "webapp"
}

# Read the local files so they can be embedded in the instance user data.
data "template_file" "docker_compose" {
  template = "${file("docker-compose.yml")}"
}

data "template_file" "index_php" {
  template = "${file("index.php")}"
}

data "template_file" "user_data" {
  template = "${file("user-data.sh")}"

  vars {
    docker_compose = "${data.template_file.docker_compose.rendered}"
    index_php      = "${data.template_file.index_php.rendered}"
  }
}

data "alicloud_images" "default" {
  name_regex = "^ubuntu_16.*_64"
}

data "alicloud_instance_types" "default" {
  instance_type_family = "ecs.n4"
  cpu_core_count       = 1
  memory_size          = 2
}

resource "alicloud_vpc" "main" {
  cidr_block = "172.16.0.0/12"
}

data "alicloud_zones" "default" {
  available_disk_category = "cloud_efficiency"
  available_instance_type = "${data.alicloud_instance_types.default.instance_types.0.id}"
}

resource "alicloud_vswitch" "main" {
  availability_zone = "${data.alicloud_zones.default.zones.0.id}"
  cidr_block        = "172.16.0.0/16"
  vpc_id            = "${alicloud_vpc.main.id}"
}

resource "alicloud_security_group" "group" {
  name   = "${var.app_name}-sg"
  vpc_id = "${alicloud_vpc.main.id}"
}

# Only TCP port 80 is opened, in both directions.
resource "alicloud_security_group_rule" "allow_http" {
  type              = "ingress"
  ip_protocol       = "tcp"
  nic_type          = "intranet"
  policy            = "accept"
  port_range        = "80/80"
  priority          = 1
  security_group_id = "${alicloud_security_group.group.id}"
  cidr_ip           = "0.0.0.0/0"
}

resource "alicloud_security_group_rule" "egress" {
  type              = "egress"
  ip_protocol       = "tcp"
  nic_type          = "intranet"
  policy            = "accept"
  port_range        = "80/80"
  priority          = 1
  security_group_id = "${alicloud_security_group.group.id}"
  cidr_ip           = "0.0.0.0/0"
}

resource "alicloud_slb" "main" {
  name       = "${var.app_name}-slb"
  vswitch_id = "${alicloud_vswitch.main.id}"
  internet   = true
}

# Despite the resource label "https", this is a plain HTTP listener on port 80.
resource "alicloud_slb_listener" "https" {
  load_balancer_id          = "${alicloud_slb.main.id}"
  backend_port              = 80
  frontend_port             = 80
  health_check_connect_port = 80
  bandwidth                 = -1
  protocol                  = "http"
  sticky_session            = "on"
  sticky_session_type       = "insert"
  cookie                    = "webappslbcookie"
  cookie_timeout            = 86400
}

resource "alicloud_slb_attachment" "main" {
  load_balancer_id = "${alicloud_slb.main.id}"

  instance_ids = [
    "${alicloud_instance.webapp.id}",
  ]
}

resource "alicloud_instance" "webapp" {
  instance_name = "${var.app_name}"
  image_id      = "${data.alicloud_images.default.images.0.image_id}"
  instance_type = "${data.alicloud_instance_types.default.instance_types.0.id}"
  vswitch_id    = "${alicloud_vswitch.main.id}"

  security_groups = [
    "${alicloud_security_group.group.id}",
  ]

  # Example value only: replace it with your own strong password.
  password  = "Test1234!"
  user_data = "${data.template_file.user_data.rendered}"
}

output "slb_ip" {
  value = "${alicloud_slb.main.address}"
}
```

Now that we have all the files written, we are ready to go. Run terraform init && terraform apply and wait until all your infrastructure resources are created.
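Because the provider block in main.tf is empty, Terraform needs to find the Alibaba Cloud credentials somewhere else. The sketch below assumes they are supplied through the ALICLOUD_ACCESS_KEY, ALICLOUD_SECRET_KEY and ALICLOUD_REGION environment variables, which is one of the options the alicloud provider supports. The `require_env` helper is a hypothetical name introduced here, not part of Terraform or of the provider.

```shell
#!/usr/bin/env bash
# Preflight sketch: fail early if the credentials Terraform is expected to
# read from the environment are missing.

# require_env NAME...: returns 0 if every named variable is exported and
# non-empty, 1 otherwise (printing each missing name to stderr).
require_env() {
    local missing=0 name
    for name in "$@"; do
        if [ -z "$(printenv "$name")" ]; then
            echo "missing environment variable: $name" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Usage (commented out so the snippet has no side effects when sourced):
# require_env ALICLOUD_ACCESS_KEY ALICLOUD_SECRET_KEY ALICLOUD_REGION \
#     && terraform init && terraform apply
```

The helper uses printenv rather than a plain variable test on purpose: Terraform runs as a child process, so a variable that is set but not exported would not be visible to it.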

When everything finishes, you will see the output printing something like slb_ip = 47.xx.xx.164. That is the public IP of the Load Balancer. Copy it and paste it into your web browser. Ta-da! A page showing the phpinfo() output rendered by the FPM backend should show up.
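The same check can be scripted from a terminal. Keep in mind that the user-data script runs while the instance boots, so the containers may not be up yet when terraform apply returns. The sketch below is a small, generic retry helper; the `retry` function and the `SLB_IP` variable are hypothetical names introduced for this example, and the attempt count and delay are arbitrary.

```shell
#!/usr/bin/env bash
# retry ATTEMPTS DELAY CMD...: run CMD until it succeeds or ATTEMPTS runs
# have failed, sleeping DELAY seconds between attempts.
retry() {
    local attempts="$1" delay="$2" i=1
    shift 2
    while [ "$i" -le "$attempts" ]; do
        if "$@"; then
            return 0
        fi
        # Do not sleep after the final failed attempt.
        if [ "$i" -lt "$attempts" ]; then
            sleep "$delay"
        fi
        i=$((i + 1))
    done
    return 1
}

# Usage (commented out; set SLB_IP to the slb_ip value printed by Terraform):
# retry 30 10 curl -fsS -o /dev/null "http://$SLB_IP/" && echo "site is up"
```

Here curl's -f flag makes HTTP error responses count as failures, so the loop keeps waiting until the Load Balancer returns a successful response.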

Congratulations, you are a better cloud architect now!
