By Digoal

This blog discusses data storage optimization in spatial systems using PostgreSQL in the context of pilotless driving and delivery robots.

All point cloud data is stored on the server. A terminal, such as a driverless car, needs only the point data for the locations it will pass through, including the planned route, its range of motion, and the relevant administrative region.

The server delivers the data required by the terminal.

Assume that the data on the server has been partitioned by administrative region or geohash code range, a partition table has been created, and a GiST index has been built.
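A minimal sketch of such a server-side layout is shown here, assuming PostgreSQL 10 or later (declarative partitioning) with PostGIS installed. The table name `pointcloud_part`, the column names, and the geohash prefix `wtm` are illustrative assumptions, not names from the original setup.

```sql
CREATE EXTENSION IF NOT EXISTS postgis;

-- Hypothetical parent table, range-partitioned on a stored geohash column.
-- COLLATE "C" gives plain byte ordering, so every string with the prefix
-- 'wtm' sorts between 'wtm' (inclusive) and 'wtn' (exclusive).
CREATE TABLE pointcloud_part (
    id          bigint,
    loc         geometry(Point, 4326),
    loc_geohash text COLLATE "C" NOT NULL   -- expected to hold ST_GeoHash(loc)
) PARTITION BY RANGE (loc_geohash);

-- One partition per geohash code range (the range here is illustrative).
CREATE TABLE pointcloud_part_wtm PARTITION OF pointcloud_part
    FOR VALUES FROM ('wtm') TO ('wtn');

-- A GiST index built on the partition itself.
CREATE INDEX pointcloud_part_wtm_loc_gist
    ON pointcloud_part_wtm USING gist (loc);
```

Finer partitions (longer prefixes) keep each partition's data and index files small, which matters when whole files are shipped to a terminal.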

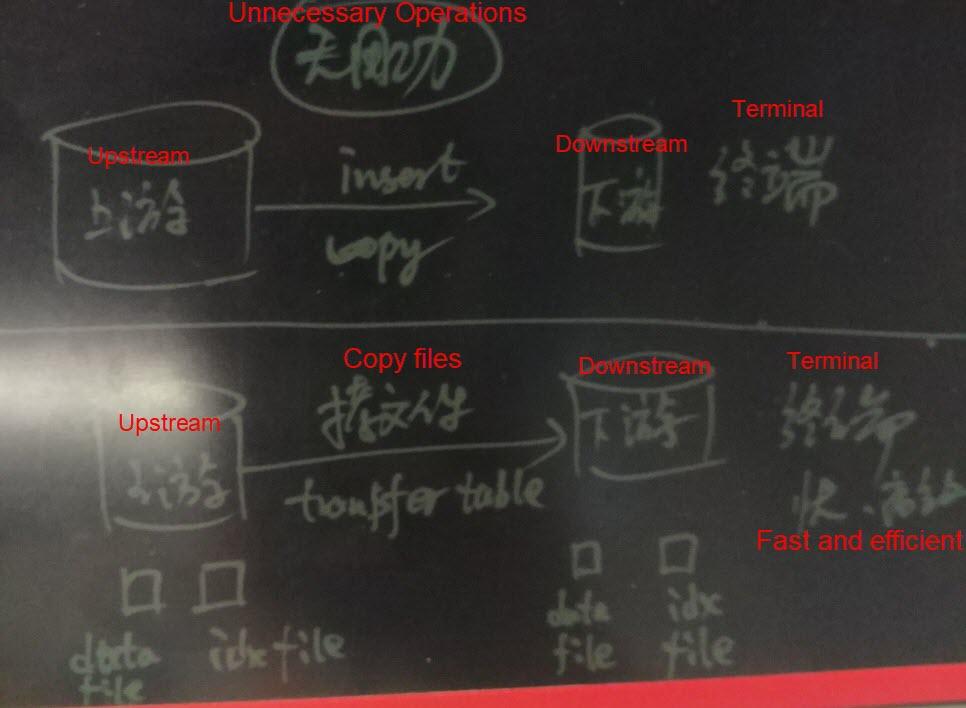

When the data in these tables is loaded from the server to the terminal (the driverless car) using INSERT or COPY, the terminal usually has to build the GiST index again. Rebuilding an index that already exists on the server is unnecessary and wastes resources.

If you use the table transfer method, the data files and index files are loaded directly, and only the database's catalog metadata is built. This avoids the index building and data format checking that come with inserting or copying the data to the terminal. In this way, you can achieve rapid data import, save energy, and reduce emissions.

The key to avoiding redundant data on the terminal when you use the table transfer method is to partition the data finely enough. For example, a terminal (driverless vehicle) requires point cloud data for the Xihu District of Hangzhou. If data on the server is partitioned by city-level administrative region, all of Hangzhou City's data files must be copied to the terminal even though it needs only one district, which is impractical.

For more information on how to use the pg_transfer extension, see https://postgrespro.com/docs/postgresproee/9.6/pgtransfer.html

A GiST index is a spatially clustered index that supports the geometry data type as well as other data types. It supports searching and sorting by distance, and it works on exact geometry values.

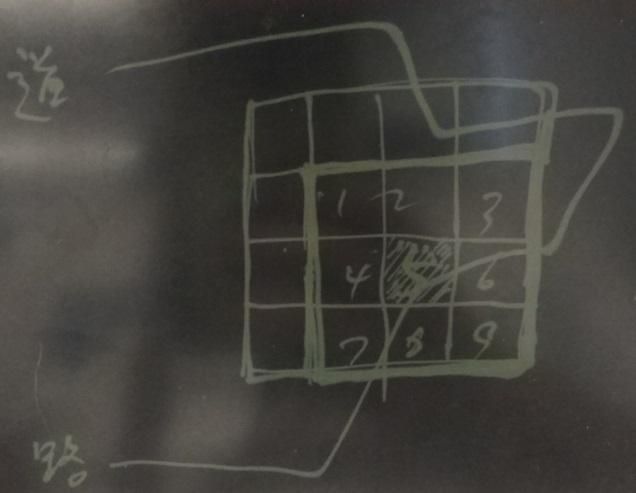

Geohashing is a coding method that converts geographical coordinates (longitude and latitude) into strings. The geohash strings of adjacent locations are similar to each other and have the same prefix.

The st_geohash function provided by PostGIS can convert geometry data into a geohash string.
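As a short illustration, the query below encodes two nearby points at a chosen precision. The coordinates are arbitrary sample values; `ST_GeoHash` expects geometry in longitude/latitude coordinates, so SRID 4326 is assumed here.

```sql
CREATE EXTENSION IF NOT EXISTS postgis;

-- Encode two nearby sample points as 8-character geohash strings.
-- Nearby points usually share a long common prefix.
SELECT ST_GeoHash(ST_SetSRID(ST_MakePoint(120.1300, 30.2600), 4326), 8) AS geohash_a,
       ST_GeoHash(ST_SetSRID(ST_MakePoint(120.1301, 30.2601), 4326), 8) AS geohash_b;
```

The second argument caps the length of the returned string, which is how the precision discussed later is controlled.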

1) A GiST index is highly efficient when you search for points near a given point and need the records returned in order of distance. It is not advantageous when a large number of records must be returned in spatial order.

2) When there is a large amount of location data, building a GiST index can be time-consuming because spatial clustering must be calculated for the index. If data is inserted concurrently, all CPU cores can take part in building the index, which helps performance. For example, with 32 cores in use, the write speed may reach 170,000 records/s (including the GiST index).

3) A GiST index contains spatial data values; therefore, it occupies a large amount of space compared to a block range index (BRIN), which is described below.

4) If data is stored in a spatially non-ordered manner, a GiST index search for a large amount of adjacent data touches rows scattered across many heap blocks, resulting in heap I/O amplification.

5) By clustering the heap table on a geohash B-tree index, you can reduce the scattered heap I/O that searching by distance with a GiST index causes on massive data.
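The distance search described in point 1 can be sketched as follows. The table `pointcloud_knn_demo` and its columns are hypothetical. The `<->` operator is the PostGIS distance operator, which a GiST index can use to return rows in order of distance.

```sql
CREATE EXTENSION IF NOT EXISTS postgis;

-- Hypothetical table of points.
CREATE TABLE IF NOT EXISTS pointcloud_knn_demo (
    id  bigint,
    loc geometry(Point, 4326)
);

CREATE INDEX IF NOT EXISTS pointcloud_knn_demo_loc_gist
    ON pointcloud_knn_demo USING gist (loc);

-- Return the 10 points nearest to a sample location, closest first.
-- With ORDER BY ... <-> ... LIMIT n, the planner can walk the GiST index
-- in distance order instead of sorting the whole table.
SELECT id,
       loc <-> ST_SetSRID(ST_MakePoint(120.13, 30.26), 4326) AS dist
FROM pointcloud_knn_demo
ORDER BY loc <-> ST_SetSRID(ST_MakePoint(120.13, 30.26), 4326)
LIMIT 10;
```

Note that with SRID 4326 the distance is expressed in degrees. It is good for ordering nearby points but is not a distance in meters.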

A geohash is a character string. Geohash strings of adjacent locations have a sequential similarity.

The precision of a geohash is closely related to the code length. Unlike the geometry type, a geohash does not have an absolutely accurate value. Therefore, we recommend using the geometry type if you need an absolutely accurate point.

You may get inaccurate data (usually more than needed) if you search for data by distance using a geohash. In this case, perform secondary calculations to obtain accurate data.
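A minimal sketch of this two-step search follows, assuming a hypothetical table `pointcloud_gh_demo` that stores both the geometry and its geohash. The prefix length (5) and the 100-meter radius are arbitrary sample values.

```sql
CREATE EXTENSION IF NOT EXISTS postgis;

-- Hypothetical table holding each point and its geohash.
CREATE TABLE IF NOT EXISTS pointcloud_gh_demo (
    id          bigint,
    loc         geometry(Point, 4326),
    loc_geohash text                      -- expected to hold ST_GeoHash(loc)
);

WITH center AS (
    SELECT ST_SetSRID(ST_MakePoint(120.13, 30.26), 4326) AS geom
)
SELECT p.id
FROM pointcloud_gh_demo AS p, center AS c
-- Step 1: coarse filter, keep points in the same geohash cell as the center.
WHERE p.loc_geohash LIKE ST_GeoHash(c.geom, 5) || '%'
-- Step 2: secondary calculation, keep only points within 100 meters.
  AND ST_DWithin(p.loc::geography, c.geom::geography, 100);
```

This sketch only trims the surplus rows from one cell. If the search circle crosses a cell boundary, a single prefix can also miss points, so a fuller version would include the neighboring cells in the coarse filter.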

If data is stored in order by geohash, it is stored in a spatially ordered manner. Rows in a heap table are normally placed without regard to order, but you can use the CLUSTER command to rewrite the table in the order of a particular index. One benefit of ordered storage is that the value ranges held by different data blocks have clear boundaries.

If data is stored in an ordered manner, use a BRIN index to search a range of data by geohash. The BRIN index returns the data blocks that cover the range, and the data is then read efficiently from those blocks. A BRIN index performs worse than a GiST index when a small amount of data is retrieved but can match GiST performance when a large amount of data is retrieved.

A BRIN index stores summary metadata for each data block or each run of contiguous data blocks. With the default min/max operator classes, this is the minimum value, the maximum value, and whether the range contains nulls. It is suitable for data stored in an ordered manner, where there is a good linear correlation between the values and the physical row positions.
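The ordered storage and BRIN search described above can be sketched as follows. The table `pointcloud_brin_demo`, the index names, the `pages_per_range` value, and the `wtm` prefix are illustrative assumptions.

```sql
CREATE EXTENSION IF NOT EXISTS postgis;

-- Hypothetical plain (non-partitioned) table.
-- COLLATE "C" keeps geohash strings in plain byte order.
CREATE TABLE IF NOT EXISTS pointcloud_brin_demo (
    id          bigint,
    loc         geometry(Point, 4326),
    loc_geohash text COLLATE "C"
);

-- 1. B-tree index on the geohash, used only to define the physical order.
CREATE INDEX IF NOT EXISTS pointcloud_brin_demo_gh_btree
    ON pointcloud_brin_demo (loc_geohash);

-- 2. Rewrite the heap in geohash order (takes an exclusive lock on the table).
CLUSTER pointcloud_brin_demo USING pointcloud_brin_demo_gh_btree;

-- 3. Small BRIN index that summarizes each range of 32 heap pages.
CREATE INDEX IF NOT EXISTS pointcloud_brin_demo_gh_brin
    ON pointcloud_brin_demo USING brin (loc_geohash)
    WITH (pages_per_range = 32);

ANALYZE pointcloud_brin_demo;

-- 4. Range search by geohash prefix: all codes that start with 'wtm'.
SELECT id, loc
FROM pointcloud_brin_demo
WHERE loc_geohash >= 'wtm' AND loc_geohash < 'wtn';
```

CLUSTER is a one-time rewrite. Rows inserted afterwards are not kept in order, so the table may need to be clustered again after large loads. Once the BRIN index exists, the B-tree index can be dropped if it is not needed for other queries.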

For more information about cases where BRIN indexes are used for point cloud data, see:

1) If a small amount of data is returned for many requests, a k-nearest neighbor (KNN) search is needed, and returned records need to be sorted by distance, then we recommend using a GiST index. In this case, the GiST index is highly efficient. For example, the performance of a tracking system is 400% higher than that of Redis.

2) If a large amount of point data (for example, over 1 million records) is returned for many requests, we recommend storing data in a heap table in geohash order to reduce I/O amplification. In addition, use a BRIN index to reduce both the index building time and the space occupied by the index.

Generally, geographical information is stored in geometry fields. However, it is challenging to implement geometry type partition tables. A feasible method is to perform range partitioning using geohashing because geohash strings of adjacent locations are similar.

In PostgreSQL, you can implement partitioning in two ways: partition on an actual stored column, or partition on an expression. If you are using Greenplum, only partitioning on a stored column is supported.

create table test(id int, loc geometry, loc_geohash text); -- loc_geohash = st_geohash(loc); range-partition by loc_geohash

or

create table test(id int, loc geometry); -- range-partition by the expression st_geohash(loc)

Data aggregation significantly improves performance for querying a large amount of data.

As described in “Part 1: Technologies Used in Pilotless Driving — PostGIS Point Cloud Application,” after data aggregation, the response time goes down from 43 seconds to 312 milliseconds when 5 million points are queried.

You can implement aggregation in many ways:

1) Grid aggregation, as described in “Part 1: Technologies Used in Pilotless Driving — PostGIS Point Cloud Application.”

2) Aggregation by geohash prefix, which is also widely used. For more information, see https://postgis.net/docs/ST_GeoHash.html
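Aggregation by geohash prefix can be sketched as follows, assuming a hypothetical source table `pointcloud_raw_demo` with one row per point. The prefix length of 8 is an arbitrary sample value; a shorter prefix produces larger cells.

```sql
CREATE EXTENSION IF NOT EXISTS postgis;

-- Hypothetical source table with one row per point.
CREATE TABLE IF NOT EXISTS pointcloud_raw_demo (
    id  bigint,
    loc geometry(Point, 4326)
);

-- One row per geohash cell: the cell code, the number of points,
-- and all points of the cell collected into a single geometry.
CREATE TABLE pointcloud_cells_demo AS
SELECT ST_GeoHash(loc, 8) AS cell,
       count(*)           AS n_points,
       ST_Collect(loc)    AS points
FROM pointcloud_raw_demo
GROUP BY ST_GeoHash(loc, 8);
```

Queries that need many points then read a few wide rows instead of millions of narrow ones.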

After a route is planned for a terminal (driverless car), point cloud data covering the road needs to be downloaded to the terminal. In PostGIS terms, this involves two sets of geometries, the aggregated point cloud and the road, and the task is to calculate where the two overlap.

Example:

1) Point cloud table: Point cloud data is aggregated by geohash or grid. For example, each grid represents an area of 1 square meter.

2) Road: The area covered by the road is a polygon.

Obtain the data that overlaps with the polygon from the point cloud table. This operation can be performed by using a GiST index, which is highly efficient.
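A minimal sketch of this overlap query follows. The table `pointcloud_cells_road_demo`, its columns, and the polygon coordinates are illustrative assumptions. In practice the road polygon would come from the route planner.

```sql
CREATE EXTENSION IF NOT EXISTS postgis;

-- Hypothetical aggregated point cloud table: one row per geohash or grid cell.
CREATE TABLE IF NOT EXISTS pointcloud_cells_road_demo (
    cell   text,
    points geometry(MultiPoint, 4326)
);

-- The GiST index lets the overlap test skip cells far away from the road.
CREATE INDEX IF NOT EXISTS pointcloud_cells_road_demo_gist
    ON pointcloud_cells_road_demo USING gist (points);

-- Return every cell whose points overlap the area covered by the road.
SELECT c.cell, c.points
FROM pointcloud_cells_road_demo AS c
WHERE ST_Intersects(
          c.points,
          ST_GeomFromText(
              'POLYGON((120.10 30.25, 120.16 30.25, 120.16 30.26, 120.10 30.26, 120.10 30.25))',
              4326));
```

`ST_Intersects` first uses the index to compare bounding boxes and then checks the exact geometries, so only cells that really touch the polygon are returned.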

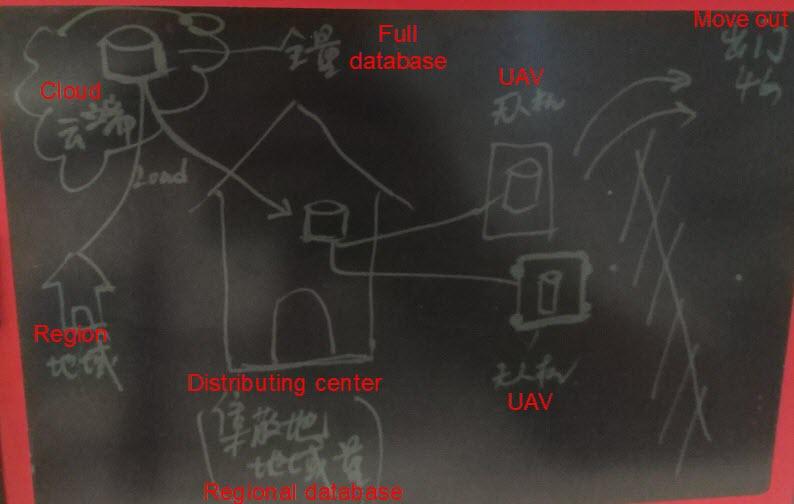

A terminal needs to store point cloud data for the region in which it is operating. Usually, many driverless cars operate in a region, and the data for all the driverless cars is the same.

How is point cloud data loaded into driverless cars, and how are incremental updates to that data applied?

A feasible method is to deploy a local database for each region, where local database content is sourced from a full database on the cloud.

When point cloud data requires updates, data on the cloud is updated first and then pushed to each region's local database.

Driverless car data is downloaded from each region's local database. To ensure that incremental data can be streamed from the local database, use PostgreSQL's streaming replication feature. Acting as a standby database, the driverless car follows the incremental data streamed from the local database, which ensures that it always obtains the latest data.

Incremental data streams are synchronized from each region's local database to a driverless car provided that the driverless car is connected to the regional network.

If the driverless car data only needs to be read, use physical streaming replication, which is the most efficient option. If the driverless car needs both to read and to write data, use the logical subscription feature of PostgreSQL 10. Its synchronization efficiency is lower than that of physical streaming replication but is acceptable when the volume of data to synchronize is small.
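The logical subscription option can be sketched as follows, assuming PostgreSQL 10 or later on both sides and `wal_level = logical` on the regional database. The table, publication, and subscription names and the connection string are hypothetical placeholders.

```sql
-- On the region's local database (the publisher):
-- publish changes to a hypothetical aggregated point cloud table.
CREATE PUBLICATION pointcloud_pub FOR TABLE pointcloud_cells;

-- On the driverless car's database (the subscriber):
-- a table with the same name and columns must already exist here.
-- The connection string is a placeholder for the regional database.
CREATE SUBSCRIPTION pointcloud_sub
    CONNECTION 'host=regional-db.example dbname=gis user=replicator'
    PUBLICATION pointcloud_pub;
```

By default, creating the subscription first copies the existing table contents and then streams subsequent changes. The car's database stays writable, which is the reason to choose this option over a physical standby.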

Technologies Used in Pilotless Driving – Part 1: PostGIS Point Cloud Application
