Population perspective is one example of combination of business and data, for example, for selecting the site of a large mall. Data available for analysis includes vehicle traffic and people flow.

We can combine data to have a deeper analysis of factors such as population composition and spending power, bringing more referential information for selecting a proper site for a large mall.

How can we use databases to obtain population data perspective?

1. Population property table

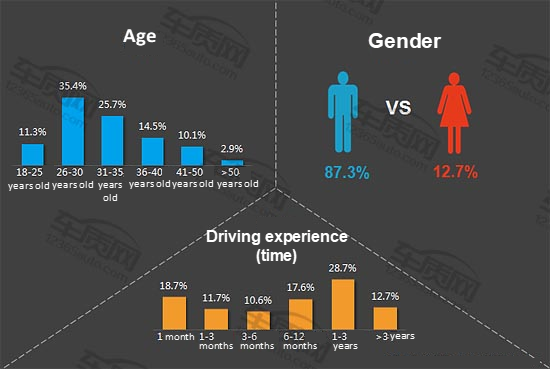

This table holds property fields of individuals, such as income, driving experience (time), and fixed asset. See below:

create table people(

id serial8 primary key, -- User ID

c1 int2, -- Age section (assume 5 sections indicated by 0, 1, 2, 3, and 4 respectively)

c2 int2, -- Personal income section (assume 3 sections indicated by 0, 1, and 2 respectively)

c3 int2, -- Driving experience by time (assume 5 sections indicated by 0, 1, 2, 3, and 4 respectively)

c4 int2, -- Family income section (assume 3 sections indicated by 0, 1, and 2 respectively)

c5 int2, -- Fixed asset section (assume 3 sections indicated by 0, 1, and 2 respectively)

c6 int2 -- Deposit section (assume 3 sections indicated by 0, 1, and 2 respectively)

); 2. Dynamic population trajectory

Population activity area or trajectory is recorded.

Using the PostgreSQL PostGIS plug-in can easily record trajectory data. In addition, GIST indexes are also supported to quickly look for the target population by region or range.

create table people_loc(

id int8, -- User ID

-- loc geometry, -- Location

crt_time timestamp -- Time

); 1. Generate 10 million pieces of population test data, and records with the driving experience section 4 and the age section 4 are not inserted to create some empty values.

insert into people (c1,c2,c3,c4,c5,c6)

select

mod((random()*10)::int,4),

mod((random()*10)::int,3),

mod((random()*10)::int,4),

mod((random()*10)::int,3),

mod((random()*10)::int,3),

mod((random()*10)::int,3)

from generate_series(1,10000000);

postgres=# select * from people limit 10;

id | c1 | c2 | c3 | c4 | c5 | c6

----+----+----+----+----+----+----

1 | 2 | 1 | 3 | 0 | 1 | 2

2 | 0 | 0 | 1 | 0 | 1 | 0

3 | 2 | 1 | 0 | 2 | 0 | 2

4 | 1 | 0 | 0 | 0 | 1 | 2

5 | 3 | 2 | 2 | 1 | 2 | 1

6 | 1 | 2 | 0 | 0 | 1 | 1

7 | 2 | 1 | 0 | 1 | 0 | 0

8 | 1 | 1 | 0 | 1 | 0 | 2

9 | 3 | 0 | 3 | 1 | 2 | 1

10 | 3 | 2 | 2 | 0 | 2 | 1

(10 rows)2. Generate 10 million pieces of population trajectory data

insert into people_loc (id, crt_time)

select random()*10000000, now()+format('%L', (500000-random()*1000000))::interval

-- 或 select random()*10000000, now()+(''||(500000-random()*1000000))::interval

from generate_series(1,10000000);

postgres=# select * from people_loc limit 10;

id | crt_time

---------+----------------------------

7278581 | 2017-03-05 16:35:13.828435

3456421 | 2017-03-07 09:08:26.853477

976602 | 2017-03-04 18:47:49.176176

1996929 | 2017-03-11 08:46:31.955573

6590325 | 2017-03-11 14:48:55.231263

7252414 | 2017-03-04 08:17:28.731733

8763332 | 2017-03-01 15:37:11.57363

9426083 | 2017-03-11 17:51:46.474757

4399781 | 2017-03-05 08:07:45.962599

9049432 | 2017-03-09 14:10:42.211882

(10 rows) 1. Choose a population group

Select a population group according to a central point or a closed-loop area (by using PostGIS)

Here I will not give a PostGIS example (If you are interested, you can use PostGIS to perform testing; the performance is very excellent). Instead, I directly select a population group by the time dimension.

select id from people_loc where crt_time between '2017-03-06'::date and '2017-03-08'::date; Some people may ask what should be done if one person have multiple trajectories within a time period.

In this case we can use IN. The optimizer in PostgreSQL is very powerful. Databases will automatically aggregate when the JOIN operation is performed, and GROUP BY is unnecessary in this step.

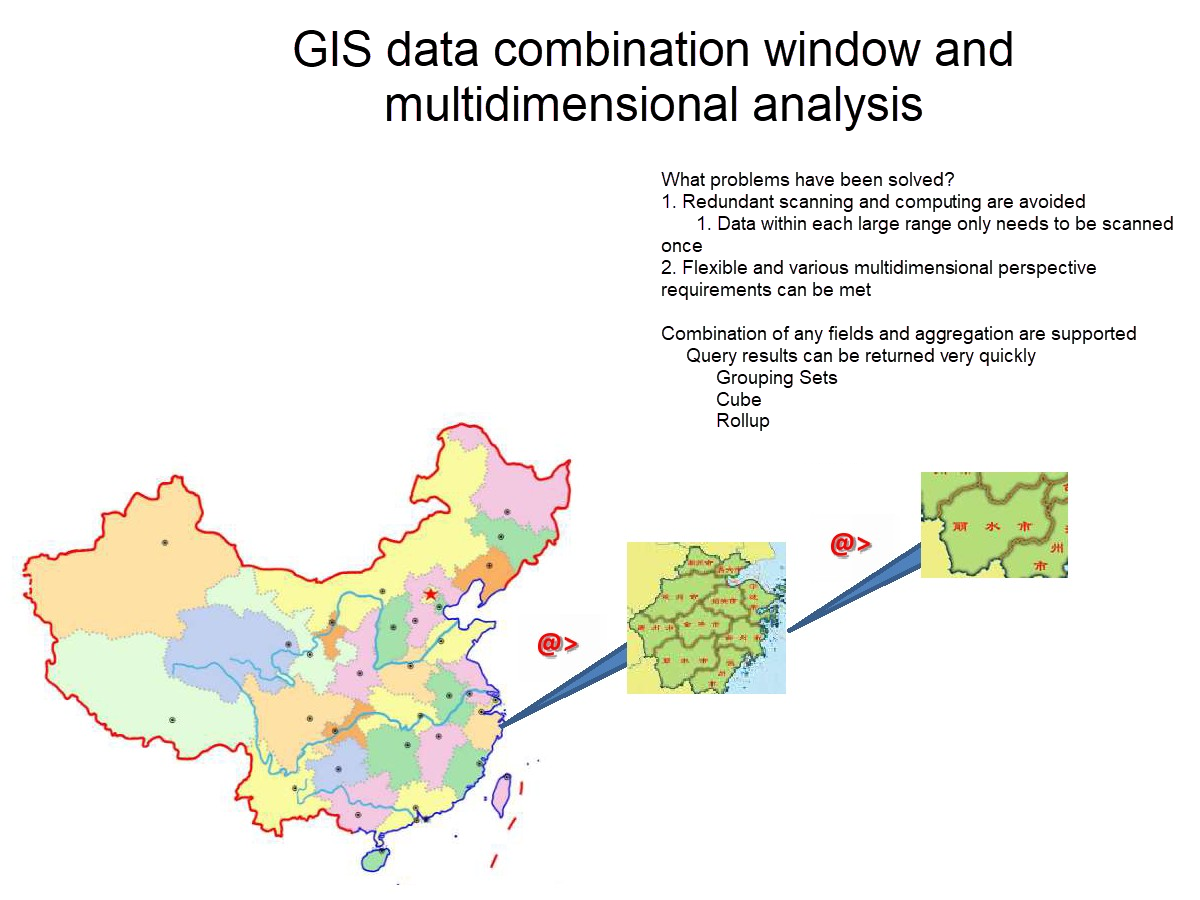

2. Data perspective

PostgreSQL is strongly compatible with SQL. Syntax such as grouping sets, cube, and rollup can be used for data perspective.

select c1,c2,c3,c4,c5,c6,count(*) cnt

from

people

where id in (

select id from people_loc where crt_time between '2017-03-06'::date and '2017-03-08'::date

)

GROUP BY GROUPING SETS (c1,c2,c3,c4,c5,c6,());

c1 | c2 | c3 | c4 | c5 | c6 | cnt

----+----+----+----+----+----+---------

| 0 | | | | | 555530

| 1 | | | | | 555525

| 2 | | | | | 475596

| | | | | | 1586651

| | | 0 | | | 554079

| | | 1 | | | 555864

| | | 2 | | | 476708

| | | | | 0 | 554738

| | | | | 1 | 554843

| | | | | 2 | 477070

| | | | 0 | | 554552

| | | | 1 | | 555073

| | | | 2 | | 477026

0 | | | | | | 396349

1 | | | | | | 475616

2 | | | | | | 397502

3 | | | | | | 317184

| | 0 | | | | 396947

| | 1 | | | | 475504

| | 2 | | | | 395852

| | 3 | | | | 318348

(21 rows) For more information about the use of perspective, refer to the use of cube, rollup, and grouping sets.

Currently PostgreSQL, HybridDB, and Greenplum all support the preceding syntax.

3. Result conversion

Use the WITH syntax to convert the preceding results.

with tmp as (

select c1,c2,c3,c4,c5,c6,count(*) cnt

from

people

where id in (

select id from people_loc where crt_time between '2017-03-06'::date and '2017-03-08'::date

)

GROUP BY GROUPING SETS (c1,c2,c3,c4,c5,c6,())

)

select case

when c1 is not null then 'c1_'||c1

when c2 is not null then 'c2_'||c2

when c3 is not null then 'c3_'||c3

when c4 is not null then 'c4_'||c4

when c5 is not null then 'c5_'||c5

when c6 is not null then 'c6_'||c6

else 'cnt' end AS col,

t1.cnt as private,

t2.cnt as all,

t1.cnt::float8/t2.cnt as ratio

from tmp t1, (select cnt from tmp where tmp.c1 is null and tmp.c2 is null and tmp.c3 is null and tmp.c4 is null and tmp.c5 is null and tmp.c6 is null) t2

;

col | private | all | ratio

------+---------+---------+------------------------

c2_0 | 555530 | 1586651 | 0.35012740672019240526

c2_1 | 555525 | 1586651 | 0.35012425542857250901

c2_2 | 475596 | 1586651 | 0.29974833785123508572

cnt | 1586651 | 1586651 | 1.00000000000000000000

c4_0 | 554079 | 1586651 | 0.34921290189209851442

c4_1 | 555864 | 1586651 | 0.35033791300040147455

c4_2 | 476708 | 1586651 | 0.30044918510750001103

c6_0 | 554738 | 1586651 | 0.34962824212760083976

c6_1 | 554843 | 1586651 | 0.34969441925161866094

c6_2 | 477070 | 1586651 | 0.30067733862078049930

c5_0 | 554552 | 1586651 | 0.34951101407934069937

c5_1 | 555073 | 1586651 | 0.34983937866613388830

c5_2 | 477026 | 1586651 | 0.30064960725452541233

c1_0 | 396349 | 1586651 | 0.24980225645085151051

c1_1 | 475616 | 1586651 | 0.29976094301771467071

c1_2 | 397502 | 1586651 | 0.25052894429839958504

c1_3 | 317184 | 1586651 | 0.19990785623303423374

c3_0 | 396947 | 1586651 | 0.25017915092859110163

c3_1 | 475504 | 1586651 | 0.29969035408542899478

c3_2 | 395852 | 1586651 | 0.24948901806383382357

c3_3 | 318348 | 1586651 | 0.20064147692214608001

(21 rows)

Time: 8466.507 ms perf report

# Events: 8K cycles

#

# Overhead Command Shared Object Symbol

# ........ ........ .................. ...................................................

#

6.29% postgres postgres [.] comparetup_heap

|

--- comparetup_heap

|

|--41.84%-- (nil)

|

|--33.36%-- 0x1

|

|--8.44%-- 0x23e8e

|

|--8.43%-- 0x2

|

--7.93%-- 0x3

5.16% postgres postgres [.] slot_deform_tuple.lto_priv.1138

|

--- slot_deform_tuple.lto_priv.1138

3.82% postgres postgres [.] mergeprereadone

|

--- mergeprereadone

3.79% postgres postgres [.] qsort_ssup

|

--- qsort_ssup

3.51% postgres postgres [.] tuplesort_gettuple_common.lto_priv.1348

|

--- tuplesort_gettuple_common.lto_priv.1348

|

|--32.14%-- 0x1

|

|--22.28%-- 0x2

|

|--18.95%-- (nil)

|

|--11.41%-- 0x10

|

|--5.72%-- 0x3

|

|--1.91%-- 0x3d84d9

|

|--1.91%-- 0xef259

|

|--1.91%-- get_select_query_def.lto_priv.1324

|

|--1.91%-- 0x95c9af

|

--1.88%-- 0x3a0e54 4. "Left join" completion (optional)

To complete empty values, simply use "left join".

select * from (values ('c1_0'),('c1_1'),('c1_2'),('c1_3'),('c1_4'),('c2_0'),('c2_1'),('c2_2'),('c3_0'),('c3_1'),('c3_2'),('c3_3'),('c3_4'),('c4_0'),('c4_1'),('c4_2'),('c5_0'),('c5_1'),('c5_2'),('c6_0'),('c6_1'),('c6_2')) t (col);

col

------

c1_0

c1_1

c1_2

c1_3

c1_4

c2_0

c2_1

c2_2

c3_0

c3_1

c3_2

c3_3

c3_4

c4_0

c4_1

c4_2

c5_0

c5_1

c5_2

c6_0

c6_1

c6_2

(22 rows) The completion is shown as follows:

with tmp as (

select c1,c2,c3,c4,c5,c6,count(*) cnt

from

people

where id in (

select id from people_loc where crt_time between '2017-03-06'::date and '2017-03-08'::date

)

GROUP BY GROUPING SETS (c1,c2,c3,c4,c5,c6,())

),

tmp2 as (

select case

when c1 is not null then 'c1_'||c1

when c2 is not null then 'c2_'||c2

when c3 is not null then 'c3_'||c3

when c4 is not null then 'c4_'||c4

when c5 is not null then 'c5_'||c5

when c6 is not null then 'c6_'||c6

else 'cnt' end AS col,

t1.cnt as private,

t2.cnt as all,

t1.cnt::float8/t2.cnt as ratio

from tmp t1, (select cnt from tmp where tmp.c1 is null and tmp.c2 is null and tmp.c3 is null and tmp.c4 is null and tmp.c5 is null and tmp.c6 is null) t2

)

select t1.col,coalesce(t2.ratio,0) ratio from (values ('c1_0'),('c1_1'),('c1_2'),('c1_3'),('c1_4'),('c2_0'),('c2_1'),('c2_2'),('c3_0'),('c3_1'),('c3_2'),('c3_3'),('c3_4'),('c4_0'),('c4_1'),('c4_2'),('c5_0'),('c5_1'),('c5_2'),('c6_0'),('c6_1'),('c6_2'))

t1 (col)

left join tmp2 t2

on (t1.col=t2.col)

order by t1.col;

col | ratio

------+------------------------

c1_0 | 0.24980225645085151051

c1_1 | 0.29976094301771467071

c1_2 | 0.25052894429839958504

c1_3 | 0.19990785623303423374

c1_4 | 0

c2_0 | 0.35012740672019240526

c2_1 | 0.35012425542857250901

c2_2 | 0.29974833785123508572

c3_0 | 0.25017915092859110163

c3_1 | 0.29969035408542899478

c3_2 | 0.24948901806383382357

c3_3 | 0.20064147692214608001

c3_4 | 0

c4_0 | 0.34921290189209851442

c4_1 | 0.35033791300040147455

c4_2 | 0.30044918510750001103

c5_0 | 0.34951101407934069937

c5_1 | 0.34983937866613388830

c5_2 | 0.30064960725452541233

c6_0 | 0.34962824212760083976

c6_1 | 0.34969441925161866094

c6_2 | 0.30067733862078049930

(22 rows) 5. Row and column conversion (optional)

If you want to convert the preceding data from multiple rows to one single row, you can use the tablefunc plug-in. PostgreSQL supports many other features.

https://www.postgresql.org/docs/9.6/static/tablefunc.html

create extension tablefunc;

select * from

crosstab(

$$

with tmp as (

select c1,c2,c3,c4,c5,c6,count(*) cnt

from

people

where id in (

select id from people_loc where crt_time between '2017-03-06'::date and '2017-03-08'::date

)

GROUP BY GROUPING SETS (c1,c2,c3,c4,c5,c6,())

),

tmp2 as (

select case

when c1 is not null then 'c1_'||c1

when c2 is not null then 'c2_'||c2

when c3 is not null then 'c3_'||c3

when c4 is not null then 'c4_'||c4

when c5 is not null then 'c5_'||c5

when c6 is not null then 'c6_'||c6

else 'cnt' end AS col,

t1.cnt as private,

t2.cnt as all,

t1.cnt::float8/t2.cnt as ratio

from tmp t1, (select cnt from tmp where tmp.c1 is null and tmp.c2 is null and tmp.c3 is null and tmp.c4 is null and tmp.c5 is null and tmp.c6 is null) t2

)

select 'row'::text , t1.col,coalesce(t2.ratio,0) ratio from (values ('c1_0'),('c1_1'),('c1_2'),('c1_3'),('c1_4'),('c2_0'),('c2_1'),('c2_2'),('c3_0'),('c3_1'),('c3_2'),('c3_3'),('c3_4'),('c4_0'),('c4_1'),('c4_2'),('c5_0'),('c5_1'),('c5_2'),('c6_0'),('c6_1'),('c6_2'))

t1 (col)

left join tmp2 t2

on (t1.col=t2.col)

order by t1.col

$$

)

as

(

row text,

c1_0 float8,

c1_1 float8,

c1_2 float8,

c1_3 float8,

c1_4 float8,

c2_0 float8,

c2_1 float8,

c2_2 float8,

c3_0 float8,

c3_1 float8,

c3_2 float8,

c3_3 float8,

c3_4 float8,

c4_0 float8,

c4_1 float8,

c4_2 float8,

c5_0 float8,

c5_1 float8,

c5_2 float8,

c6_0 float8,

c6_1 float8,

c6_2 float8

);

row | c1_0 | c1_1 | c1_2 | c1_3 | c1_4 | c2_0 | c2_1 | c2_2 | c3_0 | c3_1

| c3_2 | c3_3 | c3_4 | c4_0 | c4_1 | c4_2 | c5_0 | c5_1 | c5_2 | c6_0 |

c6_1 | c6_2

-----+------------------------+------------------------+------------------------+------------------------+------+------------------------+------------------------+------------------------+------------------------+------------------------

+------------------------+------------------------+------+------------------------+------------------------+------------------------+------------------------+------------------------+------------------------+------------------------+----

--------------------+------------------------

row | 0.24980225645085151051 | 0.29976094301771467071 | 0.25052894429839958504 | 0.19990785623303423374 | 0 | 0.35012740672019240526 | 0.35012425542857250901 | 0.29974833785123508572 | 0.25017915092859110163 | 0.29969035408542899478

| 0.24948901806383382357 | 0.20064147692214608001 | 0 | 0.34921290189209851442 | 0.35033791300040147455 | 0.30044918510750001103 | 0.34951101407934069937 | 0.34983937866613388830 | 0.30064960725452541233 | 0.34962824212760083976 | 0.3

4969441925161866094 | 0.30067733862078049930

(1 row) 1. About indexes (BRIN, GIST, and BTREE_GIST)

Typically two dimensions are configured to filter population groups: time range and geographical location range.

Because trajectory data usually has a strong linear correlation between time and heap, we can use BRIN indexes.

GiST indexes can be used to quickly filter geographical locations.

If you want to create a composite index by time and location, you can use the btree_gist plug-in so that time and geographical locations can be put in the same GiST index.

create extension btree_gist; 2. Recursive optimization

If trajectory points are too many and most of them are from duplicate population groups, you can use recursion to optimize the IN query.

3. "Case when" optimization. You can use "case when" to implement aggregation before using cube, grouping sets, and rollup or using databases that don't support data perspective syntax. However, this will lead to high CPU consumption because "case when" is performed on each piece of data.

select

sum(case when c1=0 then 1 else 0 end)/(count(*))::float8 as c1_0,

sum(case when c1=1 then 1 else 0 end)/(count(*))::float8 as c1_1,

sum(case when c1=2 then 1 else 0 end)/(count(*))::float8 as c1_2,

sum(case when c1=3 then 1 else 0 end)/(count(*))::float8 as c1_3,

sum(case when c1=4 then 1 else 0 end)/(count(*))::float8 as c1_4,

sum(case when c2=0 then 1 else 0 end)/(count(*))::float8 as c2_0,

sum(case when c2=1 then 1 else 0 end)/(count(*))::float8 as c2_1,

sum(case when c2=2 then 1 else 0 end)/(count(*))::float8 as c2_2,

sum(case when c3=0 then 1 else 0 end)/(count(*))::float8 as c3_0,

sum(case when c3=1 then 1 else 0 end)/(count(*))::float8 as c3_1,

sum(case when c3=2 then 1 else 0 end)/(count(*))::float8 as c3_2,

sum(case when c3=3 then 1 else 0 end)/(count(*))::float8 as c3_3,

sum(case when c3=4 then 1 else 0 end)/(count(*))::float8 as c3_4,

sum(case when c4=0 then 1 else 0 end)/(count(*))::float8 as c4_0,

sum(case when c4=1 then 1 else 0 end)/(count(*))::float8 as c4_1,

sum(case when c4=2 then 1 else 0 end)/(count(*))::float8 as c4_2,

sum(case when c5=0 then 1 else 0 end)/(count(*))::float8 as c5_0,

sum(case when c5=1 then 1 else 0 end)/(count(*))::float8 as c5_1,

sum(case when c5=2 then 1 else 0 end)/(count(*))::float8 as c5_2,

sum(case when c6=0 then 1 else 0 end)/(count(*))::float8 as c6_0,

sum(case when c6=1 then 1 else 0 end)/(count(*))::float8 as c6_1,

sum(case when c6=2 then 1 else 0 end)/(count(*))::float8 as c6_2

from

people

where id in (

select id from people_loc where crt_time between '2017-03-06'::date and '2017-03-08'::date

);

c1_0 | c1_1 | c1_2 | c1_3 | c1_4 | c2_0 | c2_1 | c2_2 | c3_0 |

c3_1 | c3_2 | c3_3 | c3_4 | c4_0 | c4_1 | c4_2 | c5_0 | c5_1 | c5_2

| c6_0 | c6_1 | c6_2

------------------------+------------------------+------------------------+------------------------+----------------------------+------------------------+------------------------+------------------------+------------------------+--------

----------------+------------------------+------------------------+----------------------------+------------------------+------------------------+------------------------+------------------------+------------------------+----------------

--------+------------------------+------------------------+------------------------

0.24980225645085151051 | 0.29976094301771467071 | 0.25052894429839958504 | 0.19990785623303423374 | 0.000000000000000000000000 | 0.35012740672019240526 | 0.35012425542857250901 | 0.29974833785123508572 | 0.25017915092859110163 | 0.29969

035408542899478 | 0.24948901806383382357 | 0.20064147692214608001 | 0.000000000000000000000000 | 0.34921290189209851442 | 0.35033791300040147455 | 0.30044918510750001103 | 0.34951101407934069937 | 0.34983937866613388830 | 0.3006496072545

2541233 | 0.34962824212760083976 | 0.34969441925161866094 | 0.30067733862078049930

(1 row)

Time: 8282.168 ms perf report

# Events: 8K cycles

#

# Overhead Command Shared Object Symbol

# ........ ........ .................. ...................................................

#

12.15% postgres postgres [.] ExecMakeFunctionResultNoSets

|

--- ExecMakeFunctionResultNoSets

|

--100.00%-- (nil)

7.11% postgres postgres [.] ExecEvalCase

|

--- ExecEvalCase

|

--100.00%-- (nil)

6.85% postgres postgres [.] ExecTargetList.isra.6.lto_priv.1346

|

--- ExecTargetList.isra.6.lto_priv.1346

5.43% postgres postgres [.] ExecProject.constprop.414

|

--- ExecProject.constprop.414

5.37% postgres postgres [.] ExecEvalScalarVarFast

|

--- ExecEvalScalarVarFast

4.35% postgres postgres [.] slot_getattr

|

--- slot_getattr

4.13% postgres postgres [.] advance_aggregates

|

--- advance_aggregates

3.43% postgres postgres [.] slot_deform_tuple.lto_priv.1138

|

--- slot_deform_tuple.lto_priv.1138

3.12% postgres postgres [.] ExecClearTuple

|

--- ExecClearTuple

2.82% postgres postgres [.] IndexNext

|

--- IndexNext

2.45% postgres postgres [.] ExecEvalConst

|

--- ExecEvalConst

|

--100.00%-- (nil) Typical Cases on PostgreSQL\GPDB Millisecond-Level Massive Spatio-Temporal Data Pivoting

Alibaba Clouder - October 27, 2020

Alibaba-Cloud-GTS - August 25, 2021

Alibaba Clouder - October 27, 2020

Alibaba Clouder - February 21, 2020

Alibaba Cloud Native Community - May 29, 2025

Alibaba Clouder - September 2, 2020

PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More AnalyticDB for PostgreSQL

AnalyticDB for PostgreSQL

An online MPP warehousing service based on the Greenplum Database open source program

Learn More ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by digoal